2

MEMORY AND YOUR SENSE OF “YOU”

The myth of failing memory

I’m standing in front of the hall closet. I was packing my suitcase in the bedroom and came here to find something, and now I can’t remember what. I walk back to the bedroom to see if something there will remind me. My mind is blank. I walk to the kitchen, thinking that maybe I had stopped at the hall closet by accident on my way there, hoping that there will be some object, something in plain view, that will remind me why I’m here. I go back to the bedroom and stare at the suitcase and piles of clothes, but there is no clue there either.

This is not the first time it’s happened. In fact, it’s nothing new—I used to do this in my thirties, but back then, I just figured I was distracted. If I weren’t a neuroscientist, I’d be worried now, in my sixties, that this was a sure sign that my brain is decaying and that I’ll soon be in an assisted living facility waiting for someone to feed me my dinner of smashed peas and pulverized carrots. But the research literature is comforting—these kinds of slips are normal and routine as we age and are not necessarily indicative of any dark, foreboding illness. Part of what explains this is a general neurological turn inward—every decade after our fortieth birthday, our brains spend more time contemplating our own thoughts rather than taking in information from the external environment. This is why we find ourselves standing in front of an open closet door with no memory of what we went there for. This is part of the normal developmental trajectory of the aging brain and not always a sign of something more sinister.

The panic that we feel upon forgetting something, particularly when we’re older, is visceral and disturbing. It underscores how important and fundamental memory is—not just to getting things done, but to our deep sense of self. Memories tell us who we are in moments of conflict or doubt. Good memories comfort us. Bad ones haunt us. And the feelings they evoke in us are very personal and intimate.

As philosophers and writers have long known, without memory we lack identity. The Christopher Nolan film Memento makes a vivid case for this, as does the HBO series Westworld, co-created by Christopher’s brother Jonathan. (Now, there’s an argument for the genetic basis of talent. Or is it an argument for shared home environment? Of course, it is the interaction of these two things.) Our very conception of ourselves and who we are is dependent on a continuous thread, a mental narrative of the experiences we’ve had and the people we’ve encountered. Without memory, you don’t know if you’re someone who likes chocolate or not, if clowns amuse or terrify you; you don’t know who your friends are or whether you have the skill to prepare chocolate pots de crème for ten people who are going to arrive at your apartment in an hour.

But if it’s so important, why isn’t memory more reliable? You’d think that eons of evolution would have improved it, but the story of how memory evolved has a few twists and turns, a few counterintuitive features. For one, our memories are less like videotaped recordings of experiences than they are like jigsaw puzzles. That simple fact fuels many jokes about age-related memory loss, such as this one:

Two elderly gentlemen were sitting next to each other at a dinner party.

“My wife and I had dinner at a new restaurant last week,” one of the men said.

“Oh, what’s it called?” asked the other.

“Um . . . I . . . I can’t remember. [Thinks. Rubs chin.] Hmm . . . What is the name of that flower that you buy on romantic occasions? You know, it usually comes by the dozen, you can get it in different colors, there are thorns on the stem . . . ?”

“Do you mean a rose?”

“Yes, that’s it!” [Leans across the table to where his wife is sitting.] “Rose, what was the name of that restaurant we went to last week?”

Memory can indeed seem like a puzzle with many missing pieces. We rarely retrieve all the pieces, and our brains fill in the missing information with creative guesses, based on experience and pattern matching. This leads to many unfortunate misrecollections, often accompanied by the stubborn belief that we are recalling accurately. We cling to these misrememberings, storing them in our memory banks incorrectly, and then retrieving them in a still-incorrect form and with a stronger (misplaced) sense of certainty that they are accurate. George Martin, the Beatles’ producer, described his own experience with this:

There’s this nice fellow named Mark Lewisohn. When I made the film “The Making of Sergeant Pepper” we had him come in as a sort of consultant. And I had George and Paul and Ringo come ’round, and I interviewed them about the making of the album. The interesting thing was there were parts of it that all of us remembered differently. When I was interviewing Paul, he would recollect something and it would be wrong. And I had to keep telling Mark Lewisohn not to correct Paul because for Lewisohn to say, “That’s not right—according to these documents here and these logs, it was this way,” . . . well, for Paul it would be rather humiliating. So Paul tells his story and that’s how he remembers it. The thing about Lewisohn’s logs is they made me realize that my memory was faulty as well—Paul and I would remember something in two different ways and the documents would prove it was done in a completely different, third way.

Why does this happen?

How Memory Works

Memory is not just one thing. It is a set of different processes that we casually use a single term to describe. We talk about memorizing phone numbers, remembering a particular smell, remembering the best route to school or to work, remembering the capital of California and the meaning of the word phlebotomist. We remember that we’re allergic to ragweed or that we just had a haircut three weeks ago. Smartphones “remember” phone numbers for us and smart thermostats learn when we’re likely to be home and will want the temperature set to seventy degrees. As with many concepts, we have intuitions about what memory is, but those intuitions are often flat-out wrong.

Like other brain systems, memory wasn’t designed; it evolved to solve adaptive problems in the environment. What we think of as memory is actually several biologically and cognitively distinct systems. Only some things that you experience get stored in memory. This is because one of the evolutionary functions of memory is to abstract out regularities from the world, to generalize. That generalization allows us to use objects like toilets and pens—you can use a new toilet, or a new pen, without special training because functionally, it is the same as other toilets or pens you’ve used. Why and how this generalization learning occurs is one of the oldest topics in experimental psychology and has been a specialty of my postdoctoral supervisor Roger Shepard for more than fifty years. (At age ninety, Roger is still active, working on two different books and collaborating on a paper with me—I’m embarrassed to say that I am the bottleneck for the paper, not he.)

Perhaps the most basic example of generalization is food—you learn as a child that the chicken tenders you’re eating today are not identical in size and appearance to ones you ate yesterday but that they are still edible and taste pretty much the same. We see this generalization principle in tool use as well. If you need a knife to cut your food, you might go to the silverware drawer in your kitchen and take whatever knife is there—functionally they are all the same. We generalize like this thousands of times a day without even knowing it. It’s related to memory in that your memory’s representation of chicken tenders, or a table knife, is usually a somewhat generalized impression, not a mental photograph of a particular chicken tender or particular knife.

Two other professors of mine, Michael Posner and Steve Keele, provided some of the first and most interesting evidence of this back in the 1960s. They wanted to find a way to determine what it was about an assortment of similar items that actually got stored in the brain’s memory system—was it the unique features of a specific item or the generalized features of the average item? You can think about this like a family resemblance in your own family—there may be a particular hair color that people in your family tend to have, a particular nose, a particular chin. Not everyone in the family has these, and the hair, nose, and chin have variations from person to person, and yet . . . there is something about them that binds them all together. This is the abstract generalization that Posner and Keele wanted to explore.

Family resemblance includes variability around a prototype—here the prototype, or patriarch, is in the center.

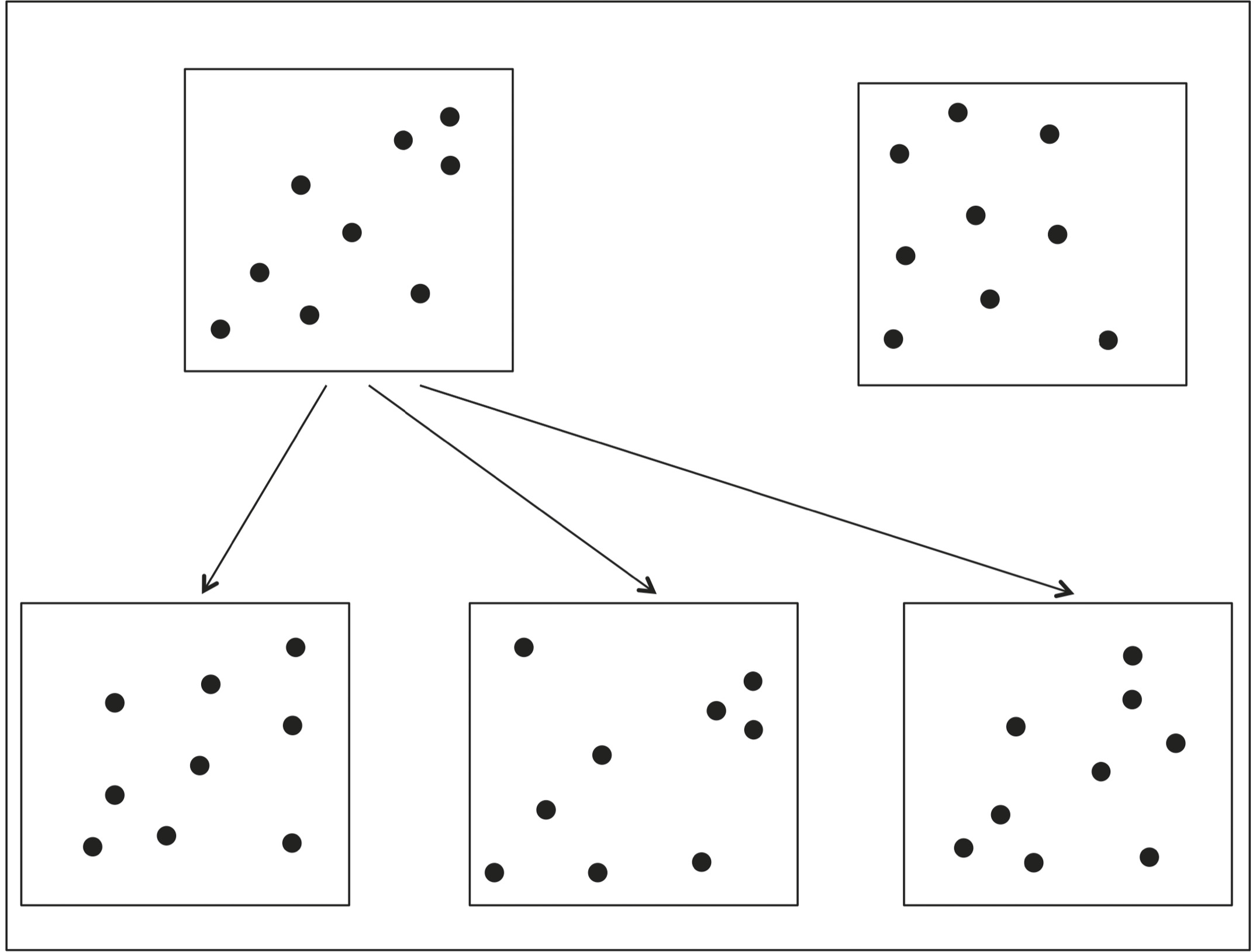

As cognitive psychologists do, Posner and Keele started out with very simple items, much less complex than a human face. They presented computer-generated patterns of dots that they had made by starting with a parent, or prototype, and then shifting some of the dots one millimeter or so in a random direction. This created patterns that all shared a family resemblance with the original—very much like the variation in faces that we see in parents and their children. On the following page is an example of the one they began with (the prototype, upper left) and some of the variations (at the ends of the arrows). The one on the upper right is an unrelated pattern, used as a control in their experiment.

If you look carefully, you’ll see a kind of family resemblance across the four related squares—all have a kind of triangular pattern of three dots in the lower left, although the dots vary in how close together they are. All have a diagonal of three dots running down the center roughly from the upper left to the lower right, and they vary in how stretched out they are and where the first dot begins.

In the experiment, people were shown version after version of squares with dot patterns in them, each of them different. The participants weren’t told how these dot patterns had been constructed. Here’s the clever part: Posner and Keele showed people the descendants (like the ones on the bottom row) and didn’t show them the parents (like the one on the upper left). A week later the same people came back in and saw a bunch of dot patterns, some old and some new, and simply had to indicate which ones they’d seen before. Although the participants didn’t know this, some of the “new” patterns were actually the parents, the prototypes used to generate the other dot patterns. If people stored the exact details of the figures—if their memories were like video recordings—this task would be easy. On the other hand, if what we store in memory is an abstract, generalized version of objects, people ought to recall seeing the parent even though they hadn’t—it constitutes an abstract generalization of the children that were created from it. That’s exactly what they found.

As we age, our brains become better and better at this kind of pattern matching and abstraction, and although dot patterns seem pretty far removed from anything of real-world importance, the experiment shows that abstraction occurs without our conscious awareness, and it accounts for one of the most widespread traits that oldsters have: wisdom. From a neurocognitive standpoint, wisdom is the ability to see patterns where others don’t see them, to extract generalized common points from prior experience and use those to make predictions about what is likely to happen next. Oldsters aren’t as fast, perhaps, at mental calculations and retrieving names, but they are much better and faster at seeing the big picture. And that comes down to decades of generalization and abstraction.

Now, you might object and say that you have very precise memories for particular objects. You’d recognize if someone switched your wedding ring on you. You know the feel of your favorite pair of shoes. If you have a fancy pen that someone gave you as a gift, you would be sad if you lost it. But if you lost a ten-cent Bic disposable pen, you probably would just reach into your drawer and take out another because they’re interchangeable, which is just another way of saying you’ve generalized them. If you’ve ever tried to take away the favorite fuzzy blanket from a young child, replacing the frayed, worn, and tattered one with a brand-new one, you know that they freak out—to them a blanket is not just a blanket and they don’t generalize: This particular blanket is their special blanket.

In most cases of generalization, it’s not that we can’t notice the difference between this pen and that pen if we were asked to study them, nor is it necessarily that we can’t remember differences—it’s just that we don’t need to. Our memory systems strive to be efficient in the service of not cluttering our minds with unnecessary detail.

Again, we see individual differences in how we generalize. To Lew Goldberg, a car is a car is a car. Its only value is in getting you from one place to another. He doesn’t understand people who collect cars or who have more than one. “Why would you want to have two cars?” he would ask. “It would be like having two dishwashers.” He sees the world of objects transactionally, with little sentimentality or interest in their differences. He does not seem to see the irony that someone whose lifework is studying individual differences in human beings has little interest in the individual differences in human-made objects. He does get excited at the individual differences he finds in nature, between trees, mountains, lakes, rocks, and sunsets. He’s just not a manufactured-objects guy.

Some people do have obsessions with objects in their lives—a favorite pair of boots that you wear way beyond the time when they should probably have been replaced; a favorite sofa that long ago needed re-covering. In cases like these, it’s not that we fail to generalize; it’s that the objects have taken on a special, personal meaning beyond their utility, a sentimentality. And they’ve activated a privileged circuit in memory.

Generalization promotes cognitive economy, so that we don’t focus on particulars that don’t matter. The great Russian neuropsychologist Alexander Luria studied a patient, Solomon Shereshevsky, with a memory impairment that was the opposite of what we usually hear about—Solomon didn’t have amnesia, the loss of memories; he had what Luria called hypermnesia (we might say that his superpower was superior memory). His supercharged memory allowed him to perform amazing feats, such as repeating word for word speeches he had heard only once, as well as complex mathematical formulas, long sequences of numbers, and poems in foreign languages he didn’t even speak. Before you decide that having such a fantastic memory would be great, consider that it came with a cost: Solomon wasn’t able to form abstractions because he remembered every detail as distinct. He had particular trouble recognizing people. From a neurocognitive standpoint, every time you see a face, it is likely that it looks at least slightly different from the last time—you’re viewing it at a different angle and distance than before, and you might be encountering a different expression. While you’re interacting with a person, their face goes through a parade of expressions. Because your brain can generalize, you see all of these different manifestations of the face as belonging to the same person. Solomon couldn’t do that. As he explained to Luria, recognizing his friends and colleagues was nearly impossible because “everyone has so many faces.”

Memory Systems

The recognition that memory is not one thing, but many different things, has been one of the most important discoveries in neuroscience. Each is influenced by different variables, governed by different principles, stores different kinds of information, and is supported by different neural circuits. And some of these systems are more robust than others, allowing us to preserve accurate memories for a lifetime, whereas others are more fickle, more affected by emotion, and inconstant.

Remember that evolution happens in fits and starts; it doesn’t start out with a plan or goal. After hundreds of thousands of years of brain evolution, we don’t end up with the kind of neat-and-tidy system we would have if everything had been engineered from the start. It’s likely that the different human memory systems we have today followed separate evolutionary trajectories, as they addressed distinct adaptive problems. So we end up today with one memory system that keeps track of where you are in the world (spatial memory), another that keeps track of which way you turn a faucet on and off (procedural memory), and another that keeps track of what you were just thinking thirty seconds ago (short-term memory). Those age-related memory lapses start to make sense as we see that they tend to affect one memory system but not another.

Our memory systems form a hierarchy. At the highest level are explicit memory and implicit memory. They contain what they sound like—explicit memory contains your conscious recollections of experiences and facts; implicit memory contains things that you know without your being aware of knowing them.

An example of implicit memory is knowing how to perform a complex sequence of actions, such as touch-typing or playing a memorized song on the piano. Normally, we can’t break these down into their subcomponents, the conscious movements of each finger—they are bound together as a bundled sequence in our memories. Even more implicit is conditioning, such as salivating when you open a jar of pickles, or showing aversion to the smell of a food that previously made you sick—you may not be conscious of this, but your body remembers.

Explicit memory comes in two broad types, reflecting two different neurological systems. One of these is general knowledge—your memory of facts and word definitions. The other is episodic knowledge—your memory of specific episodes in your life, often autobiographical. Scientists call the memory for general knowledge semantic memory and the memory for the specific episodes in your life episodic memory. (I think they got the name right for episodic memory, but the term semantic memory has always bothered me because I find it less descriptive. I prefer to think of it as generalized memory, but at this point we’re stuck with the name.)

Semantic memory, your general knowledge store, is all those things that you know without any memory of when you actually learned them. This would be things like knowing the capital of California, your birthday, even your times tables (3 × 1 = 3; 3 × 2 = 6; 3 × 3 = 9; etc.).

In contrast, episodic memory is all those things you know that involve a particular incident or episode. This would be things like remembering your first kiss, your twenty-first birthday party, or what time you woke up this morning. These events happened to you, and you remember the instance of them and the you in them. That’s what distinguishes them from semantic memories—they have an autobiographical component. Do you remember when you learned that 4 + 3 = 7, or when you learned your birth date? Probably not. These are things that you just know, so they’re your semantic memories.

Of course there are variations across people, and exceptions. I was talking about the different types of memory with my friend Felix last year, when he was age nine. By way of demonstration, I asked if he knew what the capital of California was. He said, “Yes, it’s Sacramento.” I then asked if he remembered when he learned it. He said, “Yes.” Slightly skeptical, I asked if he remembered the actual day that he learned it, assuming he meant that he had learned it last year in school or at some other general time. He said yes, he remembered the actual day. I asked what day that was. He answered, “Today.” So for Felix, the capital of California was an episodic memory, not a semantic one. It might even stay that way for him, since all of us—my wife and I and Felix and his parents—got a laugh out of how a college professor was so suddenly bested by a nine-year-old. A detail that would normally fade into obscurity in the annals of Felix’s brain might become elevated to a kind of special status because emotions were attached to it. This is one of the rules of memory that is now well established: We tend to remember best the episodic component of those things that were imprinted with an emotional resonance, positive or negative, regardless of whether the learning would have normally become semantic or episodic.

But for most people, such episodic memories as this—ones that involve factual information and general knowledge—become semantic memories over time, and the specific moment of learning becomes lost.

Think how overwhelming it would be if you remembered not just the meaning of every word you knew, and your whole treasure trove of basic knowledge about the world (What continent is Portugal in? Who was born first, Beethoven or Mozart? Who wrote War and Peace?), but also exactly when and how you learned it. The brain evolved efficiencies to jettison this (normally) unnecessary contextual information, selectively retaining the parts of the knowledge that are likely to come in most handy—the facts. Some people, however, including some with autism spectrum disorders, don’t do this jettisoning and retain all of the details, and it can be either a source of comfort and success for them or a source of irritation and debilitation.

There are some gray areas. Memories of things such as an allergy to ragweed, or your favorite cut of steak, may be semantic—just something you know—or they may be episodic, in that it’s possible that you recall the specific instances involved, the time and place, and conjure them up in memory; for example, the very moment you realized, after swelling like a puffer fish with an allergic reaction, that you can’t brush your bare skin against ragweed on a hike. The biological distinction is that different parts of the brain hold semantic memories versus episodic ones, and this is a critical step toward understanding why memory failure tends to happen to one system rather than the whole of memory—it’s because memory is not just one thing, but several.

Two particular brain regions, crucial to some kinds of memory, are the ones that decay and shrink with age and with Alzheimer’s disease: They are the hippocampus (Greek for seahorse, because its curved shape resembles that sea creature) and the medial temporal lobe (neurology-speak for the middle part of a structure just behind and above your ears). The hippocampus and medial temporal lobe are important for forming some of the kinds of explicit memory, and they’re not needed for implicit memory. This is why eighty-eight-year-old Aunt Marge, lost in a fog of amnesia-induced disorientation, cannot remember you, where she is, or what year it is but still knows how to use a fork, change the television channel, and read, and still lights up at the sight of appetizing food; all of these are forms of implicit memory. The impaired brain structures affect her explicit memory but not her implicit memory.

The hippocampus is also necessary for spatial navigation and for storing memories of places. Damage to this and to associated temporal lobe regions, as often happens with age, can lead to disorientation and getting lost. In most cases, it doesn’t shrink or decay all at once, and so patients are left with fragmented spatial memories, wandering around, registering some landmarks and familiar sights but not able to string them all together into a meaningful mental map.

All of what I’ve been talking about to this point applies to long-term memory—that more-or-less durable storehouse of memories that can last a lifetime. Short-term memory is another animal entirely. It contains the contents of your thoughts right now and maybe for a few seconds after. If you’re doing some mental arithmetic, thinking about what you’ll say next in a conversation, or walking to the hall closet with the intention of getting a pair of gloves, that is short-term memory.

All of these memory systems—even the healthy ones—are easily disturbed or disrupted. Working backward up this list, short-term memory depends on your actively paying attention to the items that are in the “next thing to do” file. You do this by thinking about them, perhaps repeating them over and over again, or building up a mental image (“I’m going to the closet to get gloves . . .” or “It’s time to take my heart pills—they’re on the kitchen counter near the phone”). But the fragility of the item becomes clear if you start thinking about something else, even momentarily (“I wonder how the grandchildren are doing in their new school? Now, why did I come into the kitchen?”). Any distraction—a new thought, someone asking you a question, the telephone ringing—can disrupt short-term memory. Our ability to automatically restore its contents declines slightly with every decade after thirty.

But the difference between a short-term memory lapse in a seventy-year-old and one in a twenty-year-old isn’t what you think. I’ve been teaching twenty-year-old undergraduates for my entire career and I can attest that they make all kinds of short-term memory errors: They walk into the wrong classroom; they show up to exams without a pencil; they forget something I just taught two minutes ago. I’ve even called on students who were raising their hands and who sheepishly admitted that they forgot what they had to say in the short time it took me to call on them. These are similar to the kinds of things seventy-year-olds do. The difference is how we self-describe these events, the stories we tell ourselves. Twenty-year-olds don’t think, “Oh dear, this must be early-onset Alzheimer’s.” They think, “I’ve got a lot on my plate right now” or “I really need to get more than four hours of sleep.” The seventy-year-old self-observes these same events and worries about brain health. This is not to say that Alzheimer’s- and dementia-related memory impairments are fiction—they are very real, and very tragic for all concerned—but every little lapse of short-term memory doesn’t necessarily indicate a biological disorder.

Distraction also disrupts procedural memory. In procedural memory, a form of implicit memory, you’ve typically practiced a set of movements slowly over time to create a kind of performance. If you learned to drive a manual transmission (stick shift) at some point, you may remember that your first attempts behind the wheel were characterized by a lot of lurching and screeching and probably a few engine stalls. (Mine were certainly that way. I learned on the steep hills of San Francisco, so there was a fair amount of rolling backward and bumping into the car behind me while waiting for the clutch to engage.) The coordination of clutch, brake, and accelerator, while taking into account slope and inertia, is a complex set of actions that need to be synchronized, not to mention making sure you’re in the right gear (more than once I tried to move forward from a stoplight with the transmission in third gear or in reverse). But with practice, all these things somehow mesh together seamlessly so that you no longer need to think about them.

Learning to touch-type, to play a piece on a musical instrument, to dribble and shoot a basketball, to dance choreographed steps, to knit, and to shuffle cards are all things that are difficult at first. But at some point, if you got good at them, you no longer needed to think about them. When that happens, we say that the action has become automatic. It no longer requires our conscious effort and active monitoring. It no longer requires short-term memory. It becomes stored in your brain as an intact unit, a sequence of knowledge. And that is easily disrupted if you try to turn back the clock and once again think about what you’re doing while you’re in the middle of it. The easiest way to break your automatic muscle memory—to stall an engine, to fall off a bike, or to forget how to play Chopin—is to try to reconstruct the earlier, unintegrated pieces of that constructed sequence. It’s when you try to teach someone else to do these things piece by piece that you realize you no longer have piece-by-piece memory, just a holistic, self-governed memory of how to do it.

Long-term memories are also easily disrupted, and when that happens, it can erase, or more often rewrite, your permanent store of information, causing you to believe things that just aren’t so—the state that Beatles producer George Martin described earlier in this chapter. By analogy, suppose you have a Microsoft Word, Pages, or other text document on your computer that you wrote after getting home from a particularly interesting party you went to ten years ago. That document contains a contemporaneous recollection of the events. It is probably imperfect in a few ways. First, you didn’t write down every possible thing that happened because you were unaware of some of them—you couldn’t hear every conversation, or didn’t notice what Carlos was wearing, didn’t know about the last-minute drama in the kitchen when a whole plate of cheese puffs fell on the floor. Second, you didn’t write down every possible thing you were aware of because you selected those events that were important or interesting to you, the things that you wanted to remember. Third, your recollections are biased by your subjective perspective. Fourth, some of your recollections could simply be wrong because you misremembered or misperceived—you thought that John said, “There’s a bathroom on the right,” and what he said was, “There’s a bad moon on the rise.”

Now, if you open the document ten years later, it’s editable. You can change what you wrote, even unintentionally. You might leave the document open while you go get a cup of coffee and the cat steps all over the keyboard, replacing some of the writing with a bunch of gibberish. Someone else might discover the document and edit it. A computer problem could damage the file, obliterating or changing parts of it. Then you (or the cat) hit “save,” or the computer autosaves the file, and there you have it: an altered document that replaces the previous one and becomes your new reality of what happened at that party.

If the edits are subtle enough, or enough time has elapsed since you created the document, you might not even notice. If you’ve forgotten the incident itself and the document is your only record of it, even if it has been changed without your knowledge, it becomes the reality.

This is how memory works in the brain—as soon as you retrieve a memory, it becomes editable, just like a text document; it enters a vulnerable state and can get rewritten without your intent, consent, or knowledge. Often, a memory is rewritten by new information that gets colored in during one recollection, and then that new information gets grafted onto and stored with the old, all seamlessly, without your conscious awareness. This process can happen over and over again until the original memory in your brain has been replaced with subsequent interpretations, impressions, and recollections.

Memory is the way that it is because across human evolutionary history it solved certain adaptive problems, giving our ancestors a survival advantage over their slower-to-adapt neighbors. Twenty thousand years ago, in a preindustrial age, it’s easy to imagine how such rewriting could be beneficial to our survival. Suppose the freshwater spring where you and your tribe get your water runs dry. You go exploring and find a new one. But the next time you try to find it you get lost, making a bunch of wrong turns, until eventually you find it and figure out a simpler set of landmarks to guide you there. Which mental map would be best to keep in your brain, the one that retraces all those faulty steps, or a new and improved one that keeps only the simpler, helpful landmarks?

Or imagine that you notice a jackal around your campfire and coax it near. It seems friendly enough, and in fact, it lets you pet it and snuggles by your feet one night. But the next day it turns on you, biting you and your sister and making off with that piece of meat that was roasting on the fire. If your memory dwells on the earlier, more pleasant time, you might make the same mistake. Better for you to rewrite your memory of the jackal as an unpredictable predator, not to be taken lightly. (Dogs won us over, but it was a slow process.)

Autobiographical memory is perhaps the system that is most closely associated with your sense of self, of who you are and what experiences shaped you. The autobiographical memory system informs your life choices in important ways. Without it, you wouldn’t know if you are capable of hiking for two hours, if you can eat food with peanuts in it, or whether or not you’re married.

And yet, the autobiographical memory system is prone to huge distortions. It’s a goal-oriented system. It recalls information that is consistent with your goals or perspective. We all tend to recontextualize our own life stories and the memories that formed them, based on the stories we tell ourselves or others tell us. Our original memories become corrupted, in effect, to conform to the more compelling narrative.

We also do a lot of filling in based on logical inferences. I don’t have many specific memories of the last time I visited London, but using my semantic memory, my general knowledge of London travel, I assume I took the Tube, that it was gray-skied, that I was jet-lagged, and that I drank especially good tea. Because I can easily picture myself riding the Tube from all the times that I have over the last forty years, that image can become grafted into my autobiographical memory for the most recent trip to London, and before I know it, I have a “memory” of riding the Tube last year that isn’t really my memory—it’s an editorial insertion, and we’re usually not aware that we’re doing it.

Memories can also be affected and rewritten by the mood you’re in. Suppose you’re in a grumpy, irritable mood, perhaps because you’ve just arrived in Los Angeles from London (with its great public transit) and you are fed up with the lousy public transit system in LA. To cheer yourself up, you recall a walk in Griffith Park with a friend, ordinarily a happy memory. But your current mood can lead you to reevaluate it as a less happy time: instead of focusing on the great walk, you conjure up all the traffic on the way there and the difficulty you had parking. All this rewrites the extracted memory before it gets put back in the storage locker of your brain, so that the next time you retrieve it, it is no longer as happy as it was before.

There is a famous case of mass memory rewriting involving the attacks on the Twin Towers of the World Trade Center in New York City on September 11, 2001. You’ll notice that it conceptually parallels the story I described about finding a new freshwater spring.

Eighty percent of Americans say that they remember watching the horrifying television images of an airplane crashing into the first tower (the North Tower) and then, about twenty minutes later, the image of a second plane crashing into the second tower (the South Tower). But it turns out this memory is completely false! The television networks broadcast real-time video of the South Tower collision on September 11, but video of the North Tower collision wasn’t discovered until the next day and didn’t appear on broadcast television until September 12. Millions of Americans saw the videos out of sequence, seeing the South Tower impact twenty-four hours before they saw the North Tower impact. But the narrative we were told and knew to be true, that the North Tower was hit about twenty minutes before the South Tower, caused our memories to stitch together the sequence of events as they happened, not as we experienced them. The result was a false memory so compelling that even President George W. Bush later recalled seeing the first plane hit the North Tower on television on the morning of September 11, although television archives show this to be impossible.

And so a huge misconception that most of us have about our personal memories is that they are accurate. We believe this because some of them feel accurate: they feel like video recordings of things that happened to us, recordings that haven’t been tampered with. And that’s because our brains present them to us that way.

Another way that our memories are defective is that we often store only bits and pieces of events or facts, and then our brains fill in the missing pieces based on logical guesses. Again, our brains do this so often that we don’t even notice. So much of our perceptual experience has gaps in it. Speech sounds may be obscured by noise, your view of something may be occluded by other objects, and your view of the world is interrupted, on average, fifteen times a minute by blinking. The brain confabulates, mixing up what it really knows with what it infers, and it often doesn’t make a meaningful distinction between the two.

As we age, we begin to confabulate more: our brains slow down, and the millions of memories we hold begin to compete with one another for primacy in our recollection, creating an information bottleneck. We all have things etched in our minds as true that never happened, or that are combinations of separate things that did.

Confabulation shows up particularly vividly in people who have had a stroke or other brain injury and are having trouble piecing fragmented memories together. Neuroscientist Michael Gazzaniga has written about this as a lesson in lateralization—the idea that the left and right hemispheres perform some distinct functions. (If you’re right-handed, confabulation takes place in your left hemisphere. If you’re left-handed, the confabulation could be taking place in either hemisphere—lefties have a less predictable lateralization of brain function than righties do.)

Gazzaniga tells the story of a patient who was in the hospital after a right-hemisphere stroke but had no memory of what brought her there; she was convinced that the hospital was her home. When Gazzaniga challenged her by asking about the elevators just outside her room, she said, “Doctor, do you have any idea how much it cost me to have those put in?” That’s the left hemisphere confabulating, making things up in order to keep a coherent story that fits with the rest of her thoughts and memories. She had no memory of being brought to the hospital and no ability to process this new information, and so, as far as her left hemisphere was concerned, she was still at home.

Think about the last children’s birthday party you were at, and try to remember as many details as you can—walk through the sequence of events in your mind. This is what an attorney might ask you to do at a trial if you were a witness. You might remember things like whether the attendees played pin the tail on the donkey, whether there was cake, whether the birthday kid opened all the presents in front of everyone or decided to do that later. But other details may be lost—whether there was a trampoline in the backyard, whether the other kids were given party favors. Other people and photographs might remind you of things, and those help to trigger some memories.

But still there are gaps. How many different kinds of beverages were served? If you were a bartender or ran a catering company you might have noticed; otherwise, probably not. What color temperature was the light bulb in the bathroom? If you were in the lighting business you might have noted whether it was cool white, warm white, daylight, or yellowish. But otherwise, probably not. Memory is filtered by your own interests and expertise. Other gaps: Did the lights in the living room flicker at one point? The insurance investigator wants to know because there was an electrical fire the next day. I suppose that they could have flickered, you think. Yes, now that I think about it, they did. I distinctly remember it. I can picture it happening. But there were no lights on in the living room; the fuse had blown earlier. Your memory’s not as reliable as you think, is it? Once you’ve lived awhile and collected a number of experiences, it’s quite easy to imagine things happening the way they’re described, and these imaginings become grafted onto your memories. Trial attorneys know this and use it to make juries doubt a witness’s testimony. Human memory makes logical inferences from the available information and delivers them to you as a potent mix of fact and confabulatory fiction.

I had surgery a few years ago and spent several days in bed on pain-killing opioids. They left me a bit disoriented, to say the least. I couldn’t remember what day of the week it was, or even what month it was. I recall looking out the window and seeing garbage trucks. Ah! It must be Monday, garbage day. My semantic memory of garbage day was intact, even if my awareness of the day of the week was compromised. I saw that the lettuce and onions in the vegetable garden outside were just getting started; in Los Angeles, that meant it must be February. I could answer the kinds of questions that doctors ask to get a read on a patient’s cognitive state, not by actually knowing the answers but by inferring them from my surroundings.

A friend suffered a stroke and is now making these kinds of inferences all the time, masking her deficits and confounding her doctors. Before the stroke she was a woman of great dignity and independence, and these kinds of questions make her feel trapped. When the two of us were alone, I asked her what year it was and saw her surreptitiously glance at a magazine on her table and use its date. I asked her the time of day, and, seeing the crust of a sandwich on a plate nearby, she guessed “early afternoon.” I asked who the president was and she said she didn’t know but could probably figure it out. That seemed unlikely to me, and I didn’t want to embarrass her, so I dropped it.

So is your autobiographical memory accurate? Are any of our memory systems? Yes and no. Our memory for perceptual details can be strikingly accurate, particularly in domains we care about. I knew a housepainter in Oregon, Matthew Parrott, who could walk into a house and identify the finish (flat, eggshell, satin, semigloss, or high-gloss), the brand (Benjamin Moore, Sherwin-Williams, Pratt and Lambert, Glidden), and often the precise shade of white, just by looking at the walls. And he could study the texture on the drywall and infer how many different “mudders” (drywall texture contractors) had worked on the house. “Look here,” he said, “notice these swirls—they were done by a left-handed mudder.” This was his business and he was especially good at it. (He told me that his father had been in the business before him. “My fadder was a mudder,” he said.) A lighting designer might remember particular colors and intensities of bulbs. A musician might know, just by listening, the brand and model of the instrument being played.

I conducted an experiment in 1991 in which I simply asked random college students to sing their favorite song from memory. I compared what they sang to the CD recordings of those songs to see how accurate their musical memories were. The astonishing finding was that most people hit the exact notes, or very near to them. And these were people without musical training. But, of course, if it’s your favorite song, you probably know it well. This finding ran counter to decades of memory research documenting how inaccurate recollections can be. So we are left with a bit of a messy picture: memories are astonishingly accurate, except when they aren’t. Paul McCartney and George Martin have completely different memories of who played what instrument on something as important as a Beatles album. But fans can sing near-perfect versions of those same Beatles songs.

Memories are thought to be organized in the brain by memory tags. No one has ever seen a memory tag in the brain, so for now they remain a theoretical construct that helps explain how memory works; we may be able to see them in the near future as brain-imaging technology improves.

Think back to that hypothetical birthday party that I described earlier. There are a multitude of queries that could trigger memory tags for that party:

- When’s the last time you were at a party?
- Where were you the last time you ate hors d’oeuvres?
- When’s the last time you saw Bob and Kate?
- Do any of your friends have a trampoline in their backyard?
- What did you do last Saturday?

Each of these is a way into the memory for that party, and there are probably hundreds more. If there was a particular smell there that you haven’t smelled since, encountering it again, even in another context, is likely to bring back a stream of associated memories. Our memories, in other words, are associative: the events that constitute them link to one another in an associative network. It’s as though you have a giant index in the back of your head that lets you look up any possible thought or experience and then points to where to find it. Some memories are easier to retrieve because the cue we use (the index entry) is so distinctive that there’s only one memory with which it could be associated; think, for example, of your first kiss. Others are difficult to retrieve because the cue taps into hundreds or thousands of similar entries. That’s why it’s difficult to remember things like what time you woke up two Mondays ago. Waking up is such a routine, day-to-day event that unless something extraordinary happened that morning, you pull up a bunch of similar awakenings that are hard to distinguish from one another. In other cases, memories are easier to retrieve because you’ve retrieved them many times before: the act of pulling a memory out can improve its future accessibility (although, as we’ve seen, pulling it out can also distort it and reduce its accuracy under some circumstances).

A great deal of research on memory over the last century has been concerned with the question of where in the brain memories are located. It seems like a logical question, but as with many things in science, the answer is counterintuitive: they are not stored in a particular place. Memory is a process, not a thing; it resides in spatially distributed neural circuits, not in a single location, and those circuits differ for semantic, episodic, procedural, and autobiographical memory.

If the idea of something not existing in a particular place makes you uncomfortable, consider government, universities, and corporations—these are real entities, but like memory, they don’t really exist in a particular, well-defined place. You could point to a particular building where the government has some offices—the state capitol, say—and argue that this is the location of the government. But if that building becomes condemned, the individuals who work there would just move to another building and we would say that the government is there now. Or with the rise in telecommuting, we might find that the employees of the state government are scattered around the state, working from their homes. Where is the government now? A major function of government is enacting traffic rules and regulations. Where are traffic laws located? Actually, they’re distributed in the brains of every person with a driver’s license. (We hope.)

Some parts of the memory process are localized. The temporal lobes and hippocampus are responsible for the consolidation of memories—a variety of neurochemical processes that take experiences, massage them, organize them, and otherwise prepare them for storage. This action is catalyzed by sleep and by the distinctive neurochemistry of dreaming, including the modulation of acetylcholine in the brain (remember this chemical because it plays an important part in aging and memories). But consolidation is merely the preparatory process. If memories aren’t stored in a particular place, how do they work? The way I came to learn about this was largely through luck, or—to take the developmental science approach—opportunity.

Like most scientists, I spend a large proportion of my time poring over journal articles written by other scientists about their newest findings. But I have also been drawn to history for as long as I can remember. My mother and father are both history buffs, and from an early age, we had dinner table discussions about the American West, classical Greece, and biblical times. When I was eight years old, my parents co-founded a club dedicated to studying the history of the town I grew up in, the Moraga Historical Society.

My grandfather died when I was ten, and he left me his Encyclopædia Britannica from 1910. I spent hours on my bedroom floor learning about the world as it appeared to people in 1910. There were no entries for airplane, automobile, radio, or penicillin. Entries on food preservation (with an emphasis on salting and drying), aeronautics (filled with photographs of dirigibles and zeppelins), and Alaska (“formerly called Russian America”) were an intriguing counterpoint to what we know now. And so, not surprisingly, when I studied neuroscience as a student, I gravitated toward the field’s history; I started looking back at what scientists had written about it in the late 1800s. I was fascinated to find that many of the things we think we are discovering for the first time were either previously discovered or intuited by earlier scientists, often one hundred or more years ago.

Memory is a perfect example of modern scientists forgetting what came before. (How’s that for irony?) When I entered graduate school in 1992, memory researchers were focused on two problems: What kinds of things are likely to be remembered versus forgotten, and what roles do the temporal lobes and hippocampus play? There was confusion, disagreement, and sometimes outright neglect of the basic issue of how a memory gets stored or retrieved. It turns out this is something that a group of researchers had already worked on in the early 1900s, but their discovery held little currency for years until it was revived by a large body of evidence for which there was no other explanation.

I entered the PhD program at the University of Oregon, where my mentor was Doug Hintzman, an expert in human memory. During the spring of my first year, I went down to the Bay Area to give a talk about my research in the Psychology Department at UC Berkeley. Two professors there, Erv Hafter and Steve Palmer, had invited me. (After obtaining my PhD, I went on to do a postdoctoral fellowship with Steve, and, years later, Erv officiated at my wedding.)

During that visit, Steve introduced me to a professor who had been an inspiration to him for many years, Irv Rock. (Yes, there are two people with identical-sounding first names in this story, Erv and Irv.) Irv had retired from Rutgers at age sixty-five and moved to Berkeley to work with Steve. Irv Rock had studied under the last of the Gestalt psychologists, an influential group of scientists formed in Germany in the 1890s. If you’ve ever heard the phrase “the whole is greater than the sum of the parts,” that comes from the research of the Gestalt psychologists (indeed, the word gestalt has entered the English language to mean a unified whole form). One can think of a suspension bridge as a gestalt—the functions and utility of the bridge are not easily understood by looking at pieces of cable, girders, bolts, and steel beams; it is only when they come together to form a bridge that we can see how a bridge is different from, say, a construction crane that might be made out of the same parts.

Irv was seventy when I met him, and I was thirty-five. He and I bonded over our love of salty pickles and the history of science. More than one hundred years earlier, Gestalt psychologists believed that each time you experience something—a walk around your neighborhood, worrying thoughts about your future, the taste of a pickle—it lays down a trace in the brain and leaves a kind of chemical residue. This trace, or residue, theory was largely ignored for one hundred years, but not by Irv. He introduced me to the richness of the Gestalt psychology writings. It was like reading the 1910 Britannica again on the floor of my bedroom. Their papers had a contemporary feel to them and a ring of truth—they simply lacked the rigorous experimental protocols that we apply today.

Meanwhile, back at the University of Oregon, Doug Hintzman was developing a contemporary version of the residue theory: multiple-trace theory. In Doug’s conception, extending the work of the gestaltists, every mental experience lays down a trace in memory. Doug is a true scientist. He doesn’t jump to conclusions, he is measured and cautious in his approach, and he doesn’t really have a pet theory; he just designs clever experiments and waits to see what the data tell him. And the data told him that trace theory was the most efficient account of thousands of memory observations.

Here’s how Doug explained it to me in one of our first meetings (as recorded in my lab notebook from 1992):

The number of times an event is repeated affects several aspects of memory performance. By performance, I mean your ability to retrieve the event at some later time. The more times the event has been presented, the more accurate you’ll be in recall and recognition, and the shorter will be the time it takes you to retrieve it from memory. These effects may not all be due to the same underlying process, but it’s most parsimonious to assume, lacking clearly contradictory evidence, that they are.

That underlying process is multiple-trace theory, or MTT. Every experience lays down a unique trace, and repetitions of an experience don’t overwrite earlier traces; they simply lay down more, near-identical but unique traces of their own.

The more traces there are for a given mental event, the more likely you are to recall it, and to recall it accurately and rapidly. This is how you learn things: by repeating them, playing around with them, and exploring them, you lay down multiple, related traces of the concept, experience, or skill. Interestingly, MTT also accounts for the astonishing findings of Posner and Keele in the 1960s about abstraction from those random dot patterns. The creation of multiple, related traces facilitates the extraction of information common among them, and this occurs in brain cells without having to involve the hippocampus.

The beauty of MTT is that it unifies explicit and implicit memory, and semantic and episodic memory. There might be many different systems, but they are governed by one process. That one process stores episodic and semantic traces, and abstract knowledge as such doesn’t have to be stored separately; it can be derived from the pool of traces of specific experiences. The reason you get better after practicing procedures, like scales on the piano, is that you have so many traces to draw on. And you can play those scales on different pianos because your brain, automatically and as part of the biology of memory, forms an abstract representation of the piano keyboard that is independent of any one particular keyboard.

I’ve come to believe that MTT is the correct way of looking at memory. Each experience we have, even purely mental ones (every thought, desire, question, answer), is preserved as a trace in memory. But these traces are not stored in a special “memory” location as they would be in a computer. When you experience something (looking at the letter a typed in this book, say, or imagining your next beach vacation), a certain network of brain cells becomes active. The same is true when you cry during a sad movie, become fearful while walking on a shaky bridge, or look into your baby’s eyes: these experiences are represented uniquely in assemblies of brain cells. Storing a memory entails keeping track of what that original activation pattern was; retrieving it entails corralling as many of those original brain cells as you can to fire in the same way they did during the original experience. The part of the brain that does the keeping track is initially the hippocampus and allied parts of the temporal lobes, which act as a kind of index or table of contents. Over time, that index is no longer needed, and the memories reside entirely in the same cells that were involved in the original experience.

If you’re like most people, you probably have a core set of memories that you have turned over in your mind regularly throughout your life: major life events, or funny stories that your parents told you or that you tell your kids.

Many memory theorists are still not convinced by MTT. Some don’t even know much about it. But it is the explanation that I find most consistent with the data. And in terms of aging, it provides a compelling explanation for why we forget recent events as we age but still remember older ones: the older ones have laid down more memory traces, through repetition or through repeated recollection. Add to that the uniqueness of some memories, or at least of the memory tags associated with them, and MTT explains why some memories are easier to retrieve than others: they aren’t as easily confused with other memories. They stand out.

Storing and retrieving memories is an active process. One of the great historical figures in memory research, Frederic Bartlett, avoided naming his 1932 book Memory because he felt it implied something static. Instead, he called his landmark book Remembering to reflect an active, adaptive, and changing process. Think of it this way. The neurons you use to taste chocolate are members of a unique circuit of neurons that convey that experience to you. If you want to enjoy that memory sometime later, you have to gather the members of that neuronal circuit together to form the same circuit. In this way, you make the neurons members of that group once again—you “re-member” them.

The key to remembering things is to get actively involved with them. Passively learning something, such as by listening to a lecture, is a good way to forget it. Actively using information, generating and regenerating it, engages more areas of the brain than merely listening does, and it is a far more reliable way to remember it. Many older adults complain of not being able to remember the names of people they’re introduced to at parties. Here, generating the information, being active with it, simply means using the person’s name as soon as you hear it. “Nice to meet you, Tom.” “Have you read any good books lately, Tom?” “Oh, you’re from Grand Forks, Tom. I’ve never been there.” This can boost your memory by 50 percent with very little effort. Laboratory work by Art Shimamura at UC Berkeley has shown that generating and regenerating information in this way increases brain activity and retention, especially among older adults.

What Does All This Mean?

We need to fight against complacency and passive reception of new information. And we need to fight these with increasing vigilance every decade after sixty. Fortunately, there are things we can do, strategies we can employ, to increase the durability and accuracy of memory. For short-term memory problems, training our attentional networks helps us to focus on what is going on right now and to store the most important things we are thinking and sensing with greater clarity and precision. This can be done by slowing down and practicing mindfulness, by trying to mono-task instead of multitasking, and by following the Zen master’s advice to “be here now.”

Next, we can externalize our fallible memories to objects in the world that don’t change as readily as brain cells. We can do this by writing things down and making lists. There are also computer and cell phone applications for building memory, such as Neurotrack, the memory baseline measurement and enhancement tool developed by a team of scientists at Stanford, the Karolinska Institute, and Cornell; tools like these can be an integral part of a program of healthy brain practices.

We tend to remember best those things that we pay the most attention to. And the more deeply we pay attention, the more likely those things are to form robust memories in our brains. If you see a bird outside your window and notice the yellow feathers under its chin, that’s deeper and more elaborate processing than simply noticing the bird. If you start to turn over in your mind the difference between this bird and others you’ve seen before, noticing differences in the tail and beak shape, for example, you’re processing more deeply still. Depth of processing is now well established as one of the key factors in forming durable memories. A musician who can play a thousand songs from memory didn’t learn them by paying only superficial attention to them, but by processing them deeply, registering their differences from and similarities to other songs they know. Along these lines, there’s a growing body of research suggesting that if we need to remember something, we should draw it: drawing something forces you into the kind of deep processing that is required.

Attention is regulated by structures in the prefrontal cortex and by dopamine-sensitive and GABA-sensitive neurons there. GABA stands for gamma-aminobutyric acid, and it is an inhibitory neurochemical in the brain. Earlier I noted that the prefrontal cortex doesn’t mature until our twenties. And it is the prefrontal cortex that has increased massively in size in humans compared to monkeys; in fact, it’s the only brain area to show much of a difference between us and our primate cousins. Knowing that the prefrontal cortex is responsible for cognitive control, planning, and generally being alert and conscientious, you might think that the species-related and age-related changes pack it full of “intelligence” neurons or something like that. But in fact, the biggest difference between the human and monkey prefrontal cortex, and between the teenage and adult prefrontal cortex, is the presence of neurons with GABA receptors, lots of them. That’s right: the inhibitory neurochemical. Much of what it means to be human, and to be an adult, involves inhibiting responses that we might naturally make. Think about it: not punching someone because you’re mad at them; delaying gratification and continuing to work on that important project when you know there is something good on TV; saying no to that third alcoholic beverage; eating healthy foods even though the unhealthy ones are tempting.

Those GABA and dopamine neurons, in combination, help us to focus on what we choose to, without giving in to distraction. Yet with aging, the prefrontal cortex loses some of its pizazz and zing, and we do find ourselves more distracted. We need to make more of an effort to focus.

Federal judge Jack Weinstein (age ninety-eight) says, “I keep thinking of Dr. Spock who wrote all of the child-rearing books that we followed when I was a young parent and [laughs] I remember him saying on the radio—I heard this program maybe seventy years ago—you have to try to have little tricks to deal with your memory loss and he gave this example which has stayed with me. He said if you’re listening to the radio or watching TV and it says it’s going to rain outside, at that moment—before you forget it—you get your umbrella and hang it on your door so that you pick it up on the way out.” Your visible environment is reminding you. Cognitive neuroscientist Stephen Kosslyn calls these cognitive prostheses.

Joni Mitchell (age seventy-six) also uses the environment of her house. “I remember in Dr. Zhivago, that as soon as Julie Christie walks in the door she puts her keys on the counter right by the door. Brilliant, I thought—that way she always knows where they’ll be. I’ve done that ever since. When I built my new house in British Columbia about ten years ago, I had a whole extra set of small drawers built into the kitchen to hold things that I’m always losing track of, one drawer for each: batteries, matches, chopsticks, Scotch tape, and those kinds of things. I can’t stand not being able to find things. I wish I had done this years ago.”

Lots of people have different tricks for remembering things when they leave the house. George Shultz, former US secretary of state (age ninety-nine), explains, “You have a routine. I have my hearing aid in my right jacket pocket. Always the same pocket. My keys to my house in another pocket and my wallet in another.” Filmmaker Jeffrey Kimball (age sixty-three) has a mental checklist of five things he always has when he leaves the house, repeating it like a mantra: reading glasses, wallet, keys, cell phone, binoculars (he’s an avid bird-watcher). And he leaves the wallet and keys inside his shoes by the door when he gets home.

I have two friends who had to undergo chemotherapy for cancer. They were warned that they might experience cognitive lapses, or “chemo-head.” They both put systems in place using the technology they had available. Just fifteen years ago they might have had to buy multiple timers and label them for different things they needed to do during the day. Now they do it on their smartphones by programming “appointments” into their cloud-based calendar. They programmed an appointment for each time they needed to take a pill, see a doctor, or fill out a health status report. They’d program little things like “Take a shower” or “Get dressed for grandkids coming over.” They might enter “Call doctor fifteen minutes from now,” which gave them time to sit and reflect on what they wanted to talk about.

They both recovered completely and have kept using their electronic calendars as a combination to-do list and sticky-paper reminder system. They love the freedom of being able to relax their minds, to let go of worrying about what they might be forgetting. They live more in the moment. And just the act of writing things down, of paying close attention to what they want to schedule, has improved their memories.

Does Memory Really Decline with Age?

I mentioned earlier that the hippocampus and the medial temporal lobes tend to shrink with age, and that the prefrontal cortex changes in ways that may make us more distractible. Distractibility is the enemy of memory encoding. I also suggested that every little memory lapse we experience after a certain age doesn’t necessarily mean decline is imminent. And yet, it is commonly said that memory loss with age is to be expected. Neuroscientist Sonia Lupien, an expert on stress, has studied how stress raises cortisol levels and the detrimental effects this has on memory. She had a hunch that the particular way in which older adults are given memory tests stressed them out, thereby causing them to perform more poorly than they otherwise would.

“I don’t believe in age-related memory impairment,” Lupien says. “If it exists at all, it’s much less than people think. I studied the methodology in the experiments that claim age-related memory loss. The older people who came in had cortisol levels that were through the roof before we even started testing. Think about it: We test them in unfavorable environments. Normally, novelty, unpredictability, lack of control, and threat to ego are the four big stressors in humans. And when we test older adults’ memory, we’re subjecting them to all four!”

Almost every study of older adults’ memory is conducted in a university lab. This is a familiar environment to the young people who serve as controls in these studies—they’re all students at the university. But the older adults don’t find it familiar at all. They’re looking around for parking. They don’t know where the elevators are in the building. They finally show up, stressed out that they’re late, and they are greeted by a cheerful young research assistant who they know is looking for possible memory defects. That is stressful.

There are also time-of-day effects. Testing often takes place in the late morning or early afternoon. The twenty-one-year-old controls have just woken up and are at their peak mental ability, but an older adult has probably been awake since five A.M. “We use a favorable environment and a favorable time for the college-age control participants,” Lupien says. “But not for the older adults.”

Lupien turned the traditional form of memory testing on its head to remove any advantages experienced by the control group of college students. She had her older adult participants come into the laboratory for a get-acquainted visit before the testing day, so that when they came in for a second visit, they’d be less stressed about how to get there and find the right room. On both occasions, instead of being greeted by a young student with whom they had little in common (and by whom they might have felt intimidated), they were met by Betsy, a seventy-two-year-old research assistant. On the day of testing, Betsy shared some refreshments with them to allow them time to get over any residual stress they experienced in traveling to and even being in the lab. After an appropriate “cool-down” time, Betsy brought out a photo album and went through it with them. She might show them a picture of a woman named Laura who keeps a cat as a pet. Or a picture of a backyard with a nice elm tree in it. In reality, Betsy was showing them the stimuli for the memory test. Later, when shown the picture of Laura, the participants answered, “Oh. She’s the one with the cat.” When asked about the tree in the backyard, they correctly recalled it was an elm. Relieved of all these stressors, including the pressure of actually being evaluated and the fear that they might come up short, the older adults performed as well as younger controls.

There’s another explanation for the sometimes poor performance of older adults on memory tests: sensory decline. Uncorrected losses to vision and hearing could account for 93 percent of the variability in cognitive performance. When put in a quiet environment, hearing-impaired older adults performed as well as younger adults; they also performed better when given more time.

Deborah Burke, who directs the Pomona College Project on Cognition and Aging, found that retrieval of words, and in particular proper names, can decline with age, and that this is a by-product of atrophy in the left insula, a region associated with retrieving the phonological form of the word. That is, we don’t actually forget the word itself, just the sound of it. That’s why we feel as though we still know the word, why it feels like it’s on the tip of our tongues, and why if someone volunteers it we recognize it as the right word. None of those things happen when we truly forget something.

What It Feels Like to Be You

Your memory is an indispensable part of who you are. What does it feel like to be you? When you step outside into the sun on the first warm spring day, do you focus on the way the heat feels on your skin, or on the blue sky, the smells, the colors of the trees? Some of us have an internal focus. When experiencing new situations, we turn inward; the first thing we notice is how our bodies feel: warm, cold, itchy, pressure on the skin, the way our clothes fit either loosely or tightly. Others of us have an external focus. For them, being alive involves experiencing the outside world and focusing on it and the other people in it.

There are lots of other ways in which being you feels different from being someone else—the memories you associate with current experiences, both good and bad, or the activities you like doing. When Alzheimer’s and dementia set in, we can lose access to these idiosyncratic and very personal ways of being in the world. Our personalities change; our memories become lost or, worse, confabulated. Simple things like eating fresh berries don’t feel familiar. People may feel that they are in someone else’s body. This can lead to a great deal of anxiety. Individuals with dementia are often agitated, uncomfortable, angry, and confused. And for good reason—they don’t feel at home in their own bodies, their own surroundings.

Part of compassionate care is giving them back a sense of self. Touch can do this—the simple act of a kiss on the cheek or rubbing the back. Music can do it too—listening to songs that you know well that stretch back into childhood can wake up and reactivate the neural circuits that give you that strong sense of “I am me.”