3

PERCEPTION

What our bodies tell us about the world

The British philosopher John Locke proposed what we now call Locke’s challenge: Try to imagine a smell that you’ve never smelled before. Or try to describe a novel taste to someone who hasn’t experienced it. Locke’s insight was that everything we know about the world we know through our senses. Locke was not just a one-idea guy, by the way. He was the first to propose that our sense of self comes from the continuity of consciousness. And he wrote influentially about the importance of the separation between church and state, an idea that Thomas Jefferson and other founders of the United States later drew on and that found expression in the First Amendment to the US Constitution.

Locke’s challenge put the senses front and center in our understanding of human information processing. His observation changed the way that we think about knowledge—what it is, and how we acquire it. How does the brain go from an apparently undeveloped state to one that is more adult-like? The single most important factor is input from the outside world. The brain learns to become fully functional through its interactions with its environment. Without that, the brain never becomes fully adult-like, never reaches its full potential. This is a lesson that applies equally to animals and humans and to children and adults of all ages. Interactions with the outside world are crucial. Roboticists and AI engineers have learned the hard way that no matter how fast their CPUs can process algorithms, the biggest barrier to achieving human-like capabilities is a lack of sensory input and integration of inputs across these artificial senses.

We learn in elementary school that humans have five senses. Less well-known is that some animals use other senses that we lack. Some are rather exotic. Sharks, for example, can detect the neural firings of species they want to eat using an electrical sense. Bees find flowers by detecting electric fields (flowers have a slightly negative electrical charge, which contrasts with the bees’ slightly positive charge). Some snakes find their prey using an infrared heat detector, and elephants are sensitive to vibrations through special receptors in their feet. Many animals have sharper senses than we do—a dog’s sense of smell is a million times more sensitive than ours. (With that much olfactory power, I imagine that sniffing at a fire hydrant might give a smart dog a pretty good idea of who’s in the neighborhood, their diet, and possibly even the overall health status of the local canine population.)

Sensory receptors are specialized cells, constantly detecting and collecting information in the world and transmitting it to the brain. Our eardrums, for example, respond to disturbances of molecules in air or liquid—vibrations. Our eyes register the amplitude and frequency of light waves. (Light is a form of electromagnetic energy, and so our visual sense is not that different from sharks’ and bees’ electrical senses—the difference is in how our brains interpret that information.) Our tactile receptors register temperature, wetness, pressure, and injury; they exist even on some of our internal organs—think back to your last stomachache. Our taste and smell receptors detect the chemical content of objects.

Once our sensory receptors pick up information from the outer world, they send electrical impulses to areas of the brain that are specialized for interpreting those signals. The spike-spike-spike of electrical pulses coming from the various sensory receptors brings us the entire range of sensory experiences: sour, sweet, hot, cold, painful, soothing, loud, soft, bright, dark, red, purple, fragrant, acrid, and dozens more. There is nothing “sour” about the nerve impulse coming off your tongue—“sour” occurs in your gustatory cortex, a part of the brain dedicated to interpreting these impulses. Sir Isaac Newton, a contemporary of Locke’s, knew that the rich perceptual experiences we have are created in our brains, not out there in the world. He wrote that light rays are not themselves colored. In modern terms, the light waves coming from a blue sky are not blue; they only appear blue because our retina and cortex interpret light of a particular frequency, around 650 terahertz, as blue. Blueness is an interpretation that we place on the world, not something that is objectively there.

The Logic of Perception

We tend to think that our senses give us an undistorted view of the world—that what we see, hear, or experience through our senses is reality. But nothing could be further from the truth. If I shine light waves of different colors on a screen, some will seem brighter than others even if I carefully control the energy output of the projector. (Our eyes are most sensitive to green light and least sensitive to blue, meaning that even if green, blue, and red light are presented at equal physical intensity, the green will appear brighter and the blue dimmer than the red.) This might be due to the millions of years during which, even before our ancestors walked on two legs, our eyes scanned green leaves looking for food. Musical instruments or voices that are precisely the same amplitude will not be perceived as having the same loudness, because some frequencies simply sound louder to our brains than others.

Our internal perceptual system constructs a version of reality that is in some ways better for our survival needs than what our sensory receptors detect. For example, the auditory frequencies our brains are most sensitive to are the ones that distinguish one vowel or consonant from another in speech—our brains effectively favor some frequencies over others to help us understand one another better. The lens of the eye is shaped such that straight lines in the world should appear slightly curved, and certain curved lines should appear straight. But they don’t—the visual cortex “knows” the distortion of the lens and makes corrections. Then there’s chromatic aberration—colors of light coming from the same source do not strike the retina on a common focal point, because of their different wavelengths, but the brain compensates so that we perceive them coming from the same source. These are just a few of the hundreds of brilliant compensatory adjustments performed by the brain, and they occur unconsciously.

My friend and mentor Irv Rock (who was age seventy when we met at Cal) wrote an influential book called The Logic of Perception that summarized his life’s work. As he noted, the signals hitting our sensory receptors are often incomplete or distorted, and our sensory receptors don’t function perfectly. There are other cases in which what our receptors might tell us about the world is wrong and the brain needs to step in. Rock further showed how our perceptual system uses logical inferences to help us perceive the world. Perception doesn’t just happen—it entails a string of logical inferences and is the outcome of unconscious inference, problem solving, and outright guesses about the structure of the physical world.

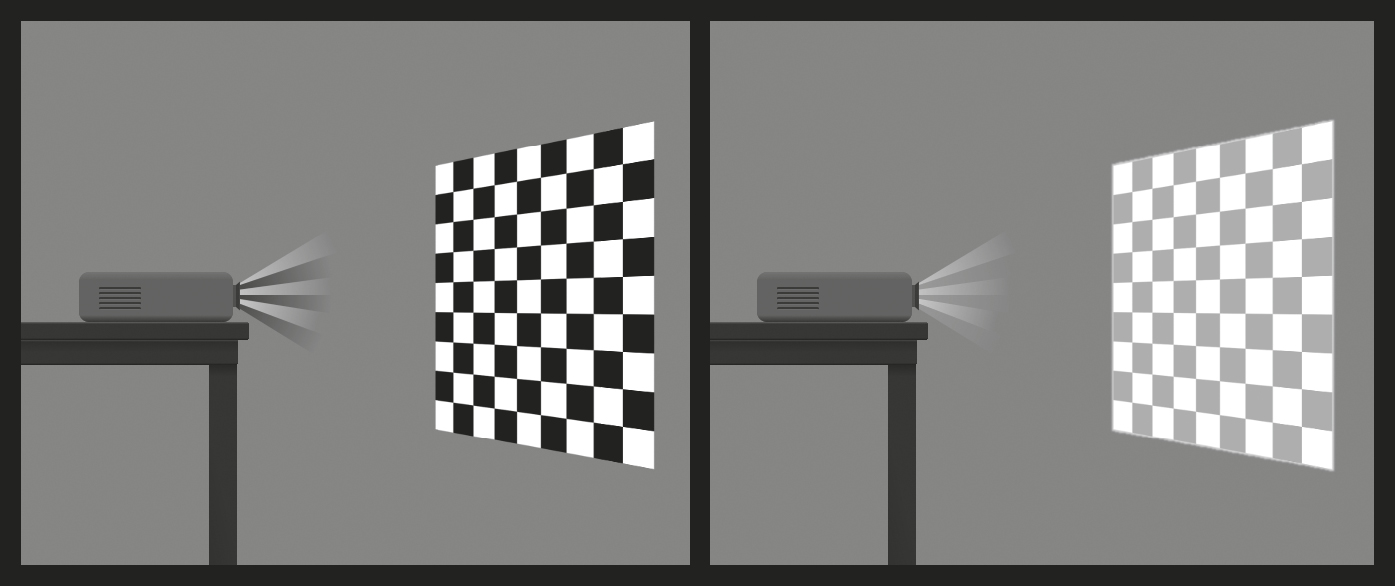

Brightness constancy is a striking example. When you go to a movie theater, the screen is white and the projector shines a bright light on it. But of course, there are dark images on the screen—villains wearing black hats, cats with black fur, George Clooney wearing a black tuxedo. But black is the absence of light, so how does the projector do it? The answer is that it doesn’t—your brain has to make some inferences. All that the projector can do is shine light or not shine light on that white screen. Everything on the screen that appears black is in reality just the color of the screen. What’s happening is that your brain has evolved to make inferences about colors and brightness as they appear in relation to other colors and brightnesses. (Black movie screens are a recent trend, and some theaters have installed them because they render truer blacks.)

On the left is how you perceive a checkerboard that is projected. On the right is what the projector is actually projecting. Your brain uses the logic of perception to infer that the image on the left is what was intended. Your brain does this unconsciously—knowing that this principle is at work doesn’t allow you to shut off the neural circuitry that makes it so. Our brains know that George Clooney’s tuxedo is not gray and thus render it the blackest of blacks.

The visual illusion by Edward Adelson on the following page makes the point. Squares A and B are exactly the same shade of gray, but they appear to be different because the brain, through an automatic process of logical inference, takes the image coming from the eye and corrects it. The brain assumes that because square B is in a shadow, it must be lighter than it appears. If you take a piece of paper about the size of this figure and cut out little windows revealing only A and B, you will see that this is true.
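One way to picture the inference described above is as "discounting the illuminant": the brain estimates how much light is falling on a surface and judges the surface's shade relative to that estimate. The short Python sketch below is a simplification built on invented numbers (the luminance value and both illumination levels are hypothetical, not measurements of Adelson's figure). It shows how two squares that send identical light to the eye can be inferred to have different shades once a shadow is taken into account.

```python
# Toy sketch of "discounting the illuminant" in Adelson's checker-shadow illusion.
# ASSUMPTION: every number below is invented for illustration.

# Both squares send the same amount of light to the eye.
luminance = {"A": 0.4, "B": 0.4}

# The brain's unconscious estimate of how much light falls on each square.
# Square B sits in a shadow, so it is assumed to be dimly lit.
estimated_illumination = {"A": 1.0, "B": 0.5}

def inferred_shade(square: str) -> float:
    """Inferred surface lightness: light received divided by light assumed to fall on it."""
    return luminance[square] / estimated_illumination[square]

for square in ("A", "B"):
    print(square, "sends", luminance[square], "to the eye; inferred shade:", inferred_shade(square))
```

Because B is assumed to be in shadow, the same 0.4 is read as a lighter surface than A. Cutting windows in paper removes the shadow cue, which in this sketch amounts to making the two illumination estimates equal, so the inferred shades become equal too.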

There are dozens and dozens of such principles. Another is color constancy. If you’ve ever seen a photograph taken indoors back in the old days of analog photography and actual film, you may have noticed that the entire scene had a kind of yellowish hue, and people’s skin tones looked unnatural. That’s because the film recorded the room as it really was, yellowed by incandescent lights. But your eye doesn’t see it that way, because your brain employs color constancy. That red dress looks the same to you indoors and outdoors, but it does not look the same to the camera.

Most of the examples of this we know of come from vision and hearing, because those are the two most studied senses. But there are examples in the other senses, too. If I touch your toe and your forehead at the same instant, you will perceive that they were touched at the same time. But it takes a lot longer for the nerve impulse from your toe to reach your brain than the impulse from your forehead. What really happens is that your brain gets a message from the forehead touch first, and then the toe touch comes in and the brain has to subtract a delay constant (factoring in how long neural transmission takes) and then come to the logical conclusion that both were touched at the same time.
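The delay subtraction can be sketched as simple arithmetic. In the Python below, the conduction velocity, the path lengths, and the tolerance are rough, hypothetical round numbers chosen for illustration (real values vary by nerve fiber type and by person), and the brain certainly does not run code like this. The point is only the logic: the raw arrival times differ, but subtracting each site's expected travel time brings both estimates back to the same moment.

```python
# Toy sketch of compensating for nerve conduction delays.
# ASSUMPTIONS: velocity, path lengths, and tolerance are hypothetical round numbers.

CONDUCTION_VELOCITY_M_PER_S = 50.0                 # hypothetical
PATH_LENGTH_M = {"toe": 1.6, "forehead": 0.1}      # hypothetical distances to the brain

def travel_time_ms(site: str) -> float:
    """Expected time (ms) for an impulse to travel from a body site to the brain."""
    return PATH_LENGTH_M[site] / CONDUCTION_VELOCITY_M_PER_S * 1000.0

def estimated_touch_time_ms(site: str, arrival_ms: float) -> float:
    """Work backward from arrival time to when the touch most likely happened."""
    return arrival_ms - travel_time_ms(site)

def judged_simultaneous(arrivals: dict, tolerance_ms: float = 5.0) -> bool:
    """Compare delay-corrected times, not raw arrival times (tolerance is hypothetical)."""
    times = [estimated_touch_time_ms(site, t) for site, t in arrivals.items()]
    return max(times) - min(times) <= tolerance_ms

# Both sites are touched at t = 0, but the impulses arrive at different times.
arrivals = {site: travel_time_ms(site) for site in PATH_LENGTH_M}
raw_gap = arrivals["toe"] - arrivals["forehead"]
print(f"raw arrival gap: {raw_gap:.0f} ms")   # the forehead signal arrives first
print("judged simultaneous:", judged_simultaneous(arrivals))
```

If the toe were touched noticeably later than the forehead, the corrected times would no longer match and `judged_simultaneous` would return `False`.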

Irv Rock referred to the logic of perception because he believed that the brain did all of this based on probabilities. Given what is hitting the sensory receptors, the brain tries to work out what is the most likely thing that is happening. Perception is the end product of a chain of events that starts with sensory input and includes a cognitive, interpretive component. Have I convinced you that the brain is full of tricks and that the world is not always as it appears? Here’s where it gets really interesting. The brain fills in missing information without your knowing it. And the older you get, the more it does this. This perceptual completion is also based on the logic of perception. If you’re talking to someone in a crowded room, it’s likely that some of their words will be masked by other conversations, clinking glasses, or footsteps. But you can still interpret what they’re saying. For years I’ve used a demo in my classes of a person speaking a sentence in which one syllable has been completely removed and replaced by a cough. Students know that the cough is there, but they don’t realize that a syllable has been excised, and they have no trouble understanding—their perceptual system simply fills in the missing information. Perception is a constructive process—it builds for us a representation of what is out there in the world, a mental image that allows us to interact with the world as our brain concludes it is, and not as it may merely appear to be.

There are illusions in each of the five senses. A West African berry called miracle fruit can create a taste illusion, making sour foods such as lemons taste sweet. Drinking orange juice after brushing your teeth makes it taste bitter. There are even motor illusions: As kids, my sister and I would stand in a doorway with our arms by our sides, then press the backs of our hands outward against the frame as hard as we could for about one minute. When we stepped out of the doorway, our arms would oddly float up without our volition, a kind of motor control illusion.

Perceptual completion emerges in infancy, at around four to eight months, and adult-like adjustments come at around age five. A more everyday example of perceptual completion involves the blind spot in our visual field. In the part of the retina where the optic nerve leaves the eye, there are no rods or cones, so light falling there registers nothing, and yet our brains fill in the missing information based on what surrounds it.

As we age, we tend to get better and better at this sort of perceptual completion, precisely because we have experienced so much more of the world: our mental database of what is likely and what is unlikely is informed by millions more observations. These observations become data for the (unconscious) statistical processor of our brains, and through neuroplasticity, each new observation changes the wiring of our brains. So even though our sensory receptors begin to show wear as we age, and our brains show atrophy, decreased blood flow, and other deficits, our perceptual completion can improve. This is another of the many compensatory mechanisms that bring an advantage to the aging brain. Older people may well be more efficient and accurate at dealing with degraded signals than young people, because their perceptual system has more experience with the world.

The Berlin and Innsbruck Experiments

An astonishing example of neuroplasticity, of the brain rewiring itself, comes from a series of experiments begun in the 1800s by Hermann von Helmholtz. I think of Helmholtz as one of the fathers of modern cognitive neuroscience, and his work deeply inspired Irv Rock. Helmholtz was interested in all the senses and how they worked, and he was a tinkerer. He knew that our visual system could adapt to new experiences, but he wondered what the limits of that neuroplastic adaptation were; he studied this using distorting glasses.

Volunteers in Berlin wore prismatic glasses that shifted their visual field several inches to the left or right. Then Helmholtz asked them to reach for a coffee cup or pen or some other object nearby. Because the visual information was now shifted, their hands would go to the wrong place, several inches away from the object they wanted to grasp. Within an hour, their brains began to adjust, using perceptual adaptation. This was neuroplasticity in action! The brain took in the new information and rewired the motor system to accommodate the change. When the glasses were removed, the participants again made mistakes in the opposite direction for a short time, but the brain readapted quickly.

The prism adaptation experiment showed the degree to which the visual system and the movement (motor) system in our brains are connected and interdependent. It strongly suggests that our brains contain a spatial map of our surroundings. After putting on prism glasses, we discover that this internal map is wrong and needs to be updated. These adaptations produce changes in several parts of the brain: the sensory cortex; the motor cortex; the intraparietal sulcus, a region associated with error detection and correction; and the hippocampus, the seat of spatial maps.

You’ve experienced these spatial maps if you’ve ever reached for a glass of water on the night table next to your bed in the dark, or found your way to the bathroom in the middle of the night without turning on the lights. The mental maps can be high-resolution, durable, and stable over time. Yet they’re also changeable if new information comes in that contradicts them.

Helmholtz thought that the degree of adaptation depended on the length of time the prismatic glasses were worn, but it has been shown recently that the process depends instead on the number of interactions between the visual and motor systems that you have. In fact, if you just wear distorting glasses and don’t interact with the environment actively, no adaptation will occur. If a nurse moves your arm for you, no matter how many times, you won’t adapt. It’s not the eyes or visual cortex that make the adaptation in cases like this; it’s the motor (motion) system and brain circuits that govern the interaction between vision and movement. But as few as three interactions can bootstrap the brain to rewire itself.
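The idea that adaptation tracks the number of active interactions, not elapsed time, can be illustrated with a toy error-correction model. In the Python sketch below, the size of the prism shift, the learning rate, and the update rule itself are assumptions made for illustration, not a model taken from the experiments described here. The internal correction changes only after an active reach, when the person sees their own hand miss; a passively moved arm generates no such error signal, so nothing is learned. The function has no notion of clock time at all, only a count of reaches.

```python
# Toy error-driven model of prism adaptation.
# ASSUMPTIONS: the shift, learning rate, and update rule are illustrative only.

PRISM_SHIFT_CM = 10.0   # hypothetical: how far the prisms displace what you see
LEARNING_RATE = 0.5     # hypothetical: fraction of each miss corrected on the next reach

def simulate_reaches(n_reaches: int, active: bool, shift_cm: float = PRISM_SHIFT_CM):
    """Return (list of reach errors in cm, final internal correction in cm)."""
    correction = 0.0
    errors = []
    for _ in range(n_reaches):
        error = shift_cm - correction   # how far the hand lands from the target
        errors.append(error)
        if active:                      # only self-generated movement yields a usable error signal
            correction += LEARNING_RATE * error
    return errors, correction

active_errors, learned = simulate_reaches(4, active=True)
passive_errors, _ = simulate_reaches(4, active=False)
print("active: ", active_errors)    # errors shrink with each reach
print("passive:", passive_errors)   # errors never shrink

# Taking the glasses off: the world is no longer shifted, but the learned
# correction is still in place, so the first reach misses the other way.
aftereffect = 0.0 - learned
print("first reach after removal:", aftereffect)
```

The negative aftereffect mirrors the mistakes in the opposite direction that Helmholtz's volunteers made when the glasses came off. A fuller model would also let the correction fade or be relearned, which this sketch leaves out.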

Helmholtz’s pioneering experiments captured the imagination of researchers in Innsbruck, Austria, and led to a series of experiments in the 1920s and 1930s. In one, people wore left-right reversing goggles. The adaptation took a lot longer than a simple shift of the visual image to the left or right, but astonishingly, full adaptation did occur. At least one brave soul drove a motorcycle through the streets of Innsbruck while wearing them (then again, perhaps it was the Innsbruck pedestrians witnessing it who were brave).

Pushing the adaptation notion to an extreme, the Innsbruck experiments included a pair of inverting goggles that turned the world upside down. The drive for the brain to adapt to perceptual changes is so powerful that it even corrected for this, eventually turning everything the right way around in the perceiver’s brain. For the first three days of wearing them, the participants made many mistakes. One held a cup upside down as it was about to be filled. Another tried to step over a lamppost, thinking that its top was on the ground. Then came two days of gradual adjustment, and by the fifth day, when participants woke up in the morning and viewed the world through their inverting goggles, everything appeared right-side up. They could navigate and go about their daily activities as though nothing had happened. Various real-life activities, such as watching a movie or a circus performance, going to a tavern, motorcycling, biking, and going on ski tours, were part of the experience of the goggle-wearing participants. When they eventually took the goggles off, the right-side-up world appeared upside down to them. But the readaptation back to normal took only a few minutes. The brain was able to reset itself with amazing speed to the mode of perception it had known for decades.

Why does the original adaptation take so long, and the readaptation, going back, happen so fast? It’s the biological difference between well-trodden neural paths and changes that have built up over only a few days. All learning involves changes in synaptic connections. Things that have been learned and practiced many times produce greater synaptic strength, and so it is easier to return to them.

This may all seem merely theoretical, or something that would only interest geeky perception neuroscientists. But it has helpful clinical uses as well. Consider strokes, something that affects one-quarter of people over age seventy.

Following a stroke, nearly one-third of people experience hemispatial neglect, also known as unilateral neglect. This causes the stroke survivor to ignore one side of their body or visual field and to be unaware that they have a deficit. As you can imagine, it is a leading cause of falls and other injuries. One well-studied way to treat hemispatial neglect is through the use of prismatic glasses that gradually shift the patient’s attention toward the side that is neglected.

The prism adaptation experiments are also relevant for all of us who wear glasses. Ophthalmologists study these experiments to learn about the extent to which the visual system can adapt to distortions. If you find a new pair of strong glasses uncomfortable, your eye doctor may counsel you to just give it a couple of weeks. The stronger the lens, the more it bends light, and so the greater the distortions it will cause in the images around you. With really strong prescriptions, which are often made from high-refractive-index materials to keep the lenses thin, you might even see rainbow-like colors (chromatic aberration) appearing around the edges of your visual field. In time, the brain can usually adapt to these (although it can be rough going until it does).

I experienced prism adaptation myself when I was an undergraduate at MIT. After learning about the experiments in my neuropsychology class, I built a pair of prism glasses one Friday afternoon that shifted the entire world about thirty degrees to the left. Before the experiment, my hands knew where things were in the world, and they simply found them. Once I put on the glasses, all bets were off. I tried to pick up the coffee cup on my dorm room desk and I missed it by twelve inches. On my second attempt, I tried to adjust, but I still missed it. I had to extend my hand and move it slowly to the right until it met the cup. Walking was a special challenge. Trying to navigate the long hallway in my dorm, I kept bumping into the wall. I dared not leave the building. But I stayed committed to the project. I walked around, reaching for things. I ate meals and read my textbooks with the glasses on.

That night, my dreams were full of little episodes of me reaching for things, walking around, and in my dreams I managed to grab hold of everything I reached for. When I woke up in the morning, the world and my interactions with it appeared normal. It felt miraculous. I spent the weekend walking around with these weird-looking glasses, studying, eating in the cafeteria, and successfully getting food from the plate into my mouth.

I took the prism glasses off Monday morning, two and a half days after the experiment began. Now the world appeared shifted thirty degrees to the right, and I bumped into walls again, spilled eggs on my clean shirt, completely missed doorknobs, and so on. My brain had successfully adapted to the original shift and would have to relearn and readapt to this new one. By noon everything was back to normal: the readaptation took much less time than the original adaptation. This is because the adaptation required me to learn something new; the readaptation simply required that I reactivate those very well-established pathways and synaptic connections that already existed.

The prism adaptation experiments are a compelling story of short-term neuroplasticity—the brain adapting to changing conditions and rewiring itself. These experiments also tell a story about sensory integration—the interactions between the motor system and the visual system—not just about the visual system itself.

We are constantly updating our own representation of our body, where it is in space, and how it relates to us and to others—we do this through a combination of touch and vision. One illustration of this is the “rubber hand illusion.” In it, the experimenter hides your hand from you, say, underneath a table, and puts a rubber hand on top of the table oriented in the same way that your own hand would be. The experimenter puts one of his hands on yours, out of sight and underneath the table, and another on the visible rubber hand. Next, the experimenter strokes both hands in synchrony. After just one minute, most people come to believe strongly that the rubber hand is their own. This is because the visual system informs the tactile system, and when there is ambiguity or a conflict, the visual system usually wins. Interestingly, this can happen even if the rubber hand doesn’t look especially realistic, or if it has a different skin tone or complexion—your visual system works in tandem with your tactile system to override the knowledge that you have about which one really is your hand. The illusion doesn’t occur if you simply watch the rubber hand without having your own hand surreptitiously stroked—the synchronous tactile-visual input is necessary. The illusion can also work with other parts of your body. Astonishingly, it works with your face. In the enfacement illusion, you watch a video of someone else’s face being stroked with a Q-tip in synchrony with an experimenter stroking yours, and you come to feel that the other face belongs to you, even if it doesn’t really look that much like you! What all this means is that your very sense of self is constructed, that it is built out of perceptual inputs and is malleable.

The power of vision to overcome other senses is captured in a Warner Bros. cartoon short directed by Chuck Jones called “Mouse Wreckers.” Two mice, living behind the baseboards of a large human house, feel constrained by the presence of the house cat and plot to drive him crazy so that he’ll move out. In one sequence, they nail all of the furniture in the living room to the ceiling and remove the ceiling lamp and attach it to the floor. When the cat wakes up from a nap, he looks around and sees the ceiling lamp next to him. He looks up and sees the couch, the coffee table, the easy chair, and so on and concludes that he must be on the ceiling and that the floor—where he belongs—is where all the furniture is. Panicked, he tries to jump up toward the ceiling, where he thinks he’ll be safe. But his claws are unable to hold on as gravity pulls him back down. He keeps making leaps toward the ceiling (which he is certain is the floor) and keeps falling back down. His visual system has turned out to be an unreliable indicator of reality, but he can’t override it. The brilliance of the cartoon lies, in part, in the fact that it is based on this well-established neuroscientific principle.

Airplane pilots spend dozens of hours learning not to place too much emphasis on their visual system, or, for that matter, on their vestibular system, and instead to “trust the gauges.” Our brains and bodies have not had time to evolve systems for accurately interpreting the sensations that come from being in flight, and so they can be unreliable. Many fatal accidents have resulted from pilots ignoring their gauges and instead relying on their perceptions. This is what happened to John F. Kennedy Jr. Flying after dark in hazy weather, he may have been unable to distinguish the sky from the water he was flying above, and he may have had the bodily and visual sensation that he was upside down. His gauges would have told him whether this was true or not, but he may have thought that the gauges were malfunctioning; this happens occasionally, but less often than our senses mislead us. It seems he lost track of which way was up and flew into the water, thinking he was ascending into the sky. Some believe this also explains aviation accidents in the Bermuda Triangle: pilots become disoriented with no landmarks, they confuse the air and the sea, and, ignoring their gauges while trusting their (unreliable) senses, they fly their planes right into the deep.

Exploratory behaviors are the key to building sensory experience and neuroplasticity. Young animals, children included, fail to develop normal behavior if they are deprived of self-produced movement. This was demonstrated in a classic experiment with kittens. One young kitten was able to move around its environment more or less freely. A littermate was placed in a cart that replicated the movements of the first kitten. If the first kitten turned left, so did the second kitten’s cart. If the first kitten jumped, so did the second kitten, via its cart. The two kittens received essentially the same visual stimulation, but only one was actively exploring its environment. The passive kitten did not develop normal behaviors. It didn’t blink at an approaching object. It didn’t extend its paws to ward off a collision when carried gently downward toward the floor, and it didn’t avoid a visual cliff. (A visual cliff is an apparent drop of several feet underneath a safe piece of thick glass; in other words, there is no actual danger, but higher mammals whose visual and motor systems have developed normally will avoid it. This is why glass-bottomed walkways, although popular in some cities, make a lot of people queasy or cause their hearts to flutter—it’s a deeply embedded reaction.) Those toys in the crib for your grandchild are most effective if the child can reach for and grasp them, not just stare at them passively. And if their hands and legs can actually cause something to move, that is even better training for them. Older adults who want to maintain their sense of balance and orientation must not just observe the environment but also move around in it.

Neuroplastic adjustments occur throughout the life span. As babies grow into adulthood, the brain needs to adapt to the way that sensory information changes with the increasing separation between the two eyes and between the two ears, and with the increasing size of the tongue. Your tactile system has to adapt to changes in the distance between parts of your growing body. The growth of bones and muscles requires a gradual modification of the signals your brain sends to initiate smooth, coordinated movements—these are all forms of compensatory neuroplasticity.

Our touch sense can remap very quickly. After pain is experienced in a localized part of the skin, such as from an injection or pinprick, the skin surrounding that spot, out to about five-eighths of an inch, can immediately become hypersensitive. This kind of neuroplasticity may protect us from harm if nerve cells are damaged by an injury, by allowing us to be more sensitive to pain surrounding the initial site. That feeling of pain can motivate us to withdraw from a dangerous environment or remove thorns and splinters and other foreign objects from our skin.

When a limb or digit or other body part is amputated, the nerve endings in it are severed. But the other end of the nerve fibers still connects to the brain. As a result, many people report feeling sensations in a body part that is no longer there; indeed, phantom limb pain affects 90 percent of amputees. Using a visual-touch therapeutic approach that is conceptually similar to the rubber hand illusion, therapists can promote neural remapping by touching parts of the patient’s body, stump, or surrounding area while the patient watches and experiences touch sensations that were previously associated with the missing limb. The synchronized seeing and feeling speeds up the process. Similar techniques help in treating referred pain, pain that originates in one set of sensory receptors but feels as though it’s coming from another.

A different approach to phantom limb pain was pioneered by Vilayanur Ramachandran of UC San Diego. People who suffer from phantom limb pain have often experienced paralysis or pain in the limb before it was amputated, and they complain of their (phantom) limb having a cramp or being stuck in an uncomfortable or clenched position. Ramachandran places a mirror upright along the patient’s midline, with the intact limb on one side and the phantom limb on the other, and instructs the patient to look at the side of the mirror with the intact limb. The patient now sees two limbs, one a mirror image of the other. The patient is then asked to move both of their limbs symmetrically. Of course, they can’t actually move the phantom limb, but as they look into the mirror, they see two limbs moving—their intact limb and its reflection. The brain is fooled into thinking it is seeing the phantom limb in motion, and this causes a welcome remapping of the circuits that were causing the discomfort, cramp, or clenching, and in many cases, their pain is relieved.

Age-Related Dysfunctions

Vision

Perhaps the most well-known and reliable marker of aging is declining vision, specifically, difficulty reading up close. Starting around age forty, people begin showing up at the reading glasses section of the nearby drugstore or making appointments with an optometrist.

My first recollection of adult aging was exactly on my fiftieth birthday. It was late December, and I had awakened early to carpe the diem. It was still dark outside—a product of the short days of a Montreal winter—and I held the morning newspaper out in front of me at arm’s length so that my eyes could focus on the letters, just as I had done hundreds of mornings before. But on this morning, my arms seemed to be shorter, the letters too small and blurry for me to read. My first thought was that the Times had changed their font. I rooted through the recycling basket to find yesterday’s paper and I had just as much trouble with that. I tried holding the newspaper farther away until the letters came into focus, but my arms were about an inch or two too short. It felt like somehow my arms had shrunk during the night because holding the paper at arm’s length had been working for me so well for the last year or so.

This change in vision is called presbyopia. It occurs because of age-related changes in proteins in the lens, making the lens harder and less elastic over time. Age-related changes also take place in the muscle fibers surrounding the lens. With less elasticity, the eye has a harder time focusing up close, which takes more muscle tension than focusing far away.

You would think that if it’s just a muscle issue, you might be able to do exercises to prevent it or slow it down, but there is no evidence that exercises help, nor is there any evidence for ways to prevent the lens from hardening. Because proteins are at the root of this, and DNA encodes the instructions for making proteins, genetic therapies that address these problems may arrive in our lifetimes. But for now, reading glasses or surgical correction of presbyopia are in the future for most of us.

Most of us will experience something like this change in our visual system. Because of adaptation, we don’t notice the gradual transition from good eyesight to weakening eyesight. We hold things farther away, we install higher-output light bulbs, we increase the font size for text messages on our phones. Our brain adapts continuously, using its powerful pattern-recognition systems. The signal our retina sends to our brain is blurred, and from the raw input stream alone we might not be able to tell a lowercase c from a lowercase o, but context is everything. One combination of letters forms a word (look) and one does not (lcck). And sometimes we need a larger context, evaluating not just the single word but the sentence and semantic context surrounding it: Please lock the door. Even if the data reaching the brain for the second word is ambiguous, or appears as lcck, your brain sorts it out automatically and without your conscious awareness.

This automatic correction of the input stream is a form of perceptual completion, something the brain has had a chance to practice throughout our entire lives. As we age, the balance between how much our brains rely on the input signal and how much they rely on perceptual inference shifts toward inference with every decade after forty. Our magnificent pattern-matching brains do more and more filling in, not just because our senses require them to, but because they’ve had so many more experiences than young brains that it is simply more efficient to make inferences than to decode every little perceptual detail. Have you ever read a word and then realized a few moments later that you had read it wrong? You go back and look at the word, and you could’ve sworn you saw one word, but now you see another. Your pattern-matching brain simply made an error and sent a realistic, vivid representation of the wrong word up to your conscious awareness.

This happened to me just the other day. I was planning a trip to New York, and my hotel had given me a bunch of vouchers for free meals and attractions on Coney Island. That day at lunchtime, a friend served bratwurst and put out several jars of condiments, including one of a new mustard I’d never seen. I looked at the jar, clearly and assuredly read Coney Island, and decided to try some. It was delicious, so I looked at the jar more carefully to write down the brand, and only then did I realize that the words I had seen were in fact Honey Mustard. My brain had Coney Island on its mind, so Honey became Coney, and Mustard had just enough letters in common with Island for my brain to fill in and replace what was actually there. Here was pattern matching and perceptual completion run amok: The words Coney and Honey differ by only a single letter, and both Island and Mustard have the form s-blank-a-blank-d. (Plus, to my aging visual system, which has come to rely on statistical inferencing, the stylized r probably looked like an n.)

Perceptual completion is a kind of categorization, a cognitively driven effect called top-down processing, as opposed to purely stimulus-driven perception, which is called bottom-up processing. When we’re young, or when we’re learning something new, we have few preconceptions, and so we see things more as they really are. The process of maturing, and of aging, involves categorizing things. We tend to categorize more as we get older because in most cases it is mentally efficient to do so. A brand-new study shows that this kind of automatic categorization depends to a large degree on how prevalent category members are. Like an efficient filing clerk, we tend to combine things into larger categories so that we don’t end up with a bunch of mental file folders with only a single item in them.

If you’re shown a bunch of blue dots and a bunch of purple dots in equal numbers and asked to label them “blue” or “purple,” you’ll have no trouble doing this. But if I decrease the total number of blue dots present, you’ll begin to classify some of the purple dots as blue—the rarity of blue dots causes you to expand your category.

This doesn’t happen just with colors, but with emotional stimuli as well. Asked to categorize faces as threatening or benign, you’ll expand your definition of threatening if the proportion of threatening faces decreases below a certain level. The same is true with more abstract judgments, such as judging whether a certain behavior is ethical: In the absence of clearly unethical behaviors, behaviors that were seen as acceptable before now loom as unethical. This has large societal implications. As one study’s authors explain, “When violent crimes become less prevalent, a police officer’s concept of ‘assault’ should not expand to include jay-walking. What counts as a ripe fruit should depend on the other fruits one can see, but what counts as a felony, a field goal, or a tumor should not, and when these things are absent, police officers, referees, and radiologists should not expand their concepts and find them anyway. . . . Although modern societies have made extraordinary progress in addressing a wide range of social problems, from poverty and illiteracy to violence and infant mortality, the majority of people believe that the world is getting worse. The fact that concepts grow larger when their instances grow smaller may be one source of that pessimism.”

It’s certainly easy to fall into pessimism if you’re losing the sensory systems you’ve relied on most of your life. Another common visual problem is cataracts, a clouding of the lens of one or both eyes. You can get them at any age, but they more often begin to appear after forty, when they tend to be small and don’t affect vision much. By age sixty they can begin to cause blurred vision, and by age eighty, more than half of Americans will have them. Normally, light passes through the clear lens and is focused onto the retina at the back of the eye. A cataract clouds that path, producing a blurry image. The lens is mostly made up of water and (you guessed it) proteins. As we age, some of those proteins clump together and cloud a region of the lens.

Remember that evolution depends on reproduction to transmit survival advantages across generations. Consequently, evolution does little to produce adaptive improvements for conditions that arise after the normal reproductive years. Hence, there has been little evolutionary pressure to favor people who don’t get cataracts or presbyopia, and so these conditions are ever present in aging populations.

There is an emerging but still small body of evidence linking cataracts to smoking and diabetes. Healthy practices going back to your teen years can influence this outcome years later. The best cataract protection is to wear sunglasses outdoors, starting at a young age. Cataract surgery replaces your cloudy, protein-clumpy lens with an artificial one. It is one of the most commonly performed operations, and one of the safest when done by a qualified surgeon at a good facility. Starting at age sixty, you should have your eyes examined every two years for early detection of cataracts, macular degeneration, glaucoma, and other diseases of the eye. Early detection can save your ability to see.

Hearing

Perhaps the next most common sensory loss is in hearing (presbycusis), and most of us will end up needing hearing aids at some point. Presbyopia and presbycusis both come from the Greek root presby, which means old. (Presbyterians are so called because their church is governed by its elders.) As with vision, the loss tends to be gradual. There are several causes of hearing loss, and scientists haven’t fully worked out the story yet. In part, hair cells in the ear stiffen and no longer send the necessary electrical signals up toward the brain.

Just as the sun’s ultraviolet rays can damage the lens of the eye, environmental factors can damage the ear: Noise-induced hearing loss, from sudden loud sounds or prolonged exposure to loud noise in the workplace or at rock concerts, can damage the hair cells of the ear irreversibly. The best preventive is to wear earplugs when you’re going to be around loud noise. High blood pressure, diabetes, and chemotherapy can also irreparably damage the hair cells. Another possible cause is age-related deterioration of mitochondrial DNA in the different structures of the cochlea (inner ear). Oxidative stress is thought to be a major cause of the mitochondrial DNA mutations behind this deterioration. You’ve probably heard of antioxidants. An antioxidant is a molecule that can donate an electron to a free radical, thereby neutralizing it; oxidative stress occurs when there is an imbalance between antioxidants and free radicals in your body, compromising the body’s ability to detoxify. This imbalance can damage lipids, proteins, and DNA and can trigger a number of diseases. Foods that are high in antioxidants, such as blueberries, might be a promising way to forestall or correct this, but it’s too early to tell; evidence for the effectiveness of antioxidant foods is mixed. Still, the Mayo Clinic and other experts recommend making them a regular part of your diet while the evidence comes in, not just for fending off hearing loss, but for a range of maladies including cancer and Alzheimer’s (more on that later).

Hearing loss affects one-third of people in the United States between the ages of sixty-five and seventy-four, and (as I mentioned earlier) nearly half of people over seventy-five. Hearing loss in someone who was born with hearing is far more socially isolating than vision loss, because it gets to the very core of how we communicate with one another. Even when deaf individuals learn sign language, they still experience social isolation from the hearing community, most of whom do not know sign language. From the brain standpoint, once input from the ear has diminished, entire populations of neurons are left without external stimulation. What do you suppose they do? They make up their own stimulation, or resort to random firing, resulting in auditory hallucinations, some of which can be musical. Loss of input to the visual system can result in visual hallucinations. This happens often enough that there’s a name for it: Charles Bonnet syndrome.

The auditory hallucinations caused by hearing loss often manifest as tinnitus, a ringing in the ears, which affects one out of five adults, and which can be intermittent or chronic. Most patients with tinnitus do not find it severe, but a great many find it distracting, annoying, or intrusive, interfering with sleep, work, and leisure activities. Many experience emotional distress because of it. Chronic tinnitus almost certainly leads to a reduction in quality of life; it’s difficult to experience the peacefulness and serenity of a quiet environment when you’ve got a constant ringing in your ears. As one researcher said, “The notion of peace and quiet is no longer an option for many tinnitus patients.”

Tinnitus appears to originate in the brain, not in the ear, although it is experienced as coming from the ear. It has been compared to phantom limb pain in that it results from a loss of input to a cortical area. The newest hypothesis is that it results from homeostatic neural plasticity: Neurons in the auditory cortex that have grown accustomed, across an entire lifetime, to receiving input at a wide range of frequencies suddenly find themselves with no input because of peripheral, age-related hearing loss. In order to restore the level of activity they expect (homeostasis), these neurons start amplifying spontaneous and random activity, causing the ringing in the ears. An experimental therapy for tinnitus based on this idea shows promise. Neurons in the auditory system fire in response to very specific frequencies. Because the ringing typically occurs at a specific and unchanging frequency, targeting those neurons selectively can bring relief. An adjustable noise machine or even a hearing aid can be programmed to provide stimulation at the precise frequency of the tinnitus, giving those orphaned neurons some input, which causes them to calm down and, voilà, the tinnitus can fade.

Hearing aid technology has benefited enormously from the digital electronics revolution. As recently as a generation ago, hearing aids were little more than an updated version of those huge ear horns that people used to amplify sound in the 1700s. Modern ones can be programmed by an audiologist to emphasize some frequencies more than others and to focus on sounds coming from a particular direction, which, ironically, was something the old ear horns could do well and the “improved” analog hearing aids could not. (If you wanted to emphasize different frequencies, you’d choose a differently sized and shaped horn; if you wanted to hear sounds from a particular direction you’d get one with tubing turned toward that direction—front, behind, up, down.) There are many different brands of hearing aids, and they vary a great deal in cost, but the most important factor in how helpful one will be is the quality of the audiologist doing the tuning and personal adjustments—a really good audiologist with a mediocre hearing aid is better than the reverse.

For a hearing aid to work, however, you still need viable hair cells. Because hair cells are frequency selective (each fires off an electrical signal only in response to the frequencies it is tuned to), you can have hearing loss that is confined to particular frequencies. This is where the frequency tuning of digital hearing aids is most helpful.

But what if your hair cells are completely shot? A relatively new device, the cochlear implant, can work for many people who become profoundly deaf. A microphone, similar to that in a hearing aid, picks up sounds from the environment and is usually mounted behind your ear. It connects to a device that is surgically implanted into the cochlea, a part of the inner ear. A cochlear implant can allow truly deaf people to hear, but current technology does not restore hearing anywhere near normal levels. This is because your cochlea normally receives information on thousands of auditory channels, which span the frequency range of human hearing: the low sounds of thunder or a bass violin, the high sounds of cicadas in the summer or cymbals in a drum set, and everything in between. All those channels give us precise frequency resolution, lending music, speech, laughter, and environmental noises their distinctive acoustic and psychological colors. In contrast, a cochlear implant typically has only twelve to twenty-two channels. If properly configured, it can provide a scratchy, noisy signal that allows you to understand speech, but music and other sounds don’t come through well. New bio-nanotechnologies are likely to improve this in the years to come.

Touch

Our sense of touch also declines with age. Decreased blood flow to the extremities—hands and feet—can impair the touch receptors there; older adults may not be able to feel that the shower is slippery or be able to differentiate between hot and cold water. With age, the touch sensors in the pads of the fingers deteriorate, producing a loss of sensitivity. We fumble with things. Arthritis makes it painful to move our fingers and toes. As Atul Gawande writes, “Loss of motor neurons in the cortex leads to losses of dexterity. Handwriting degrades. Hand speed and vibration sense decline. Using a standard mobile phone, with its tiny buttons and touch screen display, becomes increasingly unmanageable.”

Patches of skin may become numb as our touch sensors wear out or myelination decreases. There’s also a common affliction of old age that is going to sound made-up but is real: a patch of skin on your back, typically just out of reach, that itches intermittently, and sometimes incessantly. And scratching it provides no relief. None at all! Because the condition arises from damage to certain nerve pathways, those same damaged pathways block the relief that scratching usually provides. The condition is called notalgia paresthetica. There is no known cure, and there are few treatments. An anti-inflammatory gel, diclofenac (brand name Voltaren), can provide relief to some patients, and there are promising results with creams and oils containing CBD (cannabidiol), a compound derived from cannabis.

Taste and Smell

We are more familiar with disorders of the visual and auditory systems than with disorders of the other senses, but taste and smell can also be impacted by aging. Olfactory dysfunctions can show up in three different ways: a reduced sense of smell, called hyposmia; a complete loss of the ability to smell, called anosmia; and the perception of odors that aren’t actually present, called phantosmia. (If these foreign-sounding words bother you, just look at the prefixes to remember what they mean: Hypo- means too little of something, a- means without something, and phantom-, well, you know that means something that isn’t there.) Phantosmia often shows up as the illusory experience of something smelling burned, spoiled, rotten, or otherwise unpleasant.

Decreased sense of smell is very common among older adults, reported by half of those between the ages of sixty-five and eighty and three-quarters of those over eighty. It’s more than an inconvenience. Your sense of smell isn’t there merely so that you can smell the pine needles in the forest or the perfume of a loved one. It is critical for perceiving health- and life-threatening situations such as dangerous fumes, polluted environments, and rotten or decayed food, and it serves as an early-warning system for fire. Also important: If you can’t smell, you can’t really taste anymore, either. Without smell, an onion is easily confused with an apple (with your eyes closed), and you’ve lost an important mechanism for keeping rotten foods out of your body. A disproportionate number of older adults die in accidental natural gas poisonings and petroleum explosions every year because they cannot detect these odors. The risk of dying of any cause is 36 percent higher for older adults with an olfactory impairment.

Women generally have a more refined sense of smell than men and can detect odors that men can’t, thanks to having a larger concentration of olfactory neurons. In order for you to smell something, chemicals from the object you’re smelling need to enter the nasal cavity, through either the nostrils or the mouth, where they come in contact with a layer of mucus covering a skin-like layer of cells that house our smell receptors. There are more than 350 different receptor proteins in the human olfactory system, and in combination with one another, they allow us to distinguish a trillion different smells.

These cells can become damaged through normal use, and ordinarily they’re repaired, just like damaged skin cells. With aging, however, repair becomes more difficult or impossible, due to cumulative damage from pollution and from viral and bacterial infections. Another factor limiting repair is age-related telomere shortening. Telomeres are protective caps at the ends of chromosomes that become shorter each time a cell divides. Antiaging research at the cutting edge is seeking a way to inhibit the shortening of telomeres, and we may see that in our lifetimes.

Another factor in the way the world smells to us is the neurotransmitter acetylcholine. Like all neurotransmitters, acetylcholine is involved in several functions in the brain and body; it is too simplistic to think that each brain chemical controls a single behavior, emotion, or response. Acetylcholine is part of the brain’s cholinergic system, and it plays a role in the consolidation of memories during sleep. It is also intimately involved in the sense of smell, facilitating attention, learning, and memory for odors. As with many neurochemicals and hormones, acetylcholine production diminishes with old age. In some cases, an olfactory deficit signals an age-related disease, such as Alzheimer’s, Parkinson’s, or Korsakoff’s syndrome, as well as the non-age-related diseases ALS (amyotrophic lateral sclerosis) and Down syndrome; all of these appear to be associated with damage to the cholinergic system. Drugs that enhance cholinergic activity, such as rivastigmine, may help relieve symptoms in older adults, whether the olfactory deficit is related to a disease or is simply an independent disorder.

It’s tempting to think of taste only for the pleasure it brings—a good meal, a fine wine, your favorite dessert, your loved one’s skin. But taste is an important sense for other reasons. Taste deficits alter food choices and lead to poor nutrition, weight loss, and reductions in immune-system function when we don’t ingest the vitamins and minerals we need. In addition, taste helps us prepare the body to digest food by triggering the production of saliva, alongside gastric, pancreatic, and intestinal fluids. The pleasurable feelings from eating tasty food become more important in old age when other sources of sensory gratification—like physical contact—may have become compromised or are less frequent.

You may have learned in school that we can detect four tastes: sour, salty, sweet, and bitter. Taste scientists (yes, there is such a thing) have identified a fifth taste, umami, which signals the presence of an amino acid, glutamic acid, and is typically described as meaty or brothy. We encounter it in meats, fish, mushrooms, and soy sauce, and it is also present in breast milk in about the same proportion as in soup broths. Yet even this new, fifth taste doesn’t complete the picture. Our taste sense allows us to detect the fat content of foods, in particular emulsified oils; this is what gives ice cream with a high butterfat content its compelling “mouthfeel.” We can also detect chalky tastes, such as those found in the calcium salts that are a principal ingredient of antacid tablets, and metallic tastes, such as we encounter in foods rich in iron or magnesium. These varied taste sensations help us to maintain a balanced diet of essential nutrients.

Many older adults complain that food lacks flavor. This is usually due to olfactory deficits—smell works in tandem with taste to convey the flavor of food and drink, and sensors inside the cheeks feed into the brain’s olfactory centers. Other causes of taste deficit are a history of upper respiratory infections, head injury, drug use, and an age-related reduction in saliva production. All of these factors can cause loss of appetite, loss of pleasure from eating, malnutrition, and even depression.

The loss of flavor can result from normal age-related declines in the sensory receptors themselves, but in many cases it results from diseases (especially liver disease and cancers) or medications. The list of medications that can alter taste and smell reads like the medicine cabinet of a typical seventy-five-year-old. They include certain lipid-lowering drugs, antihistamines, antibiotics, anti-inflammatories, asthma medications, and antidepressants. Chemotherapy, general anesthesia, and other medical treatments can also cause permanent damage to the chemical senses of taste and smell.

The most noticeable problem in taste perception affecting older adults is an upward change in thresholds: It takes more of a given flavor to be perceived as the same amount as before. For a typical older individual, with one or more medical conditions and taking three prescription medications, the number of flavor molecules required to detect a taste increases markedly, by an amount that depends on the flavor. If you’re typical, you’ll need a whopping twelve times the amount of salt just to detect that salt is present, compared to when you were in your fifties. For bitter tastes, such as quinine, you’ll need seven times the amount; for the brothy-meaty flavor of umami, five times; and for sweeteners, around three times. (Maybe this is why Grandma always has candies around to offer the grandkids.)

Another aspect of taste declines with age: the change threshold, or the amount of a flavor you have to add to an existing one before you detect that it has changed. You’re probably more familiar with this idea from the auditory or visual domains. Suppose you’re at home and you notice your refrigerator making a humming noise. The hum has to be loud enough for you to notice it at all, and that level is called the detection threshold (or absolute threshold). A separate question that concerns sensory neuroscientists is how much louder or softer the hum would have to become before you noticed the change. Of course, this depends on factors such as whether you’re paying close attention, but these thresholds can be measured reliably. Some playful psychologists many years ago named this threshold the JND, for just noticeable difference.

We study JNDs for all kinds of things. How much would I have to change the intensity, or brightness, of the lighting in a room before you noticed it had increased or decreased? In a room brightly lit by one hundred bulbs, each producing fifteen hundred lumens, a change of a single photon will go unnoticed. But in a completely dark room, with your eyes adapted to the darkness, you can notice a flash of just a handful of photons. Or imagine that you’re carrying a shopping bag with groceries in it. How much weight do I have to add or take away before you notice? If I add a single grain of rice, you will not notice. If I add a one-pound box of rice, you probably will, but it depends on how much was already in the bag: We notice an increase from one pound to two pounds, but not from fifty pounds to fifty-one pounds.

With taste among older individuals, the JNDs change in just the direction you might predict: It takes a larger change before we notice any change at all. That is, JNDs for taste increase in older individuals. There are also age-related changes in sensitivity across different areas of the tongue and the mouth. A teenager may experience a burst of flavor as soon as they put a SweeTart or Starburst candy in their mouth. An older adult may have to swirl it around in their mouth a bit. All of this reduces older adults’ ability to identify foods on the basis of taste alone; the sight, smell, and sound of foods become more important. Aging research scientist Susan Schiffman (age seventy-nine) advises that “switching among different foods on the plate at a meal reduces sensory adaptation or fatigue. . . . Providing meals with a variety of tastes and flavors increases the likelihood that at least one item on the plate will be appealing.” Now I understand why my mentor Irv Rock at age seventy-three loved to order variety plates when we went out for lunch. The thali at the Indian and Nepalese restaurants we frequented in Berkeley, or the mezze platter at Middle Eastern restaurants, gave him a way to keep his taste and smell receptors stimulated. Or maybe he was just high on Factor V and liked to try new things.

When I turned sixty, I began to notice that the foods I was eating weren’t as flavorful as I remembered them being. Was this a memory trick, were modern commercially farmed foods less flavorful, or had my taste buds changed? In the spring of that year I had the opportunity to go to Mexico to meet with former president Vicente Fox and discuss his strategies and advice for successful aging. While at Centro Fox, near León, in central Mexico, I enjoyed three of the most delicious, flavorful meals of my life. There was nothing wrong with my taste buds; it was the food I was eating! Like many people over sixty, I had been put on a diet by my doctor: low in calories, salt, red meat, and carbohydrates, with no bread or pasta and zero refined sugar. I had given up my morning breakfast of tasty granola, bacon, and omelets for oatmeal and egg whites. In short, I was eating a bland diet, not by design, but as a by-product of trying to eat healthy. The fix? When I got home, I started putting Cholula and Tabasco sauce on my egg whites and spicing up my morning oatmeal with cinnamon and nutmeg, and my zest for food came back without my gaining any weight or raising my cholesterol numbers.

Unfortunately, there are no prosthetics for olfactory and gustatory deficits equivalent to hearing aids and glasses. To be safe, people with olfactory impairment are advised to use a gas detection device with a visual signal so that dangerous fumes won’t go undetected. The odors of food gone bad can also go unnoticed, so some culinary alert system may be needed, perhaps as simple as an understanding caregiver.

Disgust is a complex emotion that arises from thoughts or perceptions that we find repellent: The taste or smell of rotten food can cause disgust, as can a betrayal by a loved one or a public figure who violates our trust. Because our reactions to certain smells and tastes are so immediate and so visceral, it’s tempting to think that there is some molecule or quality in the object that is disgusting, but this would be wrong. The brain has to interpret it as disgusting, and not all brains react the same way. Some of this is learned: If you ate a bad cantaloupe that made you sick to your stomach, you may find all cantaloupes disgusting for quite some time. But, of course, disgust is in the brain of the beholder. Dogs, for example, seem to show no disgust; they will eat or roll in practically anything. There is a cultural overlay as well. Americans find it disgusting that people in other cultures eat grasshoppers, ants, dogs, and monkeys, and I’m sure there are a great many people elsewhere who find our diet of cheeseburgers and potato chips unimaginable.

Perception and Complex Environments

We evolved in complex, varied, natural environments. Some scientists have come to appreciate the wisdom that Joni Mitchell offered in her song “Woodstock”: “And we’ve got to get ourselves back to the garden.” Plants, dirt, sky, and wildlife offer stimulation to our perceptual systems. Perhaps the visual input is the first thing you think of, but there are sounds and smells as well; the taste of wet air before a rain; the touch of tree bark or rocks underneath our feet. Because everything we know originates with our senses, keeping our senses stimulated is critical to keeping our brains active, alert, and healthy. Neurologist Scott Grafton is a big believer in the healing power of the outdoors. “Take old people out of a complex environment and they age quicker,” he notes. “The brain doesn’t just need physical activity to remain vital, but complex physical activity—the brain needs it to stay healthy and engaged.” Something as simple as walking in a new environment provides this critical brain input. Your feet have to adjust to different surfaces and angles, and your ankles need to move in conjunction with your feet. Your eyes are scanning the surroundings for new things as you take in information from all the other senses. Many older adults have an urge to travel, and this may originate in an adaptive, biological drive that will serve to keep them healthy for longer, especially if the travel involves walking tours of new places. Those who lack the mobility or finances for exotic travel can benefit from visiting a local park, forest, or garden, or even a dense city street with all of its bustling activity. These sensory inputs cause otherwise dormant and complacent neurons to perk up, to fire, and to make new connections. Neuroplasticity is what keeps us young, and it is only a walk in the park away.