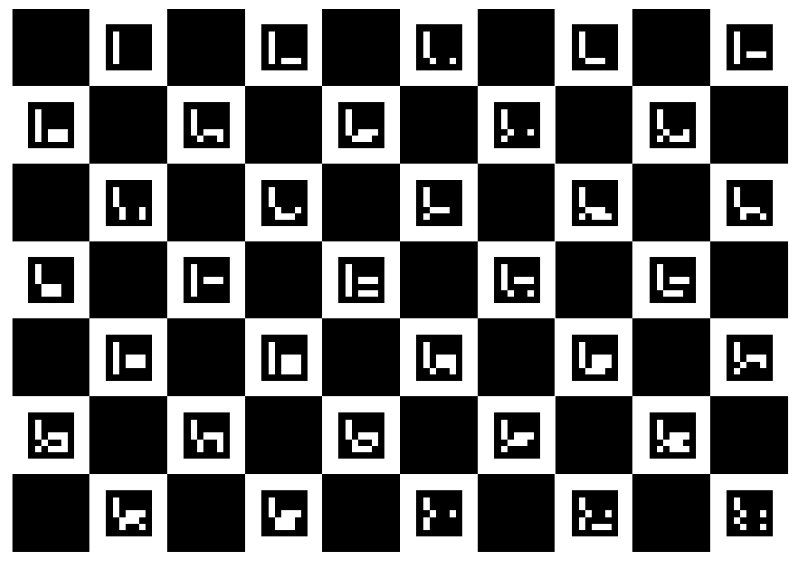

To perform camera calibration as we discussed earlier, we must obtain corresponding 2D-3D point pairings. With ArUco marker detection, this task is made simple. ArUco provides a tool to create a calibration board, a grid of squares and AR markers, in which all the parameters are known: number, size, and position of markers. We can print such a board with our home or office printer, with the image for printing supplied by the ArUco API:

Ptr<aruco::Dictionary> dict = aruco::Dictionary::get(aruco::DICT_ARUCO_ORIGINAL);

Ptr<aruco::GridBoard> board = aruco::GridBoard::create(

10 /* N markers x */,

7 /* M markers y */,

14.0f /* marker width (mm) */,

9.2f /* marker separation (mm) */,

dict);

Mat boardImage;

board->draw({1000, 700}, boardImage, 25); // an image of 1000x700 pixels

cv::imwrite("ArucoBoard.png", boardImage);

Here is an example of such a board image, a result of the preceding code:

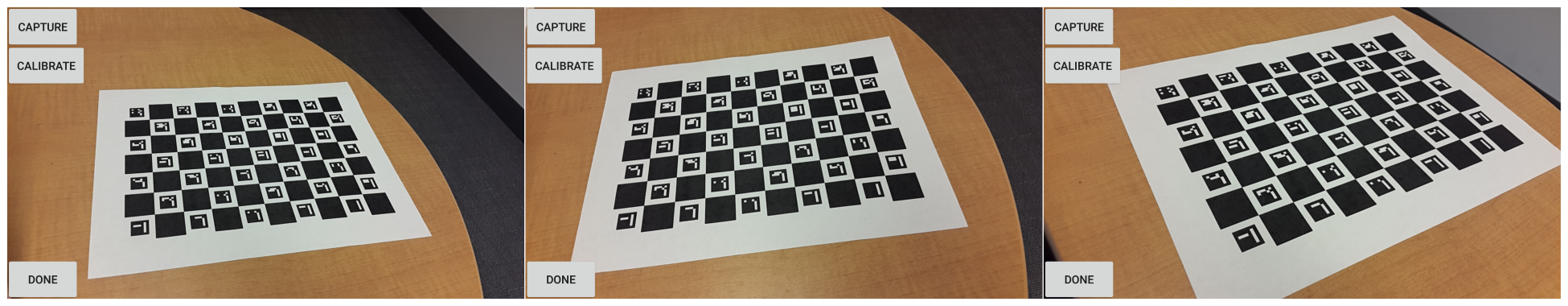

We need to obtain multiple views of the board by moving either the camera or the board. It is handy to paste the board on a piece of rigid cardboard or plastic to keep the paper flat while moving the board, or keep it flat on a table while moving the camera around it. We can implement a very simple Android UI for capturing the images with just three buttons, CAPTURE, CALIBRATE, and DONE:

The CAPTURE button simply grabs the grayscale image buffer, as we saw earlier, and calls a native C++ function to detect the ArUco markers and save them to memory:

extern "C"

JNIEXPORT jint JNICALL

Java_com_packt_masteringopencv4_opencvarucoar_CalibrationActivity_addCalibration8UImage(

JNIEnv *env,

jclass type,

jbyteArray data_, // java: byte[] , a 8 uchar grayscale image buffer

jint w,

jint h)

{

jbyte *data = env->GetByteArrayElements(data_, NULL);

Mat grayImage(h, w, CV_8UC1, data);

vector< int > ids;

vector< vector< Point2f > > corners, rejected;

// detect markers

aruco::detectMarkers(grayImage, dict, corners, ids, params, rejected);

__android_log_print(ANDROID_LOG_DEBUG, LOGTAG, "found %d markers", ids.size());

allCorners.push_back(corners);

allIds.push_back(ids);

allImgs.push_back(grayImage.clone());

imgSize = grayImage.size();

__android_log_print(ANDROID_LOG_DEBUG, LOGTAG, "%d captures", allImgs.size());

env->ReleaseByteArrayElements(data_, data, 0);

return allImgs.size(); // return the number of captured images so far

}

Here is an example of ArUco marker boards detected using the previous function. A visualization of the detected markers can be achieved with cv::aruco::drawDetectedMarkers. Points from the markers that were detected properly will be used for calibration:

After obtaining enough images (around 10 images from various viewpoints is usually sufficient), the CALIBRATE button calls another native function that runs the aruco::calibrateCameraAruco function, with the saved arrays of point correspondences as follows:

extern "C"

JNIEXPORT void JNICALL

Java_com_packt_masteringopencv4_opencvarucoar_CalibrationActivity_doCalibration(

JNIEnv *env,

jclass type)

{

vector< Mat > rvecs, tvecs;

cameraMatrix = Mat::eye(3, 3, CV_64F);

cameraMatrix.at< double >(0, 0) = 1.0;

// prepare data for calibration: put all marker points in a single array

vector< vector< Point2f > > allCornersConcatenated;

vector< int > allIdsConcatenated;

vector< int > markerCounterPerFrame;

markerCounterPerFrame.reserve(allCorners.size());

for (unsigned int i = 0; i < allCorners.size(); i++) {

markerCounterPerFrame.push_back((int)allCorners[i].size());

for (unsigned int j = 0; j < allCorners[i].size(); j++) {

allCornersConcatenated.push_back(allCorners[i][j]);

allIdsConcatenated.push_back(allIds[i][j]);

}

}

// calibrate camera using aruco markers

double arucoRepErr;

arucoRepErr = aruco::calibrateCameraAruco(allCornersConcatenated,

allIdsConcatenated,

markerCounterPerFrame,

board, imgSize, cameraMatrix,

distCoeffs, rvecs, tvecs, CALIB_FIX_ASPECT_RATIO);

__android_log_print(ANDROID_LOG_DEBUG, LOGTAG, "reprojection err: %.3f", arucoRepErr);

stringstream ss;

ss << cameraMatrix << endl << distCoeffs;

__android_log_print(ANDROID_LOG_DEBUG, LOGTAG, "calibration: %s", ss.str().c_str());

// save the calibration to file

cv::FileStorage fs("/sdcard/calibration.yml", FileStorage::WRITE);

fs.write("cameraMatrix", cameraMatrix);

fs.write("distCoeffs", distCoeffs);

fs.release();

}

The DONE button will advance the application to AR mode, where the calibration values are used for pose estimation.