For eye detection, it is important to crop the input image so that it shows only the approximate eye region: first run face detection, then crop to a small rectangle where the left eye should be (if you are using the left eye detector), and do the same with a rectangle on the right side for the right eye detector.

If you run eye detection on a whole face or a whole photo, it will be much slower and less reliable. Different eye detectors are better suited to different regions of the face; for example, the haarcascade_eye.xml detector works best if it only searches in a very tight region around the actual eye, whereas the haarcascade_mcs_lefteye.xml and haarcascade_lefteye_2splits.xml detectors work best when there is a large region around the eye.

The following table lists some good search regions of the face for different eye detectors (when using the LBP face detector), using relative coordinates within the detected face rectangle (EYE_SX is the eye search x position, EYE_SY is the eye search y position, EYE_SW is the eye search width, and EYE_SH is the eye search height):

| Cascade classifier | EYE_SX | EYE_SY | EYE_SW | EYE_SH |
|---|---|---|---|---|
| haarcascade_eye.xml | 0.16 | 0.26 | 0.30 | 0.28 |
| haarcascade_mcs_lefteye.xml | 0.10 | 0.19 | 0.40 | 0.36 |
| haarcascade_lefteye_2splits.xml | 0.12 | 0.17 | 0.37 | 0.36 |

Here is the source code to extract the left eye and right eye regions from a detected face:

int leftX = cvRound(faceImg.cols * EYE_SX);

int topY = cvRound(faceImg.rows * EYE_SY);

int widthX = cvRound(faceImg.cols * EYE_SW);

int heightY = cvRound(faceImg.rows * EYE_SH);

// The right region mirrors the left one horizontally.

int rightX = cvRound(faceImg.cols * (1.0-EYE_SX-EYE_SW));

Mat topLeftOfFace = faceImg(Rect(leftX, topY, widthX, heightY));

Mat topRightOfFace = faceImg(Rect(rightX, topY, widthX, heightY));
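The same calculation can be wrapped in a small helper that takes one row of the table as input. The following is only a minimal sketch: `Region`, `EyeSearchParams`, `EyeRegions`, and `computeEyeRegions()` are hypothetical names introduced for illustration, `Region` is a stand-in for `cv::Rect` so that the sketch compiles without OpenCV, and `std::lround()` is used in place of `cvRound()`.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for cv::Rect (assumption: used only for this sketch).
struct Region {
    int x, y, width, height;
};

// Relative search window for one eye cascade (one row of the table).
struct EyeSearchParams {
    double sx, sy, sw, sh;  // EYE_SX, EYE_SY, EYE_SW, EYE_SH
};

struct EyeRegions {
    Region left;   // search region on the left side of the face image
    Region right;  // horizontally mirrored region on the right side
};

// Convert the relative window into pixel rectangles inside a face image
// of the given size.
EyeRegions computeEyeRegions(int faceWidth, int faceHeight,
                             const EyeSearchParams& p) {
    assert(faceWidth > 0 && faceHeight > 0);
    assert(p.sx >= 0.0 && p.sy >= 0.0);
    assert(p.sx + p.sw <= 1.0 && p.sy + p.sh <= 1.0);

    const int leftX   = static_cast<int>(std::lround(faceWidth * p.sx));
    const int topY    = static_cast<int>(std::lround(faceHeight * p.sy));
    const int widthX  = static_cast<int>(std::lround(faceWidth * p.sw));
    const int heightY = static_cast<int>(std::lround(faceHeight * p.sh));
    const int rightX  = static_cast<int>(
        std::lround(faceWidth * (1.0 - p.sx - p.sw)));

    EyeRegions regions;
    regions.left  = Region{leftX, topY, widthX, heightY};
    regions.right = Region{rightX, topY, widthX, heightY};
    return regions;
}
```

For a 200 x 200 face and the haarcascade_eye.xml row, this gives a left region starting at (32, 52) and a right region starting at (108, 52), both 60 x 56 pixels.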

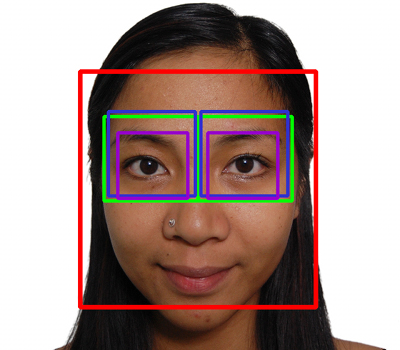

The following photo shows the ideal search regions for the different eye detectors, where the haarcascade_eye.xml and haarcascade_eye_tree_eyeglasses.xml files are best with the small search region, and the haarcascade_mcs_*eye.xml and haarcascade_*eye_2splits.xml files are best with larger search regions. Note that the detected face rectangle is also shown, to give an idea of how large the eye search regions are compared to the detected face rectangle:

The approximate detection properties of the different eye detectors while using the eye search regions are given in the following table:

| Cascade classifier | Reliability* | Speed** | Eyes found | Glasses |
|---|---|---|---|---|
| haarcascade_mcs_lefteye.xml | 80% | 18 msec | Open or closed | no |
| haarcascade_lefteye_2splits.xml | 60% | 7 msec | Open or closed | no |
| haarcascade_eye.xml | 40% | 5 msec | Open only | no |
| haarcascade_eye_tree_eyeglasses.xml | 15% | 10 msec | Open only | yes |

(*) Reliability values show how often both eyes will be detected after LBP frontal face detection, when no eyeglasses are worn and both eyes are open. If the eyes are closed, then the reliability may drop, and if eyeglasses are worn, then both reliability and speed will drop.

(**) Speed values are in milliseconds for images scaled to the size of 320 x 240 pixels on an Intel Core i7 2.2 GHz (averaged across 1,000 photos). Detection is typically much faster when eyes are found than when they are not, because a failed search must scan the entire image, but haarcascade_mcs_lefteye.xml is still much slower than the other eye detectors.

For example, suppose you shrink a photo to 320 x 240 pixels, perform histogram equalization on it, use the LBP frontal face detector to get a face, extract the left eye region and right eye region from the face using the haarcascade_mcs_lefteye.xml values, and then perform histogram equalization on each eye region. If you then use the haarcascade_mcs_lefteye.xml detector on the left eye (which is actually in the top-right of your image) and the haarcascade_mcs_righteye.xml detector on the right eye (the top-left part of your image), each eye detector should work in roughly 90 percent of photos with LBP-detected frontal faces. So if you want both eyes detected, it should work in roughly 80 percent of such photos (0.9 x 0.9 = 0.81, if the two detections are treated as roughly independent).

Note that while it is recommended to shrink the camera image before detecting faces, you should detect eyes at the full camera resolution, because eyes are much smaller than faces, so you need as much resolution as you can get.
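Detecting the face in a shrunken image and the eyes at full resolution means the face rectangle has to be mapped back to the coordinates of the original frame. The following is a minimal sketch of that mapping, assuming the image was shrunk uniformly (same factor for width and height); `Region` is a stand-in for `cv::Rect` and `scaleToFullResolution()` is a hypothetical helper, not a function from OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal stand-in for cv::Rect (assumption: used only for this sketch).
struct Region {
    int x, y, width, height;
};

// Map a rectangle found in a shrunken image back to the full-resolution
// frame, then clamp it so that it stays inside the frame.
Region scaleToFullResolution(const Region& small, int smallWidth,
                             int fullWidth, int fullHeight) {
    assert(smallWidth > 0 && fullWidth > 0 && fullHeight > 0);
    const double scale = static_cast<double>(fullWidth) / smallWidth;

    Region full;
    full.x      = static_cast<int>(std::lround(small.x * scale));
    full.y      = static_cast<int>(std::lround(small.y * scale));
    full.width  = static_cast<int>(std::lround(small.width * scale));
    full.height = static_cast<int>(std::lround(small.height * scale));

    // Rounding can push the rectangle slightly past the frame border.
    full.x      = std::max(0, std::min(full.x, fullWidth - 1));
    full.y      = std::max(0, std::min(full.y, fullHeight - 1));
    full.width  = std::min(full.width, fullWidth - full.x);
    full.height = std::min(full.height, fullHeight - full.y);
    return full;
}
```

The eye search regions can then be cut from the full-resolution face rectangle rather than from the shrunken one.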

For many tasks, it is useful to detect eyes whether they are open or closed, so if speed is not crucial, it is best to search with the mcs_*eye detector first, and if it fails, then search with the eye_2splits detector.

For face recognition, however, a person will appear quite different if their eyes are closed, so it is best to search with the plain haarcascade_eye detector first, and if it fails, then search with the haarcascade_eye_tree_eyeglasses detector.
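The two recommendations above amount to a choice of cascade order per task. As a rough sketch, this choice could be expressed as a lookup that returns the cascade files in the order they should be tried. `EyeTask`, `EyeSide`, and `eyeCascadeOrder()` are hypothetical names, and `EyeSide` refers to the person's own left or right eye; the file names are the ones mentioned in the text.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class EyeTask { OpenOrClosed, OpenOnly };
enum class EyeSide { Left, Right };

// Return the cascade files to try, first choice first.
std::vector<std::string> eyeCascadeOrder(EyeTask task, EyeSide side) {
    if (task == EyeTask::OpenOnly) {
        // Face recognition: closed eyes change the appearance too much,
        // so use the detectors that only find open eyes.
        return {"haarcascade_eye.xml",
                "haarcascade_eye_tree_eyeglasses.xml"};
    }
    // General case: most reliable detector first, faster one as fallback.
    const std::string sideName =
        (side == EyeSide::Left) ? "lefteye" : "righteye";
    return {"haarcascade_mcs_" + sideName + ".xml",
            "haarcascade_" + sideName + "_2splits.xml"};
}
```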

We can use the same detectLargestObject() function we used for face detection to search for eyes, but instead of asking to shrink the images before eye detection, we specify the full eye region width to get better eye detection. It is easy to search for the left eye using one detector, and if it fails, then try another detector (the same for the right eye). The eye detection is done as follows:

CascadeClassifier eyeDetector1("haarcascade_eye.xml");

CascadeClassifier eyeDetector2("haarcascade_eye_tree_eyeglasses.xml");

...

Rect leftEyeRect; // Stores the detected eye.

// Search the left region using the 1st eye detector.

detectLargestObject(topLeftOfFace, eyeDetector1, leftEyeRect,

topLeftOfFace.cols);

// If it failed, search the left region using the 2nd eye

// detector.

if (leftEyeRect.width <= 0)

detectLargestObject(topLeftOfFace, eyeDetector2,

leftEyeRect, topLeftOfFace.cols);

// Get the left eye center if one of the eye detectors worked.

Point leftEye = Point(-1,-1);

if (leftEyeRect.width > 0) {

leftEye.x = leftEyeRect.x + leftEyeRect.width/2 + leftX;

leftEye.y = leftEyeRect.y + leftEyeRect.height/2 + topY;

}

// Do the same for the right eye

...

// Check if both eyes were detected.

if (leftEye.x >= 0 && rightEye.x >= 0) {

...

}
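The try-then-fall-back pattern and the conversion of the eye center into face coordinates are the same for both eyes, so they can be factored out. The following is a minimal sketch with no OpenCV dependency: `Region` and `Pixel` stand in for `cv::Rect` and `cv::Point`, each detector is modelled as a callable that returns a rectangle with `width <= 0` on failure, and `detectWithFallback()` and `eyeCenterInFace()` are hypothetical helpers. In real code, each callable would presumably wrap a call to `detectLargestObject()` on the relevant eye region.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Minimal stand-ins for cv::Rect and cv::Point (assumptions for this sketch).
struct Region { int x, y, width, height; };
struct Pixel  { int x, y; };

// A detector returns the found rectangle in search-region coordinates,
// or a rectangle with width <= 0 if nothing was found.
using EyeDetector = std::function<Region()>;

// Try each detector in order and return the first successful result.
Region detectWithFallback(const std::vector<EyeDetector>& detectors) {
    for (const auto& detect : detectors) {
        const Region found = detect();
        if (found.width > 0)
            return found;
    }
    return Region{0, 0, 0, 0};  // every detector failed
}

// Convert a detected eye rectangle into an eye center in face coordinates.
// regionX and regionY are the offsets of the search region within the face
// (leftX or rightX, and topY). Returns (-1,-1) if the eye was not detected.
Pixel eyeCenterInFace(const Region& eye, int regionX, int regionY) {
    Pixel center{-1, -1};
    if (eye.width > 0) {
        center.x = eye.x + eye.width / 2 + regionX;
        center.y = eye.y + eye.height / 2 + regionY;
    }
    return center;
}
```

Note that the center is only computed when the rectangle has a positive width, which is why a failed detection keeps the (-1,-1) marker that the final both-eyes check relies on.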

With the face and both eyes detected, we'll perform face preprocessing by combining the following steps:

- Geometrical transformation and cropping: This process includes scaling, rotating, and translating the images so that the eyes are aligned, followed by the removal of the forehead, chin, ears, and background from the face image.

- Separate histogram equalization for left and right sides: This process standardizes the brightness and contrast on both the left- and right-hand sides of the face independently.

- Smoothing: This process reduces the image noise using a bilateral filter.

- Elliptical mask: The elliptical mask removes some remaining hair and background from the face image.
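To make the geometrical transformation step more concrete, the rotation angle and scale factor can be derived from the two detected eye centers. The following is a minimal sketch under stated assumptions: `EyeAlignment` and `computeEyeAlignment()` are hypothetical names, the "left" eye is the one on the left side of the image, and `desiredEyeDistance` is an illustrative parameter for how far apart the eyes should be in the output image. With OpenCV, values like these would typically be passed to `getRotationMatrix2D()` and the resulting matrix applied with `warpAffine()`.

```cpp
#include <cassert>
#include <cmath>

struct EyeAlignment {
    double angleDegrees;  // angle of the eye line relative to horizontal
    double scale;         // factor that brings the eyes to the desired distance
    double centerX;       // midpoint between the eyes (rotation center)
    double centerY;
};

// Compute the rotation, scale, and center needed to make the line between
// the eyes horizontal and of a fixed length.
EyeAlignment computeEyeAlignment(double leftX, double leftY,
                                 double rightX, double rightY,
                                 double desiredEyeDistance) {
    const double dx = rightX - leftX;
    const double dy = rightY - leftY;
    const double distance = std::sqrt(dx * dx + dy * dy);
    assert(distance > 0.0 && desiredEyeDistance > 0.0);

    const double pi = std::acos(-1.0);

    EyeAlignment alignment;
    alignment.angleDegrees = std::atan2(dy, dx) * 180.0 / pi;
    alignment.scale = desiredEyeDistance / distance;
    alignment.centerX = (leftX + rightX) * 0.5;
    alignment.centerY = (leftY + rightY) * 0.5;
    return alignment;
}
```

For eyes that are already level, the angle is zero and only scaling and translation remain.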

The following photos show the four face preprocessing steps applied to a detected face. Notice how the final photo has good brightness and contrast on both sides of the face, whereas the original does not: