Having obtained the tracks, we need to arrange them in the data structure that OpenCV's SfM module expects. Unfortunately, the sfm module is not well documented, so we have to work this part out on our own from the source code. We will be invoking the following function from the cv::sfm:: namespace, which is declared in opencv_contrib/modules/sfm/include/opencv2/sfm/reconstruct.hpp:

void reconstruct(InputArrayOfArrays points2d, OutputArray Ps, OutputArray points3d, InputOutputArray K, bool is_projective = false);

The opencv_contrib/modules/sfm/src/simple_pipeline.cpp file has a major hint as to what that function expects as input:

static void
parser_2D_tracks( const std::vector<Mat> &points2d, libmv::Tracks &tracks )
{
  const int nframes = static_cast<int>(points2d.size());
  for (int frame = 0; frame < nframes; ++frame) {
    const int ntracks = points2d[frame].cols;
    for (int track = 0; track < ntracks; ++track) {
      const Vec2d track_pt = points2d[frame].col(track);
      if ( track_pt[0] > 0 && track_pt[1] > 0 )
        tracks.Insert(frame, track, track_pt[0], track_pt[1]);
    }
  }
}

In general, the sfm module uses a reduced version of libmv (https://developer.blender.org/tag/libmv/), which is a well-established SfM package used for 3D reconstruction for cinema production with the Blender 3D (https://www.blender.org/) graphics software.

We can tell that the tracks need to be placed in a vector of individual cv::Mat objects, one per frame, where each holds an aligned list of cv::Vec2d as columns, meaning it has two rows of double. Because the parser skips any point whose coordinates are not both positive, we can also deduce that missing (unmatched) feature points in a track should be marked with a negative coordinate. The following snippet will extract tracks in the desired data structure from the match graph:

const size_t nViews = imagesFilenames.size();
// One 2 x nTracks matrix per view
vector<Mat> tracks(nViews);
for (size_t i = 0; i < nViews; i++) {
    tracks[i].create(2, static_cast<int>(components.size()), CV_64FC1);
    tracks[i].setTo(-1.0); // default is (-1, -1) - no match
}
// Each component is a track; i is the track (column) index
int i = 0;
for (auto c = components.begin(); c != components.end(); ++c, ++i) {
    for (const int v : c->second) {
        const int imageID = imageIDs[g[v].image];
        const size_t featureID = g[v].featureID;
        const Point2f p = keypoints[g[v].image][featureID].pt;
        tracks[imageID].at<double>(0, i) = p.x;
        tracks[imageID].at<double>(1, i) = p.y;
    }
}
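As a quick sanity check on this layout, it can help to count how many views observe each track, since a track seen in fewer than two views cannot be triangulated. The following is a minimal sketch and not part of the sfm module: ViewTracks and countObservations are hypothetical names, and plain std::vector stands in for the 2 x nTracks cv::Mat so that the example compiles without OpenCV. It applies the same validity test as parser_2D_tracks (both coordinates strictly positive).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for one view's 2 x nTracks matrix of doubles:
// x[t], y[t] hold track t's pixel position, or (-1, -1) when unmatched.
struct ViewTracks {
    std::vector<double> x;
    std::vector<double> y;
};

// Count in how many views each track is observed, using the same test
// as parser_2D_tracks: both coordinates must be strictly positive.
std::vector<int> countObservations(const std::vector<ViewTracks>& views) {
    if (views.empty()) return {};
    const std::size_t nTracks = views.front().x.size();
    std::vector<int> counts(nTracks, 0);
    for (const ViewTracks& v : views) {
        // All views must be aligned: column t is the same track everywhere.
        assert(v.x.size() == nTracks && v.y.size() == nTracks);
        for (std::size_t t = 0; t < nTracks; ++t) {
            if (v.x[t] > 0 && v.y[t] > 0) ++counts[t];
        }
    }
    return counts;
}
```

Tracks whose count is below two contribute nothing to the reconstruction, so a large share of such tracks may indicate a problem in the matching stage.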

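We follow up by running the reconstruction function, collecting the sparse 3D point cloud and the color for each 3D point, and afterward visualizing the results (using functions from cv::viz::). Note that the call uses the overload of reconstruct that returns the camera rotations (Rs) and translations (Ts) separately, rather than the projection matrices (Ps) of the signature shown earlier: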

cv::sfm::reconstruct(tracks, Rs, Ts, K, points3d, true);

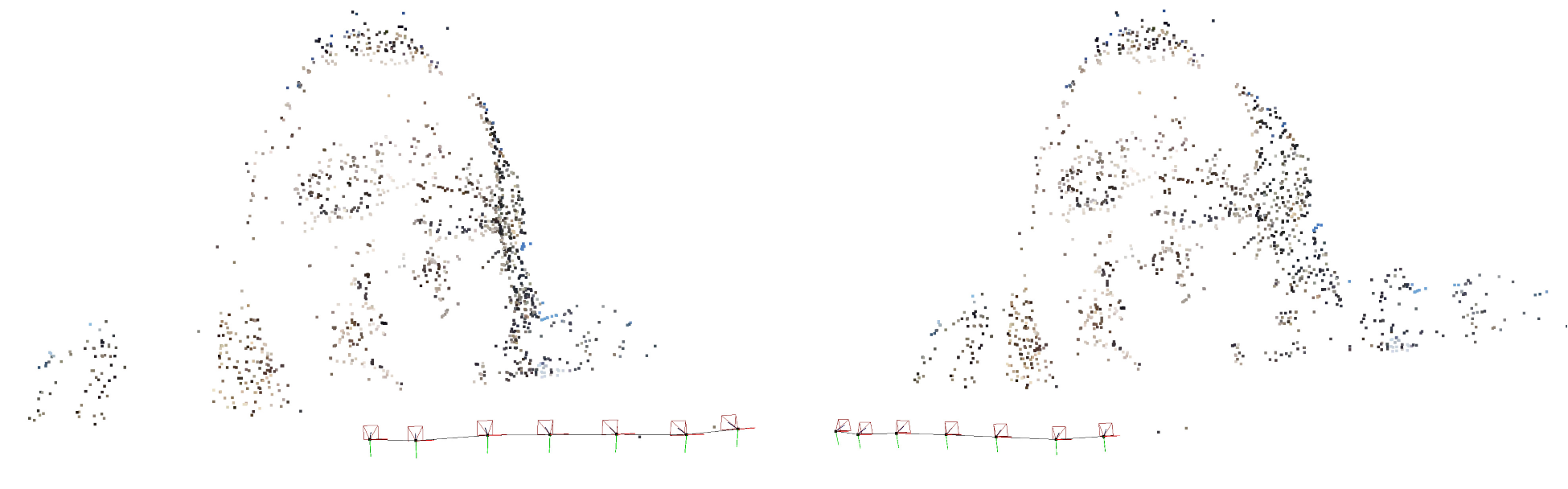

This will produce a sparse reconstruction with a point cloud and camera positions, visualized in the following image:

By re-projecting the 3D points back onto the 2D images, we can validate that the reconstruction is correct.

See the entire code for reconstruction and visualization in the accompanying source repository.
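The re-projection check mentioned above can also be expressed numerically. The following is a minimal sketch, assuming the pinhole convention x ~ K (R X + t) for each camera. This convention is an assumption and should be verified against the poses the sfm module actually returns. The function reprojectionError and the Mat3 and Vec3 aliases are hypothetical names, and std::array is used in place of cv::Mat so that the example compiles without OpenCV.

```cpp
#include <array>
#include <cmath>
#include <limits>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;

// Multiply a 3x3 matrix by a 3-vector.
static Vec3 mul(const Mat3& M, const Vec3& v) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        r[i] = M[i][0] * v[0] + M[i][1] * v[1] + M[i][2] * v[2];
    return r;
}

// Project the 3D point X into a camera with intrinsics K and pose (R, t),
// assuming x ~ K (R X + t), and return the pixel distance to the observed
// 2D feature (u, v). Points with non-positive homogeneous depth yield infinity.
double reprojectionError(const Mat3& K, const Mat3& R, const Vec3& t,
                         const Vec3& X, double u, double v) {
    Vec3 Xc = mul(R, X);                    // rotate into the camera frame
    for (int i = 0; i < 3; ++i) Xc[i] += t[i];
    const Vec3 x = mul(K, Xc);              // homogeneous pixel coordinates
    if (x[2] <= 0.0) return std::numeric_limits<double>::infinity();
    return std::hypot(x[0] / x[2] - u, x[1] / x[2] - v);
}
```

Averaging this error over all valid (non-negative) entries of the tracks gives a single figure for the quality of the reconstruction. Small values, on the order of a pixel or so, suggest that the recovered cameras and points are consistent with the observations.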

Notice that the reconstruction is very sparse; we only see 3D points where features have matched. This does not give a very appealing result when we want to capture the geometry of objects in the scene. A sparse reconstruction is not useful for many applications, such as 3D scanning, so in many cases SfM pipelines do not conclude with it. Next, we will see how to get a dense reconstruction.