A neural network is inspired by the structure of the brain, in which many interconnected neurons form a network. Like a biological neuron, each artificial neuron has multiple inputs and multiple outputs.

The network is organized in layers, and each layer contains a number of neurons that are connected to all of the neurons in the previous layer. A network always has an input layer, which normally consists of the features that describe the input image or data, and an output layer, which normally consists of the result of our classification. The layers in between are called hidden layers. The following diagram shows a basic three-layer neural network in which the input layer contains three neurons, the hidden layer contains four neurons, and the output layer contains two neurons:

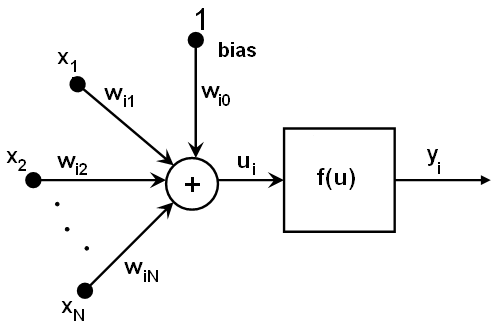
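The layer structure in the diagram can also be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper name `build_connections` and the random starting values are hypothetical and do not come from any particular library. It creates one weight for every connection between consecutive layers and counts them.

```python
import random

def build_connections(layer_sizes, seed=0):
    """Create one weight per connection between consecutive layers.

    Hypothetical helper for illustration: layer_sizes lists the number
    of neurons in each layer, from the input layer to the output layer.
    """
    rng = random.Random(seed)
    layers = []
    for n_prev, n_curr in zip(layer_sizes, layer_sizes[1:]):
        # One row per neuron in this layer, one weight per neuron
        # in the previous layer (every neuron sees every previous output).
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_prev)]
                   for _ in range(n_curr)]
        layers.append(weights)
    return layers

# The network from the diagram: 3 inputs, 4 hidden neurons, 2 outputs.
layers = build_connections([3, 4, 2])
n_weights = sum(len(rows) * len(rows[0]) for rows in layers)
print(n_weights)  # 3*4 + 4*2 = 20 connections
```

Because every neuron is connected to all of the neurons in the previous layer, the number of weights grows with the product of adjacent layer sizes.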

The neuron is the basic element of a neural network and it uses a simple mathematical formula that we can see in the following diagram:

As we can see, for each neuron, i, we multiply each input (x1, x2...), which is the output of a neuron in the previous layer, by its weight (wi1, wi2...), add the products together with a bias value, and use the result as the argument of an activation function, f. The final result is the output of neuron i, that is, f(wi1 · x1 + wi2 · x2 + ... + bias).
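The computation performed by a single neuron can be written as a short Python function. This is a minimal sketch: the names `neuron_output` and `identity` are chosen for this example, and the input values, weights, and bias are arbitrary numbers used only to make the arithmetic easy to follow.

```python
def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through f."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)

def identity(z):
    """A linear activation that returns its argument unchanged."""
    return z

# Two inputs (x1, x2), two weights (wi1, wi2), and a bias.
y = neuron_output([1.0, 2.0], [0.5, -0.5], 0.25, identity)
print(y)  # 0.5*1.0 + (-0.5)*2.0 + 0.25 = -0.25
```

Passing the activation as a parameter keeps the neuron itself independent of the choice of f.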

The most common activation functions (f) in classical neural networks are the sigmoid function and linear functions. The sigmoid function, f(x) = 1 / (1 + e^(-x)), is used most often; it squashes any real input into the range 0 to 1, and it looks as follows:
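For reference, both activation functions are easy to express in Python. The sketch below uses only the standard `math` module; the function names and the `slope` parameter are assumptions made for this example. The sigmoid is written in two branches so that very negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(x):
    """Sigmoid activation: 1 / (1 + e^(-x)), always between 0 and 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # Equivalent form for negative x that avoids a huge exp(-x).
    e = math.exp(x)
    return e / (1.0 + e)

def linear(x, slope=1.0):
    """Linear activation: the output is proportional to the input."""
    return slope * x

for x in (-4.0, 0.0, 4.0):
    print(x, round(sigmoid(x), 3))
# -4.0 0.018
# 0.0 0.5
# 4.0 0.982
```

The printed values trace the S shape of the curve: close to 0 for large negative inputs, exactly 0.5 at zero, and close to 1 for large positive inputs.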

But how can we train a neural network with this formula and these connections? How do we classify input data? The learning algorithm of a neural network is called supervised if we know the desired output for each input pattern that is given to the network's input layer during learning. Initially, we set all the weights to random numbers and send the input features through the network, checking the output result. If the output is wrong, we have to adjust all the weights of the network to get the correct output. This algorithm is called backpropagation, because the output error is propagated backward through the layers to work out how much each weight should change. If you want to read more about how a neural network learns, check out http://neuralnetworksanddeeplearning.com/chap2.html and https://youtu.be/IHZwWFHWa-w.
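The learning steps described above can be sketched as a small, self-contained Python program. It is a simplified illustration, not a production implementation, and several details are assumptions that the text does not specify: the training data (the logical OR function), a single hidden layer of three sigmoid neurons, a squared-error loss, the learning rate `lr`, and the number of epochs.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Send the input features through the hidden layer and the output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output

def train(samples, n_hidden=3, epochs=2000, lr=0.5, seed=0):
    """Train with backpropagation; returns the parameters and per-epoch loss."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Step 1: start from random weights (biases start at zero here).
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b_hidden = [0.0] * n_hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            # Step 2: forward pass, then compare with the desired output.
            hidden, y = forward(x, w_hidden, b_hidden, w_out, b_out)
            error = y - target
            total += 0.5 * error ** 2
            # Step 3: propagate the error backward.
            # The derivative of the sigmoid at its output y is y * (1 - y).
            delta_out = error * y * (1 - y)
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]
            # Step 4: adjust every weight and bias against its gradient.
            for j in range(n_hidden):
                w_out[j] -= lr * delta_out * hidden[j]
                b_hidden[j] -= lr * delta_hidden[j]
                for i in range(n_in):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            b_out -= lr * delta_out
        losses.append(total)
    return (w_hidden, b_hidden, w_out, b_out), losses

# Assumed toy data set: the logical OR of two binary inputs.
OR_SAMPLES = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
              ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

params, losses = train(OR_SAMPLES)
print("first epoch loss:", losses[0])
print("last epoch loss:", losses[-1])
```

The loss recorded for each epoch should shrink as the weights are adjusted, which is the behavior the text describes: wrong outputs lead to weight corrections until the network produces the desired result.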

Now that we have had a brief introduction to what a neural network (NN) is and to its internal architecture, we are going to explore the differences between NNs and deep learning.