Gunnar Farneback proposed this optical flow algorithm, which is used for dense tracking. Dense tracking is used extensively in robotics, augmented reality, and 3D mapping. You can check out the original paper here: http://www.diva-portal.org/smash/get/diva2:273847/FULLTEXT01.pdf. The Lucas-Kanade method is a sparse technique, which means that we only need to process a selected subset of the pixels in the image. The Farneback algorithm, on the other hand, is a dense technique that requires us to process every pixel in the given image. So there is a trade-off here. Dense techniques give a motion estimate at every pixel, but they are slower. Sparse techniques are faster, but they estimate motion only at the selected points. For real-time applications, people tend to prefer sparse techniques. For applications where processing time and complexity are not a constraint, people tend to prefer dense techniques to extract finer details.

In his paper, Farneback describes a method for dense optical-flow estimation between two frames, based on polynomial expansion. Our goal is to estimate the motion between these two frames, which is basically a three-step process. In the first step, each neighborhood in both frames is approximated by a polynomial. In this case, we are only interested in quadratic polynomials. The next step is to construct a new signal by applying a global displacement: now that each neighborhood is approximated by a polynomial, we need to see what happens to this polynomial under an ideal translation. The last step is to compute the displacement by equating the coefficients of the original and the translated quadratic polynomials.
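The coefficient matching in the last step can be written out explicitly. The derivation below is a sketch of the ideal case only, where a single quadratic polynomial is translated by a displacement. The symbols $A$, $\mathbf{b}$, $c$, and $\mathbf{d}$ follow the notation of the paper rather than anything defined in this text, and $A_1$ is assumed to be symmetric and invertible.

```latex
\begin{aligned}
f_1(\mathbf{x}) &= \mathbf{x}^T A_1 \mathbf{x} + \mathbf{b}_1^T \mathbf{x} + c_1 \\
f_2(\mathbf{x}) &= f_1(\mathbf{x} - \mathbf{d})
   = \mathbf{x}^T A_1 \mathbf{x}
   + (\mathbf{b}_1 - 2 A_1 \mathbf{d})^T \mathbf{x}
   + \mathbf{d}^T A_1 \mathbf{d} - \mathbf{b}_1^T \mathbf{d} + c_1 \\
A_2 &= A_1, \qquad
\mathbf{b}_2 = \mathbf{b}_1 - 2 A_1 \mathbf{d}, \qquad
c_2 = \mathbf{d}^T A_1 \mathbf{d} - \mathbf{b}_1^T \mathbf{d} + c_1 \\
\mathbf{d} &= -\tfrac{1}{2} A_1^{-1} (\mathbf{b}_2 - \mathbf{b}_1)
\end{aligned}
```

Only the linear coefficients are needed to recover the displacement, which is why the method can work from local polynomial fits alone.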

Now, how is this feasible? If you think about it, we are assuming that an entire signal is a single polynomial and that a global translation relates the two signals. This is not a realistic scenario! So, what are we looking for? Well, our goal is to find out whether the errors introduced by these assumptions are small enough that we can still build a useful algorithm to track the features.
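To get a feel for how large these errors can be, here is a minimal one-dimensional sketch. It is not the author's implementation or OpenCV's: the names `Quadratic`, `fitQuadratic`, `estimateDisplacement`, and `estimateShift` are hypothetical, and the quadratic is fitted through just three samples instead of a weighted neighborhood. It recovers the shift from the linear coefficients, using the 1D relation d = (b1 - b2) / (2a).

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Coefficients of a 1D quadratic a*x^2 + b*x + c (hypothetical helper type).
struct Quadratic { double a, b, c; };

// Fit a quadratic through the samples f(-1), f(0), f(1).
// The fit is exact only when f itself is a quadratic.
Quadratic fitQuadratic(const std::function<double(double)>& f)
{
    const double fm = f(-1.0), f0 = f(0.0), fp = f(1.0);
    return { 0.5 * (fp + fm) - f0, 0.5 * (fp - fm), f0 };
}

// Equate the linear coefficients: b2 = b1 - 2*a*d, so d = (b1 - b2) / (2*a).
double estimateDisplacement(const Quadratic& p1, const Quadratic& p2)
{
    // A neighborhood with no curvature carries no usable motion information.
    assert(std::fabs(p1.a) > 1e-12);
    return (p1.b - p2.b) / (2.0 * p1.a);
}

// Shift f by trueShift, then try to recover the shift from samples near x = 0.
double estimateShift(const std::function<double(double)>& f, double trueShift)
{
    auto shifted = [&](double x) { return f(x - trueShift); };
    return estimateDisplacement(fitQuadratic(f), fitQuadratic(shifted));
}
```

If `f` is a true quadratic, `estimateShift` returns the shift up to floating-point error. For a signal such as `std::cos`, which is only approximately quadratic around zero, the estimate is close to the true shift but not exact. That gap is the kind of error the algorithm has to tolerate.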

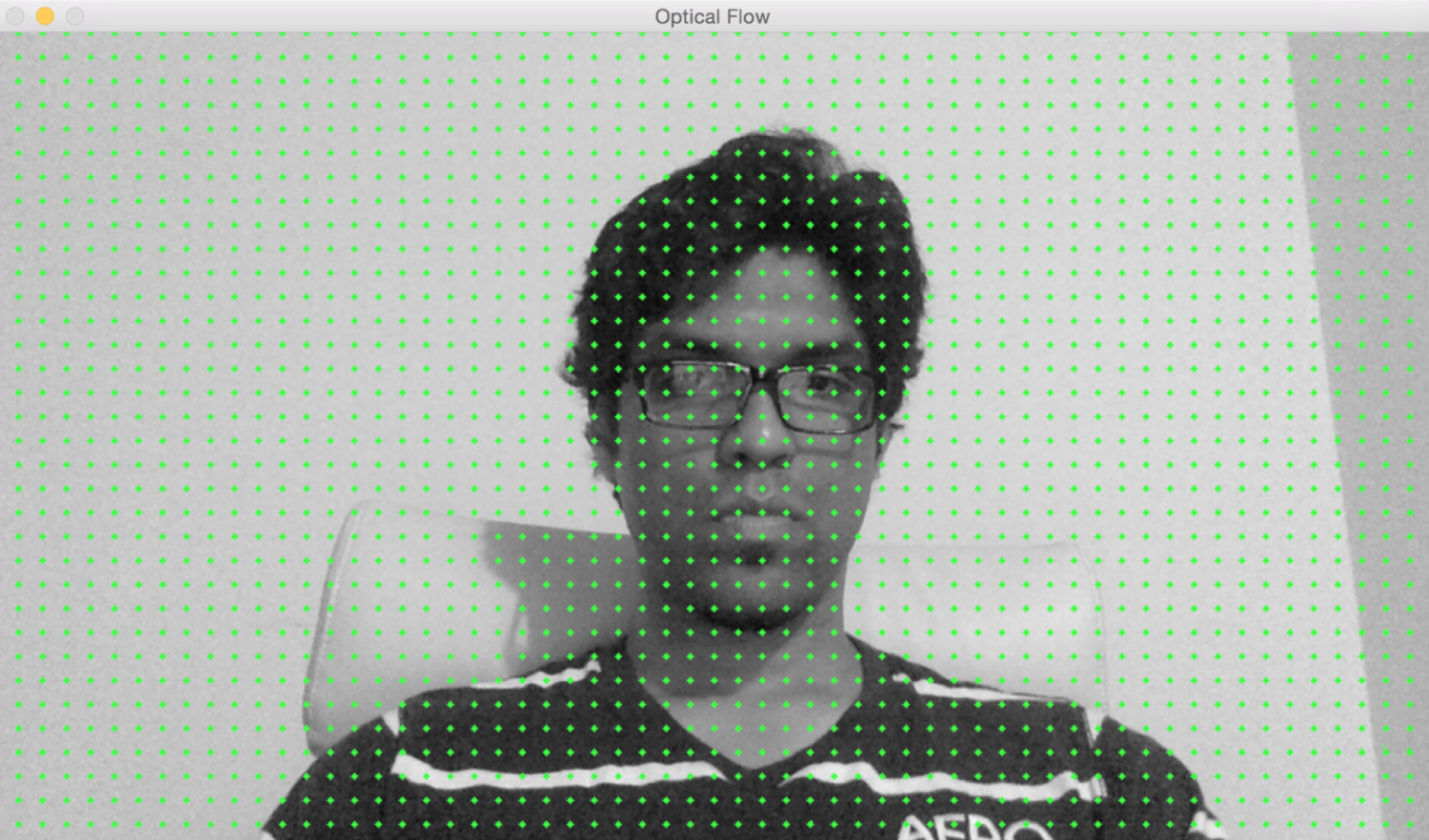

Let's look at a static image:

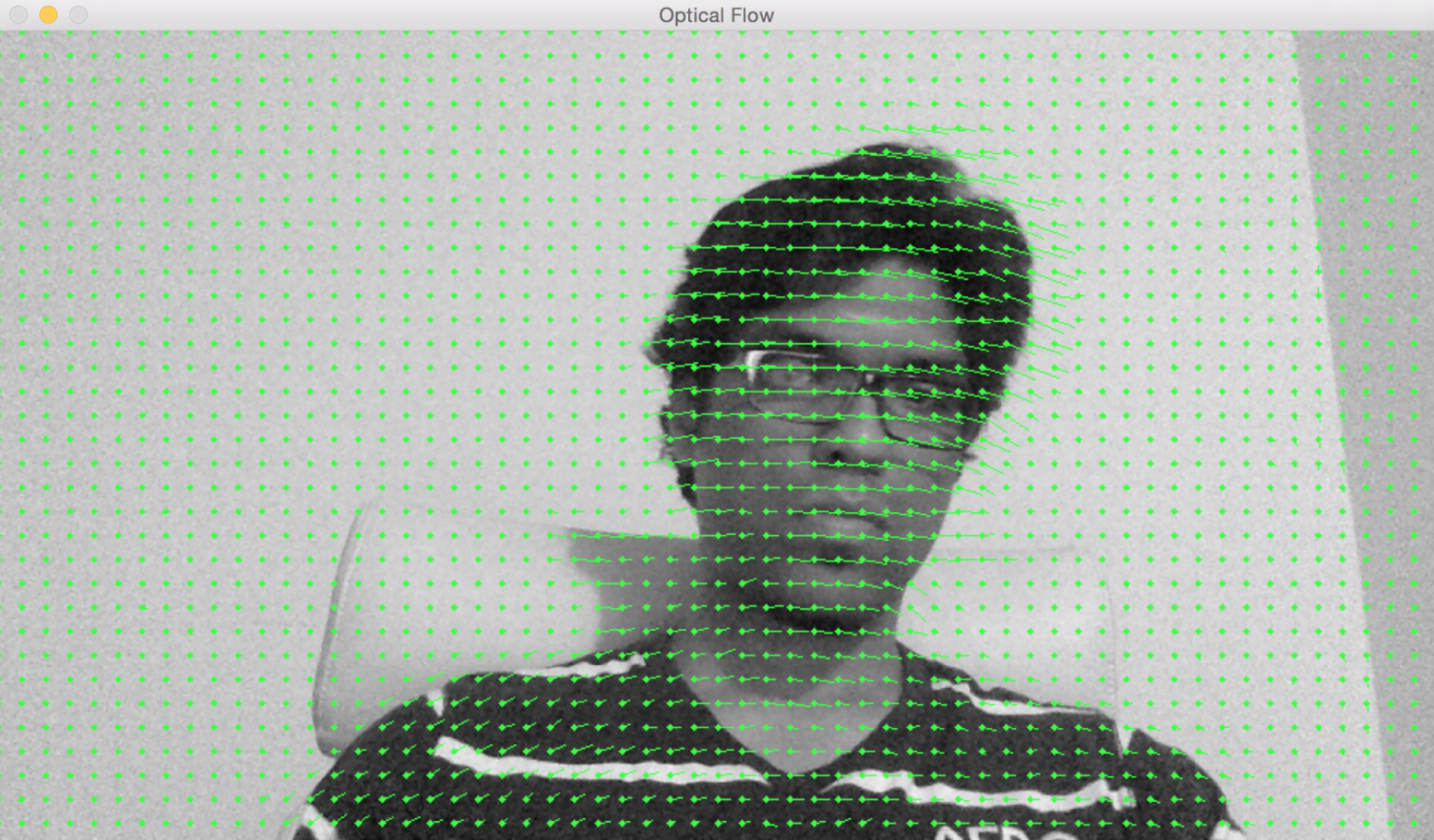

If I move sideways, you can see that the motion vectors point in a horizontal direction. They are simply tracking the movement of my head:

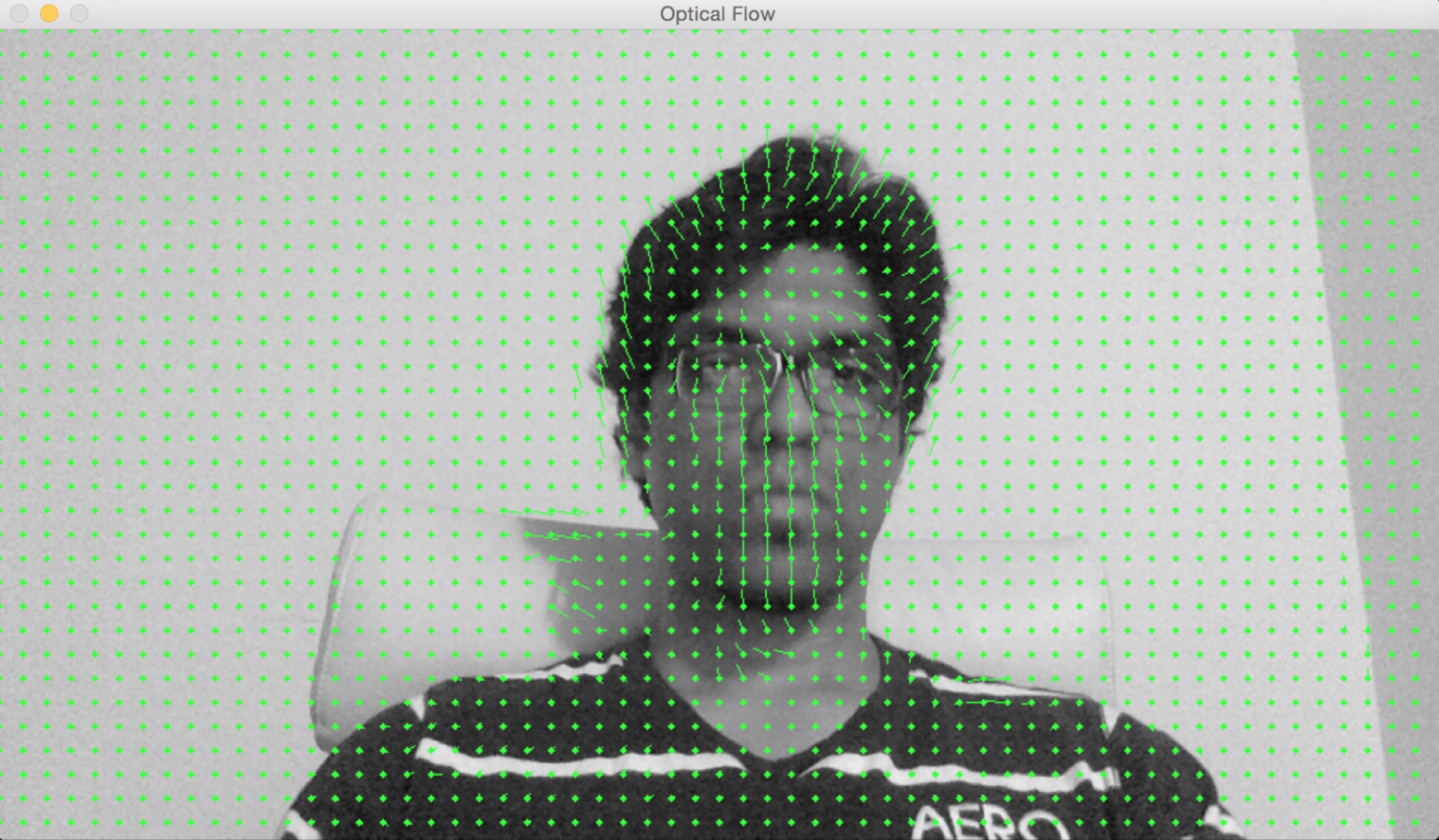

If I move away from the webcam, you can see that the motion vectors correspond to movement in a direction perpendicular to the image plane.
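In the 2D flow map, motion along the camera axis shows up as a radial pattern, because an object that moves away appears to shrink toward its center. The sketch below builds such a field synthetically, assuming an ideal uniform shrink around a point. The names `Vec2`, `makeZoomFlow`, and `pointsTowardCenter` are hypothetical and are not part of OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A 2D flow vector (hypothetical stand-in for OpenCV's Point2f).
struct Vec2 { float x, y; };

// Synthetic flow for an object that shrinks by `scale` (< 1) around (cx, cy):
// a pixel at p moves to c + scale * (p - c), so flow(p) = (scale - 1) * (p - c).
std::vector<Vec2> makeZoomFlow(int rows, int cols, float cx, float cy, float scale)
{
    assert(rows > 0 && cols > 0);
    std::vector<Vec2> flow(static_cast<std::size_t>(rows) * cols);
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x)
            flow[static_cast<std::size_t>(y) * cols + x] =
                { (scale - 1.0f) * (x - cx), (scale - 1.0f) * (y - cy) };
    return flow;
}

// True if the flow vector at (x, y) points toward the center (cx, cy).
bool pointsTowardCenter(const std::vector<Vec2>& flow, int cols,
                        int x, int y, float cx, float cy)
{
    const Vec2 v = flow[static_cast<std::size_t>(y) * cols + x];
    // A negative dot product with (p - c) means the vector points inward.
    return v.x * (x - cx) + v.y * (y - cy) < 0.0f;
}
```

With `scale` below 1, every vector away from the center points inward, and the vector at the center itself is zero. A `scale` above 1, which corresponds to moving toward the camera, flips the pattern outward.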

Here is the code to do optical-flow-based tracking using the Farneback algorithm:

    int main(int, char** argv)
    {
        // Variable declaration and initialization

        // Iterate until the user presses the Esc key
        while(true)
        {
            // Capture the current frame
            cap >> frame;

            if(frame.empty())
                break;

            // Resize the frame
            resize(frame, frame, Size(), scalingFactor, scalingFactor, INTER_AREA);

            // Convert to grayscale
            cvtColor(frame, curGray, COLOR_BGR2GRAY);

            // Check if the image is valid
            if(prevGray.data)
            {
                // Initialize parameters for the optical flow algorithm
                float pyrScale = 0.5;
                int numLevels = 3;
                int windowSize = 15;
                int numIterations = 3;
                int neighborhoodSize = 5;
                float stdDeviation = 1.2;

                // Calculate optical flow map using Farneback algorithm
                calcOpticalFlowFarneback(prevGray, curGray, flowImage, pyrScale, numLevels, windowSize, numIterations, neighborhoodSize, stdDeviation, OPTFLOW_USE_INITIAL_FLOW);

As we can see, we use the Farneback algorithm to compute the optical flow vectors. The input parameters to calcOpticalFlowFarneback have a strong effect on the quality of tracking. You can find details about those parameters at http://docs.opencv.org/3.0-beta/modules/video/doc/motion_analysis_and_object_tracking.html. Let's go ahead and draw those vectors on the output image:

                // Convert to 3-channel BGR
                cvtColor(prevGray, flowImageGray, COLOR_GRAY2BGR);

                // Draw the optical flow map
                drawOpticalFlow(flowImage, flowImageGray);

                // Display the output image
                imshow(windowName, flowImageGray);
            }

            // Break out of the loop if the user presses the Esc key
            ch = waitKey(10);
            if(ch == 27)
                break;

            // Swap previous image with the current image
            std::swap(prevGray, curGray);
        }

        return 0;
    }
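The pyrScale and numLevels values passed to calcOpticalFlowFarneback control an image pyramid. As a rough illustration of what those values imply, the sketch below lists the layer sizes. It assumes, as the OpenCV documentation describes, that numLevels counts the original image and that each layer is pyrScale times the size of the previous one. The function name `pyramidSizes` is hypothetical, and OpenCV's internal rounding and minimum-size cutoff may differ.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Returns the (width, height) of each pyramid layer, finest layer first.
std::vector<std::pair<int, int>> pyramidSizes(int width, int height,
                                              double pyrScale, int numLevels)
{
    assert(pyrScale > 0.0 && pyrScale < 1.0 && numLevels >= 1);
    std::vector<std::pair<int, int>> sizes;
    double scale = 1.0;
    for (int level = 0; level < numLevels; ++level)
    {
        // Round to the nearest whole pixel at this layer's scale.
        sizes.emplace_back(static_cast<int>(std::lround(width * scale)),
                           static_cast<int>(std::lround(height * scale)));
        scale *= pyrScale;
    }
    return sizes;
}
```

For a 640x480 frame with pyrScale = 0.5 and numLevels = 3, this gives 640x480, 320x240, and 160x120. A motion of 8 pixels in the full frame is only 2 pixels at the coarsest layer, which is what lets a fixed-size window capture larger motions.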

We used a function called drawOpticalFlow to draw those optical flow vectors. These vectors indicate the direction of motion. Let's look at the function to see how we draw those vectors:

    // Function to draw the optical flow map
    void drawOpticalFlow(const Mat& flowImage, Mat& flowImageGray)
    {
        int stepSize = 16;
        Scalar color = Scalar(0, 255, 0);

        // Draw the uniform grid of points on the input image along with the motion vectors
        for(int y = 0; y < flowImageGray.rows; y += stepSize)
        {
            for(int x = 0; x < flowImageGray.cols; x += stepSize)
            {
                // Circles to indicate the uniform grid of points
                int radius = 2;
                int thickness = -1;
                circle(flowImageGray, Point(x,y), radius, color, thickness);

                // Lines to indicate the motion vectors
                Point2f pt = flowImage.at<Point2f>(y, x);
                line(flowImageGray, Point(x,y), Point(cvRound(x+pt.x), cvRound(y+pt.y)), color);
            }
        }
    }
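Note that drawOpticalFlow only visualizes a subsample of the dense field: one vector every stepSize pixels. The following sketch reproduces just that sampling logic without OpenCV, so that it can be checked in isolation. `FlowVec`, `Segment`, and `sampleFlowGrid` are hypothetical names, the flow field is assumed to be stored row by row in a `std::vector`, and `std::lround` stands in for `cvRound` (the two may differ on exact .5 ties).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct FlowVec { float dx, dy; };        // hypothetical stand-in for Point2f
struct Segment { int x0, y0, x1, y1; };  // line from a grid point to its displaced position

// Sample a dense, row-major flow field on a uniform grid and return one
// line segment per grid point, mirroring the loop in drawOpticalFlow.
std::vector<Segment> sampleFlowGrid(const std::vector<FlowVec>& flow,
                                    int rows, int cols, int stepSize)
{
    assert(stepSize > 0 && rows > 0 && cols > 0);
    assert(flow.size() == static_cast<std::size_t>(rows) * cols);
    std::vector<Segment> segments;
    for (int y = 0; y < rows; y += stepSize)
    {
        for (int x = 0; x < cols; x += stepSize)
        {
            const FlowVec& v = flow[static_cast<std::size_t>(y) * cols + x];
            segments.push_back({ x, y,
                                 static_cast<int>(std::lround(x + v.dx)),
                                 static_cast<int>(std::lround(y + v.dy)) });
        }
    }
    return segments;
}
```

For a 640x480 frame and a stepSize of 16, only 40 x 30 = 1,200 of the 307,200 flow vectors are drawn. A smaller stepSize shows more of the field at the cost of a more cluttered image.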