Eating is the only obligatory energy input in our lives. Some people’s demand for food barely goes above the essential minimum, made up of their BMR and the small amount of energy needed for maintaining personal hygiene: housebound elderly people and meditating Indian sadhus are excellent examples. The rest of us prefer to go well beyond just covering basal metabolic and indispensable activity demands. As soon as people move beyond subsistence living (when they eat just enough to carry out necessary tasks but their food supply has no significant surplus, so they can find themselves repeatedly undernourished), they diversify and transform their diets in ways that follow some remarkably consistent trends. I will describe these transformations, as well as some of their consequences, in the first section of this brief survey of energy in everyday life.

The second section will look at energy in our homes: how we keep warm (and cool) by burning fuels, and how we use electricity to extend the day, energize household appliances, and power the still-expanding selection of electronic devices whose uses range from heating food to storing and reproducing music. Globally, household energy use is claiming a growing share of overall energy consumption, and hence it is most welcome that almost all these diverse conversions have steadily improving efficiencies. I will detail several of these notable achievements, and then end the section with a brief look at the electricity demand of new electronic devices.

Thirdly, I will consider the energy needs of modern modes of transport. Increasing mobility is one of the most obvious attributes of modern civilization, and few factors correlate better with the overall standard of living than car ownership. In affluent countries, car ownership has long ceased to be a privilege, and many low-income countries are now acquiring cars at much faster rates than early adopters did at a similar stage of their economic development. The energy use of passenger cars thus deserves special attention – but the transformation of flying from an uncommon experience to everyday reality has been an even more revolutionary development. Flying has broadened personal horizons far beyond national confines: almost every airport able to receive large commercial airliners can now be reached from any other such airport in less than twenty-four hours of flying time, many directly, others with changes of aircraft (the door-to-door time may be considerably longer, due to travelling time to and from the airport and infrequent connections). Remarkably, intercontinental travel, at close to the speed of sound, is an activity whose energy costs compare very favorably with many modes of contemporary driving.

The next section will address everyday energy encounters and realities. Many experiences exemplify large and incessant flows of energy, from the spectacular to the mundane, such as watching a television broadcast of a rocket launch from Cape Canaveral, seeing multi-lane traffic streaming down urban freeways, or walking past brightly-lit houses. On the other hand, few people think about energy flows when buying a plastic hamper, discarding a piece of aluminum foil, or installing a new staircase. But the objects around us do not only have mass, distinct shapes, function, or sentimental value: their production required fuel and electricity conversions, and each of them thus embodies a certain amount of energy. If you are a careful shopper you will know many of their prices: I will introduce you to some of their energy costs.

Finally, there is yet another hidden, or at least largely ignored, reality behind everyday energy use: it is increasingly probable that whenever you heat a house, start a car, or fly on vacation, you will rely on fuels that came not only from another country, but from another continent. Similarly, when you flip a light switch, play a Mozart concerto, or send an email it is increasingly likely the electrons performing those tasks originated in another country, or that your electricity was generated from imported fuels. We do not have to import toasters or toys from Asia, but everyday life for most of the world’s people could not go on without intricate, and increasing, international dependence on traded energies.

Food intakes: constants and transitions

The fundamentals of human energetics are constrained by the necessities of heterotrophic metabolism: we have to cover our BMR just to be alive, we need additional food energy for growth and tissue repair, and we cannot lead healthy lives without either labor-related or recreational activities which, depending on duration and intensity, may require either marginal additions or substantial increments to our normal diet. Nearly everything else has changed, not only in comparison with traditional, pre-industrial, and largely rural settings, but also with early generations of the modern, industrialized, and urban world. Dietary transitions have profoundly changed the composition of average diets, mechanization and the use of agricultural chemicals have intensified food production, and socio-economic changes and food processing aimed at mass markets have introduced new eating habits.

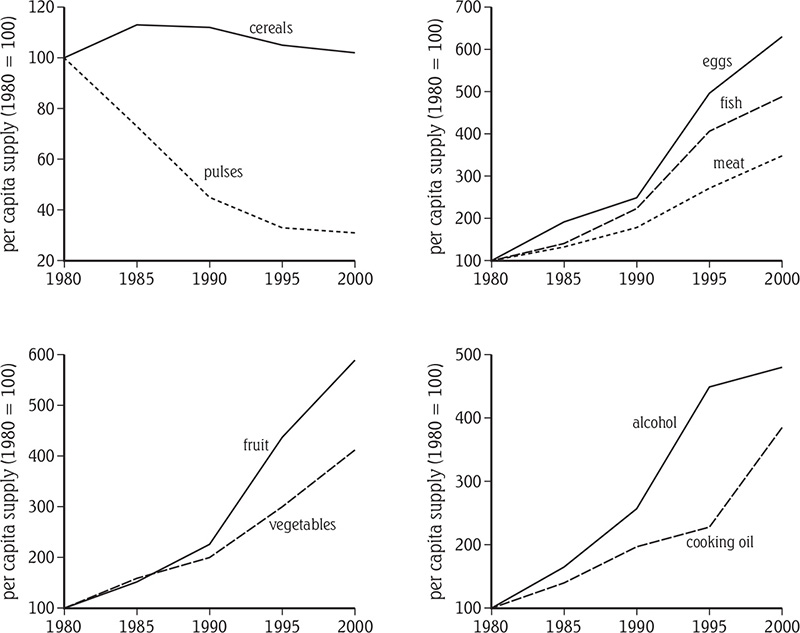

Dietary transitions happen in all populations, as they become modern, industrial, and post-industrial urban societies (Figure 24). These shifts share common features; national food balance sheets (reflecting food availability at retail level) indicate how far they have gone. Generally, as incomes rise so does the average per caput availability of food. The daily average is less than 2,000 kcal in the world’s most malnourished populations, around 2,500 kcal in societies with no, or only a tiny, food safety margin, and well above 3,000 kcal in Europe, North America, and Australia. Remarkably, Europe’s top rates, more than 3,300 kcal a day, are found not only in such rich countries as Germany, France, Denmark, and Belgium but also in Greece. The only notable departure from this high average is Japan, with about 2,700 kcal/day, now more than ten percent below China’s mean!

Finding out how much food actually is consumed is a challenging task, and neither dietary recalls nor household expenditure surveys yield accurate results. To test the reliability of the first method, try to list every item (and the approximate amount) that you have eaten during the past three days and then convert these quantities into fairly accurate energy values! Unless itemized in great detail, a family’s food expenditures tell us nothing about the actual composition (and hence overall energy content) of the purchased food, the level of kitchen waste, or how the food is shared among family members.

The best available evidence, from a variety of food consumption surveys, shows that in affluent countries about 2,000 kcal are actually eaten per day per caput, with the average as low as 1,700 kcal/day for adult females and about 2,500 kcal/day for adult men. If these figures are correct, these countries waste 1,000–1,600 kcal per caput, or as much as a third to two-fifths of their available supply of food energy every day – and several national studies of food waste have confirmed this astounding level of loss. But some reported intakes underestimate real consumption: the extraordinarily high, and rising, obesity rates (particularly in North America, where about a third of people are obese and another third overweight) are a result of insufficient physical activity and excessive food consumption. The second key feature of dietary transitions is a major shift in both relative and absolute contributions of basic macronutrients.
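The waste estimate is simply available supply minus actual intake. A minimal sketch of that arithmetic follows. It assumes supplies of 3,000 and 3,600 kcal/day, the values that bracket the 1,000–1,600 kcal range quoted above, and the 2,000 kcal/day intake from the surveys.

```python
# Food-waste arithmetic using the per caput figures quoted in the text.
# "Available" is the food-balance-sheet supply; "eaten" is the survey estimate.


def waste(available_kcal: float, eaten_kcal: float) -> tuple[float, float]:
    """Return (daily loss in kcal, loss as a fraction of the available supply)."""
    lost = available_kcal - eaten_kcal
    return lost, lost / available_kcal


for supply in (3000, 3600):  # assumed supplies bracketing the quoted loss range
    lost, share = waste(supply, 2000)
    print(f"supply {supply} kcal/day: {lost:.0f} kcal wasted ({share:.0%})")
```

At the upper end the loss works out to about 44 percent, slightly more than two-fifths of the supply.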

Figure 24 China’s dietary transition, 1980–2000 (plotted from data in various editions of China Statistical Yearbook)

DIETARY TRANSITIONS

The most far-reaching dietary change has been the universal retreat of carbohydrate staples such as cereal grains (including rice, wheat, corn, and millet) and tubers (including white and sweet potatoes, and cassava). In affluent countries, they now supply just 20–30 percent of the average per caput energy intake, a third to a half of the traditional level. In Europe, this trend is shown by the declining consumption of bread, the continent’s ancient staple: for example, in France, daily per caput intake fell from 600 g a day in 1880 to just 130 g a day by 2015, a nearly eighty percent drop. In Asia, rice consumption in Japan more than halved in the two post-World War II generations (to less than 60 kg annually per caput by 2000), making it an optional food item rather than a staple. A similarly rapid decline of rice intake has occurred in Taiwan, South Korea, and, since the mid-1980s, China. This quantitative decline has been accompanied by a qualitative shift in cereal consumption, from whole grains to highly milled products (white flour). Post-1950 consumption of tubers also fell in all affluent countries, often by 50–70 percent compared to pre-World War II levels.

The retreat of starchy staples has been accompanied by a pronounced decline in the eating of high-protein leguminous grains (beans, peas, chickpeas, lentils, and soybeans). These were a part of traditional diets because of their unusually high protein content (twenty to twenty-five percent for most and about forty percent for soybeans), and every traditional society consumed them in combination with starchy staples (containing mostly just two to ten percent protein) to obtain essential dietary amino acids. As animal proteins became more affordable, traditional legume intakes tumbled to just a few kilograms per caput annually in North America and Japan. Among the more populous countries Brazil, at more than 15 kg a year (mostly black beans, feijão preto), has the highest per caput consumption of dietary legumes. Yet another key shift in carbohydrate consumption is the rising intake of refined sugar (sucrose), which was almost unknown in traditional societies, where sweetness came from fruits and honey. In some Western countries, the intake of added sugar exceeds 60 kg/caput annually (or up to twenty percent of all food energy), mainly because of excessively sweet carbonated beverages, confectionery and baked products, and ice cream.
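The link between 60 kg of sugar a year and "up to twenty percent of all food energy" can be checked with a short calculation. This sketch assumes 4 kcal per gram (the standard Atwater factor for carbohydrate) and a daily supply of 3,300 kcal, Europe's top rate cited earlier; neither value is given in this paragraph.

```python
# Share of daily food energy supplied by added sugar.
KCAL_PER_G_SUGAR = 4.0  # assumption: the standard Atwater factor for carbohydrate


def sugar_share(kg_per_year: float, daily_supply_kcal: float) -> float:
    """Fraction of the daily per caput food supply provided by added sugar."""
    grams_per_day = kg_per_year * 1000 / 365
    return grams_per_day * KCAL_PER_G_SUGAR / daily_supply_kcal


print(f"{sugar_share(60, 3300):.1%}")  # prints 19.9%
```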

The energy gap created by falling intakes of starchy foods has largely been filled by higher consumption of lipids and animal protein. Modern diets contain many more plant oils – polyunsaturated (peanut, rapeseed, and corn), monounsaturated (olive), and saturated (coconut and palm) varieties – than did traditional intakes. Meat, animal fats, fish, eggs, and dairy products provided no more than ten percent of food energy in many traditional societies, but they now account for about thirty percent in affluent countries. The eating of meat has changed from an occasional treat to everyday fare, adding up annually to as much as 120 kg/caput (bone-in weight) in the U.S. and nearly 100 kg/caput in Spain. High-protein meat and dairy diets (with as much as sixty percent of all protein coming from animal foods) have resulted in substantial increases in average height and weight, and reoriented the rich world’s agricultures from food crop to animal feed crop production.

Dietary transformations have changed some traditional food habits radically, and the Mediterranean diet perhaps best illustrates this. For decades, this diet has been extolled as the epitome of healthy eating with great cardiovascular benefits, and the main reason for the relatively high longevities in the region. But during the two post-World War II generations, there was a gradual change to increased intake of meat, fish, butter, and cheese, and decreased consumption of bread, fruit, potatoes, and olive oil. For example, olive oil now provides less than half of all lipids in Italy and Spain, and Spaniards now eat more meat than the Germans or French. The true Mediterranean diet now survives only among elderly people in rural areas.

Household energies: heat, light, motion, electronics

A modern house is a structure that provides shelter, but also contains a growing array of energy-conversion devices that increase the comfort, ease the daily chores, and provide information and entertainment for its inhabitants. In colder climates, heating usually accounts for most household energy; after World War II both Europe and North America saw large-scale shifts to more convenient, and more efficient, forms of heating. Well-designed solid fuel (coal, wood, or multi-fuel) stoves have efficiencies in excess of thirty percent but, much like their wasteful traditional predecessors, they still require the laborious activities of bringing the fuel, preparing the kindling, starting the fire, tending it, and disposing of the ashes. Heating with fuel oil is thus a big advance in convenience (the fuel is pumped from a delivery truck into a storage tank and flows as needed into a burner) but it has been largely supplanted by natural gas.

For decades, affordability or aesthetics guided house design; energy consumption only became an important factor after OPEC’s first round of price increases in 1973–1974. Cumulatively impressive savings in energy consumption can come from passive solar design (orienting large windows toward the south to let in the low winter sun), superinsulating walls and ceilings, and installing at least double-glazed, and in cold climates triple-glazed, windows. Fiberglass batting has an insulating value about eleven times higher than the equivalent air space, and more than three times higher than a brick. Consequently, the walls of a North American house framed with 2” x 6” wooden studs (2” x 4” are standard), filled with pink fiberglass, covered on the inside with gypsum sheets (drywall) and on the outside by wooden sheathing and stucco will have an insulation value about four times higher than a sturdier-looking, 10 cm thick, brick and stucco European wall. A triple-glazed window with a low-emittance coating (which reflects infrared radiation back into the house) has an insulating value nearly four times as high as a single pane of glass. In hot climates, where dark roofs may get up to 50°C warmer than the air, a highly reflective roof (painted white or made of light-colored materials), which will be just 10°C warmer, is the best passive way to reduce the electricity needed for air conditioning – by as much as fifty percent. Creating a better microclimate, for example by planting trees around a house (and thus creating evapotranspirative cooling), is another effective way to moderate summer energy needs.

MODERN INDOOR HEATING AND COOLING

In North America, domestic furnaces heat air, which is then forced through metal ducts by an electric motor-powered fan and rises from floor registers (usually two to four per room). The best natural gas-fired furnaces are now about ninety-seven percent efficient; their exhaust is cool enough to be vented through a plastic pipe in an outside wall, and hence houses equipped with them do not need chimneys. In Europe hot-water systems (using fuel oil or natural gas to heat water, which circulates through radiators) predominate.

Many Americans insist on setting their winter thermostats to about 25°C, a level that would trigger their air conditioning in summer; in most countries, the desirable indoor temperature is between 18 and 21°C. Even after their industrial achievements became the paragon of technical modernization, many Japanese did not have central heating in their homes; families congregated around the kotatsu, a sunken space containing, traditionally, a charcoal brazier, later replaced by kerosene, and then electric, heaters.

Engineers express the annual requirement for heating as the total number of heating-degree days: one degree-day accrues for each degree by which the average daily outdoor temperature falls below the specified indoor level. American calculations, based on a room temperature of 20°C, show that the coldest state, North Dakota, has about 2.6 times as many as does the warmest, Florida, while the Canadian values, based on 18°C, show Vancouver has less than 3,000 and Winnipeg nearly 6,000 heating-degree days a year.
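The degree-day definition translates directly into a sum over daily mean temperatures. A minimal sketch follows. The week of temperatures is hypothetical, not measured data, and the two base temperatures are the American (20°C) and Canadian (18°C) conventions cited above.

```python
from collections.abc import Iterable


def heating_degree_days(daily_mean_temps_c: Iterable[float], base_c: float = 18.0) -> float:
    """Sum, over all days, the degrees by which the daily mean falls below base_c.

    Days at or above the base temperature contribute nothing.
    """
    return sum(max(0.0, base_c - t) for t in daily_mean_temps_c)


# Hypothetical week of daily mean temperatures (°C), for illustration only.
week = [-20.0, -15.0, -10.0, 0.0, 5.0, 18.0, 22.0]
print(heating_degree_days(week))             # Canadian-style 18 °C base: 130.0
print(heating_degree_days(week, base_c=20))  # American-style 20 °C base: 142.0
```

Summing a full year of daily means in the same way gives annual totals such as those quoted for Vancouver and Winnipeg.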

Space cooling (first as single-room window units, later as central systems) spread northward across North America as electricity became more affordable. Its spread changed the pattern of peak electricity consumption: previously the peaks were during the coldest and darkest winter months, but the widespread adoption of air conditioning moved short-term (hours to a couple of weeks) consumption peaks to July and August. Air conditioning is still relatively rare in Europe, but has spread not only to all fairly affluent tropical and subtropical places (Singapore, Malaysia, Brunei, Taiwan) but also to the urban middle class of monsoonal Asia (from Pakistan to the Philippines, with the largest concentrations in China’s megacities) and humid Latin America. The relative costs of heating and cooling depend, obviously, on the prevailing climate and the desired indoor temperature.

But what makes modern houses so distinct from their predecessors is the still-expanding array of electricity uses, which requires an elaborate distribution network to ensure reliable and safe supply, and could not work without transformers.

Electricity is generated at voltages between 12.5 and 25 kV, but (as explained in Chapter 1) a combination of low current and high voltage is much preferable for long-distance transmission, because resistive losses in a line rise with the square of the current. So, the generator’s output is first transformed (stepped-up) to between 138 and 765 kV, which lowers the current in the same proportion, before being sent to distant markets, transformed again (stepped-down) to safer, lower, voltages for distribution within cities (usually 12 kV) and then stepped-down to even lower voltages (110–250 V depending on the country) for household use. Transformers can reduce or increase the voltage with almost no loss of energy, very reliably, and very quietly. They use the principle of electromagnetic induction: a loop of wire carrying an alternating current (the transformer’s primary winding) generates a fluctuating magnetic field, which induces a voltage in another loop (the secondary winding) placed in the field, and vice versa. The total voltage induced in a loop is proportional to the number of its turns: if the secondary has twice as many turns as the primary, the voltage will double. Transformers range from massive devices with large cooling fins to small, bucket-size units mounted on poles in front of houses.
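Two relations underpin this paragraph. Current equals power divided by voltage, so line losses (current squared times resistance) fall sharply as voltage rises. An ideal transformer's output voltage scales with its turns ratio. The sketch below shows both. The 100 MW load and 5 ohm line resistance are hypothetical round numbers chosen for illustration, and the loss formula is a first-order estimate that ignores reactance and the reduction in delivered power.

```python
def line_current_a(power_w: float, voltage_v: float) -> float:
    """Current needed to carry power_w at voltage_v (P = V * I)."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """First-order I**2 * R line loss; ignores reactance and the drop in delivered power."""
    i = line_current_a(power_w, voltage_v)
    return i * i * resistance_ohm


def secondary_voltage(primary_v: float, primary_turns: int, secondary_turns: int) -> float:
    """Ideal transformer: output voltage scales with the turns ratio."""
    return primary_v * secondary_turns / primary_turns


POWER_W = 100e6       # hypothetical: 100 MW sent down one line
RESISTANCE_OHM = 5.0  # hypothetical: total line resistance

for kv in (25, 765):  # top generator voltage vs. top transmission voltage from the text
    v = kv * 1e3
    loss = resistive_loss_w(POWER_W, v, RESISTANCE_OHM)
    print(f"{kv} kV: {line_current_a(POWER_W, v):,.0f} A, loss {loss / POWER_W:.2%} of power")

print(secondary_voltage(120, 100, 200))  # twice the turns, twice the voltage: 240.0
```

Raising the voltage about thirty-fold cuts the current thirty-fold and the resistive loss nearly a thousand-fold, which is why stepping up is worth the cost of the transformers.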

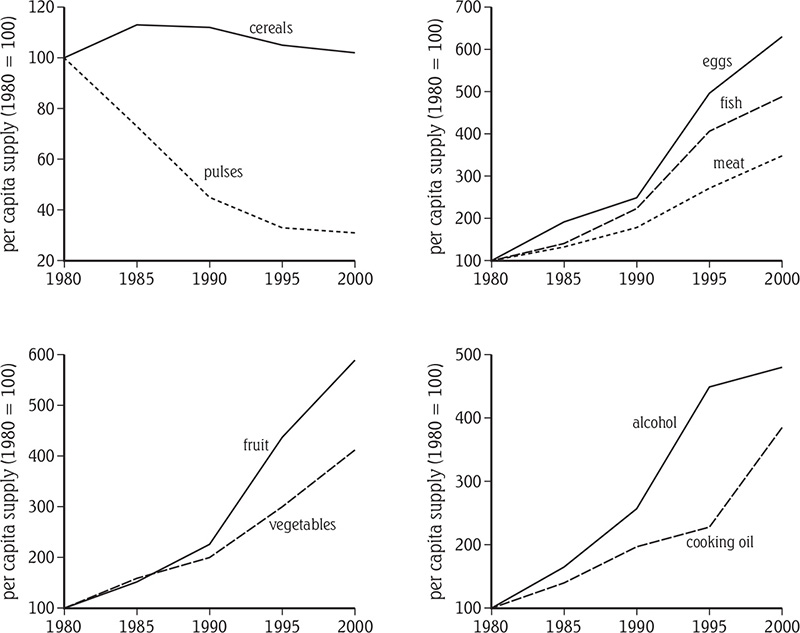

Household electricity use began during the 1880s with low-power lighting, and by 1920 had extended to a small selection of kitchen appliances. Refrigerators and radios came next, then post-1950 Western affluence brought a remarkable array of electrically powered devices for use in the kitchen and workshop, and in recreation and entertainment. However, no other segment of modern energy use has seen such improvements in efficiency, cost, and hence affordability, as has electric lighting. During the early period of household electrification, the norm was one low-power incandescent light bulb (40 or 60 W) per room, so a typical household had no more than about 200–300 W of lighting. Today, American houses commonly have 60–80 lights (some in groups of two to six), adding up to more than 3,000 W. But it would be wrong to conclude that such a house receives ten times as much light as a house in 1900: the actual figure is much higher and, moreover, the flood of new light is astonishingly cheap.

The first, Edisonian, carbon-filament light bulbs of the early 1880s converted a mere 0.15 percent of electricity into visible radiation; even after two decades of improved design, they were still only about 0.6 percent efficient. The introduction of tungsten, the first practical metallic filament, and placing it in a vacuum within the bulb raised the performance to 1.5 percent by 1910; filling the bulb with a mixture of nitrogen and argon brought the efficiency of common light bulbs to about 1.8 percent by 1913. The development of incandescent lights has been highly conservative; hence the filament light bulb you buy today is essentially the same as it was four generations ago. Despite their inefficiency and fragility, and the fact that better alternatives became widely available after World War II, incandescent light bulbs dominated the North American lighting market until the end of the twentieth century.

The origin of more efficient, and hence less expensive, light sources predates World War I, but discharge lamps entered the retail market only during the 1930s. Low-pressure sodium lamps came first, in 1932, followed by low-pressure mercury vapor lamps, generally known as fluorescent lights. These operate on an entirely different principle from incandescent lights. Fluorescent lights are filled with low-pressure mercury vapor and their inside surfaces are coated with phosphors; the electrical excitation of the mercury vapor generates ultraviolet rays, which are absorbed by the phosphors and re-radiated in wavelengths that approximate daylight. Today’s best indoor fluorescent lights convert about fifteen percent of electricity into visible radiation, more than three times as much as the best incandescent lights, and they also last about twenty-five times longer (Figure 25).

Metal halide lights, introduced in the early 1960s, have a warmer color than the characteristic blue-green (cool) of early fluorescents, and are about ten percent more efficient. Another important step was to make the discharge lights in compact sizes, and with standard fittings, so they would not require special fixtures and could replace incandescent lights in all kinds of household applications. Initially, these compact fluorescents were rather expensive but large-scale production has lowered prices. Instead of a 100 W incandescent light, all we need is a 23 W compact, which will last 10,000 hours (nearly fourteen months of continuous light). Light emitting diodes (LED) are even better: they will last 50,000 hours and their efficacy (light produced per watt) will soon surpass that of today’s most efficient low-pressure sodium lamps used for outdoor illumination.
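The swap from a 100 W incandescent bulb to a 23 W compact fluorescent can be put in kilowatt hours. A minimal sketch follows. It uses only the wattages and the 10,000-hour lifetime quoted above; the average month length of 365/12 days is the one added assumption.

```python
def lifetime_savings_kwh(old_w: float, new_w: float, hours: float) -> float:
    """Electricity saved by running the lower-wattage lamp for `hours`."""
    return (old_w - new_w) * hours / 1000


LIFETIME_H = 10_000
print(lifetime_savings_kwh(100, 23, LIFETIME_H))      # 770.0 kWh over the lamp's life
print(f"{LIFETIME_H / 24 / (365 / 12):.1f} months")   # continuous operation: 13.7 months
```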

Figure 25 Improvements of lamp efficacy, 1880–2000 (based on an image at http://americanhistory.si.edu/)

When these technical advances are combined with lower electricity prices and higher real wages, electric light appears to be stunningly cheap. In the U.S., the average (inflation-adjusted to 2000 values) cost of electricity fell from 325 cents per kilowatt hour (kWh) in 1900 to about seven cents in 2015, while the average (inflation-adjusted) hourly manufacturing wage rose from $4 in 1900 to about $22 in 2015. Factoring in efficiency improvements, a lumen of U.S. electric light was more than three orders of magnitude (roughly 3,000 times) more affordable in 2015 for a factory worker’s family than it was in 1900! Only the post-1970 fall in microprocessor prices offers a more stunning example of performance and affordability gains.
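The affordability gain combines two factors: how many hours of work buy a kilowatt hour, and how much light each kilowatt hour yields. The text does not state which lamp efficacies lie behind its ~3,000-fold figure. This sketch therefore computes the price-and-wage factor from the quoted numbers and then backs out the efficacy gain that the 3,000 estimate implies.

```python
# Inflation-adjusted values quoted in the text.
PRICE_1900, PRICE_2015 = 3.25, 0.07   # $ per kWh
WAGE_1900, WAGE_2015 = 4.0, 22.0      # $ per hour of manufacturing work


def work_hours_per_kwh(price: float, wage: float) -> float:
    """Hours of work needed to buy one kilowatt hour."""
    return price / wage


electricity_gain = work_hours_per_kwh(PRICE_1900, WAGE_1900) / work_hours_per_kwh(PRICE_2015, WAGE_2015)
# The text's lamp efficacies are not specified, so infer the gain its ~3,000x figure implies.
implied_efficacy_gain = 3000 / electricity_gain

print(f"kWh affordability gain: {electricity_gain:.0f}x")       # 255x
print(f"implied lamp efficacy gain: {implied_efficacy_gain:.1f}x")  # 11.7x
```

Electricity alone became about 255 times more affordable, so the 3,000-fold claim implies roughly a twelvefold gain in light per kilowatt hour. That lies between the gains implied by the efficiencies given earlier for incandescent bulbs (0.6 to 1.8 percent) and fluorescent lights (about fifteen percent).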

The second most common category of household devices is appliances that pass current through high-resistance wires to generate heat. The highest demand comes from electric stoves (with ovens and usually four stovetop heating elements, adding up to as much as 8–9 kW on the highest settings). Clothes dryers rate up to 4 kW, a hair dryer 1.5 kW. The ubiquitous two-slice toaster rates between 750 and 1,100 W, and small appliances, such as coffee makers and rice cookers and hot water thermopots (common in all but the poorest households of East Asia), draw from 500 to 1,500 W. Small electric heaters are used to make cold rooms less chilly in many northern countries, but all-electric heating is common only in the world’s two leading producers of inexpensive hydroelectricity, Canada (especially in Quebec and Manitoba) and Norway (where some sixty percent of households rely on electric space heating).

Small motors are the third important class of common household electricity converter. During Canadian winters, the most important motor (rated at 400–600 W) is the one that runs the blower that distributes the air heated by the natural gas furnace in the basement. But the one that comes on most often is a smaller, 100–200 W, motor that compresses the working fluid in a refrigerator. Small motors also convert electricity to the mechanical (rotary) power needed for many household tasks in the kitchen or a workshop previously performed by hand. The single-phase induction motor, patented by Nikola Tesla (1856–1943) in 1888 and distinguished by its characteristic squirrel-cage rotor, is the most common. These sturdy devices run for years without any maintenance, powering appliances from sharp-bladed food processors and dough mixers (250–800 W) to floor, desktop, and ceiling fans (100–400 W).

Finally, there is a major and diverse class of electricity converters: electronic devices. The ownership of TVs and microwave ovens is almost universal, not only in affluent countries but among the growing middle classes of Asia and Latin America. Personal computers, rapidly ascendant during the 1980s and 1990s, are now much less popular than lighter, portable laptops, tablets, and smartphones, while the largest flat-screen televisions have grown to wall-size dimensions. These new electronic gadgets have small unit-power requirements: flat-screen televisions draw less than 100 W, the active central processing units of desktop computers around 100 W, monitors up to 150 W, a laptop about 50 W. This means that, for example, emailing non-stop on a laptop for twelve hours will consume 0.6 kWh, or about as much electricity as an average clothes dryer uses in ten minutes. But given the hundreds of millions of computers (and printers, fax machines, copiers, and scanners) now owned by households, and the energy needed by the internet’s infrastructure (servers, routers, repeaters, amplifiers), this latest domestic electricity market already adds up to a noticeable share of total electricity demand in the world’s richest countries, and growing web traffic will further increase that demand. For example, by 2020 U.S. data centers are expected to use fifty-three percent more electricity than in 2013, and that would require about twenty large (1 GW) stations operating with a seventy percent capacity factor.
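Both comparisons in this paragraph reduce to energy = power × time. A minimal sketch follows. It assumes the clothes dryer draws its full 4 kW rating from the previous paragraph and that a year has 8,760 hours; the station count, size, and capacity factor are the text's.

```python
def kwh(power_w: float, hours: float) -> float:
    """Energy used by a device drawing power_w for the given hours."""
    return power_w * hours / 1000


def annual_twh(capacity_gw: float, capacity_factor: float) -> float:
    """Yearly output of generating capacity running at the given capacity factor."""
    return capacity_gw * capacity_factor * 8760 / 1000


laptop = kwh(50, 12)          # twelve hours of emailing on a ~50 W laptop
dryer = kwh(4000, 10 / 60)    # ten minutes of a 4 kW clothes dryer (assumed full rating)
print(f"laptop {laptop:.2f} kWh, dryer {dryer:.2f} kWh")
print(f"twenty 1 GW stations at 70%: {annual_twh(20, 0.7):.1f} TWh/year")
```

At its full rating the dryer uses slightly more (about 0.67 kWh) in ten minutes than the laptop does in twelve hours; a dryer drawing 3.6 kW would match the laptop exactly. Twenty 1 GW stations at a seventy percent capacity factor would generate roughly 123 TWh a year.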

Electronic devices are the main reason why modern households use electricity even when everything is turned off. All remote-controlled devices (televisions, video recorders, audio systems), as well as security systems, telephone answering machines, fax machines, and garage door openers use, even when idle, small (sometimes more than ten watts, usually less than five) amounts of electricity. In America these phantom loads (vampire power) now commonly add up to 50 W per household, and nationwide, they consume more electricity than is used annually in Hong Kong or Singapore. But most of these losses could be drastically reduced by installing controls that limit the leakage to less than 0.1 W per device.
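A phantom load runs every hour of the year, so even 50 W adds up. The sketch below converts the per-household figure into annual kilowatt hours. The national total rests on an assumed count of about 120 million U.S. households, a round number used for illustration rather than a figure from the text.

```python
HOURS_PER_YEAR = 8760


def standby_kwh_per_year(watts: float) -> float:
    """Electricity drawn in a year by a load that never switches off."""
    return watts * HOURS_PER_YEAR / 1000


per_household = standby_kwh_per_year(50)   # 438.0 kWh
HOUSEHOLDS = 120e6  # assumption: rough U.S. household count, for illustration only
national_twh = per_household * HOUSEHOLDS / 1e9
print(f"{per_household:.0f} kWh per household, about {national_twh:.0f} TWh nationwide")
```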

Transport energies: road vehicles and trains

As individual economies become more affluent, the average number of people per car is converging toward the saturation level of just above, and in some cases, even slightly less than, two. This growth in car ownership has been achieved thanks to the mass production of affordable designs of family cars. Ford’s Model T (1908–1927, a total of about fifteen million vehicles) was the trendsetter and the Volkswagen (Beetle) its single most successful embodiment. This car’s initial specifications were laid down by Adolf Hitler in 1933, and its production (in Germany between 1945 and 1977, then in Brazil, and until 2003 in Mexico) amounted to 21.5 million vehicles. France’s contribution was the Renault 4 CV, Italy’s the Fiat Topolino, and Britain’s the Austin Seven. With spreading affluence came more powerful high-performance cars, larger family cars, and, starting in the U.S. during the 1980s, the ridiculously named sports utility vehicle (SUV: what sport is it to drive it to work or a shopping center?).

In 2015 the average number of people per passenger vehicle was about 1.5 in Italy, and 1.7 in Japan, France, and the U.S. China is now the world’s largest car market but in 2015 there were still nearly eight people per car. The total number of vehicles worldwide (passenger cars, buses, vans, trucks) passed 1.25 billion in 2014. Recent annual passenger car sales have been in excess of seventy million units, with nearly two-thirds sold in North America and China. Volkswagen, Toyota, GM, and Renault-Nissan were the largest carmakers in 2015, selling about half of all vehicles made worldwide. The typical car use varies widely, from less than 5,000 km a year in some crowded Asian cities, to just above 10,000 km in major European countries (a rate that has been fairly steady for a long time), to about 18,000 km in the U.S. Unfortunately, motor vehicles are also responsible for 1.25 million accidental deaths every year (and more than ten times as many serious injuries), and motor vehicle emissions are the principal cause of the photochemical smog that now affects, seasonally or continuously, almost all mega-cities and their surrounding areas (an increasing incidence of respiratory problems and damage to crops are its major impacts).

Refinery statistics show a total global output of refined products of about 3.7 billion tonnes in 2015, including nearly one billion tonnes of gasoline and more than 1.3 billion tonnes of diesel fuel. Passenger cars are by far the largest consumers of gasoline but some of it is also used by small airplanes, and by tens of millions of boats, snowmobiles, lawnmowers, and other small motors, while most of the diesel fuel is consumed by trucks, trains, and ships.

The fundamentals of internal combustion engines, the world’s most abundant mechanical prime movers, have not changed for more than a century: a cycle of four strokes (intake, compression, combustion, exhaust), the use of gasoline and spark ignition, and the conversion of the reciprocating movement of pistons to the rotary motion of a crankshaft. But a steady stream of technical improvements has made modern engines and transmissions lighter, more durable, and more reliable, and vehicles more affordable. The widespread use of microprocessors (American cars now have as many as fifty, with a combined power greater than that of the processors in the 1969 Apollo 11 lunar landing module) to control automatic transmission, anti-lock brakes, catalytic converters, and airbags has made cars more reliable, and converted them into complex mechatronic machines.

Despite these advances, internal combustion engines remain rather inefficient prime movers and the overall process of converting the chemical energy of gasoline to the kinetic energy of a moving passenger car is extraordinarily wasteful. A modern gasoline-fueled, four-stroke engine, in good repair, will convert no more than twenty percent of its fuel into reciprocating motion: the rest is waste heat transferred to the exhaust (nearly forty percent), cooling water (a similar share), and heat generated by engine friction. In towns or cities, where most of the world’s cars are used, at least five percent of the initial energy input is lost idling at red lights; in cities with heavy traffic this loss may easily be on the order of ten percent. Finally, an increasing share of energy is used by auxiliary functions, such as power steering and air conditioning. This means that no more than thirteen and as little as seven percent of the energy of the purchased gasoline will make it to the transmission, where friction losses will claim a further five percentage points, leaving just two to eight percent of the gasoline actually converted to the kinetic energy of the moving vehicle.
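The chain of losses can be traced step by step, treating each loss as percentage points of the original fuel energy. In this sketch the idling (5 and 10 points) and transmission (5 points) losses come from the text. The auxiliary losses (2 and 3 points) are not given in the text; they are assumptions back-calculated so that the totals reaching the transmission match the quoted thirteen and seven percent.

```python
def remaining_pct(start_pct: float, losses_pct_points: list[float]) -> float:
    """Subtract successive losses, each in percentage points of the fuel energy."""
    pct = start_pct
    for loss in losses_pct_points:
        pct -= loss
    return pct


ENGINE_PCT = 20.0        # best case from the text: at most ~20% leaves the engine as motion
TRANSMISSION_LOSS = 5.0  # further friction loss in the transmission (text)

# [idling, auxiliary]: idling values are from the text; auxiliary values are
# assumptions chosen so the results match the text's 13% and 7%.
scenarios = {"light traffic": [5.0, 2.0], "heavy traffic": [10.0, 3.0]}

for name, losses in scenarios.items():
    at_transmission = remaining_pct(ENGINE_PCT, losses)
    at_wheels = remaining_pct(at_transmission, [TRANSMISSION_LOSS])
    print(f"{name}: {at_transmission:.0f}% reaches the transmission, {at_wheels:.0f}% moves the car")
```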

ENERGY EFFICIENCY OF CARS

Even an efficient compact car (around six liters/100 km) will need nearly 2 MJ of fuel per kilometer. With a single occupant its driver (weighing about 70 kg) will account for around five percent of the vehicle’s total mass, so the specific energy need will be nearly 30 kJ/kg of body mass. An adult briskly walking the same distance will need about 250 kJ, or 3.5 kJ/kg, roughly an order of magnitude lower. This difference is not surprising when we consider how much energy it takes to propel the vehicle’s large mass (which has been steadily creeping up even for small European cars, from about 0.8 t in 1970 to 1.4 t in 2015; the average weight of new U.S. vehicles is now 1.8 t).
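The car-versus-walking comparison can be reproduced from the quoted fuel rate and body mass. This sketch assumes an energy density of 32 MJ per liter of gasoline, a typical lower heating value that the text does not state; a value nearer 34 MJ/L would put the car at about 2.0 MJ/km.

```python
GASOLINE_MJ_PER_L = 32.0   # assumption: typical lower heating value of gasoline
BODY_KG = 70.0


def car_mj_per_km(l_per_100km: float) -> float:
    """Fuel energy per kilometer for a given fuel consumption."""
    return l_per_100km / 100 * GASOLINE_MJ_PER_L


car_kj_per_kg = car_mj_per_km(6.0) * 1000 / BODY_KG   # per kg of the driver's body mass
walk_kj_per_kg = 250 / BODY_KG                          # brisk walking, 250 kJ per km (text)
print(f"car: {car_mj_per_km(6.0):.2f} MJ/km, {car_kj_per_kg:.1f} kJ/kg")
print(f"walking: {walk_kj_per_kg:.2f} kJ/kg; ratio {car_kj_per_kg / walk_kj_per_kg:.1f}x")
print(f"total mass if the driver is 5%: {BODY_KG / 0.05:.0f} kg")
```

The ratio comes out near 7.7, and the implied vehicle-plus-driver mass of 1,400 kg matches the 2015 figure for small European cars.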

What is surprising is that even the most efficient cars have an energy cost per p-km only marginally better than that of the latest airliners; for many vehicles the cost is much higher than flying. High fuel taxes in Europe and Japan have kept vehicle sizes smaller there than in North America, where the first deliberate improvements in efficiency were forced only by OPEC’s two rounds of dramatic crude oil price increases. There, the Corporate Average Fuel Economy (CAFE) standards doubled the fleet’s performance to 27.5 mpg (8.6 l/100 km) between 1973 and 1987; better engines and transmissions, lighter yet stronger bodies, and reduced aerodynamic drag were the main factors. Japanese automotive innovations were particularly important for making efficient, reliable, and affordable cars. In the 1990s, Toyotas (basic Tercels and upscale Camrys) and Hondas (Civics and Accords) became America’s bestselling cars; by the end of the twentieth century, most of them were actually made in the U.S. and Canada. Unfortunately, the post-1985 decline of crude oil prices eliminated further CAFE gains, and the overall fleet performance actually worsened with the rising popularity of vans, pick-up trucks, and SUVs. CAFE standards began to rise again in 2011 (to 30.2 mpg), and the goal is 50 mpg by 2022. Even so, in 2016 the least efficient SUV (Mercedes-Benz AMG G65) was rated at just 12 mpg (19.6 l/100 km), so that with a driver and a single passenger that vehicle consumes 3.4 MJ/p-km. In contrast, the first generation of airliners consumed about 5 MJ/p-km, the Boeing 777 needs about 1.5 MJ/p-km on a short flight, and the Boeing 787 (Dreamliner) will use as little as 0.9 MJ/p-km on an intercontinental flight.
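
The conversions between U.S. miles per gallon and liters per 100 km, and from there to energy per passenger-kilometer, can be checked as follows. This is a sketch. The energy density of gasoline is left as a parameter because the two available values give different answers: with the 32 MJ/l used elsewhere in this chapter, the G65 comes out near 3.1 MJ/p-km, while the 3.4 MJ/p-km quoted above corresponds to roughly 35 MJ/l. The 34.8 MJ/l below is an assumed higher value.

```python
US_GALLON_L = 3.785411784   # liters per U.S. gallon
MILE_KM = 1.609344          # kilometers per mile

def mpg_to_l_per_100_km(mpg):
    """Convert U.S. miles per gallon to liters per 100 km."""
    return 100 * US_GALLON_L / (MILE_KM * mpg)

def mj_per_passenger_km(mpg, occupants, mj_per_litre):
    """Fuel energy per passenger-kilometer for a car carrying `occupants` people."""
    return mpg_to_l_per_100_km(mpg) / 100 * mj_per_litre / occupants

print(f"27.5 mpg = {mpg_to_l_per_100_km(27.5):.1f} l/100 km")   # CAFE level of 1987
print(f"12 mpg   = {mpg_to_l_per_100_km(12):.1f} l/100 km")     # least efficient SUV, 2016
for density in (32.0, 34.8):   # 34.8 MJ/l is an assumed higher value
    print(f"G65, 2 people, {density} MJ/l: {mj_per_passenger_km(12, 2, density):.1f} MJ/p-km")
```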

Standard fuel economy ratings are established during controlled tests that simulate city and highway driving, and actual performance in everyday driving is rarely that good. What can we do to improve it? Under-inflated tires are probably the most common cause of easily avoidable energy loss: their flexing absorbs energy, and their larger footprint increases rolling friction and heats up the tire. Bad driving and idling habits (rapid acceleration, or running the engine while waiting for more than a minute or so) add to the wasted fuel. As for the choice of fuel, a car designed for regular gasoline gains no advantage, in efficiency, cleanliness, or speed, from being filled with premium (high-octane) gasoline. Octane ratings rise with the fuel’s ability to resist knocking, and most new cars are designed to run well on regular gasoline (87 octane). No matter what the make or type of vehicle, the efficiency of driving follows a pronounced hump-shaped curve: it is at its maximum between 45 and 55 km/h, somewhat worse (by ten to twenty percent) at lower speeds, and as much as forty percent lower at speeds above 100 km/h. This, together with the noticeable reduction in fatal accidents, is the key argument for limiting the maximum speed to no more than 110 km/h.

In contrast, the fastest rapid intercity trains now travel at up to 300 km/h, and each of them can carry more than 1,000 passengers in comfortable, airline-style seats. They have an accident rate nearly an order of magnitude lower than driving, and no form of land transport can match their low energy cost per p-km (nearly always below 1 MJ). The Japanese shinkansen, the first rapid train (its scheduled service started on October 1, 1964), and the French TGV move people with less than 0.4 MJ/p-km; their maximum short-term speeds in regular service are close to, or even above, 300 km/h.

All rapid trains are powered by electric motors supplied via pantographs from overhead copper (or copper-clad steel) wires. The shinkansen pioneered the design of a rapid train without a locomotive: instead, every car has four motors (the latest 700 series trains have sixty-four motors with a total capacity of 13.2 MW). This arrangement makes the frequent accelerations and decelerations of relatively short inter-station runs much easier; moreover, the motors act as dynamic brakes when they become generators driven by the wheels. The best proof of the train’s (and the track’s) admirable design and reliability is the fact that during the first fifty years of its service (1964–2014) the Tōkaidō line between Tōkyō and Ōsaka carried more than five billion people without a single accidental fatality (Figure 26).

The TGV (its first line has been operating since 1981) also uses its synchronous motors for dynamic braking at high speeds, but, unlike the shinkansen, it has two locomotives (each rated at 4.4 MW) in every trainset. Several European countries have been trying to catch up with French accomplishments: Spain got its first TGV-derived train (the Madrid–Seville AVE) in 1992, while Italy’s ETR500 has been operating since 1993, both with top speeds of 300 km/h. In contrast, Amtrak’s Boston–New York–Washington Acela, with a peak speed of 240 km/h, remains North America’s only rapid train service, a singularity explained by low population density and highly competitive road and air links. But every large metropolitan area in North America, Europe, and Japan has several major rail lines, and many have fairly dense networks of slower commuter trains powered either by electricity or by diesel locomotives.

Figure 26 Tōkaidō line Nozomi train in Kyōtō station (photo Vaclav Smil)

Flying high: airplanes

Commercial flight remained a rare, expensive, and uncomfortable experience as long as it was dominated by propeller engines (that is, until the late 1950s). For many hours, passengers had to endure insufficiently pressurized cabins, propeller noise, vibration induced by the four-stroke engines, and a great deal of turbulence (low cruising altitudes subjected the relatively small airplanes to bumpy conditions). The speed of propeller airplanes gradually increased, but even the best machines did not pass 320 km/h, and hence it took fifteen and a half hours (with three refueling stops) to fly from New York to Los Angeles, and more than eighty hours from England to Japan. The capacity of these planes was also limited: the legendary DC-3 (first flown in 1935) accommodated at most thirty-six people, and Pan Am’s huge Clipper could take only seventy-four passengers.

Jet airplanes changed everything, with maximum air speeds above 900 km/h, capacities of up to five hundred people, trans-American crossings in less than six hours, and cruising altitudes of 10–12 km, high above tropospheric turbulence. The first modern jet airliners were derived from the largest post-World War II military planes: the most important was the B-47 Stratojet bomber, with its swept wings and engines hung on struts under the wings, two enduring design features of all large commercial jets. Many innovations were needed to improve the performance and reduce the operating costs of these machines and so make air travel an affordable and fairly common event. Stronger aluminum alloys, entirely new composite materials, advances in aerodynamic design, and ingenious electronic navigation and landing devices (radar above all) were the essential ingredients, but better engines made the greatest difference.

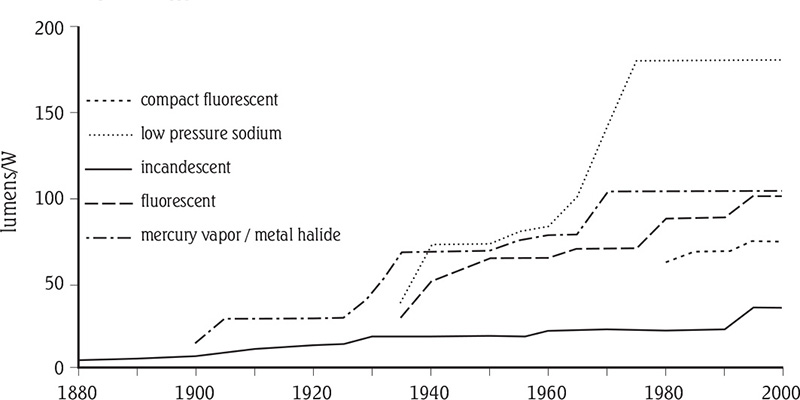

All jet airplanes are propelled forward as a reaction to the backward thrust of their engines. The first commercial planes of the 1950s used slightly modified military turbojet engines. These compact machines compressed all the air that passed through the frontal intake (compression ratios were just 5:1 for the earliest designs; now they commonly go above 30:1) and fed it into the combustor, where a fine fuel spray was ignited and burned continuously. The escaping hot gases first rotated a turbine, which drove the compressor, and then, as they left through a nozzle, created the thrust that propelled the plane. Thrust reversers are flaps deployed after landing to redirect the gas forward and slow down the aircraft (the engine itself cannot reverse the flow of hot gas). Gas turbines were also configured to drive propellers through reduction gears: these turboprops are now common only among small commuter aircraft, while all large commercial planes are powered by turbofans.
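
Why the rise in compression ratio matters can be illustrated with the ideal Brayton cycle, the textbook idealization of how gas turbines work. This is a sketch under idealized, cold-air-standard assumptions: lossless components, a constant specific-heat ratio of 1.4, and the compression ratios quoted above treated as pressure ratios. It is not a model from this book. Real engines fall well short of these values, but the trend is the same.

```python
GAMMA_AIR = 1.4  # ratio of specific heats for air (cold-air-standard assumption)

def ideal_brayton_efficiency(pressure_ratio, gamma=GAMMA_AIR):
    """Thermal efficiency of an ideal Brayton cycle: 1 - r**(-(gamma - 1) / gamma)."""
    return 1.0 - pressure_ratio ** (-(gamma - 1.0) / gamma)

for ratio in (5, 30):
    print(f"{ratio:>2}:1 -> ideal efficiency {ideal_brayton_efficiency(ratio):.0%}")
```

Under these assumptions, moving from 5:1 to 30:1 raises the ideal efficiency from about 37 to about 62 percent.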

TURBOFAN ENGINES

Turbojets have two basic disadvantages for commercial aviation: peak thrust occurs at very high, supersonic speeds, and fuel consumption is relatively high. These drawbacks can be remedied by placing a large-diameter fan in front of the engine, driven by a second turbine placed after the primary turbine that powers the compressor; the fan compresses the incoming air to about twice the inlet pressure. Because the air compressed by the fan largely bypasses the combustor, it reduces specific fuel consumption, and it adds another (cool and relatively slow) stream of exhaust gases to the rapid outflow of hot gas from the core, generating more thrust. Figure 27 is a cutaway view of the GE90, a turbofan powering Boeing 777 jetliners, with a bypass ratio of nine. In 2016 the highest bypass ratios belonged to the Rolls-Royce Trent 1000 (used to power the Boeing 787), at 10, and Pratt & Whitney’s PW1000G (powering the Airbus A320neo and Bombardier CSeries), at 12. Today’s turbofans can get planes airborne in less than twenty seconds, guaranteeing a much less harrowing experience for white-knuckled fliers!
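
A bypass ratio is the mass of air flowing around the core divided by the mass flowing through it, so the share of intake air that actually passes through the combustor is 1/(1 + ratio). The short sketch below uses only that definition and the ratios quoted above.

```python
def core_share_of_airflow(bypass_ratio):
    """Fraction of intake air mass passing through the core (and combustor).

    bypass_ratio = mass flow around the core / mass flow through the core
    """
    return 1.0 / (1.0 + bypass_ratio)

for engine, ratio in {"GE90": 9, "Trent 1000": 10, "PW1000G": 12}.items():
    print(f"{engine:<10} {ratio:>2}:1 -> {core_share_of_airflow(ratio):.1%} through the core")
```

At a bypass ratio of 12, less than eight percent of the air entering the engine is burned with fuel.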

Turbofans are also much quieter, as the high-speed core exhaust is enveloped by slower-moving bypass air. The diameters of the largest turbofans are nearly 3.5 m (just a few percent less than the internal fuselage diameter of a Boeing 737!), and because the temperature of their combustion gases (around 1500°C) is above the melting point of the rotating blades, efficient internal air cooling is a must. Despite such extreme operating conditions, properly maintained turbofans can operate for up to 20,000 hours, the equivalent of two years and three months of non-stop flight. Their extraordinary reliability results in fewer than two accidents per million departures, compared to more than fifty for the turbojets of the early 1960s.

Figure 27 Cutaway view of GE90 jet engine (image courtesy of General Electric)

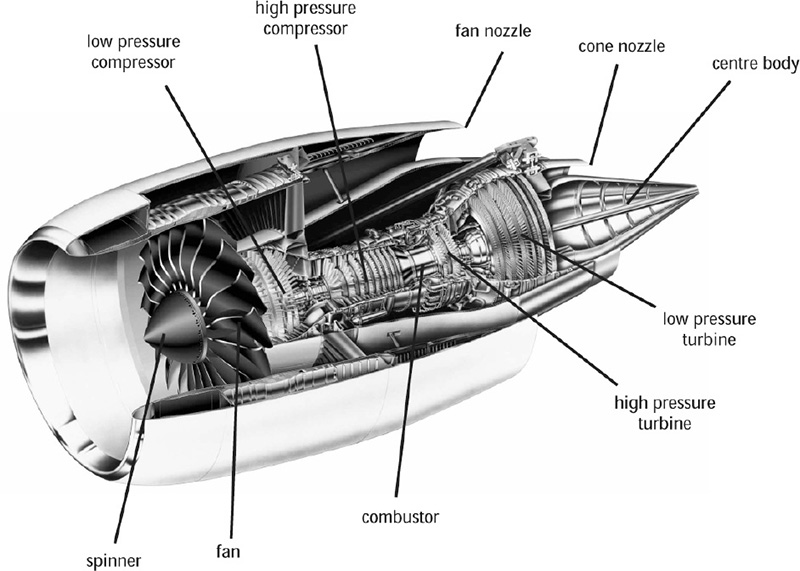

With so much power at our command, it is not turbofans that now limit the size of commercial planes but rather the expense of developing new designs, unavoidable infrastructural constraints (length of runways, loading and offloading capacity, number of terminal slots), and safety considerations that make planes of more than 1,000 passengers unlikely. The twin-deck Airbus A380 has been certified for a maximum of 853 passengers, but actual configurations in service range from 379 passengers (Singapore Airlines, three-class layout) to 615 passengers (Emirates, two-class layout). The development of the A380 was a key strategic move by Airbus (established in 1970) in its fight with Boeing for dominance in the global market for large passenger aircraft. In 2003 Airbus was, for the first time, slightly ahead of Boeing in overall annual deliveries of large passenger planes (but Boeing regained primacy in 2012). Airbus’s most common planes are the smaller jets of the A320 family (107–230 passengers) and the larger planes of the A330 and (four-engine) A340 family, typically for about 350 passengers. But the Boeing 737 remains the bestselling airliner, and the pioneering and daring 747 (Figure 28) remains the most revolutionary, if not the best, airliner ever built (with more than 1,500 sold by 2016). Boeing’s other highly successful designs include the 767, which has dominated trans-Atlantic flights, and the 777, whose 200LR model (test-flown in March 2005) is the world’s longest-range (17,395 km) airplane, able to fly directly between almost any two airports in the world. Boeing’s latest model, the 787, was designed to be the world’s most energy-efficient and most comfortable airliner, with a new cabin layout, larger windows, and better air quality, but its service entry was delayed by six years and its final cost was a multiple of the original budget projection.

Figure 28 Boeing 747-8I in Lufthansa service (from Wikimedia)

Kerosene is a much better fuel for jet engines than gasoline. It has a higher density (0.80 as against 0.72 kg/l) and hence a higher volumetric energy density (34.5 compared to 32.0 MJ/l), so more energy can be stored in the available tanks. As a heavier refined fuel, it is also cheaper, has lower evaporation losses at high altitudes, poses a lower risk of fire during ground handling, and produces more survivable crash fires. Jet A fuel, used in the U.S., has a maximum freezing point of –40°C, while Jet A-1, with a freezing point of –47°C, is used on most long international flights, particularly on northern and polar routes during winter. Airliners store their fuel in their wings; some also have a central (fuselage) tank and a horizontal stabilizer tank. They must carry enough fuel for the projected flight and for any additional time that might be needed because of weather conditions (such as unexpectedly strong headwinds) or airport congestion.
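
Dividing the volumetric energy densities by the densities shows that kerosene’s advantage lies in volume, not mass: per kilogram, gasoline actually carries slightly more energy. A sketch using the values quoted above:

```python
# (density in kg/l, energy density in MJ/l), values as given in the text
FUELS = {"kerosene": (0.80, 34.5), "gasoline": (0.72, 32.0)}

def mj_per_kg(density_kg_per_l, mj_per_l):
    """Gravimetric energy density from density and volumetric energy density."""
    return mj_per_l / density_kg_per_l

for name, (density, mj_l) in FUELS.items():
    print(f"{name:<9} {mj_l:.1f} MJ/l, {mj_per_kg(density, mj_l):.1f} MJ/kg")

kero, gas = FUELS["kerosene"][1], FUELS["gasoline"][1]
print(f"kerosene stores {kero / gas - 1:.0%} more energy per liter of tank")
```

Because an airliner’s tank volume is fixed by its wing structure, the roughly eight percent gain per liter matters more than the small deficit per kilogram.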

As already noted, airliners offer a surprisingly energy-efficient form of long-distance passenger transport. Thanks to improvements in inherently efficient gas turbines, and to their ability to carry hundreds of passengers fairly comfortably, airliners use less fuel per passenger than two-person intercity car trips longer than about 500 km. The unsurpassed speed and convenience of long-distance flying pushed global air traffic to nearly six trillion p-km in 2016. This is clear testimony to the extent to which jet-powered flight has changed the way we do business, maintain family ties, and spend our free time. And because jet engines have become more efficient, fuel consumption has been rising more slowly than traffic; by 2015 it was equivalent to about seven percent of all liquid fuels used in transportation.

Embodied energies: energy cost of materials, food – and energy

People have always asked “how much” when buying goods and services. Before OPEC’s first round of steep oil price increases, however, only those companies whose energy bills accounted for most, or a very large share, of their overall production costs traced their energy expenditures in detail in order to manage and reduce those outlays. Rising energy prices brought many studies that calculated the energy costs of products ranging from bulk industrial goods (basic metals, building materials, and chemicals) to consumer products (from cars to computers) and foodstuffs. This information has not changed the habits of the average Western consumer, who is still utterly unaware of either the overall or the relative energy cost of everyday products and services. It has, however, been very helpful to producers trying to reduce the energy cost of their activities: only a detailed account of the fuel and electricity needs of individual processes, or of the components of a product, can identify the management opportunities and technical improvements that will help to minimize those outlays.

Energy cost analysis is simple only when it is limited to a single process with one, or a few, obvious direct energy inputs (for example, coke, natural gas, and electricity for producing pig iron in a blast furnace). It becomes very complex, and involves debatable choices of analytical boundaries, when the goal is to find the overall energy cost of such complex industrial products as a passenger car. A complete analysis should account not only for the energy used in assembling the car, but also for the fuels and electricity embodied in its structural materials (metals, plastics, rubber, glass) and in its electronic controls. The third level of energy cost adds the energy costs of the capital equipment used to produce the major material inputs and to build the production facilities. The next step is to find the energy cost of the energy that has made all this possible. At that point, however, it becomes clear that including higher-order inputs will result in rapidly diminishing additions to the overall total: capturing the first two or three levels of a product’s energy cost will usually give us eighty to ninety percent of the total.
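
The rapidly diminishing additions can be illustrated with a deliberately simple, hypothetical model in which each level of analysis adds a fixed fraction of the energy added by the previous one. The decay factor of 0.45 below is an assumption, chosen only because it reproduces the eighty to ninety percent captured by the first two or three levels. Real products do not follow a neat geometric series.

```python
def cumulative_shares(decay, levels):
    """Share of total embodied energy captured after each level of analysis.

    Hypothetical model: level k contributes decay**k (k = 0, 1, 2, ...), so the
    grand total over all levels is the geometric-series sum 1 / (1 - decay).
    """
    total = 1.0 / (1.0 - decay)
    captured, shares = 0.0, []
    for k in range(levels):
        captured += decay ** k
        shares.append(captured / total)
    return shares

for level, share in enumerate(cumulative_shares(0.45, 4), start=1):
    print(f"first {level} level(s): {share:.0%} of the total")
```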

It should come as no surprise that many published figures of energy cost are nearly identical. This reflects the widespread diffusion of numerous advanced industrial techniques, or the worldwide use of identical processes or products: as noted in the previous section, the world now has only two makers of large passenger airplanes, and only three companies make most of their engines. At the same time, international comparisons show many basically identical products made with substantially different energy inputs, disparities caused by differences in industrial infrastructure and management. I will comment on the energy costs of a small number of the most important items (judged by the overall magnitude of their output) in four key categories: bulk raw materials, large-volume industrial inputs, major consumer products, and basic foodstuffs. All values will be expressed in gigajoules per tonne (GJ/t) of the final product (for comparison, the energy content of crude oil is 42 GJ/t).

Basic construction materials require only extraction (wood, sand, and stone) or extraction followed by heat processing (bricks, cement, and glass). Excavating sand may take as little as 0.1 GJ/t and quarrying stone less than 1 GJ/t. Despite the near-total mechanization of modern lumbering, construction wood costs mostly between 1.5 and 3 GJ/t, and the best ways to produce cement now require less than 3.5 GJ/t. Making concrete (a mixture of cement, sand or gravel aggregate, and water) costs very little additional energy, but reinforcing concrete with steel makes it nearly three times as energy-intensive. The most energy-intensive materials commonly used in house construction are insulation and plate glass, at up to 10 GJ/t. Integrating these inputs for entire buildings results in totals of around 500 GJ (equivalent to about 12 tonnes of crude oil) for an average three-bedroom North American bungalow, and more than 1,500 times as much for a 100-storey skyscraper of 1,000 m² per floor. In commercial buildings and residential high-rises, steel is most commonly the material with the highest aggregate energy cost.
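
The building totals convert readily into crude oil equivalents using the 42 GJ/t given earlier. In this sketch the skyscraper figure uses exactly 1,500 times the bungalow total, so it is a lower bound for the “more than 1,500 times” in the text.

```python
CRUDE_OIL_GJ_PER_T = 42.0   # energy content of crude oil

def oil_equivalent_t(energy_gj):
    """Tonnes of crude oil with the same energy content."""
    return energy_gj / CRUDE_OIL_GJ_PER_T

bungalow_gj = 500.0
skyscraper_gj = 1_500 * bungalow_gj          # lower bound
skyscraper_floor_m2 = 100 * 1_000            # 100 storeys x 1,000 m2

print(f"bungalow:   {oil_equivalent_t(bungalow_gj):.0f} t of oil")
print(f"skyscraper: {oil_equivalent_t(skyscraper_gj):,.0f} t of oil, "
      f"{skyscraper_gj / skyscraper_floor_m2:.1f} GJ per m2 of floor area")
```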

ENERGY COST OF METALS

Steel remains the structural foundation of modern civilization. It is all around us, either exposed (in vehicles, train and ship bodies, appliances, bridges, factories, oil drilling rigs, and transmission towers) or hidden (in reinforced concrete and skyscraper skeletons). It is touched many times a day (kitchen and eating utensils, surgical instruments, and industrial tools) or buried underground (pipes, pipelines, and piled foundations). Globally, about thirty percent of steel is now made from recycled scrap, but most is still made from pig iron produced in large blast furnaces. Technical advances lowered the typical energy cost of pig iron (so called because it was cast into chunky ingots called pigs) to less than 60 GJ/t by 1950 and to just 20 GJ/t by the year 2000. The most efficient operations now produce semi-finished steel products (ingots, blooms, billets, slabs) for less than 20 GJ/t. Pig iron is an alloy that contains from 2 to 4.3 percent carbon, while steel commonly has just 0.3–0.6 percent (and rarely more than one percent). This seemingly small quantitative difference results in enormous qualitative gains: cast iron has poor tensile strength, low impact resistance, and very low ductility.

Steel has high tensile strength and high impact resistance, and it remains structurally intact at temperatures more than twice as high as iron. Its alloys are indispensable for making everything from stainless cutlery to giant drilling rigs. For nearly a century, steel was made by lowering pig iron’s high carbon content in open hearth furnaces, where the molten metal was decarburized by flames fed with preheated air. Only after World War II were these furnaces replaced by basic oxygen and electric arc furnaces. The processing of steel was concurrently revolutionized by abandoning the production of steel ingots (which had to be reheated before they were shaped into slabs, billets, or bars) and instead continuously casting the hot metal. These innovations brought enormous (up to thousandfold) productivity gains and great energy savings. The classic sequence of blast furnace, open hearth furnace, and steel ingots needed two to three times as much energy for semi-finished products as the modern combination of blast furnace, basic oxygen furnace, and continuous casting.

The reduction of non-ferrous metals from their ores requires substantially more energy than iron smelting. Despite substantial efficiency gains during the twentieth century, the production of aluminum from bauxite remains particularly energy-intensive, averaging about 175 GJ/t (titanium, widely used in aerospace, needs four times as much). Hydrocarbon-based plastics have replaced many metallic parts in vehicles, machines, and devices because of their lower weight and resistance to corrosion, but their energy cost is fairly high, between 75 and 120 GJ/t. Motor vehicles are the leading consumers of metals, plastics, rubber (another largely synthetic product), and glass. Their energy cost (including assembly) is typically around 100 GJ, but this accounts for no more than about twenty percent of a vehicle’s lifetime energy costs, which are dominated by fuel, repairs, and road maintenance.
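
A rough consistency check of the twenty percent figure is possible with numbers from this chapter. The lifetime distance of 200,000 km is a hypothetical assumption. The fuel rate is that of the efficient compact car discussed earlier (about 1.92 MJ/km, i.e. 6 l/100 km at 32 MJ/l). Repairs and road maintenance, which the sketch leaves at zero by default, would push the embodied share lower still.

```python
def embodied_share(embodied_gj, lifetime_km, fuel_mj_per_km, other_gj=0.0):
    """Share of a car's lifetime energy cost that is embodied in the vehicle itself.

    other_gj stands for repairs, road maintenance, etc. (0 by default).
    """
    fuel_gj = lifetime_km * fuel_mj_per_km / 1_000
    return embodied_gj / (embodied_gj + fuel_gj + other_gj)

share = embodied_share(100, 200_000, 1.92)   # 100 GJ car, hypothetical 200,000 km life
print(f"embodied energy: {share:.0%} of lifetime total (fuel only, no repairs or roads)")
```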

The energy costs of common foodstuffs vary widely because of different modes of production (such as the intensity of fertilizer and pesticide applications, rain-fed or irrigated cropping, or manual or mechanized harvesting) and the intensity of subsequent processing. The typical costs of harvested staples are about 4 GJ/t for wheat, corn, and temperate fruit, and around 10 GJ/t for rice. Produce grown in large greenhouses is the most energy-intensive; bell peppers and tomatoes cost as much as 40 GJ/t. Modern fishing has a similarly high fuel cost per tonne of catch. These rates can be translated into interesting output/input ratios: harvested wheat contains nearly four times as much energy as was used to produce it, but the energy consumed in growing greenhouse tomatoes can be up to fifty times higher than their energy content.
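
The output/input ratios follow from dividing a food’s energy content by its energy cost. The energy contents used here (about 15 GJ/t for wheat grain and 0.8 GJ/t for fresh tomatoes) are approximate assumptions that are not given in the text. With them, the sketch reproduces both ratios.

```python
def output_input_ratio(energy_content_gj_t, energy_cost_gj_t):
    """Energy contained in a tonne of food divided by the energy used to produce it."""
    return energy_content_gj_t / energy_cost_gj_t

WHEAT_CONTENT, WHEAT_COST = 15.0, 4.0        # GJ/t; energy content is an approximation
TOMATO_CONTENT, TOMATO_COST = 0.8, 40.0      # GJ/t; greenhouse tomatoes, approximate content

wheat = output_input_ratio(WHEAT_CONTENT, WHEAT_COST)
tomato = output_input_ratio(TOMATO_CONTENT, TOMATO_COST)
print(f"wheat: output/input = {wheat:.2f}")                        # energy gained
print(f"greenhouse tomatoes: input/output = {1 / tomato:.0f}")     # energy spent per unit eaten
```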

These ratios show the degree to which modern agriculture has become dependent on external energy subsidies: as Howard Odum (1924–2002) put it in 1971, we now eat potatoes partly made of oil. But they should not be simplistically interpreted as indicators of energy efficiency. We do not eat tomatoes for their energy but for their taste and their vitamin C and lycopene content, and we cannot (unlike some bacteria) eat diesel fuel. Moreover, in all affluent countries, food’s total energy cost is dominated by processing, packaging, long-distance transport (often with cooling or refrigeration), retail, shopping trips, home refrigeration, cooking, and dishwashing. These steps at least double, and in many cases triple or quadruple, the energy cost of agricultural production.

According to many techno-enthusiasts, advances in electronics were going to lead to the rapid emergence of a paper-free society, but the very opposite has happened: the post-1980 spread of personal computers has been accompanied by higher demand for paper. Since the late 1930s, global papermaking has been dominated by the sulfate (kraft) process, in which chipped coniferous wood is cooked under pressure for about four hours in a solution of sodium hydroxide and sodium sulfide. This yields a strong pulp that can either be used to make unbleached paper or bleached and treated to produce higher-quality stock. Unbleached packaging paper takes less than 20 GJ/t; standard writing and printing stock is at least forty percent more energy-intensive (requiring more energy than steel!).

Last, but clearly not least, a few numbers regarding the energy cost of fossil fuels and electricity. These costs are usually expressed as energy return on investment (EROI), the ratio of the energy delivered to the energy invested in obtaining it. Given the wide range of coal qualities and the great differences between underground and surface coal extraction (see Chapter 4), it is not surprising that coal’s EROI ranges widely, between 10 (for underground coal in thinner seams) and 80 (for large surface mines). Similarly, crude oil from the richest Middle Eastern fields can contain far more than 100 times as much energy as it costs to produce, but the EROI for small oil fields of low productivity can be no higher than 10. Refining requires additional energy to separate crude oil into many final products, and it raises the final energy cost of refined oil products.
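
Because EROI is the ratio of energy delivered to energy invested, the share of gross output left over as net energy is 1 – 1/EROI. The sketch below applies that standard identity, not a calculation from this book, to the values quoted above. It shows that even the lowest value mentioned, 10, still leaves nine-tenths of the gross output as net energy.

```python
def net_energy_fraction(eroi):
    """Share of gross energy output left after repaying the energy invested."""
    return 1.0 - 1.0 / eroi

cases = {
    "underground coal, thin seams": 10,
    "large surface coal mine": 80,
    "rich Middle Eastern oil field": 100,    # text says "far more than 100"
    "small, low-productivity oil field": 10,
}
for source, eroi in cases.items():
    print(f"{source:<34} EROI {eroi:>3}: {net_energy_fraction(eroi):.1%} net")
```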

As we have seen, thermal electricity generation in large power plants (with boilers and steam turbogenerators) is, at best, about forty percent efficient. Typical rates (including high-efficiency flue gas desulfurization and the disposal of the resulting sulfate sludge) may be closer to thirty-five percent. The energy costs of constructing the stations and the transmission network amount to less than five percent, and long-distance transmission losses subtract at least another seven percent. Electricity produced in a large thermal station fueled by efficiently produced surface-mined bituminous coal would thus represent, at best, over thirty percent, and more likely twenty to twenty-five percent, of the energy originally contained in the burned fuel. Because of their higher construction costs, nuclear stations have a lower net energy return. We cannot, however, complete the calculation of the energy costs of nuclear electricity, because no country has solved the potentially very costly problem of long-term disposal of radioactive wastes.
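
The chain above can be multiplied out. The sketch rests on two simplifying assumptions: each loss applies multiplicatively to what remains, and the coal comes from a large surface mine with an EROI of 80 (the upper value given earlier).

```python
def delivered_share(plant_efficiency, construction_share, transmission_loss, fuel_eroi):
    """Share of the fuel's energy that reaches consumers as electricity, net of
    plant, construction, transmission, and fuel-extraction energy costs."""
    fuel_net = 1.0 - 1.0 / fuel_eroi     # net share after the energy spent producing the coal
    return (plant_efficiency * (1.0 - construction_share)
            * (1.0 - transmission_loss) * fuel_net)

best = delivered_share(0.40, 0.05, 0.07, 80)
typical = delivered_share(0.35, 0.05, 0.07, 80)
print(f"best plant, minimal losses:    {best:.1%}")
print(f"typical plant, minimal losses: {typical:.1%}")
```

Both results exceed thirty percent. Outcomes in the twenty to twenty-five percent range therefore require losses larger than the minimum values used here.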

Global interdependence: energy linkages

In pre-industrial societies, the fuels needed for everyday activities overwhelmingly came from places very close to the settlement (for example, in villages, wood from nearby fuelwood lots, groves, or forests, and crop residues from harvested fields) or were transported over relatively short distances. There were some longer shipments of wood and charcoal, but international fuel deliveries became common only with the expansion of coal mining and the adoption of railways and steam-powered shipping. The energy transitions from coal to crude oil and natural gas, and the growing prominence of electricity, have profoundly changed the pattern of energy supply – yet who, during the course of daily activities, thinks about these impressively long links?

Electricity used by an English household may have been generated by burning coal brought from Colombia, and the coking coal used to produce Chinese pig iron could have come not only from North China but also from Australia and Canada. The gasoline in a New Yorker’s car may have originated as crude oil pumped from under the ocean floor in the Gulf of Mexico, some 2,000 km away, refined in Texas, and taken by a coastal tanker to New Jersey. The natural gas used to cook rice in a Tokyo home may have come by tanker from Qatar, a shipping distance of nearly 15,000 km. And the electricity used to illuminate a German home may have originated as falling water in one of Norway’s hydroelectric stations.

Energy accounts for a growing share of the global value of international trade: about eight percent in 2000 and seventeen percent by 2014. The global fuel trade added up to more than $3 trillion in 2014 (twice as much as the trade in all agricultural products). In mass terms, the global fuel trade towers above all other bulk commodities, such as ores, finished metals, and agricultural products: in 2015 it amounted to more than a trillion cubic meters of natural gas, more than 1.2 billion tonnes of coal, and nearly three billion tonnes of crude oil and refined products. Crude oil leads in both annually shipped mass and monetary value. In 2015 the Middle East accounted for a third of all crude oil exports, and the EU, China, the U.S., and Japan for nearly 60 percent of all crude oil imports.

Tankers carry about two-thirds of all crude oil and refined product exports. They sail from large terminals in the Middle East (Saudi Arabia’s Rās Tanūra is the world’s largest), Africa, Russia, the U.S., Latin America, and Southeast Asia to huge storage and refining facilities in importing countries (the U.S., a large crude oil importer, is also a large exporter of refined products). The rest of the world’s crude oil exports go by pipeline, the safest and cheapest form of mass land transport. The U.S. had constructed an increasingly dense network of oil pipelines by the middle of the twentieth century, but major export lines were only built after 1950. The longest (over 4,600 km, with an annual capacity of 90 million tonnes) was laid during the 1970s to carry oil from Samotlor, a super-giant field in Western Siberia, first to European Russia and then to Western Europe.

In contrast to crude oil, only thirty percent of the world’s natural gas production was exported in 2015, and two-thirds of these exports moved through pipelines. Russia, Canada, Norway, the Netherlands, and Algeria are the largest exporters of piped gas; the U.S., Germany, and Italy are its largest importers. The world’s longest (6,500 km) and largest-diameter (up to 1.4 m) pipelines carry gas from West Siberia’s super-giant fields to Italy and Germany. There they meet the gas networks that extend from Groningen, the giant Dutch field, from the North Sea fields (whose gas was first brought by undersea pipelines to Scotland), and from Algeria (crossing the Sicilian Channel from Tunisia and then the Messina Strait to Italy).

Overseas shipments of natural gas became possible with the introduction of liquefied natural gas (LNG) tankers, which carry the gas at –162°C in insulated steel tanks; on arrival at their destination the liquefied cargo is regasified and sent through pipelines. The first LNG shipments were sent from Algeria to France and the U.K. during the 1960s; in 2015 LNG accounted for a third of natural gas exports. The major importers are Japan (which buys a third of the world’s supply, from the Middle East, Southeast Asia, and Alaska), South Korea, China, and Taiwan.

In comparison to the large-scale flows of fossil fuels, international trade in electricity is significant only in a limited number of bilateral sales and multinational exchanges. The most notable one-way transmission schemes are those connecting large hydrogenerating stations with distant load centers. Canada is the world leader, selling hydroelectricity from British Columbia to the Pacific Northwest, from Manitoba to Minnesota, the Dakotas, and Nebraska, and from Québec to New York and the New England states. Other notable international sales of hydroelectricity take place between Venezuela and Brazil, Paraguay and Brazil, and Mozambique and South Africa. Most European countries participate in a complex trade in electricity, taking advantage of seasonally high hydrogenerating capacities in the Scandinavian and Alpine nations.