The CIFAR-10 Dataset

Do you remember back in Chapter 6, Getting Real, when I introduced MNIST? Those handwritten digits looked like a formidable challenge back then. By now, our neural networks are making short work of them. Since we passed 99% accuracy on MNIST, it's getting hard to even tell actual improvements apart from random fluctuations.

What do you do when your neural networks are too cool for MNIST? You turn to a more challenging dataset: CIFAR-10.

What CIFAR-10 Looks Like

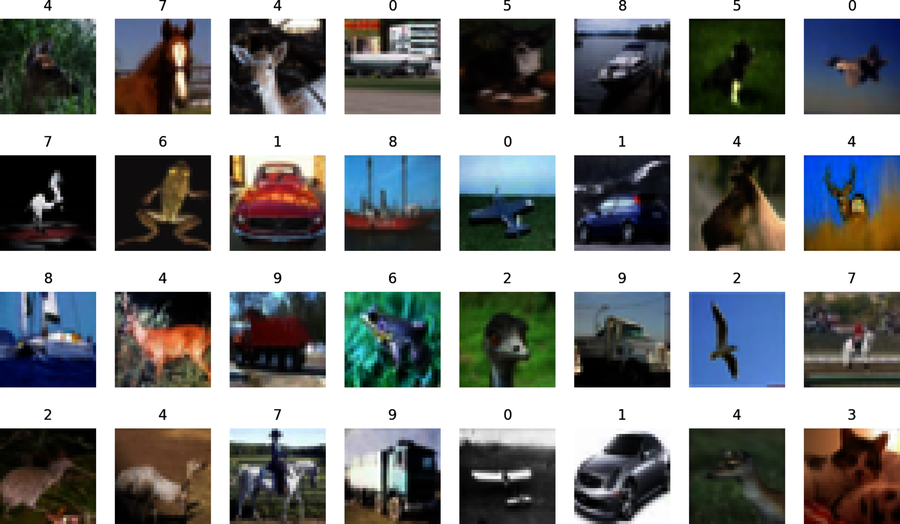

In the field of image recognition, MNIST is considered an entry point. A tougher benchmark is the CIFAR-10 dataset.[27] CIFAR stands for Canadian Institute For Advanced Research, and 10 is the number of classes in it. A random sample of CIFAR-10 images is shown in the figure.

Like MNIST, CIFAR-10 has 10,000 images in its test set, with 50,000 (rather than MNIST's 60,000) in its training set. However, the images in CIFAR-10 are tougher to classify. Even though they look so pixelated, they're bigger than MNIST's digits. Instead of being matrices, CIFAR-10 images are tensors, that is, arrays with more than two dimensions. To be precise, they're (32, 32, 3) tensors: 32 by 32 pixels with 3 color channels for red, green, and blue. That makes for a grand total of 3,072 variables, almost four times as many as MNIST's 784.
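Here is the arithmetic behind those numbers. This short NumPy sketch builds an all-zero stand-in with the shape of one CIFAR-10 image and counts its values. The zero-filled array is an assumption for illustration, because real images hold integers from 0 to 255.

```python
import numpy as np

# A stand-in for one CIFAR-10 image: 32 x 32 pixels, 3 color channels (R, G, B).
# All zeros here; real CIFAR-10 pixels are integers from 0 to 255.
image = np.zeros((32, 32, 3), dtype=np.uint8)

cifar_variables = image.size   # 32 * 32 * 3 values in one image
mnist_variables = 28 * 28      # an MNIST digit is 28 x 28 grayscale pixels

print(image.ndim)                                   # 3 (a tensor, not a matrix)
print(cifar_variables)                              # 3072
print(mnist_variables)                              # 784
print(round(cifar_variables / mnist_variables, 2))  # 3.92
```

The ratio of 3.92 is where "almost four times as many" comes from.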

However, the challenge of CIFAR-10 isn’t in the size of its examples as much as in their complexity. Instead of relatively simple digits, the images represent real-world subjects such as horses and ships. For example, check out how different the birds (labeled 2 in our sample) look from each other. By contrast, see how similar objects can be even when they belong to different classes, like the seagull and the fighter jet, or cars and trucks. With so many confusing subjects, CIFAR-10 puts even powerful neural networks through their paces.
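The labels in CIFAR-10 are integers from 0 to 9, and each one stands for a class name. The following lookup table is a minimal sketch that follows the order of the dataset's published label list. The names `CIFAR10_CLASSES` and `class_name` are hypothetical and used only for illustration. The table also shows which classes invite confusion: airplane (0) and bird (2), or automobile (1) and truck (9).

```python
# Hypothetical lookup table: position i holds the name of CIFAR-10 label i,
# in the order of the dataset's own label list.
CIFAR10_CLASSES = [
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
]


def class_name(label):
    """Return the human-readable name of a CIFAR-10 label (0 to 9)."""
    return CIFAR10_CLASSES[int(label)]


print(class_name(2))  # bird
print(class_name(0))  # airplane
print(class_name(9))  # truck
```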

Let’s dust off the best neural network we’ve built so far and see how it copes with these images.

Falling Short of CIFAR

I retrieved our best neural network so far (the solution to Hands On: The 10 Epochs Challenge) and modified it to train on CIFAR-10 instead of MNIST. Keras, bless its binary soul, comes with built-in support for CIFAR-10, so I only had to change a few lines, which are marked with a » sign:

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense, BatchNormalization
from keras.optimizers import Adam
from keras.initializers import glorot_normal
from keras.utils import to_categorical
from keras.datasets import cifar10  # »

# Load CIFAR-10, flatten each image into one row, and rescale pixels to [0, 1]
(X_train_raw, Y_train_raw), (X_test_raw, Y_test_raw) = cifar10.load_data()  # »
X_train = X_train_raw.reshape(X_train_raw.shape[0], -1) / 255
X_test_all = X_test_raw.reshape(X_test_raw.shape[0], -1) / 255
# Split the 10,000 test images into a validation set and a test set of 5,000 each
X_validation, X_test = np.split(X_test_all, 2)
Y_train = to_categorical(Y_train_raw)
Y_validation, Y_test = np.split(to_categorical(Y_test_raw), 2)

# Three fully connected hidden layers, each followed by batch normalization
model = Sequential()
model.add(Dense(1200, activation='relu'))
model.add(BatchNormalization())
model.add(Dense(500, activation='relu'))
model.add(BatchNormalization())
model.add(Dense(200, activation='relu'))
model.add(BatchNormalization())
model.add(Dense(10, activation='softmax'))

model.compile(loss='categorical_crossentropy',
              optimizer=Adam(),
              metrics=['accuracy'])

history = model.fit(X_train, Y_train,
                    validation_data=(X_validation, Y_validation),
                    epochs=25, batch_size=32)  # »
```
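The preprocessing lines reshape each (32, 32, 3) image into a flat row of 3,072 numbers, because `Dense` layers expect one vector per example. You can check that step without downloading CIFAR-10 by running it on random stand-in arrays. In this sketch, the sizes (4 training and 4 test images) and the random data are assumptions. The `np.eye` one-hot trick is a NumPy substitute for `to_categorical`, not the Keras call itself.

```python
import numpy as np

# Random stand-ins with the shapes and dtypes that cifar10.load_data() returns:
# images are (n, 32, 32, 3) uint8 arrays, labels are (n, 1) integer arrays.
# Only 4 training and 4 test images here, instead of 50,000 and 10,000.
rng = np.random.default_rng(seed=0)
X_train_raw = rng.integers(0, 256, size=(4, 32, 32, 3)).astype(np.uint8)
X_test_raw = rng.integers(0, 256, size=(4, 32, 32, 3)).astype(np.uint8)
Y_train_raw = rng.integers(0, 10, size=(4, 1))

# Flatten each image into a row of 32 * 32 * 3 = 3,072 values, scaled to [0, 1]
X_train = X_train_raw.reshape(X_train_raw.shape[0], -1) / 255
X_test_all = X_test_raw.reshape(X_test_raw.shape[0], -1) / 255

# Half of the test images become the validation set; the rest stay for testing
X_validation, X_test = np.split(X_test_all, 2)

# One-hot encode the labels; this NumPy one-liner stands in for
# keras.utils.to_categorical so the sketch runs without Keras
Y_train = np.eye(10)[Y_train_raw.ravel()]

print(X_train.shape)       # (4, 3072)
print(X_validation.shape)  # (2, 3072)
print(X_test.shape)        # (2, 3072)
print(Y_train.shape)       # (4, 10)
```

Flattening throws away the information about which pixels are neighbors. A fully connected network sees each image only as a long list of numbers.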

Aside from loading CIFAR-10 instead of MNIST, the only change I made is the number of epochs. I bumped it up from 10 to 25 to give the network more training time. When I ran this program, I had to wait a few minutes while Keras downloaded CIFAR-10 to my computer, and then a few hours while it churned through those 25 epochs. Here is what I got in the end:
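To put "more training time" in numbers: with `batch_size=32`, each epoch makes one weight update per mini-batch. The following back-of-the-envelope sketch assumes that Keras counts the final, partial batch of each epoch as a full step. As far as I know, that is Keras's default behavior.

```python
import math

train_examples = 50_000  # size of the CIFAR-10 training set
batch_size = 32          # as passed to model.fit()

# 50,000 / 32 = 1562.5, so the last batch of each epoch is a partial one
steps_per_epoch = math.ceil(train_examples / batch_size)

print(steps_per_epoch)       # 1563
print(steps_per_epoch * 10)  # 15630 updates with the old 10 epochs
print(steps_per_epoch * 25)  # 39075 updates with 25 epochs
```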

```
Train on 50000 samples, validate on 5000 samples
Epoch 1 - loss: 1.7835 - acc: 0.3665 - val_loss: 1.9901 - val_acc: 0.3244
…
Epoch 25 - loss: 0.9512 - acc: 0.6596 - val_loss: 1.4610 - val_acc: 0.5216
```

The numbers are in: our neural network misclassifies about half the images in the validation set. Sure, it’s more accurate than the 10% we’d get by random guessing, but that doesn’t make it great.
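Here is the arithmetic behind "about half," using the final `val_acc` printed in the training log and the 5,000 images in the validation set. The variable names are just for illustration.

```python
val_acc = 0.5216             # final validation accuracy from the training log
validation_examples = 5_000  # half of the CIFAR-10 test set
num_classes = 10

error_rate = 1 - val_acc
misclassified = round(error_rate * validation_examples)
random_guess_acc = 1 / num_classes  # accuracy of uniform random guessing

print(round(error_rate, 4))  # 0.4784
print(misclassified)         # 2392
print(random_guess_acc)      # 0.1
```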

It seems that CIFAR-10 is too much for a fully connected neural network to handle. Let's see what happens if we switch to a different architecture, one that's better suited to images.