Unreasonable Effectiveness

As you read through this book, you might have been surprised by the capabilities of simple programs like our first MNIST classifier. And yet, little prepares you for the uncanny capabilities of modern deep networks. In the words of one famous researcher, those networks are “unreasonably effective.”[31] In a sense, they do nothing more than recognize patterns—and yet, they increasingly beat us at quintessentially human tasks, like facial recognition or medical diagnosis. How is that even possible?

Here’s the short answer: we don’t really know. As I write, nobody fully understands why deep neural networks work so well on so many tasks. Indeed, a lot of ongoing research aims to explain that fact.

At first sight, it’s not even obvious why deeper networks work better than shallower ones. A famous theorem from 1989, called the “universal approximation theorem,” proves that, with enough hidden nodes, even a humble three-layered network can approximate any continuous function as closely as you like, and therefore fit virtually any dataset.[32] If shallow networks are good enough for any dataset, at least in theory, then why are deeper networks so much more accurate?
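To get a feel for what the theorem promises, here is a minimal sketch in Python with NumPy. It is not the theorem’s proof, and the network is not trained: it hand-builds the weights of a network with a single hidden layer of sigmoid nodes. Each hidden node acts like a smoothed step, and the output node adds up the steps into a staircase that traces the target function. The function name `shallow_approximator`, its `sharpness` parameter, and the staircase construction are hypothetical illustrative choices, not part of this book’s code.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable form of 1 / (1 + e^-z), with no overflow for large |z|
    return 0.5 * (1.0 + np.tanh(z / 2.0))

def shallow_approximator(f, low, high, n_hidden, sharpness=50.0):
    """Hand-build a one-hidden-layer network that approximates f on [low, high].

    Hidden node j computes sigmoid(w * x + b_j), a smooth step that switches on
    at the left edge of the j-th interval. The output node adds up the steps,
    weighted so that the result climbs or drops to f's value in each interval.
    """
    edges = np.linspace(low, high, n_hidden + 1)
    width = edges[1] - edges[0]
    centers = (edges[:-1] + edges[1:]) / 2
    heights = f(centers)                          # target value in each interval
    w = sharpness / width                         # same steep weight for every hidden node
    b = -w * edges[:-1]                           # biases place each step at an interval edge
    out_weights = np.diff(heights, prepend=0.0)   # rise or drop contributed by each step

    def network(x):
        x = np.asarray(x, dtype=float)
        hidden = sigmoid(w * x[..., None] + b)    # hidden activations, shape (..., n_hidden)
        return hidden @ out_weights               # weighted sum in the output node
    return network

# Approximate sin(x) on [0, 2*pi] with more and more hidden nodes
x = np.linspace(0, 2 * np.pi, 2001)
for n in (10, 100, 1000):
    net = shallow_approximator(np.sin, 0, 2 * np.pi, n)
    max_error = np.max(np.abs(net(x) - np.sin(x)))
    print(f"{n:5d} hidden nodes: max error {max_error:.4f}")
```

The maximum error shrinks as the number of hidden nodes grows, which is what the theorem promises. Notice, though, that this construction gets more precise only by adding more and more nodes, and the theorem itself doesn’t tell you how many nodes you need, or how to find their weights by training.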

That question has traditionally been hard to answer because neural networks are mostly opaque: it’s hard to understand why a network makes a certain decision. You might be able to explain a small network by peeking at its internals, but as the number of nodes and layers grows, it quickly becomes impossible to wrap your mind around all those numbers. Indeed, researchers spend a lot of time inventing techniques to explain the decision making of neural networks in human terms.

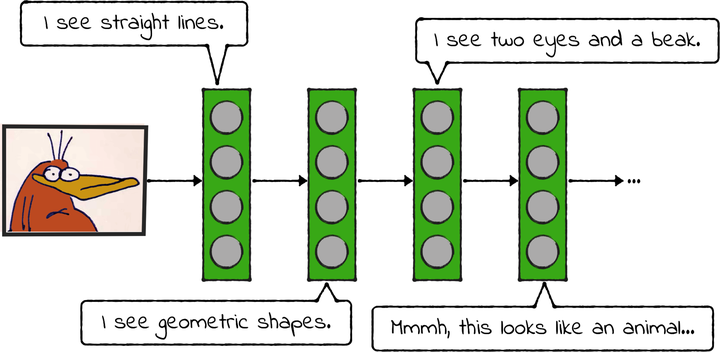

A scientific paper from 2016 made a leap forward in explaining how deep networks see the world (“Towards Better Analysis of Deep Convolutional Neural Networks,” by Mengchen Liu, Jiaxin Shi, Zhen Li, Chongxuan Li, Jun Zhu, and Shixia Liu).[33] Using novel techniques, the authors visualized the “thinking” of a deep CNN and showed that its layers work like levels of abstraction. As an image moves through the network, the first layers identify basic geometric features, like vertical or horizontal lines. Deeper layers identify more complex geometries, like circles. And even deeper layers catch higher-level details, like a human face, the fins of a Cadillac, or the beak of a platypus. In other words, each layer in a network outputs higher-level features for the next layer to work on, as illustrated in the diagram.
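The behavior of those first layers is easy to mimic by hand. The following sketch, in Python with NumPy, slides two 3×3 filters over a tiny image, the way a convolutional layer slides its filters: a Prewitt-style kernel that responds to vertical edges, and its transpose, which responds to horizontal ones. These filters are hand-picked for illustration, while a real CNN learns its filters from data; the sketch also doesn’t reproduce the paper’s visualization technique. The function name `correlate2d_valid` and the toy image are hypothetical. Like most deep learning libraries, the sketch computes a cross-correlation, which is what CNN layers commonly call “convolution.”

```python
import numpy as np

def correlate2d_valid(image, kernel):
    """Slide kernel over image without padding, as a convolutional layer does."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hand-picked filters (a learned first layer often ends up with similar ones)
vertical_edge = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=float)
horizontal_edge = vertical_edge.T

# Toy 6x6 image: dark left half, bright right half, so one vertical edge
image = np.zeros((6, 6))
image[:, 3:] = 1.0

vertical_response = correlate2d_valid(image, vertical_edge)
horizontal_response = correlate2d_valid(image, horizontal_edge)
print(vertical_response)
print(horizontal_response)
```

The vertical-edge filter outputs `3` in the two columns that straddle the edge and `0` elsewhere, while the horizontal-edge filter outputs zeros everywhere, because this image has no horizontal edges.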

That’s a striking discovery for a few reasons. First, that process resembles the workings of our visual cortex. As far as we know, neurons in the visual cortex are organized hierarchically, with lower-level neurons catching simple details, and higher-level neurons arranging those details into the face of our loved one, or the dismal sight of a fine on our windshield.

So, are neural networks similar to the wetware in our craniums? That’s still hard to tell. On the one hand, it seems unlikely that our brains involve a mechanism similar to backpropagation. On the other hand, there are a few parallels between brains and neural networks, and this hierarchy of abstractions seems to be one of them.

There is a more practical reason why this progressive abstraction is crucial to AI. For many years, artificial intelligence required a preliminary step of human analysis. For example, speech recognition usually required painstaking preparation: a human had to divide speech samples into sentences, words, and phonemes so that the computer could work on the basic building blocks of speech. This process is called feature engineering, or feature extraction. As you can imagine, it’s error-prone and time-consuming.
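To make feature engineering concrete, here is a minimal sketch of hand-engineered audio features in Python with NumPy. It chops a signal into frames and computes two classic hand-picked measurements for each frame: short-time energy (how loud the frame is) and zero-crossing rate (how often the waveform changes sign, which tends to be higher for noisy, hiss-like sounds than for low tones). These two features, the function name `frame_features`, and the toy signal are hypothetical illustrative choices, much simpler than what real speech systems used. The point is that a human decided which numbers describe the signal.

```python
import numpy as np

def frame_features(signal, frame_size):
    """Compute hand-engineered features for each non-overlapping frame.

    Returns an array of shape (n_frames, 2): column 0 is the short-time
    energy, column 1 is the zero-crossing rate.
    """
    n_frames = len(signal) // frame_size
    frames = signal[:n_frames * frame_size].reshape(n_frames, frame_size)
    energy = np.mean(frames ** 2, axis=1)                        # average power of each frame
    negative = np.signbit(frames)                                # True where a sample is below zero
    zcr = np.mean(negative[:, 1:] != negative[:, :-1], axis=1)   # share of adjacent sign flips
    return np.column_stack([energy, zcr])

# Toy signal: one second of a low tone, then one second of hiss
rate = 8000                                                      # samples per second
t = np.arange(rate) / rate                                       # timestamps for one second
tone = 0.5 * np.sin(2 * np.pi * 200 * t)                         # a 200 Hz tone
noise = 0.1 * np.random.default_rng(0).standard_normal(rate)     # random hiss
signal = np.concatenate([tone, noise])

features = frame_features(signal, frame_size=400)                # 50 ms frames
print("tone frames: ", features[:2])
print("noise frames:", features[-2:])
```

On this toy signal, the tone frames show high energy and a low zero-crossing rate, and the noise frames show the opposite. A classifier trained on these two columns only ever sees what the human chose to measure.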

Deep learning, however, made feature engineering mostly obsolete. Why bother breaking down a sentence into phonemes, or an image into geometric shapes, when the layers in a deep network do that unassisted? By now, we know that’s one of the reasons why deep networks are so good with unstructured data: they identify relevant features on their own, often better than a human could.

This book opened with a story. In Chapter 1, How Machine Learning Works, I told you about my friend’s impossible mission, when she was asked to code a pneumonia detector for X-ray scans. That task seemed impossible because we can’t really spell out an algorithm to recognize pneumonia—so we can’t code a computer to do it. Deep learning solved this problem by turning it on its head: you don’t have to tell the computer which features are important. It will find out on its own.

And that, as far as we understand today, is what makes deep learning tick.