Let's take a well-known CNN, say VGG16, and look in detail at where the memory goes. You can print a summary of the model using Keras:

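    from keras.applications import VGG16

    # Build VGG16 with its default arguments (this downloads the ImageNet weights on first use).
    model = VGG16()

    # summary() prints the layer table itself and returns None,
    # so wrapping it in print() would only add a stray "None" line.
    model.summary()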

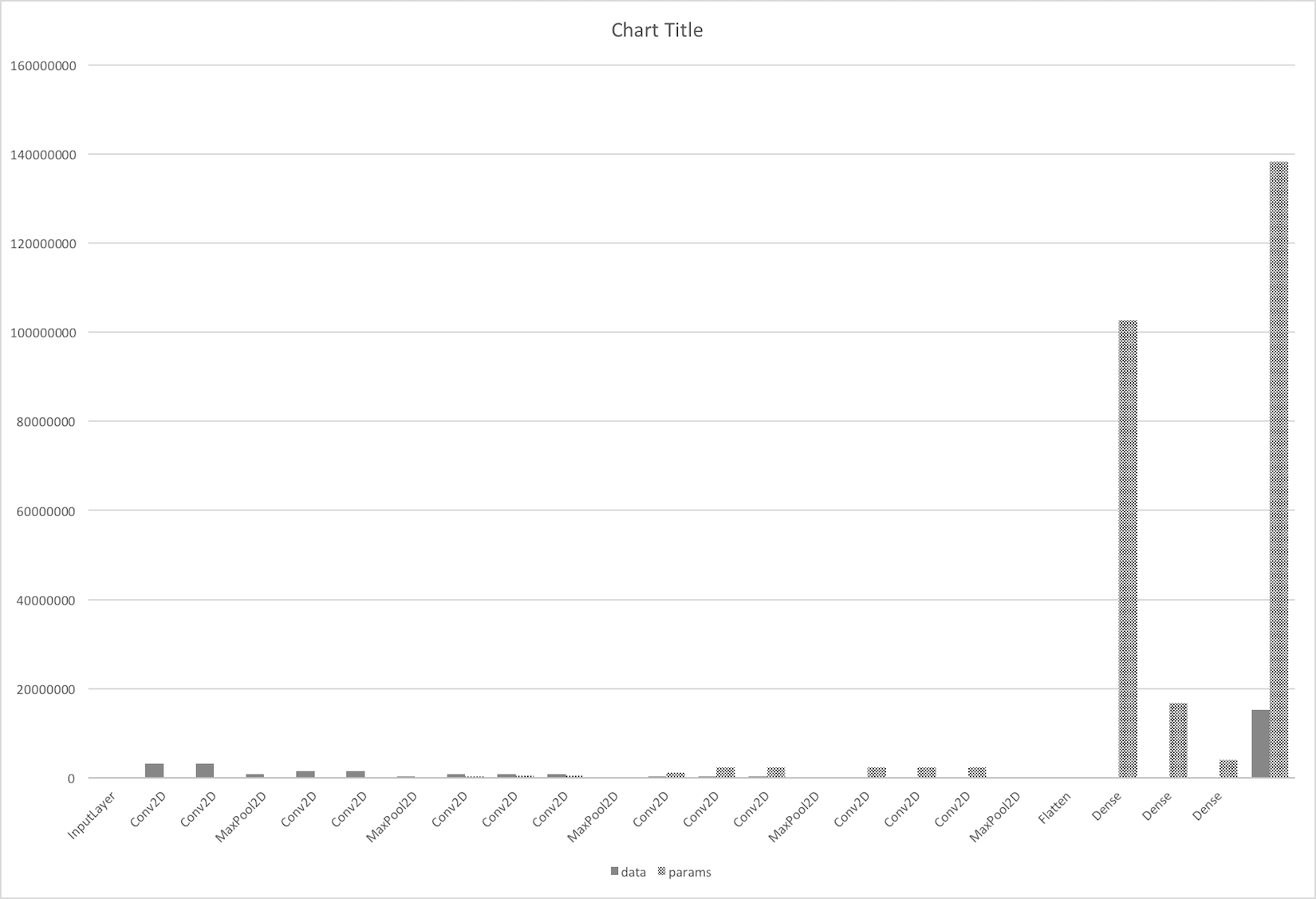

The network consists of 13 2D convolutional layers (3×3 filters, stride 1, padding 1) and 3 fully connected ("Dense") layers. In addition, there is an input layer, 5 max-pooling layers, and a flatten layer, none of which hold parameters.
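As a quick check of the spatial sizes implied by this architecture, here is a minimal sketch using the standard output-size formula `(W - F + 2P) / S + 1`. The helper names are made up for illustration, and the 2×2, stride-2 pooling window is an assumption (the text above does not state it, but it matches the halving of the feature maps).

```python
def conv_output_size(size, kernel=3, stride=1, pad=1):
    """Spatial output size of a convolution: (W - F + 2P) // S + 1."""
    return (size - kernel + 2 * pad) // stride + 1


def pool_output_size(size, window=2, stride=2):
    """Spatial output size of an unpadded pooling layer (assumed 2x2, stride 2)."""
    return (size - window) // stride + 1


size = 224
sizes = [size]
# VGG16 has five blocks of 2, 2, 3, 3 and 3 convolutions, each followed by one pooling layer.
for n_convs in (2, 2, 3, 3, 3):
    for _ in range(n_convs):
        size = conv_output_size(size)  # 3x3, stride 1, pad 1 keeps the size unchanged
    size = pool_output_size(size)      # pooling halves the size
    sizes.append(size)

print(sizes)  # [224, 112, 56, 28, 14, 7]
```

So the convolutions only change the number of channels, while each pooling layer halves the width and height.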

| Layer | Output shape | Data memory (values) | Parameters (formula) | Number of parameters |
|---|---|---|---|---|
| InputLayer | 224×224×3 | 150528 | 0 | 0 |
| Conv2D | 224×224×64 | 3211264 | 3×3×3×64+64 | 1792 |
| Conv2D | 224×224×64 | 3211264 | 3×3×64×64+64 | 36928 |
| MaxPool2D | 112×112×64 | 802816 | 0 | 0 |
| Conv2D | 112×112×128 | 1605632 | 3×3×64×128+128 | 73856 |
| Conv2D | 112×112×128 | 1605632 | 3×3×128×128+128 | 147584 |
| MaxPool2D | 56×56×128 | 401408 | 0 | 0 |
| Conv2D | 56×56×256 | 802816 | 3×3×128×256+256 | 295168 |
| Conv2D | 56×56×256 | 802816 | 3×3×256×256+256 | 590080 |
| Conv2D | 56×56×256 | 802816 | 3×3×256×256+256 | 590080 |
| MaxPool2D | 28×28×256 | 200704 | 0 | 0 |
| Conv2D | 28×28×512 | 401408 | 3×3×256×512+512 | 1180160 |
| Conv2D | 28×28×512 | 401408 | 3×3×512×512+512 | 2359808 |
| Conv2D | 28×28×512 | 401408 | 3×3×512×512+512 | 2359808 |
| MaxPool2D | 14×14×512 | 100352 | 0 | 0 |
| Conv2D | 14×14×512 | 100352 | 3×3×512×512+512 | 2359808 |
| Conv2D | 14×14×512 | 100352 | 3×3×512×512+512 | 2359808 |
| Conv2D | 14×14×512 | 100352 | 3×3×512×512+512 | 2359808 |
| MaxPool2D | 7×7×512 | 25088 | 0 | 0 |
| Flatten | 25088 | 0 | 0 | 0 |
| Dense | 4096 | 4096 | 7×7×512×4096+4096 | 102764544 |
| Dense | 4096 | 4096 | 4097×4096 | 16781312 |
| Dense | 1000 | 1000 | 4097×1000 | 4097000 |
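The numbers in the table can be reproduced without Keras. The following is a rough sketch, not the way Keras itself counts: the `LAYERS` list and `count_vgg16` function are names made up for this example, pooling is assumed to halve the width and height, and the flatten layer is counted as 0 extra data values, as in the table.

```python
# (layer type, output channels or units); spatial sizes are derived in the loop.
LAYERS = [
    ("conv", 64), ("conv", 64), ("pool", None),
    ("conv", 128), ("conv", 128), ("pool", None),
    ("conv", 256), ("conv", 256), ("conv", 256), ("pool", None),
    ("conv", 512), ("conv", 512), ("conv", 512), ("pool", None),
    ("conv", 512), ("conv", 512), ("conv", 512), ("pool", None),
    ("flatten", None),
    ("dense", 4096), ("dense", 4096), ("dense", 1000),
]


def count_vgg16(height=224, width=224, channels=3):
    """Return (data values per image, number of parameters)."""
    h, w, c = height, width, channels
    units = None
    data = h * w * c  # the input layer itself
    params = 0
    for kind, n in LAYERS:
        if kind == "conv":
            params += 3 * 3 * c * n + n  # 3x3 kernels plus one bias per filter
            c = n
            data += h * w * c
        elif kind == "pool":
            h, w = h // 2, w // 2        # assumed 2x2 window, stride 2
            data += h * w * c
        elif kind == "flatten":
            units = h * w * c            # reshapes only; counted as 0 extra values
        else:  # dense
            params += units * n + n      # weight matrix plus one bias per unit
            units = n
            data += n
    return data, params


data_values, n_params = count_vgg16()
print(data_values, n_params)  # 15237608 138357544
```

Note how the three dense layers account for most of the parameters, while the early convolutional layers account for most of the data memory.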

Total memory for data: Batch_size × 15,237,608 ≈ Batch_size × 15 M values

Total memory (?): Batch_size × 24 M × 4 bytes ≈ 93 MB (note that the 24 M figure does not match the 15 M data total above)

References:

http://cs231n.github.io/convolutional-networks/#case

https://datascience.stackexchange.com/questions/17286/cnn-memory-consumption

Total parameters: 138,357,544 ≈ 138 M
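To turn these counts into bytes, one can multiply by the size of each stored value. The sketch below assumes 32-bit floats (4 bytes per value) and covers only the forward pass; training typically needs more, since gradients and optimizer state are stored as well. The function name and constants are illustrative, with the two totals taken from the table above.

```python
DATA_VALUES_PER_IMAGE = 15_237_608  # sum of the "data memory" column
TOTAL_PARAMETERS = 138_357_544      # sum of the "number of parameters" column


def estimate_memory_bytes(batch_size, bytes_per_value=4):
    """Return (activation bytes, weight bytes) for one forward pass.

    Assumes every value is stored with the same width (float32 by default).
    """
    activations = batch_size * DATA_VALUES_PER_IMAGE * bytes_per_value
    weights = TOTAL_PARAMETERS * bytes_per_value  # independent of batch size
    return activations, weights


for batch_size in (1, 32):
    act, wts = estimate_memory_bytes(batch_size)
    print(f"batch {batch_size}: activations {act / 2**20:.1f} MiB, "
          f"weights {wts / 2**20:.1f} MiB")
```

The weight memory is a fixed cost, while the data memory grows linearly with the batch size.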