The problem with using adversarial loss alone is that the network can map the same set of input images to any random permutation of images in the target domain. Each of these mappings can therefore produce an output distribution that matches the target distribution, so there can be many possible mapping functions between the two domains. Cycle consistency loss overcomes this problem by reducing the number of possible mappings. A cycle-consistent mapping function is one that can translate an image x from domain A to an image y in domain B, and then generate the original image x back from y.

A forward cycle consistent mapping function appears as follows:

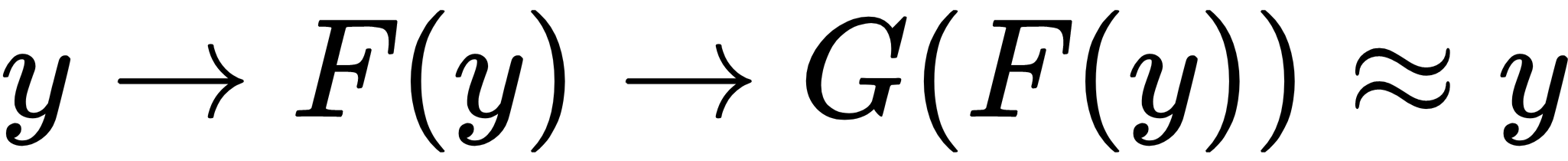

A backward cycle consistent mapping function looks as follows:

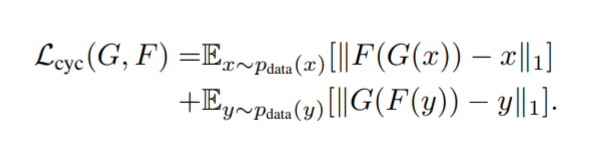

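The formula for cycle consistency loss, in its standard L1 form, is as follows:

$$
\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim p_{data}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{data}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]
$$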

With cycle consistency loss, the images reconstructed by F(G(x)) and G(F(y)) will be similar to x and y, respectively.
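The effect of this loss can be sketched in a few lines of NumPy. The snippet below is a minimal illustration, not the training code: it assumes the loss is the mean absolute (L1) difference between each batch and its round-trip reconstruction, and `G`, `F_consistent`, and `F_inconsistent` are hypothetical toy stand-ins for the two generator networks.

```python
import numpy as np


def cycle_consistency_loss(G, F, x, y):
    """Sum of the forward and backward round-trip L1 errors."""
    # Forward cycle: x -> G(x) -> F(G(x)) should be close to x
    forward = np.mean(np.abs(F(G(x)) - x))
    # Backward cycle: y -> F(y) -> G(F(y)) should be close to y
    backward = np.mean(np.abs(G(F(y)) - y))
    return forward + backward


# Toy "generators" (assumptions for illustration only):
# G reverses the channel axis, so applying the same flip again undoes it.
def G(a):
    return np.flip(a, axis=-1)


def F_consistent(b):
    # Exact inverse of G, so both cycles reconstruct their input
    return np.flip(b, axis=-1)


def F_inconsistent(b):
    # Not an inverse of G, so the round trip does not recover the input
    return b * 0.5


rng = np.random.default_rng(0)
x = rng.random((4, 8, 8, 3))  # batch of images from domain A
y = rng.random((4, 8, 8, 3))  # batch of images from domain B

loss_consistent = cycle_consistency_loss(G, F_consistent, x, y)
loss_inconsistent = cycle_consistency_loss(G, F_inconsistent, x, y)
print(loss_consistent, loss_inconsistent)
```

The pair of mappings that invert each other gives zero loss, while the mismatched pair is penalized. This is how the loss term rules out most of the mappings that adversarial loss alone would accept.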