Whenever you capture a video feed with AVFoundation, there are several classes involved. This is in contrast with the extremely easy-to-use UIImagePickerController, which enables the user to take a picture, crop it, and pass it back to its delegate.

The foundation for our login screen is AVCaptureDevice. This class is responsible for providing access to the camera hardware. On an iPhone, this is either the front or back camera. AVCaptureDevice also provides access to camera hardware settings, such as the flash or camera brightness.
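To make this concrete, here is a minimal sketch of looking up a camera and touching one of its hardware settings. It assumes a device with a back camera and a torch. Switching the torch on is only an illustration and not something our login screen needs.

```swift
import AVFoundation

// Ask for the built-in wide-angle camera on the back of the device.
// The result is optional: the Simulator, for example, has no camera.
if let camera = AVCaptureDevice.default(.builtInWideAngleCamera,
                                        for: .video,
                                        position: .back) {
    print("Using camera: \(camera.localizedName)")
    print("Has flash: \(camera.hasFlash), has torch: \(camera.hasTorch)")

    // Hardware settings may only be changed while the device is locked
    // for configuration.
    if camera.hasTorch && camera.isTorchModeSupported(.on) {
        do {
            try camera.lockForConfiguration()
            camera.torchMode = .on
            camera.unlockForConfiguration()
        } catch {
            print("Could not lock the camera for configuration: \(error)")
        }
    }
} else {
    print("No back camera available")
}
```

To get the front camera instead, pass `.front` as the position.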

AVCaptureDevice works in conjunction with AVCaptureDeviceInput, which is responsible for reading video data from the camera and making it available. You never read this data directly from the input; instead, you access it through a subclass of AVCaptureOutput.
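Creating the input is a single throwing initializer call. The sketch below wraps it in a helper. The names `CameraError` and `makeCameraInput()` are hypothetical and exist only for this example.

```swift
import AVFoundation

// Hypothetical error type for this example.
enum CameraError: Error {
    case noCamera
}

// Hypothetical helper that wraps the default video device in an input.
func makeCameraInput() throws -> AVCaptureDeviceInput {
    guard let camera = AVCaptureDevice.default(for: .video) else {
        throw CameraError.noCamera
    }
    // The initializer throws when the device cannot be opened.
    return try AVCaptureDeviceInput(device: camera)
}

do {
    let input = try makeCameraInput()
    print("Created an input for \(input.device.localizedName)")
} catch {
    print("Could not create a camera input: \(error)")
}
```

Keep in mind that an app needs an `NSCameraUsageDescription` entry in its Info.plist before it is allowed to use the camera.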

AVCaptureOutput is an abstract base class. This means that you never use this class directly; instead, you use one of its subclasses. For capturing photos, there is AVCaptureStillImageOutput (deprecated since iOS 10 in favor of AVCapturePhotoOutput). There's also AVCaptureVideoDataOutput. This class provides access to raw video frames that can be used, for example, to create a live video preview. This is exactly what we will use later.
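As a rough sketch of what receiving raw frames looks like: in current SDKs the output class that delivers them is AVCaptureVideoDataOutput, and it hands every frame to a delegate on a queue you provide. The `FrameReceiver` class and the queue label are hypothetical names for this example. The output only starts delivering frames once it is attached to a running capture session.

```swift
import AVFoundation

// Hypothetical delegate that logs the size of every frame it receives.
final class FrameReceiver: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
    func captureOutput(_ output: AVCaptureOutput,
                       didOutput sampleBuffer: CMSampleBuffer,
                       from connection: AVCaptureConnection) {
        // Each sample buffer wraps one video frame as a pixel buffer.
        guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else {
            return
        }
        let width = CVPixelBufferGetWidth(pixelBuffer)
        let height = CVPixelBufferGetHeight(pixelBuffer)
        print("Received a \(width)x\(height) frame")
    }
}

// Keep a reference to the receiver for as long as frames should arrive.
let receiver = FrameReceiver()

let videoOutput = AVCaptureVideoDataOutput()
// Drop frames that arrive while the delegate is still busy with an earlier one.
videoOutput.alwaysDiscardsLateVideoFrames = true
// Frames are delivered on this serial queue, not on the main thread.
videoOutput.setSampleBufferDelegate(receiver,
                                    queue: DispatchQueue(label: "video-frames"))
```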

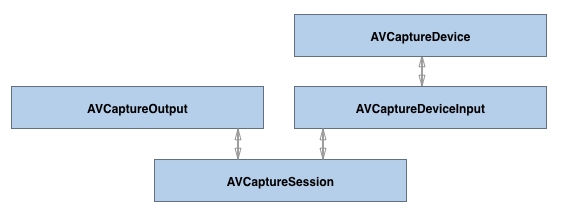

The input and output instances are managed by AVCaptureSession. AVCaptureSession ties the input and output together and coordinates the flow of data between them. If a problem occurs while the session is running, AVCaptureSession reports it by posting a runtime error notification, so you have a single place to observe capture errors.
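The wiring can be sketched roughly as follows. `CaptureSetupError` and `makeCaptureSession(output:)` are hypothetical names introduced for this example, and the sketch assumes that camera permission has already been granted.

```swift
import AVFoundation

// Hypothetical error type for this example.
enum CaptureSetupError: Error {
    case noCamera
    case cannotAddInput
    case cannotAddOutput
}

// Hypothetical helper: connects the default camera to the given output.
func makeCaptureSession(output: AVCaptureOutput) throws -> AVCaptureSession {
    guard let camera = AVCaptureDevice.default(for: .video) else {
        throw CaptureSetupError.noCamera
    }
    let input = try AVCaptureDeviceInput(device: camera)

    let session = AVCaptureSession()
    // Group the changes below so they are applied together.
    session.beginConfiguration()
    defer { session.commitConfiguration() }

    // Always ask the session whether it accepts an input or output first.
    guard session.canAddInput(input) else {
        throw CaptureSetupError.cannotAddInput
    }
    session.addInput(input)

    guard session.canAddOutput(output) else {
        throw CaptureSetupError.cannotAddOutput
    }
    session.addOutput(output)

    return session
}

do {
    let session = try makeCaptureSession(output: AVCaptureVideoDataOutput())
    // startRunning() blocks until the session has started, so call it
    // away from the main thread.
    DispatchQueue.global(qos: .userInitiated).async {
        session.startRunning()
    }
} catch {
    print("Could not set up the capture session: \(error)")
}
```

Checking `canAddInput(_:)` and `canAddOutput(_:)` before adding matters because the session refuses combinations it cannot support.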

To recap, these are the four classes that are involved when you work with the camera directly:

- AVCaptureDevice

- AVCaptureDeviceInput

- AVCaptureOutput

- AVCaptureSession

The capture device works together with the device input. The output provides access to the data captured by the input, and the session ties it all together. The diagram above illustrates these relationships.

Let's implement our live video preview.