WHY THERE IS SO MUCH UNCERTAINTY ABOUT ENVIRONMENTAL CHANGE

Perhaps too the difficulty is of two kinds, and its cause is not so much in the things themselves as in us.

Aristotle, Metaphysics

Effective action may not require perfect understanding, but a sensible, realistic grasp is essential. After generations of intensifying scientific research and management experience we have such knowledge to ease our way through many difficult environmental challenges—but we still lack it in a much larger number of cases, including most of the matters of critical global importance. Moreover, we will not be able to resolve most of the uncertainties during the next one or two generations, that is, during the time of increasing pressures to adopt unprecedented preventive and remedial actions.

Undoubtedly the greatest obstacle to a satisfactory understanding of the Earth’s changing environment is its inherent complexity, above all, the intricate and perennially challenging relationship of parts and wholes, a great yin-yang of their dynamic interdependence. But this objective difficulty is greatly complicated by subjective choices and biases of people studying the environment: overwhelmingly, they favour dissection and compartmentalization over synthesis and unification, they rely excessively on modelling which too frequently presents a warped image of reality, and they are not immune to offering conclusions and recommendations guided by personal motives and preferences.

This makes it very difficult to separate clear facts from subtle biases and wilder speculations even for a scientist wading through a flood of publications on fashionable research topics. Policy-makers often select interpretations which suit best their own interests, and the general public is exposed almost solely to findings with a sensationalizing, catastrophic bias. To come up with generally acceptable sets of priorities for effective remedies is thus very difficult—and bureaucratization and politicization of environmental research and management make it even harder to translate our imperfect understanding into sensible actions. Before addressing these complications, I will first review the genesis of our environmental understanding and the emergence of environmental pollution and degradation as matters of important public concern.

MODERN CIVILIZATION AND THE ENVIRONMENT

Yet we can use nature as a convenient standard, and the meter of our rise and fall.

Ralph Waldo Emerson, The Method of Nature (1841)

Shortly after the beginning of the twentieth century the age of the explorer was coming to a close. Yet another generation was to elapse before the last isolated tribes of New Guinea were contacted by outsiders (Diamond 1988), but successful journeys to the poles—Robert Peary’s Arctic trek in 1909 and Roald Amundsen’s Antarctic expedition in 1911—removed the mark of terra incognita from the two most inaccessible places of the Earth (Marshall 1913).

Four centuries of European expansion had finally encompassed the whole planet. But this end of a centuries-old quest was not an occasion for a reflection on limits and vulnerabilities of the Earth: such feelings were still far in the future, in the time of Apollo spacecraft providing the first views of the blue-white Earth against the blackness of the cosmic void.

For an overwhelming majority of people life at the beginning of the twentieth century differed little from the daily experience of their ancestors two, ten, or even twenty generations earlier. They were subsistence peasants, relying on solar energies and on animate power to cultivate low-yielding crops. Even the industrializing countries of Europe and North America were still largely rural, horses were the principal field prime movers, and there were hardly any synthetic fertilizers. Environmental degradation and pollution accompanied the advancing urbanization and mass manufacturing based on coal combustion, but these effects were highly localized.

Grimness of polluted industrial cityscapes was an inescapable sign of a new era—but not yet a matter of notable public, governmental or scientific concern. Study of the environment had little to do with changes brought by man. The earliest attempt at a grand synthesis of man’s impact on natural environments (Marsh 1864) was so much ahead of its time that it had to wait almost another century for its successor (Thomas 1956). But foundations were being laid for a systematic understanding surpassing the traditional descriptions. During the nineteenth century most of the available information about the Earth’s environment was admirably systematized in such great feats of advancing science as writings of Alexander von Humboldt (1849), Henry Walter Bates (1863) and Alfred Russel Wallace (1891), or in the maps of the British Admiralty. But our understanding of how the environment actually works was only beginning.

Rapidly advancing biochemistry and geophysics were laying the foundations for understanding the complexities of grand biospheric cycles. By the late 1880s Hellriegel and Wilfarth (1888) identified the nitrogen-fixing symbiosis between legumes and Rhizobium bacteria, by the late 1890s Arrhenius (1896) published his explanation of the possible effects of atmospheric CO2 on the ground temperature and Winogradsky isolated nitrifying bacteria (Winogradsky 1949). At the same time, Charles Darwin’s (1859) grand syntheses turned attention to the interplays between organisms and their surroundings, and physiologists offered revolutionary insights into the nutritional needs of plants, animals and men (Atwater 1895). Still, the blanks dominated, and the absence of highly sensitive, reproducible analytical methods precluded any reliable monitoring of critical environmental variables.

Wilhelm Bjerknes set down the basic equations of atmospheric dynamics in 1904—but climatology remained ploddingly descriptive for a few more decades (Panofsky 1970). Need for an inclusive understanding of living systems was in the air—but ecological energetics was only gathering its first tentative threads (Martinez-Alier 1987). And environmental studies everywhere retained an overwhelmingly local and specific focus and a heavily descriptive tilt with quantitative analyses on timid sidelines.

Everyday treatment of the environment did not show any radical changes—but new attitudes started to make some difference. The closing decades of the nineteenth century saw the establishment of the first large natural reserves and parks, and the adoption of the first environmental control techniques after a century of industrial expansion which treated land, waters and air as valueless public goods. The new century brought the diffusion of primary treatment of urban waste water and the invention of electrostatic control of airborne particulates (Bohm 1982). The two world wars and the intervening generation of economic turmoil were not conducive to greater gains in environmental protection, but a number of ecological concepts found their way into unorthodox economics as well as into some fringe party politics (Bramwell 1989).

Alfred Lotka (1925) published the first extended work putting biology on a quantitative foundation, and Vladimir Ivanovich Vernadsky (1929) ushered the study of the environment on an integrated, global basis with his pioneering book on the biosphere. Arthur Tansley defined the ecosystem, one of the key terms of modern science (Tansley 1935), and Raymond Lindeman, following Evelyn Hutchinson’s ideas, published the first quantification of energy flows in an observed ecosystem (Lindeman 1942). While its understanding was advancing, the environment of industrialized countries kept on deteriorating. Three kinds of innovations, commercialized between the world wars, accounted for most of this decline.

Thermal generation of electricity, accompanied by emissions of fly ash, sulphur and nitrogen oxides and by a huge demand for cooling water and the warming of streams, moved from isolated city systems to large-scale integrated regional and national networks (Hughes 1983). Availability of this relatively cheap and convenient form of energy rapidly displaced fuels in most industrial processes and started to change profoundly household energy use with the introduction and diffusion of electric lights, cooking and refrigeration. The last demand was largely responsible for the development of a new class of inert compounds during the 1930s: their releases are now seen as the most acute threat to the integrity of the stratospheric ozone (Manzer 1990).

The automobile industry shifted from workshops to mass production, making cars affordable for millions of people (Lacey 1986) and spreading the emissions of unburned hydrocarbons, nitrogen oxides and carbon monoxide over urban areas and into the countryside which became increasingly buried under asphalt and concrete roads. And the synthesis of plastics grew into a large, highly energy-intensive industry generating toxic pollutants previously never present in the biosphere, and it introduced into the environment a huge mass of virtually indestructible waste (Katz 1984).

Post-1950 developments amplified these trends and introduced new environmental risks as the world entered the spell of its most impressive economic growth terminated only by the Organization of the Petroleum Exporting Countries’ (OPEC’s) 1973–4 quintupling of oil prices. In just 25 years global consumption of primary commercial energy nearly tripled, that of electricity went up about eightfold, and there were substantial multiples in demand for all leading metals, as well as in ownership of consumer durables. New environmental burdens were introduced with the rapidly expanding use of nitrogenous fertilizers derived from synthetic ammonia first produced in 1913 (Holdermann 1953; Engelstad 1985), and with the growing applications of the just discovered pesticides (dichlorodiphenyltrichloroethane (DDT) was first used on a large scale in 1944): nitrates in groundwater and streams and often dangerously high pesticide residues in plant and animal tissues.

Environmental pollution, previously a matter of local or small regional impact, started to affect increasingly larger areas around major cities and conurbations and downwind from concentrations of power plants as well as the waters of large lakes, long stretches of streams and coastlines and many estuaries and bays. Better analytical techniques were recording pollutants in the air, waters and biota thousands of kilometres from their sources (Seba and Prospero 1971). Only a few remedies were introduced during the 1950s. Smog over the Los Angeles Basin led to the establishment of the first stringent industrial emission controls in the late 1940s (Haagen-Smit 1970). London’s heavy air pollution, culminating in 4000 premature deaths during the city’s worst smog episode in December 1952, brought the adoption of the Clean Air Act, the first comprehensive effort to clean a country’s air (Brimblecombe 1987).

Electrostatic precipitators became a standard part of large combustion sources, black particulate matter started to disappear from many cities and visibility improved; this trend was further aided by the introduction of cleaner fuels—refined oils and natural gas—in house heating. But as the years of sustained economic growth brought unprecedented affluence to larger shares of North American and European populations, they also spread a growing uneasiness about intensifying air pollution and about degradation of many ecosystems. By the early 1960s these cumulative concerns pushed the environment into the forefront of public attention.

ENVIRONMENT AS A PUBLIC CONCERN

We cannot consider men apart from the rest of the country, nor an inhabited country apart from its inhabitants without abstracting an essential part of the whole.

A.J. Herbertson (1913)

Human impacts became first a matter of widespread public discourse and political import in the United States. Rachel Carson’s (1962) influential warning about the destructive consequences of pesticide residues in the environment has been often portrayed as the prime mover of new environmental awareness. In reality, the impulses came from many quarters, ranging from traditional concerns about wilderness preservation to new scientific findings about the fate of anthropogenic chemicals.

Mounting evidence of worsening air pollution was especially important in influencing public opinion as sulphates and nitrates, secondary pollutants formed from releases of sulphur and nitrogen oxides, started to affect large areas far away from the major sources of combustion. Europeans were first to note this phenomenon in degradative lake and soil changes in sensitive receptor areas (Royal Ministry of Foreign Affairs 1971). The decade brought also the first exaggerated claims about environmental impacts. Commoner (1971) feared that inorganic nitrogen carries such health risks that limits on fertilization rates, economically devastating for many farmers, may be needed soon to avert further deterioration. His misinterpretation was soon refuted (Aldrich 1980), but there was no shortage of other targets. Once the interest in environmental degradation began, the western media, so diligent in search of catastrophic happenings, and scientists whose gratification is so often achieved by feeding on fashionable topics, kept the attention alive with an influx of new bad news.

These concerns were adopted by such disparate groups as the leftist student protesters, who discovered yet another reason why they should tear down the ancien régime, and large oil companies which advertised their environmental pedigrees in double-page glossy spreads (Smil 1987). Search for the causes of environmental degradation brought theories ranging from an influential identification of the Judaeo-Christian belief (man, made in God’s image, set apart from nature and bent on its subjugation) as the principal root of environmental problems (White 1967), to predictable, and even more erroneous, Marxist-Leninist claims putting the responsibility on capitalist exploitation.

White overdramatized the change of the human role introduced by Christianity (Attfield 1983), and ignored both a greater complexity of Christian attitudes and an extensive environmental abuse in ancient non-Christian societies (Smil 1987). Emptiness of ideological claims was exposed long before the fall of the largest Communist empire by detailed accounts of Soviet environmental mismanagement (Komarov 1980).

In the summer of 1970 came the first attempt at a systematic evaluation of global environmental problems sponsored by the Massachusetts Institute of Technology (Study of Critical Environmental Problems 1970). The items were not ranked but the order of their appearance in the summary clearly indicated the relative importance perceived at that time. First came the emissions of carbon dioxide from fossil fuel combustion, then particulate matter in the atmosphere, cirrus cloud from jet aircraft, the effects of supersonic planes on the stratospheric chemistry, thermal pollution of waters and DDT and related pesticides. Mercury and other toxic heavy metals, oil on the ocean and nutrient enrichment of coastal waters closed the list.

Just a month later Richard Nixon sent to the Congress the first report of the President’s Council on Environmental Quality, noting that this was the first time in history that a nation had taken a comprehensive stock of the quality of its surroundings. Soon afterwards his administration fashioned the Environmental Protection Agency by pulling together segments of five departments and agencies: the environment entered big politics. And it entered international politics with the UN-organized Conference on Human Environment in Stockholm in 1972 where Brazilians insisted on their right to cut down all of their tropical forests—and Maoist China claimed to have no environmental problems at all (UN Conference on the Human Environment 1972).

OPEC’s actions in 1973–4, global economic downturn and misplaced worries about the coming shortages of energy turned the attention temporarily away from the environment, but new studies, new revelations and new sensational reporting kept the environmental awareness quite high. Notable 1970s concerns included the effects of nitrous oxide from intensifying fertilization on the fate of stratospheric ozone (CAST 1976), carcinogenic potential of nitrates in water and vegetables (NAS 1981), and both the short-term effects of routine low-level releases of radionuclides from nuclear power plants and the long-term consequences of high-level radioactive wastes to be stored for millennia (NAS 1980).

And with the economic plight of poor nations worsened by the higher prices of imported oil came the western ‘discovery’ of the continuing dependence of all rural and some urban Asian, African and Latin American populations on traditional biomass energies (Eckholm 1976) and the realization of how environmentally ruinous such reliance can be in the societies where recent advances in primary medical care pushed the natural increase of population to rates as high as 4 per cent a year. Naturally, attention focused also on other causes of deforestation and ensuing desertification in subtropical countries and soil erosion and flooding in rainy environments: inappropriate ways of farming, heavy commercial logging, often government-sponsored conversion of forests to pastures. Unexpected recurrence of Indian food shortages and the return of severe Sahelian droughts served as vivid illustrations of these degradative problems.

To this must be added the effects of largely uncontrolled urban and industrial wastes, including releases of toxic substances which would not be tolerated in rich countries, appalling housing and transportation in urban areas, misuse of agricultural chemicals and continuing rapid losses of arable land to house large population increases and to accommodate new industries. Not surprisingly, an ambitious American report surveying the state of the environment devoted much of its attention to the environmental burdens of the poor world (Barney 1980).

In human terms this degradation presented an especially taxing challenge to the world’s most populous country. When Deng Xiaoping’s ‘learning from the facts’ replaced Mao Zedong’s ‘better red than expert’, stunning admissions and previously unavailable data made it possible to prepare a comprehensive account of China’s environmental mismanagement whose single most shocking fact may be the loss of one-third of farmland within a single generation—in a nation which has to feed a bit more than one-fifth of mankind from 1/15 of the world’s arable land (Smil 1984). And the environmental problems of the poor world were even more prominent in yet another global stock-taking in 1982, the Conference on Environmental Research and Priorities organized by the Royal Swedish Academy of Sciences (Johnels 1983).

Environmental mishaps and worries during the 1980s confirmed and intensified several recurring concerns (Ellis 1989). Such accidents as the massive methyl isocyanate poisoning in Bhopal, and the news about the daunting effort to clean up thousands of waste sites in the USA, have turned hazardous toxic wastes into a lasting public concern. The Chernobyl disaster in 1986 strengthened the fears of nuclear power. And the discovery of a seasonal ozone hole above Antarctica (Farman et al. 1985) revived the worries about the rapid man-induced changes of the atmosphere, a concern further intensified with a new wave of research on the imminent global warming.

Concatenation of these mishaps and worries led to a widespread adoption of environmental change as a major item of national and international policy-making during the late 1980s. Such an eager embrace involves an uncomfortably large amount of posturing, token commitments and obfuscating rhetoric—but it is a necessary precondition for an adoption and eventual enforcement of many essential international treaties. The Montreal Protocol of 1987 (and its subsequent perfection), aimed at the reduction and elimination of chlorofluorocarbons, is, so far, the best example of such actions (Wirth and Lashof 1990).

But in a more fundamental way this is a very atypical example: that unprecedented international agreement was based on a strong scientific consensus, on a body of solid understanding (Rowland 1989). A much more typical situation is one of limited understanding, major uncertainties, and an inability to offer firm guidance for effective management. Reasons for this can be found in a combination of widespread objective research difficulties, limited utility of some preferred analytical techniques, and inherent—but largely ignored—weaknesses of scientific inquiry.

ON PARTS AND WHOLES

There is, indeed, a difficulty about part and whole…namely, whether the part and the whole are one or more than one, and in what way they can be one or many, and, if they are more than one, in what way they are more than one.

Aristotle, Physics

Modern science emerged and has flourished as an assemblage of particularistic endeavours, a growing set of intensifying, narrowly-focused probes which have been bringing great intellectual satisfaction as well as rich practical rewards. But integration embracing interaction of complex, dynamic assemblages—what came to be called systems studies—is a surprisingly young enterprise. Smooth functioning of many biospheric interactions is indispensable for civilization’s survival and to understand how the whole works is impossible by just focusing on disjointed parts. Key variables may be easy to identify but difficult to know accurately, and what should be included in order to represent properly the basic dynamics of the system is almost always beyond our reach.

I will reach for an outstanding example to the essentials of human survival. For more than a century it has been appreciated that life on the Earth depends critically on incessant transfers of nitrogen from the atmosphere to soils, plants, animals and waters and then back to the air: maintenance of high rates of nitrogen fertilization is a key precondition for continuation of highly productive farming. Clearly, we should know a great deal about the nitrogen cycle—and we do. None of the grand biogeochemical cycles has been studied for so long, so extensively and in such detail, and the sum of our knowledge is impressive (Bolin and Cook 1983; Smil 1985a; Stevenson 1986).

Fertilizing a field

And yet when a new spring comes around and an Iowa farmer is to inject ammonia into his corn field, or a Jiangsu farmer is to spread urea on his paddy, scientific knowledge proves to be a very uncertain guide. Laboratories will test soils and recommend the optimum nitrogen application rate but this advice does not have the weight of a physical law. Identical soil samples can come back from different laboratories with recommendations entailing several-fold differences in the total fertilizer cost and more than a twofold disparity in the total amount of nitrogen to be applied per hectare (Daigger 1974). That crop fertilization remains as much an art as a science is the inevitable consequence of the astonishing complexity of the nitrogen cycle.

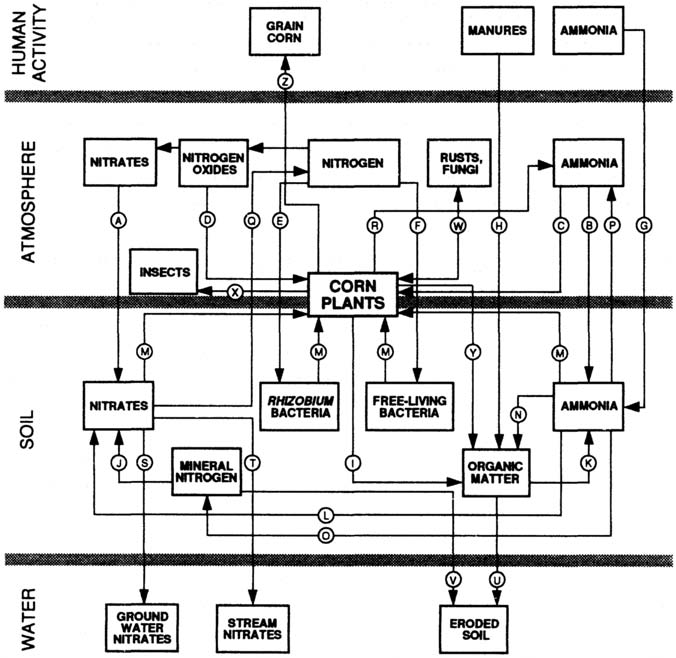

The final product of this system’s operation—grain yield in this example—cannot be predicted on the basis of even a very accurate knowledge of such key parts as nitrogen content available in the soil, the plant’s known nitrogen requirements or the fertilization timing and rates. And while we have identified every important reservoir and every major flux of the element, such qualitative appreciation of individual parts does not add up to a confident understanding of the whole. A farmer wishing to know the nitrogen story of his field would not only have to set down a long equation describing the dynamics of this intricate system—but he would have then to quantify all the fluxes (Figure 1.1).

Nitrogen inputs to his field will come naturally from the atmosphere, as nitrates (A) and ammonia (B) in precipitation and in dry deposition and as ammonia (C) and nitrogen oxides (D) absorbed by plants and soil. If an Iowa corn crop is to follow the last year’s soybeans, Rhizobium bacteria living symbiotically with the roots of those leguminous plants have fixed a considerable amount of nitrogen (E) and a much smaller amount will be contributed by free-living nitrogen fixers (F); soybeans, beans or vetch could have preceded a Jiangsu rice crop, which may get more biotically-fixed nitrogen from Azolla in paddy water. Both fields will receive not only plenty of synthetic fertilizer (G), but also animal manures (H), above all from pigs.

More nitrogen was put into the soil by incorporation of crop residues from the previous harvest (I). Weathering (J), microbial decomposition of soil fauna proteins in the soil (K), and nitrification (L), a microbial oxidation of ammonia held in the soils to nitrates, are other important flows. Storage should account for nitrogen used by the crop (M), and by soil micro-organisms (N), and for ammonia absorbed by the soil’s clay minerals (O). Outputs should trace ammonia volatilization (P), denitrification (Q), that is, microbial reduction of nitrate to nitrogen, nitrogen losses from plant tops (R), leaching of nitrates into ground waters (S) and streams (T), organic (U) and inorganic (V) nitrogen losses in eroded soil, losses owing to microbial attack (W), insect feeding (X), harvesting losses (Y) and, of course, the harvest taken to the farm or storage (Z).
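The bookkeeping implied by this lettered list can be sketched as a simple mass balance. The Python fragment below is only an illustration under stated assumptions: the grouping of letters into inputs (A to L), storage (M to O) and outputs (P to Z) follows the text, but the function name `nitrogen_balance`, the treatment of every lettered input as simply additive (nitrification, L, is really an internal transformation), the reading of storage as the residual of inputs minus outputs, and the single fertilizer figure are hypothetical placeholders, not measurements.

```python
# Hypothetical sketch of a field nitrogen budget (kg N per hectare per year).
# Letter groups follow the text; the additive treatment is an assumption.
INPUTS = "ABCDEFGHIJKL"   # deposition, fixation, fertilizer, manure, residues, ...
STORAGE = "MNO"           # crop uptake, microbial uptake, clay-bound ammonia
OUTPUTS = "PQRSTUVWXYZ"   # volatilization, denitrification, leaching, harvest, ...


def nitrogen_balance(flows):
    """Return (total inputs, total outputs, residual left for storage).

    Raises ValueError if any input or output flow has not been quantified,
    because the balance cannot be closed with a missing term.
    """
    missing = [k for k in INPUTS + OUTPUTS if k not in flows]
    if missing:
        raise ValueError("unquantified flows: " + "".join(missing))
    total_in = sum(flows[k] for k in INPUTS)
    total_out = sum(flows[k] for k in OUTPUTS)
    return total_in, total_out, total_in - total_out


# A farmer who can put a number only on synthetic fertilizer (G);
# 150.0 is an arbitrary placeholder value.
try:
    nitrogen_balance({"G": 150.0})
except ValueError as err:
    print(err)  # lists the 22 flows that still lack a number
```

The point of the sketch is structural rather than numerical: the sum is trivial to write down, and the difficulty lies entirely in supplying trustworthy values for its terms.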

If the farmer had numbers ready to be attached to this alphabetful array of key variables he could fertilize with precision, with minimized losses and with the lowest costs. In practice, he knows exactly only one variable—the amount of synthetic fertilizer (G) he plans to apply to the field. How much will be removed in the crop he can only guess: drought can ruin his harvest—but a crop grown with optimum moisture could have used more fertilizer to produce a much higher yield (Figure 1.2).

Nitrogen in manures and crop residues (H, I) can be readily estimated—but with errors no smaller than 25–35 per cent. Estimating nitrogen in precipitation is much more uncertain but there are at least sparse measurements to provide some quantitative basis. Values on dry deposition and absorption (A–D) are almost universally lacking: we simply do not have even adequate techniques to measure these inputs properly.

Figure 1.1 Intricacies of nitrogen cycling in a crop field preclude any accurate management of the nutrient’s flows. Letters marking the flows are keyed to the discussion in the text.

Source: The cycle chart is modified from Smil (1990).

Fixation by symbiotic bacteria (E) and free-living organisms (F) is undoubtedly the most important natural input of nitrogen but we do not have accurate rate estimates even for leguminous crops which have been studied for decades in experimental plots: published extreme rates for most common leguminous species show five- to sixfold differences (Dixon and Wheeler 1986). As for other input rates, all we can offer are just the right orders of magnitude and the same is true for all common nitrogen losses (P–X): again, there is no shortage of measured values but, as all of the processes are critically influenced by many site-specific conditions, adoption of typical values may result in large errors.
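How quickly such uncertainties swamp the one exactly known term can be illustrated with simple interval arithmetic. The sketch below is hedged throughout: the error levels (about 30 per cent for manures and residues, a fivefold spread for symbiotic fixation) are taken from the ranges quoted in the text, but the central values, the helper names `interval` and `total`, and the geometric centring of the fold range are assumptions introduced purely for illustration.

```python
# Hypothetical interval arithmetic for a few nitrogen inputs (kg N per hectare).
# Central values are arbitrary placeholders; only the error levels echo the text.

def interval(central, rel_error=0.0, fold=1.0):
    """Return (low, high) bounds around a central guess.

    rel_error: symmetric relative error (0.30 means plus or minus 30 per cent).
    fold: ratio of highest to lowest plausible value, centred geometrically
          on the guess (an assumption about how to spread the range).
    """
    low = central * (1.0 - rel_error) / fold ** 0.5
    high = central * (1.0 + rel_error) * fold ** 0.5
    return low, high


def total(intervals):
    """Add intervals by summing their lower and upper bounds separately."""
    return (sum(lo for lo, _ in intervals), sum(hi for _, hi in intervals))


inputs = {
    "G": interval(100.0),                  # synthetic fertilizer: known exactly
    "H": interval(40.0, rel_error=0.30),   # animal manures
    "I": interval(30.0, rel_error=0.30),   # crop residues
    "E": interval(50.0, fold=5.0),         # symbiotic fixation, fivefold spread
}

low, high = total(list(inputs.values()))
print(f"total input between {low:.0f} and {high:.0f} kg N/ha")
```

Even with only four of the lettered flows included, and with one of them exact, the plausible total spans a range wider than the fertilizer application itself; adding the order-of-magnitude terms would widen it much further.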

Figure 1.2 Optimal crop fertilizing depends on the unpredictable supply of moisture.

Source: Based on Aldrich (1980).

Even on this fairly small scale reliable understanding eludes us. I dwelled on this example because it deals with a mundane yet fundamental activity replicated many millions of times every year around the world. We may be sending probes out of our planetary system but it is beyond our capability to chart and to forecast accurately nitrogen fluxes of a single crop field and hence to optimize fertilization. But do not the current imperfect practices work? Indeed, but what portions of those fertilizers leak into waters and atmosphere where we do not want to have more nitrate or N2O to contaminate wells, streams, lakes and bays, to acidify precipitation and to contribute to planetary warming and destruction of stratospheric ozone?

How much money—nitrogen fertilizer is almost always the single largest variable cost in modern farming—and labour is wasted, and how sustainable can the cropping be with large nitrogen losses in eroding soils and with declining reserves of the element in formerly nitrogen-rich ground? We may be maximizing the output, but we are very far from optimizing the performance of the whole system because we are not managing our agroecosystems in a sustainable manner, and because we are contributing to advancing environmental degradation. And so it really does not work—and even our best understanding assiduously translated into most careful agronomic practices allows for only a limited number of improvements (Runge et al. 1990).

Understanding plants and using black boxes

This inability to gain a satisfactory holistic grasp comes up repeatedly in the study of life and a single plant defeats us no less than a single field. Clifford Evans (1976) put it perfectly:

In the broadest terms the main difficulties lie in the inaccessibility of the plant growing in its natural surroundings—physical inaccessibility because the great majority of methods of investigation involve gross interference with the plant, or with its environment, or both…and intellectual inaccessibility. The human mind has no intuitive understanding of higher plants: understanding…can only be reached by intellectual processes which attempt to follow through the consequences of particular observations and to integrate them with others.

Yet the overwhelming majority of those particular observations has come from studying plants in artificial environments, in enclosed growth chambers or in greenhouses. This eliminates the omnipresent natural interferences determining photosynthesis of free-growing plants. And even a perfect replication of natural environment would founder on the problem of timescale: how to integrate, interpret and extrapolate brief observations to understand lives of plants spanning months, years and decades? Raup (1981a) suggested a new foundation for the study of plants which would not be based solely on their forms, processes and organization:

Perhaps we should start with the inherited capacities of its species for adjustment to lethal disturbances that come from outside agents. The frame would have a large element of randomness, but there would be no more randomness than the species have been coping with for a long time.

Physicists like to illustrate their difficulties with Heisenberg’s famous uncertainty principle. In fixing definite position and velocity of a particle any conceivable experiment carries uncertainty and it is simply impossible to measure precisely both variables (Cassidy 1992). An ecologist could hardly be sympathetic. The physicists’ lack of absolute certainty is replaced by a probabilistic expression: the impossibility of pinpointing does not mean the loss of control and understanding. In contrast, an ecologist cannot replicate identical conditions in a plant or an ecosystem to pinpoint their behaviour. Field experiments with ecosystems are at best extremely difficult and very costly, and most often they are simply impossible. Unlike in physics or chemistry, most of the evidence in ecology is non-experimental and hence open to radical shifts in interpretation.

A generation of rapidly expanding ecological modelling has not been crowned with a confident outlook. Peters (1991) found that the predictions ‘are often vague, inaccurate, qualitative, subjective and inconsequential. Modern ecology is too often only scholastic puzzle-solving’. Life’s complexity accounts for a large part of these failures: as Edward Connor and Daniel Simberloff (1986) concluded, ‘ecological nature is more complex and idiosyncratic than we have been willing to concede’. Plants remember everything. We are unable either to intuit this whole or to study it as a whole. We have to dissect to understand but, while our particular observations may reach amazing depths, they may be of very limited value in piecing together the grand picture. And during these dissections, even when working with caution, we can irretrievably destroy some key parts or relationships.

Once again Clifford Evans (1976): ‘The biologist is always confronted by a player on the other side of the board. A false move, and the system on which the biologist thinks he is working turns into something else’. Emerson (1841) understood this perfectly: ‘We can never surprise nature in a corner; never find the end of a thread; never tell where to set the first stone’. Understanding of social processes faces these obstacles on a still more complex plane. Herbertson’s (1913) observations sum up this challenge:

It is almost impossible to group precisely the ideas of a community into those which are the outcome of environmental contact, and those which are due to social inheritance… It is no doubt difficult for us, accustomed to these dissections, to understand that the living whole, while made up of parts with different structures and functions, is no longer the living whole when it is so dissected, but something dead and incomplete.

The crop-growing example also helps to illustrate the problem of parts and wholes when seen from the viewpoint of individual competence. Trying to understand the behaviour of complex systems through an ever finer dissection of their parts is a universal working mode of modern science, a source of astonishing discoveries but also of massive ignorance spreading throughout our black-box civilization. Naturally, traditional farmers did not have any understanding of the physical or biochemical bases of their world, but by observation, trial and error they reached a surprising degree of control over their environment.

They grew their crops in rotations we still find admirable, combined soybeans and rice, lentils and wheat or beans and corn in a single meal (to receive proper ratios of all essential amino acids from an overwhelmingly vegetarian diet), could build their houses, stoves and simple furniture, and the meadows and forests furnished them with a herbal pharmacopoeia which contained plenty of placebos but also many ingredients we still find irreplaceable. I do not want to idealize the hard life of traditional peasants—just to point out that even a small village could replicate all the modest but fairly efficacious essentials of that civilization.

Possibilities of average human life were greatly circumscribed, yet it could be seen as a whole, and open to risky, but decisive, action. Our positions are fundamentally different as the vast accumulated knowledge is almost infinitely splintered and we produce things, manage our affairs and lay out long-range plans by constant recourse to black boxes. A perfect illustration of these dealings is Klein’s (1980) sci-fi story. As a group of scientists from Harvard’s Physics Department ‘pull back’ a man present in the same building centuries from now, they are overjoyed that he is a professor not a janitor. But he can answer their questions on how numerous scientific breakthroughs were achieved and how the future works only with repeated ‘that’s not my field’.

The only realist in the time-breaking group is not surprised as he asks: ‘How much do you think you’d know if you went back into the Dark Age? Could you tell them how to build an aeroplane? Or perform an appendectomy? Or make nylon? What good would you be?’ Even the very people doing these things would be of little help as they, in turn, rely on numerous black-box ingredients and processes whose provision is in the hands of other specialists, who, in turn use other black boxes, circuitously ad infinitum.

Industrialization has done away with many existential strictures—but also with the relative simplicity of action. As the complexities of modern society have progressively reduced the effects of natural forces they have also limited the scope of individual action: as greater freedoms require greater control every change has cascading effects far beyond its initial realm. Jacques Ellul’s (1964) lucid explications see the central problem of modern civilization in its dominance by la technique: it gives us unprecedented benefits and almost magical freedoms but in return we have to submit to its rules and strictures.

Modern man knows very little about the totality of these techniques on which he so completely depends; he just follows their dictates in everyday life: the more sophisticated the technique, the harder the understanding of the underlying wholes and the more limited the true individual freedom. A large part of humanity is already irrelevant to the production process and, although in so many ways it may be much freer than its ancestors, it certainly feels its slipping control of things. One may not agree with every detail of Ellul’s arguments but it is impossible to deny the fragmentation of understanding accompanying advances of the technique.

The resulting reversal of human perceptions of the parts and the whole has profound effects on our understanding and management of environmental change. Our ancestors were awed by the intricate working of the creation whose mysteries they never hoped to penetrate, but individually they had an impressive degree of practical understanding and a surprising amount of effective control of their environment. In contrast, as a civilization, we are either in control of previously mysterious wholes (such as breeding cycles of many infectious diseases), have at least sufficient early warnings (by, for example, watching hurricane paths from satellites), or feel confident that we can understand them once we put in more research resources.

But individually the overwhelming majority of people, including the scientists, has no practical understanding of civilizational essentials and our controls rest on mechanical application of many black-box techniques. Our understanding resides in a myriad of pieces forming a web of planetary knowledge which Teilhard de Chardin (1966) labelled the noosphere. Obviously, it is much easier to use this large reservoir of particularized expertise as a base for even more specialized probes, a strategy responsible for rapid advances of scientific subdisciplines.

With unprecedented modes of environmental management—be they cost-driven or command-and-control adjustments on levels ranging from individual to global—we would be adding more levels of complexity. Underlying reasons for such changes, often disputed by the elites in charge, would be generally understood only in terms of simplest black-box explanations—but they would require profound transformations of established ways of living and they would have obviously enormous impacts on the whole society. But during the past generation we have witnessed an intellectual shift aimed at rejoining the splintered knowledge into greater wholes as quantitative modelling, greatly helped by rising powers and declining costs of computers, moved far beyond the traditional confines of engineering analyses to simulate such complex natural phenomena as the Earth’s climate or the biospheric carbon cycle.

So explosive has been this reach that one can find few complex natural or anthropogenic phenomena that have not been modelled. These exercises are no longer mere intellectual quests: when decision-makers turn to scientists for advice in complex policy matters, computer modelling now almost always has a prominent, if not the key, role in formulating the recommendations: decisions shaping the future of the industrial civilization originate increasingly with these new oracles. According to the ancient Greeks, the centre of their flat and circular world was either Mount Olympus (the abode of the gods) or Delphi (the seat of the most respected oracle). Industrial civilization appears to have little left to do with gods. A possibility that we might be shifting the centre of our intellectual world into the realm of computer modelling makes me very uncomfortable. A closer look at the limits of those alluring exercises will explain why.

And the Colleges of Cartographers set up a Map of the Empire which had the size of the Empire itself and coincided with it point by point… Succeeding generations understood that this Widespread Map was Useless, and not without Impiety they abandoned it to the Inclemencies of the Sun and of the Winters.

Suarez Miranda, Viajes de Varones Prudentes (1658)

Computer modellers must empathize with cartographers in Miranda’s imaginary realm. Their predicaments are insolvable. Simple, small-scale maps will omit most of the real world’s critical features and their heuristic and predictive powers will be greatly limited: often they will offer nothing but a restatement of well-appreciated knowledge in a different mode. Yet offering perfect, point by point, replications of complex, spatially extensive realities would presuppose such a thorough understanding of structures and processes that there would be no need for setting up these duplicates, which may end up as the Widespread Map.

Modelling is undoubtedly a quintessential, and inestimably useful, product of human intelligence: it allows us to understand and to manage complex realities through simpler and cheaper (often also safer) thought processes, and computers elevated the art to new levels of complexity. But modelling is also always an admission of imperfect understanding. Alfred Korzybski’s (1933) reminder is hardly superfluous: ‘A map is not the territory it represents, but, if correct, it has a similar structure to the territory, which accounts for its usefulness… If the structure is not similar, then the traveller is led astray…’

Not surprisingly, the structure of many models dealing with biospheric change is not yet sufficiently similar to the complexities of systems subsuming multivariate interactions of natural and anthropogenic processes. This obvious reality does not discredit the use of inadequate models, but it requires paying close attention to their limits. For any experienced modeller this is quite clear, but mistaking imperfect maps for real landscapes has been a common enough lapse: results of modelling exercises can come out with plenty of attached qualifiers, but such information is the first to be lost when the conclusions are concentrated into executive summaries or squeezed into news columns. And, of course, there are always some modellers who make unrealistic claims for their creations: their reasons may range from understandable enthusiasm to a dubious pursuit of large grants.

The error of mistaking maps for realities has been strengthened with the diffusion of computer modelling. For modellers the machines opened up unprecedented possibilities of toying with ever larger and more complex systems, for common consumers of their efforts they lent a misplaced aura of greater reliability. The Limits to Growth (Meadows et al. 1972)—based on Jay Forrester’s (1971) business dynamics models—started this trend right on the planetary scale. An untenably simplistic model of global population, economy and environment (Figure 1.3) predicting an early collapse of the industrial civilization was presented by its authors as a realistic portrayal of the world and this misrepresentation was uncritically accepted by news media.

Figure 1.3 How not to forecast: a section of the global model from The Limits to Growth which used excessively large, and fundamentally meaningless, aggregates such as ‘pollution’, linked by no less abstract generation rates and multipliers.

Source: Meadows et al. (1972) The Limits to Growth.

More complex and somewhat more realistic computer models followed during the 1970s and the 1980s but their print-outs have hardly greater practical value than their celebrated predecessor (Hafele 1981; Hickman 1983). Their most obvious shortcoming is the inevitably high level of aggregation which results in meaningless generalizations or in incongruous groupings. Models on smaller scales can avoid these ludicrous aggregations but even when carefully structured they are still plagued by inherent incompleteness. Reviewing the state-of-the-art in modelling reality, Denning (1990) concluded that ‘experts themselves do not work from complete theories, and much of their expertise cannot be articulated in language’.

These weaknesses are especially important in modelling those human activities which have the greatest effect on the global environment. A perfect illustration is an unpardonable omission in energy modelling. Energy conversions are by far the largest source of air pollutants and a principal source of greenhouse gases, and in order to avoid health, material and ecosystemic damage and to prevent potentially-destabilizing climatic changes we would like to understand the prospects of national and global energy use. Since 1973 (after the OPEC’s first crude oil price rise) modellers have constructed many long-range simulations of energy consumption—but, as Alvin Weinberg (1978) pointed out, while there is no doubt that historically we have been led to use more energy to save time, among scores of post-1973 energy models not a single one took into account this essential trade-off between time and energy.

Instead, they focused on achievement of politically-appealing but economically-dubious self-sufficiency, on ill-defined diversification of supply (with ludicrously-exaggerated assumptions about the penetration rates of new techniques) or on a rigid pursuit of maximized thermodynamic efficiency where everything is subservient to the quest for better conversions (a goal which may make little economic or environmental sense). This crucial omission can be remedied: time-saving performance can be incorporated into more realistic simulations but there are many other essentials left out of all models of energy supply and demand, as well as from their more general analogies designed to forecast the course of national economies or even the trends of global developments.

Unpredictable personal decisions—ranging from a sudden abdication of a shah to a course taken by a small group of men in a white stucco building on Pennsylvania Avenue to an inexplicable miscalculation of an aggressive dictator, events whose eventual effects the protagonists themselves could not imagine—have a profound long-term impact on the environment as they can lead to rapid shifts in energy prices or, as seen in Saddam Hussein’s destruction of Kuwaiti oilfields, directly to enormous environmental damage. After two generations of forecasting studies we can point to a number of utterly wrong forecasts. Even relatively short-term forecasts almost invariably miss the eventual reality by wide margins (Figure 1.4). But much more interesting is a smaller category of studies whose overall predictions were fairly good, but which still missed the essential changes.

Figure 1.4 Futility of forecasting. I have collected two dozen American mid- to short-term energy forecasts of the country’s total primary energy consumption in 1985. Expectedly, the closer they were made to the target year, the more realistic they tended to be, but even those looking ahead less than a decade were, on the average, about 25 per cent too high.

Notable examples are the forecasts in Resources for Freedom (President’s Materials Policy Commission 1952). Looking a quarter century ahead they came rather close to the actual total commercial energy use in 1975, but they got its proportions of individual fossil fuels very wrong (coal’s demise was so much swifter!), and they forecast that transportation energy use would grow slower and industrial energy use faster than the overall average, the reverse of the actual development (Landsberg 1988).

No matter how complex, no models can incorporate the universe of human passions permeating everyday decision-making and resulting in tomorrow’s outcomes which seemed decidedly impossible yesterday. Three very different examples illustrate these unpredictable but enormously important links between discontinuities of history and long-term effects on the environment. China of the late 1980s became the world’s largest producer of refrigerators—and hence a major new contributor to ozone-destroying chlorofluorocarbon emissions (Smil 1992)—because of a palace coup d’état in 1976 (the overthrow of the Gang of Four) and subsequent reforms introduced by Deng Xiaoping: with Maoists in continuing charge refrigerators could still have been a despicable bourgeois luxury.

Brazil of the early 1990s will not be able to destroy as much of Amazonia’s tropical rain forest as during the mid-1980s because the years of hyperinflation and gross economic mismanagement left the government with much less money to subsidize conversions of forests to grazing land (Fearnside 1990). And the global output of CO2 from the combustion of fossil fuels will slow down because the successor states of the USSR, the country whose fossil fuel combustion was surpassed only by the USA, will first produce less energy because of economic disarray, and eventually they will need less of it because of efficiency improvements. None of these considerations entered any global warming models, yet their cumulative impact will clearly be enormous.

Obviously, using inappropriate models to deal with complex realities can retard rather than advance effective solutions. But the problem is deeper: as Rothenberg (1989) pointed out,

there are even cases where using any model at all is inappropriate. For example, relating to someone in terms of a model precludes the comprehension and appreciation of the richness and unpredictability that distinguish living beings from rocks and tractors.

Replace ‘someone’ by ‘anthropogenic environmental change’—and you are touching the very core of difficulties and inadequacies faced by modellers trying to represent the impact of those transformations on modern civilization. No model can be good enough to enlighten us about our future collective capacity for evolutionary adaptation.

But is not all this trivial? Are not the unpredictabilities of individual human actions and stunning discontinuities of history obvious hallmarks of civilizational evolution? Indeed, but I dwelt on these points precisely because they are repeatedly ignored, ignored in ways which are both costly and embarrassing. Trends and concerns of the day are almost always casting long shadows over the future, and smooth functions of expected developments, linear or exponential, are seen to continue far ahead. Unpredictable discontinuities cannot be, by definition, included—thus excluding any chance of a truly realistic assessment.

What is much less explicable is that the reigning trends and concerns of the day have such an inordinate influence even in the case of cyclical phenomena which are characteristic of a large number of environmental changes. Of course, where complex socio-economic systems are concerned, the existence of cyclicity cannot be equated with easy predictability—frequencies and amplitudes may be far from highly regular—but the inevitability of trend reversals is obvious. Incredibly, during the past generation many modellers have repeatedly ignored these realities, transfixed by the impossible trends of permanent growth or decline.

The record of economic modellers has been most unimpressive, in no small part a result of the profession’s virtual abandonment of reality. Wassily Leontief (1982) exposed the ruling vogue in contemporary economics by noting that ‘page after page of professional economic journals are filled with mathematical formulas leading the reader from sets of more or less plausible but entirely arbitrary assumptions to precisely stated but irrelevant theoretical conclusions’. Watt (1977) looked at the dispiriting forecasting record and found perhaps the fundamental problem in gross underestimation of the magnitude of the task involved in building such interactive models: it is surprisingly hard to put together all necessary submodels which one might expect to be readily available from specialists.

Watt suggested two general reasons for this. First, the overwhelming concentration of academic research on clearly defined problems rather than on complex refractory topics with fuzzy boundaries (parts and wholes again). Obviously, this offers the easiest road for career advances but it makes for a near-permanent choke on widespread system orientation in sciences. Second, a shortcoming already stressed just a few paragraphs ago: a strong preference of most scientists to study phenomena within an implied steady-state environment, assuming the futures of continuous growth or decline and ignoring many nonlinear forces and discontinuities.

Furthermore, Watt was frustrated by the impossibility of conveying the findings in a complex, scientific manner as the decision-makers in the western setting treat the problems largely in accord with linear thinking of constituencies they are trying to satisfy in order to assure their political survival. He urged great efforts in communicating the results in language which the legislators will understand. Watt was after an understandable and desirable goal—an effective diffusion of complex scientific understanding—but with the public increasingly unable to understand even the rudiments of our advances (the black-box syndrome) this is clearly a losing proposition. And I would not hesitate to put a large share of blame for complex quantitative models on the modellers themselves.

Their work has inherent limitations and loud and repeated acknowledgment of this fact would serve them well, but when this is done it is usually in the fine print of an appendix. Modellers’ common transgressions are clustered at the opposite ends of the utility spectrum. On the one hand the non-specialists in quantitative analysis and computer science, driven by peer pressure into complex modelling, often have a very poor understanding of the capabilities of the whole exercise: exaggerated expectations have been quite common. Many scientists succumb readily to the lure of print-outs ‘revealing’ the future. Building a big model is often intellectually challenging, running it is exciting, and glimpsing what they believe to be a possible future is a matter of classy privilege. There also seems to be a distinct cultural preoccupation betraying the American can-do, fix-it attitude to complexities.

But what about the strictly natural phenomena? Are there not many examples of highly successful simulations of complex natural processes which can be described by a finite number of reactions or equations and precisely quantified by repeated measurements? And are not these endeavours getting steadily more rewarding? Yes in all cases—but only for certain classes of problems. Strict reproducibility of relationships and processes and homogeneity of structures in the inanimate world form a splendid base for the great predictive powers of the physical sciences. But even in this calculable world computer models are frequently defeated by the sheer complexity of the task, by the multitudes of nonlinear ties which make any simulation accurate enough to be used with confidence in decision-making, not just as a heuristic tool, elusive.

Computers, clouds and complexities

Certainly the best example in this intractable category is the modelling of the atmosphere in general, and of any future climatic changes in particular. Even the best current climatic models still have only a rough spatial resolution: their grids are still too coarse and the vertical changes in the principal atmospheric variables are modelled on separate levels. Grids of one degree of latitude by one degree of longitude and forty vertical levels would bring us much closer to the reality: such a model would have up to 100 times more points than the existing ones, but even the existing exercises are running into computing problems (Mahlman 1989).

Computing power keeps on increasing, but its growth is far from being matched by deeper understanding of ocean circulation, cloud processes and atmospheric chemical interactions: hence even greatly expanded computing capacities would not result in highly reliable simulations. Temperature rise determining the severity and the consequences of the anticipated global warming is the most critical variable, but its predictions come from models which can replicate some of the grand-scale features of global climate but whose current performance is incapable of reproducing the intricacies and multiple feedbacks of natural phenomena.

And although some of these models may be in relatively good agreement as far as the mean global temperature increase is concerned, the overall range (as little as 1.5°C and as much as 4.5°C with doubled pre-industrial CO2 levels) remains too great to be of any use for practical decision-making. Differences in regional prognoses are much greater, precluding any meaningful use of these simulations in forecasts of local environmental effects. Schlesinger and Mitchell (1987) had to conclude that existing models were frequently of dubious merit because they were physically incomplete or included major errors. Their comparisons showed even qualitative differences. This means that not all of these exercises can be correct, and all of them could be wrong. Treatment of clouds and oceans has been particularly weak.

General circulation models can represent essentials of global atmospheric physics but the iterative calculations are done at such widely-spaced grid points that it is impossible to treat cloudiness in a realistic manner. Even the highest-resolution models use grids with horizontal dimensions of about 400 km, an equivalent of New England and New York combined or half of Norway, and less than 2 km thick vertically (Stone 1992). And yet the clouds are key determinants of planetary radiation balance (Ramanathan et al. 1989). A National Aeronautics and Space Administration (NASA) multisatellite experiment showed that the global ‘greenhouse’ effect of clouds is about 30 W/m2; in comparison, doubling of pre-industrial CO2 concentration would add only about 4 W/m2 (Lindzen 1990). And because the warming effect of higher atmospheric CO2 levels increases only logarithmically, future tropospheric CO2 levels would have to be more than two orders of magnitude higher than today to produce a comparable effect.
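The ‘two orders of magnitude’ figure can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes a purely logarithmic response with a fixed 4 W/m2 per doubling (the figure quoted above), it measures the ratio against the same baseline as the doubling, and the function and variable names are invented for the purpose.

```python
import math

# Figures quoted in the text (W/m2).
CLOUD_GREENHOUSE_FORCING = 30.0   # global 'greenhouse' effect of clouds
FORCING_PER_CO2_DOUBLING = 4.0    # added by one doubling of CO2


def co2_forcing(concentration_ratio, per_doubling=FORCING_PER_CO2_DOUBLING):
    """Forcing (W/m2) for a CO2 ratio, ASSUMING a purely logarithmic response."""
    return per_doubling * math.log2(concentration_ratio)


def ratio_for_forcing(target_forcing, per_doubling=FORCING_PER_CO2_DOUBLING):
    """CO2 ratio needed to reach a target forcing under the same assumption."""
    return 2.0 ** (target_forcing / per_doubling)


# How many doublings would match the clouds' greenhouse effect?
doublings_needed = CLOUD_GREENHOUSE_FORCING / FORCING_PER_CO2_DOUBLING
required_ratio = ratio_for_forcing(CLOUD_GREENHOUSE_FORCING)

print(f"doublings needed: {doublings_needed:.1f}")
print(f"required CO2 ratio: roughly {required_ratio:.0f} times the baseline")
```

Seven and a half doublings correspond to a ratio between 100 and 1000, which is where the ‘more than two orders of magnitude’ comes from; a different forcing per doubling would shift the result accordingly.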

In contrast, the clouds’ cooling effect is close to −47 W/m2, resulting in a net effect of about −17 W/m2. Without this significant cloud-cooling effect the planet would be considerably (perhaps by as much as 10–15 K) warmer. Not surprisingly, Webster and Stephens (1984) estimate that a mere 10 per cent increase in the cloudiness of the low troposphere would compensate for the radiative effects of doubled atmospheric CO2. Of course, these facts do not guarantee that the clouds will continue to have such a strong cooling effect in the future. Deficiencies in modelling oceans are no less fundamental. Models assume continuation of their poorly understood present behaviour, but that will most likely also change with changing climate. For example, shifts in oceanic circulation may create major CO2 sinks and alleviate the tropospheric accumulation of the gas (Takahashi 1987).

The smallest forecasts of planetary warming during the next two generations could be largely hidden within overall climatic noise, while the highest calculated response would have a profound effect on snow and ice cover and on the latitudinal climatic boundaries. Where does this leave us? The only reasonable generalization is about the direction of the change: increased concentrations of greenhouse gases will most likely increase surface temperature. But this response was described by John Tyndall (1861) and detailed by Svante Arrhenius (1896) generations ago without computer models and six-figure research expenditures.

And in spite of the continuous growth of computing power and initiation of ambitious national programmes to get much better models, we may not be able to tighten the existing uncertain forecast of 1.5–4.5°C with doubled pre-industrial CO2 levels during the 1990s, unless the calculating speeds go up by many orders of magnitude. Because important variations in moisture convection proceed on scales between 100 and 1000 m we would need grids 1000 times finer than those with 400 km sides, iterations should be done about 1000 times more frequently, and vertical resolution would have to improve. These requirements would demand computing speeds about 10 billion times faster than in the early 1990s (Stone 1992).
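How the ‘10 billion times’ requirement can arise from these refinements is easy to reconstruct. The sketch below is a back-of-envelope illustration only: the horizontal and time factors are those given in the text, but the tenfold vertical refinement is an assumption chosen here because it reproduces the quoted total (the text says only that vertical resolution ‘would have to improve’), and the names are hypothetical.

```python
# Refinement factors relative to an early-1990s model with 400 km grid sides.
HORIZONTAL_REFINEMENT = 1000   # grid sides 1000 times finer (from the text)
TIME_REFINEMENT = 1000         # iterations 1000 times more frequent (from the text)
VERTICAL_REFINEMENT = 10       # ASSUMPTION: not stated in the text

COARSE_SIDE_KM = 400


def compute_multiplier(horizontal, time, vertical):
    """Growth in arithmetic work, assuming cost scales with grid points x time steps."""
    # The horizontal factor applies in two dimensions (latitude and longitude).
    return horizontal ** 2 * time * vertical


fine_side_m = COARSE_SIDE_KM * 1000 / HORIZONTAL_REFINEMENT
multiplier = compute_multiplier(
    HORIZONTAL_REFINEMENT, TIME_REFINEMENT, VERTICAL_REFINEMENT
)

print(f"refined grid side: {fine_side_m:.0f} m")
print(f"computing-speed multiplier: {multiplier:.0e}")
```

The refined grid side falls inside the 100 to 1000 m range of moisture convection, and most of the burden comes from the squared horizontal factor; the vertical assumption changes the total only by its own factor.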

Initializing such detailed models would also require a massive observation effort gathering a huge volume of missing data, and much new thinking would have to be infused into the modelling. The latter ingredient is probably decisive. J.D. Mahlman, director of the National Oceanic and Atmospheric Administration’s Geophysical Fluid Dynamics Laboratory, concludes that the problem ‘is so quantitatively difficult that throwing money around is not going to solve it by 1999 or whenever’ (Kerr 1990a). But even if the modellers converged on an identical value we should not take it as an unchallengeable result because their exercises are largely one-dimensional, their calculations supplying the answers to questions about what would happen as just one variable changes.

Staying with the global warming example, future tropospheric temperatures will not be only a function of rising emissions of principal greenhouse gases, but will be also influenced by changed stratospheric chemistry, by altered oceanic circulation, by higher concentrations of sulphates and nitrates generated by growing fossil fuel combustion, by extensive changes in the Earth’s albedo and by modification of ecosystems. Some of these links will have amplifying effects, others will have damping impacts: we simply do not know what the net result may be two or three generations ahead.

Change, in nature or in society, is never unidimensional: it always comes about from interplays—additive or multiplicative, synergistic or antagonistic. Dynamics of the process may make irrelevant even a successful identification of the key initial causes: after all, what would be the point of finding the match which lit the fire? There is an even more fundamental limit to the usefulness of quantitative models even in apparently well-definable physical variables: the possibility that the underlying phenomena may not be encompassed by mathematical expressions owing to the fact that in non-linear systems an infinitesimal change in initial conditions can result in entirely different long-term outcomes.

Atmospheric behaviour offers perfect examples of these limitations. Because the basic equations describing atmospheric behaviour are non-linear, Edward Lorenz (1979) found in his pioneering models that numerical inputs rounded to three rather than to six decimal places produced very different forecasts. As it is impossible to establish all the necessary inputs with an identical degree of accuracy, rounding, actual or by default, is always present and models may correspond very poorly even to the next week’s reality. Ultimately, it means that accurate long-range weather forecasting is most likely impossible.
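The effect of rounding can be reproduced on a desktop in a few dozen lines. The sketch below does not use Lorenz’s original weather model; it substitutes his later, well-known three-variable convection system with the standard parameter values, and the starting numbers, step size and run length are arbitrary choices made for illustration. Two runs differ only in that one starts from inputs rounded to three decimal places.

```python
import math


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the three-variable Lorenz system (standard parameters)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, factor):
        return tuple(b + factor * s for b, s in zip(base, slope))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_history(start_a, start_b, dt=0.01, steps=4000):
    """Distance between two runs after every step, starting from two initial states."""
    a, b = start_a, start_b
    history = [math.dist(a, b)]
    for _ in range(steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        history.append(math.dist(a, b))
    return history


# Hypothetical inputs: six decimal places versus the same values rounded to three.
full_precision = (0.506127, 1.234567, 20.123456)
rounded = tuple(round(value, 3) for value in full_precision)

history = separation_history(full_precision, rounded)
initial_gap = history[0]
largest_gap = max(history)

print(f"initial gap between the runs: {initial_gap:.6f}")
print(f"largest gap during the run:   {largest_gap:.2f}")
```

The two runs begin less than a thousandth of a unit apart and end up separated by a distance comparable to the size of the whole solution, at which point the rounded run says nothing useful about the unrounded one.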

Other natural phenomena display similarly chaotic behaviour: they do not evolve into a steady state, are always changing in a non-linear and unpredictable fashion, but remain within certain limits and their behaviour is marked by spells of stability (Gleick 1987). Poincaré (1903) captured this reality long before its popularization by the modern chaos theory:

It may happen that small differences in the initial conditions produce very great ones in the final phenomena. A small error in the former will produce an enormous error in the latter. Prediction becomes impossible, and we have the fortuitous phenomenon.

Still, the homogeneity of many structures and reproducibility of most processes governing the inanimate world form a solid base for gradual advances.

In contrast, the variability of individuals and uniqueness of events is the essence of living. Individuals are unsubstitutable, phenomena are unrepeatable in ways which would produce identical outcomes. Slobodkin (1981) has an excellent analogy which calls for imagining a number of independent universes, none of them more important than any other, so that the physicists would have to follow a procedure of building ‘the theory on superficial information about all universes rather than detailed information about any one of them’. Physics would be then in a situation somewhat akin to the study of life complexes as ecosystems are likely to differ in the same way, and probably to an even greater extent than the hypothetical separate universes. In Cohen’s (1971) summary, ‘physics-envy is the curse of biology’.

But all modellers must, sooner or later, put more of their personalities into the models. Quade (1970) summed up this ultimate constraint perfectly:

The point is that every quantitative analysis, no matter how innocuous it appears, eventually passes into an area where pure analysis fails, and subjective judgement enters…judgement and intuition permeate every aspect of analysis: in limiting its extent, in deciding what hypotheses and approaches are likely to be more fruitful, in determining what the ‘facts’ are and what numerical values to use, and in finding the logical sequence of steps from assumptions to conclusions.

Finally, there is an important common limitation introduced by the modeller. Abundant evidence shows complex systems behaving in a wide variety of ways. If one wants to impose order then assorted fluctuations or cycles would fit best. Not so with a disproportionately large share of computer models of environmental changes and socio-economic developments. They carry warnings of resource exhaustion, irreversible degradation, runaway growth, structural collapses, pervasive feelings of pre-ordained doom. They display one of the frequent failings of science: pushing preconceived ideas disguised as objective evaluations.

FAILINGS OF SCIENCE

This science is much closer to myth than a scientific philosophy is prepared to admit. It is one of the many forms of thought that have been developed by man, and not necessarily the best. It is conspicuous, noisy, and impudent, but it is inherently superior only for those who have already decided in favour of a certain ideology, or who have accepted it without ever having examined its advantages and its limits.

Paul Feyerabend, Against Method (1975)

In his iconoclastic critique of scientific understanding Paul Feyerabend (1975) noted that even ‘bold and revolutionary thinkers bow to the judgement of science… Even for them science is a neutral structure containing positive knowledge that is independent of culture, ideology, prejudice’. This is, of course, a myth. Science is as much a human creation as religion and legendary tales, with its basic beliefs protected by taboo-like reactions, with its unwillingness to tolerate theoretical pluralism, with its prejudices and falsifications, with its irrational attachments and alliances, and with its changing orthodoxies. Its practitioners have too much at stake to be interested in nothing but intellectual challenges of their efforts.

These considerations must be kept in mind when appraising our knowledge of environmental change and all the advice for action which scientists rain on society. They are very helpful in understanding one of the most notable features of contemporary environmental studies: overwhelming gloominess in assessing the planetary future. One way to understand this is to realize that in western industrial civilization science has come to perform several key functions previously reserved for religion. Perhaps, as Zelinsky (1975) noted, ‘the worship of science is the only authentic major religion of the twentieth century’.

Above all, science explains the world according to its laws and it does not suffer questioning of its canons lightly. And from its complex hierarchy of priesthood (from BS to FRS, from lecturers to Nobel Prize laureates) there must rise scholars not content with daily copying of missals and a capella chantings, that is, writing yet another paper for the Journal of XYZ Association and delivering its mutant at a conference where everybody wears a name and a glass during recess.

Prophets as catastrophists

In a time-honoured practice prophets coming from the long line of Graeco-Judaeo-Christian tradition must bring bad news. In fact, the worse the news, the greater the prophecy. To compete with the best known one is difficult: ‘And death and hell were cast into the lake of fire. This is the second death. And whosoever was not found written in the book of life was cast into the lake of fire’ (Revelation XX:14–15). Scientific prophets do not promise the second death, but the first one they wholesale freely. Obviously, theirs is not the whole science story, but their opinions invariably get much more attention than the reserved assessments of scientists trying to cope honestly with biospheric complexity.

Figure 1.5 During the past generation life expectancies increased appreciably in every poor populous nation. China’s record has been especially impressive: now only a few years separate the country from averages in European nations.

Source: Plotted from data in the World Bank (1991).

This must surely be one of the greatest peculiarities of contemporary research: ‘that the scientific community relates its research agendas to the public with great optimism, but then produces terribly pessimistic analyses of the future’ (Ausubel 1991a). A thriving symbiosis of catastrophist science and media eager to bring new bad news assures a flow of Apocalyptic prognoses, or at least the most pessimistic interpretations of uncertain findings. During the past generation these claims ranged from a widespread expectation of permanent food crises and chronic energy shortages during the 1970s (Brown 1976) to an inevitability of catastrophic climatic change and sea-level rise (Schneider 1989).

Yet both the realities and the best available assessments at the beginning of the 1990s were very different. China, India and Indonesia, containing about one-half of the poor world’s population, were self-sufficient in grain and (with the exception of countries suffering from civil war) average life expectancies rose everywhere (Figure 1.5). International wheat and crude oil prices were lower than a generation ago (Figure 1.6), and the world-wide reserve-production ratio for crude oil was higher than during any year since 1945 (Figure 1.7). Satellite monitoring showed no sign of global warming during the 1980s (Spencer and Christy 1990), and the most detailed appraisal of sea-rise prospects did not endorse scenarios of high flooding (Ad hoc Committee on the Relationship between Land Ice and Sea Level 1985).

Figure 1.6 At the beginning of the 1990s virtually all essential natural resources were cheaper than 10–15 years ago. International prices of crude oil and wheat exemplify this trend.

Source: Plotted from data in World Resources Institute (1992).

But realistic assessments receive much less media attention than catastrophic news, and careful refutations of exaggerated claims are even harder to find. Two outstanding recent examples of the latter phenomenon include the matters of the expanding Sahara and the disappearing frogs, processes widely seen as important indicators of environmental degradation. Declining frog numbers received special attention because frogs were seen as sensitive indicators of the declining habitability of the planet.

An older perception of the expanding Sahara has received much attention ever since the great Sahelian drought of the early 1970s, and the southward march of the desert has been a widely-cited illustration of environmental degradation. Eckholm and Brown (1977) had ‘little doubt…that the desert’s edge is gradually shifting southward’; the area claimed by this march was given as an equivalent of New York state every decade (Smith 1986), and it was claimed that the desert’s front line was advancing in Sudan at a rate of 90–100 km a year (Suliman 1988). At least the last claim should have made any numerate reader suspicious: such an advance would have desertified all of Sudan in less than one generation!
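The suspicion is easy to check with a back-of-the-envelope calculation. The sketch below assumes a north-south extent of roughly 2,000 km for Sudan within its borders at the time; that figure is a rough assumption introduced here for illustration and does not come from the text, and the function name is likewise hypothetical. Only the advance rates of 90 and 100 km a year are taken from the claim quoted above.

```python
# Rough north-south extent of Sudan in km (an assumption for illustration,
# not a figure given in the text).
SUDAN_NORTH_SOUTH_KM = 2000.0


def years_to_cross(extent_km, advance_km_per_year):
    """Years a front moving at a constant rate needs to cross the given extent."""
    return extent_km / advance_km_per_year


# Advance rates quoted in the claim: 90-100 km a year.
years_at_90 = years_to_cross(SUDAN_NORTH_SOUTH_KM, 90.0)
years_at_100 = years_to_cross(SUDAN_NORTH_SOUTH_KM, 100.0)

print(f"at  90 km/year: {years_at_90:.1f} years")
print(f"at 100 km/year: {years_at_100:.1f} years")
```

Under these assumptions the front would sweep the whole country in roughly two decades, and sooner still if one allows for the northern part that was desert to begin with.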

Figure 1.7 No imminent exhaustion: world-wide reserve/production ratio for crude oil stood higher in the early 1990s than at any time since The Oil and Gas Journal started to publish its annual review of reserves and production.

But careful studies show no evidence of this southward march. Satellite monitoring of long-term changes records extensive natural fluctuations, with the Sahara expanding or contracting at a rate of over 600,000 km² a year (Tucker et al. 1990). Detailed studies of the Sudanese situation have not verified any creation of long-lasting desert-like conditions, no major shifts in the northern cultivation limit, no major changes in vegetation cover and productivity and no major sand-dune formation (Hellden 1991). Temporary changes of crop yields and green phytomass were caused by severe droughts, and were followed by a significant recovery (Figure 1.8). Have these findings been featured in ‘green’ literature? Similarly, ominous declines of frogs, claimed to be a world-wide phenomenon by 1990, may have a much better explanation in random fluctuations brought by drought (Pechmann et al. 1990).

Figure 1.8 Sequential satellite images of Sudan show how the border between vegetation and desert in the Sahelian zone fluctuates with rains. Contractions (shown here during a dry year of 1983) and expansions (during a wet year of 1987), rather than any southward march, describe changes in the region’s plant cover.

Source: This simplified extent of plant coverage is derived from satellite images in Hellden (1991).

Can this worst-case bias be explained only by the Apocalyptic predilection of scientific prophets? I believe not. Often their frightening conclusions result from problems and processes discussed earlier in this chapter: from misunderstanding the relationships between parts and the whole, from ignoring cyclicity and underestimating the importance of discontinuity, and from naïvely mistaking today’s dubious models for tomorrow’s realities. Less charitably, this Apocalyptic tilt may be an obvious manifestation of self-interest. Catastrophism generates publicity, and to believe that most scientists shun the front-page, keynote-speaker, on-camera exposure would be to deny their humanity. Perhaps even more importantly, possibilities of perilous futures can lead to major grants and conferences and to the building of bigger computer models, all the roads to greater scientific glory.

Researchers are sensitive to questions about their motives, but I strongly agree with Ausubel (1991a) that ‘it is inconsistent for us to question the self-interest of the military-industrial complex, the medical establishment and other groups, but not our own’. The tendency to lean toward pessimistic conclusions or to advocate worst-case scenarios may have yet another important motive. Big problems may need big solutions which will require extensive government involvement. Consequently, there would be large, and growing, bureaucracies to direct, and long-range plans to lay out. Many scientists have a deep-seated preference for this kind of social or environmental engineering based on their designs.

And as for the Apocalyptically inclined computer modellers, James Lovelock (1979) has perhaps the best explanation, a combination of one-dimensional lives and gambling-like addiction:

Just as a man who experiences sensory deprivation has been shown to suffer from hallucinations, it may be that the model builders who live in cities are prone to make nightmares rather than realities. No one who has experienced the intense involvement of computer modelling would deny that the temptation exists to use any data input that will enable one to continue playing what is perhaps the ultimate game of solitaire.

Encouraging outcomes would clearly discourage further modelling, while alarmist scenarios require more work to detail the looming catastrophes.

Deeper weaknesses

Failings of science have much deeper reasons than the fallibilities of its practitioners. Every one of its principal, and seemingly self-evident, methodological axioms—causality, eventual solubility of all problems and achievement of perfect understanding, and universal validity of its findings—is questionable. Randomness and indeterminacy of many physical and mental processes militate against simplistic causal explanations, limits to both observability and comprehensibility of complex systems preclude the ultimate understanding, and the evolutionary brevity of scientific endeavour and its inherent weaknesses negate any claims for universal validity.

In Zelinsky’s (1975) merciless phrasing, the latter aspiration is

an extreme case of intellectual parochialism or scientific hubris… How can one posit universal validity for conclusions extracted by means of a provisional system of logic from a biased set of data taken by less than infallible observers using imperfect instruments over a period of at most a few centuries…?

And as far as our quantitative knowledge of the Earth’s environment is concerned, the period extends merely over a few decades! But even if methodologically sounder foundations would eliminate catastrophist biases, and even if the possession of much deeper understanding would greatly reduce the challenge of uncertainty and the resulting dilemmas of undecidability, scientific interpretation of complex realities would still face fundamental pitfalls of hierarchization, order and addition (Jacquard 1985).

As soon as our knowledge of objects or phenomena reaches the level where they cannot be adequately characterized by a single parameter, we cannot place them in a hierarchical order. Of course, environmental resources and processes can be understood only through sets of irreducible parameters, a reality excluding any meaningful ranking.

When we are comparing one number to another, nonequality implies that one is greater than the other; when we are comparing sets, it implies only that they are different. This is not a plea motivated by moralistic considerations; it is a statement of logical fact.

(Jacquard 1985)

And it precludes any meaningful ranking of endangered species to be saved, any ‘correct’ allocation of limited resources to reduce different forms of pollution, or any choice of ‘best’ divisions of national responsibilities in efforts requiring global co-operation.
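The logical point about comparing sets rather than single numbers can be made concrete with a short sketch. It assumes the most generous ordering one might try for multi-parameter descriptions, componentwise dominance (one item ranks above another only if it is at least as large on every parameter and strictly larger on at least one). The function names and the numerical values are hypothetical and carry no empirical meaning.

```python
def dominates(a, b):
    """True if a is at least as large as b on every parameter and larger on at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def compare(a, b):
    """Compare two equal-length parameter tuples under componentwise dominance."""
    if a == b:
        return "equal"
    if dominates(a, b):
        return "first ranks higher"
    if dominates(b, a):
        return "second ranks higher"
    return "different, but not rankable"


# One parameter: nonequality always yields a ranking.
single = compare((3,), (5,))

# Two parameters (hypothetical scores): each item leads on one of them.
paired = compare((3, 7), (5, 2))

print(single)
print(paired)
```

With a single parameter every unequal pair can be ranked. With two or more, pairs in which each item leads on a different parameter are merely different, and any ranking of them requires an added weighting of the parameters, which is a judgement rather than a measurement.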