1944

Binary-Coded Decimal

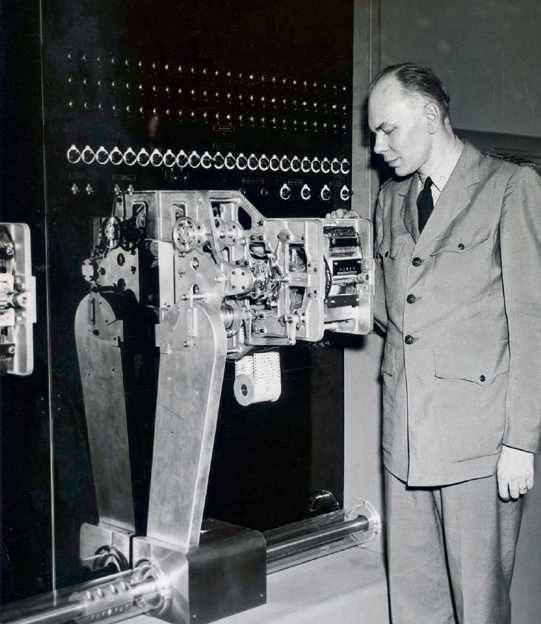

Howard Aiken (1900–1973)

There are essentially three ways to represent numbers inside a digital computer. The most obvious is to use base 10, representing each of the digits 0–9 with its own bit, wire, punch-card hole, or printed symbol (e.g., 0123456789). This mirrors the way people learn and perform arithmetic, but it’s extremely inefficient: every decimal digit ties up 10 separate elements, only one of which is in use at a time.

The most efficient way to represent numbers is to use pure binary notation: with binary, n bits represent 2ⁿ possible values. This means that 10 wires can represent any number from 0 to 1023 (2¹⁰ − 1). Unfortunately, it’s complex to convert between decimal notation and binary.
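The gap between the two schemes can be made concrete with a minimal Python sketch. The function name `wires_needed` and its dictionary keys are illustrative assumptions, not terms from any historical machine; the sketch simply counts how many wires each scheme would need to hold every integer up to a given maximum.

```python
def wires_needed(max_value: int) -> dict:
    """Count the wires needed to hold any integer in 0..max_value.

    Hypothetical helper for illustration only.
    """
    decimal_digits = len(str(max_value))
    return {
        # Base 10 with one wire per possible digit value: ten wires per digit.
        "one_wire_per_digit_value": 10 * decimal_digits,
        # Pure binary: n wires cover 2**n values.
        "pure_binary": max(1, max_value.bit_length()),
    }


print(wires_needed(1023))
# {'one_wire_per_digit_value': 40, 'pure_binary': 10}
```

Under these assumptions, the four-digit number 1023 takes 40 wires in the one-wire-per-digit scheme but only 10 in pure binary.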

The third alternative is called binary-coded decimal (BCD). Each decimal digit becomes a set of four binary digits, weighted 1, 2, 4, and 8, and counting in sequence 0000, 0001, 0010, 0011, 0100, 0101, 0110, 0111, 1000, and 1001. The remaining six four-bit patterns, 1010 through 1111, are not used. BCD needs only four bits per decimal digit instead of the 10 that base 10 requires, yet it’s remarkably straightforward to convert between decimal numbers and BCD. Further, BCD has the profound advantage of allowing programs to exactly represent the numeric value 0.01, something that’s important when performing monetary computations.
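The digit-by-digit encoding can be sketched in a few lines of Python. The helper names `to_bcd` and `from_bcd` are assumptions made for this illustration, and the sketch handles only non-negative whole numbers. The last lines use Python's standard `decimal` module as a stand-in for decimal arithmetic in general (it is not itself a BCD implementation) to show why 0.01 cannot be held exactly in binary floating point.

```python
from decimal import Decimal


def to_bcd(number: int) -> str:
    """Encode a non-negative integer as BCD, one 4-bit group per decimal digit."""
    if number < 0:
        raise ValueError("this sketch handles non-negative integers only")
    return " ".join(format(int(digit), "04b") for digit in str(number))


def from_bcd(bcd: str) -> int:
    """Decode space-separated 4-bit groups back into an integer."""
    digits = []
    for group in bcd.split():
        value = int(group, 2)
        if value > 9:
            # Patterns 1010 through 1111 do not correspond to a decimal digit.
            raise ValueError(f"{group} is not a valid BCD digit")
        digits.append(str(value))
    return int("".join(digits))


print(to_bcd(1944))                     # 0001 1001 0100 0100
print(from_bcd("0001 1001 0100 0100"))  # 1944

# The binary float nearest to 0.01 is not exactly 0.01; a decimal value is.
print(Decimal(0.01) == Decimal("0.01"))  # False
```

Because each group maps to exactly one decimal digit, conversion in either direction is a simple per-digit lookup, with none of the repeated division or multiplication that pure binary requires.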

Early computer pioneers experimented with all three systems. The ENIAC computer, begun in 1943 and completed in 1945, was a base 10 machine. At Harvard University, Howard Aiken designed the Mark 1 computer to use a modified form of BCD. And in Germany, Konrad Zuse’s Z1, Z2, Z3, and Z4 machines used binary floating-point arithmetic.

After World War II, IBM went on to design, build, and sell two distinct lines of computers: scientific machines that used binary numbers, and business computers that used BCD. Later, IBM introduced System/360, which used both methods. On modern computers, BCD is typically supported with software, rather than hardware.

In 1972, the US Supreme Court ruled that computer programs could not be patented. In Gottschalk v. Benson, the court ruled that converting binary-coded decimal numerals into pure binary was “merely a series of mathematical calculations or mental steps, and does not constitute a patentable ‘process’ within the meaning of the Patent Act.”

SEE ALSO Binary Arithmetic (1703), Floating-Point Numbers (1914), IBM System/360 (1964)

Howard Aiken inspects one of the four paper-tape readers of the Mark 1 computer.