3.5 Why some design criteria will change in the digital paradigm

Peter is increasingly fascinated by the possibilities of digitization. Step by step, robots will be deployed at various levels, and they will interact with us autonomously. Bill Gates once said: “A robot in every home by 2025.” Peter believes that this development will happen even earlier. Cars already drive autonomously on highways and private sites, and new possibilities are continuously emerging in cloud robotics and artificial intelligence. New technologies, such as blockchains, will allow us to carry out secure, intelligent transactions in open and decentralized systems.

But what does that mean for the design criteria when we develop solutions for systems of tomorrow?

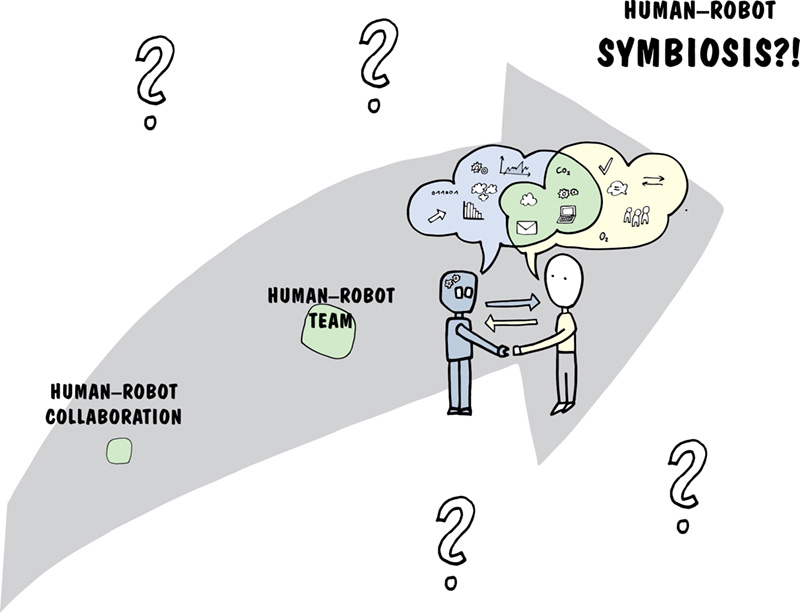

In a nondigitized world, the relationship with people is the primary lever for an improved experience. As digitization develops and its priorities shift, the design criteria expand over time. For the next big ideas in robotics and digitization, new criteria become relevant, because the systems interact with each other, and both robots and human beings gain experience and learn from each other. A relationship forms between robot and human being: they act as a team.

Therefore, among other things, trust and ethics become important design criteria in the human–machine team relationship. So-called cognitive computing aims to develop self-learning and self-acting robots with human features. Today, however, many projects and design challenges, depending on the industry, are still in the transition from e-business to digital business. Digitization is thus a primary focus for companies that want to stay competitive and tap previously unknown sources of income through new business models.

The design criteria begin to change when machines act semi-autonomously. In this case, human beings collaborate with robots: robots perform individual tasks, while central control remains in human hands.

Things become really exciting when human beings interact with robots as a team. Such teams have far-reaching possibilities and can

- make faster decisions,

- evaluate many decisions synchronously while doing so,

- solve difficult tasks, and

- perform complex tasks.

The criteria a human–robot team must fulfill are inferred from the specific structure of the task. Design thinking tries to achieve the ideal match between the characteristics of the task and those of the team members. But if human beings and robots act together as a team in the future, the question arises whether it is more important for us humans to retain decision-making authority or to be part of an efficient team. In the end, good team performance is probably more important. Creating a functioning team is complex, however, because three systems are relevant in the relationship between human beings and robots: the human being, the machine, and the social or cultural environment.

The great challenge is how these systems understand one another. Machines can only process data and information. Human beings can recognize emotions and adapt their activities accordingly, but both systems have difficulties in the area of knowledge. Knowing what the others know is pivotal! Then there are the social systems: human behavior differs widely across cultures and social systems. And ethics must not be forgotten: How is a robot to decide in a borderline situation? Let’s assume a self-driving truck gets into a borderline situation in which it must decide whether to swerve to the right or to the left. A retired couple is standing to the right; on the left, there’s a young mother with a baby buggy. On what ethical values is the decision based? Is the life of a mother with a small child worth more than that of the retirees?

In such a borderline situation, a human being makes an intuitive decision based on his own ethics and the rules he knows. He can decide for himself whether to break a rule, such as failing to brake at a stop sign. A robot, by contrast, follows the rules it has been given.

Even a simple action such as serving coffee shows that trust, adaptability, and intention in the human–robot relationship become a challenge for the design of such an interaction.

Today, Peter’s design thinking reflections still focus on human beings. He builds solutions that improve the customer experience or automate existing processes; you might call it digitization 1.0. At higher maturity levels of digitization, things get far more challenging. As maturity increases, robots also become more autonomous. Not only are individual functions or process chains automated, but robots interact with us depending on the situation; thus they act multidimensionally. Trust, along with adaptability and intention, will be among the most important design criteria. In the future, good design of human–machine interaction will have to take all of these criteria into account.

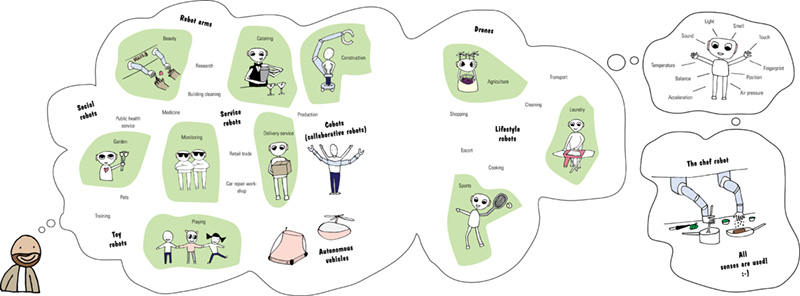

Peter has a new design challenge that he wants to solve in collaboration with a university in Switzerland, and he is in contact with the university’s teaching teams. The challenge is to find a solution for registering drones and determining their location. Today, most drones are not yet autonomous. But they are becoming increasingly autonomous and will fly by themselves in the future. They will perform monitoring, repair, and delivery tasks, render related services, or simply serve lifestyle applications.

The participants in the “design thinking camp” get down to work. A technical solution for registering drones and identifying their location is to be found quickly. Interviews with flight-monitoring experts confirm the need for such solutions. An incident at a French airport, in which a landing airliner evaded a drone at the last minute, underscores this need.

Because such a design challenge concerns all stakeholders, the students go one step further and interview passers-by in the city. They soon realize that the general population is not very enthusiastic about drones and accepts them only to a limited extent. The design thinking team has come up against a much more formidable problem than the technical solution: the relationship between human and machine. Especially in the cultural environment of Switzerland, where the design challenge takes place, it seems important to respect general norms and standards, such as the protection of personal freedom from encroachment by the government or other actors. The participants see a complex problem statement here and reformulate their design challenge with the following question:

This new design challenge looks at the problem from a different angle. As a result, the technical solution is put on the back burner, while the relationship between human and machine takes center stage as the critical design criterion. Expanding the design criteria provides the basis for a solution in which everybody can identify drones and, at the same time, receive additional services from the interaction.

One prototype developed in this case consists of an app connected to a potential cloud in which traffic information on drones is aggregated. The “Drone Radar App” uses the position data to detect a drone. Its key feature is that the drone whose information is retrieved greets the passer-by with a “friendly nod.” This feature was well received by the people interviewed and shows how humanlike behavior can reduce the fear of drones. Other prototypes also show that making contact in a friendly way, or offering an associated service, improves this relationship.
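The detection step of such an app can be illustrated with a small proximity lookup. The following is a minimal sketch, not the prototype’s actual implementation: it assumes a hypothetical cloud feed of drone position reports (the `DroneReport` record and the 500 m default radius are illustrative inventions) and uses the haversine great-circle formula to find the drones near a passer-by. Triggering the “friendly nod” on the nearest drone would be a further, hypothetical step not shown here.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class DroneReport:
    """Hypothetical position record a drone might publish to a shared cloud feed."""
    drone_id: str
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def drones_nearby(user_lat: float, user_lon: float,
                  reports: list[DroneReport],
                  radius_m: float = 500.0) -> list[tuple[str, float]]:
    """Return (drone_id, distance_m) pairs within radius_m, nearest first."""
    hits = []
    for r in reports:
        d = haversine_m(user_lat, user_lon, r.lat, r.lon)
        if d <= radius_m:
            hits.append((r.drone_id, d))
    hits.sort(key=lambda pair: pair[1])  # nearest drone first
    return hits


if __name__ == "__main__":
    # Illustrative positions around central Zurich (made-up drones).
    feed = [
        DroneReport("A", 47.3772, 8.5420),
        DroneReport("B", 47.3900, 8.5417),
        DroneReport("C", 47.3760, 8.5417),
    ]
    for drone_id, dist in drones_nearby(47.3769, 8.5417, feed):
        print(f"{drone_id}: {dist:.0f} m")
```

With these made-up positions, drones A (about 40 m away) and C (about 100 m away) fall inside the radius, while B (about 1.5 km away) does not. A real service would more likely query a spatial index in the cloud than scan every report.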

Having carried out the “drone project,” Peter wonders where else robots will interact with humans in the future. What use cases are there?

Which senses can be captured by robots?

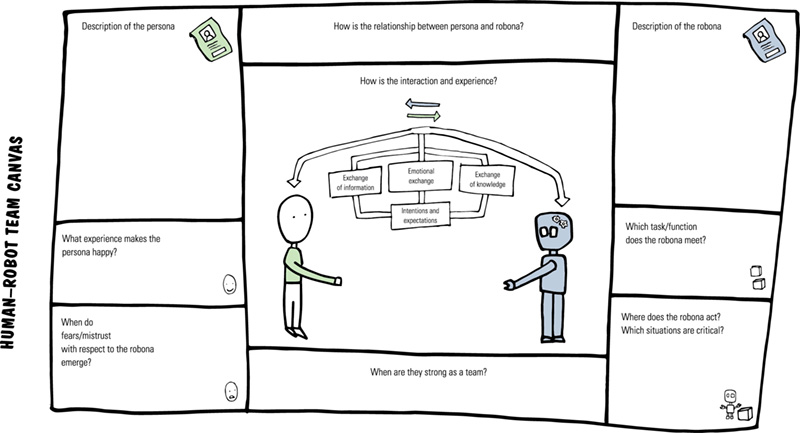

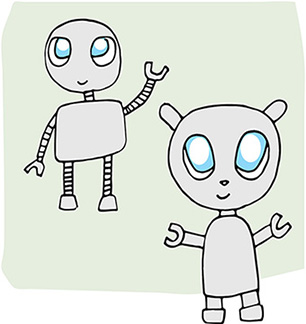

As the examples of autonomous vehicles and drones have shown, the future will be characterized by coexistence between humans and machines. The relationship between humans and robots will be decisive for the experience. For initial considerations, creating a “robona” alongside a persona has proven useful.

A robona is created using the human–robot team canvas (Lewrick and Leifer), whose core question concerns the relationship between robot and human. Interaction and experience between robona and persona are the crucial issues. First, information is exchanged between the two. This exchange is relatively easy because certain actions are usually performed 1:1.

Things become more complex when emotions are an integral part of the interaction. Emotions must be interpreted and put in the right context, and the exchange of knowledge requires learning systems. Only a sophisticated interplay of these components can properly assess intentions and meet expectations. The maxim that complex systems require complex solutions applies especially here: the human–robot relationship and its team goals raise the complexity another notch.

Trust can be built and developed in different ways. The simplest example is to give a robot a human appearance. In the future, machines may emerge that communicate with people while making a trustworthy impression on their human interlocutors. The projects of the “Human Centered Robotics Group,” which has created a robot head reminiscent of a manga girl, are good examples. Based on a schema of childlike characteristics (big eyes), the head makes an innocent impression: it exploits the key stimuli that small children and young animals emit through their proportions (large head, small body). The robot also creates trust because it recognizes who is speaking to it: it makes eye contact and thus radiates mindfulness. Not only how a robot should act but also what it should look like often depends on the cultural context. In Asia, robots are modeled more on human beings, while in Europe they are treated as mechanical objects. The first American robot was a big tin man; the first Japanese robot was a big, fat, laughing Buddha.

The more robots resemble human beings, the more flexibly they can be used: they help both in nursing care for the elderly and on construction sites. Trust is created when the robot behaves as the human expects, and in particular when the human feels safe because of this behavior. Robots that do not hurt people while working, for example because they stop in emergency situations, are trusted. Only then can they work on a team with people. Both sides learn, build trust, and are able to reduce disruptions in the process. Trust becomes more complicated when human–robot activities take place in different social systems or are supported by cloud robotics. Then the interface is not a pair of big, trust-inducing eyes but autonomous helpers that direct and guide us and thus provide us with a basis for decisions.

Emotions in the robot–human relationship are just as important as trust. The human expects the robot to recognize emotions and act accordingly. There is no doubt that human beings have emotions and that emotions influence their behavior. Our behavior in traffic is a good example: our driving style is influenced by our emotions, and we react to the driving style of others. We are in a hurry because we must get to an appointment. We are relaxed because we are just starting our vacation. We drive aggressively because we have had a bad day. How does a self-driving car handle such emotions and tendencies? The robot must adapt its behavior and, for example, drive faster (more aggressively) or slower (more cautiously). If necessary, it must adjust the route, depending on whether we want to enjoy the scenery or get from A to B as quickly as possible. One possibility is that, in the future, we transfer our personality and preferences to distributed systems like a personal DNA, thus providing a stock of information. Another is for various sensors to transmit additional information in real time, helping the robot make the right decision for the emotional situation quickly and easily.

Hence recognizing emotions and adapting behavior to the situation will become even more significant in the future. The latest developments in this area include “Pepper,” the humanoid robot from the telecommunications company SoftBank that can interpret emotions.