# 10. Through a Crystal Ball

Two significant flares occurred during August 20–21 as a historically active sunspot group returned to the visible face of the sun. The geomagnetic field was disturbed through August 20. The source of the disturbance was a high-speed solar wind stream that originated from a coronal hole on the sun’s surface. Spacecraft sensors detected solar wind speeds approaching two million miles per hour. There’s a chance for more significant solar flares from the sunspot group during August 25–31 as it continues to trek across the visible face of the sun.

—NOAA/SEC, “Outlook 99-20,” August 24, 1999

When CMEs do reach the Earth, the compressed magnetic fields and plasma in their leading edges smash into the geomagnetic field like a battering ram. Across a million-mile-wide wall of plasma, the CME pummels the geomagnetic field, and such niceties as whether the polarities are opposed make little difference to this initial impact. The CME’s pressure can compress the geomagnetic field so far that it lays bare the orbits of geosynchronous communication satellites on the dayside of the Earth, exposing them to wave after wave of energetic particles. When the fields are opposed, particles from the CME wall also invade the geospace environment, amplify ring currents, and generally cause considerable electromagnetic bedlam, often tracked by increases in recorded satellite anomalies and power grid GICs.

Clearly, we need more advance warning of solar flares, geomagnetic storms, and CMEs. A forecast of how severe a particular solar cycle will be, no matter how accurate, is not much more than a statement that “this winter will be more severe than last.” That isn’t enough information to prepare us for tomorrow’s snowstorm. Is there any way of doing better than predicting the ups and downs of the next solar cycle? Can we get the jump on individual solar storms and space weather events from day to day? With some effort, the answer is, luckily, “Yes,” but, like a Trojan horse, this single operation has three kinds of forecasting problems tucked away inside it. You can attempt to predict a space weather event before it starts. You can try to predict what it will look like while it is en route to Earth. Or you can predict what it will do when it arrives.

If we can watch the Sun, we can gauge when a CME will come our way and often have two or three days’ advance warning. For solar flares, on the other hand, there is still a lot of work to do before we can provide more than a ten-to-thirty-minute warning before they erupt on the solar surface, and we still can’t predict how powerful a flare, or the stealthy burst of protons that may accompany it, will be when it reaches the Earth. For astronauts, this means that every flare sighting requires running for cover from the radiation as if their lives depended on it. Of course, by the time your instruments register a problem, it could well be too late.

Once solar physicists had studied solar flares long enough, they began to develop scales for ranking their magnitude. Originally, flares were ranked on a crude optical scale, but then came satellites equipped with X-ray and proton detectors. The older of the two scales in use today measures a flare’s X-ray intensity. How impressive a given reading looks on this scale varies over the sunspot cycle. During sunspot minimum, the X-ray brightness of the Sun is low, so a flare of a given brightness can be quite spectacular, like a flashlight in a dark cave. But during sunspot maximum, when the Sun is far brighter as an X-ray source, the same flare is nearly invisible, like a flashlight switched on in broad daylight. There are four main classes, in increasing order of strength: B, C, M, and X. Each class is subdivided by a number from 1 to 9 that multiplies the class’s base intensity. An M5.5-class flare, for example, is ten times more powerful than a C5.5-class flare and one-tenth as powerful as an X5.5-class flare.
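Each letter step is a power of ten, and the number after the letter is a multiplier, so converting a class label into an intensity takes only a lookup and a multiplication. The sketch below assumes NOAA’s standard GOES thresholds in the 1 to 8 ångström X-ray band (B = 10⁻⁷, C = 10⁻⁶, M = 10⁻⁵, X = 10⁻⁴ watts per square meter). The function name `flare_flux` is purely illustrative.

```python
# Minimal sketch: turn a GOES X-ray flare class such as "M5.5" into a peak
# flux in watts per square meter (1-8 angstrom band), assuming the standard
# NOAA class thresholds below.
BASE_FLUX_W_M2 = {"B": 1e-7, "C": 1e-6, "M": 1e-5, "X": 1e-4}

def flare_flux(label: str) -> float:
    """Return the peak X-ray flux implied by a flare class label."""
    letter = label[0].upper()
    if letter not in BASE_FLUX_W_M2:
        raise ValueError(f"unknown flare class: {label!r}")
    multiplier = float(label[1:])  # the number after the letter scales the base flux
    return BASE_FLUX_W_M2[letter] * multiplier

# Each letter step is a factor of ten, so flares with the same number differ tenfold.
print(flare_flux("M5.5") / flare_flux("C5.5"))  # about 10
print(flare_flux("M5.5") / flare_flux("X5.5"))  # about 0.1
```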

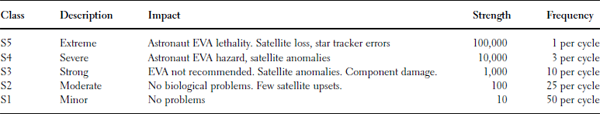

The second scale, recently adopted by NOAA for its space weather alerts, ranks flares on the basis of the energetic particle flows they produce at the Earth. An S2 flare has ten times the particle flow of an S1 flare, and the classification is based on the actual number of particles rather than on an electromagnetic intensity, as with the B-, C-, M-, and X-classes.
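The particle-based scale can be sketched in the same way. This version assumes NOAA’s published thresholds for solar radiation storms: S1 begins at a flux of 10 particles per second per square centimeter per steradian of protons above 10 MeV, and each higher level requires ten times more. The helper `s_level` is hypothetical and not part of any NOAA software.

```python
# Minimal sketch of NOAA's S-scale, assuming the published thresholds for
# >=10 MeV protons in particles / (s cm^2 sr); each level is ten times the last.
S_THRESHOLDS = [(5, 1e5), (4, 1e4), (3, 1e3), (2, 1e2), (1, 1e1)]

def s_level(proton_flux: float) -> int:
    """Return the S-scale level (0 means below S1; S5 is the top of the scale)."""
    for level, threshold in S_THRESHOLDS:  # check the highest level first
        if proton_flux >= threshold:
            return level
    return 0

for flux in (5, 40, 150, 2e5):
    print(f"flux {flux:>8}: S{s_level(flux)}")
```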

TABLE 10.1 Quick Guide to Space Weather Indices

NOTE: The strength of the flare is in units of particles per second per square centimeter per steradian.

Big Bear Solar Observatory sits on a spit of land in the middle of a lake in Southern California. Unlike most observatories, which are perched high on mountaintops, this one was built on a lake because the local geography produces unusually steady, clear solar images. Air turbulence is normally the biggest obstacle to seeing small details on the Sun. At Big Bear Lake, the air flows parallel to the water, which reduces the turbulence near the telescope. Harold Zirin and William Marquette have spent years perfecting their BearAlert Program for spotting solar flares before they hatch. Armed with real-time solar data, they watch minute-to-minute changes in an active region, traced by its magnetic field and hydrogen emission. As a public test of their methods, over a two-year period they issued thirty-two “BearAlerts” for sizable flares by e-mail and scored hits on fifteen of them. Because the Sun produces no sizable flare on most days, and because its state changes only slowly from day to day, you can issue a “no flare today” forecast every day and be correct nine times out of ten. That seemingly impressive score is, of course, useless for anticipating whether a flare will actually happen. BearAlerts, and the space weather reports they have evolved into, are issued only when a flare seems about to happen. They are the closest thing we have today to staying ahead of these unpredictable solar storms.
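A little arithmetic shows the difference between a forecast that is often right and one that is actually useful. The BearAlert counts below come from the text. The 100-day record for a forecaster who always says “no flare” is a hypothetical illustration based on the nine-out-of-ten figure, and the function names are illustrative.

```python
# Minimal sketch of simple scores for yes/no flare forecasts.
def success_ratio(hits: int, alerts: int) -> float:
    """Fraction of issued alerts that were followed by a flare."""
    return hits / alerts

def accuracy(correct: int, total: int) -> float:
    """Fraction of all forecasts, yes or no, that turned out right."""
    return correct / total

def detection_rate(caught: int, occurred: int) -> float:
    """Fraction of the flares that actually occurred which were forecast."""
    return caught / occurred

# BearAlert public test (figures from the text): 32 alerts, 15 hits.
print(f"BearAlert success ratio: {success_ratio(15, 32):.2f}")  # 0.47

# Hypothetical 100-day record: flares on 10 days, forecaster always says "no flare".
flare_days, total_days = 10, 100
print(f"'No flare today' accuracy: {accuracy(total_days - flare_days, total_days):.2f}")  # 0.90
print(f"'No flare today' detection rate: {detection_rate(0, flare_days):.2f}")  # 0.00
```

The lazy forecaster wins on accuracy but never catches a single flare, which is why alert-style forecasts are judged on hits rather than on overall accuracy.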

Weather forecasters can usually tell you whether a particular storm has what it takes to unleash lightning discharges over your city during a given two- to six-hour period, but that is their limit. In a similar vein, solar physicists are fast approaching the ability to announce that a given active region will spawn solar flare activity during a set six-day period but, ironically, can’t tell you if one will happen in the next few hours. Like weather forecasters, they can’t tell whether you will get a few major flares that could affect astronaut health or a hail of minor flares that, individually, are unimportant.

The next element of space weather is the solar wind itself, which acts something like a conveyor belt connecting the surface of the Sun, and the activity there, with the Earth. After the spectroheliograph was invented in the 1890s, astronomers quickly got an eyeful of fiery prominences and other phenomena busily hurling matter into the space surrounding the Sun. But no one appreciated just how far this star stuff could travel until clues to its invisible journey began to show up, first in the direction that comet tails pointed and then, in the early 1960s, in direct spacecraft observations. The solar wind travels at about a million miles an hour and has a density of about ten to fifty particles per cubic inch, mostly electrons and protons. In fact, it is a far better vacuum than anything scientists can make in their laboratories. What makes this wind far more complex than the breezes you feel on a summer’s day is that it carries a magnetic field along with it.
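For readers more used to metric units, the conversion is simple arithmetic with exact factors (1 mile = 1.609344 km, 1 inch = 2.54 cm). This short sketch converts the round figures quoted above. The helper names are illustrative.

```python
# Minimal sketch: convert the round solar wind figures quoted in the text into
# metric units. Only exact conversion factors are used.
KM_PER_MILE = 1.609344
CM3_PER_CUBIC_INCH = 2.54 ** 3  # about 16.387 cubic centimeters

def mph_to_km_per_s(mph: float) -> float:
    """Miles per hour -> kilometers per second."""
    return mph * KM_PER_MILE / 3600.0

def per_cubic_inch_to_per_cm3(n: float) -> float:
    """Particles per cubic inch -> particles per cubic centimeter."""
    return n / CM3_PER_CUBIC_INCH

print(f"1 million mph = {mph_to_km_per_s(1.0e6):.0f} km/s")  # 447 km/s
low, high = per_cubic_inch_to_per_cm3(10), per_cubic_inch_to_per_cm3(50)
print(f"10 to 50 per cubic inch = {low:.1f} to {high:.1f} per cm^3")  # 0.6 to 3.1
```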

When two magnetic systems such as the Sun’s and the Earth’s interact, the outcome depends on whether their polarities are the same or opposite. If the solar wind and geomagnetic polarities are the same on the dayside, the geometry lets the solar wind slide over the outskirts of the Earth’s magnetic field and flow smoothly on into the depths of space. The interaction of the north-type geomagnetic field with a south-type solar wind field, on the other hand, is usually very dramatic. Hours-long geomagnetic storms and spectacular aurorae result, as currents of accelerated particles flow from distant unstable regions in the dynamic magnetotail and into the atmosphere along the field lines. North- and south-type magnetic field lines wage a pitched battle to unkink themselves into a smooth geometric shape. As a result, the magnetosphere picks up energy from the currents of particles that are created, and the geomagnetic field becomes wildly unstable at its outer frontier, the magnetopause. Because these magnetic storms originate in the invisible solar wind, whose roots in the solar surface cannot be detected, they seem utterly random and unrelated to specific sunspot groups. We never see them coming. Milder storm conditions can be spawned as the wind constantly changes its strength and polarity. The geomagnetic field responds to these changes with magnetic irregularities called “substorms.” Substorms last a few hours but are sometimes strong enough to cause aurorae to appear at high northern and southern latitudes. Even comet tails develop kinks and irregularities that follow the clumpy, gusting solar wind.
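Space physicists often reduce the “same versus opposite polarity” rule to the sign of Bz, the north-south component of the magnetic field carried by the solar wind. A widely used and deliberately simple proxy for how hard the wind drives the magnetosphere multiplies the wind speed by the southward part of Bz and ignores northward field entirely, much like a half-wave rectifier. The sketch below assumes that proxy. It is a textbook simplification, not a storm model, and the function name is illustrative.

```python
# Minimal sketch of the "rectified" coupling proxy: only a southward
# (negative) Bz lets the solar wind drive the magnetosphere efficiently.
# Inputs: solar wind speed V in km/s and field component Bz in nanotesla.
# Output: V * Bs in millivolts per meter, where Bs = -Bz if Bz < 0, else 0.

def rectified_coupling(v_km_s: float, bz_nt: float) -> float:
    bs = -bz_nt if bz_nt < 0 else 0.0  # keep only the southward part
    # km/s -> m/s (x1e3), nT -> T (x1e-9), V/m -> mV/m (x1e3): net factor 1e-3
    return v_km_s * bs / 1000.0

print(rectified_coupling(400, +10))  # 0.0: same polarity, wind slides past
print(rectified_coupling(400, -10))  # 4.0: opposite polarity, strong driving
```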

About one million miles from the Earth, in the general direction of the Sun, a group of research satellites serves as our outpost in the solar wind at the L1 Lagrange point. The L1 region is an invisible dimple in the gravitational well of the rotating Earth-Sun system. You could fly right through it and not notice anything unusual. Satellites carefully positioned there, like a pencil balanced on its point, can orbit this invisible point in space even though no gravitating matter is present to hold them there. From this vantage point, SOHO busily watches the solar surface and relays its images back to Earth. The ACE satellite, meanwhile, samples the magnetic field and composition of the solar wind as it rushes by. Like buoys bobbing in the ocean offshore, these satellites report changes in the wind conditions that can signal trouble for the geomagnetic field within the next forty-five minutes or so.
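The warning time from L1 is simply distance divided by speed. This sketch assumes that L1 lies about 1.5 million km (roughly 930,000 miles) sunward of Earth and that the wind crosses that gap in a straight line at constant speed. Under those assumptions, a 550 km/s wind gives about forty-five minutes of notice, and faster wind gives less.

```python
# Minimal sketch: warning time provided by a solar wind monitor at L1.
# Assumptions: L1 is about 1.5 million km sunward of Earth, and the wind
# crosses that gap in a straight line at constant speed.
L1_DISTANCE_KM = 1.5e6

def l1_warning_minutes(wind_speed_km_s: float) -> float:
    """Travel time from L1 to Earth, in minutes."""
    return L1_DISTANCE_KM / wind_speed_km_s / 60.0

for speed in (400, 550, 800, 1500):
    print(f"{speed:5d} km/s wind -> {l1_warning_minutes(speed):5.1f} minutes of warning")
```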

In addition to solar flares and the solar wind, there are coronal mass ejections. First seen by the OSO-7 satellite in 1971 and by Skylab in 1973, CMEs have since been studied in detail, and nearly every one of them would have serious consequences if it found its way to the Earth. Soon after being launched by the Sun, in an event that from our vantage point on Earth often engulfs nearly the entire solar disk, they are accelerated to speeds ranging from a gentle 10 km/sec to over 1500 km/sec, more than three million miles per hour. Within a few days, they can make the journey from the Sun to Earth’s orbit, carrying up to fifty billion tons of plasma.
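A first-order estimate of the journey divides the Sun-Earth distance by the CME’s speed. The sketch assumes a constant speed all the way out. Real CMEs do not keep a constant speed: slow ones tend to be dragged up toward solar wind speed and fast ones slowed down. Treat the numbers as rough guides only.

```python
# Minimal sketch: Sun-to-Earth transit time for a CME at constant speed.
AU_KM = 1.495978707e8           # mean Sun-Earth distance in km
MPH_PER_KM_S = 3600 / 1.609344  # 1 km/s is about 2,237 mph

def transit_days(speed_km_s: float) -> float:
    """Days needed to cover one astronomical unit at a constant speed."""
    return AU_KM / speed_km_s / 86400.0

for speed in (500, 1000, 1500):
    mph = speed * MPH_PER_KM_S
    print(f"{speed:5d} km/s ({mph / 1e6:.1f} million mph): {transit_days(speed):.1f} days")
```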

The launch of the SOHO satellite in 1995 put the Sun under a twenty-four-hour weather watch. One of the most spectacular instruments on this satellite was LASCO, the Large Angle and Spectrometric Coronagraph. Like its predecessors on OSO-7 and Skylab, it was a coronagraph, which blocks the Sun’s bright disk to create an artificial total solar eclipse so that faint details in the corona can be studied. No sooner had the shutter opened on this instrument than it began to record vivid images of CMEs leaving the Sun. Within a year, SOHO scientists became adept at using LASCO to anticipate when the Earth would be affected by these disturbances. NOAA’s Space Environment Center, which is responsible for producing daily space weather forecasts, began to use LASCO data in 1996 to improve their accuracy. By keeping an eye out for “halo” CME events, which are aimed directly at the Earth, forecasters could now routinely achieve two to three days of advance warning, at least for the onset of major geomagnetic storms that could cause satellite outages and electrical power blackouts. So long as the SOHO satellite keeps working, it substantially improves our chances of never again being caught off guard the way we were during the 1989 Quebec blackout.

Although solar flares are often seen near the birthplaces of CMEs, solar physicists don’t believe flares actually cause them. CMEs and flares both track yet more subtle underlying conditions that are probably the common parent of both. Flares actually happen at much lower altitudes in the solar atmosphere than where the CME plasmas are spawned. Solar physicist Richard Canfield and his colleagues at Montana State University have spent some time trying to get the jump on CMEs even before SOHO’s instruments can pick them up. They think they have found what triggers at least the major ones we have to worry about back home. To see the birth of a CME, you can’t use ground-based data at all; you need X-ray images of the Sun taken by satellites such as the Japanese-U.S.-British Yohkoh X-ray Observatory.

A major press briefing at NASA Headquarters on March 9, 1999, soon got the news media’s attention. The Washington Post carried the headline “Scientists Find Way to Predict Solar Storms,” while ABC News offered “The Sun’s Loaded Gun: S-Shapes on Surface Foretell Massive Solar Bursts.” The idea that these S-shaped “sigmoid” fields were like a cocked gun ready to fire became the inevitable centerpiece sound bite in many of the reports. During sunspot minimum, the Sun produces about one CME a day. During sunspot maximum, it can spawn a handful of them in a single day. Fortunately, most of these are ejected either on the far side of the Sun or at large angles from the Earth, so they miss us about nine times out of ten. When CMEs are aimed at the Earth, great aurorae bloom across the globe, and geomagnetic conditions become dramatically turbulent for days as the great wall of plasma rushes by.
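The odds of a direct hit can be estimated with a simple probability model. This sketch makes three simplifying assumptions: CMEs are launched independently at random times (a Poisson process), about one in ten heads our way, as the text says, and “a handful” means about five per day at sunspot maximum. The function name is illustrative.

```python
import math

# Minimal sketch, assuming independent, randomly timed CME launches
# (a Poisson process) of which a fixed fraction are Earth-directed.
def p_earth_hit(cmes_per_day: float, days: float = 1.0, earth_fraction: float = 0.1) -> float:
    """Probability of at least one Earth-directed CME within the given number of days."""
    # Keeping each event with probability earth_fraction leaves a Poisson process
    # whose rate is cmes_per_day * earth_fraction.
    expected_hits = cmes_per_day * earth_fraction * days
    return 1.0 - math.exp(-expected_hits)

print(f"Near minimum (~1 CME/day), one week: {p_earth_hit(1.0, days=7):.2f}")
print(f"Near maximum (~5 CMEs/day), one day: {p_earth_hit(5.0):.2f}")
```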

Strong geomagnetic storm conditions are in progress. These levels of activity are possibly the result of a shock observed in the solar wind on October 21 at 01:38 UT originating from a coronal mass ejection on the sun on October 18. This level of disturbance routinely causes power grid fluctuations, increased atmospheric drag, and surface charging on satellites, intermittent navigation system problems, signal fade of high-frequency radio signals, and auroral displays at mid-latitudes. (NOAA/SEC Advisory 99–9, October 22, 1999)

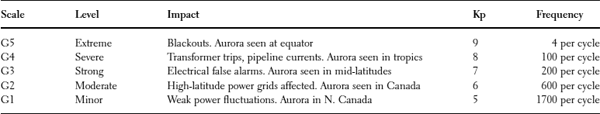

The geomagnetic field and its collections of trapped particles are the last stop for most of the Earth-directed severe space weather events spawned by the Sun. Just as your local weather reporter tracks rainfall, temperature, humidity, and pressure as presages of tomorrow’s weather, space weather can be charted by keeping track of a handful of numbers. Over the years, scientists devised a number of quantities that give a quick reading of the level of geomagnetic storminess. Few have turned out to be as popular as the Kp index devised in 1949 by Julius Bartels. Alongside sunspot counts, the traditional barometer of solar activity, the Kp index brings a second dimension to the problem of forecasting: sunspot numbers describe how active the Sun is, while Kp tells how vigorously the Earth’s magnetic field responded to solar activity, or to anything else that can disturb it.

TABLE 10.2 Geomagnetic Storm Indexes Used in Space Weather Forecasting

Kp measures the largest swings in magnetic activity recorded at a network of observatories around the globe during each three-hour period. It is not a number on a linear scale like temperature; instead, it is part of what is called a semilogarithmic scale. A Kp = 9 geomagnetic storm, for example, is about five times stronger than a Kp = 6 storm. Typically, on any given day, the Earth’s field imperceptibly bumps and grinds at Kp levels between 1.0 and 3.0. With a magnetic compass in hand, you would not even know anything was happening. These seemingly random gyrations define the normal quiet state of the planetary field, but occasionally it belts out a disturbance you need to pay attention to. Kp values between 4.5 and 5.5 are classified as small storms, like the occasional harmless earthquakes seismologists detect every few weeks in the San Francisco Bay area. Large storms require Kp values between 5.6 and 7.5; these are analogous to the yearly shakes that rattle California residents’ dishes and set their chandeliers swinging. Finally, you get to the major “head for the hills” storms, which require Kp indices greater than 7.5 and resemble the once-in-a-decade Loma Prieta or 1999 Turkey earthquakes. They are the ones that can cause blackouts. Luckily, geomagnetic storms have to be pretty large before anyone needs to worry seriously about their immediate impacts. Only storms with Kp indices greater than about 6.0 seem to have what it takes to shake up electrical systems. On this scale, the Quebec blackout was caused by a Kp = 9.0 “mega storm,” one of only three storms of that size in the last sixty years: in 1940, 1958, and 1989. Even so, space scientists cannot tell you when the next one will happen. One thing seems clear from past patterns: there will very likely be only a few minutes’ warning that a storm is escalating to this level of severity, not enough time for a utility company to do much more than watch and hope for the best. By the time you are forced to rely on Kp to decide what to do, it is already too late to do much of anything.
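The “five times stronger” comparison makes sense once Kp is translated back onto a linear scale. Each Kp value has a standard equivalent amplitude, called ap, measured in units of roughly 2 nanotesla. The sketch below uses the standard conversion for whole Kp values only (the intermediate thirds are omitted) and assumes that “stronger” means a larger ap.

```python
# Minimal sketch: compare storms on the roughly linear "ap" scale instead of
# the semilogarithmic Kp scale. Values are the standard ap equivalents for
# Kp = 0o, 1o, ..., 9o (ap is in units of about 2 nT).
KP_TO_AP = {0: 0, 1: 4, 2: 7, 3: 15, 4: 27, 5: 48, 6: 80, 7: 132, 8: 207, 9: 400}

def ap_ratio(kp_high: int, kp_low: int) -> float:
    """How many times larger the disturbance amplitude is at kp_high than at kp_low."""
    return KP_TO_AP[kp_high] / KP_TO_AP[kp_low]

print(ap_ratio(9, 6))  # 5.0, i.e. "about five times stronger"
print(ap_ratio(6, 3))  # about 5.3
```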

So, after a hundred years of research, space physicists have begun to understand some of the basic rules of space weather forecasting. They know how to measure a set of parameters that track space weather severity, and they have real-time images of the solar surface and its surroundings at their disposal. There are many parallels with ordinary weather forecasting, too. Like modern weather forecasters watching a hurricane develop, they can track CMEs as they leave the Sun, but they lose sight of the CMEs almost immediately as they enter interplanetary space. Fortunately, just as hurricane watchers on a beach can see an incoming storm hours before it arrives, satellite sentinels at the L1 Lagrange point can anticipate a CME’s landfall on Earth within the hour. Meanwhile, solar physicists can anticipate when an active region on the Sun may disgorge a flare, but, like weather forecasters, they cannot predict the times of individual lightning strikes.

Unlike terrestrial weather forecasting, however, space weather forecasting faces one main obstacle to the development of newer techniques: the data are too sparse to follow all the changes that can have adverse impacts. Research satellites are launched and put into service on the basis of scientific needs, not on the basis of their utility to space weather forecasting. Only NOAA’s monitoring satellites and their military equivalents are specifically designed to serve space weather forecasting needs. But even a fully working armada of satellites keeping watch on the entire system would not be enough to provide detailed forecasts. Some method has to be found for filling in the data gaps, and that method involves detailed physical modeling and measurement of the system and all its various interactions.

In ordinary weather forecasting, scientists have thousands of stations throughout the globe that report local temperature, pressure, humidity, wind speed, and rainfall. Weather balloons and rockets, as well as satellite sensors, measure changes in wind speed and pressure across great swaths of vertical space from the ground up through the tropopause. Every minute or hour, a “state of the atmosphere” survey can be made to poll how things are going. To make a forecast about tomorrow’s weather, you plug these data into a sophisticated 3-dimensional model, which extrapolates the current conditions into the future, one small computation step at a time. It’s called a general circulation model, and it is the product of a century’s work in the scientific study of the atmosphere using the tools of classical mechanics, thermodynamics, and the behavior of gases and fluids. When you mix these theoretical ingredients with the data on a rotating, spherical surface heated by the Sun and connected to the oceans and land masses, the resulting atmospheric model helps the National Weather Service generate forecasts good enough to make the average person happy. The one-hour forecast is usually bang-on correct. The twenty-four-hour forecast is now routinely accurate in perhaps ninety-five attempts out of one hundred. The three-day forecast is usually right about seventy times out of one hundred, unless you live in Boston, where nothing works. Even the seven-day forecast is better than a coin toss in many localities. Weather forecasts are also more accurate the larger the area they apply to. For instance, you may not be able to predict the rainfall in Adams, Massachusetts, next Wednesday, but you can tell whether El Niño will make the entire East Coast of North America warmer or cooler by two degrees. With long-term climate models, you can even recover the global weather patterns for the spring of A.D. 769.
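The idea of extrapolating “one small computation step at a time” can be illustrated with a toy model that is far simpler than a general circulation model: a single weather feature carried along a ring of grid cells by a steady wind, advanced with a first-order upwind finite-difference scheme. Everything here is an illustrative assumption, including the grid and the Courant number (wind speed times time step divided by cell size).

```python
# Toy illustration of step-by-step numerical forecasting: a 1-D "weather
# feature" carried by a steady wind around a ring of grid cells (periodic
# boundaries), advanced with a first-order upwind finite-difference scheme.
# Real circulation models solve the full 3-D fluid equations; only the idea
# of marching forward in small time steps carries over.

def step_upwind(field: list[float], courant: float) -> list[float]:
    """Advance one time step; courant = wind_speed * dt / dx, must be in (0, 1]."""
    if not 0.0 < courant <= 1.0:
        raise ValueError("unstable: Courant number must be in (0, 1]")
    # field[i - 1] wraps to the last cell when i == 0 (periodic domain)
    return [field[i] - courant * (field[i] - field[i - 1]) for i in range(len(field))]

def forecast(field: list[float], courant: float, steps: int) -> list[float]:
    for _ in range(steps):
        field = step_upwind(field, courant)
    return field

initial = [0.0] * 10
initial[2] = 1.0  # a single "storm" in cell 2
print(forecast(initial, courant=1.0, steps=3))    # the storm has moved to cell 5
print(sum(forecast(initial, courant=0.5, steps=3)))  # total amount conserved: 1.0
```

With a Courant number of 1 the feature moves exactly one cell per step. Smaller values smear it out, which is a reminder that the numerical method itself adds errors a forecaster must keep in check.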

Now, suppose you only had a dozen weather stations across the globe, and every five or ten years you had to replace some of them at a cost of $150 million each. Suppose, too, that when you replace them you don’t put the new ones in the same locations or equip them with the same instruments. You also don’t get to make the measurements at the same time. Then, added to this, suppose that your forecasting model is still under development because you don’t know what all of the components that affect your weather happen to be. You don’t know how clouds move from place to place, or how the sunlight actually heats the gas, or just what it is that causes rain to form in a cloud. Welcome to the complexities of space weather forecasting:

Solar activity, between December 1–27, is expected to range from low to high levels. Frequent C-class flares are likely. Isolated M-class flares will be possible throughout the period. There are also chances for isolated major flares as potentially active regions 8765, 8766, and 8771 are due to return on December 7. There is a chance for a solar proton event at geosynchronous orbit when the above mentioned regions return starting on December 7. The greater than 2 MeV electron flux at geosynchronous altitude is expected to be at moderate to high levels during December 5–10 with normal to moderate levels during the remainder of the period. The geomagnetic field is expected to be at unsettled to minor storm levels during December 4–8 due to recurrent coronal hole effects. Otherwise, activity is expected to vary between quiet and unsettled levels barring any earth-directed coronal mass ejections (NOAA/SEC Weekly Highlights and Forecasts, December 1, 1999, 2112 UT)

By the 1980s, solar and geospace research had made a number of significant refinements to the best theoretical models of how the space weather system functions, thanks largely to the advent of powerful supercomputers and new data from dozens of interplanetary observatories and spacecraft. Every researcher could now afford a “workstation” that harnessed more computing power than most of the mainframe computers of the 1960s. What was dramatic about this new way of doing business was that researchers no longer had to take mathematical shortcuts that could compromise the accuracy of a theoretical prediction. Nearly photographic renderings of complex fields, plasma flows, and particle currents could be calculated and compared with satellite data as they were collected along the satellite’s actual orbit. Theorists were now hot on the trail of describing the detailed bumps and wiggles in satellite data, not just their overall shape. Because the calculations were based on “first principles” of physical science, they were powerful numerical testing grounds for our knowledge of the space environment. Glaring gaps in understanding tended to show up like a black eye, impelling theorists to improve their mathematical models still further. The art of modeling space weather systems had matured to the point that the crude averages used in the earlier AE-8 and AP-8 models, which NASA had developed during the 1970s, were no longer necessary or even desirable.

The next big challenge was to combine a number of separate mathematical models into one seamless, coherent, and self-consistent supermodel. The National Weather Service had long enjoyed the benefits of a general circulation model to predict the course of a hurricane or next Tuesday’s rainfall. What space weather forecasters needed was something very much like it. During the 1980s, researchers independently worked on their own theoretical approaches to space weather phenomena, each describing a specific detail of the larger system. In the 1990s, it was time to bring some of these pieces together. Here’s how it is meant to work, at least in principle:

In the new scheme of things, a solar surface “module” developed by one group of researchers would take a set of input conditions describing the solar surface and calculate the surface magnetic conditions of the Sun along with the various plasma interactions and flows. This information would be passed on to a solar wind, or CME, module developed by other groups, which would detail the transfer of matter and energy from the solar surface all the way out to the Earth’s orbit. At this point, you would have a forecast of whether the Sun was going to send a CME toward Earth or not.

The output from this solar wind module would then feed a geospace physics module, which would calculate the detailed response of the Earth’s magnetosphere, ring currents, and magnetotail conditions. Finally, there would be an upper atmosphere module that would take the output from the geospace physics module and calculate how the properties, currents, energy, and composition of the Earth’s exosphere-ionosphere-mesosphere system would be modified.
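The chain of modules described above can be sketched as a series of functions, each consuming the previous module’s output. Every name and physical relation below is a hypothetical placeholder: a constant-speed transit, the rectified speed-times-southward-field coupling, and an arbitrary storm threshold. None of it is code from a real space weather model. The point is only to show how the data flow from the Sun to the upper atmosphere, starting from the solar surface module’s output.

```python
from dataclasses import dataclass
from typing import Optional

AU_KM = 1.495978707e8  # mean Sun-Earth distance in km

@dataclass
class SolarEruption:
    """Hypothetical output of the solar surface module."""
    speed_km_s: float
    earth_directed: bool
    bz_nt: float  # north-south field carried by the ejecta, in nT

@dataclass
class SolarWindAtEarth:
    """Hypothetical output of the solar wind / CME module."""
    arrival_hours: float
    speed_km_s: float
    bz_nt: float

@dataclass
class GeospaceResponse:
    """Hypothetical output of the geospace physics module."""
    coupling_mv_m: float

def solar_wind_module(eruption: SolarEruption) -> Optional[SolarWindAtEarth]:
    if not eruption.earth_directed:
        return None  # the CME misses Earth; nothing to pass downstream
    hours = AU_KM / eruption.speed_km_s / 3600.0  # constant-speed transit (simplification)
    return SolarWindAtEarth(hours, eruption.speed_km_s, eruption.bz_nt)

def geospace_module(wind: SolarWindAtEarth) -> GeospaceResponse:
    southward = max(-wind.bz_nt, 0.0)  # only southward field couples strongly
    return GeospaceResponse(wind.speed_km_s * southward / 1000.0)  # mV/m

def upper_atmosphere_module(response: GeospaceResponse) -> str:
    return "disturbed" if response.coupling_mv_m > 5.0 else "quiet"  # arbitrary threshold

def run_chain(eruption: SolarEruption) -> str:
    """Pass the 'baton' from module to module."""
    wind = solar_wind_module(eruption)
    if wind is None:
        return "quiet"
    return upper_atmosphere_module(geospace_module(wind))

print(run_chain(SolarEruption(1000.0, True, -20.0)))   # disturbed
print(run_chain(SolarEruption(1000.0, True, +20.0)))   # quiet (northward field)
print(run_chain(SolarEruption(1000.0, False, -20.0)))  # quiet (misses Earth)
```

Because each module sees only the previous module’s output, one group’s module can in principle be swapped for a better one without rewriting the rest of the chain.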

Like a relay race in which a baton is passed from one research team to another, a disturbance on the Sun would be passed up the stack of modules until a specific consequence materialized in the geospace environment. Each of these steps would be updated in near–real time for a “Nowcast” or jumped forward five, ten, or forty-eight hours to make extended forecasts based on current conditions. At least, that was the hope. In reality, although the individual parts of the “car” were in hand, no single agency had the financial resources and scientific support to assemble them alone. The Department of Defense (DoD) might, for instance, have the best available model of the ionosphere, while the National Science Foundation (NSF) and NASA might have supported research to develop the best available solar atmosphere model. The knowledge had to be shared and interconnected before a meaningful forecast would be possible. That required the cooperation of scientists working in many disciplines under many different kinds of grants, across a number of different federal and private agencies.

Even though space environment effects have been known for decades, space weather forecasting is nearly as much an art as a science. By some accounts, we are forty years behind the National Weather Service in being able to detect or anticipate when a solar storm will actually impact the geospace environment and what it is likely to do when it arrives. Meanwhile, the Weather Service has benefited from two critical developments during this same time frame. Powerful “physics-based” programs have been created that run on supercomputers to track atmospheric disturbances from cradle to grave. This is possible because our theoretical understanding of what drives atmospheric disturbances has grown and deepened since 1950. The second factor is a functioning network of weather satellites, which actually watch the globe around the clock and have done so almost continuously since the early 1960s, when Tiros was first placed in orbit. All this atmospheric research and monitoring activity is supported by NOAA’s National Environmental Satellite, Data, and Information Service, which maintains a fleet of polar-orbiting and geosynchronous weather satellites to the tune of $368 million (FY 1997) a year. Some of these satellites, such as the GOES series, even carry space environment monitors. There is no comparable network of nonresearch satellites to keep track of space weather conditions.

Only in the last five years have scientists been able to put in place a ragtag collection of satellites capable of keeping constant, simultaneous watch on the solar surface, the solar wind, and the wind’s effects on the geospace environment. Although NASA has launched more than sixty research satellites since the early 1960s, studies of the space environment are still regarded as low-profile activities compared to planetary exploration and probing the deep universe. The need for a specific satellite is weighed entirely on its scientific and technological returns to NASA and the space science community, not on any benefit to NOAA or to commercial and military space weather applications. This attitude is very different from the one applied to weather satellites such as the Tiros, GOES, and NOAA series, which NASA launched but NOAA operates. There are dozens of these applications satellites orbiting the Earth, owned by non-NASA agencies such as NOAA, the Department of the Interior, and the Department of Defense, compared to a handful of working research satellites.

As the twentieth century drew to a close, nearly forty years after the start of the Space Age, members of the space science community thought it was a good time to start thinking about the big picture. So in 1993 they contacted the National Science Foundation. In response, NSF called a meeting of government, industry, and academic representatives to discuss what was going on in space weather research and what needed to be done. The federal coordinator for meteorology was assigned the task of organizing this huge program, which would take quite some effort to set in motion. By then it was obvious that, despite several decades of independent work by researchers in many agencies, much remained only partially complete when it came to a larger product such as a space weather forecasting model. As with tiling a floor, it is often easier to work at the center than along the complicated edges. But some invisible threshold had been crossed, and everyone agreed that the new National Space Weather Program (NSWP) would be worth the cost. According to NSWP, “The predominant driver of the program is the value of space weather forecasting services to the Nation. The accuracy, reliability, and timeliness of space weather specification and forecasting must become comparable to that of conventional weather forecasting.”

NSWP would have to work with such diverse federal agencies as NOAA, NSF, DoD, and NASA, each with long historical ties to different segments of the research community and its own needs for improved forecasting capability. The DoD, for example, has its own space weather service provided by the Air Force’s Fiftieth Weather Squadron in Colorado Springs, and it shares in operating the SEC at NOAA in Boulder. Its particular interest is how solar and geomagnetic storms affect the LORAN navigation system, Global Positioning System satellites, and other sensitive satellite real estate. The DoD had one of the best ionosphere models in the world, but it was understandably concerned about secrecy issues in simply handing over the model’s computer code and operating theory to the non-DoD community.

To start the ball rolling, NSF and the DoD made $1.3 million available in 1996 to augment space weather research in several key areas and promised to increase this amount each year. NSF added this new research directive to its Global Change Research Program through a new initiative called Geospace Environment Modeling. The outcome of this research would be a geospace general circulation model that would take solar wind conditions and forecast their consequences for the entire geospace region. A series of “campaigns,” begun in 1996, would support theoretical modeling grants for researchers to study the magnetotail, and how it causes substorms, as well as the inner magnetosphere and its ring currents. This sounds like a lot of money, but in reality nearly half of the $1.3 million per year disappears into various forms of institutional “overhead,” including phone bills, office space rental, and health benefits. Out of hundreds of space scientists, only a few dozen can be supported each year on this kind of budget to do the herculean job of building this mammoth space weather modeling system. Still, it was a far cry from no support at all! By FY 1999, this amount had increased to $2 million, and NSF was hoping to use it to support twenty to thirty scientists at $50,000 to $100,000 per year, including overhead costs.

NASA already supported much of this activity through its Office of Space Science, which handles Sun-Earth Connection research. NASA’s role in space science has by no means been inconsequential. Since 1958 it has built and launched over sixty solar and space physics research satellites at the behest of the space science community. With Congressional approval, NASA created satellite programs such as Explorer and MIDEX, which paid teams of researchers to build the instruments and the satellites. NASA then launched these payloads and afterward provided all the satellite tracking and data archiving services for the duration of the funded mission. Each mission has a budget for Mission Operations and Data Analysis (MO&DA) from which it supports its own investigators to work with the satellite data. NASA also hires its own permanent staff of space scientists to support the archiving activities and to make modest improvements to the format of the data so that the space science community can work with it more efficiently. Ironically, NASA space scientists and mission scientists cannot apply to the National Science Foundation to support their research. NSF does not support space research using NASA resources; it considers any research involving space or satellite data something that NASA should support. Moreover, NASA rarely supports astronomers to carry out ground-based research involving telescopes. NASA “Civil Service” scientists, meanwhile, can only conduct research that enhances the value of the satellite data. Although mission space scientists are sometimes offered permanent jobs with NASA when no hiring freezes are in effect, they usually return to academia or industry and continue their research, sometimes by obtaining both NSF grants and NASA research grants.

Beginning in 1996, NASA’s Office of Space Science tried to set up a Quantitative Magnetospheric Predictions Program that was supposed to result in a comprehensive magnetospheric model. The model would rely on solar wind data provided by NASA’s own research satellites, such as WIND and ACE, and from these compute the consequences for the complete system. It was a promising, exciting, and timely new program, but the idea never became a funded NASA program. The message from Congress, and from NASA, to the scientific community was that NASA had already done its fair share of contributing to the National Space Weather Program just by providing the research community with satellites and data. Any work that NASA’s space scientists did with the archived data would have to focus on providing “value-added” information, not on producing a major product such as a new forecasting model. At the request of the non-NASA research community, NASA had put into place a virtual armada of solar and space physics research satellites, and NASA was very happy to supply non-NASA modelers with all the data they needed. After forty years, there was a lot of data to go around.

At the NASA Goddard Space Flight Center, Building 28 is tucked away in a little-traveled part of the campus. Deer frequently come out onto the front lawn to graze, keeping a wary eye out for passing scientists. The 1990s-vintage architecture hides a virtual rabbit’s warren of offices and cubicles, each with its own occupant hunched over a computer terminal or reading the latest journal. It is also the home of the National Space Science Data Center, a massive electronic archive of all the data obtained by NASA satellites since the early years of space exploration. Some 395 satellites have contributed 4,400 data sets, a staggering 15 terabytes of data that grows by 100 gigabytes each month. There are also 500,000 film images from the manned space program, and hundreds of movies and videos.

Sophisticated, interactive programs such as the Coordinated Data Analysis Web (CDAWeb) let scientists extract specific measurements of dozens of different physical properties that define space weather conditions throughout the solar system. You can do this too, if you visit their Internet page! Would you like to see what the solar wind magnetic field was like on January 1, 2000? Enter the date, select the magnetic parameter, and in a few seconds you will get a plot of magnetic field directions from the ACE or WIND satellites. A little more of this data mining quickly reveals a problem. There are big gaps, in both space and time, in the data available for any given parameter, because satellites and their instruments have not been flying at the same time to perform coordinated studies of specific phenomena. This lack of coordinated observations began to change in the early 1990s with the International Solar-Terrestrial Program: ISTP.

This $2.5 billion program, inaugurated in 1994, spent the vast majority of its money building four key satellites and supporting the engineers and ground crews who keep round-the-clock vigils on spacecraft functions and telemetry. The Solar and Heliospheric Observatory (SOHO) monitors the Sun at optical and ultraviolet wavelengths to catch CMEs and keep watch on active regions. WIND measures the solar wind speed and magnetic field strength at the L1 Lagrange point, sunward of the Earth. Next in line is the Geotail satellite, whose complex orbit lets it measure activity in the Earth’s magnetotail, watching for changes that herald the onset of geomagnetic substorms. Last, the POLAR satellite looks at the polar regions of the Earth to keep watch on changes in auroral activity.

In principle, this fleet of satellites can study the cradle-to-grave growth of solar disturbances and track them through a series of satellite handoffs all the way from the solar surface to the auroral belt. The ISTP network has only been in place since 1996, which means that it hasn’t been “on the air” long enough to examine a representative number of solar storm events. In fact, it started its campaign during sunspot minimum when not much was going on at all. Although in 1998 there were some plans to stop funding ISTP at NASA, by 2000 this prospect seems to have vanished, and NASA is now committed to fully funding the ISTP program at least until the satellites themselves begin to fail. SOHO and POLAR have already had their share of technical problems, and SOHO was nearly lost for good during the summer of 1998. Although the funding now seems to be stable, there are real concerns that the satellites themselves will not survive much beyond the peak of Cycle 23, a critical period for catching the Sun at its worst.

Since ISTP became operational, NASA has also provided an array of other satellites beyond the ISTP constellation as new technology and scientific interests arose. By 1998, the Sun, the wind from the Sun, and the geospace environment had come under round-the-clock surveillance by a newer generation of satellites. None of these missions, however, has carte blanche to do more than a modest amount of research with its data before archiving it for posterity.

The Advanced Composition Explorer (ACE) satellite, launched in 1997, monitors the minute-to-minute changes in the solar wind magnetic field and composition. This $160 million mission hopes to retain NASA funding until its steering gases run out in 2006. Despite the many, and growing, practical benefits of keeping this satellite operational until the end of Cycle 23, it faces stiff competition from other planned research satellite programs to continue operating beyond 2001. NASA and the space community are less interested in the practical benefits of a satellite than in a steady stream of fundamental insights about space physics processes. The predecessor to ACE, called ISEE-3 and launched in 1978, ran into similar difficulties. NOAA and the DoD wanted this satellite to remain at L1 to continue providing real-time solar wind data for space weather forecasting. NASA, at the urging of its science advisory board, yanked it out of this location so that it could fly by Comet Giacobini-Zinner in 1985. The Air Force made it quite clear to NASA that ISEE-3 was needed for practical purposes, but NASA had to listen to the science community that sponsored the mission, which wanted to “explore” and make a flyby of a comet before Halley’s Comet arrived. ACE currently costs $5 million each year to maintain the satellite and to fund research scientists to work with, and archive, the data. Again, NOAA and the Department of Defense, unwilling or unable to secure the funds themselves, rely on NASA to develop and launch satellites, like ACE, to help with their space weather forecasting.

The Transition Region and Coronal Explorer (TRACE) satellite, launched in 1998, uses high-resolution imaging to reveal fine magnetic details on the solar surface that older satellites such as Yohkoh could not detect clearly. The promise of better advance warning for CMEs, and especially for solar flares, may be realized through the crystal-clear images this satellite returns of magnetic field structures on the solar surface. Even grade school students will study these dramatic images to learn about solar magnetism. The $150 million mission will last until 2003, with no replacement currently planned to continue the exploration of the solar magnetic “fine structure.”

The exciting prospect of actually imaging CMEs as they travel from the Sun will become a reality in 2001 with the launch of the Solar Mass Ejection Imager (SMEI). This satellite, developed by the U.S. Air Force’s Battlespace Environment Division at the Air Force Research Laboratory, will measure sunlight scattered by electrons within a CME and create movies of incoming CMEs. Extensive studies by Bernard Jackson, the University of California, San Diego, coinvestigator on the SMEI mission, have already demonstrated how well this technique works, using data from the HELIOS satellite in 1977 and radio-wavelength data from ground-based telescopes. As a forerunner of the next generation of CME imagers, it will almost completely take the guesswork out of predicting which CMEs, out of the several thousand the Sun produces every sunspot cycle, will actually collide with the Earth.

Closer to home, the geospace environment will not be left out of this onrush of investigation. The $83 million Imager for Magnetopause-to-Aurora Global Exploration (IMAGE), launched in March 2000, provides images of nearly the entire geospace region to keep track of the movements of charged particles and their currents. Previous generations of satellites measured space weather conditions only where they happened to be located. This is like trying to track a hurricane using only scattered weather stations in Florida and South Carolina. IMAGE replaces this kind of point-by-point sampling by imaging nearly the entire contents of the magnetospheric cavity. This will revolutionize the study of the magnetosphere in the same way that the first weather satellites revolutionized meteorology by photographing and tracking hurricanes from space. Like conventional weather satellites, IMAGE delivers images, updated every five minutes, of the global pattern of plasmas from the magnetopause all the way down to the auroral region. For the first time, space physicists will be able to “see” the flows and changes in these systems of particles that previous satellites could only hint at. The satellite’s prime mission lasts two years, with a much-hoped-for extension until 2004, assuming that the space science community continues to see the satellite as actively contributing to magnetospheric research. IMAGE scientists hope to learn how high-energy particles circulate and are stored in the magnetosphere, which will tell space scientists how long energetic particles linger there. In practical terms, it may also illuminate why satellites such as Galaxy IV, DBS-1, and others sometimes seem to run into trouble long after a space weather event has seemingly passed us by.

In the first decade of the twenty-first century, a new series of NASA satellites, such as STEREO, the Global Electrodynamics Connections mission, and the Magnetosphere Multi-Scale Mission, will replace the current fleet. This ever-changing game of musical chairs will continue as older satellites run out of fuel or funding and are replaced by newer, more capable satellites designed to explore new issues in the Sun-Earth system.

After the ISTP program disbands as its satellites, one by one, fall out of service from old age, what new program will take its place to coordinate another assault on the space weather issue? The current suite of satellites is mostly a series of independent efforts led by investigators studying specific issues, and there is only a rudimentary attempt at coordinating the observations. In some cases coordination is not possible because, for example, a satellite like IMAGE may not live long enough to be on the scene when the STEREO satellites begin taking their data. IMAGE will rely on what is hoped to be a one- to two-year overlap with SOHO and ACE to provide data on the external, interplanetary environment that sets in motion the geomagnetic events IMAGE hopes to investigate. But the key problem is that there is not enough research money outside the satellite operating budget to support scientists in making sense of what they observe. To make matters worse, the part of a mission’s budget that is set aside for research, MO&DA, has often been raided during construction of the satellite to cover cost overruns. One solution would be for NASA to create a program, with more money to go around, that supports both new satellites and enhanced MO&DA activities. In 1996, NASA attempted to create the Quantitative Magnetospheric Predictions Program and ISTP. Although the former program did not survive as a new start, ISTP succeeded spectacularly and provided a coordinated investigation of solar activity during the first half of Cycle 23. In 1999, NASA proposed another program to take over from ISTP and to further coordinate space research activities.

The ultimate output of this campaign would be the observational specifications for an operational space weather system and the models to apply to the data to produce accurate and reliable forecasts over the timescales required to be beneficial to humanity’s space endeavors. (NASA, SEC 2000 Roadmap, p. 96)

Every three years, federal agencies are required to develop strategic plans to serve as a basis for governmental policies and strategic planning. In January 2000, George Withbroe, the director of NASA’s Office of Space Science, together with a team of twenty-eight experts, produced the “Sun-Earth Connections 2000 Roadmap.” A significant feature of this document is its renewed emphasis on improving our space weather forecasting ability and on providing the satellite resources to keep constant watch on the Sun through the year 2025. Withbroe’s new program, which he calls Living with a Star, embodies the new strategic plan and would nearly double the $250 million that NASA spends each year on solar and geospace research. With the backing of his advisers from the space science community, he envisions a new suite of satellites, to be built in the first decade of the new millennium, that will take over from the aging ISTP program and cover the next solar cycle: Cycle 24.

In August 1999, following an unusually lengthy meeting at NASA Headquarters with NASA’s administrator, Daniel Goldin, Withbroe received the go-ahead to set up such a program, and since then he has been presenting his plan to the scientific community to galvanize support for it. Apparently, it wasn’t the detailed science or the heroic dreams of solar physicists that caught Goldin’s attention. Instead, it was an issue that, in the post-Challenger NASA, has become a critical ingredient of every scientific program NASA administers: safety. Astronauts can, and will, be affected in a measurable way by radiation exposure. Even though the Occupational Safety and Health Administration and NASA have agreed upon an annual limit of 50 rem for astronauts, in today’s radiation-averse society even this much (equal to thousands of chest X rays) seems an unacceptable health risk. Some solar flares can deliver far more than this dose to a spacesuited astronaut. In a press release issued on December 10, 1999, the National Research Council also urged NASA to carefully monitor its astronauts for radiation exposure and to support programs that will enhance our ability to forecast solar storms. Newspapers such as USA Today carried the story, originally covered by the Associated Press, under the headline “Radiation Alert”: “[The NRC] warned that astronauts might receive doses of radiation equal to several hundred chest X-rays from solar flares during planned space construction.”
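The comparison between the astronaut dose limit and chest X rays is simple division. The sketch below assumes a typical chest X-ray dose of about 10 millirem (0.1 mSv); that per-X-ray figure is an assumption for illustration, not a number given in this chapter, and real doses vary with equipment and technique.

```python
# Rough conversion of a radiation dose into "chest X-ray equivalents".
# ASSUMPTION: one chest X-ray delivers about 10 millirem (0.1 mSv).
# This is an illustrative typical value; actual doses vary widely.

MREM_PER_REM = 1000      # 1 rem = 1,000 millirem
CHEST_XRAY_MREM = 10     # assumed dose per chest X-ray, in millirem


def chest_xray_equivalents(dose_rem: float) -> float:
    """Return how many (assumed) chest X-rays deliver the same dose."""
    return dose_rem * MREM_PER_REM / CHEST_XRAY_MREM


# The 50 rem annual astronaut limit quoted in the text:
print(chest_xray_equivalents(50))  # 5000.0
```

Under this assumption the 50 rem limit works out to roughly five thousand chest X rays, in line with the “thousands” mentioned in the text; a different per-X-ray dose scales the count proportionally.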

Although Living with a Star is an exciting new program with profound implications for space weather forecasting, it still has to meet the challenges of another, even larger program: “Living with the Congress.” NASA may recommend a “new start” program requiring a new “budget line” in NASA’s annual budget, but it literally takes an act of Congress to make it happen. Although we enter the new millennium with federal budget surpluses of over $200 billion each year, NASA’s own budgets are projected to be extremely flat for the foreseeable future, making it very difficult to shake loose the money needed for a new program. Coming as it does as a newly proposed expense for NASA during an election-year congressional budget debate in 2000, the odds seem pretty slim that Living with a Star will reach ignition temperature. Nevertheless, rumor has it that sometime in late 1998, while NASA was testifying before Congress, the question of what NASA was doing about space weather came up during discussion of NASA’s planned FY 1999 budget. If true, this could be a watershed moment for the future of this entire enterprise at NASA and a promising sign that its time has, at last, arrived.

Solar storms are dramatic changes in our solar system that are the result of solar activity. The ground doesn’t shake, and the sky does not turn black when a solar storm strikes the Earth. . . . Because solar storms attack the very foundation of our high-tech society, scientists are excited to find that satellite data will help them predict solar storms and mitigate their impact on Earth. (“Our Sun: A Look Under the Hood,” NASA Facts)

Living with a Star is more than just another program to benefit NASA and the academic space science community. One of its major beneficiaries will be the Space Environment Center in Boulder, Colorado, in the same way that the U.S. Weather Service benefited from the atmospheric research spurred on by the new satellite data NASA provided in the 1960s. The mission of the SEC is to conduct research on solar-terrestrial physics, develop techniques for forecasting geophysical disturbances, and provide real-time monitoring of solar and geophysical events. The fifty-five employees who work there, on a $5 million annual budget, issue daily forecasts to a long, and in many cases confidential, list of clients including the U.S. military and commercial satellite owners. Whether you are a Global Positioning System (GPS) user, a geologist prospecting for minerals, or even a pigeon racer, you may find yourself in need of one of these forecasts to avoid bad conditions that could cost you time and money or get you lost. The SEC’s modest annual budget is insufficient to build its own space weather satellites. It also seems an astonishingly small investment, given that over $110 billion in satellite real estate and hundreds of billions of dollars of Gross Domestic Product can be affected by space weather events.

This promise of substantially improving our space weather forecasting capability, both scientifically and practically, may be stillborn as Congress wrangles over a seemingly technical issue. In May 2000, the House of Representatives deleted NASA’s fledgling Living with a Star program from the Fiscal Year 2001 budget. Representatives were concerned that the program sounded too much like an active space weather forecasting system of its own and not a pure research investigation. NASA’s mission is to do research, not to delve into practical applications. In its defense, NASA replied that Congress was giving the agency mixed messages by also instructing it to foster more applications-oriented commercial research. Living with a Star was supposed to be the vanguard of this new wave of thinking. It is hoped that funding for the program will be reinstated by the Senate and survive the August–September congressional budget negotiations to follow. During the 2000 election year, there are many pressures on Congress, and space weather issues may seem far too theoretical and abstract to carry the necessary political support. Still, we have to remain hopeful, because the cost of doing nothing grows more unacceptable with each passing solar cycle.

Because of a lack of data, and of a steady stream of it that scientists can count on over time, our understanding of space weather is still primitive. We cannot anticipate so much as a day in advance which solar region will spawn a solar flare or a coronal mass ejection. We cannot anticipate the properties of a CME with any reliability until it reaches one of NASA’s aging sentry satellites (ACE, WIND, SOHO) near L1, one million miles from the Earth. This gives us barely thirty minutes to recognize that a problem is on the way. Satellites such as POLAR, IMP-8, and Geotail patrol geospace but cannot be everywhere at once to give us even a ten-second warning. With resolutions measured in thousands of miles, we cannot anticipate how the geospace environment will respond to a storm at a level of detail that is useful for a specific military or commercial satellite. Instead, many spacecraft designers have to rely on statistical models of the geospace environment that are thirty years old. This is like trying to predict tomorrow’s rainfall in New York City using data from the same day of the year averaged between 1960 and 1970.
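The warning time from an L1 sentry is roughly the distance to the Earth divided by the speed of the incoming disturbance. The sketch below assumes the text’s round figure of one million miles and a constant speed over that distance; real CMEs speed up or slow down in transit, so this is only an order-of-magnitude estimate, and the function name is hypothetical.

```python
# Rough warning time provided by a solar wind monitor near L1.
# ASSUMPTIONS: monitor ~1,000,000 miles sunward of Earth (the text's round
# figure) and a disturbance moving at constant speed from L1 to Earth.

L1_DISTANCE_MILES = 1_000_000


def warning_minutes(speed_mph: float,
                    distance_miles: float = L1_DISTANCE_MILES) -> float:
    """Minutes between a disturbance passing the monitor and reaching Earth."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return distance_miles / speed_mph * 60.0


# Compare a typical fast disturbance with the fastest streams mentioned
# in the NOAA outlook at the head of this chapter.
for speed in (1_000_000, 2_000_000):
    print(f"{speed:,} mph -> {warning_minutes(speed):.0f} minutes")
# 1,000,000 mph -> 60 minutes
# 2,000,000 mph -> 30 minutes
```

At the nearly two million miles per hour reported for fast solar wind streams, the warning shrinks to about thirty minutes; slower disturbances give proportionally more time.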

It isn’t just the satellite industry and NASA’s manned space program that will benefit from the next generation of forecasting tools provided by Living with a Star and the National Space Weather Program. The third leg of this particular stool is the electrical power industry. Progress in this area has been difficult because of the widespread opinion that electrical power emergencies caused by adverse space weather are so infrequent that they can be ignored. In fact, this is not the case at all, as we discovered in Chapter 4. Every time there is a geomagnetic storm with a severity of Kp = 6 (a G2 event), electrical utility companies in the northern-tier states experience strong GICs that trip some of their protection systems and require manual intervention to reset them. When Kp reaches 7 or 8 during G3 and G4 events, dozens of these temporary interruptions sweep across the electrical grids of Japan, North America, Scandinavia, and Great Britain. When Kp reaches 9 (an extreme G5 event), as it does at least once every solar cycle, hundreds of equipment failures sweep across North America and Europe in a matter of a few minutes. Depending on the time of year and the amount of operating margin available, blackouts become an expensive and public reality.

PEPCO, VEPCO, and BG&E, despite their locations in regions that are usually not greatly at risk from geomagnetic storms, are no strangers to outages. A January 1999 ice storm cut off light and heat for over four hundred thousand people in Maryland, Virginia, and Washington, D.C., for up to five days. Although it was not widely reported, it was a major hardship for many residents of the Washington, D.C., area. The electrical utilities came under constant, unrelenting attack from private citizens and the media to restore service. One street waited in vain for two days while the lights on the surrounding streets were quickly brought back on. The bad press and harsh feelings directed at the electrical companies undid years of hard work by the utilities to portray themselves as “friends.” It was not surprising, then, that these same utilities appeared at a conference in Washington, D.C., where Bill Feero rolled out his Sunburst system and asked them for support. Even rare geomagnetic events could throw their customers into a frenzy, and the few thousand dollars for the Sunburst system seemed like a bargain.

Meanwhile, an electrical utility company running John Kappenman’s PowerCast system can look at any line, transformer, or other component in its system and immediately read out just what it will do when the solar wind hits the Earth traveling at a million miles per hour. With thirty minutes or more of warning, it is now possible to put a variety of countermeasures into action to protect the grid from failure. PowerCast is already operational in Great Britain, where it has been used for several years to improve the reliability of the national power grid. Entry into the North American utility market has been sluggish because, at a cost of a few thousand dollars per month, many utility managers still do not see it as a high-priority investment, given how infrequent space weather disruptions are.

Ironically, the ACE satellite seems constantly on the verge of cancellation by NASA to make way for newer missions. The fact that ACE data play such a vital role in GIC forecasting for the power industry seems to be of no special interest to NASA. NASA is, after all, a research organization supported by the U.S. taxpayer, not a for-profit corporation looking for commercialization opportunities. The viability of the ACE mission at NASA hinges entirely on its scientific returns, not on its potential for practical applications. NASA also has to make room for future missions within the declining, and politically vulnerable, space research budgets that the U.S. Congress, in its wisdom, has mandated. In England, which uses the PowerCast technology, ACE is seen as an ally in keeping the entire multibillion-dollar electrical utility system operating reliably. Rutherford Appleton Laboratory has invested in its own independent ACE tracking station to intercept the solar wind data. Arslan Erinmez, chief engineer at the National Grid Company in England, notes that “The British power industry would be happy to do anything it can to keep ACE going.” While the destiny of satellites such as ACE turns entirely on how well their scientists can convince NASA and Congress not to terminate them, their politically silent commercial clients, both domestic and foreign, continue to mine the data to help the power industry keep your electricity flowing.

NASA, and the space scientists who advise the agency, are not interested in building a follow-on satellite to ACE just to supply private industry with a forecasting tool, unless it can be justified solely on the scientific grounds of advancing our understanding. Even so, any prospective follow-on to ACE, such as the Triana satellite, will have to compete with astronomy missions such as the Next Generation Space Telescope to secure its funding, and with MAP, AXAF, and the Hubble Space Telescope to maintain its year-to-year operating budget. NASA has been forced into a zero-sum, or even declining, fiscal game by Congress at a time when space research has exploded into new areas and possibilities. Whether the power industry gets a GIC forecasting tool to keep Boston’s lights on, or NOAA’s Space Environment Center can help satellite owners prevent another major communications satellite outage, hinges on whether NASA deems investigating quasars and the nature of dark matter more important than studying the physics of solar magnetic field reconnection.