3

LOGIC AND CRITICAL THINKING

This modern type of the general reader may be known in conversation by the cordiality with which he assents to indistinct, blurred statements: say that black is black, he will shake his head and hardly think it; say that black is not so very black, he will reply, “Exactly.” He has no hesitation . . . to get up at a public meeting and express his conviction that at times, and within certain limits, the radii of a circle have a tendency to be equal; but, on the other hand, he would urge that the spirit of geometry may be carried a little too far.

—George Eliot1

In the previous chapter, we asked why humans seem to be driven by what Mr. Spock called “foolish emotions.” In this one we’ll look at their irritating “illogic.” The chapter is about logic, not in the loose sense of rationality itself but in the technical sense of inferring true statements (conclusions) from other true statements (premises). From the statements “All women are mortal” and “Xanthippe is a woman,” for example, we can deduce “Xanthippe is mortal.”

Deductive logic is a potent tool despite the fact that it can only draw out conclusions that are already contained in the premises (unlike inductive logic, the topic of chapter 5, which guides us in generalizing from evidence). Since people agree on many propositions—all women are mortal, the square of eight is sixty-four, rocks fall down and not up, murder is wrong—the goal of arriving at new, less obvious propositions is one we can all embrace. A tool with such power allows us to discover new truths about the world from the comfort of our armchairs, and to resolve disputes about the many things people don’t agree on. The philosopher Gottfried Wilhelm Leibniz (1646–1716) fantasized that logic could bring about an epistemic utopia:

The only way to rectify our reasonings is to make them as tangible as those of the Mathematicians, so that we can find our error at a glance, and when there are disputes among persons, we can simply say: Let us calculate, without further ado, to see who is right.2

You may have noticed that three centuries later we are still not resolving disputes by saying “Let us calculate.” This chapter will explain why. One reason is that logic can be really hard, even for logicians, and it’s easy to misapply the rules, leading to “formal fallacies.” Another is that people often don’t even try to play by the rules, and commit “informal fallacies.” The goal of exposing these fallacies and coaxing people into renouncing them is called critical thinking. But a major reason we don’t just calculate without further ado is that logic, like other normative models of rationality, is a tool that is suitable for seeking certain goals with certain kinds of knowledge, and it is unhelpful with others.

Formal Logic and Formal Fallacies

Logic is called “formal” because it deals not with the contents of statements but with their forms—the way they are assembled out of subjects, predicates, and logical words like and, or, not, all, some, if, and then.3 Often we apply logic to statements whose content we care about, such as “The President of the United States shall be removed from office on impeachment for, and conviction of, treason, bribery, or other high crimes and misdemeanors.” We deduce that for a president to be removed, he must be not only impeached but also convicted, and that he need not be convicted of both treason and bribery; one is enough. But the laws of logic are general-purpose: they apply whether the content is topical, obscure, or even nonsensical. It was this point, and not mere whimsy, that led Lewis Carroll to create the “sillygisms” in his 1896 Symbolic Logic textbook, many of which are still used in logic courses today. For example, from the premises “A lame puppy would not say ‘thank you’ if you offered to lend it a skipping-rope” and “You offered to lend the lame puppy a skipping-rope,” one may deduce “The puppy did not say ‘thank you.’ ”4

Systems of logic are formalized as rules that allow one to deduce new statements from old statements by replacing some strings of symbols with others. The most elementary is called propositional calculus. Calculus is Latin for “pebble,” and the term reminds us that logic consists of manipulating symbols mechanically, without pondering their content. Simple sentences are reduced to variables, like P and Q, which are assigned a truth value, true or false. Complex statements can be formed out of simple ones with the logical connectors and, or, not, and if-then.

You don’t even have to know what the connector words mean in English. Their meaning consists only of rules that tell you whether a complex statement is true depending on whether the simple statements inside it are true. Those rules are stipulated in truth tables. The first one, which defines and, can be interpreted line by line, like this: When P is true and Q is true, that means “P and Q” is true. When P is true and Q is false, that means “P and Q” is false. When P is false . . . and so on for the last two lines.

| P | Q | P and Q |
|---|---|---|
| true | true | true |
| true | false | false |
| false | true | false |
| false | false | false |

| P | Q | P or Q |
|---|---|---|
| true | true | true |
| true | false | true |
| false | true | true |
| false | false | false |

| P | not P |
|---|---|
| true | false |
| false | true |
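The mechanical character of these tables can be made concrete with a short program. What follows is a minimal Python sketch, not part of the original text: the names `CONNECTIVES`, `truth_table`, and `negation_table` are illustrative choices, and Python's built-in `and`, `or`, and `not` are assumed to stand in for the logical connectors.

```python
from itertools import product

# Each connective is just a function from truth values to a truth value.
# (Names are illustrative, not taken from the text.)
CONNECTIVES = {
    "P and Q": lambda p, q: p and q,
    "P or Q": lambda p, q: p or q,
}


def truth_table(fn):
    """Return rows (P, Q, result) in the order used in the text: TT, TF, FT, FF."""
    return [(p, q, fn(p, q)) for p, q in product([True, False], repeat=2)]


def negation_table():
    """Return rows (P, not P)."""
    return [(p, not p) for p in (True, False)]


for name, fn in CONNECTIVES.items():
    print(f"{'P':<6} {'Q':<6} {name}")
    for p, q, result in truth_table(fn):
        print(f"{p!s:<6} {q!s:<6} {result}")
    print()

print(f"{'P':<6} not P")
for p, negated in negation_table():
    print(f"{p!s:<6} {negated}")
```

Nothing in the program consults what P or Q are about; it only pushes truth values through the rules, which is the sense in which the calculus is "formal."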

Let’s take an example. In the meet-cute opening of the 1970 romantic tragedy Love Story, Jennifer Cavilleri explains to fellow Harvard student Oliver Barrett IV, whom she condescendingly calls Preppy, why she assumes he went to prep school: “You look stupid and rich.” Let’s label “Oliver is stupid” as P and “Oliver is rich” as Q. The first line of the truth table for and lays out the simple facts which have to be true for her conjunctive putdown to be true: that he’s stupid, and that he’s rich. He protests (not entirely honestly), “Actually, I’m smart and poor.” Let’s assume that “smart” means “not stupid” and “poor” means “not rich.” We understand that Oliver is contradicting her by invoking the fourth line in the truth table: if he’s not stupid and he’s not rich, then he’s not “stupid and rich.” If all he wanted to do was contradict her, he could also have said, “Actually, I’m stupid and poor” (line 2) or “Actually, I’m smart and rich” (line 3). As it happens, Oliver is lying; he is not poor, which means that it’s false for him to say he is “smart and poor.”

Jenny replies, truthfully, “No, I’m smart and poor.” Suppose we draw the cynical inference invited by the script that “Harvard students are rich or smart.” This inference is not a deduction but an induction (a fallible generalization from observation), but let’s put aside how we got to that statement and look at the statement itself, asking what would make it true. It is a disjunction, a statement with an or, and it may be verified by plugging our knowledge about the lovers-to-be into the truth table for or (the second table), with P as “rich” and Q as “smart.” Jenny is smart, even if she is not rich (line 3), and Oliver is rich, though he may or may not be smart (line 1 or 2), so the disjunctive statement about Harvard students, at least as it concerns these two, is true.
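The same lookup can be sketched in a few lines of Python. This is an illustration under stated assumptions, not anything in the film or the original text: the dictionary `students` and the helper names are hypothetical, and Oliver's intelligence is recorded as unknown (`None`) because the scene leaves it open.

```python
# Facts as the scene presents them. Oliver's intelligence is left open,
# so it is recorded as None ("unknown") rather than guessed.
students = {
    "Oliver": {"rich": True, "smart": None},
    "Jenny": {"rich": False, "smart": True},
}


def rich_or_smart(rich, smart):
    """Inclusive disjunction: true unless both disjuncts are false."""
    return rich or smart


def holds_in_every_case(facts):
    """Check the disjunction under each possible value of an unknown fact."""
    smart_options = [True, False] if facts["smart"] is None else [facts["smart"]]
    return all(rich_or_smart(facts["rich"], smart) for smart in smart_options)


results = {name: holds_in_every_case(facts) for name, facts in students.items()}
print(results)
```

Because Oliver is rich, the disjunction comes out true for him whichever way his intelligence is settled, which is why both line 1 and line 2 of the table serve.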

The badinage continues:

oliver: What makes you so smart?

jenny: I wouldn’t go for coffee with you.

oliver: I wouldn’t ask you.

jenny: That’s what makes you stupid.

Let’s fill out Jenny’s answer as “If you asked me to have coffee, I would say ‘no.’ ” Based on what we have been told, is the statement true? It is a conditional, a statement formed with an if (the antecedent) and a then (the consequent). What is its truth table? Recall from the Wason selection task (chapter 1) that the only way for “If P then Q” to be false is if P is true while Q is false. (“If a letter is labeled Express, it must have a ten-dollar stamp” means there can’t be any Express letters without a ten-dollar stamp.) Here’s the table:

| P | Q | If P then Q |
|---|---|---|
| true | true | true |
| true | false | false |
| false | true | true |
| false | false | true |

If we take the students at their word, Oliver would not ask her. In other words, P is false, which in turn means that Jenny’s if-then statement is true (lines 3 and 4, third column). The truth table implies that her actual RSVP is irrelevant: as long as Oliver never asks, she’s telling the truth. Now, as the flirtatious scene-closer suggests, Oliver eventually does ask her (P switches from false to true), and she does accept (Q is false). This means that her conditional If P then Q was false, as playful banter often is.
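To watch the table at work on the coffee invitation, here is a small Python sketch. It is only an illustration: the function name `implies` and the variable names are assumptions of the example, and the conditional is encoded as `(not p) or q`, which matches the four rows above.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


# Rows in the same order as the table: TT, TF, FT, FF.
conditional_table = [(p, q, implies(p, q)) for p, q in product([True, False], repeat=2)]

# P = "Oliver asks Jenny to coffee", Q = "Jenny says no".
# While Oliver never asks, P is false and Q can be anything.
while_oliver_never_asks = [implies(False, q) for q in (True, False)]

# Later he asks (P true) and she accepts (Q false).
after_he_asks_and_she_accepts = implies(True, False)

for row in conditional_table:
    print(row)
print(while_oliver_never_asks)
print(after_he_asks_and_she_accepts)
```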

The logical surprise we have just encountered—that as long as the antecedent of a conditional is false, the entire conditional is true (as long as Oliver never asks, she is telling the truth)—exposes a way in which a conditional in logic differs from a statement with an “if” and a “then” in ordinary conversation. Generally, we use a conditional to refer to a warranted prediction based on a testable causal law, like “If you drink coffee, you will stay awake.” We aren’t satisfied to judge the conditional as true just because it was never tested, like “If you drink turnip juice, you will stay awake,” which would be logically true if you never drank turnip juice. We want there to be grounds for believing that in the counterfactual situations in which P is true (you do drink turnip juice), not Q (you fall asleep) would not happen. When the antecedent of a conditional is known to be false or necessarily false, we’re tempted to say that the conditional was moot or irrelevant or speculative or even meaningless, not that it is true. But in the logical sense stipulated in the truth table, in which If P then Q is just a synonym for not [P and not Q], that is the strange outcome: “If pigs had wings, then 2 + 2 = 5” is true, and so is “If 2 + 2 = 3, then 2 + 2 = 5.” For this reason, logicians use a technical term to refer to the conditional in the truth-table sense, calling it a “material conditional.”
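The claim that If P then Q is a synonym for not [P and not Q] can be checked by brute force over the four rows. The Python sketch below is illustrative only; `implies` and `no_p_without_q` are names invented for the example.

```python
from itertools import product


def implies(p, q):
    """Material conditional as defined by its truth table."""
    return (not p) or q


def no_p_without_q(p, q):
    """The paraphrase from the text: not [P and not Q]."""
    return not (p and not q)


# The two formulations agree on every assignment of truth values.
same_on_every_row = all(
    implies(p, q) == no_p_without_q(p, q)
    for p, q in product([True, False], repeat=2)
)

# A false antecedent makes the whole conditional true, whatever the consequent.
arithmetic_example = implies(2 + 2 == 3, 2 + 2 == 5)

print(same_on_every_row)
print(arithmetic_example)
```

The second result is the strange outcome described above: the program judges “If 2 + 2 = 3, then 2 + 2 = 5” true simply because its antecedent is false.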

Here is a real-life example of why the difference matters. Suppose we want to score pundits on the accuracy of their predictions. How should we evaluate the conditional prediction, made in 2008, “If Sarah Palin were to become president, she would outlaw all abortions”? Does the pundit get credit because the statement is, logically speaking, true? Or should it not count either way? In the real forecasting competition from which the example was drawn, the scorers had to decide what to do about such predictions, and decided not to count it as a true prediction: they chose to interpret the conditional in its everyday sense, not as a material conditional in the logical sense.5

The difference between “if” in everyday English and if in logic is just one example of how the mnemonic symbols we use for connectors in formal logic are not synonymous with the ways they are used in conversation, where, like all words, they have multiple meanings that are disambiguated in context.6 When we hear “He sat down and told me his life story,” we interpret the “and” as implying that he first did one and then the other, though logically it could have been the other way around (as in the wisecrack from another era, “They got married and had a baby, but not in that order”). When the mugger says “Your money or your life,” it is technically accurate that you could keep both your money and your life because P or Q embraces the case where P is true and Q is true. But you would be ill advised to press that argument with him; everyone interprets the “or” in context as the logical connector xor, “exclusive or,” P or Q and not [P and Q]. It’s also why when the menu offers “soup or salad,” we don’t argue with the waiter that we are logically entitled to both. And technically speaking, propositions like “Boys will be boys,” “A deal is a deal,” “It is what it is,” and “Sometimes a cigar is just a cigar” are empty tautologies, necessarily true by virtue of their form and therefore devoid of content. But we interpret them as having a meaning; in the last example (attributed to Sigmund Freud), that a cigar is not always a phallic symbol.
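The gap between the logician's or and the mugger's or can be pinned down to a single row of the truth table. Here is a minimal Python sketch; the function names are illustrative, and `exclusive_or` follows the definition given in the text, P or Q and not [P and Q].

```python
from itertools import product


def inclusive_or(p, q):
    """The logical connector or: true when at least one disjunct is true."""
    return p or q


def exclusive_or(p, q):
    """xor as defined in the text: P or Q, and not [P and Q]."""
    return (p or q) and not (p and q)


# Find the assignments on which the two readings disagree.
disagreements = [
    (p, q)
    for p, q in product([True, False], repeat=2)
    if inclusive_or(p, q) != exclusive_or(p, q)
]
print(disagreements)
```

The two readings part ways only where both P and Q are true, the case of keeping both your money and your life, or ordering both the soup and the salad.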

Even when the words are pinned down to their strict logical meanings, logic would be a minor exercise if it consisted only in verifying whether statements containing logical terms are true or false. Its power comes from rules of valid inference: little algorithms that allow you to leap from true premises to a true conclusion. The most famous is called “affirming the antecedent” or modus ponens (the premises are written above the line, the conclusion below):

If P then Q

P

──────────

Q

“If someone is a woman, then she is mortal. Xanthippe is a woman. Therefore, Xanthippe is mortal.” Another valid rule of inference is called “denying the consequent,” the law of contraposition, or modus tollens:

If P then Q

Not Q

──────────

Not P

“If someone is a woman, then she is mortal. Stheno the Gorgon is immortal. Therefore, Stheno the Gorgon is not a woman.”
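What makes a rule of inference valid is that no assignment of truth values makes all the premises true and the conclusion false. That can be tested exhaustively, as in the following Python sketch. The helper `is_valid` is a hypothetical name for this example, and each premise and conclusion is written as a function of P and Q.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars=2):
    """Valid means: no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(premise(*values) for premise in premises) and not conclusion(*values):
            return False
    return True


# Modus ponens: If P then Q; P; therefore Q.
modus_ponens = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: p],
    lambda p, q: q,
)

# Modus tollens: If P then Q; not Q; therefore not P.
modus_tollens = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: not q],
    lambda p, q: not p,
)

print(modus_ponens, modus_tollens)
```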

These are the most famous but by no means the only valid rules of inference. From the time Aristotle first formalized logic until the late nineteenth century, when it began to be mathematized, logic was basically a taxonomy of the various ways one may or may not deduce conclusions from various collections of premises. For example, there is the valid (but mostly useless) disjunctive addition:

P

──────────

P or Q

“Paris is in France. Therefore, Paris is in France or unicorns exist.” And there is the more useful disjunctive syllogism or process of elimination:

P or Q

Not P

──────────

Q

“The victim was killed with a lead pipe or a candlestick. The victim was not killed with a lead pipe. Therefore, the victim was killed with a candlestick.” According to a story, the logician Sidney Morgenbesser and his girlfriend underwent couples counseling during which the bickering pair endlessly aired their grievances about each other. The exasperated counselor finally said to them, “Look, someone’s got to change.” Morgenbesser replied, “Well, I’m not going to change. And she’s not going to change. So you’re going to have to change.”

More interesting still is the Principle of Explosion, also known as “From contradiction, anything follows.”

P

Not P

──────────

Q

Suppose you believe P, “Hextable is in England.” Suppose you also believe not P, “Hextable is not in England.” By disjunctive addition, you can go from P to P or Q, “Hextable is in England or unicorns exist.” Then, by the disjunctive syllogism, you can go from P or Q and not P to Q: “Hextable is not in England. Therefore, unicorns exist.” Congratulations! You just logically proved that unicorns exist. People often misquote Ralph Waldo Emerson as saying, “Consistency is the hobgoblin of little minds.” In fact he wrote about a foolish consistency, which he advised “great souls” to transcend, but either way, the putdown is dubious.7 If your belief system contains a contradiction, you can believe anything. (Morgenbesser once said of a philosopher he didn’t care for, “There’s a guy who asserted both P and not P, and then drew out all the consequences.”)8
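The two steps of the unicorn proof, and the Principle of Explosion itself, can be checked by searching for counterexamples, meaning rows of the truth table on which the premises are all true and the conclusion false. The Python sketch below is an illustration; `ROWS` and `counterexamples` are names chosen for the example.

```python
from itertools import product

ROWS = list(product([True, False], repeat=2))  # every assignment to (P, Q)


def counterexamples(premises, conclusion):
    """Rows where all premises are true but the conclusion is false."""
    return [
        row
        for row in ROWS
        if all(premise(*row) for premise in premises) and not conclusion(*row)
    ]


# Disjunctive addition: P; therefore P or Q.
disjunctive_addition = counterexamples([lambda p, q: p], lambda p, q: p or q)

# Disjunctive syllogism: P or Q; not P; therefore Q.
disjunctive_syllogism = counterexamples(
    [lambda p, q: p or q, lambda p, q: not p],
    lambda p, q: q,
)

# Explosion: P; not P; therefore Q.
explosion = counterexamples([lambda p, q: p, lambda p, q: not p], lambda p, q: q)

# The contradictory premises are never true together, which is why
# no counterexample to the explosion rule can exist.
rows_satisfying_contradiction = [row for row in ROWS if row[0] and not row[0]]

print(disjunctive_addition, disjunctive_syllogism, explosion)
print(rows_satisfying_contradiction)
```

All four lists come back empty. Explosion is valid in the hollow sense that its premises can never be jointly true, so it can never lead from truth to falsehood.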

The way that valid rules of inference can yield absurd conclusions exposes an important point about logical arguments. A valid argument correctly applies rules of inference to the premises. It only tells us that if the premises are true, then the conclusion must be true. It makes no commitment as to whether the premises are true, and thus says nothing about the truth of the conclusion. This may be contrasted with a sound argument, one that applies the rules correctly to true premises and thus yields a true conclusion. Here is a valid argument: “If Hillary Clinton wins the 2016 election, then in 2017 Tim Kaine is the vice president. Hillary Clinton wins the 2016 election. Therefore, in 2017 Tim Kaine is the vice president.” It is not a sound argument, because Clinton did not in fact win the election. “If Donald Trump wins the 2016 election, then in 2017 Mike Pence is the vice president. Donald Trump wins the 2016 election. Therefore, in 2017 Mike Pence is the vice president.” This argument is both valid and sound.
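The distinction can be put in a few lines of Python. This is a sketch under stated assumptions: the `Argument` class is hypothetical, the validity of the form (modus ponens) is simply recorded rather than computed, and each conditional premise is taken to be true, so that only the second premise separates the two arguments.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Argument:
    name: str
    form_is_valid: bool        # does the conclusion follow from the premises?
    premises_true: List[bool]  # is each premise actually true in the world?

    def is_sound(self) -> bool:
        """Sound = valid form applied to premises that are all true."""
        return self.form_is_valid and all(self.premises_true)


# Both arguments use modus ponens, so both are valid.
# Assumption of this sketch: the conditional premise is true in each case.
clinton = Argument("Clinton/Kaine", form_is_valid=True, premises_true=[True, False])
trump = Argument("Trump/Pence", form_is_valid=True, premises_true=[True, True])

for argument in (clinton, trump):
    print(argument.name, "valid:", argument.form_is_valid, "sound:", argument.is_sound())
```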

Presenting a valid argument as if it were sound is a common fallacy. A politician promises, “If we eliminate waste and fraud from the bureaucracy, we can lower taxes, increase benefits, and balance the budget. I will eliminate waste and fraud. Therefore, vote for me and everything will be better.” Fortunately, people can often spot a lack of soundness, and we have a family of retorts to the sophist who draws plausible conclusions from dubious premises: “That’s a big if.” “If wishes were horses, beggars would ride.” “Assume a spherical cow” (among scientists, from a joke about a physicist recruited by a farmer to increase milk production). And then there’s my favorite, the Yiddish As di bubbe volt gehat beytsim volt zi gevain mayn zaidah, “If my grandmother had balls, she’d be my grandfather.”

Of course, many inferences are not even valid. The classical logicians also collected a list of invalid inferences or formal fallacies, sequences of statements in which the conclusions may seem to follow from the premises but in fact do not. The most famous of these is affirming the consequent: “If P then Q. Q. Therefore, P.” If it rains, then the streets are wet. The streets are wet. Therefore, it rained. The argument is not valid: a street-cleaning truck could have just gone by. An equivalent fallacy is denying the antecedent: “If P then Q. Not P. Therefore, not Q.” It didn’t rain, therefore the streets are not wet. It’s also not valid, and for the same reason. A different way of putting it is that the statement If P then Q does not entail its converse, If Q then P, or its inverse, If not P then not Q.
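An exhaustive search of the truth table turns up the row that sinks both fallacies. The following Python sketch is illustrative, with `counterexamples` a hypothetical helper; P stands for “it rained” and Q for “the streets are wet.”

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def counterexamples(premises, conclusion):
    """Assignments to (P, Q) with all premises true and the conclusion false."""
    return [
        (p, q)
        for p, q in product([True, False], repeat=2)
        if all(premise(p, q) for premise in premises) and not conclusion(p, q)
    ]


# Affirming the consequent: If P then Q; Q; therefore P.
affirming_the_consequent = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
)

# Denying the antecedent: If P then Q; not P; therefore not Q.
denying_the_antecedent = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: not p],
    lambda p, q: not q,
)

print(affirming_the_consequent)
print(denying_the_antecedent)
```

Both searches return the same single row, P false and Q true: no rain, wet streets. That is the street-cleaning truck, and it is why the two fallacies fail for the same reason.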

But people are prone to affirming the consequent, confusing “P implies Q” with “Q implies P.” That’s why in the Wason selection task, so many people who were asked to verify “If D then 3” turn over the 3 card. It’s why conservative politicians encourage voters to slide from “If someone is a socialist, he probably is a Democrat” to “If someone is a Democrat, he probably is a socialist.” It’s why crackpots proclaim that all of history’s great geniuses were laughed at in their era, forgetting that “If genius, then laughed at” does not imply “If laughed at, then genius.” It should be kept in mind by the slackers who note that the most successful tech companies were started by college dropouts.

Fortunately, people often spot the fallacy. Many of us who grew up in the 1960s still snicker at the drug warriors of the era who said that every heroin user started with marijuana, therefore marijuana is a gateway drug to heroin. And then there’s Irwin, the hypochondriac who told his doctor, “I’m sure I have liver disease.” “That’s impossible,” replied the doctor. “If you had liver disease you’d never know it: there’s no discomfort of any kind.” Irwin replied, “Those are my symptoms exactly!”

Incidentally, if you’ve been paying close attention to the wording of the examples, you will have noticed that I did not consistently mind my Ps and Qs, as I should have if logic consists of manipulating symbols. Instead, I sometimes altered their subjects, tenses, numbers, and auxiliaries. “Someone is a woman” became “Xanthippe is a woman”; “you asked” alternated with “Oliver does ask”; “you must wear a helmet” switched with “the child is wearing a helmet.” These kinds of edits matter: “you must wear a helmet” in that context does not literally contradict “a child without a helmet.” That’s why logicians have developed more powerful logics that break the Ps and Qs of propositional calculus into finer pieces. These include predicate calculus, which distinguishes subjects from predicates and all from some; modal logic, which distinguishes statements that happen to be true in this world, like “Paris is the capital of France,” from those that are necessarily true in all worlds, like “2 + 2 = 4”; temporal logic, which distinguishes past, present, and future; and deontic logic, which worries about permission, obligation, and duty.9

Formal Reconstruction

What earthly use is it to be able to identify the various kinds of valid and invalid arguments? Often they can expose fallacious reasoning in everyday life. Rational argumentation consists in laying out a common ground of premises that everyone accepts as true, together with conditional statements that everyone agrees make one proposition follow from another, and then cranking through valid rules of inference that yield the logical, and only the logical, implications of the premises. Often an argument falls short of this ideal: it uses a fallacious rule of inference, like affirming the consequent, or it depends on a premise that was never explicitly stated, turning the syllogism into what logicians call an enthymeme. Now, no mortal has the time or attention span to lay out every last premise and implication in an argument, so in practice almost all arguments are enthymemes. Still, it can be instructive to unpack the logic of an argument as a set of premises and conditionals, the better to spot the fallacies and missing assumptions. It’s called formal reconstruction, and philosophy professors sometimes assign it to their students to sharpen their reasoning.

Here’s an example. A candidate in the 2020 Democratic presidential primary, Andrew Yang, ran on a platform of implementing a universal basic income (UBI). Here is an excerpt from his website in which he justifies the policy (I have numbered the statements):

(1) The smartest people in the world now predict that ⅓ of Americans will lose their job to automation in 12 years. (2) Our current policies are not equipped to handle this crisis. (3) If Americans have no source of income, the future could be very dark. (4) A $1,000/month UBI—funded by a Value Added Tax—would guarantee that all Americans benefit from automation.10

Statements (1) and (2) are factual premises; let’s assume they are true. (3) is a conditional, and is uncontroversial. There is a leap from (3) to (4), but it can be bridged in two steps. There is a missing (but reasonable) conditional, (2a) “If Americans lose their jobs, they will have no source of income,” and there is the (valid) denial of the consequent of (3), yielding “If the future is not to be dark, Americans must have a source of income.” However, upon close examination we discover that the antecedent of (2a), “Americans will lose their jobs,” was never stated. All we have is (1)—the smartest people in the world predict they will lose their jobs. To get from (1) to the antecedent of (2a), we need to add another conditional, (1a) “If the smartest people in the world predict something, it will come true.” But we know that this conditional is false. Einstein, for example, announced in 1952 that only the creation of a world government, P, would prevent the impending self-destruction of mankind, Q (if not P then Q), yet no world government was created (not P) and mankind did not destroy itself (not Q; at least if “impending” means “within several decades”). Conversely, some things may come true that are predicted by people who are not the smartest in the world but are experts in the relevant subject, in this case, the history of automation. Some of those experts predict that for every job lost to automation, a new one will materialize that we cannot anticipate: the unemployed forklift operators will retrain as tattoo removal technicians and video game costume designers and social media content moderators and pet psychiatrists. In that case the argument would fail—a third of Americans will not necessarily lose their jobs, and a UBI would be premature, averting a nonexistent crisis.
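The gap in the chain can be made visible by letting a program apply modus ponens until nothing new follows. The Python sketch below is a deliberately crude illustration: the statement labels are invented for the example, statement (3)'s hedge “could be” is flattened into a plain conditional, and `forward_chain` is a hypothetical helper rather than a standard library function.

```python
def forward_chain(facts, conditionals):
    """Repeatedly apply modus ponens until no new statements can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for antecedent, consequent in conditionals:
            if antecedent in derived and consequent not in derived:
                derived.add(consequent)
                changed = True
    return derived


# Statement (1): what was actually asserted is a prediction, not the job loss itself.
facts = {"experts_predict_job_loss"}

conditionals = [
    ("americans_lose_jobs", "no_source_of_income"),  # (2a), the unstated conditional
    ("no_source_of_income", "dark_future"),          # (3), simplified
]

# (1a): "If the smartest people predict something, it will come true."
premise_1a = ("experts_predict_job_loss", "americans_lose_jobs")

without_1a = forward_chain(facts, conditionals)
with_1a = forward_chain(facts, conditionals + [premise_1a])

print("dark_future" in without_1a)
print("dark_future" in with_1a)
```

Without (1a) the chain never gets started, since nothing licenses the step from the prediction to the job losses. With (1a) added the conclusion follows, so the argument is valid, but only at the price of a premise that we have reason to reject.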

The point of this exercise is not to criticize Yang, who was admirably explicit in his platform, nor to suggest that we diagram a logic chart for every argument we consider, which would be unbearably tedious. But the habit of formal reconstruction, even if carried out partway, can often expose fallacious inferences and unstated premises which would otherwise lie hidden in any argument, and is well worth cultivating.

Critical Thinking and Informal Fallacies

Though formal fallacies such as denying the antecedent may be exposed when an argument is formally reconstructed, the more common errors in reasoning can’t be pigeonholed in this way. Rather than crisply violating an argument form in the propositional calculus, arguers exploit some psychologically compelling but intellectually spurious lure. They are called informal fallacies, and fans of rationality have given them names, collected them by the dozens, and arranged them (together with the formal fallacies) into web pages, posters, flash cards, and the syllabuses of freshman courses on “critical thinking.”11 (I couldn’t resist; see the index.)

Many informal fallacies come out of a feature of human reasoning which lies so deep in us that, according to the cognitive scientists Dan Sperber and Hugo Mercier, it was the selective pressure that allowed reasoning to evolve. We like to win arguments.12 In an ideal forum, the winner of an argument is the one with the most cogent position. But few people have the rabbinical patience to formally reconstruct an argument and evaluate its correctness. Ordinary conversation is held together by intuitive links that allow us to connect the dots even as the discussion falls short of Talmudic explicitness. Skilled debaters can exploit these habits to create the illusion that they have grounded a proposition in a sound logical foundation when in reality it is levitating in midair.

Foremost among informal fallacies is the straw man, the effigy of an opponent that is easier to knock over than the real thing. “Noam Chomsky claims that children are born talking.” “Kahneman and Tversky say that humans are imbeciles.” It has a real-time variant practiced by aggressive interviewers, the so-what-you’re-saying-is tactic. “Dominance hierarchies are common in the animal kingdom, even in creatures as simple as lobsters.” “So what you’re saying is that we should organize our societies along the lines of lobsters.”13

Just as arguers can stealthily replace an opponent’s proposition by one that is easier to attack, they can replace their own proposition with one that is easier to defend. They can engage in special pleading, such as explaining that ESP fails in experimental tests because it is disrupted by the negative vibes of skeptics. Or that democracies never start wars, except for ancient Greece, but it had slaves, and Georgian England, but the commoners couldn’t vote, and nineteenth-century America, but its women lacked the franchise, and India and Pakistan, but they were fledgling states. They can move the goalposts, demanding that we “defund the police” but then explaining that they only mean reallocating part of its budget to emergency responders. (Rationality cognoscenti call it the motte-and-bailey fallacy, after the medieval castle with a cramped but impregnable tower into which one can retreat when invaders attack the more desirable but less defensible courtyard.)14 They can claim that no Scotsman puts sugar on his porridge, and when confronted with Angus, who puts sugar on his porridge, say this shows that Angus is not a true Scotsman. The no true Scotsman fallacy also explains why no true Christian ever kills, no true communist state is repressive, and no true Trump supporter endorses violence.

These tactics shade into begging the question, a phrase that philosophers beg people not to use as a malaprop for “raising the question” but to reserve for the informal fallacy of assuming what you’re trying to prove. It includes circular explanations, as in Molière’s virtus dormitiva (his doctor’s explanation for why opium puts people to sleep), and tendentious presuppositions, as in the classic “When did you stop beating your wife?” In one joke, a man boasts about the mellifluous cantor in his synagogue, and another retorts, “Ha! If I had his voice, I’d be just as good.”

One can always maintain a belief, no matter what it is, by saying that the burden of proof is on those who disagree. Bertrand Russell responded to this fallacy when he was challenged to explain why he was an atheist rather than an agnostic, since he could not prove that God does not exist. He replied, “Nobody can prove that there is not between the Earth and Mars a china teapot revolving in an elliptic orbit.”15 Sometimes both sides pursue the fallacy, leading to the style of debate called burden tennis. (“The burden of proof is on you.” “No, the burden of proof is on you.”) In reality, since we start out ignorant about everything, the burden of proof is on anyone who wants to show anything. (As we will see in chapter 5, Bayesian reasoning offers a principled way to reason about who should carry the burden as knowledge accumulates.)

Another diversionary tactic is called tu quoque, Latin for “you too,” also known as what-aboutery. It was a favorite of the apologists for the Soviet Union in the twentieth century, who presented the following defense of its totalitarian repression: “What about the way the United States treats its Negroes?” In another joke, a woman comes home from work early to find her husband in bed with her best friend. The startled man says, “What are you doing home so early?” She replies, “What are you doing in bed with my best friend!?” He snaps, “Don’t change the subject!”

The “smartest people in the world” claim from the Yang Gang is a mild example of the argument from authority. The authority being deferred to is often religious, as in the gospel song and bumper sticker “God said it, I believe it, that settles it.” But it can also be political or academic. Intellectual cliques often revolve around a guru whose pronouncements become secular gospel. Many academic disquisitions begin, “As Derrida has taught us . . .”—or Foucault, or Butler, or Marx, or Freud, or Chomsky. Good scientists disavow this way of talking, but they are sometimes raised up as authorities by others. I often get letters taking me to task for worrying about human-caused climate change because, they note, this brilliant physicist or that Nobel laureate denies it. But Einstein was not the only scientific authority whose opinions outside his area of expertise were less than authoritative. In their article “The Nobel Disease: When Intelligence Fails to Protect against Irrationality,” Scott Lilienfeld and his colleagues list the flaky beliefs of a dozen science laureates, including eugenics, megavitamins, telepathy, homeopathy, astrology, herbalism, synchronicity, race pseudoscience, cold fusion, crank autism treatments, and denying that AIDS is caused by HIV.16

Like the argument from authority, the bandwagon fallacy exploits the fact that we are social, hierarchical primates. “Most people I know think astrology is scientific, so there must be something to it.” While it may not be true that “the majority is always wrong,” it certainly is not always right.17 The history books are filled with manias, bubbles, witch hunts, and other extraordinary popular delusions and madnesses of crowds.

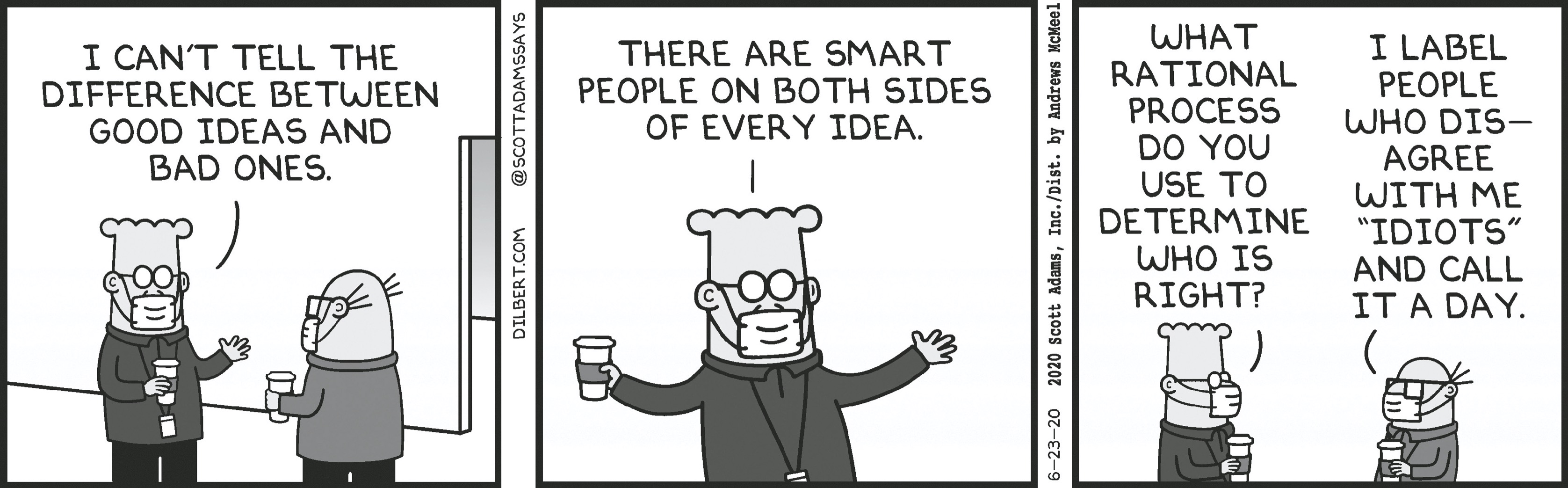

Another contamination of the intellectual by the social is the attempt to rebut an idea by insulting the character, motives, talents, values, or politics of the person who holds it. The fallacy is called arguing ad hominem, against the person. A crude but common version is endorsed by Wally in Dilbert:

DILBERT © 2020 Scott Adams, Inc. Used by permission of ANDREWS MCMEEL SYNDICATION. All rights reserved.

Often the expression is more genteel but no less fallacious. “We don’t have to take Smith’s argument seriously; he is a straight white male and teaches at a business school.” “The only reason Jones argues that climate change is happening is that it gets her grants and fellowships and invitations to give TED talks.” A related tactic is the genetic fallacy, which has nothing to do with DNA but is related to the words “genesis” and “generate.” It refers to evaluating an idea not by its truth but by its origins. “Brown got his data from the CIA World Factbook, and the CIA overthrew democratic governments in Guatemala and Iran.” “Johnson cited a study funded by a foundation that used to support eugenics.”

Sometimes the ad hominem and genetic fallacies are combined to forge chains of guilt by association: “Williams’s theory must be repudiated, because he spoke at a conference organized by someone who published a volume containing a chapter written by someone who said something racist.” Though no one can gainsay the pleasure of ganging up on an evildoer, the ad hominem and genetic fallacies are genuinely fallacious: good people can hold bad beliefs and vice versa. To take a pointed example, lifesaving knowledge in public health, including the carcinogenicity of tobacco smoke, was originally discovered by Nazi scientists, and tobacco companies were all too happy to reject the smoking–cancer link because it was “Nazi science.”18

Then there are arguments directly aimed at the limbic system rather than the cerebral cortex. These include the appeal to emotion: “How can anyone look at this photo of the grieving parents of a dead child and say that war deaths have declined?” And the increasingly popular affective fallacy, in which a statement may be rejected if it is “hurtful” or “harmful” or may cause “discomfort.” Here we see a perpetrator of the affective fallacy as a child:

David Sipress/The New Yorker Collection/The Cartoon Bank

Many facts, of course, are hurtful: the racial history of the United States, global warming, a cancer diagnosis, Donald Trump. Yet they are facts for all that, and we must know them, the better to deal with them.

The ad hominem, genetic, and affective fallacies used to be treated as forehead-slapping blunders or dirty rotten tricks. Critical-thinking teachers and high school debate coaches would teach their students how to spot and refute them. Yet in one of the ironies of modern intellectual life, they are becoming the coin of the realm. In large swaths of academia and journalism the fallacies are applied with gusto, with ideas attacked or suppressed because their proponents, sometimes from centuries past, bear unpleasant odors and stains.19 It reflects a shift in one’s conception of the nature of beliefs: from ideas that may be true or false to expressions of a person’s moral and cultural identity. It also bespeaks a change in how scholars and critics conceive of their mission: from seeking knowledge to advancing social justice and other moral and political causes.20

To be sure, sometimes the context of a statement really is relevant to evaluating its truth. This can leave the misimpression that informal fallacies are OK after all. One can be skeptical of a study showing the efficacy of a drug carried out by someone who stands to profit from the drug, but noting a conflict of interest is not an ad hominem fallacy. One can dismiss a claim that was based on divine inspiration or exegesis of ancient texts or interpreting goat entrails; this debunking is not the genetic fallacy. One can take note of a near consensus among scientists to counter the assertion that we must be agnostic about some issue because the experts disagree; this is not the bandwagon fallacy. And we can impose higher standards of evidence for a hypothesis that would call for drastic measures if it were true; this is not the affective fallacy. The difference is that in the legitimate arguments, one can give reasons for why the context of a statement should affect our credence in whether it is true or how we should act on it, such as indicating the degree to which the evidence is trustworthy. With the fallacies, one is surrendering to feelings that have no bearing on the truth of the claim.

So with all these formal and informal fallacies waiting to entrap us (Wikipedia lists more than a hundred), why can’t we do away with this jibber-jabber once and for all and implement Leibniz’s plan for logical discourse? Why can’t we make our reasonings as tangible as those of the mathematicians so that we can find our errors at a glance? Why, in the twenty-first century, do we still have barroom arguments, Twitter wars, couples counseling, presidential debates? Why don’t we say “Let us calculate” and see who is right? We are not living in Leibniz’s utopia, and, as with other utopias, we never will. There are at least three reasons.

Logical versus Empirical Truths

One reason logic will never rule the world is the fundamental distinction between logical propositions and empirical ones, which Hume called “relations of ideas” and “matters of fact,” and philosophers call analytic and synthetic. To determine whether “All bachelors are unmarried” is true, you just need to know what the words mean (replacing bachelor with the phrase “male and adult and not married”) and check the truth table. But to determine whether “All swans are white” is true, you have to get out of your armchair and look. If you visit New Zealand, you will discover the proposition is false, because the swans there are black.

It’s often said that the Scientific Revolution of the seventeenth century was launched when people first appreciated that statements about the physical world are empirical and can be established only by observation, not scholastic argumentation. There is a lovely story attributed to Francis Bacon:

In the year of our Lord 1432, there arose a grievous quarrel among the brethren over the number of teeth in the mouth of a horse. For thirteen days the disputation raged without ceasing. All the ancient books and chronicles were fetched out, and wonderful and ponderous erudition such as was never before heard of in this region was made manifest. At the beginning of the fourteenth day, a youthful friar of goodly bearing asked his learned superiors for permission to add a word, and straightway, to the wonderment of the disputants, whose deep wisdom he sore vexed, he beseeched them to unbend in a manner coarse and unheard-of and to look in the open mouth of a horse and find answer to their questionings. At this, their dignity being grievously hurt, they waxed exceeding wroth; and, joining in a mighty uproar, they flew upon him and smote him, hip and thigh, and cast him out forthwith. For, said they, surely Satan hath tempted this bold neophyte to declare unholy and unheard-of ways of finding truth, contrary to all the teachings of the fathers.

Now, this event almost certainly never happened, and it’s doubtful that Bacon said it did.21 But the story captures one reason that we will never resolve our uncertainties by sitting down and calculating.

Formal versus Ecological Rationality

A second reason Leibniz’s dream will never come true lies in the nature of formal logic: it is formal, blinkered from seeing anything but the symbols and their arrangement as they are laid out in front of the reasoner. It is blind to the content of the proposition—what those symbols mean, and the context and background knowledge that might be mixed into the deliberation. Logical reasoning in the strict sense means forgetting everything you know. A student taking a test in Euclidean geometry gets no credit for pulling out a ruler and measuring the two sides of the triangle with equal angles, sensible as that might be in everyday life, but rather is required to prove it. In the same way, students doing the logic exercises in Carroll’s textbook must not be distracted by their irrelevant knowledge that puppies can’t talk. The only legitimate reason to conclude that the lame one failed to say “Thank you” is that that’s what is stipulated in the consequent of a conditional whose antecedent is true.

Logic, in this sense, is not rational. In the world in which we evolved and most of the world in which we spend our days, it makes no sense to ignore everything you know.22 It does make sense in certain unnatural worlds—logic courses, brainteasers, computer programming, legal proceedings, the application of science and math to areas in which common sense is silent or misleading. But in the natural world, people do pretty well by commingling their logical abilities with their encyclopedic knowledge, as we saw in chapter 1 with the San. We also saw that when we add certain kinds of verisimilitude to the brainteasers, people recruit their subject knowledge and no longer embarrass themselves. True, when they are asked to verify “If a card has a D on one side it must have a 3 on the other,” they mistakenly turn over the “3” and neglect to turn over the “7.” But when they are asked to imagine themselves as bouncers in a bar and verify “If a patron is drinking alcohol he must be over twenty-one,” they know to check the beverages in front of the teenagers and to card anyone drinking beer.23
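The logic of the card task can be spelled out in a few lines. The sketch below assumes the usual four-card layout (the "F" card is added here to fill it out) and a hypothetical helper, `could_falsify`, which asks whether anything on the hidden side of a card could break the rule. Only those cards are worth turning over.

```python
# Rule under test: "If a card has a D on one side, it must have a 3 on the other."
# Every card has a letter on one side and a number on the other; we see one face.

def could_falsify(visible: str) -> bool:
    """Return True if some hidden face could make this card violate the rule."""
    if visible.isalpha():
        # A letter card can break the rule only if it is a D
        # (its hidden number might turn out not to be a 3).
        return visible == "D"
    # A number card can break the rule only if it is not a 3
    # (its hidden letter might turn out to be a D).
    return visible != "3"

cards = ["D", "F", "3", "7"]  # assumed layout; "F" is illustrative
cards_to_turn = [card for card in cards if could_falsify(card)]
print(cards_to_turn)  # ['D', '7']
```

The "3" card is the tempting one, but nothing on its other side can contradict the rule, just as the bouncer has no need to check what the patrons over twenty-one are drinking.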

The contrast between the ecological rationality that allows us to thrive in a natural environment and the logical rationality demanded by formal systems is one of the defining features of modernity.24 Studies of unlettered peoples by cultural psychologists and anthropologists have shown that they are rooted in the rich texture of reality and have little patience for the make-believe worlds familiar to graduates of Western schooling. Here Michael Cole interviews a member of the Kpelle people in Liberia:

Q: Flumo and Yakpalo always drink rum together. Flumo is drinking rum. Is Yakpalo drinking rum?

A: Flumo and Yakpalo drink rum together, but the time Flumo was drinking the first one Yakpalo was not there on that day.

Q: But I told you that Flumo and Yakpalo always drink rum together. One day Flumo was drinking rum. Was Yakpalo drinking rum?

A: The day Flumo was drinking the rum Yakpalo was not there on that day.

Q: What is the reason?

A: The reason is that Yakpalo went to his farm on that day and Flumo remained in town on that day.25

The Kpelle man treats the question as a sincere inquiry, not a logic puzzle. His response, though it would count as an error on a test, is by no means irrational: it uses relevant information to come up with the correct answer. Educated Westerners have learned how to play the game of forgetting what they know and fixating on the premises of a problem—though even they have trouble separating their factual knowledge from their logical reasoning. Many people will insist, for example, that the following argument is logically invalid: “All things made of plants are healthy. Cigarettes are made of plants. Therefore, cigarettes are healthy.”26 Change “cigarettes” to “salads” and they confirm that it’s fine. Philosophy professors who present students with contrived thought experiments, like whether it is permissible to throw a fat man over a bridge to stop a runaway trolley which threatens five workers on the track, often get frustrated when students look for loopholes, like shouting at the workers to get out of the way. Yet that is exactly the rational thing one would do in real life.

The zones in which we play formal, rule-governed games—law, science, digital devices, bureaucracy—have expanded in the modern world with the invention of powerful, content-blind formulas and rules. But they still fall short of life in all its plentitude. Leibniz’s logical utopia, which requires self-inflicted amnesia for background knowledge, not only runs against the grain of human cognition but is ill suited for a world in which not every relevant fact can be laid out as a premise.

Classical versus Family Resemblance Categories

A third reason that rationality will never be reduced to logic is that the concepts that people care about differ in a crucial way from the predicates of classical logic. Take the predicate “even number,” which can be defined by the biconditional “If an integer can be divided by 2 without remainder, it is even, and vice versa.” The biconditional is true, as is the proposition “8 can be divided by 2 without remainder,” and from these true premises we can deduce the true conclusion “8 is even.” Likewise with “If a person is female and the mother of a parent, she is a grandmother, and vice versa” and “If a person is male and adult and not married, he is a bachelor, and vice versa.” We might suppose that with enough effort every human concept can be defined in this way, by laying out sufficient conditions for it to be true (the first if-then in the biconditional) and necessary conditions for it to be true (the “vice versa” converse).

This reverie was famously punctured by the philosopher Ludwig Wittgenstein (1889–1951).27 Just try, he said, to find necessary and sufficient conditions for any of our everyday concepts. What is the common denominator across all the pastimes we call “games”? Physical activity? Not with board games. Gaiety? Not in chess. Competitors? Not solitaire. Winning and losing? Not ring-around-the-rosy or a child throwing a ball against a wall. Skill? Not bingo. Chance? Not crossword puzzles. And Wittgenstein did not live to see mixed martial arts, Pokémon GO, or Let’s Make a Deal.28

The problem is not that no two games have anything in common. Some are merry, like tag and charades; some have winners, like Monopoly and football; some involve projectiles, like baseball and tiddlywinks. Wittgenstein’s point was that the concept of “game” has no common thread running through it, no necessary and sufficient features that could be turned into a definition. Instead, various characteristic features run through different subsets of the category, the same way that physical features may be found in different combinations in the members of a family. Not every scion of Robert Kardashian and Kristen Mary Jenner has the pouty Kardashian lips or the raven Kardashian hair or the caramel Kardashian skin or the ample Kardashian derrière. But most of the sisters have some of them, so we can recognize a Kardashian when we see one, even if there is no true proposition “If someone has features X and Y and Z, that person is a Kardashian.” Wittgenstein concluded that it is family resemblance, not necessary and sufficient features, that holds the members of a category together.

Most of our everyday concepts turn out to be family resemblance categories, not the “classical” or “Aristotelian” categories that are easily stipulated in logic.29 These categories often have stereotypes, like the little picture of a bird in a dictionary next to the definition of bird, but the definition itself fails to embrace all and only the exemplars. The category “chairs,” for example, includes wheelchairs with no legs, rolling stools with no back, beanbag chairs with no seat, and the exploding props used in Hollywood fight scenes which cannot support a sitter. Even the ostensibly classical categories that professors used to cite to illustrate the concept turn out to be riddled with exceptions. Is there a definition of “mother” that embraces adoptive mothers, surrogates, and egg donors? If a “bachelor” is an unmarried man, is the pope a bachelor? What about the male half of a monogamous couple who never bothered to get the piece of paper from city hall? And you can get in a lot of trouble these days if you try to lay out necessary and sufficient conditions for “woman.”

As if this weren’t bad enough for the dream of a universal logic, the fact that concepts are defined by family resemblance rather than necessary and sufficient conditions means that propositions can’t even be given the values true or false. Their predicates may be truer of some subjects than others, depending on how stereotypical the subject is—in other words, how many of the family’s typical features it has. Everyone agrees that “Football is a sport” is true, but many feel that “Synchronized swimming is a sport” is, at best, truthy. The same holds for “Parsley is a vegetable,” “A parking violation is a crime,” “Stroke is a disease,” and “Scorpions are bugs.” In everyday judgments, truth can be fuzzy.

It’s not that all concepts are fuzzy family resemblance categories.30 People are perfectly capable of putting things in little boxes. Everyone understands that a number is either even or odd, with no in-betweens. We joke that you can’t be a little bit pregnant or a little bit married. We understand laws that preempt endless disputation over borderline cases by drawing red lines around concepts like “adult,” “citizen,” “owner,” “spouse,” and other consequential categories.

Indeed, an entire category of informal fallacies arises from people being all too eager to think in black and white. There’s the false dichotomy: “Nature versus nurture”; “America—love it or leave it”; “You’re either with us or with the terrorists”; “Either you’re part of the solution or you’re part of the problem.” There’s the slippery slope fallacy: if we legalize abortion, soon we’ll legalize infanticide; if we allow people to marry an individual who is not of the opposite sex, we will have to allow people to marry an individual who is not of the same species. And the paradox of the heap begins with the truth that if something is a heap, then it’s still a heap if you remove one grain. But when you remove another, and then another, you reach a point where it’s no longer a heap, which implies that there’s no such thing as a heap. By the same logic, the job will get done even if I put it off by just one more day (the mañana fallacy), and I can’t get fat by eating just one more French fry (the dieter’s fallacy).

Wittgenstein’s response to Leibniz and Aristotle is not just a debating point for philosophy seminars. Many of our fiercest controversies involve decisions on how to reconcile fuzzy family resemblance concepts with the classical categories demanded by logic and law. Is a fertilized ovum a “person”? Did Bill and Monica have “sex”? Is a sport utility vehicle a “car” or a “truck”? (The latter classification put tens of millions of vehicles on American roads that met laxer standards for safety and emissions.) And not long ago I received the following email from the Democratic Party:

House Republicans are ramming through legislation this week to classify pizza as a “vegetable” for the purpose of school lunches. Why? Because a massive lobbying effort of Republican lawmakers by the frozen pizza industry is underway. . . .

In this Republican Congress, almost anything is up for sale to the most powerful lobbyists—including the literal definition of the word “vegetable”—and this time, it’s coming at the expense of our kids’ health.

Sign this petition and spread the word: Pizza isn’t a vegetable.

Logical Computation versus Pattern Association

If many of our judgments are too squishy to be captured in logic, how do we think at all? Without the guardrails of necessary and sufficient conditions, how do we come to agree that football is a sport and Kris Jenner is a mother and, House Republicans notwithstanding, pizza isn’t a vegetable? If rationality is not implemented in the mind as a list of propositions and a chain of logical rules, how is it implemented?

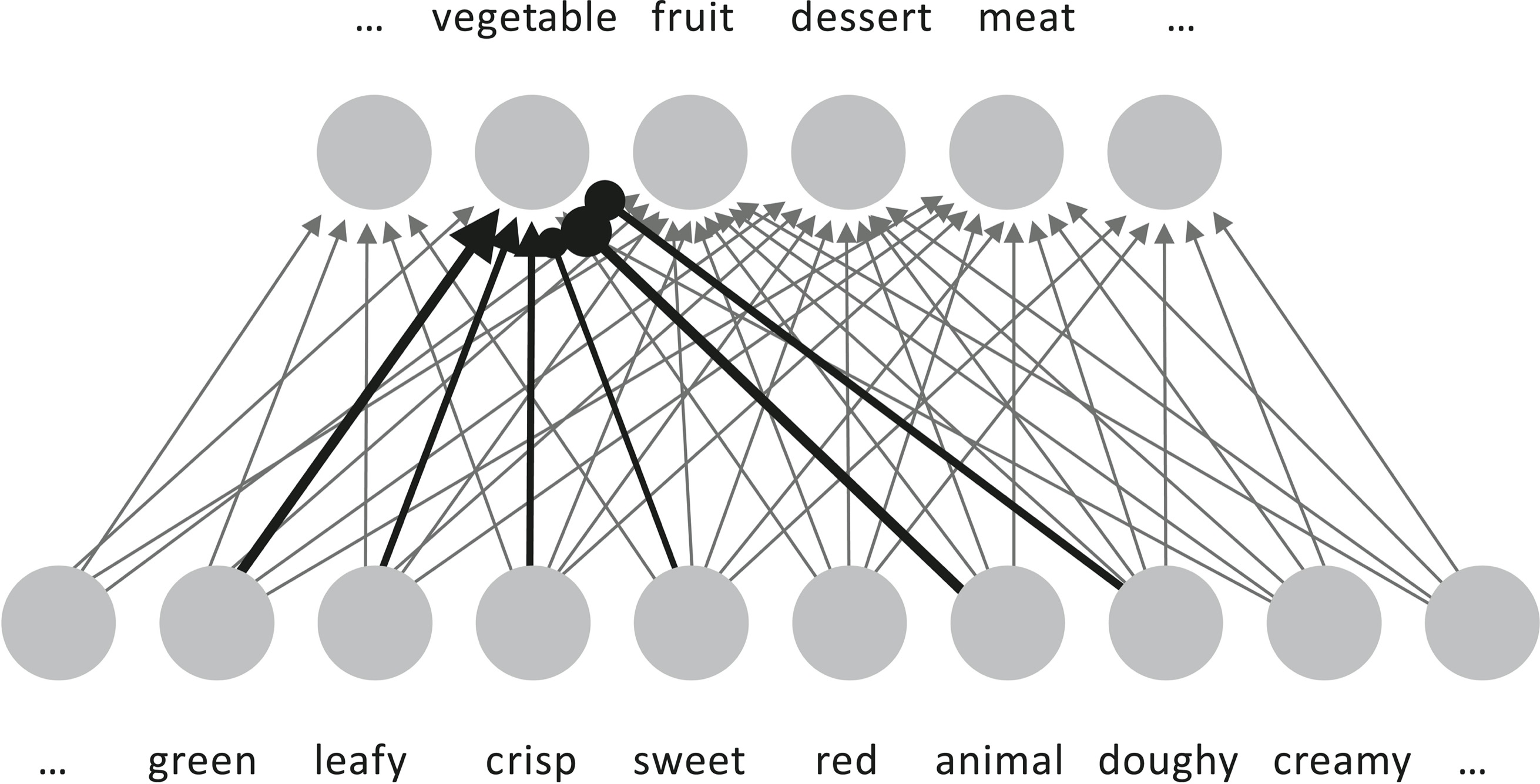

One answer may be found in the family of cognitive models called pattern associators, perceptrons, connectionist nets, parallel distributed processing models, artificial neural networks, and deep learning systems.31 The key idea is that rather than manipulating strings of symbols with rules, an intelligent system can aggregate tens, thousands, or millions of graded signals, each capturing the degree to which a property is present.

Take the surprisingly contentious concept “vegetable.” It’s clearly a family resemblance category. There is no Linnaean taxon that includes carrots, fiddleheads, and mushrooms; no type of plant organ that embraces broccoli, spinach, potatoes, celery, peas, and eggplant; not even a distinctive taste or color or texture. But as with the Kardashians, we tend to know vegetables when we see them, because overlapping traits run through the different members of the family. Lettuce is green and crisp and leafy, spinach is green and leafy, celery is green and crisp, red cabbage is red and leafy. The greater the number of veggie-like traits something has, and the more definitively it has them, the more apt we are to call it a vegetable. Lettuce is a vegetable par excellence; parsley, not so much; garlic, still less. Conversely, certain traits militate against something being a vegetable. While some vegetables verge on sweetness, like acorn squash, once a plant part gets too sweet, like a cantaloupe, we call it a fruit instead. And though portobello mushrooms are meaty and spaghetti squash is pasta-like, anything made from animal flesh or flour dough is blackballed. (Bye-bye, pizza.)

This means we can capture vegetableness in a complicated statistical formula. Each of an item’s traits (its greenness, its crunchiness, its sweetness, its doughiness) is quantified, and then multiplied by a numerical weight that reflects how diagnostic that trait is of the category: high positive for greenness, lower positive for crispness, low negative for sweetness, high negative for doughiness. Then the weighted values are added up, and if the sum exceeds a threshold, we say it’s a vegetable, with higher numbers indicating better examples.
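As a rough illustration, the formula might look like the sketch below. The signs and relative sizes of the weights follow the description above, but the particular numbers, the threshold, and the trait scores for each food are invented for the example.

```python
# Hypothetical weights: how diagnostic each trait is of "vegetable".
WEIGHTS = {"green": 2.0, "crisp": 1.0, "sweet": -1.0, "doughy": -3.0}
THRESHOLD = 1.5  # assumed cutoff; any sum above it counts as a vegetable

def vegetableness(traits):
    """Weighted sum of trait scores, each score running from 0.0 to 1.0."""
    return sum(WEIGHTS[name] * traits.get(name, 0.0) for name in WEIGHTS)

def is_vegetable(traits):
    return vegetableness(traits) > THRESHOLD

# Made-up trait scores for three foods.
lettuce = {"green": 1.0, "crisp": 1.0, "sweet": 0.0, "doughy": 0.0}
cantaloupe = {"green": 0.1, "crisp": 0.2, "sweet": 0.9, "doughy": 0.0}
pizza = {"green": 0.1, "crisp": 0.3, "sweet": 0.2, "doughy": 1.0}

for name, food in [("lettuce", lettuce), ("cantaloupe", cantaloupe), ("pizza", pizza)]:
    print(name, round(vegetableness(food), 2), is_vegetable(food))
```

With these numbers lettuce clears the threshold comfortably, while cantaloupe and pizza both land below zero.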

Now, no one thinks we make our fuzzy judgments by literally carrying out chains of multiplication and addition in our heads. But the equivalent can be done by networks of neuron-like units which can “fire” at varying rates, representing the fuzzy truth value. A toy version is shown on the next page. At the bottom we have a bank of input neurons fed by the sense organs, which respond to simple traits like “green” and “crisp.” At the top we have the output neurons, which display the network’s guess of the category. Every input neuron is connected to every output neuron via a “synapse” of varying strength, both excitatory (implementing the positive multipliers) and inhibitory (implementing the negative ones). The activated input units propagate signals, weighted by the synapse strengths, to the output units, each of which adds up the weighted set of incoming signals and fires accordingly. In the diagram, the excitatory connections are shown with arrows and the inhibitory ones with dots, and the thickness of the lines represents the strengths of synapses (shown only for the vegetable output, to keep it simple).

Who, you may ask, programmed in the all-important connection weights? The answer is no one; they are learned from experience. The network is trained by presenting it with many examples of different foods, together with the correct category provided by a teacher. The neonate network, born with small random weights, offers random feeble guesses. But it has a learning mechanism that works by a getting-warmer/getting-colder rule. It compares each node’s output with the correct value supplied by the teacher, and nudges the weight up or down to close the gap. After hundreds of thousands of training examples, the connection weights settle into the best values, and the networks can get pretty good at classifying things.
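A minimal sketch of such a learning rule appears below, assuming a single output unit for "vegetable" that either fires or doesn't. The foods, their trait scores, the learning rate, and the number of passes are all invented for illustration. The update is the classic perceptron-style error correction, which is one simple way to realize the getting-warmer/getting-colder idea, not necessarily the rule used in any particular model.

```python
import random

TRAITS = ["green", "crisp", "sweet", "doughy"]

# (name, trait scores, teacher's label: 1 = vegetable, 0 = not). Made-up data.
EXAMPLES = [
    ("lettuce",    [1.0, 1.0, 0.0, 0.0], 1),
    ("spinach",    [1.0, 0.0, 0.0, 0.0], 1),
    ("celery",     [0.8, 1.0, 0.0, 0.0], 1),
    ("cantaloupe", [0.0, 0.0, 1.0, 0.0], 0),
    ("apple",      [0.5, 1.0, 1.0, 0.0], 0),
    ("pizza",      [0.0, 0.3, 0.2, 1.0], 0),
    ("bread",      [0.0, 0.0, 0.0, 1.0], 0),
]

# The "neonate" network: small random weights, one per trait plus a bias term.
rng = random.Random(0)
weights = [rng.uniform(-0.05, 0.05) for _ in range(len(TRAITS) + 1)]

def guess(features):
    """Fire (1) if the weighted sum of the inputs, plus the bias, is positive."""
    inputs = features + [1.0]  # the constant 1.0 feeds the bias weight
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train(epochs=200, rate=0.1):
    """Nudge each weight up or down whenever the guess misses the teacher's label."""
    for _ in range(epochs):
        mistakes = 0
        for _, features, target in EXAMPLES:
            error = target - guess(features)  # +1, 0, or -1
            if error != 0:
                mistakes += 1
                inputs = features + [1.0]
                for i, x in enumerate(inputs):
                    weights[i] += rate * error * x
        if mistakes == 0:  # every example classified correctly
            break

train()
for name, features, target in EXAMPLES:
    print(name, guess(features), target)
```

Because these toy examples can be separated by a weighted sum, the rule is guaranteed to settle on weights that classify all of them correctly.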

But that’s true only when the input features indicate the output categories in a linear, more-is-better, add-’em-up way. It works for categories where the whole is the (weighted) sum of its parts, but it fails when a category is defined by tradeoffs, sweet spots, winning combinations, poison pills, deal-killers, perfect storms, or too much of a good thing. Even the simple logical connector xor (exclusive or), “x or y but not both,” is beyond the powers of a two-layer neural network, because x-ness has to boost the output, and y-ness has to boost the output, but in combination they have to squelch it. So while a simple network can learn to recognize carrots and cats, it may fail with an unruly category like “vegetable.” An item that’s red and round is likely to be a fruit if it is crunchy and has a stem (like an apple), but a vegetable if it is crunchy and has roots (like a beet) or if it is fleshy and has a stem (like a tomato). And what combination of colors and shapes and textures could possibly rope in mushrooms, spinach, cauliflower, carrots, and beefsteak tomatoes? A two-layer network gets confused by the crisscrossing patterns, jerking its weights up and down with each training example and never settling on values that consistently separate the members from the nonmembers.
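The xor limitation can be checked by brute force. The sketch below assumes a single threshold unit with two inputs and a bias, and searches a coarse grid of candidate weights (the grid range and step are arbitrary choices). It finds settings that compute "and" and "or" but none that computes "xor."

```python
from itertools import product

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
TARGETS = {
    "and": [0, 0, 0, 1],
    "or":  [0, 1, 1, 1],
    "xor": [0, 1, 1, 0],
}

def unit_output(w1, w2, bias, x, y):
    """A single threshold unit: fire if the weighted sum is positive."""
    return 1 if w1 * x + w2 * y + bias > 0 else 0

def find_weights(targets, grid):
    """Return the first (w1, w2, bias) on the grid that reproduces the targets, or None."""
    for w1, w2, bias in product(grid, repeat=3):
        outputs = [unit_output(w1, w2, bias, x, y) for x, y in INPUTS]
        if outputs == targets:
            return (w1, w2, bias)
    return None

GRID = [i / 2 for i in range(-10, 11)]  # -5.0 to 5.0 in steps of 0.5

solutions = {name: find_weights(targets, GRID) for name, targets in TARGETS.items()}
for name, found in solutions.items():
    print(name, "solvable" if found is not None else "no weights found")
```

A finer grid would not help. For xor the unit must stay silent with no inputs (so the bias cannot be positive), fire for x alone and for y alone, yet stay silent for both together, and adding the two single-input conditions shows that the combined sum is always positive, so the last condition can never be met.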

The problem may be tamed by inserting a “hidden” layer of neurons between the input and the output, as shown on the next page. This changes the network from a stimulus-response creature to one with internal representations—concepts, if you will. Here they might stand for cohesive intermediate categories like “cabbage-like,” “savory fruits,” “squashes and gourds,” “greens,” “fungi,” and “roots and tubers,” each with a set of input weights that allow it to pick out the corresponding stereotype, and strong weights to “vegetable” in the output layer.
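To see why a hidden layer helps, here is a hand-wired sketch for the xor case. The two hidden units and all the weights are chosen by hand for illustration: one hidden unit detects "either input," the other detects "both inputs," and the output unit is excited by the first and inhibited by the second.

```python
def step(total):
    """Threshold unit: fire if the weighted sum is positive."""
    return 1 if total > 0 else 0

def xor_net(x, y):
    # Hidden layer: two intermediate "concepts" computed from the raw inputs.
    h_either = step(1.0 * x + 1.0 * y - 0.5)  # fires if at least one input is on
    h_both = step(1.0 * x + 1.0 * y - 1.5)    # fires only if both inputs are on
    # Output layer: excited by "either", strongly inhibited by "both".
    return step(1.0 * h_either - 2.0 * h_both - 0.5)

outputs = [xor_net(x, y) for x, y in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)  # [0, 1, 1, 0]
```

The hidden unit for "both" plays the role of a poison pill: on its own it says nothing about the answer, but it lets the output unit veto a combination that the simple add-'em-up network could not rule out.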

The challenge in getting these networks to work is how to train them. The problem is with the connections from the input layer to the hidden layer: since the units are hidden from the environment, their guesses cannot be matched against “correct” values supplied by the teacher. But a breakthrough in the 1980s, the error back-propagation learning algorithm, cracked the problem.32 First, the mismatch between each output unit’s guess and the correct answer is used to tweak the weights of the hidden-to-output connections in the top layer, just like in the simple networks. Then the sum of all these errors is propagated backwards to each hidden unit to tweak the input-to-hidden connections in the middle layer. It sounds like it could never work, but with millions of training examples the two layers of connections settle into values that allow the network to sort the sheep from the goats. Just as amazingly, the hidden units can spontaneously discover abstract categories like “fungi” and “roots and tubers,” if that’s what helps them with the classifying. But more often the hidden units don’t stand for anything we have names for. They implement whichever complex formulas get the job done: “a teensy bit of this feature, but not too much of that feature, unless there’s really a lot of this other feature.”
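The two-step procedure can be sketched on the xor problem. Everything specific here is an assumption made for the example: three hidden units, sigmoid ("firing rate") units, a learning rate of 0.5, ten thousand passes through the four examples, and a fixed random seed. How close the network gets depends on those choices, so the sketch reports the error before and after training rather than promising a particular result.

```python
import math
import random

DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]  # xor
N_HIDDEN = 3  # assumed size of the hidden layer

rng = random.Random(0)
# w_ih[j] = [weight from x, weight from y, bias] for hidden unit j
w_ih = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(N_HIDDEN)]
# w_ho = one weight per hidden unit, followed by the output unit's bias
w_ho = [rng.uniform(-1, 1) for _ in range(N_HIDDEN + 1)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x):
    """Return the hidden activations and the output for one input pair."""
    hidden = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_ih]
    output = sigmoid(sum(w_ho[j] * hidden[j] for j in range(N_HIDDEN)) + w_ho[-1])
    return hidden, output

def mean_squared_error():
    return sum((target - forward(x)[1]) ** 2 for x, target in DATA) / len(DATA)

def train(epochs=10000, rate=0.5):
    for _ in range(epochs):
        for x, target in DATA:
            hidden, output = forward(x)
            # Step 1: mismatch at the output unit, scaled by the sigmoid's slope.
            delta_out = (target - output) * output * (1 - output)
            # Step 2: pass that error back through the hidden-to-output weights.
            delta_hidden = [delta_out * w_ho[j] * hidden[j] * (1 - hidden[j])
                            for j in range(N_HIDDEN)]
            # Tweak the hidden-to-output connections.
            for j in range(N_HIDDEN):
                w_ho[j] += rate * delta_out * hidden[j]
            w_ho[-1] += rate * delta_out
            # Tweak the input-to-hidden connections.
            for j in range(N_HIDDEN):
                w_ih[j][0] += rate * delta_hidden[j] * x[0]
                w_ih[j][1] += rate * delta_hidden[j] * x[1]
                w_ih[j][2] += rate * delta_hidden[j]

initial_loss = mean_squared_error()
train()
final_loss = mean_squared_error()
print("error before training:", round(initial_loss, 4))
print("error after training: ", round(final_loss, 4))
```

Note that the hidden units never see a correct answer of their own. Each is adjusted only by its share of the blame for the output unit's error.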

In the second decade of the twenty-first century, computer power skyrocketed with the development of graphics processing units, and data got bigger and bigger as millions of users uploaded text and images to the web. Computer scientists could put multilayer networks on megavitamins, giving them two, fifteen, even a thousand hidden layers, and training them on billions or even trillions of examples. The networks are called deep learning systems because of the number of layers between the input and the output (they’re not deep in the sense of understanding anything). These networks are powering “the great AI awakening” we are living through, which is giving us the first serviceable products for speech and image recognition, question-answering, translation, and other humanlike feats.33

Deep learning networks often outperform GOFAI (good old-fashioned artificial intelligence), which executes logic-like deductions on hand-coded propositions and rules.34 The contrast in the way they work is stark: unlike logical inference, the inner workings of a neural network are inscrutable. Most of the millions of hidden units don’t stand for any coherent concept that we can make sense of, and the computer scientists who train them can’t explain how they arrive at any particular answer. That is why many technology critics fear that as AI systems are entrusted with decisions about the fates of people, they could perpetuate biases that no one can identify and uproot.35 In 2018 Henry Kissinger warned that since deep learning systems don’t work on propositions we can examine and justify, they portend the end of the Enlightenment.36 That is a stretch, but the contrast between logic and neural computation is clear.

Is the human brain a big deep learning network? Certainly not, for many reasons, but the similarities are illuminating. The brain has around a hundred billion neurons connected by a hundred trillion synapses, and by the time we are eighteen we have been absorbing examples from our environments for more than three hundred million waking seconds. So we are prepared to do a lot of pattern-matching and associating, just like these networks. The networks are tailor-made for the fuzzy family resemblance categories that make up so much of our conceptual repertoire. Neural networks thus provide clues about the portion of human cognition that is rational but not, technically speaking, logical. They demystify the inarticulate yet sometimes uncanny mental power we call intuition, instinct, inklings, gut feelings, and the sixth sense.

For all the convenience that Siri and Google Translate bring to our lives, we must not think that neural networks have made logic obsolete. These systems, driven by fuzzy associations and incapable of parsing syntax or consulting rules, can be stunningly stupid.37 If you ask Google for “fast-food restaurants near me that are not McDonald’s,” it will give you a list of all the McDonald’s within a fifty-mile radius. Ask Siri, “Did George Washington use a computer?” and she will direct you to a computer reconstruction of George Washington’s face and the Computing System Services of George Washington University. The vision modules that someday will drive our cars are today apt to confuse road signs with refrigerators, and overturned vehicles with punching bags, fireboats, and bobsleds.

Human rationality is a hybrid system.38 The brain contains pattern associators that soak up family resemblances and aggregate large numbers of statistical clues. But it also contains a logical symbol manipulator that can assemble concepts into propositions and draw out their implications. Call it System 2, or recursive cognition, or rule-based reasoning. Formal logic is a tool that can purify and extend this mode of thinking, freeing it from the bugs that come with being a social and emotional animal.

Because our propositional reasoning frees us from similarity and stereotypes, it enables the highest achievements of human rationality, such as science, morality, and law.39 Though porpoises fit into the family resemblance among fishes, the rules that define membership in Linnaean classes (like “if an animal suckles its young, then it is a mammal”) tell us they are not in fact members. Through chains of categorical reasoning like this, we can be convinced that humans are apes, the sun is a star, and solid objects are mostly empty space. In the social sphere, our pattern-finders easily see the ways in which people differ: some individuals are richer, smarter, stronger, swifter, better-looking, and more like us than others. But when we embrace the proposition that all humans are created equal (“if X is human, then X has rights”), we can sequester these impressions from our legal and moral decision making, and treat all people equally.