Evidence of the test, evaluation, and assessment activities is often critical to the authorizing official in making the risk-based decision concerning the operation of the system under review. As SP 800-53A states: “Building an effective assurance case for security and privacy control effectiveness is a process that involves:

(i) Compiling evidence from a variety of activities conducted during the system development life cycle that the controls employed in the information system are implemented correctly, operating as intended, and producing the desired outcome with respect to meeting the security and privacy requirements of the system and the organization; and

(ii) Presenting this evidence in a manner that decision makers are able to use effectively in making risk-based decisions about the operation or use of the system.

The evidence described above comes from the implementation of the security and privacy controls in the information system and inherited by the system (i.e., common controls) and from the assessments of that implementation. Ideally, the assessor is building on previously developed materials that started with the specification of the organization’s information security and privacy needs and was further developed during the design, development, and implementation of the information system. These materials, developed while implementing security and privacy throughout the life cycle of the information system, provide the initial evidence for an assurance case.

Assessors obtain the required evidence during the assessment process to allow the appropriate organizational officials to make objective determinations about the effectiveness of the security and privacy controls and the overall security and privacy state of the information system. The assessment evidence needed to make such determinations can be obtained from a variety of sources including, for example, information technology product and system assessments and, in the case of privacy assessments, privacy compliance documentation such as Privacy Impact Assessments and Privacy Act System of Record Notices. Product assessments (also known as product testing, evaluation, and validation) are typically conducted by independent, third-party testing organizations. These assessments examine the security and privacy functions of products and established configuration settings. Assessments can be conducted to demonstrate compliance to industry, national, or international information security standards, privacy standards embodied in applicable laws and policies, and developer/vendor claims. Since many information technology products are assessed by commercial testing organizations and then subsequently deployed in millions of information systems, these types of assessments can be carried out at a greater level of depth and provide deeper insights into the security and privacy capabilities of the particular products.”1

To gather all the evidence needed to show that the system functions properly and meets its critical security objectives, the assessor often obtains the artifacts and evidence produced by the product review cycle during acquisition of the security component.

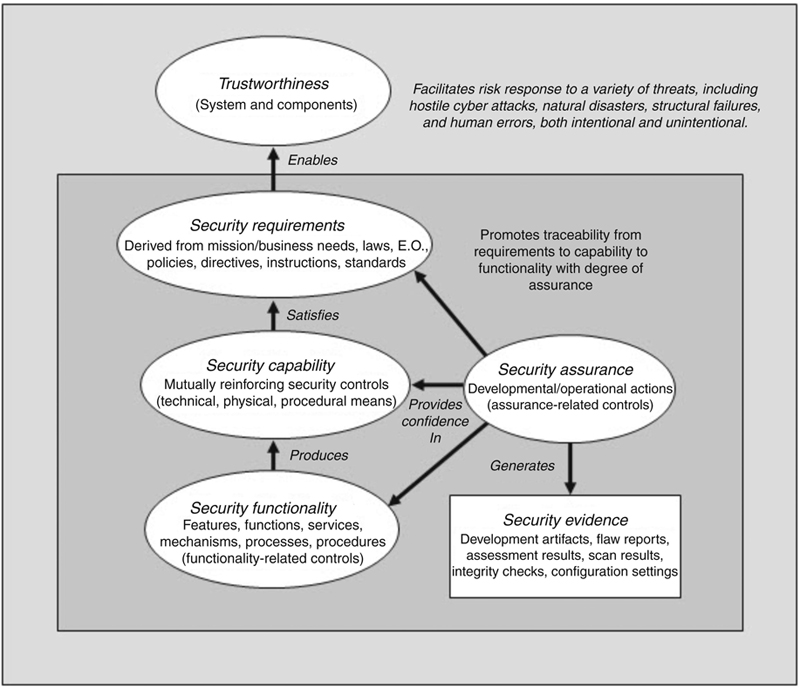

Organizations obtain security assurance by the actions taken by information system developers, implementers, operators, maintainers, and assessors. Actions by individuals and/or groups during the development/operation of information systems produce security evidence that contributes to the assurance, or measures of confidence, in the security functionality needed to deliver the security capability. The depth and coverage of these actions (as described below) also contribute to the efficacy of the evidence and measures of confidence. The evidence produced by developers, implementers, operators, assessors, and maintainers during the system development life cycle (e.g., design/development artifacts, assessment results, warranties, and certificates of evaluation/validation) contributes to the understanding of the security controls implemented by organizations.

The strength of security functionality plays an important part in being able to achieve the needed security capability and subsequently satisfying the security requirements of organizations. The security strength of an information system component (i.e., hardware, software, or firmware) is determined by the degree to which the security functionality implemented within that component is correct, complete, resistant to direct attacks (strength of mechanism), and resistant to bypass or tampering. Information system developers can increase the strength of security functionality by employing the following as part of the hardware/software/firmware development process:

(i) Well-defined security policies and policy models;

(ii) Structured/rigorous design and development techniques; and

(iii) Sound system/security engineering principles.

The artifacts generated by these development activities (e.g., functional specifications, high-level/low-level designs, implementation representations [source code and hardware schematics], the results from static/dynamic testing and code analysis) can provide important evidence that the information systems (including the components that compose those systems) will be more reliable and trustworthy. Security evidence can also be generated from security testing conducted by independent, accredited, third-party assessment organizations (e.g., Common Criteria Testing Laboratories, Cryptographic/Security Testing Laboratories, FedRAMP 3PAOs, and other assessment activities by government and private sector organizations).

In addition to the evidence produced in the development environment, organizations can produce evidence from the operational environment that contributes to the assurance of functionality and ultimately, security capability. Operational evidence includes, for example, flaw reports, records of remediation actions, the results of security incident reporting, and the results of organizational continuous monitoring activities. Such evidence helps to determine the effectiveness of deployed security controls, changes to information systems and environments of operation, and compliance with federal legislation, policies, directives, regulations, and standards. Security evidence, whether obtained from development or operational activities, provides a better understanding of security controls implemented and used by organizations. Together, the actions taken during the system development life cycle by developers, implementers, operators, maintainers, and assessors and the evidence produced as part of those actions, help organizations to determine the extent to which the security functionality within their information systems is implemented correctly, operating as intended, and producing the desired outcome with respect to meeting stated security requirements and enforcing or mediating established security policies—thus providing greater confidence in the security capability.2

With regard to the security evidence produced, the depth and coverage of such evidence can affect the level of assurance in the functionality implemented. Depth and coverage are attributes associated with assessment methods and the generation of security evidence. Assessment methods can be applied to developmental and operational assurance. For developmental assurance, depth is associated with the rigor, level of detail, and formality of the artifacts produced during the design and development of the hardware, software, and firmware components of information systems (e.g., functional specifications, high-level design, low-level design, source code). The level of detail available in development artifacts can affect the type of testing, evaluation, and analysis conducted during the system development life cycle (e.g., black-box testing, gray-box testing, white-box testing, static/dynamic analysis). For operational assurance, the depth attribute addresses the number and types of assurance-related security controls selected and implemented. In contrast, the coverage attribute is associated with the assessment methods employed during development and operations, addressing the scope and breadth of assessment objects included in the assessments (e.g., number/types of tests conducted on source code, number of software modules reviewed, number of network nodes/mobile devices scanned for vulnerabilities, number of individuals interviewed to check basic understanding of contingency responsibilities).3

Types of evidence

It is recognized that organizations can specify, document, and configure their information systems in a variety of ways, and that the content and applicability of existing assessment evidence will vary. This may result in the need to apply a variety of assessment methods to various assessment objects to generate the assessment evidence needed to determine whether the security or privacy controls are effective in their application.4

Assessment, testing, and auditing evidence is the basis on which an auditor or assessor expresses their opinion on the security operations of the firm being assessed. Assessors obtain such evidence from tests that determine how well security controls work (called “compliance tests”) and tests of confidentiality, integrity, and availability details such as completeness and disclosure of information (called “substantive tests”).

Substantive testing provides evidence about the existence, rights and obligations, occurrence, completeness, valuation, measurement, presentation, and disclosure of a particular transaction or security control in action. The assessor can gain the appropriate evidence for the evaluation through many mechanisms, including those described in the following subsections.

Physical Examination/Inspection

Inspection involves examining records or documents, whether internal or external, in paper form, electronic form, or other media, or physically examining an asset. Inspection of records and documents provides audit evidence of varying degrees of reliability, depending on their nature and source and, in the case of internal records and documents, on the effectiveness of the controls over their production. An example of inspection used as a test of controls is inspection of records for evidence of authorization.

• Inspection or count by the auditor of a tangible asset:

• This involves verifying the existence of an asset and the condition of the asset. It is important to record the asset name or model, serial number, or product ID, and compare it to the asset register.

• This differs from examining documentation in that the asset itself has inherent value.
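The inspect-and-compare step above amounts to a small reconciliation. The sketch below is a minimal Python illustration, assuming the asset register is available as a list of records, that the serial number (or product ID) is the matching key, and that the register entries passed in are those for the location being inspected. The `Asset` class and `reconcile_assets` function are hypothetical names, not part of any audit tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str           # asset name or model, as recorded during inspection
    serial_number: str  # serial number or product ID used as the matching key


def reconcile_assets(inspected, register_entries):
    """Compare assets found during physical inspection with the asset register.

    inspected:        assets the auditor physically located and examined
    register_entries: register entries for the location being inspected
    Returns (not_found, not_registered), each a sorted list of serial numbers.
    """
    found = {a.serial_number for a in inspected}
    registered = {a.serial_number for a in register_entries}
    not_found = sorted(registered - found)       # existence not confirmed
    not_registered = sorted(found - registered)  # asset exists but is not recorded
    return not_found, not_registered


register = [Asset("Laptop", "SN-001"), Asset("Router", "SN-002"), Asset("Server", "SN-003")]
inspected = [Asset("Laptop", "SN-001"), Asset("Server", "SN-003"), Asset("Switch", "SN-009")]
not_found, not_registered = reconcile_assets(inspected, register)
print(not_found)       # ['SN-002']
print(not_registered)  # ['SN-009']
```

Both directions matter: a register entry that cannot be located fails the existence check, while an asset found on site but missing from the register points to a weakness in how the register is maintained.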

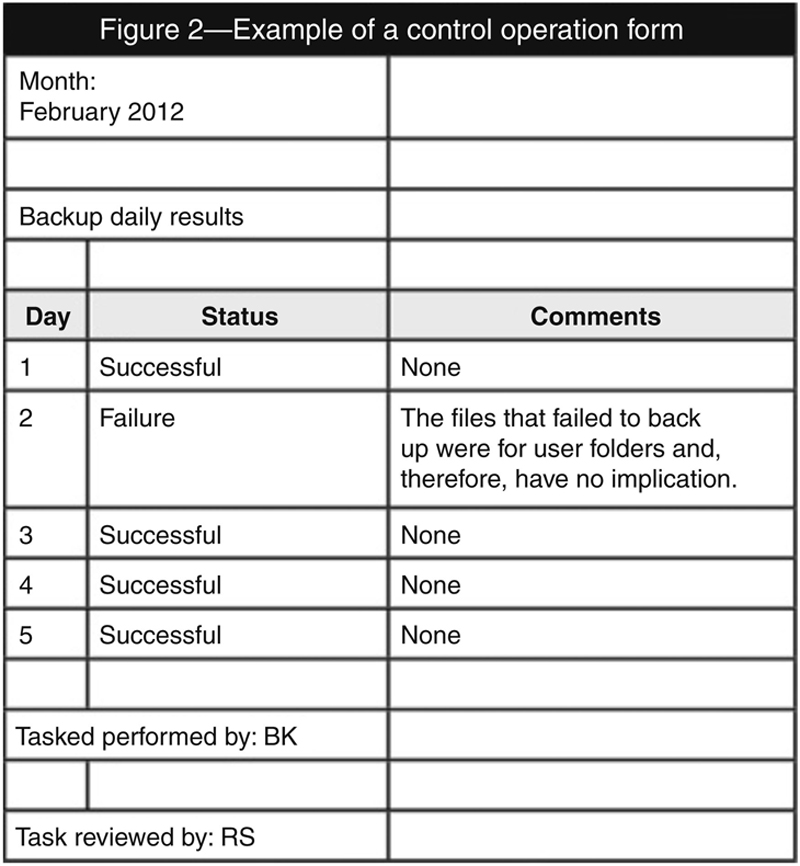

When inspecting records, the assessor must keep in mind that their reliability depends on the source: information obtained directly from the system is more reliable than information obtained from the system and then customized by the auditee. For example, many system administrators purge backup status results every three months because of system limitations; the intention is to free up server space. They usually record the backup results in a spreadsheet that they retain to show that the control has been operating throughout the year. An example of such a form is depicted in figure 2.

The information in figure 2 is less reliable than it would be if the system administrator had saved the system-generated results in PDF format in a folder and used the spreadsheet only for monitoring purposes. Retaining those results would enable the assessor to confirm the entries recorded in figure 2. [1]

Confirmation

A confirmation response represents a particular form of audit evidence obtained by the auditor from a third party in accordance with Public Company Accounting Oversight Board (PCAOB) standards (used in financial and corporate environments). The assessor has the organization request that the third party respond directly to the assessor.

Confirmation, by definition, is the receipt of a written or oral response from an independent third party verifying information requested by the assessor. Because of the independence of the third party, confirmations are a highly desirable, though costly, type of evidence. Some audit standards describe two types of confirmations.

A positive confirmation asks the respondent to provide an answer in all circumstances, while a negative confirmation asks for a response only if the information is incorrect. As you might predict, negative confirmations are not as competent as positive confirmations, because a missing reply cannot be distinguished from a request that was ignored or never received. Note that SAS 67, The Confirmation Process, requires confirmation of a sample of accounts receivable due to the materiality of receivables for most companies. Note also the provision that confirmations must be under the control of the auditor for maximum reliability of the evidence.
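The difference in competence becomes clear when each possible outcome is spelled out. The following Python sketch is illustrative only: `ConfirmationType` and `evaluate_confirmation` are hypothetical names, and responses are simplified to "agree", "disagree", or no reply (`None`).

```python
from enum import Enum
from typing import Optional


class ConfirmationType(Enum):
    POSITIVE = "positive"  # respondent asked to reply in all circumstances
    NEGATIVE = "negative"  # respondent asked to reply only if the information is incorrect


def evaluate_confirmation(kind: ConfirmationType, response: Optional[str]) -> str:
    """Classify the outcome of one confirmation request."""
    if response == "disagree":
        return "exception"  # a reported difference must be investigated
    if response == "agree":
        return "confirmed"
    if response is None:
        if kind is ConfirmationType.POSITIVE:
            # Silence provides no evidence: send a follow-up or use alternative procedures.
            return "unresolved"
        # Silence is taken as agreement, which is why this evidence is weaker.
        return "presumed correct"
    raise ValueError(f"unexpected response: {response!r}")


for kind in ConfirmationType:
    print(kind.value, "/ no reply ->", evaluate_confirmation(kind, None))
# positive / no reply -> unresolved
# negative / no reply -> presumed correct
```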

Confirmation is often viewed as audit evidence that is from an external independent source and is more credible than evidence from an internal source. Most financial auditors confirm balances (e.g., creditors’ balances and debtors’ balances) by sending out confirmation letters to external independent sources such as banks and vendors.

However, in the majority of IT audits, audit evidence is derived from the system configurations. Configurations obtained by an auditor through observation of the system or via a reliable audit software tool are more reliable than data received from the auditee.

Documentation

Documentation consists of the organization’s business documents used to support security and accounting events. The strength of documentation is that it is prevalent and available at a low cost. Documents can be internal or externally generated. Internal documents provide less reliable evidence than external ones, particularly if the client’s internal control is suspect. Documents that are external and have been prepared by qualified individuals such as attorneys or insurance brokers provide additional reliability. The use of documentation in support of a client’s transactions is called vouching.

Documentation review criteria include three areas of focus:

1. Review is used for the “generalized” level of rigor, that is, a high-level examination looking for required content and for any obvious errors, omissions, or inconsistencies.

2. Study is used for the “focused” level of rigor, that is, an examination that includes the intent of “review” and adds a more in-depth examination for greater evidence to support a determination of whether the document has the required content and is free of errors, omissions, and inconsistencies.

3. Analyze is used for the “detailed” level of rigor, that is, an examination that includes the intent of both “review” and “study,” adding a thorough and detailed analysis for significant grounds for confidence in the determination of whether required content is present and the document is correct, complete, and consistent.

Analytical Procedures

Analytical procedures consist of evaluations of financial information made by a study of plausible relationships among both financial and nonfinancial data. They also encompass the investigation of significant differences from expected amounts. Recalculation consists of checking the mathematical accuracy of documents or records. It may be performed manually or electronically.

Analytical procedures are comparisons of account balances and relationships as a check on reasonableness. They are required during the planning and completion phases of all audits and may be used for the following purposes:

1. To better understand the organizational business and mission objectives

2. To assess the organization’s ability to continue as a going concern

3. To indicate the possibility of misstatements (“unusual fluctuations”) in the organization’s documented statements

4. To reduce the need for detailed assessment testing
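The "unusual fluctuations" check in item 3 is, at its simplest, a period-over-period comparison. This Python sketch assumes balances are supplied as dictionaries keyed by account name and uses a hypothetical 10 percent threshold; in practice the threshold would follow from the assessor's materiality judgment. `flag_fluctuations` is an illustrative name.

```python
def flag_fluctuations(prior, current, threshold=0.10):
    """Return accounts whose relative change from the prior period exceeds `threshold`.

    prior, current: dicts mapping account name -> balance.
    threshold: relative change treated as "unusual" (0.10 = 10 percent); a
    hypothetical placeholder to be set from materiality.
    Accounts with no usable prior balance are flagged with None for manual review.
    """
    flagged = {}
    for account, current_balance in current.items():
        prior_balance = prior.get(account)
        if not prior_balance:  # missing or zero: no meaningful baseline
            flagged[account] = None
            continue
        change = (current_balance - prior_balance) / abs(prior_balance)
        if abs(change) > threshold:
            flagged[account] = round(change, 4)
    return flagged


prior = {"Revenue": 1_000_000, "Payroll": 400_000, "IT spend": 50_000}
current = {"Revenue": 1_050_000, "Payroll": 410_000, "IT spend": 80_000, "Consulting": 25_000}
print(flag_fluctuations(prior, current))  # {'IT spend': 0.6, 'Consulting': None}
```

A flagged account is not a finding in itself; it marks where the assessor should seek an explanation and, if none is satisfactory, perform more detailed testing.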

One of the primary ways that assessors and testers verify large system functioning and activities is through the use of sampling.

Sampling

Sampling is an audit procedure that tests less than 100 percent of the population. There are different types of sampling methods that an IS auditor can apply to gather sufficient evidence to address the audit objectives and the level of risk identified. Sampling methods can be statistical or nonstatistical. Statistical sampling involves deriving the sample quantitatively. The statistical methods commonly used are random sampling and systematic sampling. Nonstatistical sampling involves deriving the sample qualitatively. Commonly used nonstatistical methods are haphazard and judgmental sampling.

The sample size applied depends on the type of control being tested, the frequency of the control, and the effectiveness of the design and implementation of the control.
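The two statistical methods named above can be sketched in a few lines of Python using the standard `random` module. This is a minimal illustration, assuming the population is an indexable list (for example, invoice numbers) and that a fixed seed is recorded in the working papers so the selection can be reproduced; `random_sample` and `systematic_sample` are hypothetical helper names.

```python
import random


def random_sample(population, n, seed=None):
    """Simple random sampling: every item has an equal chance of selection."""
    rng = random.Random(seed)  # a recorded seed makes the selection reproducible
    return rng.sample(population, n)


def systematic_sample(population, n, seed=None):
    """Systematic sampling: choose a random start, then take every k-th item."""
    if not 0 < n <= len(population):
        raise ValueError("sample size must be between 1 and the population size")
    k = len(population) // n                  # sampling interval
    start = random.Random(seed).randrange(k)  # random start within the first interval
    return [population[start + i * k] for i in range(n)]


invoices = [f"INV-{n:04d}" for n in range(1, 1001)]  # hypothetical population of 1,000 invoices
print(random_sample(invoices, 5, seed=2024))
print(systematic_sample(invoices, 5, seed=2024))     # items 200 invoices apart
```

Systematic selection is easy to document, but it can yield a biased sample if the population contains a pattern that repeats at the same interval; simple random selection avoids that risk.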

Type of Controls and Sample Size

The following are the two types of controls:

• Automated controls—Automated controls generally require one sample. It is assumed that if a program can execute a task—for example, successfully calculate a car allowance due based on a base percentage of an employee’s salary—and the program coding has not been changed, the system should apply the same formula to the rest of the population. Therefore, testing one instance is sufficient for the rest of the population. The same is true for the reverse; if the system incorrectly calculates the allowance, the error is extrapolated to the rest of the population.

• Manual controls—Depending on which sampling method an IS auditor uses to calculate the sample size, the following factors should be taken into consideration to determine the sample size:

1. Reliance placed on the control

2. The risk associated with the control

3. The frequency of the control occurrence
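The decision logic above can be summarized as a function. This Python sketch is hypothetical throughout: the base sizes in `MANUAL_BASE_SAMPLE` are illustrative placeholders, not values from this handbook or any standard, and doubling the base for high reliance or high risk is an arbitrary assumption used only to show where those factors enter the calculation.

```python
# Hypothetical minimum sample sizes for manual controls, keyed by how often the
# control operates. Illustrative placeholders only; substitute the values from
# the organization's own audit methodology.
MANUAL_BASE_SAMPLE = {
    "annual": 1,
    "quarterly": 2,
    "monthly": 2,
    "weekly": 5,
    "daily": 20,
}


def sample_size(control_type, frequency=None, high_reliance=False, high_risk=False):
    """Return a sample size for testing a single control.

    Automated controls need one sample, assuming the program code has not
    changed during the period, so the same logic applies to every transaction.
    Manual controls start from a base size set by control frequency and are
    doubled (an arbitrary assumption) for high reliance or high risk.
    """
    if control_type == "automated":
        return 1
    if control_type != "manual":
        raise ValueError(f"unknown control type: {control_type!r}")
    base = MANUAL_BASE_SAMPLE[frequency]
    return base * 2 if (high_reliance or high_risk) else base


print(sample_size("automated"))                         # 1
print(sample_size("manual", "daily"))                   # 20
print(sample_size("manual", "weekly", high_risk=True))  # 10
```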

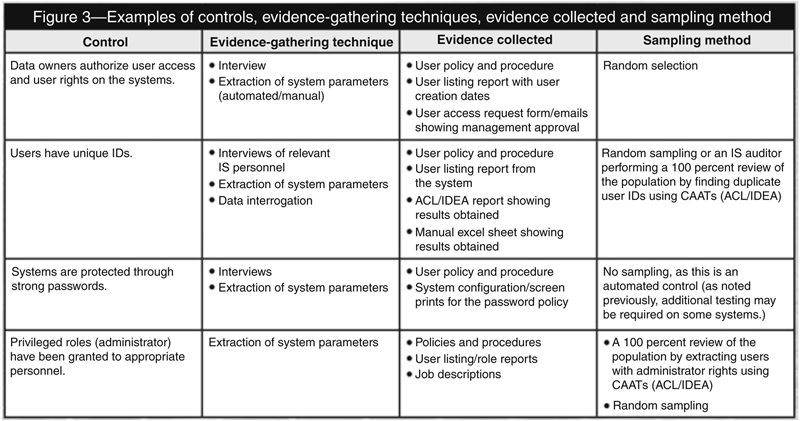

Examples of manual controls include review of audit log monitoring, review of user authorization access forms, and review of daily IT procedures, server monitoring procedure and help-desk functions. Figure 3 provides examples of IT controls, the technique that can be used to gather evidence and the sampling method that can be used.

Quality evidence collected during the audit process enhances the overall quality of the work performed and significantly reduces audit risk. Failure to collect quality evidence may result in the auditor or company facing litigation, loss of reputation and loss of clientele. It is important to ensure that the audit evidence obtained from the auditee is of high quality and supports the understanding of the IT control environment. [1]

Here are the types of evidence that are typically sampled:

• Tangible assets: If, for example, a business states that it owns 300 company cars, you do not hunt down all 300 cars; you select a sample of the cars to track down to verify their existence.

• Records or documents: Records or documents are also known as source materials, and they are the materials on which the numbers in financial statements are based. For example, the amount of sales that a financial statement represents is derived from the data on customer invoices, which in this case is your sampling unit.

• Reperformance: This term refers to independently redoing work the client has already performed. For example, company policy dictates that no employee is paid unless he or she has turned in a timesheet. The client states that this rule is applied to 100% of all paychecks. You can test this client assertion by taking a sample of payroll checks and matching them to the timesheets.

• Recalculation: This term refers to checking the mathematical accuracy of figures and totals on a document. For example, an invoice selected in the sample may have three columns: cost per item, items ordered, and total. You perform recalculation when you verify the figures on the invoice by multiplying cost per item by items ordered and making sure the result equals the total.

• Confirmation: This term refers to getting account balance verification from unrelated third parties. A good example is sending letters to customers or vendors of the business to verify accounts receivable or accounts payable balances.
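Recalculation and reperformance, as described in the list above, lend themselves to simple automated checks. The Python sketch below assumes invoice lines arrive as (cost per item, items ordered, stated total) tuples with money amounts as strings, and payroll data as plain tuples; both function names are hypothetical. It uses `decimal.Decimal` so that currency arithmetic is exact rather than subject to binary floating-point rounding.

```python
from decimal import Decimal


def recalculate_invoice(lines):
    """Recalculation: check that cost per item x items ordered equals the stated total.

    lines: list of (cost_per_item, items_ordered, stated_total) tuples, with the
    money amounts given as strings so Decimal parses them exactly.
    Returns the positions of lines whose stated total does not recompute.
    """
    return [i for i, (cost, qty, total) in enumerate(lines)
            if Decimal(cost) * qty != Decimal(total)]


def reperform_timesheet_control(paychecks, timesheets):
    """Reperformance: confirm each sampled paycheck is backed by a timesheet.

    paychecks:  list of (check_number, employee_id, pay_period) for the sample
    timesheets: set of (employee_id, pay_period) pairs that were turned in
    Returns the check numbers issued without a matching timesheet.
    """
    return [check for check, employee, period in paychecks
            if (employee, period) not in timesheets]


invoice = [("19.99", 3, "59.97"), ("5.00", 10, "55.00")]
print(recalculate_invoice(invoice))  # [1]

sample = [(1001, "E01", "2024-03"), (1002, "E02", "2024-03")]
submitted = {("E01", "2024-03")}
print(reperform_timesheet_control(sample, submitted))  # [1002]
```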

Interviews of the Users/Developers/Customers

Questions of users, key personnel, system owners, and other relevant personnel in the organization are often used as a starting point to determine the proper use of and implementation of controls. Interviews are the process of holding discussions with individuals or groups of individuals within an organization to, once again, facilitate assessor understanding, achieve clarification, or obtain evidence.

Typically, the interviews are conducted with agency heads, chief information officers, senior agency information security officers, authorizing officials, information owners, information system and mission owners, information system security officers, information system security managers, personnel officers, human resource managers, facilities managers, training officers, information system operators, network and system administrators, site managers, physical security officers, and users.

Often, questionnaires are used to gather data by allowing the IS personnel to answer predetermined questions. This technique is usually used to collect data during the planning phase of the audit. Information gathered through this process has to be corroborated through additional testing. Inquiry alone is regarded as the least credible audit evidence. This is especially true if the source of the information is the auditee who performs or supervises the function about which one is inquiring. If inquiry is the only way to get the evidence, it is advisable to corroborate the inquiry with an independent source. For example, if one is auditing proprietary software, and the IT officer has no access to the source code and cannot demonstrate from the system configurations that no upgrades were carried out in the year under review, one can corroborate the inquiry with the users of the applications. Although inquiry is the least credible evidence when carrying out control adequacy testing, it is deemed sufficient during the planning stage.

SP 800-53A provides guidance on how in-depth the interviews should be, as follows:

The depth attribute addresses the rigor of and level of detail in the interview process. There are three possible values for the depth attribute:

(i) Basic;

Basic interview: Interview that consists of broad-based, high-level discussions with individuals or groups of individuals. This type of interview is conducted using a set of generalized, high-level questions. Basic interviews provide a level of understanding of the security and privacy controls necessary for determining whether the controls are implemented and free of obvious errors.

(ii) Focused; and

Focused interview: Interview that consists of broad-based, high-level discussions and more in-depth discussions in specific areas with individuals or groups of individuals. This type of interview is conducted using a set of generalized, high-level questions and more in-depth questions in specific areas where responses indicate a need for more in-depth investigation. Focused interviews provide a level of understanding of the security and privacy controls necessary for determining whether the controls are implemented and free of obvious errors and whether there are increased grounds for confidence that the controls are implemented correctly and operating as intended.

(iii) Comprehensive.

Comprehensive interview: Interview that consists of broad-based, high-level discussions and more in-depth, probing discussions in specific areas with individuals or groups of individuals. This type of interview is conducted using a set of generalized, high-level questions and more in-depth, probing questions in specific areas where responses indicate a need for more in-depth investigation. Comprehensive interviews provide a level of understanding of the security and privacy controls necessary for determining whether the controls are implemented and free of obvious errors and whether there are further increased grounds for confidence that the controls are implemented correctly and operating as intended on an ongoing and consistent basis, and that there is support for continuous improvement in the effectiveness of the controls.5

SP 800-53A also provides guidance on how much the interviews should cover, as follows:

The coverage attribute addresses the scope or breadth of the interview process and includes the types of individuals to be interviewed (by organizational role and associated responsibility), the number of individuals to be interviewed (by type), and specific individuals to be interviewed. The organization, considering a variety of factors (e.g., available resources, importance of the assessment, the organization’s overall assessment goals and objectives), confers with assessors and provides direction on the type, number, and specific individuals to be interviewed for the particular attribute value described.

There are three possible values for the coverage attribute:

(i) Basic;

Basic interview: Interview that uses a representative sample of individuals in key organizational roles to provide a level of coverage necessary for determining whether the security and privacy controls are implemented and free of obvious errors.

(ii) Focused; and

Focused interview: Interview that uses a representative sample of individuals in key organizational roles and other specific individuals deemed particularly important to achieving the assessment objective to provide a level of coverage necessary for determining whether the security and privacy controls are implemented and free of obvious errors and whether there are increased grounds for confidence that the controls are implemented correctly and operating as intended.

(iii) Comprehensive.

Comprehensive interview: Interview that uses a sufficiently large sample of individuals in key organizational roles and other specific individuals deemed particularly important to achieving the assessment objective to provide a level of coverage necessary for determining whether the security and privacy controls are implemented and free of obvious errors and whether there are further increased grounds for confidence that the controls are implemented correctly and operating as intended on an ongoing and consistent basis, and that there is support for continuous improvement in the effectiveness of the controls.5

Reuse of Previous Work

Reuse of assessment results from previously accepted or approved assessments is considered in the body of evidence for determining overall security or privacy control effectiveness. Previously accepted or approved assessments include:

(i) Those assessments of common controls that are managed by the organization and support multiple information systems; or

(ii) Assessments of security or privacy controls that are reviewed as part of the control implementation.

The acceptability of using previous assessment results in a security control assessment or privacy control assessment is coordinated with and approved by the users of the assessment results. It is essential that information system owners and common control providers collaborate with authorizing officials and other appropriate organizational officials in determining the acceptability of using previous assessment results. When considering the reuse of previous assessment results and the value of those results to the current assessment, assessors determine:

(i) The credibility of the assessment evidence;

(ii) The appropriateness of previous analysis; and

(iii) The applicability of the assessment evidence to current information system operating conditions.

If previous assessment results are reused, the date of the original assessment and type of assessment are documented in the security assessment plan or privacy assessment plan and security assessment report or privacy assessment report.

The following items are considered in validating previous assessment results for reuse:

• Changing conditions associated with security controls and privacy controls over time.

Security and privacy controls that were deemed effective during previous assessments may have become ineffective due to changing conditions within the information system or its environment of operation. Assessment results that were found to be previously acceptable may no longer provide credible evidence for the determination of security or privacy control effectiveness, and therefore, a reassessment would be required. Applying previous assessment results to a current assessment necessitates the identification of any changes that have occurred since the previous assessment and the impact of these changes on the previous results.

• Amount of time that has transpired since previous assessments.

In general, as the time period between current and previous assessments increases, the credibility and utility of the previous assessment results decrease. This is primarily due to the fact that the information system or the environment in which the information system operates is more likely to change with the passage of time, possibly invalidating the original conditions or assumptions on which the previous assessment was based.

• Degree of independence of previous assessments.

Assessor independence can be a critical factor in certain types of assessments. The degree of independence required from assessment to assessment should be consistent. For example, it is not appropriate to reuse results from a previous self-assessment where no assessor independence was required, in a current assessment requiring a greater degree of independence.6
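The three validation considerations above can be expressed as a screening checklist. This is a minimal Python sketch under stated assumptions: the independence labels, the `PriorAssessment` record, and the 365-day `max_age_days` threshold are all hypothetical, since the guidance says only that credibility decreases as time passes. An empty result does not approve reuse; reuse is still coordinated with and approved by the users of the assessment results.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical independence levels, ordered from least to most independent.
INDEPENDENCE_LEVELS = ["self-assessment", "internal independent", "third party"]


@dataclass
class PriorAssessment:
    assessed_on: date
    independence: str         # one of INDEPENDENCE_LEVELS
    changes_identified: bool  # relevant system or environment changes since then?


def reuse_concerns(prior, today, required_independence, max_age_days=365):
    """List reasons why a previous assessment result may not be credible for reuse."""
    concerns = []
    if prior.changes_identified:
        concerns.append("changes since the previous assessment; evaluate their impact")
    if (today - prior.assessed_on).days > max_age_days:
        concerns.append("time elapsed exceeds the organization's threshold")
    if (INDEPENDENCE_LEVELS.index(prior.independence)
            < INDEPENDENCE_LEVELS.index(required_independence)):
        concerns.append("previous assessment was less independent than now required")
    return concerns


old_self_check = PriorAssessment(date(2023, 1, 15), "self-assessment", False)
for concern in reuse_concerns(old_self_check, date(2024, 6, 1), "third party"):
    print("-", concern)
```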

Automatic Test Results

Scanners, integrity checkers, and automated test environments all produce outputs that assessors use. Scanning reviews typically involve searching data for large or unusual items that may indicate errors. For example, if there is a maximum or minimum loan amount, one can scan through the loan book for amounts outside the stated range. Configuration compliance checkers review system configuration and user account details through the use of manual or utility tools/scripts, which are available freely online, developed in-house, or obtained off the shelf. The available software includes, but is not limited to, Microsoft Baseline Security Analyzer (free), DumpSec (free), IDEA examiner, ACL CaseWare, and in-house-developed scripts. Alternatively, the assessor can read the manuals for the system being audited for guidance on how to retrieve system configurations and user accounts manually. For example, to list the accounts that have administrator access on a Windows Server 2003 domain, the IS auditor would follow this procedure: Start → Administrative Tools → Active Directory Users and Computers → Builtin → select Administrators → right-click → select Properties → select the Members tab.
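The loan-book example above is a range scan, and the search for large items is a top-N selection. Below is a minimal Python sketch, assuming records are dictionaries; the field names, lending limits, and function names are hypothetical.

```python
import heapq


def scan_out_of_range(records, field, minimum, maximum):
    """Scanning review: records whose `field` value lies outside [minimum, maximum]."""
    return [r for r in records if not (minimum <= r[field] <= maximum)]


def largest_items(records, field, n=10):
    """Scanning review: the n records with the largest `field` values, for closer review."""
    return heapq.nlargest(n, records, key=lambda r: r[field])


# Hypothetical loan book and lending limits, for illustration only.
loan_book = [
    {"loan_id": "L-1", "amount": 500},
    {"loan_id": "L-2", "amount": 25_000},
    {"loan_id": "L-3", "amount": 75_000},
]
print([r["loan_id"] for r in scan_out_of_range(loan_book, "amount", 1_000, 50_000)])  # ['L-1', 'L-3']
print([r["loan_id"] for r in largest_items(loan_book, "amount", n=2)])  # ['L-3', 'L-2']
```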

Automated testing tools are computer programs that run in a Windows environment, which can be used to test evidence that is in a machine-readable format. Additionally, there is software available that can be used to manage the assessment documentation and network sharing of files among the assessment team.

A common technique used during assessments is called data interrogation; this is a process of analyzing data, usually through the use of Computer-Aided Audit Tools (CAATs). Generalized audit software can be embedded within an application to review transactions as they are being processed, and exception reports showing variances or anomalies are produced and used for further audit investigations. The most commonly used CAAT method involves downloading data from an application and analyzing it with software such as ACL and IDEA. Some of the tests include journal testing, application input and output integrity checks (e.g., duplicate numbers), gaps in invoice/purchase order number sequences, and summarization of vendors by amounts paid.
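Three of the data interrogation tests listed above (duplicate numbers, sequence gaps, and summarization of vendors by amount paid) can be sketched with Python's standard library alone. This is illustrative only and does not reflect how ACL or IDEA implement these tests; document numbers are assumed to be integers and payments to be (vendor, amount) pairs, and all function names are hypothetical.

```python
from collections import Counter, defaultdict


def find_duplicates(numbers):
    """Integrity check: document numbers that occur more than once."""
    return sorted(n for n, count in Counter(numbers).items() if count > 1)


def find_gaps(numbers):
    """Gap test: numbers missing from what should be a continuous sequence."""
    present = set(numbers)
    if not present:
        return []
    return [n for n in range(min(present), max(present) + 1) if n not in present]


def summarize_by_vendor(payments):
    """Summarization: total paid per vendor, largest first."""
    totals = defaultdict(int)
    for vendor, amount in payments:
        totals[vendor] += amount
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


invoice_numbers = [1001, 1002, 1002, 1004, 1005, 1007]
print(find_duplicates(invoice_numbers))  # [1002]
print(find_gaps(invoice_numbers))        # [1003, 1006]
print(summarize_by_vendor([("Acme", 1200), ("Globex", 300), ("Acme", 800)]))
# [('Acme', 2000), ('Globex', 300)]
```

Each function produces an exception list rather than a conclusion: a duplicate or gap may have a legitimate explanation (a voided invoice, for example), which the assessor then follows up.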

I cover automated testing in detail in other portions of this handbook, so I do not expand on it further here.

Observation

Observation consists of looking at a process or procedure being performed by others, for example, the auditor’s observation of inventory counting by the company’s personnel or the performance of control activities. Observation can provide audit evidence about the performance of a process or procedure, but the evidence is limited to the point in time at which the observation takes place and also is limited by the fact that the act of being observed may affect how the process or procedure is performed.

Ideally, observation should be carried out by two assessors, so that each can corroborate what the other observed and management cannot easily dispute the findings. Observation is also key to verifying segregation of duties. Where possible, the assessor should spend some time with the organization's administrators while they work. This gives the assessor the opportunity to see what actually happens, not what should happen.

SP 800-53A defines three methods for conducting detailed observations while examining security controls as follows:

1. Observe is used for the “generalized” level of rigor, that is, watching the execution of an activity or process or looking directly at a mechanism (as opposed to reading documentation produced by someone other than the assessor about that mechanism) for the purpose of seeing whether the activity or mechanism appears to operate as intended (or in the case of a mechanism, perhaps is configured as intended) and whether there are any obvious errors, omissions, or inconsistencies in the operation or configuration.

2. Inspect is used for the “focused” level of rigor, that is, adding to the watching associated with “observe” an active investigation to gain further grounds for confidence in the determination of whether the activity or mechanism is operating as intended and is free of errors, omissions, or inconsistencies.

3. Analyze, while not currently used in the assessment cases for activities and mechanisms, is available for use for the “detailed” level of rigor, that is, adding to the watching and investigation of “observe” and “inspect” a thorough and detailed analysis of the information to develop significant grounds for confidence in the determination as to whether the activity or mechanism is operating as intended and is free of errors, omissions, or inconsistencies. Analysis achieves this both by leading to further observations and inspections and by a greater understanding of the information obtained from the examination.

Documentation requirements

How assessment evidence is documented is usually driven by what the authorizing official needs in order to make risk-based decisions about the system under review. Multiple types of evidence documentation are produced during the assessment process, as well as the formal Assessment Artifact documents. Each piece of documentation requires the assessor to identify it, correlate it to the assessment plan step it relates to, and determine how it supports the findings and recommendations in the Security Assessment Report (SAR). The SAR is the primary document resulting from the assessment; we discuss it and its supporting artifacts in the next chapter.
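The correlation described above (evidence to assessment plan step, evidence to SAR finding) can be kept as a simple traceability register and checked for gaps. This is a minimal sketch under stated assumptions: the `EvidenceItem` record, the `traceability_gaps` function, and the plan step and finding identifiers are hypothetical and do not represent a format prescribed by SP 800-53A.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EvidenceItem:
    evidence_id: str
    description: str
    plan_step: str                                              # assessment plan step it was collected for
    supports_findings: list[str] = field(default_factory=list)  # SAR finding IDs it supports


def traceability_gaps(evidence, plan_steps, findings):
    """Report evidence, plan steps, and findings that are not linked to each other."""
    known_steps = set(plan_steps)
    covered_steps = {item.plan_step for item in evidence}
    supported = {f for item in evidence for f in item.supports_findings}
    return {
        "unlinked_evidence": [i.evidence_id for i in evidence if i.plan_step not in known_steps],
        "steps_without_evidence": [s for s in plan_steps if s not in covered_steps],
        "unsupported_findings": [f for f in findings if f not in supported],
    }


# Hypothetical register for illustration only.
plan_steps = ["AC-2.1", "AC-2.2", "AU-6.1"]
findings = ["F-01", "F-02"]
evidence = [
    EvidenceItem("E-1", "Screenshot of Administrators group members", "AC-2.1", ["F-01"]),
    EvidenceItem("E-2", "Account review procedure", "AC-2.2"),
    EvidenceItem("E-3", "Baseline configuration export", "CM-6.1", ["F-01"]),
]

if __name__ == "__main__":
    for category, items in traceability_gaps(evidence, plan_steps, findings).items():
        print(f"{category}: {items}")
```

In this sample, E-3 points at a plan step that is not in the plan, AU-6.1 has no evidence, and finding F-02 has nothing supporting it; each would need to be resolved before the SAR is finalized.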

Documentation is the principal record of the testing and assessment procedures applied, the evidence obtained, and the conclusions reached by the assessor. Evidence is often documented in what are known as audit working papers. Audit working papers achieve the following objectives:

• Aid in the planning, performance, and review of audit work

• Provide the principal support for audit report and conclusions

• Facilitate third-party/supervisory reviews

Using work papers, a common auditing methodology, provides the following advantages:

• Provides a basis for evaluating the internal audit activity’s quality control program

• Documents whether engagement objectives were achieved

• Supports the accuracy and completeness of the work performed

Work papers are typically organized into two types of files:

1. Permanent file: Information that is relevant for multiple years on recurring engagements

2. Current file: Information relevant for a given assessment for a particular assessment period

Key characteristics of work papers are as follows:

1. Complete: Each work paper should be completely self-standing and self-explanatory. All questions must be answered; all points raised by the reviewer must be cleared and a logical, well-thought-out conclusion reached for each assessment segment.

2. Concise: Audit work papers and items included on each work paper should be relevant to meeting the applicable assessment objective. Work papers must be confined to those that serve a useful purpose.

3. Accurate: High-quality work papers include statements and computations that are accurate and technically correct.

4. Organized: Work papers should have a logical system of numbering and a reader-friendly layout so a technically competent person unfamiliar with the project could understand the purpose, procedures performed, and results.

Work papers should include the following key elements:

• Name of assessment area.

• Source: The name and title of the individual providing the documentation should be recorded to facilitate future follow-up questions or assessment.

• Scope: The nature, timing, and extent of procedures performed should be included on each work paper for completeness.

• Reference: A logical work paper number cross-referenced to assessment plan steps and issues should be included.

• Sign-off: The preparer’s signature provides evidence of completion and accountability, which is an essential piece of third-party quality review.

• Exceptions: Assessment exceptions should be documented and explained clearly on each work paper, using logical numbering that cross-references other work papers.
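The key elements listed above lend themselves to a simple completeness check before supervisory review. The sketch below is illustrative only: the `WorkPaper` fields, the reference numbering pattern, and the sample data are hypothetical assumptions, not a standard work paper format.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Hypothetical numbering scheme: a section letter, then dotted numbers, e.g. "C-2.1".
REFERENCE_PATTERN = re.compile(r"^[A-Z]{1,3}-\d+(\.\d+)*$")


@dataclass
class WorkPaper:
    reference: str                  # work paper number
    assessment_area: str
    source_name: str                # who provided the documentation
    source_title: str
    scope: str                      # nature, timing, and extent of procedures
    plan_steps: list[str]           # assessment plan steps this paper supports
    preparer_signoff: str | None = None
    # exception ID -> (explanation, cross-referenced work paper number)
    exceptions: dict[str, tuple[str, str]] = field(default_factory=dict)


def review_work_paper(wp: WorkPaper, known_references: set[str]) -> list[str]:
    """Return reviewer notes for any missing or inconsistent key element."""
    notes = []
    required = {
        "assessment area": wp.assessment_area,
        "source name": wp.source_name,
        "source title": wp.source_title,
        "scope": wp.scope,
    }
    for label, value in required.items():
        if not value.strip():
            notes.append(f"missing {label}")
    if not REFERENCE_PATTERN.match(wp.reference):
        notes.append(f"reference {wp.reference!r} does not follow the numbering scheme")
    if not wp.plan_steps:
        notes.append("not cross-referenced to any assessment plan step")
    if not wp.preparer_signoff:
        notes.append("missing preparer sign-off")
    for exc_id, (explanation, cross_ref) in wp.exceptions.items():
        if not explanation.strip():
            notes.append(f"exception {exc_id} is not explained")
        if cross_ref not in known_references:
            notes.append(f"exception {exc_id} cross-references unknown work paper {cross_ref!r}")
    return notes


# Hypothetical draft work paper, for illustration only.
draft = WorkPaper(
    reference="C-2.1",
    assessment_area="Account management",
    source_name="A. Example",
    source_title="System Administrator",
    scope="Examined membership of the Administrators group on the in-scope servers",
    plan_steps=["AC-2"],
    exceptions={"EX-1": ("Two dormant administrator accounts", "C-2.4")},
)
existing_references = {"C-2.1", "C-2.2"}

if __name__ == "__main__":
    for note in review_work_paper(draft, existing_references):
        print("Review note:", note)
```

For this draft, the check would report the missing preparer sign-off and an exception that points at a work paper number not yet in the file.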

Trustworthiness

The purpose of developing and producing assessment evidence is to establish, for the authorizing official, the trustworthiness of the assessment results and conclusions provided by the security control assessor. That evidence also builds trust in the operating organization and the system owner, whose operational status reporting and ongoing continuous monitoring activities the authorizing official participates in during normal operation of the system under review. As SP 800-53A shows in the following diagram, the security evidence gathered during the assessment process provides the ultimate trustworthiness evaluation criteria that the system owner, authorizing official, and operational managers use to run and operate the system under review: