Introduction

What is an assessment?

An assessment of a system or application is the process of reviewing, testing, and evaluating its components, its documentation, and all of its parameters to ensure that, while it is operational and used for its intended purpose, it is as secure as possible within the organization’s risk tolerance.

As SP 800-53A states on page 9, “An assessment procedure consists of a set of assessment objectives, each with an associated set of potential assessment methods and assessment objects. An assessment objective includes a set of determination statements related to the particular security or privacy control under assessment. The determination statements are linked to the content of the security or privacy control (i.e., the security/privacy control functionality) to ensure traceability of assessment results back to the fundamental control requirements. The application of an assessment procedure to a security or privacy control produces assessment findings. These findings reflect, or are subsequently used, to help determine the overall effectiveness of the security or privacy control.” Applying the procedure thus determines whether the control is, as SP 800-53A points out:

1. Implemented correctly

2. Operating as intended

3. Producing the desired outcome in relation to the security requirements of the system or application under review
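The traceability SP 800-53A describes, from determination statements back to the control, can be illustrated with a small data model. The Python sketch below is a hypothetical structure, not a format defined by NIST or by any assessment tool. It assumes that each determination statement receives a finding of “satisfied” or “other than satisfied” (the two finding values SP 800-53A uses) and that the control’s objective is satisfied only when every one of its statements is satisfied. The statement IDs and wording in the demo are invented for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Finding(Enum):
    SATISFIED = "S"
    OTHER_THAN_SATISFIED = "O"


@dataclass
class DeterminationStatement:
    statement_id: str                  # hypothetical identifier
    text: str                          # paraphrase of the control requirement
    finding: Optional[Finding] = None  # None until the assessor records a result


@dataclass
class AssessmentObjective:
    control_id: str
    statements: List[DeterminationStatement] = field(default_factory=list)

    def overall_finding(self) -> Finding:
        """Satisfied only if every determination statement is satisfied."""
        if not self.statements or any(s.finding is None for s in self.statements):
            raise ValueError(f"{self.control_id}: assessment incomplete")
        if all(s.finding is Finding.SATISFIED for s in self.statements):
            return Finding.SATISFIED
        return Finding.OTHER_THAN_SATISFIED

    def weaknesses(self) -> List[str]:
        """IDs of statements judged other than satisfied, for traceability."""
        return [s.statement_id for s in self.statements
                if s.finding is Finding.OTHER_THAN_SATISFIED]


if __name__ == "__main__":
    # Hypothetical statements for an account-management control.
    ac2 = AssessmentObjective("AC-2", [
        DeterminationStatement("AC-2.1", "Account types are identified",
                               Finding.SATISFIED),
        DeterminationStatement("AC-2.2", "Account managers are assigned",
                               Finding.OTHER_THAN_SATISFIED),
    ])
    print(ac2.overall_finding(), ac2.weaknesses())
```

Keeping the finding on each statement, rather than only on the control, is what lets a weakness in the final report point back to the specific requirement that was not met.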

Assessment objects identify the specific items being assessed and include specifications, mechanisms, activities, and individuals.

1. Specifications are the document-based artifacts (e.g., policies, procedures, plans, system security and privacy requirements, functional specifications, architectural designs) associated with an information system, in other words – the documentation.

2. Mechanisms are the specific hardware, software, or firmware safeguards and countermeasures employed within an information system, in other words – the technical controls.

3. Activities are the specific protection-related actions supporting an information system that involve people (e.g., conducting system backup operations, monitoring network traffic, exercising a contingency plan), in other words – the processes.

4. Individuals, or groups of individuals, are people applying the specifications, mechanisms, or activities described above, in other words – the people.
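SP 800-53A pairs these object types with its three assessment methods: specifications are examined, mechanisms and activities are examined or tested, and individuals are interviewed. As a hedged sketch, the hypothetical `plan` function below groups the items in an assessment’s scope under the methods that apply to them, which loosely mirrors how the interviews, examinations, and tool-based tests described later in this chapter divide up the work. The scope items in the demo are invented examples.

```python
from enum import Enum
from typing import Dict, List, Tuple


class ObjectType(Enum):
    SPECIFICATION = "specification"  # the documentation
    MECHANISM = "mechanism"          # the technical controls
    ACTIVITY = "activity"            # the processes
    INDIVIDUAL = "individual"        # the people


class Method(Enum):
    EXAMINE = "examine"
    INTERVIEW = "interview"
    TEST = "test"


# Methods that SP 800-53A applies to each object type.
APPLICABLE_METHODS = {
    ObjectType.SPECIFICATION: {Method.EXAMINE},
    ObjectType.MECHANISM: {Method.EXAMINE, Method.TEST},
    ObjectType.ACTIVITY: {Method.EXAMINE, Method.TEST},
    ObjectType.INDIVIDUAL: {Method.INTERVIEW},
}


def plan(scope: List[Tuple[str, ObjectType]]) -> Dict[Method, List[str]]:
    """Group named assessment objects under every method that applies to them."""
    work: Dict[Method, List[str]] = {m: [] for m in Method}
    for name, kind in scope:
        for method in APPLICABLE_METHODS[kind]:
            work[method].append(name)
    return work


if __name__ == "__main__":
    # Hypothetical scope items for illustration.
    scope = [
        ("System Security Plan", ObjectType.SPECIFICATION),
        ("Boundary firewall", ObjectType.MECHANISM),
        ("Nightly backup operations", ObjectType.ACTIVITY),
        ("System administrator", ObjectType.INDIVIDUAL),
    ]
    for method, names in plan(scope).items():
        print(f"{method.value}: {', '.join(names)}")
```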

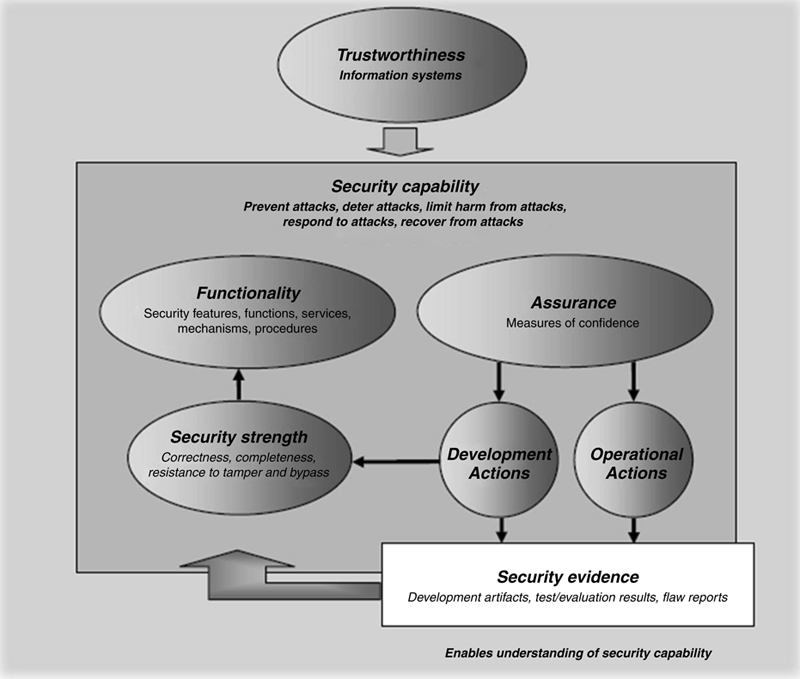

The entire point of these various assessment areas and their coverage of the system and network under review is to provide assessment evidence that the decision makers can use to make their operational decisions from a viewpoint of trustworthiness as found in the following figure from SP 800-53A:

Experiences and the process

I have conducted and overseen more than 30 assessment efforts for 11 different agencies and 4 commercial corporations during the past 4 years. Every system has had areas for improvement. I see my job as determining whether the system is really as secure as it can be, making sure all users are reasonably safe and secure, and analyzing the organizational and management policies and procedures that secure the national assets of the government agency that has requested our services. My assessment team and I have reviewed large-scale data centers housing many highly sensitive government systems, small systems for federal microagencies with fewer than 100 employees, a single-server web-based system with publicly accessible information, and just about every type of system in between.

Assessment Process

The process I have developed over years of conducting assessments, validations, and audits in support of authorization efforts has produced ATOs for every effort I have been engaged in, both contractually as an independent validator and as a government employee working for federal agencies. The steps I typically follow when conducting a review cover all of the areas defined in this handbook and by the SP 800-37, Rev. 1, process. They usually produce SARs that identify areas of improvement for the organization to mitigate and repair, along with the remediation efforts performed and successfully completed during the assessment. The standard steps are as follows:

1. Gather documents about system: I always start by reviewing the existing documentation for the system or application under test. These documents should provide the core areas for scrutiny and evaluation.

2. Review statutory and regulatory requirements: In the US government process, every system must conform to various statutory (law) and regulatory (policies and regulations) requirements, usually based on the system’s mission and the information types it uses, processes, or holds. I therefore look for both compliance conformance and substantive agreement among the system, its documents, and the external requirements.

3. Conduct “GAP” analysis (15 steps): As described in Chapter 8, I conduct the “GAP” analysis across all relevant system documents and supporting documentation, following the steps defined in that chapter.

4. Conduct risk assessment per SP 800-30: NIST published SP 800-30, its basic guide for risk assessments, many years ago. I have adopted that process in all of our engagements, and it has proven to be a trustworthy and successful methodology in every circumstance where I have applied it.

5. Visit site – conduct preassessment: The first visit to the system location usually takes place as part of the standard government project “kickoff” meeting. If the documents have not already been delivered to us, we gather them during this visit.

a. Status: System status, project status, and mission status are all areas for discussion during this initial visit. Equipment status is a primary focus during this step so we can be prepared for any potential area of concern or suspicion.

b. Expectations: During this visit, we set expectations with both the staff and on-site management regarding our process, our work during the actual assessment visit, and our interactions with on-site personnel.

6. Build SAP and ROE: As part of the standard preparation for an assessment, I develop the Security Assessment Plan (SAP), which defines the steps to be conducted during the assessment, the tools to be used, and the review techniques to be applied during the on-site assessment, along with the rigor with which they will be applied.

As part of this effort, I also develop a document known as the Rules of Engagement (ROE), which defines the actual steps to be performed for the entire assessment, including any outside testing, interviews, and inspections the organization requires. The ROE is a legal-type document that needs approval at the highest level possible above the AO, since it can include penetration testing parameters and outside engagement events. I have often had the ROE signed by the organizational CIO or Chief Information Security Officer (CISO), whereas the SAP often needs only the AO’s signature for final approval.

7. Submit SAP and ROE for approval and acceptance: Once these two documents are finalized, I submit them to the contracting officer (when I am on contract to perform these activities), who routes them for approval and signature. Because these are legal documents for liability purposes, I do not start the on-site engagement until the signed, approved documents have come back through official channels and are literally in hand.

8. Conduct assessment: Once the SAP and ROE are approved, I schedule the on-site visit to conduct the actual assessment activities. During this time, my assessment team and I prepare for the visit by refamiliarizing ourselves with the tools of the trade and reviewing the documentation for the system under review so that we can properly conduct the interviews and examinations.

a. On-site visit: The on-site visit usually starts with an “in-briefing” meeting with the location’s management, in which we discuss expectations for the visit, reconfirm the interview schedule, and introduce the team to the site staff.

– Interview key personnel: We interview the key operational personnel for the system under review; the facilities manager for the location; the security personnel for both the location and the system; the system owner, if on-site; the system administration staff for the location and the system; and any developers associated with the system.

– Examine system – get demo if possible: I ask the staff for a demonstration of the system so that I can observe its processing, its security controls in action, and its input and output activities. I have the staff run the system through its normal processing while I watch each step. This lets me see the access control, identification and authentication, system integrity, and system and communications protection controls in operation and verify that they work as intended.

– Conduct a “security walk-through” inspection: I invariably have the site facility manager escort me on a security “walk-through” inspection of the facility that houses the system. During the walk-through I observe the physical security controls, check the fire detection and suppression controls, and examine the HVAC system and its controls as well as the utilities connected to the facility and their physical access. I also observe the backup power capability the location provides for the system. Together, these inspections verify the physical and environmental protection, maintenance, media protection, and contingency planning controls for the system.

– Test system with tools as defined in SAP: We run the automated tests on the system with various tools and techniques to obtain evidence of compliance and of security control configurations. Most, if not all, available vulnerability scanners report compliance with operating system configurations and the patches loaded on the system. As defined in the SAP, we use scan tools suited to each component of the system, such as database scanners, network mappers, vulnerability scanners, website scanning tools, and other specialized tools if necessary. We also use other automated tools, including file integrity checkers, wireless detection tools (if needed), and, if defined in the SAP and ROE, penetration testing tools, to check the access control, identification and authentication, system and communications protection, audit and accountability, and system integrity control sets.

9. Initial analysis of results: Once the tools finish running, I review the results with the assessment team to identify high- or medium-impact deficiencies or weaknesses. We also review the test outputs to confirm that the testing completed successfully.

10. Provide customer/client with Remediation Report for action: Each automated tool immediately identifies the problems and issues it discovers. We provide this data to the system administration staff, either during testing or right after it completes, in what I term a “Remediation Report,” so that the on-site staff can correct any deficiency or weakness of significant impact as soon as possible. The tool output often needs interpretation and evaluation, because a tool usually does not know which issues are mitigated externally. The result is what are known as “false positives,” which require explanation and are then removed from the final report.

11. Receive proof of remediation effort artifact evidence: After remediation, the on-site staff for the system under review either gives the assessment team the opportunity to rerun the automated tools to verify the repair or provides proof of remediation in the form of screenshots or output reports from the devices or machines in question. These documents and artifacts are then attached to or included in the SAR.

12. Build SAR: We begin developing the SAR during the testing phase by reviewing the examination and interview results and writing up those parts of the evaluation. Once the testing phase is complete and the initial Remediation Report has been delivered, we add the results of the scans and other tests to the SAR.

Following the SAR template we have developed, we cover all areas and include a full control-by-control results table.

13. Review SAR with customer for additional action items: When the initial SAR is complete, we review the preliminary results with the client to point out any additional items that can be fixed, repaired, or remediated before final delivery. The client often addresses some or all of these items and provides additional supporting documentation or proof of remediation, which we include in the final SAR.

14. Develop final SAR: Once all the reviews and discussions are completed, I complete the SAR in its final version and prepare the briefing for the AO to go with the report.

15. Develop Certification Letter: Once the SAR and the resulting revisions to the SSP are complete, I am often asked to write a Certification Letter to the AO stating the results of the assessment, my opinion on the risks of the system, and my recommendation for authorization.

16. Deliver SAR and Certification Letter to system owner and client: In this final step of the process, I deliver the final SAR, the final version of the SSP, and the Certification Letter to the AO and the system owner for action.
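The triage in steps 9 through 11 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any particular scanner or of the author’s tooling: the `ScanFinding` record, the three-level severity scale, the finding IDs, and the `mitigated` mapping (which records documented external mitigations) are all hypothetical. The sketch separates scan findings into the immediate Remediation Report, documented false positives, and lower-severity items, then uses a rescan to check which reported items were actually fixed.

```python
from dataclasses import dataclass

# Assumed three-level severity scale; real scanners use their own ratings.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3}


@dataclass(frozen=True)
class ScanFinding:
    finding_id: str  # hypothetical scanner rule/plugin ID
    host: str        # affected device or machine
    severity: str    # "low", "medium", or "high"

    @property
    def key(self):
        return (self.finding_id, self.host)


def triage(findings, mitigated, min_severity="medium"):
    """Split scan findings into (remediation_report, false_positives, deferred).

    `mitigated` maps a finding key to the documented external mitigation that
    makes the tool's result a false positive on this particular system.
    """
    threshold = SEVERITY_RANK[min_severity]
    report, false_positives, deferred = [], [], []
    for f in findings:
        if f.key in mitigated:
            # Kept with its explanation so it can be justified, then excluded.
            false_positives.append((f, mitigated[f.key]))
        elif SEVERITY_RANK[f.severity] >= threshold:
            report.append(f)
        else:
            deferred.append(f)  # not part of the immediate Remediation Report
    # Highest severity first; the sort is stable within a severity level.
    report.sort(key=lambda f: SEVERITY_RANK[f.severity], reverse=True)
    return report, false_positives, deferred


def verify_remediation(report, rescan):
    """Compare the Remediation Report against a rescan: (fixed, still_open)."""
    still_present = {f.key for f in rescan}
    fixed = [f for f in report if f.key not in still_present]
    still_open = [f for f in report if f.key in still_present]
    return fixed, still_open


if __name__ == "__main__":
    scan = [
        ScanFinding("VULN-001", "web01", "high"),
        ScanFinding("CFG-007", "db01", "medium"),
        ScanFinding("CFG-009", "web01", "low"),
        ScanFinding("TLS-003", "web01", "high"),
    ]
    # Hypothetical mitigation documented by the site staff.
    mitigated = {("TLS-003", "web01"): "TLS enforced at upstream load balancer"}
    report, fps, deferred = triage(scan, mitigated)
    fixed, still_open = verify_remediation(report, rescan=[scan[1]])
    print([f.finding_id for f in fixed], [f.finding_id for f in still_open])
```

Matching on the pair of finding ID and host, rather than the ID alone, keeps a fix on one machine from masking the same issue still open on another.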