We choose not to integrate our cluster with CloudWatch. Actually, I chose not to use it, and you blindly followed my example. Therefore, I guess that an explanation is in order. It's going to be a short one. I don't like CloudWatch. I think it is a bad solution that is way behind the competition and, at the same time, it can become quite expensive when dealing with large quantities of data. More importantly, I believe that we should use services coming from hosting vendors only when they are essential or provide an actual benefit. Otherwise, we'd run a risk of entering the trap called vendor locking. Docker Swarm allows us to deploy services in the same way, no matter whether they are running in AWS or anywhere else. The only difference would be a volume driver we choose to plug in. Similarly, all the services we decided to deploy thus far can run anywhere. The only "lock-in" is with Docker Swarm but, unlike AWS, it is open source. If needed we can even fork it to our repository and build our own Docker Server. That is not to say that I would recommend forking Docker but rather that I am trying to make a clear distinction between being locked into an open source project and with a commercial product. Moreover, with relatively moderate changes, we could migrate our Swarm services to Kubernetes or even Mesos and Marathon. Again, that is not something I recommend but more of a statement that a choice to change the solution is not as time demanding as it might seem on the first look.

I think I run astray from the main subject so let me summarize it. CloudWatch is bad, and it costs money. Many of the free alternatives are much better. If you read my previous books, you probably know that my preference for a logging solution is the ELK (ElasticSearch, LogStash, and Kibana) stack. I used them for both logging and metrics but, since then, metrics solution was replaced with Prometheus. How about centralized logging? I think that ELK is still one of the best self-hosted solutions even though I'm not entirely convinced I like the new path Elastic is taking as a company. I'll leave the discussion about their direction for later and, instead, we'll dive right into setting up the ELK stack in our cluster.

ssh -i devops22.pem docker@$CLUSTER_IP

curl -o logging.yml \

https://raw.githubusercontent.com/vfarcic/docker-\

flow-monitor/master/stacks/logging-aws.yml

cat logging.yml

We went back to the cluster, downloaded the logging stack, and displayed its contents. The YML file defines the ELK stack as well as LogSpout. ElasticSearch is an in-memory database that will store our logs. LogSpout will be sending logs from all containers running inside the cluster to LogStash, which, in turn, will process them and send the output to ElasticSearch. Kibana will be used as UI to explore logs. That was all the details of the stack you'll get. I'll assume that you are already familiar with the services we'll use. They were described in the book, The DevOps 2.1 Toolkit: Docker Swarm (https://www.amazon.com/dp/1542468914). If you did not read it, information could be easily found on the Internet. Google is your friend.

The first service in the stack is elasticsearch. It is an in-memory database we'll use to store logs. Its definition is as follows.

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:5.5.2

environment:

- xpack.security.enabled=false

volumes:

- es:/usr/share/elasticsearch/data

networks:

- default

deploy:

labels:

- com.df.distribute=true

- com.df.notify=true

- com.df.port=80

- com.df.alertName=mem_limit

- com.df.alertIf=@service_mem_limit:0.8

- com.df.alertFor=30s

resources:

reservations:

memory: 3000M

limits:

memory: 3500M

placement:

constraints: [node.role == worker]

...

volumes:

es:

driver: cloudstor:aws

external: false

...

There's nothing special about the service. We used the environment variable xpack.security.enabled to disable X-Pack. It is a commercial product baked into ElasticSearch image. Since this book uses only open source services, we had to disable it. That does not mean that X-Pack is not useful. It is. Among other things, it provides authentication capabilities to ElasticSearch. I encourage you to explore it and make your own decision whether it's worth the money.

I could argue that there's not much reason to secure ElasticSearch since we are not exposing any ports. Only services that are attached to the same network will be able to access it. That means that only people you trust to deploy services would have direct access to it.

Usually, we'd run multiple ElasticSearch services and join them into a cluster (ElasticSearch calls replica set a cluster). Data would be replicated between multiple instances and would be thus preserved in case of a failure. However, we do not need multiple ElasticSearch services, nor do we have enough hardware to host them. Therefore, we'll run only one ElasticSearch service and, since there will be no replication, we'll store its state on a volume called es.

The only other noteworthy part of the service definition is the placement defined as constraints: [node.role == worker]. Since ElasticSearch is very resource demanding, it might not be a wise idea to place it on a manager. Therefore, we defined that it should always run on one of the workers and reserved 3 GB of memory. That should be enough to get us started. Later on, depending on a number of log entries you're storing and the cleanup strategy, you might need to increase the memory allocated to it and scale it to multiple services.

Let's move to the next service.

...

logstash:

image: docker.elastic.co/logstash/logstash:5.5.2

networks:

- default

deploy:

labels:

- com.df.distribute=true

- com.df.notify=true

- com.df.port=80

- com.df.alertName=mem_limit

- com.df.alertIf=@service_mem_limit:0.8

- com.df.alertFor=30s

resources:

reservations:

memory: 600M

limits:

memory: 1000M

configs:

- logstash.conf

environment:

- LOGSPOUT=ignore

command: logstash -f /logstash.conf

...

configs:

logstash.conf:

external: true

LogStash will accept logs using syslog format and protocol and forward them to ElasticSearch. You'll see the configuration soon.

The only interesting part about the service is that we're injecting a Docker config. It works in almost the same way as secrets except that it is not encrypted at rest. Since it will not contain anything compromising, there's no need to set it up as a secret. We did not specify config destination, so it will be available as file /logstash.conf. The command is set to reflect that.

We're halfway through. The next service in line is kibana.

kibana:

image: docker.elastic.co/kibana/kibana:5.5.2

networks:

- default

- proxy

environment:

- xpack.security.enabled=false

- ELASTICSEARCH_URL=http://elasticsearch:9200

deploy:

labels:

- com.df.notify=true

- com.df.distribute=true

- com.df.usersPassEncrypted=false

- com.df.usersSecret=admin

- com.df.servicePath=/app,/elasticsearch,/api,/ui,/bundles,/plugins,\

/status,/es_admin

- com.df.port=5601

- com.df.alertName=mem_limit

- com.df.alertIf=@service_mem_limit:0.8

- com.df.alertFor=30s

resources:

reservations:

memory: 600M

limits:

memory: 1000M

Kibana will provide a UI that will allow us to filter and display logs. It can do many other things but logs are all we need for now. Unfortunately, Kibana is not proxy-friendly. Even though there are a few environment variables that can configure the base path, they do not truly work as expected. We had to specify multiple paths through the com.df.servicePath. They reflect all the combinations of requests Kibana makes. I'd recommend that you replace com.df.servicePath with com.df.serviceDomain. The value could be a subdomain (for example, kibana.acme.com).

The rest of the definition is pretty uneventful, so we'll move on.

We, finally, reached the last service of the stack.

logspout:

image: gliderlabs/logspout:v3.2.2

networks:

- default

environment:

- SYSLOG_FORMAT=rfc3164

volumes:

- /var/run/docker.sock:/var/run/docker.sock

command: syslog://logstash:51415

deploy:

mode: global

labels:

- com.df.notify=true

- com.df.distribute=true

- com.df.alertName=mem_limit

- com.df.alertIf=@service_mem_limit:0.8

- com.df.alertFor=30s

resources:

reservations:

memory: 20M

limits:

memory: 30M

LogSpout will monitor Docker events and send all logs to ElasticSearch. We're exposing Docker socket as a volume so that the service can communicate with Docker server. The command specifies syslog as protocol and logstash running on 51415 as the destination address. Since all the services of the stack are connected through the same default network, the name of the service (logstash) is all we need as address.

The service will run in the global mode so that a replica is present on each node of the cluster.

We need to create the logstash.conf config before we deploy the stack. The command is as follows:

echo '

input {

syslog { port => 51415 }

}

output {

elasticsearch {

hosts => ["elasticsearch:9200"]

}

}

' | docker config create logstash.conf -

We echoed a configuration and piped the output to the docker config create command. The configuration specifies syslog running on port 51415 as input. The output is ElasticSearch running on port 9200. The address of the output is the name of the destination service (elasticsearch).

Now we can deploy the stack:

docker stack deploy -c logging.yml \

logging

A few of the images are big, and it will take a moment or two until all services are up-and-running. We'll confirm the state of the stack by executing the command that follows.

docker stack ps \

-f desired-state=running logging

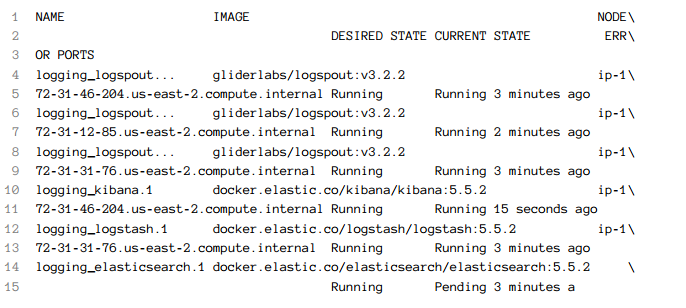

The output is as follows (IDs are removed for brevity):

You'll notice that elasticsearch is in pending state. Swarm cannot deploy it because none of the servers meet the requirements we set. We need at least 3 GB of memory and a worker node. We should either change the constraint and reservations to fit out current cluster setup or add a worker as a new node. We'll go with latter.

As a side note, Kibana might fail after a while. It will try to connect to ElasticSearch for a few times and stop the process. Soon after, it will be rescheduled by Swarm, only to stop again. That will continue until we manage to run ElasticSearch.

Please exit the cluster before we proceed.

exit