Firing an alert as soon as the condition is met is often not the best idea. The conditions of the system might change temporarily and go back to "normal" shortly afterward. A spike in memory is not bad in itself. We should not worry if memory utilization jumps to 95% only to go back to 70% a few moments later. On the other hand, if it continues being over 80% for, let's say, five minutes, some actions should be taken.

We'll modify the go-demo_main service so that it fires an alert only if the memory threshold is reached and the condition persists for at least thirty seconds.

The relevant parts of the go-demo stack file are as follows:

services:

  main:
    ...
    deploy:
      ...
      labels:
        ...
        - com.df.alertName=mem_limit
        - com.df.alertIf=container_memory_usage_bytes{container_label_com_docker_swarm_service_name="go-demo_main"}/container_spec_memory_limit_bytes{container_label_com_docker_swarm_service_name="go-demo_main"} > 0.8
        - com.df.alertFor=30s
      ...

We set the com.df.alertName and com.df.alertIf labels to the same values as those we used to update the service. The new addition is the com.df.alertFor label that specifies the period Prometheus should wait before firing an alert. In this case, the condition would need to persist for thirty seconds before the alert is fired. Until then, the alert will be in the pending state.
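To make the pending and firing states more concrete, here is a minimal sketch of how a hold period behaves. It is a simplified model written for illustration, not Prometheus code. The function name `alert_states`, the ten-second evaluation interval, and the sample values (memory usage as whole percentages of the limit) are all assumptions.

```shell
#!/usr/bin/env bash
# Simplified model of an alert rule with a hold period (illustration only).
# Usage: alert_states THRESHOLD_PCT HOLD_SECONDS INTERVAL_SECONDS SAMPLE...
alert_states() {
  local threshold=$1 hold=$2 interval=$3
  shift 3
  local now=0 active_since=-1 state sample
  for sample in "$@"; do
    if (( sample > threshold )); then
      # Remember the moment the condition first became true.
      if (( active_since < 0 )); then
        active_since=$now
      fi
      # The alert fires only after the condition has held for the whole period.
      if (( now - active_since >= hold )); then
        state=firing
      else
        state=pending
      fi
    else
      # A single evaluation below the threshold resets the timer.
      active_since=-1
      state=inactive
    fi
    echo "t=${now}s usage=${sample}% ${state}"
    now=$(( now + interval ))
  done
}

# 80% threshold, 30 second hold, one evaluation every 10 seconds.
alert_states 80 30 10 70 95 70 85 90 92 88 75
```

In this run, the short spike to 95% only reaches the pending state and is cleared by the next evaluation. The sustained stretch that starts at t=30s stays pending until t=60s, when it has lasted thirty seconds and turns to firing.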

Let's deploy the new stack.

docker stack deploy \
    -c stacks/go-demo-alert-long.yml \
    go-demo

After a few moments, the go-demo_main service will be rescheduled, and the alert labels will be propagated to the Prometheus instance. Let's take a look at the alerts screen.

open "http://$(docker-machine ip swarm-1)/monitor/alerts"

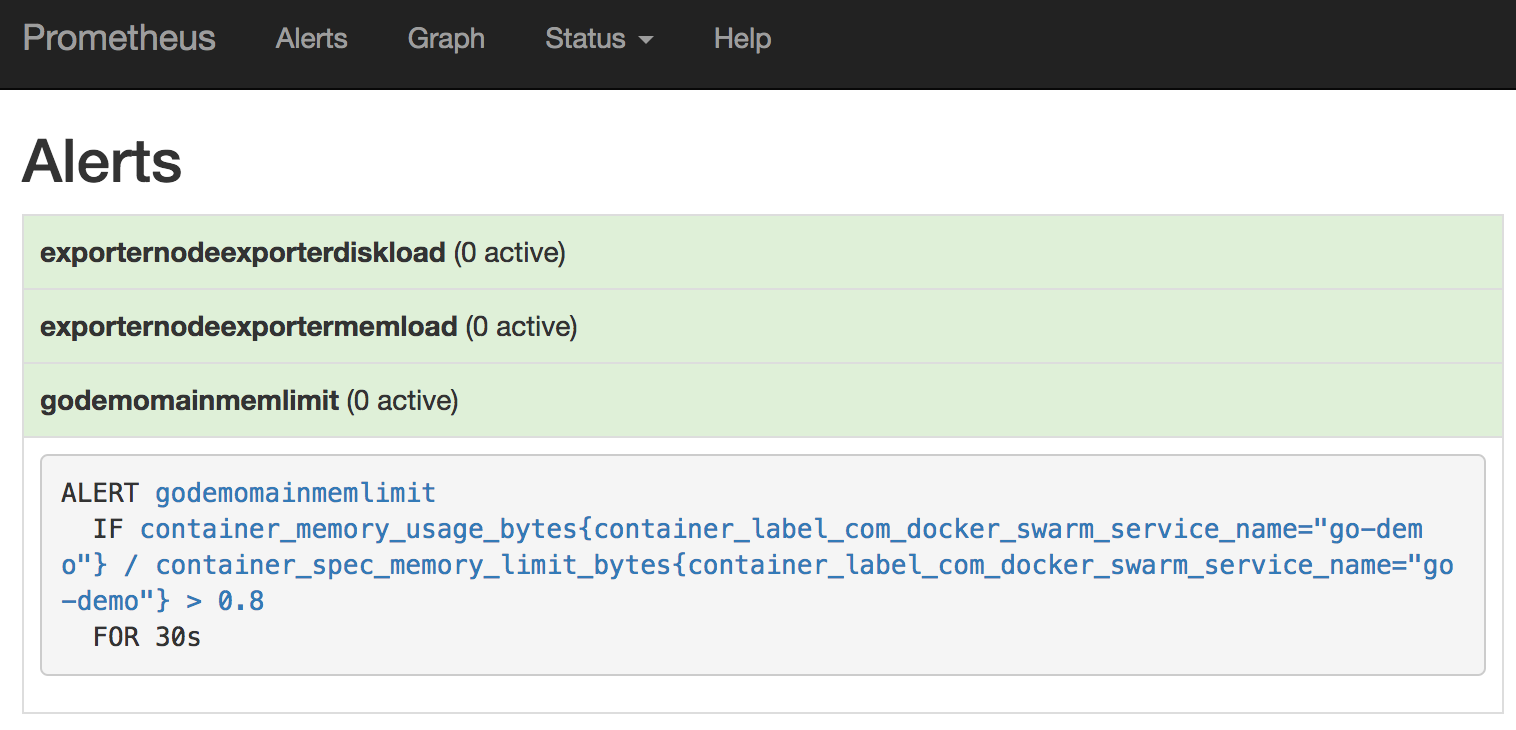

The go-demo memory limit alert with the FOR statement set to thirty seconds is registered.

We should test whether the alert indeed works. We'll temporarily decrease the threshold to five percent. That should certainly trigger the alert.

docker service update \
    --label-add com.df.alertIf='container_memory_usage_bytes{container_label_com_docker_swarm_service_name="go-demo_main"}/container_spec_memory_limit_bytes{container_label_com_docker_swarm_service_name="go-demo_main"} > 0.05' \
    go-demo_main

Let us take another look at the alerts screen.

open "http://$(docker-machine ip swarm-1)/monitor/alerts"

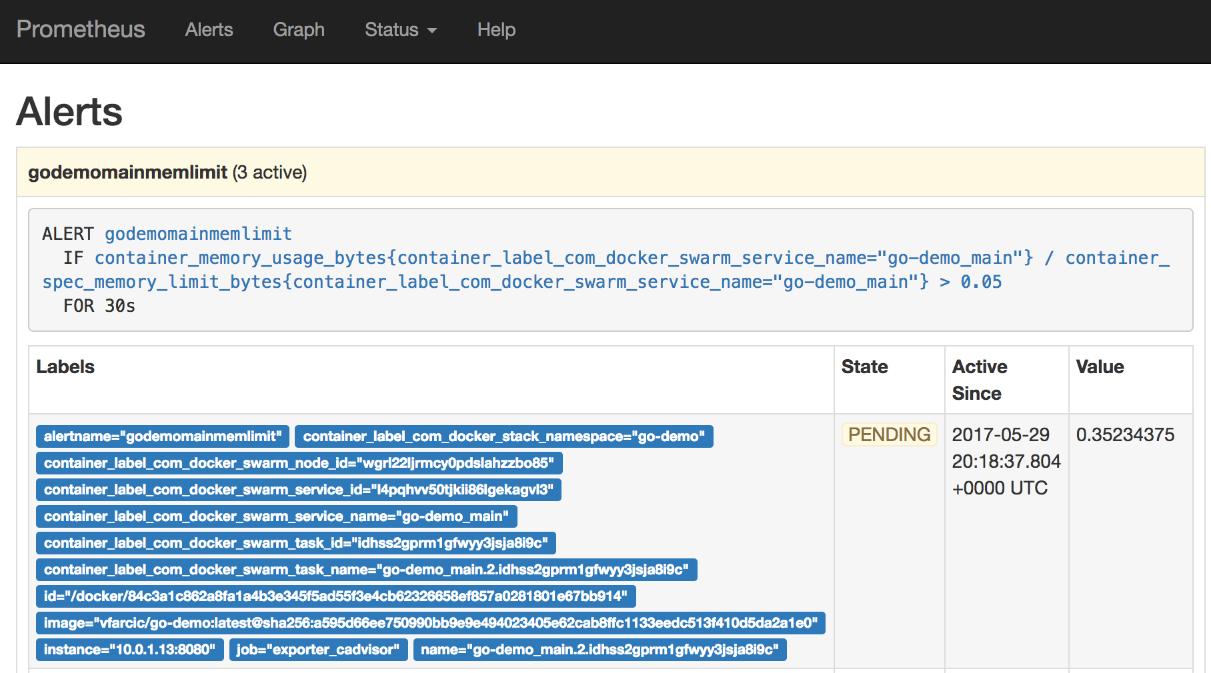

If you opened the screen within thirty seconds of the update, you should see three alerts in the PENDING state. Once the thirty seconds expire, their status will change to FIRING. Unfortunately, Prometheus has no destination to which it can send those alerts. We'll fix that in the next chapter. For now, we'll have to be content with simply observing the alerts from Prometheus.
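If you prefer the terminal to the browser, the same states can likely be observed by querying the `ALERTS` series that Prometheus maintains for pending and firing alerts. The sketch below only builds the query URL. The helper name `alerts_query_url` is hypothetical, and it assumes the Prometheus HTTP API is reachable through the same `/monitor` prefix as the alerts screen.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the URL that queries the ALERTS series
# through the /monitor prefix used for the alerts screen.
alerts_query_url() {
  local host=$1
  echo "http://${host}/monitor/api/v1/query?query=ALERTS"
}

# Against the cluster from this chapter (assumes the API is exposed by the proxy):
#   curl -s "$(alerts_query_url "$(docker-machine ip swarm-1)")"
```

Each series in the response carries an `alertstate` label set to either `pending` or `firing`, so repeating the request after thirty seconds should show the transition.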