Tokens: the words that make up the Ruby language


Let’s suppose you write this very simple Ruby program:

    10.times do |n|
      puts n
    end

… and then execute it from the command line like this:

    $ ruby simple.rb
    0
    1
    2
    3
    etc...

What happens first after you type “ruby simple.rb” and press ENTER? Aside from general initialization, processing your command line parameters and so on, the first thing Ruby has to do is open and read in all the text from the simple.rb code file. Then it needs to make sense of this text: your Ruby code. How does it do this?

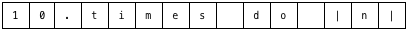

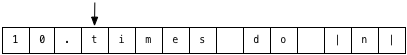

After reading in simple.rb, Ruby encounters a series of text characters that looks like this:

To keep things simple, I’m only showing the first line of text here. When Ruby sees all of these characters, it first “tokenizes” them. As I said above, tokenization refers to the process of converting this stream of text characters into a series of tokens, or words, that Ruby understands. Ruby does this by simply stepping through the text characters one at a time, starting with the first character, “1”:

Inside the Ruby C source code, there’s a loop that reads in one character at a time and processes it based on what character it is. As a simplification, I’m describing tokenization as an independent process; in fact, the parsing engine I describe in the next section calls this C tokenize code whenever it needs a new token. Tokenization and parsing are two separate processes that actually happen at the same time. For now, let’s just continue to see how Ruby tokenizes the characters in my Ruby file.
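A rough Ruby sketch can make the “tokenize on demand” idea concrete. Everything in it is an illustrative assumption rather than Ruby’s C code: the hypothetical token_stream method applies a few made-up rules with StringScanner from the standard library, and wraps the scan in an Enumerator so that a token is only produced when the consumer (standing in for the parser) calls next.

```ruby
require 'strscan'

# Hypothetical, heavily simplified lexer (not Ruby's real token rules).
# Wrapping the scan in an Enumerator means no token is produced until a
# consumer asks for one with #next.
def token_stream(source, log)
  Enumerator.new do |yielder|
    scanner = StringScanner.new(source)
    until scanner.eos?
      next if scanner.skip(/\s+/)                # skip whitespace
      token =
        if (text = scanner.scan(/\d+/))
          [:tINTEGER, text]
        elsif (text = scanner.scan(/[A-Za-z_]\w*/))
          [:tIDENTIFIER, text]                   # keywords are not distinguished here
        else
          [:punct, scanner.getch]                # any other single character
        end
      log << token                               # record each token as it is made
      yielder << token                           # hand it over and pause here
    end
  end
end

# A stand-in for the parser: it pulls tokens one at a time, on demand.
log = []
tokens = token_stream("10.times do |n| puts n end", log)
first  = tokens.next
second = tokens.next
puts "parser received #{first.inspect} and #{second.inspect}"
puts "tokens produced so far: #{log.size}"   # the rest of the line is untouched
```

Because the Enumerator pauses after each token, the lexer has only done as much work as the “parser” has asked for, which is the interleaving described above in miniature.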

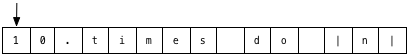

In this example, Ruby realizes that the character “1” is the start of a number and continues to iterate over all of the following characters until it finds a non-numeric character – next it finds a “0”:

And stepping forward again it finds a period character:

Ruby actually considers the period character to be numeric also, since it might be part of a floating point value. So now Ruby continues and steps to the next character:

Here Ruby stops iterating, since it found a non-numeric character. Because there were no more numeric characters after the period, Ruby considers the period to be part of a separate token and steps back one character:

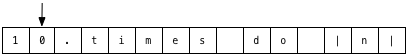

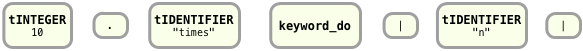

And finally Ruby converts the numeric characters that it found into a new token called tINTEGER:

This is the first token Ruby creates from your program. Now Ruby continues to step through the characters in your code file, converting each of them to tokens, grouping the characters together as necessary:

The second token is a period, a single character. Next, Ruby encounters the word “times” and creates an identifier token:

Identifiers are words that you use in your Ruby code that are not reserved words; usually they refer to variable or method names. (Capitalized names, such as class names, are constants, which Ruby tokenizes separately as tCONSTANT.) Next Ruby sees “do” and creates a reserved word token, indicated by keyword_do:

Reserved words are the special keywords that have some important meaning in the Ruby language – the words that provide the structure or framework of the language. They are called reserved words since you can’t use them as normal identifiers, although you can use them as method names, global variable names (e.g. $do), instance variable names (e.g. @do) or class variable names (e.g. @@do). Internally, the Ruby C code maintains a constant table of reserved words; here are the first few in alphabetical order:

alias and begin break case class
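The claim about sigils and method names is easy to check by running a small made-up class (KeywordNames is hypothetical, not part of Ruby). It uses do as a method name and as global, instance and class variable names; all of it is accepted, while a bare local variable assignment such as do = 1 is a syntax error.

```ruby
# Reserved words cannot be plain local variable names, but they are
# accepted behind a sigil ($, @, @@) or as method names.
class KeywordNames
  @@do = "class variable"          # class variable named do

  def initialize
    @do = "instance variable"      # instance variable named do
  end

  def do                           # method named do
    [@do, @@do]
  end
end

$do = "global variable"            # global variable named do

p KeywordNames.new.do
p $do
```

After a period, as in KeywordNames.new.do, the word is treated as a method name rather than as the keyword_do token.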

Finally, Ruby converts the remaining characters on that line of code to tokens also:

I won’t show the entire program here, but Ruby continues to step through your code in a similar way, until it has tokenized your entire Ruby script. At this point, Ruby has processed your code for the first time – it has ripped your code apart and put it back together again in a completely different way. Your code started as a stream of text characters, and Ruby converted it to a stream of tokens, words that Ruby will later put together into sentences.
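The number-scanning steps walked through above (consume digits, tentatively treat a period as numeric, then step back one character when no digit follows it) can be sketched in Ruby. The scan_number helper and the tFLOAT branch are a simplified, hypothetical illustration; Ruby’s real C code in parse.y also handles underscores, exponents, hexadecimal, octal and binary prefixes, and more.

```ruby
# Hypothetical helper: scans a number starting at index pos of src.
# Returns [token, next_pos], where next_pos is the index of the first
# character that is NOT part of the number.
def scan_number(src, pos)
  start = pos
  pos += 1 while pos < src.length && src[pos].match?(/\d/)   # "1", "0", ...
  if src[pos] == "."
    pos += 1                                   # tentatively treat "." as numeric
    if pos < src.length && src[pos].match?(/\d/)
      # A digit follows the period, so this is a floating-point literal.
      pos += 1 while pos < src.length && src[pos].match?(/\d/)
      return [[:tFLOAT, src[start...pos]], pos]
    end
    pos -= 1                                   # no digit followed: step back one
  end
  [[:tINTEGER, src[start...pos]], pos]
end

token, next_pos = scan_number("10.times do |n|", 0)
p token      # [:tINTEGER, "10"]
p next_pos   # 2, the index of the period, which starts the next token
p scan_number("3.14", 0)
```

Returning the position of the period, rather than consuming it, is what lets the next call produce the separate period token.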

If you’re familiar with C and are interested in learning more about the detailed way in which Ruby tokenizes your code file, take a look at the parse.y file in your version of Ruby. The “.y” extension indicates parse.y is a grammar rule file – a file that contains a series of rules for the Ruby parser engine which I’ll cover in the next section. Parse.y is an extremely large and complex code file; it contains over 10,000 lines of code! But don’t be intimidated; there’s a lot to learn here and this file is worth becoming familiar with.

For now, ignore the grammar rules and search for a C function called parser_yylex, which you’ll find about two thirds of the way down the file, around line 6500. This complex C function contains the code that does the actual work of tokenizing your code. If you look closely, you should see a very large switch statement that starts like this:

    retry:
      last_state = lex_state;
      switch (c = nextc()) {

The nextc() function returns the next character in the code file text stream – think of this as the arrow in my diagrams above. And the lex_state variable keeps information about what state or type of code Ruby is processing at the moment. The large switch statement inspects each character of your code file and takes a different action based on what it is. For example, this code:

    /* white spaces */
    case ' ': case '\t': case '\f': case '\r':
    case '\13': /* '\v' */
      space_seen = 1;
      ...
      goto retry;

… looks for whitespace characters and ignores them by jumping back up to the retry label just above the switch statement.
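Ruby the language has no goto, but the shape of this C loop can be mimicked in a rough sketch. The MiniLexer class, its methods and its token names are illustrative assumptions, not part of Ruby: next_token reads one character at a time with a nextc-style helper, dispatches on it with case, and for whitespace sets a space_seen flag and jumps back to the top of the loop with next, much like goto retry. Only integers, lowercase identifiers, whitespace and single-character punctuation are handled.

```ruby
# Hypothetical, much-simplified Ruby analogue of the parser_yylex loop.
class MiniLexer
  attr_reader :space_seen

  def initialize(src)
    @src = src
    @pos = 0
    @space_seen = false
  end

  # Like nextc(): return the next character (nil at the end) and advance.
  def nextc
    c = @src[@pos]
    @pos += 1 if c
    c
  end

  # Give back the character most recently returned by nextc.
  def pushback
    @pos -= 1
  end

  def next_token
    @space_seen = false
    loop do                                  # plays the role of "retry:"
      c = nextc
      case c
      when nil
        return nil                           # end of input
      when " ", "\t", "\f", "\r", "\v"       # white spaces
        @space_seen = true
        next                                 # like "goto retry"
      when /\d/
        text = c.dup
        text << c while (c = nextc) && c.match?(/\d/)
        pushback if c                        # the non-digit belongs to the next token
        return [:tINTEGER, text]
      when /[a-z_]/
        text = c.dup
        text << c while (c = nextc) && c.match?(/\w/)
        pushback if c
        return [:tIDENTIFIER, text]
      else
        return [:punct, c]                   # ".", "|", etc.
      end
    end
  end
end

lexer = MiniLexer.new("10 |n|")
p lexer.next_token   # [:tINTEGER, "10"]
p lexer.next_token   # [:punct, "|"]
p lexer.space_seen   # true: a space was skipped before "|"
```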

One other interesting detail here is that Ruby’s reserved words are defined in a code file called defs/keywords – if you open up the keywords file you’ll see a complete list of all of Ruby’s reserved words, the same list whose first few entries I showed above. The keywords file is used by an open source package called gperf to produce C code that can quickly and efficiently look up strings in a table, a table of reserved words in this case. You can find the generated reserved word lookup C code in lex.c, which defines a function named rb_reserved_word, called from parse.y.
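The generated lookup code is C, but its job (deciding whether a word is a reserved word) can be sketched in Ruby with a frozen Set. The keyword list below is assumed to match defs/keywords in recent Ruby versions; the classify_word helper is a hypothetical stand-in for rb_reserved_word, its keyword_* naming is simplified, and a Set is used in place of gperf’s perfect hash rather than as a reimplementation of it.

```ruby
require 'set'

# Reserved words (assumed to mirror defs/keywords in recent Ruby versions).
RESERVED_WORDS = Set.new(%w[
  __ENCODING__ __LINE__ __FILE__ BEGIN END alias and begin break case class
  def defined? do else elsif end ensure false for if in module next nil not
  or redo rescue retry return self super then true undef unless until when
  while yield
]).freeze

# Hypothetical stand-in for rb_reserved_word: a reserved word becomes a
# keyword_* token; any other lowercase word becomes an identifier.
def classify_word(word)
  if RESERVED_WORDS.include?(word)
    [:"keyword_#{word}", word]
  else
    [:tIDENTIFIER, word]
  end
end

p classify_word("do")      # [:keyword_do, "do"]
p classify_word("times")   # [:tIDENTIFIER, "times"]
```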

One final detail I’ll mention about tokenization is that Ruby doesn’t use the Lex tokenization tool, which C programmers commonly use in conjunction with a parser generator like Yacc or Bison. Instead, the Ruby core team wrote the tokenization code by hand. They may have done this for performance reasons, or because Ruby’s tokenization rules required special logic Lex couldn’t provide.

Experiment 1-1: Using Ripper to tokenize different Ruby scripts

Now that we’ve learned the basic idea behind tokenization, let’s look at how Ruby actually tokenizes different Ruby scripts. After all, how do I know the explanation above is actually correct? It turns out it is very easy to see what tokens Ruby creates for different code files, using a tool called Ripper. Shipped with Ruby 1.9 and Ruby 2.0, the Ripper class allows you to call the same tokenize and parse code that Ruby itself uses to process the text from code files. It’s not available in Ruby 1.8.

Using it is simple:

    require 'ripper'
    require 'pp'
    code = <<STR
    10.times do |n|
      puts n
    end
    STR
    puts code
    pp Ripper.lex(code)

After requiring the Ripper code from the standard library, I call it by passing some code as a string to the Ripper.lex method. In this example, I’m passing the same example code from earlier. Running this I get:

    $ ruby lex1.rb
    10.times do |n|
      puts n
    end
    [[[1, 0], :on_int, "10"],
     [[1, 2], :on_period, "."],
     [[1, 3], :on_ident, "times"],
     [[1, 8], :on_sp, " "],
     [[1, 9], :on_kw, "do"],
     [[1, 11], :on_sp, " "],
     [[1, 12], :on_op, "|"],
     [[1, 13], :on_ident, "n"],
     [[1, 14], :on_op, "|"],
     [[1, 15], :on_ignored_nl, "\n"],
     [[2, 0], :on_sp, "  "],
     [[2, 2], :on_ident, "puts"],
     [[2, 6], :on_sp, " "],
     [[2, 7], :on_ident, "n"],
     [[2, 8], :on_nl, "\n"],
     [[3, 0], :on_kw, "end"],
     [[3, 3], :on_nl, "\n"]]

Each line corresponds to a single token Ruby found in my code string. On the left we have the line number (1, 2, or 3 in this short example) and the text column number. Next we see the token itself displayed as a symbol, such as :on_int or :on_ident. Finally Ripper displays the text characters it found that correspond to each token.
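Because Ripper.lex returns ordinary arrays, you can also process the tokens programmatically instead of reading the pp output. The sketch below destructures each entry into its position, type and text, and leaves out the whitespace and newline tokens. One caveat: recent Ruby versions append a fourth element (the lexer state) to each entry, which this block parameter list simply ignores.

```ruby
require 'ripper'

code = <<~STR
  10.times do |n|
    puts n
  end
STR

# Whitespace and newline token types to leave out of the listing.
SKIP = %i[on_sp on_nl on_ignored_nl].freeze

# Each entry starts with [[line, column], type, text]; any extra trailing
# element is ignored by this block parameter list.
interesting = []
Ripper.lex(code).each do |(line, column), type, text|
  next if SKIP.include?(type)
  interesting << [type, text]
  puts format("%d:%-3d %-10s %p", line, column, type, text)
end
```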

The token symbols Ripper displays are somewhat different from the token identifiers I showed in the diagrams above. Above I used the same names you would find in Ruby’s internal parse code, such as tIDENTIFIER, while Ripper used :on_ident instead. Regardless, it’s easy to get a sense of what tokens Ruby finds in your code and how tokenization works by running Ripper for different code snippets.

Here’s another example:

    $ ruby lex2.rb
    10.times do |n|
      puts n/4+6
    end
    ...
     [[2, 2], :on_ident, "puts"],
     [[2, 6], :on_sp, " "],
     [[2, 7], :on_ident, "n"],
     [[2, 8], :on_op, "/"],
     [[2, 9], :on_int, "4"],
     [[2, 10], :on_op, "+"],
     [[2, 11], :on_int, "6"],
     [[2, 12], :on_nl, "\n"],
    ...

This time we see that Ruby converts the expression n/4+6 into a series of tokens in a very straightforward way. The tokens appear in exactly the same order they did inside the code file.
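If you only need the token text and its order, Ripper.tokenize is a shorter alternative to Ripper.lex: it returns just the strings. The quick check below confirms that the tokens for n/4+6 come out in source order; dropping the whitespace-only entries is an extra step added here for readability.

```ruby
require 'ripper'

code = "10.times do |n|\n  puts n/4+6\nend\n"

# Ripper.tokenize returns the text of every token, whitespace included,
# in source order; joining the pieces reproduces the original string.
all_texts = Ripper.tokenize(code)
visible   = all_texts.reject { |t| t.strip.empty? }

p visible
p all_texts.join == code
```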

Here’s a third, slightly more complex example:

    $ ruby lex3.rb
    array = []
    10.times do |n|
      array << n if n < 5
    end
    p array
    ...
     [[3, 2], :on_ident, "array"],
     [[3, 7], :on_sp, " "],
     [[3, 8], :on_op, "<<"],
     [[3, 10], :on_sp, " "],
     [[3, 11], :on_ident, "n"],
     [[3, 12], :on_sp, " "],
     [[3, 13], :on_kw, "if"],
     [[3, 15], :on_sp, " "],
     [[3, 16], :on_ident, "n"],
     [[3, 17], :on_sp, " "],
     [[3, 18], :on_op, "<"],
     [[3, 19], :on_sp, " "],
     [[3, 20], :on_int, "5"],
    ...

Here you can see that Ruby was smart enough to distinguish between << and < in the line “array << n if n < 5”. The characters << were converted to a single operator token, while the single < character that appeared later was converted into a simple less-than operator. When Ruby’s tokenize code finds one < character, it looks ahead to see whether a second < follows.
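This look-ahead amounts to taking the longest operator that matches (often called “maximal munch”). The hypothetical scan_lt helper below sketches it for the operators that begin with <: <, <=, <<, <=> and <<=. It is a simplification; Ruby’s real code must also recognize heredocs such as <<STR, which this sketch ignores.

```ruby
# Hypothetical helper: src[pos] is known to be "<". Looks ahead to pick the
# longest operator starting there and returns [operator, next_pos].
def scan_lt(src, pos)
  nxt = src[pos + 1]
  if nxt == "<"
    src[pos + 2] == "=" ? ["<<=", pos + 3] : ["<<", pos + 2]
  elsif nxt == "="
    src[pos + 2] == ">" ? ["<=>", pos + 3] : ["<=", pos + 2]
  else
    ["<", pos + 1]
  end
end

line = "array << n if n < 5"
p scan_lt(line, line.index("<<"))   # ["<<", 8]
p scan_lt(line, line.rindex("<"))   # ["<", 17]
```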

Finally, notice that Ripper.lex has no idea whether the code you give it is valid Ruby or not. If I pass in code that contains a syntax error, Ripper will just tokenize it as usual and not complain. It’s the parser’s job to check syntax, which I’ll get to in the next section.

    require 'ripper'
    require 'pp'
    code = <<STR
    10.times do |n
      puts n
    end
    STR
    puts code
    pp Ripper.lex(code)

Here I forgot the | symbol after the block parameter n. Running this, I get:

    $ ruby lex4.rb
    10.times do |n
      puts n
    end
    ...
    [[[1, 0], :on_int, "10"],
     [[1, 2], :on_period, "."],
     [[1, 3], :on_ident, "times"],
     [[1, 8], :on_sp, " "],
     [[1, 9], :on_kw, "do"],
     [[1, 11], :on_sp, " "],
     [[1, 12], :on_op, "|"],
     [[1, 13], :on_ident, "n"],
     [[1, 14], :on_nl, "\n"],
    ...
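You can see this division of labor directly: the same broken snippet lexes without complaint, but Ripper.sexp, which also runs Ripper’s copy of the parser, returns nil when the code contains a syntax error. This is only a quick check; how the parser works is the subject of the next section.

```ruby
require 'ripper'

broken = "10.times do |n\n  puts n\nend\n"    # missing the closing |
fixed  = "10.times do |n|\n  puts n\nend\n"

# The lexer still produces tokens for the broken snippet...
tokens = Ripper.lex(broken)
puts "lexed #{tokens.size} tokens"

# ...but Ripper.sexp, which also parses, returns nil on a syntax error.
p Ripper.sexp(broken).nil?
p Ripper.sexp(fixed).nil?
```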