CHAPTER 61

Robocop on Wall Street

By Michael Berns

Director AI and FinTech, PwC

Robocop on Wall Street – this futuristic term coined by a journalist to describe one of the leading RegTech vendors sums up the revolutionary advances in artificial intelligence (AI) which are rapidly and seamlessly bridging the gap between legal/regulatory requirements and technology.

Setting the Scene – The Why

Let’s start with “why”. Why is AI such a powerful tool for legal and compliance use cases? Financial crime has been a thorn in the side of financial services firms ever since financial markets were created, long before the financial crisis. Post crisis, the shock to the entire system and the increased regulatory scrutiny have brought a lot of new financial crime cases to light.

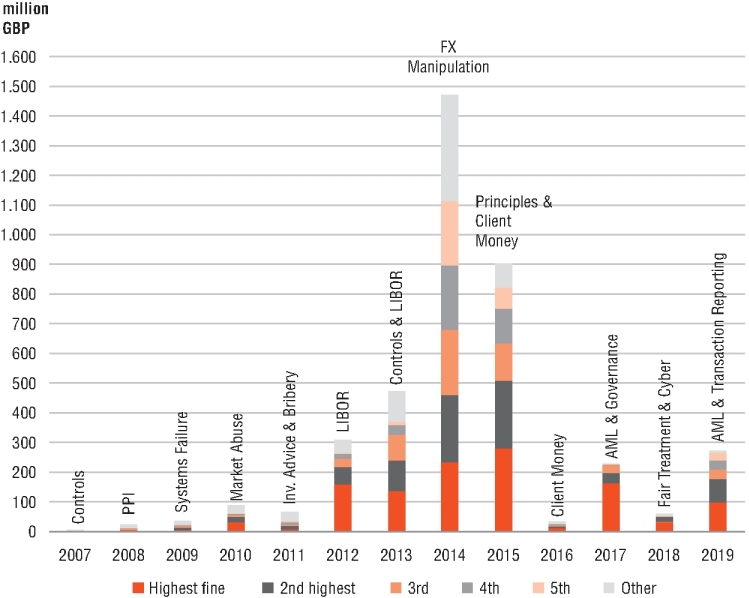

Reviewing Figure 61.1, it is clear that:

- The fines in the early years were low (even during 2002–2006 they did not exceed GBP 25 million per year).

- They did not immediately peak after the financial crisis, but only a few years later in 2014–2015, as regulators first prioritized monitoring the survival of banks and tightening up regulatory requirements such as Basel III/IV. When some of these big cases like LIBOR manipulation were uncovered, it became clear that this practice had been going on since the nineties, but no fines had been imposed in earlier years.1

Figure 61.1 UK regulatory fines (Financial Services Authority/Financial Conduct Authority)2

No matter whether the focus is on Europe, Asia, or the complex regulatory regimes in the US, with the FRB (Federal Reserve Board), OCC (Office of the Comptroller of the Currency), FDIC (Federal Deposit Insurance Corporation), SEC (Securities and Exchange Commission) and CFTC (Commodity Futures Trading Commission), the picture is very clear: a highly significant increase in regulatory fines over the last 10 years.3,4

One can observe, on the one hand, an increase in regulatory fines and, on the other, the typical buying cycle of compliance-related software, which previously ran to somewhere around 4–5 years. The fact that regulatory fines in the US have recently stopped increasing, owing to political moves to deregulate, has not changed the overall global picture.5 No bank can afford to sit on the sidelines in times of multibillion-dollar fines, with bank licenses for whole countries being at risk if immediate and firm action is not taken to tackle the underlying financial crimes.

Plagued by historical structural issues such as outdated technology, operating in silos and limited transparency, financial institutions have no choice but to look outside for innovations and faster solutions. In this escalating situation, the arrival of FinTech (financial technology), or more specifically RegTech (regulatory technology), is very important.

Some of the key players in this area are innovative companies with only a few years under their belt and few enterprise references; companies that would not have been given the time of day previously, without the urgent call to action and the imperative need to solve old issues differently.

Financial institutions are under pressure to empower their staff to do more in less time. Legal risk and regulatory use cases frequently involve vast amounts of unstructured data, which takes humans a very long time to go through and decipher. AI, however, is particularly good at recognizing patterns or behaviour in masses of data, which allows a solution to reduce the vast number of false positives while at the same time uncovering new, complex cases (increasing true positives). This is the typical signal-to-noise, or needle-in-the-haystack, scenario.

Mapping out the RegTech and Legal Risk AI Landscape (The What)

Having reviewed the market conditions and the reasons why AI is well suited to legal risk and regulatory use cases, this section highlights potential solutions in these fields. The increase in the amount of fines is directly correlated with a significant increase in the number of regulatory changes per year, at least during the post-financial crisis period.

This in turn led to a significant increase in the number of RegTech firms in the same period, from just over a hundred during the financial crisis to around 500 in 2018. In the last few years, more and more of the technology has also been used by the regulators and supervisors themselves, leading to the term SupTech being coined in 2017.6

At a high level, RegTech and legal risk solutions typically fall into the following five main categories:

- General compliance/legal processes, e.g. optimization.

- Monitoring, e.g. contract analysis, AML, KYC, behaviours, verification, conduct, surveillance.

- Risk, e.g. monitor limits and stress testing.

- Legal/regulatory analysis (identification & interpretation).

- Reporting (regulatory & management).

From an AI perspective, monitoring and legal/regulatory analysis are the most relevant fields, while general compliance/legal is an automation game (using robotic process automation and other technologies, for example). Some AI solutions are emerging for risk, but it will be some time before additional AI factors are accepted as part of a credit risk model, and this will depend very much on the regulator and the state of “explainable AI”.

Key Building Blocks of AI Solutions Addressing Legal Risk and Regulation (The How)

Having elaborated on the why and the what, the focus shifts to the how – how are AI solutions in these segments typically built – what are the key components?

Figure 61.2 highlights that A1 and A2 are different input channels with a particular focus on unstructured data, via news, email, contracts, voice and chat. It is often the case that AI is used to enrich structured data (such as employee or client records) with unstructured data.

Figure 61.2 Key components (created and simplified by the author)

For data integration (B1), quite a few solutions have their own proprietary ETL (extract, transform, load) tool to map the data in; the rest typically use standard ETL/data integration tools. Clients might prefer using their own big data infrastructure (B2), such as Hadoop and Spark, or be comfortable scaling via cloud services (B3). If the solution is focused on leveraging unstructured data such as communications, the engine (C) might include a natural language processing (NLP) pre-processing stage to let the algorithms detect sentence structure, context and intent before running a number of specific machine learning models to search for certain patterns over time.
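As a rough illustration of the two-stage engine (C) described above, the following Python sketch first pre-processes text into sentences and tokens and then looks for a pattern over time. Everything in it is an assumption made for illustration: the `Message` record, the `RISK_TERMS` watch list and the `flag_senders` rule are hypothetical stand-ins, and a simple keyword match takes the place of the trained machine learning models a real solution would use.

```python
from __future__ import annotations

import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical record for one piece of unstructured communication."""
    sender: str
    day: str   # ISO date string, e.g. "2019-03-01"
    text: str


# Hypothetical watch list; a real engine would rely on trained models.
RISK_TERMS = {"fix", "rate", "offline", "delete"}


def preprocess(text: str) -> list[list[str]]:
    """Pre-processing stage: split into sentences, then lower-case tokens."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [re.findall(r"[a-z']+", s.lower()) for s in sentences if s]


def score(message: Message) -> int:
    """Count watch-list hits across all sentences of one message."""
    return sum(
        token in RISK_TERMS
        for sentence in preprocess(message.text)
        for token in sentence
    )


def flag_senders(messages: list[Message], min_days: int = 2) -> list[str]:
    """Pattern over time: senders with hits on at least `min_days` days."""
    days_with_hits: Counter = Counter()
    seen: set = set()
    for m in messages:
        key = (m.sender, m.day)
        if score(m) > 0 and key not in seen:
            seen.add(key)
            days_with_hits[m.sender] += 1
    return sorted(s for s, n in days_with_hits.items() if n >= min_days)


if __name__ == "__main__":
    sample = [
        Message("alice", "2019-03-01", "Can you fix the rate? Call me."),
        Message("alice", "2019-03-02", "Let's take this offline."),
        Message("bob", "2019-03-01", "Lunch at noon?"),
    ]
    print(flag_senders(sample))
```

The point of the sketch is the separation of concerns: the pre-processing stage only structures the text, while the detection stage decides what counts as a pattern, so either can be replaced without touching the other.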

A key question is always how good the models are out of the box in terms of precision and recall, or how much additional training (i.e. supervised learning) is required to reach a reasonable level. Finally, (D) provides the interface (be it via highlighting/comparison, a knowledge graph or other means).
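To make the precision and recall measures mentioned above concrete: precision is the share of flagged cases that turn out to be genuine, TP / (TP + FP), and recall is the share of genuine cases that were flagged, TP / (TP + FN). The short Python sketch below computes both from two sets of case identifiers. The function name and the sample identifiers are hypothetical and chosen purely for illustration.

```python
def precision_recall(flagged: set, genuine: set) -> tuple:
    """Return (precision, recall) for a set of flagged vs. genuine cases."""
    true_pos = len(flagged & genuine)    # flagged and genuine
    false_pos = len(flagged - genuine)   # flagged but not genuine
    false_neg = len(genuine - flagged)   # genuine but missed
    precision = true_pos / (true_pos + false_pos) if flagged else 0.0
    recall = true_pos / (true_pos + false_neg) if genuine else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical alerts raised by a model vs. cases confirmed by analysts.
    flagged_cases = {"c1", "c2", "c3", "c4"}
    genuine_cases = {"c2", "c4", "c7"}
    p, r = precision_recall(flagged_cases, genuine_cases)
    print(f"precision={p:.2f} recall={r:.2f}")
```

In this made-up sample, two of the four alerts are genuine and one genuine case is missed, so a model can look acceptable on one measure while falling short on the other, which is why both are usually reported together.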

Judgement and Liability

There is no denying that AI is currently very much on the radar. Since March 2018, people’s view of AI has fundamentally changed following the Cambridge Analytica (CA) scandal and the subsequent investigations into Facebook. CA claimed to have been involved in more than 200 elections, including Trump’s election and the Brexit referendum, both of which were closely decided, and as a result the ethics of companies using AI to endlessly harvest data have been severely questioned.7

In the meantime, a new US antitrust suit is about to be launched against the tech giants, supposedly also over ethical considerations, customer data and the oversight of AI research.8 There is a strong trend among financial services institutions and regulators to look outside the box to harness technical solutions that were previously only available to governments. For a few years, there has been a steady increase in ex-military and intelligence profilers joining financial services companies to apply AI to tackle financial crime.9

In conclusion, just as with the arrival of the internet for the broad public in the nineties, the world now has a chance to participate in something that will fundamentally change the way people search for relevant information, develop solutions to age-old problems, tackle financial crime and influence how financial services firms and regulators think. There is no denying that “data is the new oil”, and one can either participate in this journey of innovation or stand by and watch.

Notes

- 1“Wall Street gets slammed with $5.8 billion in fines for rate rigging”, Business Insider, 20 May 2015.

- 2Created by the author based on publicly available data. Note that it is difficult to consistently capture all of the data in one graph, as some of the claims are still being collected (in the case of PPI for example). “Wall Street gets slammed with $5.8 billion in fines for rate rigging”, Business Insider, 20 May 2015.

- 3“SFC slaps banks with huge fines for DD failures”, Funds Selector Asia, 15 March 2019.

- 4“Fines totalling S$16.8 m slapped on 42 financial firms in Singapore in 18 months ended December ’18”, Business Times, 20 March 2019.

- 5“Wall Street Faces Fewer Fines in 2019 as SEC Democrat Departs”, Bloomberg, 19 December 2018.

- 6“Innovative technology in financial supervision (Suptech) – the experience of early users”, BIS, July 2018.

- 7“Cambridge Analytica: The data firm’s global influence”, BBC, 22 March 2018.

- 8“The DOJ’s Antitrust Chief Just Came out Swinging Against Google and Amazon”, Fortune, 11 June 2019.

- 9“HSBC recruits ex-head of MI5 to shore up financial crime defence”, Financial Times, 23 May 2013.