CHAPTER 8

Working with Terraform

This chapter introduces Terraform, a tool that helps you put the Multicloud concept into practice by letting you create the same infrastructure across all the Cloud players in the same automated way.

Structure

In this chapter, we will discuss the following topics:

- Multicloud

- HashiCorp

- Introduction to Terraform

- Creating the Infrastructure

Objectives

After studying this unit, you should be able to:

- Know about HashiCorp tools

- Explain what Multicloud is

- Use Terraform to provision Infrastructure

- Keep all your Infrastructure as Code

Multicloud

We learned in the previous chapter that there are many Cloud providers available. The most famous are AWS, GCP, and Azure, but there are also other options, like IBM Cloud, Oracle Cloud, and DigitalOcean. Each one of them has its own services, which can be the criteria for choosing among them. However, what can you do when you need services from several of them at once? For example, you must use Azure Active Directory for authentication, which is only available on Azure. You also need BigQuery to analyze your data, which is only available on GCP, and DynamoDB, which is only available on AWS. On top of that, you have an on-premises infrastructure based on OpenStack. When you need to use many Cloud providers like this, we call that scenario Multicloud. The challenge now is how to manage all that Infrastructure in the same way, ideally with the same tools.

HashiCorp

HashiCorp is a company that created many tools to help you manage infrastructure across different environments, like AWS, GCP, Azure, DigitalOcean, etc. We already have experience with their tools: Vagrant, which we used for our development environments, is part of the HashiCorp stack. In their stack, you can find the following tools:

- Packer (https://www.packer.io/): A tool to create your own Cloud images. In the Vagrant chapter, we used the Ubuntu Cloud image to create our VMs. If you want a custom image that already contains your application, or one that has gone through a security pipeline, Packer is the tool to build it.

- Vagrant (https://www.vagrantup.com/): You already know this tool. We used it to create many virtual machines and test our applications.

- Nomad (https://www.hashicorp.com/products/nomad/): An orchestrator that deploys your applications across different environments, like public or private Clouds.

- Consul (https://www.hashicorp.com/products/consul/): Commonly used for service discovery and as a service mesh.

- Vault (https://www.hashicorp.com/products/vault/): A tool to manage your secrets. It is an alternative to the secret services offered by the Cloud providers, like Azure Key Vault, with some differences, such as rotating secrets automatically.

- Terraform (https://www.hashicorp.com/products/terraform/): Our focus in this chapter. I will show you how to create Infrastructure in an easy way and keep it under control in a few code files.

Introduction to Terraform

Terraform is a tool to create and manage your Infrastructure as Code, like we did using Vagrant. Here, however, we go into more detail and use public Clouds, focusing on production environments rather than just laboratories, as we did earlier.

You can download the open-source version from the following link:

https://www.terraform.io/downloads.html

You just need to unzip the file and add the folder that contains the terraform binary to the PATH environment variable. After that, run the following command to check the installation:

PS C:\Users\1511 MXTI> terraform --version

Terraform v0.12.24

If you have problems with the installation, HashiCorp has a tutorial explaining how to do it step-by-step: https://learn.hashicorp.com/tutorials/terraform/install-cli

At the time of writing, the current version is v0.12.24, which is the version installed and used in this chapter.

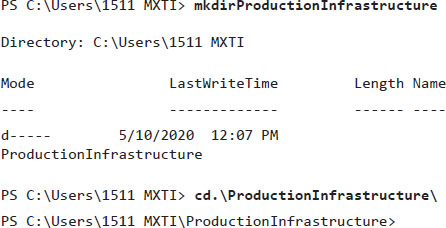

Now, we can create the files and our first VM using a Cloud provider. I will start with GCP, which is the Cloud provider that I use for my personal projects. The first step is creating a folder where you will store your code.

Of course, the best practice is to split your code into many files, because it makes the code reusable and easier to manage. But in this chapter, we will just create one virtual machine to learn the Terraform syntax and understand how it works, so I will put everything in one single file.

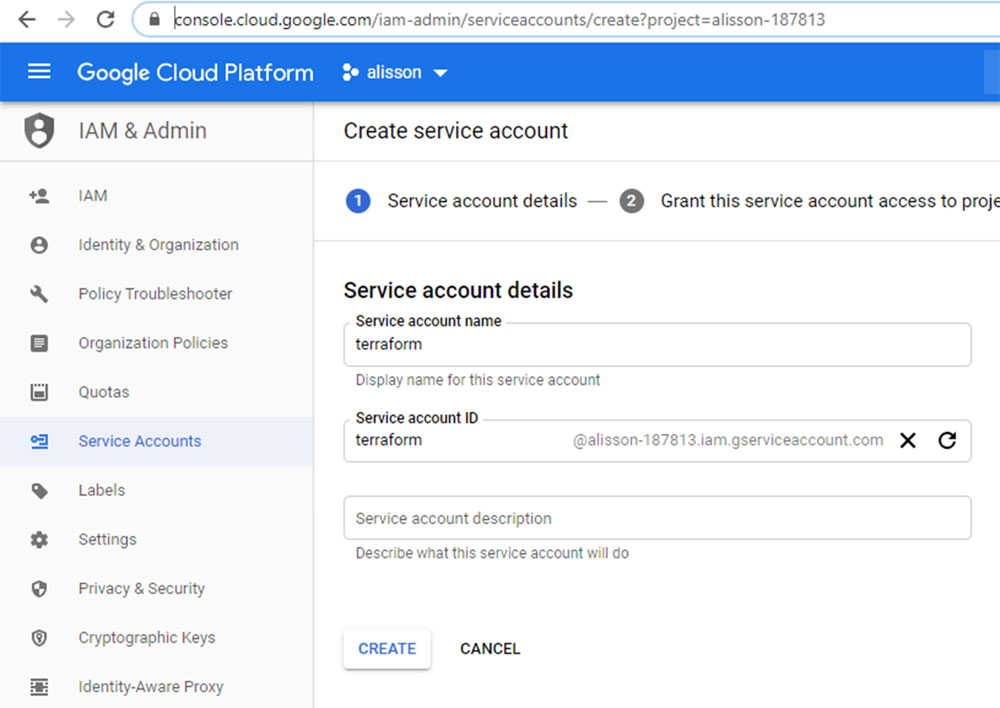

To begin with, we need to create a service account. It works like a username and password that Terraform uses to create resources on your behalf. Open your Google Cloud Console (https://console.cloud.google.com/), go to IAM & Admin > Service Accounts, click Create service account, and create an account called terraform, as shown in the following screenshot:

Figure 8.1

Click Create, and then select the Compute Admin role. This role allows the service account to manage all Compute Engine resources, which include our VMs.

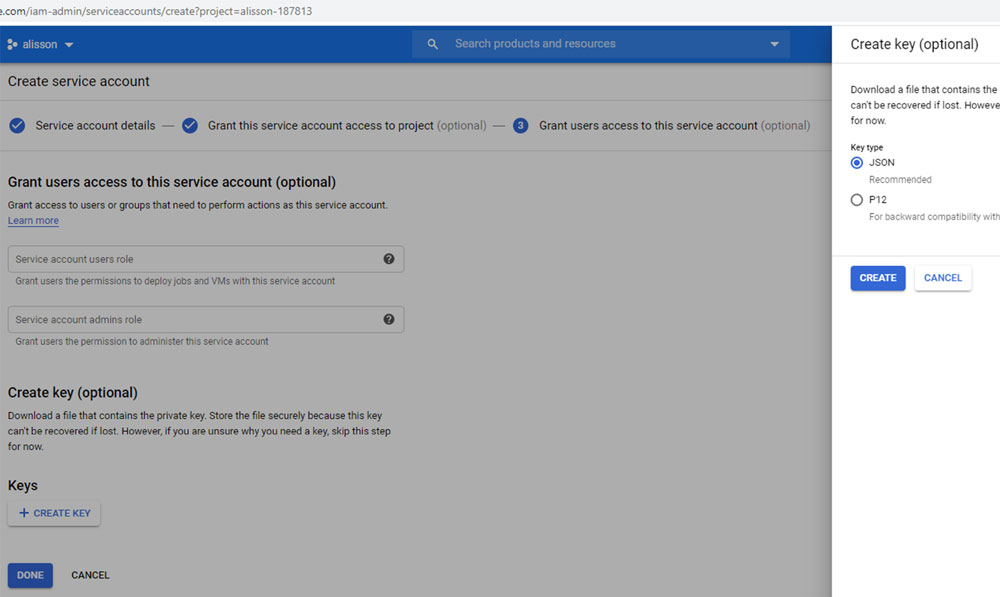

Then, you must create a key in the JSON format as shown in the following screenshot:

Figure 8.2

Now, you have the service account configured and ready to use with Terraform. Copy the downloaded JSON key into the folder we created for the Terraform code, and rename it to terraform_sa.json, which is the name the code below refers to. In the same folder, create a new file called infrastructure.tf with the following content:

provider "google" {
  credentials = file("terraform_sa.json")
  project     = "alisson-187813"
  region      = "us-east1"
}

This block tells Terraform to use the Google provider. Providers are plugins that act as the interface between your Terraform code and the platform you want to interact with. For example, I once had to manage a DNS server on Linux (BIND 9). The way to do it with Terraform was through the TSIG protocol, so I used the DNS provider. In the case of Azure, I used the AzureRM provider. If you want to know more about that, I have two posts on my personal blog:

Using Azure and Terraform: http://alissonmachado.com.br/terraform-azure-criando-uma-infraestrutura-basica/

Managing DNS using Terraform and TSIG: http://alissonmachado.com.br/terraform-gerenciando-dns-com-tsig/
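To picture what this looks like in a Multicloud setup, here is a minimal sketch of one configuration that declares the Google, AzureRM, and DNS providers side by side. It is not taken from the blog posts. The DNS server address (a documentation IP), the TSIG key name, and the secret are placeholders. The AzureRM block assumes you are already logged in with the Azure CLI, and it pins the 2.x series, in which an empty features block is required.

```hcl
# Google Cloud, as used in this chapter
provider "google" {
  credentials = file("terraform_sa.json")
  project     = "alisson-187813"
  region      = "us-east1"
}

# Azure: pinned to the 2.x series assumed by this sketch,
# which requires an (empty) features block.
# Credentials are assumed to come from "az login".
provider "azurerm" {
  version = "~> 2.0"
  features {}
}

# A DNS server (for example, BIND 9) updated with TSIG-signed dynamic updates.
# Every value below is a placeholder, not a real setting.
provider "dns" {
  update {
    server        = "192.0.2.10"
    key_name      = "example.com."
    key_algorithm = "hmac-md5"
    key_secret    = "c2VjcmV0LXBsYWNlaG9sZGVy"
  }
}
```

Running terraform init in a folder with this file would download all three provider plugins.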

Getting back to the code: providers do not come bundled with Terraform, so you must download them. Run the following command inside the project folder:

C:\Users\1511 MXTI\ProductionInfrastructure> terraform init

Initializing the backend…

Initializing provider plugins…

- Checking for available provider plugins…

- Downloading plugin for provider "google" (hashicorp/google) 3.20.0…

The following providers do not have any version constraints in configuration, so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking changes, it is recommended to add version = "…" constraints to the corresponding provider blocks in configuration, with the constraint strings suggested below.

* provider.google: version = "~> 3.20"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see any changes that are required for your infrastructure. All Terraform commands should now work.

If you ever set or change modules or backend configuration for Terraform, rerun this command to reinitialize your working directory. If you forget, other commands will detect it and remind you to do so if necessary.
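As the output suggests, it is a good idea to pin the provider version so that a future terraform init does not silently install a new major release. The following is a minimal sketch in Terraform 0.12 syntax that reuses the ~> 3.20 constraint printed above. The required_version value is an assumption based on the v0.12.24 CLI installed earlier.

```hcl
terraform {
  # Refuse to run with a Terraform CLI older than the one used in this chapter
  required_version = ">= 0.12.24"
}

provider "google" {
  # Accept 3.20 and later 3.x releases, but never move to 4.0 automatically
  version     = "~> 3.20"
  credentials = file("terraform_sa.json")
  project     = "alisson-187813"
  region      = "us-east1"
}
```

In Terraform 0.13 and later, the preferred place for provider constraints is a required_providers block inside the terraform block. The version argument shown here is the 0.12 style.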

Now that the provider is installed on your local machine, you can create the Infrastructure. Add the following code to create our first instance:

provider "google" {
  credentials = file("terraform_sa.json")
  project     = "alisson-187813"
  region      = "us-east1"
}

resource "google_compute_instance" "chapter8" {
  name         = "chapter8-instance"
  machine_type = "g1-small"
  zone         = "us-east1-b"

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    access_config {
    }
  }
}

In the preceding code, we declared a resource of type google_compute_instance. It corresponds to a VM like the ones we created in the previous chapter, but now it is managed by Terraform. This instance will be created from the Ubuntu 18.04 image in the zone us-east1-b. For the other details, like the networking configuration, we use the defaults set by Google: the default network, plus an empty access_config block, which gives the instance an ephemeral public IP address.
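Hard-coding the project, zone, and machine type is fine for a single VM, but input variables make the same code reusable across projects. The sketch below is one way to do that, meant to replace the code above rather than sit next to it. The variable names are illustrative choices, not something Terraform requires, and the defaults repeat the values used above.

```hcl
# Input variables with defaults; override them with -var or a .tfvars file
variable "project" {
  type    = string
  default = "alisson-187813"
}

variable "zone" {
  type    = string
  default = "us-east1-b"
}

variable "machine_type" {
  type    = string
  default = "g1-small"
}

provider "google" {
  credentials = file("terraform_sa.json")
  project     = var.project
  region      = "us-east1"
}

resource "google_compute_instance" "chapter8" {
  name         = "chapter8-instance"
  machine_type = var.machine_type
  zone         = var.zone

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    # Empty block: Google assigns an ephemeral public IP
    access_config {
    }
  }
}
```

For example, running terraform plan -var="zone=us-east1-c" would plan the same VM in another zone of the region without editing the file.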

The first thing to do after writing new Terraform code is to run the following command:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform plan

Refreshing Terraform state in-memory prior to plan…

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

------------------------------------------------------------------------

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# google_compute_instance.chapter8 will be created

+ resource "google_compute_instance" "chapter8" {

+ can_ip_forward = false

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = false

+ guest_accelerator = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ machine_type = "g1-small"

+ metadata_fingerprint = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = "chapter8-instance"

+ project = (known after apply)

+ self_link = (known after apply)

+ tags_fingerprint = (known after apply)

+ zone = "us-east1-b"

+ boot_disk {

+ device_name = (known after apply)

+ disk_encryption_key_sha256 = (known after apply)

+ kms_key_self_link = (known after apply)

+ mode = "READ_WRITE"

+ source = (known after apply)

+ initialize_params {

+ image = "ubuntu-1804-bionic-v20200414"

+ labels = (known after apply)

+ size = (known after apply)

+ type = (known after apply)

}

}

+ network_interface {

+ name = (known after apply)

+ network = "default"

+ network_ip = (known after apply)

+ subnetwork = (known after apply)

+ subnetwork_project = (known after apply)

+ access_config {

+ nat_ip = (known after apply)

+ network_tier = (known after apply)

}

}

+ scheduling {

+ automatic_restart = (known after apply)

+ on_host_maintenance = (known after apply)

+ preemptible = (known after apply)

+ node_affinities {

+ key = (known after apply)

+ operator = (known after apply)

+ values = (known after apply)

}

}

}

Plan: 1 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform can't guarantee that exactly these actions will be performed if "terraform apply" is subsequently run.

The terraform plan command checks your project on Google and compares it with your code to find what differs between them. In this case, we can see that everything we declared will be created, because of the plus sign (+) in front of the lines. If a resource is going to be destroyed, you will see a minus sign (-) instead. Also, the last line summarizes the plan:

Plan: 1 to add, 0 to change, 0 to destroy.

That line is a relief: nothing will be destroyed. Terraform is a great tool, but you need to be careful before running its commands; otherwise, you can destroy all your Infrastructure with it. There is a famous saying about that:

If you want to make mistakes on all your production servers at once, in an automated way, this is DevOps!

Continuing with our Infrastructure, now that we are sure nothing will be deleted, we can run the apply command to create the instance:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform apply

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# google_compute_instance.chapter8 will be created

+ resource "google_compute_instance" "chapter8" {

+ can_ip_forward = false

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = false

+ guest_accelerator = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ machine_type = "g1-small"

+ metadata_fingerprint = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = "chapter8-instance"

+ project = (known after apply)

+ self_link = (known after apply)

+ tags_fingerprint = (known after apply)

+ zone = "us-east1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_encryption_key_sha256 = (known after apply)

+ kms_key_self_link = (known after apply)

+ mode = "READ_WRITE"

+ source = (known after apply)

+ initialize_params {

+ image = "ubuntu-1804-bionic-v20200414"

+ labels = (known after apply)

+ size = (known after apply)

+ type = (known after apply)

}

}

+ network_interface {

+ name = (known after apply)

+ network = "default"

+ network_ip = (known after apply)

+ subnetwork = (known after apply)

+ subnetwork_project = (known after apply)

+ access_config {

+ nat_ip = (known after apply)

+ network_tier = (known after apply)

}

}

+ scheduling {

+ automatic_restart = (known after apply)

+ on_host_maintenance = (known after apply)

+ preemptible = (known after apply)

+ node_affinities {

+ key = (known after apply)

+ operator = (known after apply)

+ values = (known after apply)

}

}

}

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

google_compute_instance.chapter8: Creating…

google_compute_instance.chapter8: Still creating… [10s elapsed]

google_compute_instance.chapter8: Creation complete after 14s [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

As you can see, terraform apply runs the plan again before doing anything, so that you know exactly what will change in your infrastructure. Only after you type yes is the instance created.
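Because a single apply can also destroy resources, it can be worth adding guards to important ones. The sketch below assumes you want to protect this instance. lifecycle { prevent_destroy = true } is a Terraform meta-argument that makes any plan that would destroy the resource fail. deletion_protection is a google_compute_instance argument that asks GCP itself to refuse the deletion. If you try this, remove both before running terraform destroy later in this chapter.

```hcl
resource "google_compute_instance" "chapter8" {
  name         = "chapter8-instance"
  machine_type = "g1-small"
  zone         = "us-east1-b"

  # GCP-side guard: the API rejects deletion while this is true
  deletion_protection = true

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    access_config {
    }
  }

  # Terraform-side guard: any plan that would destroy this resource fails
  lifecycle {
    prevent_destroy = true
  }
}
```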

The instance was created successfully, but we do not have any information about its public IP address, which we need to connect to it. To get this information, we use a Terraform block called output. Add the following blocks at the end of your current code:

output "name" {
  value = google_compute_instance.chapter8.name
}

output "size" {
  value = google_compute_instance.chapter8.machine_type
}

output "public_ip" {
  value = google_compute_instance.chapter8.network_interface[0].access_config[0].nat_ip
}

The complete file will look like this:

provider "google" {
  credentials = file("terraform_sa.json")
  project     = "alisson-187813"
  region      = "us-east1"
}

resource "google_compute_instance" "chapter8" {
  name         = "chapter8-instance"
  machine_type = "g1-small"
  zone         = "us-east1-b"

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    access_config {
    }
  }
}

output "name" {
  value = google_compute_instance.chapter8.name
}

output "size" {
  value = google_compute_instance.chapter8.machine_type
}

output "public_ip" {
  value = google_compute_instance.chapter8.network_interface[0].access_config[0].nat_ip
}

Save the file and run terraform plan to check what will change:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform plan

Refreshing Terraform state in-memory prior to plan…

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

google_compute_instance.chapter8: Refreshing state… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

------------------------------------------------------------------------

No changes. Infrastructure is up-to-date.

This means that Terraform did not detect any differences between your configuration and real physical resources that exist. As a result, no actions need to be performed.

The plan reports no changes because we are not changing the Infrastructure; we only changed the code to retrieve some information from it. Thus, you can run terraform apply:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform apply

google_compute_instance.chapter8: Refreshing state… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

Outputs:

name = chapter8-instance

public_ip = 104.196.24.122

size = g1-small

Nothing was added, changed, or destroyed, but, as you can see, the command showed us the name, the public_ip, and the size of the instance. If you want to see these values again, run terraform output:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform output

name = chapter8-instance

public_ip = 104.196.24.122

size = g1-small

Even so, we cannot access the instance yet, because we did not define any SSH key or password. We can fix that now by modifying the code again:

resource "google_compute_instance" "chapter8" {
  name         = "chapter8-instance"
  machine_type = "g1-small"
  zone         = "us-east1-b"

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    access_config {
    }
  }

  metadata = {
    sshKeys = join("", ["alisson:", file("id_rsa.pub")])
  }
}

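I copied id_rsa.pub from my default SSH key pair into the project folder. The metadata value uses the format user:public-key, so this creates the user alisson on the instance with that key. The key pair can usually be found inside the following folder: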

ls ~/.ssh
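As an alternative sketch, the same metadata can be written with string interpolation and pathexpand, which reads the key straight from ~/.ssh instead of requiring a copy in the project folder. This version uses the ssh-keys metadata key, which is the name Google documents today. sshKeys, used above, is the older, deprecated name. The fragment assumes the same user alisson and a key at the default path.

```hcl
# Fragment: replaces the metadata block inside
# resource "google_compute_instance" "chapter8"
metadata = {
  # "<user>:<public key>"; pathexpand turns "~" into your home folder
  "ssh-keys" = "alisson:${file(pathexpand("~/.ssh/id_rsa.pub"))}"
}
```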

Now, the complete code is as follows:

provider "google" {
  credentials = file("terraform_sa.json")
  project     = "alisson-187813"
  region      = "us-east1"
}

resource "google_compute_instance" "chapter8" {
  name         = "chapter8-instance"
  machine_type = "g1-small"
  zone         = "us-east1-b"

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    access_config {
    }
  }

  metadata = {
    sshKeys = join("", ["alisson:", file("id_rsa.pub")])
  }
}

output "name" {
  value = google_compute_instance.chapter8.name
}

output "size" {
  value = google_compute_instance.chapter8.machine_type
}

output "public_ip" {
  value = google_compute_instance.chapter8.network_interface[0].access_config[0].nat_ip
}

As we add more things to our code, Terraform gets more and more interesting. Let's run terraform plan to see the new changes:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform plan

Refreshing Terraform state in-memory prior to plan…

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

google_compute_instance.chapter8: Refreshing state… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

------------------------------------------------------------------------

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# google_compute_instance.chapter8 will be updated in-place

~ resource "google_compute_instance" "chapter8" {

can_ip_forward = false

cpu_platform = "Intel Haswell"

current_status = "RUNNING"

deletion_protection = false

enable_display = false

guest_accelerator = []

id = "projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance"

instance_id = "9170008912891805003"

label_fingerprint = "42WmSpB8rSM="

labels = {}

machine_type = "g1-small"

~ metadata = {

+ "sshKeys" = <<~EOT

alisson:ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQChi8HX26xv9Rk9gz47Qhb+Tu7MRqGIyPxnheIeEgFad/dlqG4w4pY7y5dtx5LNGE9C01varco5dZagqsHplI7M+5ECSvjAuS6b5rkYZwZiZruDXxckcQHFpr2yIz3DOzKRTUc5Hg5JHF5aymiqyVfTsxL/aI/LDY8Ikh+INn3S9+b5bZtU+74tA6yuqth5SCtNSWwMUlv7QL6ONHtQiviAjBe+ksDBBV6thWz2ZIJA/jApSIBJWK9AWmZwq2hFy9sOZArUDB2Kt6kl3rIZnHpqJ/GMUCxFhtggYamJ5J2H6277qLFqLZ/8tum9uc5l/lSWYKTDm2+E/prQfmFrxPf9 alisson

EOT

}

metadata_fingerprint = "bxkbv_NPHas="

name = "chapter8-instance"

project = "alisson-187813"

resource_policies = []

self_link = "https://www.googleapis.com/compute/v1/projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance"

tags = []

tags_fingerprint = "42WmSpB8rSM="

zone = "us-east1-b"

boot_disk {

auto_delete = true

device_name = "persistent-disk-0"

mode = "READ_WRITE"

source = "https://www.googleapis.com/compute/v1/projects/alisson-187813/zones/us-east1-b/disks/chapter8-instance"

initialize_params {

image = "https://www.googleapis.com/compute/v1/projects/ubuntu-os-cloud/global/images/ubuntu-1804-bionic-v20200414"

labels = {}

size = 10

type = "pd-standard"

}

}

network_interface {

name = "nic0"

network = "https://www.googleapis.com/compute/v1/projects/alisson-187813/global/networks/default"

network_ip = "10.142.0.3"

subnetwork = "https://www.googleapis.com/compute/v1/projects/alisson-187813/regions/us-east1/subnetworks/default"

subnetwork_project = "alisson-187813"

access_config {

nat_ip = "104.196.24.122"

network_tier = "PREMIUM"

}

}

scheduling {

automatic_restart = true

on_host_maintenance = "MIGRATE"

preemptible = false

}

shielded_instance_config {

enable_integrity_monitoring = true

enable_secure_boot = false

enable_vtpm = true

}

}

Plan: 0 to add, 1 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform can't guarantee that exactly these actions will be performed if "terraform apply" is subsequently run.

Finally, we can see our first change. Since the SSH keys are stored in the instance metadata, Terraform can modify the existing instance in place (the ~ symbol) instead of recreating it. That is why the change counter increases:

Plan: 0 to add, 1 to change, 0 to destroy.

So, one resource will change and nothing will be destroyed, which is the important part. Thus, we can run terraform apply:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform apply

google_compute_instance.chapter8: Refreshing state… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# google_compute_instance.chapter8 will be updated in-place

~ resource "google_compute_instance" "chapter8" {

can_ip_forward = false

cpu_platform = "Intel Haswell"

current_status = "RUNNING"

deletion_protection = false

enable_display = false

guest_accelerator = []

id = "projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance"

instance_id = "9170008912891805003"

label_fingerprint = "42WmSpB8rSM="

labels = {}

machine_type = "g1-small"

~ metadata = {

+ "sshKeys" = <<~EOT

alisson:ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQChi8HX26xv9Rk9gz47Qhb+Tu7MRqGIyPxnheIeEgFad/dlqG4w4pY7y5dtx5LNGE9C01varco5dZagqsHplI7M+5ECSvjAuS6b5rkYZwZiZruDXxckcQHFpr2yIz3DOzKRTUc5Hg5JHF5aymiqyVfTsxL/aI/LDY8Ikh+INn3S9+b5bZtU+74tA6yuqth5SCtNSWwMUlv7QL6ONHtQiviAjBe+ksDBBV6thWz2ZIJA/jApSIBJWK9AWmZwq2hFy9sOZArUDB2Kt6kl3rIZnHpqJ/GMUCxFhtggYamJ5J2H6277qLFqLZ/8tum9uc5l/lSWYKTDm2+E/prQfmFrxPf9 alisson

EOT

}

metadata_fingerprint = "bxkbv_NPHas="

name = "chapter8-instance"

project = "alisson-187813"

resource_policies = []

self_link = "https://www.googleapis.com/compute/v1/projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance"

tags = []

tags_fingerprint = "42WmSpB8rSM="

zone = "us-east1-b"

boot_disk {

auto_delete = true

device_name = "persistent-disk-0"

mode = "READ_WRITE"

source = "https://www.googleapis.com/compute/v1/projects/alisson-187813/zones/us-east1-b/disks/chapter8-instance"

initialize_params {

image = "https://www.googleapis.com/compute/v1/projects/ubuntu-os-cloud/global/images/ubuntu-1804-bionic-v20200414"

labels = {}

size = 10

type = "pd-standard"

}

}

network_interface {

name = "nic0"

network = "https://www.googleapis.com/compute/v1/projects/alisson-187813/global/networks/default"

network_ip = "10.142.0.3"

subnetwork = "https://www.googleapis.com/compute/v1/projects/alisson-187813/regions/us-east1/subnetworks/default"

subnetwork_project = "alisson-187813"

access_config {

nat_ip = "104.196.24.122"

network_tier = "PREMIUM"

}

}

scheduling {

automatic_restart = true

on_host_maintenance = "MIGRATE"

preemptible = false

}

shielded_instance_config {

enable_integrity_monitoring = true

enable_secure_boot = false

enable_vtpm = true

}

}

Plan: 0 to add, 1 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

google_compute_instance.chapter8: Modifying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

google_compute_instance.chapter8: Still modifying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 10s elapsed]

google_compute_instance.chapter8: Modifications complete after 13s [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Outputs:

name = chapter8-instance

public_ip = 104.196.24.122

size = g1-small
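Since the instance now accepts the alisson key, an extra output could also assemble the SSH command for us, so there is no need to copy the IP address by hand. This is a small sketch that assumes the user alisson configured in the metadata. The output name ssh_command is an arbitrary choice.

```hcl
output "ssh_command" {
  description = "Command to open an SSH session on the chapter 8 instance"
  value       = "ssh alisson@${google_compute_instance.chapter8.network_interface[0].access_config[0].nat_ip}"
}
```

After the next terraform apply, running terraform output ssh_command would print just that command.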

The changes were applied successfully, so we can test whether SSH access is working properly:

PS C:\Users\1511 MXTI\ProductionInfrastructure> ssh alisson@104.196.24.122

Welcome to Ubuntu 18.04.4 LTS (GNU/Linux 5.0.0-1034-gcp x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Mon May 11 13:21:39 UTC 2020

System load: 0.0 Processes: 94

Usage of /: 12.8% of 9.52GB Users logged in: 0

Memory usage: 13% IP address for ens4: 10.142.0.3

Swap usage: 0%

* Ubuntu 20.04 LTS is out, raising the bar on performance, security,

and optimization for Intel, AMD, Nvidia, ARM64, and Z15 as well as

AWS, Azure, and Google Cloud.

https://ubuntu.com/blog/ubuntu-20-04-lts-arrives

0 packages can be updated.

0 updates are security updates.

The programs included with the Ubuntu system are free software; the exact distribution terms for each program are described in the individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

alisson@chapter8-instance:~$

It works! We created everything by managing just a few lines of code, and now we have an instance up and running. The only time we needed the console was to create the service account. As a final step, let's destroy everything with the terraform destroy command and see how it works:

PS C:\Users\1511 MXTI\ProductionInfrastructure> terraform destroy

google_compute_instance.chapter8: Refreshing state… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# google_compute_instance.chapter8 will be destroyed

- resource "google_compute_instance" "chapter8" {

- can_ip_forward = false -> null

- cpu_platform = "Intel Haswell" -> null

- current_status = "RUNNING" -> null

- deletion_protection = false -> null

- enable_display = false -> null

- guest_accelerator = [] -> null

- id = "projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance" -> null

- instance_id = "9170008912891805003" -> null

- label_fingerprint = "42WmSpB8rSM=" -> null

- labels = {} -> null

- machine_type = "g1-small" -> null

- metadata = {

- "sshKeys" = <<~EOT

alisson:ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQChi8HX26xv9Rk9gz47Qhb+Tu7MRqGIyPxnheIeEgFad/dlqG4w4pY7y5dtx5LNGE9C01varco5dZagqsHplI7M+5ECSvjAuS6b5rkYZwZiZruDXxckcQHFpr2yIz3DOzKRTUc5Hg5JHF5aymiqyVfTsxL/aI/LDY8Ikh+INn3S9+b5bZtU+74tA6yuqth5SCtNSWwMUlv7QL6ONHtQiviAjBe+ksDBBV6thWz2ZIJA/jApSIBJWK9AWmZwq2hFy9sOZArUDB2Kt6kl3rIZnHpqJ/GMUCxFhtggYamJ5J2H6277qLFqLZ/8tum9uc5l/lSWYKTDm2+E/prQfmFrxPf9 alisson

EOT

} -> null

- metadata_fingerprint = "lZHcUBfXG-4=" -> null

- name = "chapter8-instance" -> null

- project = "alisson-187813" -> null

- resource_policies = [] -> null

- self_link = "https://www.googleapis.com/compute/v1/projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance" -> null

- tags = [] -> null

- tags_fingerprint = "42WmSpB8rSM=" -> null

- zone = "us-east1-b" -> null

- boot_disk {

- auto_delete = true -> null

- device_name = "persistent-disk-0" -> null

- mode = "READ_WRITE" -> null

- source = "https://www.googleapis.com/compute/v1/projects/alisson-187813/zones/us-east1-b/disks/chapter8-instance" -> null

- initialize_params {

- image = "https://www.googleapis.com/compute/v1/projects/ubuntu-os-cloud/global/images/ubuntu-1804-bionic-v20200414" -> null

- labels = {} -> null

- size = 10 -> null

- type = "pd-standard" -> null

}

}

- network_interface {

- name = "nic0" -> null

- network = "https://www.googleapis.com/compute/v1/projects/alisson-187813/global/networks/default" -> null

- network_ip = "10.142.0.3" -> null

- subnetwork = "https://www.googleapis.com/compute/v1/projects/alisson-187813/regions/us-east1/subnetworks/default" -> null

- subnetwork_project = "alisson-187813" -> null

- access_config {

- nat_ip = "104.196.24.122" -> null

- network_tier = "PREMIUM" -> null

}

}

- scheduling {

- automatic_restart = true -> null

- on_host_maintenance = "MIGRATE" -> null

- preemptible = false -> null

}

- shielded_instance_config {

- enable_integrity_monitoring = true -> null

- enable_secure_boot = false -> null

- enable_vtpm = true -> null

}

}

Plan: 0 to add, 0 to change, 1 to destroy.

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

google_compute_instance.chapter8: Destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 10s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 20s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 30s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 40s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 50s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 1m0s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 1m10s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 1m20s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 1m30s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 1m40s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 1m50s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 2m0s elapsed]

google_compute_instance.chapter8: Still destroying… [id=projects/alisson-187813/zones/us-east1-b/instances/chapter8-instance, 2m10s elapsed]

google_compute_instance.chapter8: Destruction complete after 2m19s

Destroy complete! Resources: 1 destroyed.

Perfect! Now nothing is left generating costs in our GCP account. If you want to create the Infrastructure again, just run terraform apply. This time, you will get the outputs with the IP address, and the SSH key will already be configured, in one shot. If you want to create more instances, copy and paste the resource block, changing both the resource label and the instance name, since each must be unique. The SSH key can be the same for all the instances.
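Copying and pasting the resource block works. Terraform can also create several identical instances from one block with the count meta-argument. The sketch below assumes two instances are wanted, and the name suffix scheme is an illustrative choice. With count, the resource becomes a list, so the output uses a splat expression ([*]) to collect every public IP. For the same reason, this would replace the earlier name, size, and public_ip outputs, which refer to a single instance.

```hcl
resource "google_compute_instance" "chapter8" {
  # Number of identical instances to create
  count = 2

  # count.index is 0, 1, ... so each instance gets a unique name
  name         = "chapter8-instance-${count.index}"
  machine_type = "g1-small"
  zone         = "us-east1-b"

  boot_disk {
    initialize_params {
      image = "ubuntu-1804-bionic-v20200414"
    }
  }

  network_interface {
    network = "default"

    access_config {
    }
  }

  # The same SSH key for every instance
  metadata = {
    sshKeys = join("", ["alisson:", file("id_rsa.pub")])
  }
}

# A list with one public IP per instance
output "public_ips" {
  value = google_compute_instance.chapter8[*].network_interface[0].access_config[0].nat_ip
}
```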

Conclusion

This chapter showed us that it is not necessary to learn the command line of every Cloud player or even install the SDK for each one of them. With Terraform, we can code the Infrastructure using the Terraform syntax, switch providers to make different integrations, and see in advance what will be changed, created, or destroyed. I really recommend exploring other providers to integrate with Azure, AWS, and DigitalOcean. Also try providers that are not tied to a Cloud player but can still help you provision Infrastructure, like the DNS provider.
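As a starting point for that exploration, here is a rough AWS counterpart of this chapter's VM. It is a sketch, not something run in this chapter. The region, the t2.micro instance type, and the Ubuntu 18.04 image lookup (Canonical's public AMIs, owner ID 099720109477) are assumptions. Credentials are expected to come from the usual AWS environment variables or ~/.aws/credentials, and the account is assumed to still have its default VPC. To actually SSH in, you would also need a key pair (key_name) and a security group that allows port 22, which this sketch leaves out.

```hcl
provider "aws" {
  region = "us-east-1"
}

# Look up the most recent Ubuntu 18.04 (Bionic) image published by Canonical
data "aws_ami" "ubuntu" {
  most_recent = true
  owners      = ["099720109477"]

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-*"]
  }
}

resource "aws_instance" "chapter8" {
  ami           = data.aws_ami.ubuntu.id
  instance_type = "t2.micro"

  tags = {
    Name = "chapter8-instance"
  }
}

output "public_ip" {
  value = aws_instance.chapter8.public_ip
}
```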