A string is an immutable sequence of bytes. Strings may contain arbitrary data, including bytes with value 0, but usually they contain human-readable text. Text strings are conventionally interpreted as UTF-8-encoded sequences of Unicode code points (runes), which we’ll explore in detail very soon.

The built-in len function returns the number of bytes (not runes) in a string,

and the index operation s[i] retrieves the i-th byte of

string s, where 0 ≤ i < len(s).

s := "hello, world"

fmt.Println(len(s)) // "12"

fmt.Println(s[0], s[7]) // "104 119" ('h' and 'w')

Attempting to access a byte outside this range results in a panic:

c := s[len(s)] // panic: index out of range

The i-th byte of a string is not necessarily the i-th character of a string, because the UTF-8 encoding of a non-ASCII code point requires two or more bytes. Working with characters is discussed shortly.

The substring operation s[i:j] yields a new string consisting of

the bytes of the original string starting at index

i and continuing up to, but not including, the byte at index j.

The result contains j-i bytes.

fmt.Println(s[0:5]) // "hello"

Again, a panic results if either index is out of bounds or if j is

less than i.

Either or both of the i and j operands may be omitted, in which

case the default values of 0 (the start of the string) and len(s)

(its end) are assumed, respectively.

fmt.Println(s[:5]) // "hello"

fmt.Println(s[7:]) // "world"

fmt.Println(s[:]) // "hello, world"

The + operator makes a new string by concatenating two strings:

fmt.Println("goodbye" + s[5:]) // "goodbye, world"

Strings may be compared with comparison operators like == and <; the comparison is done byte by byte, so the result is the natural lexicographic ordering.
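As a small illustration of byte-wise comparison, the sketch below uses arbitrary sample strings that are not from the text. The helper less is hypothetical and exists only to make the comparisons easy to call. Note that upper-case ASCII letters sort before lower-case ones, and a proper prefix sorts before the longer string.

```go
package main

import "fmt"

// less reports whether a sorts before b under Go's byte-wise string ordering.
// It is a hypothetical wrapper around the built-in < operator.
func less(a, b string) bool {
	return a < b
}

func main() {
	fmt.Println(less("apple", "banana")) // true: 'a' < 'b'
	fmt.Println(less("Zebra", "apple"))  // true: 'Z' (90) < 'a' (97)
	fmt.Println(less("go", "gopher"))    // true: a proper prefix sorts first
	fmt.Println(less("gopher", "go"))    // false
}
```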

String values are immutable: the byte sequence contained in a string value can never be changed, though of course we can assign a new value to a string variable. To append one string to another, for instance, we can write

s := "left foot"

t := s

s += ", right foot"

This does not modify the string that s originally held but causes

s to hold the new string formed by the += statement; meanwhile, t still

contains the old string.

fmt.Println(s) // "left foot, right foot"

fmt.Println(t) // "left foot"

Since strings are immutable, constructions that try to modify a string’s data in place are not allowed:

s[0] = 'L' // compile error: cannot assign to s[0]

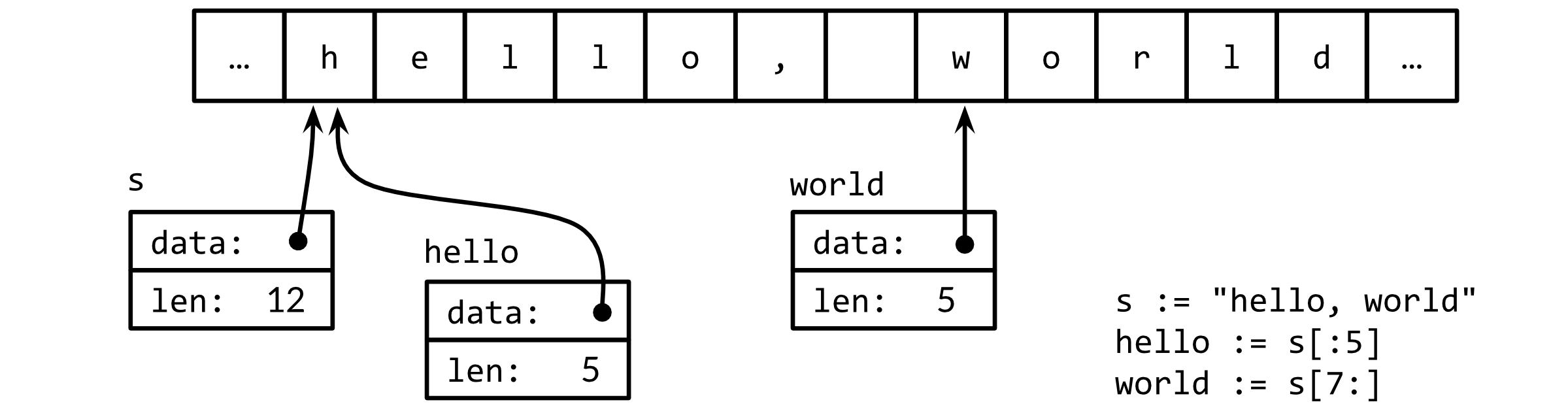

Immutability means that it is safe for two copies of a string to share the

same underlying memory, making it cheap to copy strings of any length.

Similarly, a string s and a substring like s[7:] may safely share the same

data, so the substring operation is also cheap. No new memory

is allocated in either case.

Figure 3.4 illustrates the arrangement of a string and two of its

substrings sharing the same underlying byte array.

A string value can be written as a string literal, a sequence of bytes enclosed in double quotes:

"Hello, 世界"

Because Go source files are always encoded in UTF-8 and Go text strings are conventionally interpreted as UTF-8, we can include Unicode code points in string literals.

Within a double-quoted string literal, escape sequences that begin with

a backslash \ can be

used to insert arbitrary byte values into the string. One set of

escapes handles ASCII control codes like newline, carriage return,

and tab:

\a | “alert” or bell
\b | backspace
\f | form feed
\n | newline
\r | carriage return
\t | tab
\v | vertical tab
\' | single quote (only in the rune literal '\'')
\" | double quote (only within "..." literals)
\\ | backslash

Arbitrary bytes can also be included in literal strings using

hexadecimal or octal escapes. A hexadecimal escape is written

\xhh, with exactly two hexadecimal digits h (in upper or lower

case). An octal escape is written \ooo with exactly three octal

digits o (0 through 7) not exceeding \377. Both denote a single

byte with the specified value. Later, we’ll see how to encode Unicode

code points numerically in string literals.
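A brief sketch of hexadecimal and octal escapes follows. The constant sample is an arbitrary illustration, not taken from the text. Each escape contributes exactly one byte, whether or not the result is valid UTF-8.

```go
package main

import "fmt"

// sample is a hypothetical literal mixing hexadecimal and octal escapes:
// \x41 is 'A', \102 is 'B' (octal 102 = 66), and \x0a is a newline.
const sample = "\x41\102\x0a"

func main() {
	fmt.Println(len(sample))      // 3: each escape denotes one byte
	fmt.Printf("% x\n", sample)   // 41 42 0a
	fmt.Println(sample == "AB\n") // true
	fmt.Println(len("\xff\377"))  // 2: the bytes need not form valid UTF-8
}
```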

A raw string literal is written `...`, using backquotes

instead of double quotes.

Within a raw string literal, no escape sequences are processed;

the contents are taken literally, including backslashes and newlines, so a raw string

literal may spread over several lines in the program source.

The only processing is that carriage returns are deleted so that the

value of the string is the same on all platforms, including those that

conventionally put carriage returns in text files.

Raw string literals are a convenient way to write regular expressions, which tend to have lots of backslashes. They are also useful for HTML templates, JSON literals, command usage messages, and the like, which often extend over multiple lines.

const GoUsage = `Go is a tool for managing Go source code.

Usage:

go command [arguments]

...`
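To illustrate the point about regular expressions, here is a minimal sketch. The pattern and the name versionRE are hypothetical, chosen only for illustration. The raw literal lets us write each backslash once, whereas an interpreted literal would need them doubled.

```go
package main

import (
	"fmt"
	"regexp"
)

// versionRE is a hypothetical pattern matching strings such as "1.21".
// As an interpreted literal it would be written "^\\d+\\.\\d+$".
var versionRE = regexp.MustCompile(`^\d+\.\d+$`)

func main() {
	fmt.Println(versionRE.MatchString("1.21")) // true
	fmt.Println(versionRE.MatchString("1x21")) // false

	// In a raw literal, \n is two bytes (backslash, 'n'), not a newline.
	fmt.Println(len(`\n`), len("\n")) // 2 1
}
```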

Long ago, life was simple and there was, at least in a parochial view, only one character set to deal with: ASCII, the American Standard Code for Information Interchange. ASCII, or more precisely US-ASCII, uses 7 bits to represent 128 “characters”: the upper- and lower-case letters of English, digits, and a variety of punctuation and device-control characters. For much of the early days of computing, this was adequate, but it left a very large fraction of the world’s population unable to use their own writing systems in computers. With the growth of the Internet, data in myriad languages has become much more common. How can this rich variety be dealt with at all and, if possible, efficiently?

The answer is Unicode (unicode.org), which collects all of the

characters in all of the world’s writing systems, plus

accents and other diacritical marks, control codes like tab and carriage

return, and plenty of esoterica, and assigns each one a standard number

called a Unicode code point or, in Go terminology, a rune.

Unicode version 8 defines code points for over 120,000 characters

in well over 100 languages and scripts. How are these

represented in computer programs and data? The natural data type to

hold a single rune is int32, and that’s what Go uses; it has the

synonym rune for precisely this purpose.

We could represent a sequence of runes as a sequence of

int32 values. In this representation, which is called UTF-32 or UCS-4, the

encoding of each Unicode code point has the same size, 32 bits. This

is simple and uniform, but it uses much more space than

necessary since most computer-readable text is in ASCII, which requires

only 8 bits or 1 byte per character.

All the characters in widespread use still number fewer than 65,536,

which would fit in 16 bits. Can we do better?

UTF-8 is a variable-length encoding of Unicode code points as bytes. UTF-8

was invented by Ken Thompson and Rob Pike, two of the creators of Go,

and is now a Unicode standard.

It uses between 1 and 4 bytes to represent each rune, but only

1 byte for ASCII characters, and only 2 or 3 bytes for most

runes in common use. The high-order bits of the first byte of the

encoding for a rune indicate how many bytes follow.

A high-order 0 indicates 7-bit ASCII, where each

rune takes only 1 byte, so it is identical to conventional ASCII.

A high-order 110 indicates that the rune takes 2 bytes; the second

byte begins with 10. Larger runes have analogous encodings.

0xxxxxxx | runes 0–127 | (ASCII)
110xxxxx 10xxxxxx | 128–2047 | (values <128 unused)
1110xxxx 10xxxxxx 10xxxxxx | 2048–65535 | (values <2048 unused)
11110xxx 10xxxxxx 10xxxxxx 10xxxxxx | 65536–0x10ffff | (other values unused)
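The bit layout in the table can be sketched as a small encoder. The function encodeRune is a hypothetical helper written for illustration; it assumes its argument is a valid code point and performs no checks for surrogates or out-of-range values. Real programs would normally rely on the unicode/utf8 package or a plain string conversion.

```go
package main

import "fmt"

// encodeRune is a hypothetical encoder following the table's bit patterns.
// It assumes r is a valid Unicode code point and does no validation.
func encodeRune(r rune) []byte {
	switch {
	case r < 0x80: // 0xxxxxxx
		return []byte{byte(r)}
	case r < 0x800: // 110xxxxx 10xxxxxx
		return []byte{0xC0 | byte(r>>6), 0x80 | byte(r)&0x3F}
	case r < 0x10000: // 1110xxxx 10xxxxxx 10xxxxxx
		return []byte{0xE0 | byte(r>>12), 0x80 | byte(r>>6)&0x3F, 0x80 | byte(r)&0x3F}
	default: // 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
		return []byte{0xF0 | byte(r>>18), 0x80 | byte(r>>12)&0x3F,
			0x80 | byte(r>>6)&0x3F, 0x80 | byte(r)&0x3F}
	}
}

func main() {
	for _, r := range []rune{'A', 'é', '世', '\U0001F600'} {
		// Prints the code point and its encoded bytes, e.g. "U+4E16  e4 b8 96".
		fmt.Printf("%U\t% x\n", r, encodeRune(r))
	}
}
```

Comparing the result with string(r) for the same rune is a quick way to confirm that the hand-written encoder agrees with the language's own conversion.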

A variable-length encoding precludes direct indexing to access the n-th character of a string, but UTF-8 has many desirable properties to compensate. The encoding is compact, compatible with ASCII, and self-synchronizing: it’s possible to find the beginning of a character by backing up no more than three bytes. It’s also a prefix code, so it can be decoded from left to right without any ambiguity or lookahead. No rune’s encoding is a substring of any other, or even of a sequence of others, so you can search for a rune by just searching for its bytes, without worrying about the preceding context. The lexicographic byte order equals the Unicode code point order, so sorting UTF-8 works naturally. There are no embedded NUL (zero) bytes, which is convenient for programming languages that use NUL to terminate strings.
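The self-synchronizing property can be sketched with utf8.RuneStart, which reports whether a byte could begin an encoded rune. The helper runeStart below is hypothetical and assumes s holds valid UTF-8 and that i is a valid byte index.

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// runeStart returns the index of the first byte of the rune that contains
// byte i. It is a hypothetical helper; for valid UTF-8 it backs up at most
// three bytes, because continuation bytes always have the form 10xxxxxx.
func runeStart(s string, i int) int {
	for i > 0 && !utf8.RuneStart(s[i]) {
		i--
	}
	return i
}

func main() {
	s := "Hello, 世界"           // 世 occupies bytes 7-9, 界 bytes 10-12
	fmt.Println(runeStart(s, 4))  // 4: ASCII bytes start their own rune
	fmt.Println(runeStart(s, 9))  // 7
	fmt.Println(runeStart(s, 11)) // 10
}
```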

Go source files are always encoded in UTF-8, and UTF-8 is the

preferred encoding for text strings manipulated by Go programs. The

unicode package provides functions for working with individual runes

(such as distinguishing letters from numbers, or converting an

upper-case letter to a lower-case one), and the unicode/utf8

package provides functions for encoding and decoding runes as bytes

using UTF-8.

Many Unicode characters are hard to type on a keyboard or to

distinguish visually from similar-looking ones; some are even

invisible. Unicode escapes in Go string literals allow us to specify them

by their numeric code point value. There are two forms,

\uhhhh for a 16-bit value and \Uhhhhhhhh for a 32-bit value,

where each h is a hexadecimal digit; the need for the 32-bit form

arises very infrequently. Each denotes the UTF-8 encoding of the

specified code point. Thus, for example, the following string literals

all represent the same six-byte string:

"世界"

"\xe4\xb8\x96\xe7\x95\x8c"

"\u4e16\u754c"

"\U00004e16\U0000754c"

The three escape sequences above provide alternative notations for the first string, but the values they denote are identical.

Unicode escapes may also be used in rune literals. These three literals are equivalent:

'世' '\u4e16' '\U00004e16'

A rune whose value is less than 256 may be written with a single hexadecimal

escape, such as '\x41' for 'A', but for higher values, a

\u or \U escape must be used.

Consequently, '\xe4\xb8\x96' is not a legal rune literal,

even though those three bytes are a valid UTF-8 encoding of a

single code point.

Thanks to the nice properties of UTF-8, many string operations don’t require decoding. We can test whether one string contains another as a prefix:

func HasPrefix(s, prefix string) bool {

return len(s) >= len(prefix) && s[:len(prefix)] == prefix

}

or as a suffix:

func HasSuffix(s, suffix string) bool {

return len(s) >= len(suffix) && s[len(s)-len(suffix):] == suffix

}

or as a substring:

func Contains(s, substr string) bool {

for i := 0; i < len(s); i++ {

if HasPrefix(s[i:], substr) {

return true

}

}

return false

}

using the same logic for UTF-8-encoded text as for raw bytes. This is

not true for other encodings. (The functions above are drawn from the

strings package, though its implementation of Contains

uses a hashing technique to search more efficiently.)

On the other hand, if we really care about the individual Unicode characters, we have to use other mechanisms. Consider the string from our very first example, which includes two East Asian characters. Figure 3.5 illustrates its representation in memory. The string contains 13 bytes, but interpreted as UTF-8, it encodes only nine code points or runes:

import "unicode/utf8"

s := "Hello, 世界"

fmt.Println(len(s)) // "13"

fmt.Println(utf8.RuneCountInString(s)) // "9"

To process those characters, we need a UTF-8 decoder. The

unicode/utf8 package provides one that we can use like this:

for i := 0; i < len(s); {

r, size := utf8.DecodeRuneInString(s[i:])

fmt.Printf("%d\t%c\n", i, r)

i += size

}

Each call to DecodeRuneInString returns r, the rune itself, and

size, the number of bytes occupied by the UTF-8 encoding of r. The

size is used to update the byte index i of the next rune in the

string. But this is clumsy, and we need loops of this kind all the

time. Fortunately, Go’s range loop, when applied to a string,

performs UTF-8 decoding implicitly.

The output of the loop below is also shown in Figure 3.5;

notice how the index jumps by more than 1 for each non-ASCII rune.

for i, r := range "Hello, 世界" {

fmt.Printf("%d\t%q\t%d\n", i, r, r)

}

We could use a simple range loop to count the number of runes in a

string, like this:

n := 0

for _, _ = range s {

n++

}

As with the other forms of range loop, we can omit the variables we

don’t need:

n := 0

for range s {

n++

}

Or we can just call utf8.RuneCountInString(s).

We mentioned earlier that it is mostly a matter of convention in

Go that text strings are interpreted as UTF-8-encoded sequences of

Unicode code points, but for correct use of range

loops on strings, it’s more than a convention, it’s a necessity.

What happens if we range over a string containing arbitrary binary

data or, for that matter, UTF-8 data containing errors?

Each time a UTF-8 decoder, whether explicit in a call to

utf8.DecodeRuneInString or implicit in a range loop,

consumes an unexpected input byte,

it generates a special Unicode replacement

character, '\uFFFD', which is usually printed as a white question mark

inside a black hexagonal or diamond-like shape. When a program encounters this

rune value, it’s often a sign that some upstream part of the system that

generated the string data has been careless in its treatment of text

encodings.
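A minimal sketch of this behavior, using an arbitrary string with one stray byte. The helper invalidBytes is hypothetical. It relies on the documented convention that utf8.DecodeRuneInString returns utf8.RuneError with a size of 1 for an invalid encoding, which distinguishes a decoding error from a genuine U+FFFD in the input (size 3).

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// invalidBytes counts the bytes of s that do not belong to a valid UTF-8
// encoding. It is a hypothetical helper for illustration.
func invalidBytes(s string) int {
	n := 0
	for i := 0; i < len(s); {
		r, size := utf8.DecodeRuneInString(s[i:])
		if r == utf8.RuneError && size == 1 {
			n++ // a decoding error, not an encoded U+FFFD
		}
		i += size
	}
	return n
}

func main() {
	s := "a\xffb" // 0xff can never appear in valid UTF-8
	for i, r := range s {
		fmt.Printf("%d\t%U\n", i, r) // the middle rune is U+FFFD
	}
	fmt.Println(utf8.ValidString(s)) // false
	fmt.Println(invalidBytes(s))     // 1
}
```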

UTF-8 is exceptionally convenient as an interchange format but within a program runes may be more convenient because they are of uniform size and are thus easily indexed in arrays and slices.

A []rune conversion applied to a UTF-8-encoded string returns

the sequence of Unicode code points that the string encodes:

// "program" in Japanese katakana

s := "プログラム"

fmt.Printf("% x\n", s) // "e3 83 97 e3 83 ad e3 82 b0 e3 83 a9 e3 83 a0"

r := []rune(s)

fmt.Printf("%x\n", r) // "[30d7 30ed 30b0 30e9 30e0]"

(The verb % x in the first Printf inserts a space between each

pair of hex digits.)
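Because a []rune holds one element per code point, it can be indexed and swapped by character position. The function reverse below is a hypothetical helper, not from the text; it converts back with string(r), which yields the UTF-8 encoding of the runes. It reverses code points only, so it is an assumption of this sketch that the input contains no combining marks that must stay attached to a base character.

```go
package main

import "fmt"

// reverse returns s with its code points in reverse order.
// It is a hypothetical helper that works on runes, not bytes.
func reverse(s string) string {
	r := []rune(s)
	for i, j := 0, len(r)-1; i < j; i, j = i+1, j-1 {
		r[i], r[j] = r[j], r[i]
	}
	return string(r)
}

func main() {
	s := "Hello, 世界"
	fmt.Println(len(s), len([]rune(s))) // 13 9
	fmt.Println(reverse(s))             // 界世 ,olleH
}
```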

If a slice of runes is converted to a string, it produces the concatenation of the UTF-8 encodings of each rune:

fmt.Println(string(r)) // "プログラム"

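Converting an integer value to a string interprets the integer as a rune value, and yields the UTF-8 representation of that rune. Since Go 1.15, go vet warns about such a conversion when the operand has an integer type other than rune or byte, so newer code usually spells it string(rune(x)); the behavior itself is unchanged: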

fmt.Println(string(65)) // "A", not "65"

fmt.Println(string(0x4eac)) // "京"

If the rune is invalid, the replacement character is substituted:

fmt.Println(string(1234567)) // " "

"

Four standard packages are particularly important for manipulating

strings: bytes, strings, strconv, and unicode.

The strings package provides many functions for searching,

replacing, comparing, trimming, splitting, and joining strings.

The bytes package has similar

functions for manipulating slices of bytes,

of type []byte, which share some

properties with strings.

Because strings are immutable, building up strings incrementally can involve

a lot of allocation and copying.

In such cases, it’s more efficient to use the bytes.Buffer

type, which we’ll show in a moment.

The strconv package provides functions for converting

boolean, integer, and floating-point values to and from their string

representations, and functions for quoting and unquoting strings.

The unicode package provides functions like IsDigit,

IsLetter, IsUpper, and IsLower for classifying

runes. Each function takes a single rune argument and

returns a boolean. Conversion functions like ToUpper and

ToLower convert a rune into the given case if it is a letter.

All these functions use the Unicode standard categories for

letters, digits, and so on.

The strings package has similar functions, also called ToUpper and

ToLower, that return a new string with the specified transformation

applied to each character of the original string.

The basename function below

was inspired by the Unix shell utility of the same name.

In our

version, basename(s) removes any prefix of s that looks like a

file system path with components separated by slashes, and it removes

any suffix that looks like a file type:

fmt.Println(basename("a/b/c.go")) // "c"

fmt.Println(basename("c.d.go")) // "c.d"

fmt.Println(basename("abc")) // "abc"

The first version of basename does all the work without the help of

libraries:

// basename removes directory components and a .suffix.

// e.g., a => a, a.go => a, a/b/c.go => c, a/b.c.go => b.c

func basename(s string) string {

// Discard last '/' and everything before.

for i := len(s) - 1; i >= 0; i-- {

if s[i] == '/' {

s = s[i+1:]

break

}

}

// Preserve everything before last '.'.

for i := len(s) - 1; i >= 0; i-- {

if s[i] == '.' {

s = s[:i]

break

}

}

return s

}

A simpler version uses the strings.LastIndex library function:

func basename(s string) string {

slash := strings.LastIndex(s, "/") // -1 if "/" not found

s = s[slash+1:]

if dot := strings.LastIndex(s, "."); dot >= 0 {

s = s[:dot]

}

return s

}

The path and path/filepath packages provide a more

general set of functions for manipulating hierarchical names.

The path package works with slash-delimited paths on any

platform. It shouldn’t be used for file names, but it is appropriate for

other domains, like the path component of a URL. By contrast,

path/filepath manipulates file names using the rules for the

host platform, such as /foo/bar for POSIX or c:\foo\bar on

Microsoft Windows.
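For comparison, a similar result can be sketched with the path package. The function baseNoExt is a hypothetical name chosen here, and its edge cases differ from the hand-written basename: for example, path.Base returns "." for an empty string and ignores trailing slashes.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// baseNoExt returns the last element of a slash-separated path with its
// final extension removed. It is a hypothetical helper for illustration.
func baseNoExt(s string) string {
	base := path.Base(s)
	return strings.TrimSuffix(base, path.Ext(base))
}

func main() {
	fmt.Println(baseNoExt("a/b/c.go")) // c
	fmt.Println(baseNoExt("c.d.go"))   // c.d
	fmt.Println(baseNoExt("abc"))      // abc
}
```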

Let’s continue with another substring example.

The task is to take a string

representation of an integer, such as "12345", and insert commas every three

places, as in "12,345".

This version only works for integers; handling floating-point numbers is left

as an exercise.

// comma inserts commas in a non-negative decimal integer string.

func comma(s string) string {

n := len(s)

if n <= 3 {

return s

}

return comma(s[:n-3]) + "," + s[n-3:]

}

The argument to comma is a string. If its length is less than or

equal to 3, no comma is necessary. Otherwise, comma calls itself

recursively with a substring consisting of all but the last three

characters, and appends a comma and the last three characters to the

result of the recursive call.

A string contains an array of bytes that, once created, is immutable. By contrast, the elements of a byte slice can be freely modified.

Strings can be converted to byte slices and back again:

s := "abc"

b := []byte(s)

s2 := string(b)

Conceptually, the []byte(s) conversion allocates a new byte

array holding a copy of the bytes of s, and yields a slice

that references the entirety of that array.

An optimizing compiler may be able to avoid the allocation and copying

in some cases, but in general copying is required to ensure that the

bytes of s remain unchanged even if those of b are

subsequently modified.

The conversion from byte slice back to string with string(b) also

makes a copy, to ensure immutability of the resulting string s2.
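The copying semantics can be observed directly. In this sketch, upperFirst is a hypothetical helper that modifies a byte-slice copy; the original string is unaffected. It handles only ASCII lower-case letters, which is an assumption made to keep the example short.

```go
package main

import "fmt"

// upperFirst returns s with its first byte upper-cased if that byte is an
// ASCII lower-case letter. It is a hypothetical helper for illustration.
func upperFirst(s string) string {
	b := []byte(s) // conceptually a fresh copy of the bytes of s
	if len(b) > 0 && 'a' <= b[0] && b[0] <= 'z' {
		b[0] -= 'a' - 'A' // legal: byte slices are mutable
	}
	return string(b) // copies again, so the result is immutable
}

func main() {
	s := "left foot"
	t := upperFirst(s)
	fmt.Println(s) // left foot (unchanged)
	fmt.Println(t) // Left foot
}
```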

To avoid conversions and unnecessary memory allocation, many of the

utility functions in the bytes package directly parallel their

counterparts in the strings package. For example, here are half a

dozen functions from strings:

func Contains(s, substr string) bool

func Count(s, sep string) int

func Fields(s string) []string

func HasPrefix(s, prefix string) bool

func Index(s, sep string) int

func Join(a []string, sep string) string

and the corresponding ones from bytes:

func Contains(b, subslice []byte) bool

func Count(s, sep []byte) int

func Fields(s []byte) [][]byte

func HasPrefix(s, prefix []byte) bool

func Index(s, sep []byte) int

func Join(s [][]byte, sep []byte) []byte

The only difference is that strings have been replaced by byte slices.

The bytes package provides the Buffer type for efficient

manipulation of byte slices. A Buffer starts out empty but grows as

data of types like string, byte, and []byte

are written to it.

As the example below shows, a bytes.Buffer variable requires no

initialization because its zero value is usable:

// intsToString is like fmt.Sprint(values) but adds commas.

func intsToString(values []int) string {

var buf bytes.Buffer

buf.WriteByte('[')

for i, v := range values {

if i > 0 {

buf.WriteString(", ")

}

fmt.Fprintf(&buf, "%d", v)

}

buf.WriteByte(']')

return buf.String()

}

func main() {

fmt.Println(intsToString([]int{1, 2, 3})) // "[1, 2, 3]"

}

When appending the UTF-8 encoding of an arbitrary rune to a bytes.Buffer,

it’s best to use bytes.Buffer’s WriteRune method, but

WriteByte is fine for ASCII characters such as '[' and ']'.
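A short sketch of WriteRune in use follows. The helper joinRunes and its inputs are hypothetical. WriteRune appends the full UTF-8 encoding of each rune, so a single call may add up to four bytes.

```go
package main

import (
	"bytes"
	"fmt"
)

// joinRunes concatenates the runes in rs, separated by sep.
// It is a hypothetical helper for illustration.
func joinRunes(rs []rune, sep rune) string {
	var buf bytes.Buffer // the zero value is ready to use
	for i, r := range rs {
		if i > 0 {
			buf.WriteRune(sep)
		}
		buf.WriteRune(r) // appends the UTF-8 encoding of r
	}
	return buf.String()
}

func main() {
	s := joinRunes([]rune{'世', '界'}, '-')
	fmt.Println(s)      // 世-界
	fmt.Println(len(s)) // 7: two 3-byte runes plus one ASCII byte
}
```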

The bytes.Buffer type is extremely versatile, and when we discuss interfaces

in Chapter 7, we’ll see how it may be used as a replacement for a file

whenever an I/O function requires a sink for bytes (io.Writer) as

Fprintf does above, or a source of bytes (io.Reader).

Exercise 3.10:

Write a non-recursive version of comma, using bytes.Buffer

instead of string concatenation.

Exercise 3.11:

Enhance comma so that it deals correctly with floating-point

numbers and an optional sign.

Exercise 3.12: Write a function that reports whether two strings are anagrams of each other, that is, they contain the same letters in a different order.

In addition to conversions between strings, runes, and bytes, it’s

often necessary to convert between numeric values and their string

representations.

This is done with functions from the strconv package.

To convert an integer to a string, one option is to

use fmt.Sprintf; another is to use the function strconv.Itoa (“integer to

ASCII”):

x := 123

y := fmt.Sprintf("%d", x)

fmt.Println(y, strconv.Itoa(x)) // "123 123"

FormatInt and FormatUint can be used to format numbers in a different base:

fmt.Println(strconv.FormatInt(int64(x), 2)) // "1111011"

The fmt.Printf verbs %b, %d, %o, and

%x are often more convenient than Format functions, especially if we want to include

additional information besides the number:

s := fmt.Sprintf("x=%b", x) // "x=1111011"

To parse a string representing an integer, use the strconv

functions Atoi or ParseInt,

or ParseUint for unsigned integers:

x, err := strconv.Atoi("123") // x is an int

y, err := strconv.ParseInt("123", 10, 64) // base 10, up to 64 bits

The third argument of ParseInt gives the size of the integer type that

the result must fit into; for example, 16 implies int16, and the special

value of 0 implies int.

In any case, the type of the result y is always int64,

which you can then convert to a smaller type.
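A sketch of the size argument in practice: the hypothetical helper parseInt16 asks ParseInt for a value that fits in 16 bits, checks the error, and only then narrows the int64 result. The sample inputs are arbitrary.

```go
package main

import (
	"fmt"
	"strconv"
)

// parseInt16 parses a base-10 integer that must fit in an int16.
// It is a hypothetical helper for illustration.
func parseInt16(s string) (int16, error) {
	v, err := strconv.ParseInt(s, 10, 16) // v has type int64
	if err != nil {
		return 0, err // syntax error or value out of range
	}
	return int16(v), nil // safe: ParseInt guaranteed the range
}

func main() {
	fmt.Println(parseInt16("123")) // 123 <nil>

	if _, err := parseInt16("40000"); err != nil {
		fmt.Println("error:", err) // 40000 exceeds the int16 maximum of 32767
	}
	if _, err := parseInt16("12a"); err != nil {
		fmt.Println("error:", err) // not a valid decimal integer
	}
}
```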

Sometimes fmt.Scanf is useful for parsing input that consists of

orderly mixtures of strings and numbers all on a single line,

but it can be inflexible, especially when handling incomplete

or irregular input.
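As a rough illustration of both the convenience and the inflexibility, the sketch below uses fmt.Sscanf, the string-reading relative of fmt.Scanf. The record format ("name age") and the helper parseRecord are assumptions made for this example.

```go
package main

import "fmt"

// parseRecord parses a line of the assumed form "name age".
// It is a hypothetical helper for illustration.
func parseRecord(line string) (name string, age int, err error) {
	_, err = fmt.Sscanf(line, "%s %d", &name, &age)
	return name, age, err
}

func main() {
	fmt.Println(parseRecord("alice 30")) // alice 30 <nil>

	// Irregular or incomplete input simply yields an error.
	if _, _, err := parseRecord("alice thirty"); err != nil {
		fmt.Println("error:", err)
	}
	if _, _, err := parseRecord("alice"); err != nil {
		fmt.Println("error:", err)
	}
}
```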