3

Critical Brain Dynamics at Large Scale

Prologue

“Essentially, all modeling of brain function from studying models of neural networks has ignored the self-organized aspects of the process, but has concentrated on designing a working brain by engineering all the connections of inputs and outputs.”

Bak [1]

3.1 Introduction

In these notes, we discuss the idea put forward two decades ago by Bak [1] that the working brain stays at an intermediate (critical) regime characterized by power-law correlations. Highly correlated brain dynamics produces synchronized states with no behavioral value, while weakly correlated dynamics prevent information flow. In between these states, the unique dynamical features of the critical state endow the brain with properties that are fundamental for adaptive behavior. This simple proposal is now supported by a wide body of empirical evidence at different scales, demonstrating that the spatiotemporal brain dynamics exhibit key signatures of critical dynamics, previously recognized in other complex systems.

3.1.1 If Criticality is the Solution, What is the Problem?

Criticality, in simple terms, refers to a distinctive set of properties found only at the boundary separating regimes with different dynamics, for instance, between an ordered and a disordered phase. The dynamics of critical phenomena are a peculiar mix of order and disorder, whose detailed understanding constitutes one of the major achievements of statistical physics in the past century [2].

What is the problem for which critical phenomena can be relevant in the context of the brain? The first problem is to understand how the very large conglomerate of interconnected neurons produces a wide repertoire of behaviors in a flexible and self-organized way. This issue is far from resolved, as shown by the fact that detailed models constructed to account for such dynamics fail at one or more of the three emphasized aspects: either (i) the model is an unrealistic low-dimensional version of the neural structure of interest or (ii) it produces a single behavior (i.e., a hardwired circuit), and consequently (iii) it cannot flexibly perform more than one simple thing. A careful analysis of the literature will reveal that only by arbitrarily changing the neuronal connections can current mathematical models play a reasonably wide repertoire of behaviors. Of course, this rewiring implies a kind of supplementary brain governing which connections need to be rewired in each case. Consequently, generating behavioral variability out of the same neural structure is a fundamental question which is screaming to be answered, but is seldom even asked.

A second related problem is how stability is achieved in such a large system with an astronomical number of neurons, each one continuously receiving thousands of inputs from other neurons. We still lack a precise knowledge of how the cortex prevents an explosive propagation of activity while still managing to share information across areas. It is obvious that if the average number of neurons activated by one neuron is too high (i.e., supercritical), a massive activation of the entire network will ensue, while if it is too low (i.e., subcritical), propagation will die out. About 50 years ago [3], Turing was the first to speculate that the brain, in order to work properly, needs to be at a critical regime, that is, one in which these opposing forces are balanced.
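The balance between explosive and vanishing propagation can be illustrated with a toy branching process. The sketch below is not a model taken from the works cited here: the function name `simulate_cascade`, the Poisson offspring distribution, and all parameter values are assumptions made only for illustration. Each active unit activates, on average, `sigma` units in the next step.

```python
import numpy as np

def simulate_cascade(sigma, n_steps=30, n_start=100, cap=10**6, seed=0):
    """Toy branching process (illustrative assumption, not a cortical model).

    Each active unit independently activates Poisson(sigma) units in the
    next step, so the total number of newly active units is
    Poisson(sigma * active). Returns the number of active units per step.
    """
    rng = np.random.default_rng(seed)
    active = n_start
    history = [active]
    for _ in range(n_steps):
        # Stop when activity has died out or has exploded past the cap.
        if active == 0 or active > cap:
            break
        active = int(rng.poisson(sigma * active))
        history.append(active)
    return history

if __name__ == "__main__":
    for sigma in (0.5, 1.0, 1.5):  # subcritical, critical, supercritical
        print(sigma, simulate_cascade(sigma)[-1])
```

With `sigma` below one the activity dies out, and with `sigma` above one it grows until it hits the cap; only near `sigma = 1` can activity persist without saturating the system.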

Criticality as a potential solution to these issues was first explored by Bak [1] and colleagues [4–8] while attempting to apply ideas of self-organized criticality [9, 10] to the study of living systems. Throughout the last decade of his short but productive life, in countless lively lectures, Bak enthusiastically broadcast the idea that if the world at large is studied as any other complex system, it will reveal a variety of instances in which critical dynamics will be recognized as the relevant phenomena at play. Basically, the emphasis was on considering criticality as another attractor. The claim was that “dynamical systems with extended spatial degrees of freedom naturally evolve into self-organized critical structures of states which are barely stable. The combination of dynamical minimal stability and spatial scaling leads to a power law for temporal fluctuations” [9].

These ideas were only a portion of Bak's much broader and deeper insight about how nature works in general, often communicated in his unforgiving way, as, for instance, when challenging colleagues by asking: “Is biology too difficult for biologists? And what can physics, dealing with the simple and lawful, contribute to biology, which deals with the complex and diverse. These complex many-body problems might have similarities to problems studied in particle and solid-state physics.” [11]. Thus, Bak was convinced that the critical state was a novel dynamical attractor to which large distributed systems will eventually converge, given some relatively simple conditions. From this viewpoint, the understanding of the brain belongs to the same problem of understanding complexity in nature.

These comments should inspire us to think again about the much larger question underlying the study of brain dynamics using ideas from critical phenomena. Bak's (and colleagues') legacy will be incomplete if we restrict ourselves (for instance) to finding power laws in the brain and comparing them in health and disease. By their theoretical foundations, critical phenomena offer the opportunity to understand how the brain works, with an impact comparable to the one they had in other areas, for instance, in the mathematical modeling of Sepkoski's fossil record of species extinction events, which opened a completely novel strategy to study how macroevolution works [12].

The rest of this chapter is dedicated to reviewing recent work on large-scale brain dynamics inspired by Bak's ideas. The material is organized as follows: Section 3.2 dwells on what is essentially novel about critical dynamics; Sections 3.3 and 3.4 are dedicated to a discussion on how to recognize criticality. Section 3.5 discusses the main implications of the results presented and Section 3.6 closes with a summary.

3.2 What is Criticality Good for?

According to this program, the methods used in physics to study the properties of matter must be useful to characterize brain function [13]. How reasonable is that? A simple but strong assumption needs to be made: that the mind is nothing more than the emergent global dynamics of neuronal interactions, in the same sense that ferromagnetism is an emergent property of the interaction between neighboring spins and an external field. To appreciate the validity of this point, a key result from statistical physics is relevant here: universality. In brief, this notion says that a huge family of systems will follow the same laws and exhibit the same dynamics provided that a minimum set of conditions is met. These conditions involve only the presence of some nonlinearity, under some boundary conditions and some types of interactions. Any other details of the system will not be relevant, meaning that the process will arise in the same quantitative and qualitative manner in very diverse systems, where order, disorder, or the observation of one type of dynamics over another will be dictated by the strength and type of the interactions. This is seen throughout nature, from cell function (warranted by the interaction of multiple metabolic reactions) to global macroeconomics (modulated by trade), and so on.

Perhaps one can better appreciate what universality means in general, and later translate it to complex systems, by considering the unthinkable. The world would be a completely different place without universality. Imagine if each phenomenon had to be explained by a different “relation” (as it would not be possible to talk in terms of general laws) between intervening particles and forces. Gravity would be different for each metal or material, Galileo's experiments would not repeat themselves except for the same material he used, and so on. It can be said that without universality, each phenomenon we are familiar with would be foreign and strange.

3.2.1 Emergence

Throughout nature, it is common to observe similar collective properties emerging independently of the details of each system. But what is emergence and why is it relevant to discuss it in this context? Emergence refers to the unexpected collective spatiotemporal patterns exhibited by large complex systems. In this context, “unexpected” refers to our inability (mathematical and otherwise) to derive such emergent patterns from the equations describing the dynamics of the individual parts of the system. As discussed at length elsewhere [1, 14], complex systems are usually large conglomerates of interacting elements, each one exhibiting some sort of nonlinear dynamics. Without entering into details, it is known that the interaction can also be indirect, for instance, through some mean field. Usually, energy enters into the system, and therefore some sort of driving is present. The three emphasized features (i.e., large number of interacting nonlinear elements) are necessary, although not sufficient, conditions for a system to exhibit emergent complex behavior at some point.

As long as the dynamics of each individual element is nonlinear, other details of the origin and nature of the nonlinearities are not important [1, 15]. For instance, the elements can be humans, driven by food and other energy resources, from which some collective political or social structure eventually arises. It is well known that whatever the type of structure that emerges, it is unlikely to appear if one of the three above-emphasized properties is absent. Conversely, the interaction of a small number of linear elements will not produce any of this “unexpected” complex behavior (indeed, this is the case in which everything can be mathematically anticipated).

3.2.2 Spontaneous Brain Activity is Complex

It has been evident, since the very early electrical recordings a century ago, that the brain is spontaneously active, even in the absence of external inputs. However obvious this observation may appear, it was only recently that the dynamical features of the spontaneous brain state began to be studied in any significant way.

Work on brain rhythms at small and large brain scales shows that spontaneous healthy brain dynamics is composed neither of completely random activity patterns nor of periodic oscillations [16]. Careful analysis of the statistical properties of neural dynamics under no explicit input has identified complex patterns of activity previously neglected as background noise dynamics. The fact is that brain activity is always essentially arrhythmic regardless of how it is monitored, whether as electrical activity in the scalp (EEG, electroencephalography), by techniques of functional magnetic resonance imaging (fMRI), in the synchronization of oscillatory activity [17, 18], or in the statistical features of local field potential peaks [19].

It has been pointed out repeatedly [20–24] that, under healthy conditions, no brain temporal scale takes primacy over the others, resulting in power spectral densities decaying as “1/f noise.” Behavior, the ultimate interface between brain dynamics and the environment, also exhibits scale-invariant features as shown in human cognition [25–27], human motion [28], as well as in animal motion [29]. The origin of the brain scale-free dynamics was not adequately investigated until recently, probably (and paradoxically) due to the ubiquity of scale invariance in nature [9]. The potential significance of a renewed interpretation of the brain's spontaneous patterns in terms of scale invariance is at least twofold. On one side, it provides important clues about brain organization, in the sense that our previous ideas cannot easily accommodate these new findings. On the other, the class of complex dynamics observed seems to provide the brain with previously unrecognized robust properties.

3.2.3 Emergent Complexity is Always Critical

The commonality of scale-free dynamics in the brain naturally leads one to ask what physics knows about very general mechanisms that are able to produce such dynamics. Attempts to explain and generate nature's nonuniformity included several mathematical models and recipes, but few succeeded in creating complexity without embedding the equations with complexity. The important point is that including the complexity in the model will only result in a simulation of the real system, without entailing any understanding of complexity. The most significant efforts were those aimed at discovering the conditions in which something complex emerges from the interaction of the constituting noncomplex elements [1, 9]. Initial inspiration was drawn from work in the field of phase transitions and critical phenomena. Precisely, one of the novelties of critical phenomena is the fact that out of the short-range interaction of simple elements, eventually long-range spatiotemporal correlated patterns emerge. As such, critical dynamics have been documented in species evolution [1], ants' collective foraging [30, 31] and swarm models [32], bacterial populations [33], traffic flow on highways [1] and on the Internet [34], macroeconomic dynamics [35], forest fires [36], rainfall dynamics [37–39], and flock formation [40]. The same rationale leads to the conjecture [1, 6, 7] that the complexity of brain dynamics is just another signature of an underlying critical process. Because the largest number of metastable states exists at the point near the transition, the brain can access the largest repertoire of behaviors in a flexible way. That view claimed that the most fundamental properties of the brain are possible only by staying close to that critical instability, independently of how such a state is reached or maintained. In the following sections, recent empirical evidence supporting this hypothesis is discussed.

3.3 Statistical Signatures of Critical Dynamics

The presence of scaling and of correlations spanning the size of the system is usually a hint of critical phenomena. While, in principle, it is relatively simple to identify these signatures, with finite data and in the absence of a formal theory, as is the case for the brain, any initial indication of criticality needs to be checked against many known artifacts. In the next paragraphs, we discuss the most relevant efforts to identify these signatures in large-scale brain data.

3.3.1 Hunting for Power Laws in Density Functions

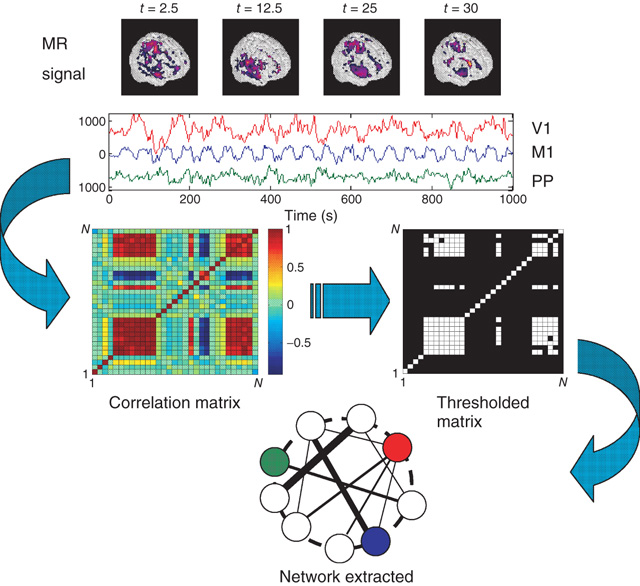

As we will discuss later in more detail, the dynamical skeleton of a complex system can be derived from the correlation network, that is, the subsets of the nodes linked by some minimum correlation value (computed from the system activity). As early as 2003, Eguiluz and colleagues [41] used fMRI data to extract the very first functional networks connecting correlated human brain sites. Networks were constructed (see Figure 3.1) by connecting the brain sites with the strongest correlations between their blood-oxygen-level-dependent (BOLD) signals. The analysis of the resulting networks in different tasks showed that (i) the distribution of functional connections and the probability of finding a link versus distance were both scale-free, (ii) the characteristic path length was small and comparable with those of equivalent random networks, and (iii) the clustering coefficient was orders of magnitude larger than those of equivalent random networks. It was suggested that these properties, typical of scale-free small-world networks, should reflect important functional information about brain states and provide mechanistic clues.
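The construction of a correlation network can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the pipeline of [41]: the function name `correlation_network`, the use of the Pearson coefficient, and the threshold value in the example are our own choices, and the data are synthetic.

```python
import numpy as np

def correlation_network(signals, threshold):
    """Link every pair of sites whose correlation exceeds `threshold`.

    signals: array of shape (n_sites, n_timepoints).
    Returns the boolean adjacency matrix and the degree of each site.
    """
    corr = np.corrcoef(signals)        # Pearson correlation between rows
    np.fill_diagonal(corr, 0.0)        # ignore self-correlations
    adjacency = corr > threshold
    degree = adjacency.sum(axis=1)
    return adjacency, degree

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shared = rng.standard_normal(200)
    # Five sites follow a shared signal; five sites are independent noise.
    coupled = shared + 0.1 * rng.standard_normal((5, 200))
    independent = rng.standard_normal((5, 200))
    _, degree = correlation_network(np.vstack([coupled, independent]), 0.8)
    print(degree)
```

The degree distribution, path length, and clustering coefficient discussed above are then computed on the resulting adjacency matrix.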

Figure 3.1 Methodology used to extract functional networks from the brain fMRI BOLD signals. The correlation matrix is calculated from all pairs of BOLD time series. The strongest correlations are selected to define the network nodes. The top four images represent examples of snapshots of activity at one moment and the three traces correspond to time series of activity at selected voxels from visual (V1), motor (M1), and posterior parietal (PP) cortices. (Figure redrawn from [41].)

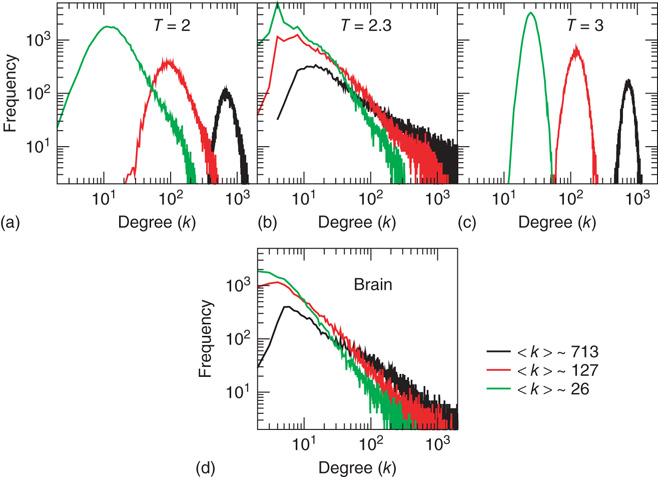

This was investigated in subsequent work by Fraiman et al. [42] who studied the dynamics of the spontaneous (i.e., at “rest”) fluctuations of brain activity with fMRI. Brain “rest” is defined – more or less unsuccessfully – as the state in which there is no explicit brain input or output. Now it is widely accepted that the structure and location of large-scale brain networks can be derived from the interaction of cortical regions during rest, which closely match the same regions responding to a wide variety of different activation conditions [43, 44]. These so-called resting-state networks (RSNs) can be reliably computed from the fluctuations of the BOLD signals of the resting brain, with great consistency across subjects [45–47] even during sleep [48] or anesthesia [49]. Fraiman et al. [42] focused on the question of whether such states can be comparable to any known dynamical state. For that purpose, correlation networks from human brain fMRI were contrasted with correlation networks extracted from numerical simulations of the Ising model in 2D, at different temperatures. For the critical temperature $T_c$, striking similarities (as shown in Figure 3.2) appear in the most relevant statistical properties, making the two networks indistinguishable from each other. These results were interpreted as lending additional support to the conjecture that the dynamics of the functioning brain is near a critical point.

Figure 3.2 At criticality, brain and Ising networks are indistinguishable from each other. The graphs show a comparison of the link density distributions computed from correlation networks extracted from brain data (d) and from numerical simulations of the Ising model (a–c) at three temperatures: critical ($T = T_c$), subcritical ($T < T_c$), and supercritical ($T > T_c$). (a–c) The degree distribution for the Ising networks at the three temperatures, for three representative values of the average degree. (d) Degree distribution for the correlated brain network for the same three values of the average degree. (Figure redrawn from Fraiman et al. [42].)
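For readers who wish to generate Ising configurations at different temperatures, a minimal Metropolis simulation is sketched below. It is an illustration under our own assumptions (lattice size, number of sweeps, all-up initial condition, units with $J = k_B = 1$, and the function name `ising_magnetization`), not the simulation code of [42]. It reports only the magnetization; building a correlation network would additionally require storing the spin time series.

```python
import numpy as np

# Exact critical temperature of the 2D square-lattice Ising model (J = k_B = 1).
T_C = 2.0 / np.log(1.0 + np.sqrt(2.0))

def ising_magnetization(temperature, size=16, n_sweeps=100, seed=0):
    """Metropolis dynamics on a size x size lattice with periodic boundaries.

    Starts from all spins up and returns the absolute magnetization per
    spin, averaged over the second half of the sweeps.
    """
    rng = np.random.default_rng(seed)
    spins = np.ones((size, size), dtype=int)
    mags = []
    for sweep in range(n_sweeps):
        for _ in range(size * size):
            i, j = rng.integers(0, size, size=2)
            neighbors = (spins[(i + 1) % size, j] + spins[(i - 1) % size, j]
                         + spins[i, (j + 1) % size] + spins[i, (j - 1) % size])
            delta_e = 2 * spins[i, j] * neighbors   # energy cost of flipping
            if delta_e <= 0 or rng.random() < np.exp(-delta_e / temperature):
                spins[i, j] *= -1
        if sweep >= n_sweeps // 2:
            mags.append(abs(spins.mean()))
    return float(np.mean(mags))

if __name__ == "__main__":
    for temperature in (1.5, T_C, 3.5):
        print(round(temperature, 3), ising_magnetization(temperature))
```

Well below $T_c$ the lattice stays ordered, well above it the magnetization fluctuates around zero, and the interesting correlation structure appears only in a narrow range around $T_c$.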

Kitzbichler et al. [50] analyzed fMRI and magnetoencephalography (MEG) data recorded from normal volunteers at resting state using phase synchronization between diverse spatial locations. They reported a scale-invariant distribution for the length of time that two brain locations on the average remained locked. This distribution was also found in the Ising and the Kuramoto model [51] at the critical state, suggesting that the data exhibited criticality. This work was revisited recently by Botcharova et al. [52] who investigated whether the display of power-law statistics of the two measures of synchronization – phase-locking intervals and global lability of synchronization – can be analogous to similar scaling at the critical threshold in classical models of synchronization. Results confirmed only partially the previous findings, emphasizing the need to proceed with caution in making direct analogies between the brain dynamics and systems at criticality. Specifically, they showed that “the pooling of pairwise phase-locking intervals from a non-critically interacting system can produce a distribution that is similarly assessed as being power law. In contrast, the global lability of synchronization measure is shown to better discriminate critical from non critical interaction” [52].

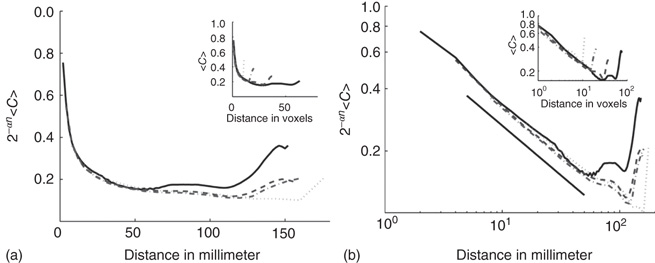

The works discussed so far rely on determining whether probability density functions (e.g., of node degree or synchronization lengths) obey power laws. The approach of Expert et al. [53] looked instead at a well-known property of the dynamics at criticality: self-similarity. They investigated whether the two-point correlation function can be renormalized. This is a very well-understood technique used in critical phenomena in which the data sets are coarse grained at successive scales while computing some statistic. They were able to show that the two-point correlation function of the BOLD signal is invariant under changes in the spatial scale, as shown in Figure 3.3, which, together with the temporal $1/f$ scaling exhibited by BOLD time series, suggests critical dynamics.

Figure 3.3 Self-similarity of the brain fMRI two-point correlation function. The plot shows the renormalized average correlation function versus distance for four successive levels of description, starting from the original resolution ($n = 0$, solid line) and followed by the dashed, dashed-dotted, and dotted lines. (a) Linear–linear and (b) log–log axes. The exponent reported in [53] describes the data well. (Figure redrawn from Expert et al. [53].)
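The coarse-graining step at the heart of this renormalization test can be sketched as a block average. The snippet is a simplified illustration under our own assumptions: it acts on a 2D array rather than on the 3D voxel grid, the helper name `coarse_grain` is hypothetical, and it is not the procedure of [53] in detail.

```python
import numpy as np

def coarse_grain(field, factor=2):
    """Average a 2D array over non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are discarded.
    """
    rows, cols = field.shape
    rows -= rows % factor
    cols -= cols % factor
    trimmed = field[:rows, :cols]
    blocks = trimmed.reshape(rows // factor, factor, cols // factor, factor)
    return blocks.mean(axis=(1, 3))

if __name__ == "__main__":
    field = np.arange(16.0).reshape(4, 4)
    print(coarse_grain(field))
```

Applying `coarse_grain` repeatedly and recomputing the correlation function at each level gives the family of curves whose collapse is taken as evidence of self-similarity.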

3.3.2 Beyond Fitting: Variance and Correlation Scaling of Brain Noise

An unexpected new angle in the problem of criticality was offered by the surging interest in the source of the BOLD signal variability and its information content. For instance, it was shown recently [54] in a group of subjects of different ages, that the BOLD signal standard deviation can be a better predictor of the subject age than the average. Furthermore, additional work focused on the relation between the fMRI signal variability and a task performance, concluded that faster and more consistent performers exhibit significantly higher brain variability across tasks than the poorer performing subjects [55]. Overall, these results suggested that the understanding of the resting brain dynamics can benefit from a detailed study of the BOLD variability per se.

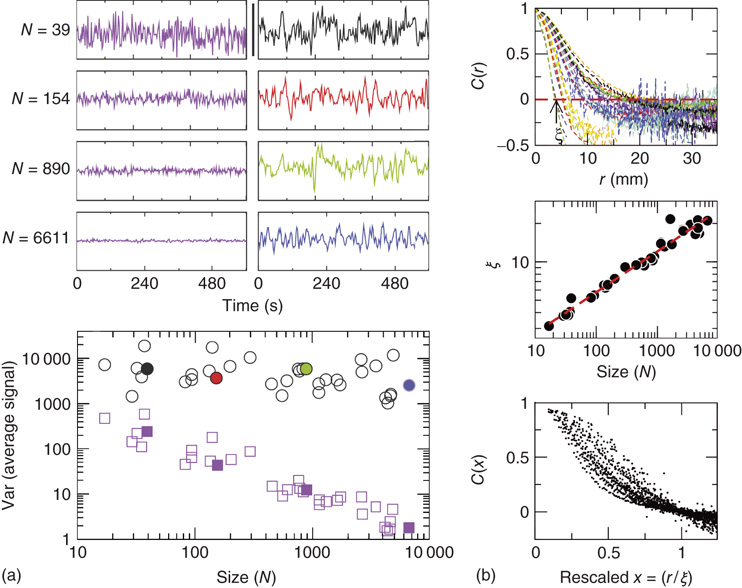

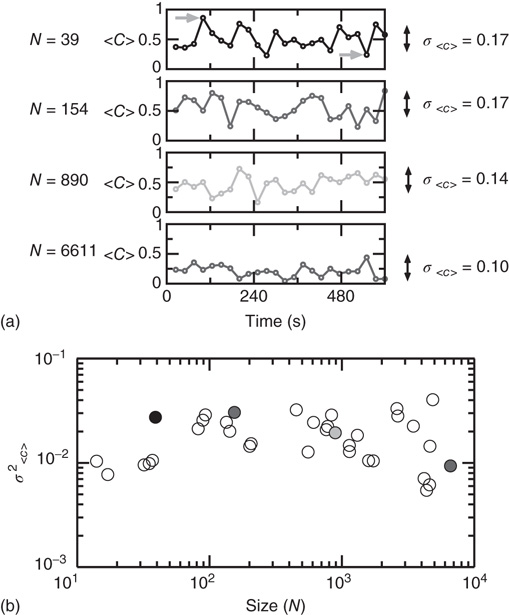

This was precisely the aim of the work in [56], which studied the statistical properties of the spontaneous BOLD fluctuations and their possible dynamical mechanisms. In these studies, an ensemble of brain regions of different sizes was defined and the statistics of the fluctuations and correlations were computed as a function of the region's size. The report identifies anomalous scaling of the variance as a function of the number of elements and a distinctive divergence of the correlations with the size of the cluster considered. We now proceed to describe these findings in detail.

3.3.2.1 Anomalous Scaling

The objects of interest are the fluctuations of the BOLD signal around its mean, which, for the 35 RSN clusters used by Fraiman and Chialvo [56], are defined as

$$B_H(\vec{x}_i, t) = B(\vec{x}_i, t) - \frac{1}{N_H} \sum_{j=1}^{N_H} B(\vec{x}_j, t), \tag{3.1}$$

where $B(\vec{x}_i, t)$ is the BOLD time series at a given voxel and $\vec{x}_i$ represents the position of the voxel $i$ that belongs to the cluster $H$ of size $N_H$. These signals are used to study the correlation properties of the activity in each cluster.

The mean activity of each cluster $H$ (of $N_H$ voxels) is defined as

$$\bar{B}(t) = \frac{1}{N_H} \sum_{i=1}^{N_H} B(\vec{x}_i, t), \tag{3.2}$$

and its variance is defined as

$$\sigma^2_{\bar{B}} = \frac{1}{T} \sum_{t=1}^{T} \left( \bar{B}(t) - \langle \bar{B} \rangle_t \right)^2, \tag{3.3}$$

where $\langle \bar{B} \rangle_t = \frac{1}{T} \sum_{t=1}^{T} \bar{B}(t)$ and $T$ is the number of temporal points. Note that the average subtracted in Eq. (3.1) is the mean at time $t$ (computed over $N_H$ voxels) of the BOLD signals, not to be confused with the BOLD signal averaged over $T$ temporal points.
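The three quantities just defined (the fluctuations around the cluster mean, the mean activity, and its variance over time) translate directly into array operations. The sketch below assumes that the BOLD data of one cluster are stored as an array of shape (voxels, time points) and that the variance is normalized by the number of time points; the function name `cluster_statistics` is ours.

```python
import numpy as np

def cluster_statistics(bold):
    """Fluctuations, mean activity, and variance for one cluster.

    bold: array of shape (n_voxels, n_timepoints).
    """
    # Mean activity: average over voxels at each time point, as in Eq. (3.2).
    mean_activity = bold.mean(axis=0)
    # Fluctuations: each voxel minus the cluster mean at the same time, Eq. (3.1).
    fluctuations = bold - mean_activity
    # Variance over time of the mean activity, Eq. (3.3), normalized by T.
    variance = np.mean((mean_activity - mean_activity.mean()) ** 2)
    return fluctuations, mean_activity, variance

if __name__ == "__main__":
    bold = np.array([[1.0, 2.0, 3.0],
                     [3.0, 4.0, 5.0]])
    fluctuations, mean_activity, variance = cluster_statistics(bold)
    print(fluctuations, mean_activity, variance)
```

Note that the fluctuations sum to zero over the voxels at every time point, which is the property exploited later when computing the correlation length.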

As the BOLD signal fluctuates widely and the number $N$ of voxels in the clusters can be very large, one might expect that the aggregate of Eq. (3.1) obeys the law of large numbers. If this were true, the variance of the mean field $\sigma^2_{\bar{B}}$ in Eq. (3.3) would decrease with $N$ as $N^{-1}$. In other words, one would expect a smaller amplitude fluctuation for the average BOLD signal (i.e., $\bar{B}(t)$) recorded in clusters comprising a large number of voxels compared with smaller clusters. However, the data in Figure 3.4a show otherwise: the variance of the average activity remains approximately constant over a change of four orders of magnitude in cluster sizes. The departure from the $N^{-1}$ decay is strong enough to make further statistical testing unnecessary, which is confirmed by recomputing the variance for artificially constructed clusters having a similar number of voxels but composed of randomly reordered raw BOLD time series (as the four examples in the top left panels of Figure 3.4a). As expected, in this case, the variance (plotted using square symbols in the bottom panel of Figure 3.4a) obeys the $N^{-1}$ law.
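The contrast between correlated signals and their shuffled surrogates can be reproduced with synthetic data. In the sketch below, the shared fluctuation `common`, the noise amplitude, the cluster sizes, and the function names are all assumptions chosen for illustration; real BOLD data are not involved. For equal-variance signals with average pairwise correlation $\rho$, the variance of the mean is $\sigma^2[1/N + (1 - 1/N)\rho]$, which reduces to $\sigma^2/N$ only when $\rho = 0$.

```python
import numpy as np

def variance_of_mean(signals):
    """Variance over time of the voxel-averaged signal.

    signals: array of shape (n_voxels, n_timepoints).
    """
    return signals.mean(axis=0).var()

def shuffled_surrogate(signals, rng):
    """Permute each voxel's time series independently.

    This preserves every voxel's amplitude distribution but destroys the
    correlations between voxels.
    """
    return np.array([rng.permutation(row) for row in signals])

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_timepoints = 500
    common = rng.standard_normal(n_timepoints)   # toy shared fluctuation
    for n_voxels in (10, 100, 1000):
        signals = common + 0.5 * rng.standard_normal((n_voxels, n_timepoints))
        raw = variance_of_mean(signals)
        surrogate = variance_of_mean(shuffled_surrogate(signals, rng))
        print(n_voxels, round(raw, 3), round(surrogate, 5))
```

In this toy example the variance of the raw average stays close to the variance of the shared component for all sizes, whereas the surrogate variance falls roughly as the inverse of the number of voxels.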

Figure 3.4 Spontaneous fluctuations of fMRI data show anomalous behavior of the variance (a) and divergence of the correlation length (b). Top figures in panel a show four examples of average BOLD time series (i.e., $\bar{B}(t)$ in Eq. (3.2)) computed from clusters of different sizes $N$. Note that while the amplitude of the raw BOLD signals (right panels) remains approximately constant, in the case of the shuffled data sets (left panels) the amplitude decreases drastically for increasing cluster sizes. The bottom graph in panel a shows the calculations for the 35 clusters (circles) plotted as a function of the cluster size, demonstrating that variance is independent of the RSN's cluster size. The square symbols show similar computations for a surrogate time series constructed by randomly reordering the original BOLD time series, which exhibit the expected $1/N$ scaling (dashed line). Filled symbols in the bottom panel are used to denote the values for the time series used as examples in the top panel. In panel b, there are three graphs: the top one shows the correlation function $C_H(r)$ as a function of distance for clusters of different sizes. Contrary to naive expectations, large clusters are as correlated as relatively smaller ones: the correlation length increases with cluster size, a well-known signature of criticality. Each line in the top panel shows the mean cross-correlation $C_H(r)$ of BOLD activity fluctuations as a function of distance $r$ averaged over all time series of each of the 35 clusters. The correlation length $\xi$, denoted by the zero crossing of $C_H(r)$, is not a constant. As shown in the middle graph, $\xi$ grows linearly with the average cluster diameter for all the 35 clusters (filled circles). The bottom graph shows the collapse of $C_H(r)$ by rescaling the distance with $\xi$. (Figure redrawn from Fraiman and Chialvo [56].)

3.3.2.2 Correlation Length

A straightforward approach to understanding the correlation behavior commonly used in large collective systems [40] is to determine the correlation length at various system sizes. The correlation length is the average distance at which the correlation of the fluctuations around the mean crosses zero. It describes how far one has to move to observe any two points in a system behaving independently of each other. Notice that, by definition, the computation of the correlation length is done over the fluctuations around the mean, and not over the raw BOLD signals; otherwise, global correlations may produce a single spurious correlation length value commensurate with the brain size.

Thus, we start by computing, for each voxel's BOLD time series, the fluctuations around the mean of the cluster to which the voxel belongs. Recall the expression in Eq. (3.1), where $B(\vec{x}_i, t)$ is the BOLD time series at a given voxel and $\vec{x}_i$ represents the position of the voxel $i$ that belongs to the cluster $H$ of size $N_H$. By definition, the mean of the BOLD fluctuations of each cluster vanishes,

$$\frac{1}{N_H} \sum_{i=1}^{N_H} B_H(\vec{x}_i, t) = 0.$$

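Next we compute the average correlation function of the BOLD fluctuations between all pairs of voxels in the cluster considered, which are separated by a distance $r$:

$$C_H(r) = \left\langle \frac{\left( B_H(\vec{x}, t) - \langle B_H(\vec{x}, t) \rangle_t \right) \left( B_H(\vec{x} + r\vec{u}, t) - \langle B_H(\vec{x} + r\vec{u}, t) \rangle_t \right)}{\left( \langle B_H(\vec{x}, t)^2 \rangle_t - \langle B_H(\vec{x}, t) \rangle_t^2 \right)^{1/2} \left( \langle B_H(\vec{x} + r\vec{u}, t)^2 \rangle_t - \langle B_H(\vec{x} + r\vec{u}, t) \rangle_t^2 \right)^{1/2}} \right\rangle_{t, \vec{x}, \vec{u}},$$

where $\vec{u}$ is a unit vector and $\langle \cdot \rangle_{t, \vec{x}, \vec{u}}$ represents averages over time, voxel positions, and directions.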
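A possible numerical recipe for the correlation function and the correlation length of one cluster is sketched below. It rests on several assumptions of ours: voxel positions are given as coordinates, pairwise Pearson correlations of the fluctuations are averaged in distance bins of width `bin_width`, and the zero crossing is located by linear interpolation. The function names are hypothetical, and the binning choices of [56] may differ.

```python
import numpy as np

def correlation_function(positions, bold, bin_width=1.0):
    """Mean correlation of the fluctuations as a function of distance.

    positions: (n_voxels, 3) coordinates; bold: (n_voxels, n_timepoints).
    Returns bin centers r and the mean correlation in each non-empty bin.
    """
    fluct = bold - bold.mean(axis=0)            # fluctuations around cluster mean
    corr = np.corrcoef(fluct)                   # pairwise Pearson correlations
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))    # O(n_voxels^2) memory
    iu = np.triu_indices(len(positions), k=1)   # each pair counted once
    d, c = dist[iu], corr[iu]
    bins = np.floor(d / bin_width).astype(int)
    n_bins = bins.max() + 1
    counts = np.bincount(bins, minlength=n_bins)
    sums = np.bincount(bins, weights=c, minlength=n_bins)
    valid = counts > 0
    r = (np.arange(n_bins)[valid] + 0.5) * bin_width
    return r, sums[valid] / counts[valid]

def correlation_length(r, c):
    """First zero crossing of c(r), by linear interpolation (None if absent)."""
    for k in range(len(c) - 1):
        if c[k] > 0 and c[k + 1] <= 0:
            return r[k] + (r[k + 1] - r[k]) * c[k] / (c[k] - c[k + 1])
    return None

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.arange(10.0)
    positions = np.column_stack([x, np.zeros(10), np.zeros(10)])
    # Toy cluster: a spatial gradient modulated in time.
    bold = np.outer(x - 4.5, rng.standard_normal(100))
    r, c = correlation_function(positions, bold)
    print(correlation_length(r, c))
```

Repeating the computation for clusters of increasing size and plotting the resulting correlation length against the cluster diameter gives the kind of analysis summarized in Figure 3.4b.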

The typical form we observe for the correlation function is shown in the top panel of Figure 3.4b. The first striking feature to note is the absence of a unique correlation function for all clusters. Nevertheless, the curves are qualitatively similar: they are close to unity at short distances, decay as the distance increases, and then become negative for longer voxel-to-voxel distances. Such behavior indicates that within each and every cluster, on average, the fluctuations around the mean are strongly positively correlated at short distances and strongly anticorrelated at larger distances, whereas there is no range of distances over which the correlation vanishes.
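The procedure described above (subtract the cluster mean, correlate pairs of voxels, average by distance, locate the zero crossing) can be sketched as follows. This is a minimal illustration and not the authors' code: the function names, the distance binning, and the use of a Pearson coefficient per pair are assumptions made for the example.

```python
import math
from collections import defaultdict


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def correlation_function(positions, signals, bin_width=1.0):
    """Average pairwise correlation of the fluctuations, grouped by voxel distance.

    positions: list of coordinate tuples, one per voxel (assumed input format).
    signals:   list of time series, one per voxel, all of equal length.
    Returns a list of (distance, mean correlation) sorted by distance.
    """
    n_vox, n_t = len(signals), len(signals[0])
    # Fluctuations around the cluster mean at each time point, not the raw signals.
    cluster_mean = [sum(s[t] for s in signals) / n_vox for t in range(n_t)]
    fluct = [[s[t] - cluster_mean[t] for t in range(n_t)] for s in signals]

    sums, counts = defaultdict(float), defaultdict(int)
    for i in range(n_vox):
        for j in range(i + 1, n_vox):
            d = math.dist(positions[i], positions[j])
            b = round(d / bin_width)  # nearest distance bin
            sums[b] += pearson(fluct[i], fluct[j])
            counts[b] += 1
    return [(b * bin_width, sums[b] / counts[b]) for b in sorted(sums)]


def correlation_length(c_of_r):
    """First zero crossing of the correlation function (linear interpolation)."""
    for (r0, c0), (r1, c1) in zip(c_of_r, c_of_r[1:]):
        if c0 > 0 >= c1:
            return r0 + (r1 - r0) * c0 / (c0 - c1)
    return None  # no crossing within the sampled distances
```

Because the cluster mean is removed first, any signal common to all voxels drops out before the correlations are computed, which is the point made earlier about not using the raw BOLD signals.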

It is necessary to clarify whether the divergence of the correlation length is trivially determined by the structural connectivity. In that case, the mean correlation must be constant throughout the entire recording. Conversely, if the dynamics are critical, the mean correlation will not be constant, as it is the product of a combination of some instances of high spatial coordination intermixed with moments of discoordination. In order to answer this question, we study the mean correlation as a function of time for regions of interest of various sizes, for nonoverlapping periods of 10 temporal points.
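A short-term mean correlation of this kind can be sketched as below. The window length of 10 points follows the text; everything else (function names, the use of all voxel pairs, a Pearson coefficient per pair, dropping an incomplete final window) is an assumption made for illustration.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def windowed_mean_correlation(signals, window=10):
    """Mean pairwise correlation in consecutive nonoverlapping windows.

    signals: list of time series (one per voxel), all of equal length.
    An incomplete window at the end of the recording is dropped.
    """
    n_t = len(signals[0])
    result = []
    for start in range(0, n_t - window + 1, window):
        segments = [s[start:start + window] for s in signals]
        values = [pearson(a, b) for a, b in combinations(segments, 2)]
        result.append(sum(values) / len(values))
    return result


def variance(values):
    """Population variance, used to compare burstiness across cluster sizes."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)
```

Applying `variance` to the output of `windowed_mean_correlation` for regions of different sizes gives the quantity whose dependence on size is at issue here.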

Figure 3.5 shows the behavior of the mean correlation over time for four different cluster sizes. Notice that, in all cases, there are instances of large correlation followed by moments of weak coordination, such as those indicated by the arrows in the uppermost panel. We have verified that this behavior is not sensitive to the choice of the length of the window in which the mean correlation is computed. These bursts keep the variance of the correlations almost constant (in this example, there is a minor decrease in variance, by a factor of 0.4, for a huge increase in size, by a factor of 170). This is observed for any of the cluster sizes, as shown in the bottom panel of Figure 3.5, where the variance of the mean correlation is approximately constant despite the four orders of magnitude increase in size. The results of these calculations imply that, independent of the size of the cluster considered, there is always an instance in which a large percentage of voxels are highly coherent and another instance in which each voxel's activity is relatively independent.

Figure 3.5 Bursts of high correlations are observed at all cluster sizes, resulting in approximately the same variance, despite the four orders of magnitude change in the cluster size. (a) Representative examples of the short-term mean correlation of the BOLD signals as a function of time for four sizes spanning four orders of magnitude. The arrows show examples of two instances of highly correlated and weakly correlated activity, respectively. (b) The variance of the short-term mean correlation as a function of cluster size. The four examples in (a) are denoted with filled circles in (b). (Figure redrawn from Fraiman and Chialvo [56].)

To summarize, the work of Fraiman and Chialvo [56] revealed three key statistical properties of the brain BOLD signal variability.

- The variance of the average BOLD fluctuations computed from ensembles of widely different sizes remains constant (i.e., anomalous scaling).

- The analysis of short-term correlations reveals bursts of high coherence between arbitrarily far apart voxels, indicating that the anomalous scaling of the variance has a dynamical (and not structural) origin.

- The correlation length measured at different regions increases with the region's size, as does the mutual information.
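To see why a size-independent variance counts as anomalous, it may help to recall the standard result for the variance of an average of $N$ signals $X_i$ with equal variance $\sigma^2$ and mean pairwise correlation $\bar{\rho}$. These symbols are introduced here for illustration only and are not the chapter's notation.

$$
\mathrm{Var}\!\left(\frac{1}{N}\sum_{i=1}^{N} X_i\right) = \frac{\sigma^2}{N} + \frac{N-1}{N}\,\bar{\rho}\,\sigma^2
$$

For independent signals ($\bar{\rho} = 0$) the variance of the average decays as $1/N$. A variance that stays roughly constant over several orders of magnitude in $N$ therefore requires correlations that do not average out as the ensemble grows.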

3.4 Beyond Averages: Spatiotemporal Brain Dynamics at Criticality

Without exception, all the reports considering large-scale brain critical dynamics resorted to the computation of averages over certain time and/or space scales. However, as time and space are essential for brain function, it would be desirable to make statements of where and when the dynamics is at the brink of instability, that is, the hallmark of criticality. In this section, we summarize novel ideas that attempt to meet this challenge by developing techniques that consider large-scale dynamics in space and time in the same way that climate patterns are dealt with, tempting us to call these efforts “brain meteorology.”

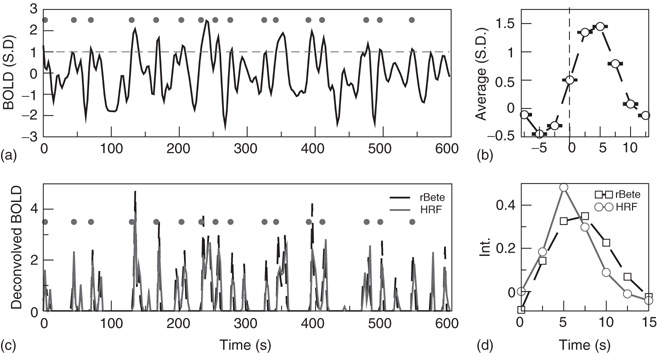

Tagliazucchi et al. departed from the current brain imaging techniques based on the analysis of gradual and continuous changes in the brain BOLD signal. By doing so, they were able to show that the relatively large amplitude BOLD signal peaks [57] contain substantial information. These findings suggested the possibility that relevant dynamical information can be condensed into discrete events. If that were true, then it would be possible to capture the dynamics in both space and time, an objective ultimately achieved in a subsequent report by Tagliazucchi and colleagues [58], which demonstrated how brain dynamics at the resting state can be captured just by the timing and location of such events, that is, in terms of a spatiotemporal point process.

3.4.1 fMRI as a Point Process

The application of this novel method made it possible, for the first time, to define a theoretical framework in terms of an order parameter and a control parameter derived from fMRI data, in which the dynamical regime can be interpreted as that of a system close to the critical point of a second-order phase transition. The analysis demonstrated that the resting brain spends most of the time near the critical point of such a transition and exhibits avalanches of activity ruled by the same dynamical and statistical properties described previously for neuronal events at smaller scales.

Figure 3.6 shows an example of a point process extracted from a BOLD time series. A qualitative comparison with the established method of deconvolving the BOLD signal with the hemodynamic response function suggests that, to first order, the point process is equivalent to the peaks of the deconvolution.

Figure 3.6 (a) Example of a point process (filled circles) extracted from the normalized BOLD signal. Each point corresponds to a threshold (dashed line at 1 SD) crossing from below. (b) Average BOLD signal (from all voxels of one subject) triggered at each threshold crossing. (c) The peaks of the deconvolved BOLD signal, using either the hemodynamic response function (HRF) or the rBeta function [57] depicted in panel d, coincide in the great majority of cases with the timing of the points shown in panel a. (Figure redrawn from Tagliazucchi et al. [58].)
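The extraction described in the caption (normalize the signal, then mark each upward crossing of a 1 SD threshold) can be sketched in a few lines. This is a minimal illustration: the function names are hypothetical, and normalizing by the mean and standard deviation of the whole series is an assumption about what "normalized" means here.

```python
import statistics


def normalize(series):
    """Return the series in units of its own standard deviation around its mean."""
    mean = statistics.fmean(series)
    sd = statistics.pstdev(series)
    if sd == 0:
        raise ValueError("constant series cannot be normalized")
    return [(x - mean) / sd for x in series]


def point_process(series, threshold=1.0):
    """Time indices at which the normalized signal crosses the threshold from below."""
    z = normalize(series)
    return [t for t in range(1, len(z)) if z[t - 1] < threshold <= z[t]]


# Toy signal with two excursions above threshold; one event is kept per upward crossing.
events = point_process([0, 0, 0, 5, 5, 0, 0, 5, 0, 0])
```

Each voxel's time series is thereby reduced to a short list of event times, which is what makes it possible to follow the activity in space and time at once.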

As shown in [58], the point process can efficiently compress the information needed to reproduce the underlying brain activity in a way comparable with conventional methods such as seed correlation and independent component analysis, as demonstrated by, for instance, its ability to replicate the correct location of each of the RSNs. While the former methods represent averages over the entire data sets, the point process, by construction, compresses the data while preserving the temporal information. This potential advantage, unique to the current approach, may provide additional clues on brain dynamics.

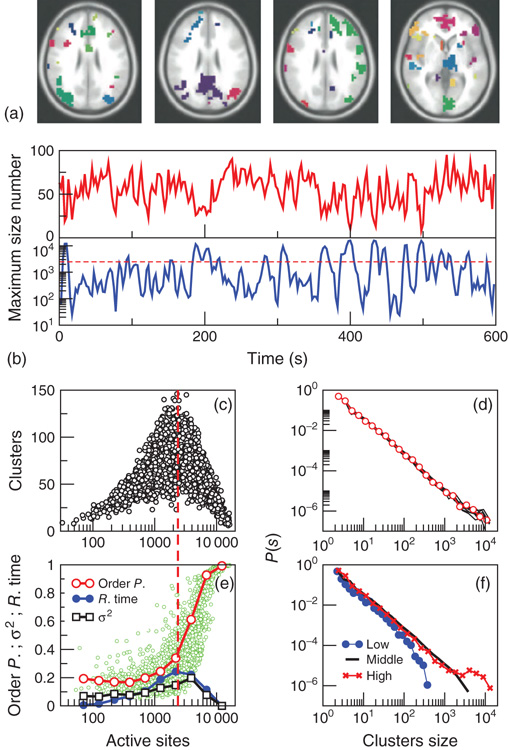

This is explored here by compiling the statistics and dynamics of clusters of points in both space and time. Clusters are groups of contiguous voxels with signals above the threshold at a given time, identified by a scanning algorithm in each fMRI volume. Figure 3.7a shows examples of clusters (in this case nonconsecutive in time) depicted with different colors. Typically (Figure 3.7b, top), the number of clusters at any given time varies by only an order of magnitude around the mean. In contrast, the size of the largest active cluster fluctuates widely, spanning more than four orders of magnitude.
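Identifying such clusters in a single volume amounts to a connected-component search over the active voxels. The sketch below is one possible implementation, not the scanning algorithm used in [58]: it assumes that voxels are given as integer (x, y, z) coordinates and that "contiguous" means sharing a face, both of which are choices made for the example.

```python
from collections import deque

# Face-sharing neighbors in 3D (an assumed definition of contiguity).
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_sizes(active):
    """Sizes of the contiguous clusters in a set of active voxel coordinates."""
    unvisited = set(active)
    sizes = []
    while unvisited:
        queue = deque([unvisited.pop()])  # seed of a new cluster
        size = 1
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                neighbor = (x + dx, y + dy, z + dz)
                if neighbor in unvisited:
                    unvisited.remove(neighbor)
                    queue.append(neighbor)
                    size += 1
        sizes.append(size)
    return sizes


# One volume with three clusters of sizes 3, 1, and 2.
volume = {(0, 0, 0), (1, 0, 0), (1, 1, 0), (5, 5, 5), (7, 7, 7), (7, 7, 8)}
sizes = cluster_sizes(volume)
number_of_clusters = len(sizes)
largest_cluster = max(sizes)
```

Repeating this for every volume yields the two time series discussed here: the number of clusters and the size of the largest one.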

Figure 3.7 The level of brain activity continuously fluctuates above and below a phase transition. (a) Examples of coactivated clusters of neighbor voxels (clusters are 3D structures; thus, seemingly disconnected clusters may have the same color in a 2D slice). (b) Example of the temporal evolution of the number of clusters and its maximum size (in units of voxels) in one individual. (c) Instantaneous relation between the number of clusters versus the number of active sites (i.e., voxels above the threshold) showing a positive/negative correlation depending on whether activity is below/above a critical value (indicated by the dashed line here and in panel b). (d) The cluster size distribution follows a power law spanning four orders of magnitude. Individual statistics for each of the 10 subjects are plotted with lines and the average with symbols. (e) The order parameter, defined here as the (normalized) size of the largest cluster, is plotted as a function of the number of active sites (isolated data points denoted by dots, averages plotted with circles joined by lines). The calculation of the residence time density distribution (“R. time”, filled circles) indicates that the brain spends relatively more time near the transition point. Note that the peak of the R. time in this panel coincides with the peak of the number of clusters in panel c, as well as the variance of the order parameter (squares). (f) The computation of the cluster size distribution calculated for three ranges of activity (low: 0–800; middle: 800–5000; and high: >5000) reveals the same scale invariance plotted in panel d for relatively small clusters, but shows changes in the cutoff for large clusters. (Figure redrawn from [58].)

The analysis reveals four novel dynamical aspects of the cluster variability, which could hardly have been uncovered with the previous methods.

- At any given time, the number of clusters and the total activity (i.e., the number of active voxels) follow a nonlinear relation resembling that of percolation [59]. At a critical level of global activity (dashed horizontal line in Figure 3.7b, vertical in Figure 3.7c), the number of clusters reaches a maximum (∼100–150), together with its variability.

- The correlation between the number of active sites (an index of total activity) and the number of clusters reverses above a critical level of activity, a feature already described in other complex systems in which some increasing density competes with limited capacity [1, 59].

- The rate at which the very large clusters (i.e., those above the dashed line in Figure 3.7b) occur (one every 30–50 s) corresponds to the low frequency range at which RSNs are typically detected using probabilistic independent component analysis (PICA) [45].

- The distribution of cluster sizes (Figure 3.7d) reveals a scale-free distribution (whose cutoff depends on the activity level; see panel f).
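The nonmonotonic relation between the number of clusters and the total activity can be illustrated with plain site percolation. The sketch below is only an analogy under strong assumptions: sites on a 2D square lattice are activated independently at random, which is not how brain activity is distributed, and the lattice size, densities, and seed are arbitrary choices.

```python
import random
from collections import deque


def count_clusters(active):
    """Number of groups of edge-adjacent sites in a set of (row, col) pairs."""
    unvisited = set(active)
    n_clusters = 0
    while unvisited:
        queue = deque([unvisited.pop()])
        n_clusters += 1
        while queue:
            r, c = queue.popleft()
            for neighbor in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if neighbor in unvisited:
                    unvisited.remove(neighbor)
                    queue.append(neighbor)
    return n_clusters


def clusters_vs_density(size=40, densities=(0.05, 0.3, 0.9), seed=0):
    """Cluster counts on a size x size lattice for several activation densities."""
    rng = random.Random(seed)
    counts = {}
    for p in densities:
        active = {(r, c) for r in range(size) for c in range(size)
                  if rng.random() < p}
        counts[p] = count_clusters(active)
    return counts


counts = clusters_vs_density()
```

At low density, adding active sites creates new isolated clusters; at high density, adding sites merges existing clusters into fewer, larger ones. The number of clusters therefore peaks at an intermediate density, and its correlation with total activity changes sign there.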

3.4.2 A Phase Transition

The four features just described are commonly observed in complex systems undergoing an order–disorder phase transition [1, 10, 13]. This scenario was explored in [58] by defining control and order parameters from the data. To represent the degree of order (i.e., the order parameter), the size of the largest cluster in the entire brain (normalized by the number of active sites) was computed and plotted as a function of the number of active points (i.e., the control parameter). This was done for all time steps and is plotted in Figure 3.7e (small circles). The global level of activity was used as the control parameter, as in other well-studied models of order–disorder transitions (the clearest example being percolation [59]).

Several features in the data reported in [58] suggest a phase transition. First, there is a sharp increase in the average order parameter (empty circles in Figure 3.7e), accompanied by an increase of its variability (empty squares). Second, the transition coincides with the peak in the function plotted in Figure 3.7c, which accounts for the number of clusters. Finally, the calculation of the relative frequency of the number of active sites (i.e., the residence time distribution) shows that the brain spends, on average, more time near the transition than in the two extremes, the highly ordered and the highly disordered states. This supports the earlier conjecture that the brain works near criticality [1, 13, 53]. It would be interesting to investigate whether and how this transition diagram changes with arousal states, unhealthy conditions, and anesthesia, as well as to develop ways to parametrize such changes to be used as objective markers of mind state.
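Given, for each time step, the number of active sites and the size of the largest cluster, the quantities discussed above can be tabulated as in the following sketch. The function name, the input format, and the bin width are assumptions made for illustration; the order parameter follows the definition in the text (largest cluster divided by the number of active sites).

```python
from collections import defaultdict


def phase_diagram(n_active, largest_cluster, bin_width=100):
    """Bin the order parameter by the control parameter.

    n_active:        number of active sites at each time step (control parameter).
    largest_cluster: size of the largest cluster at each time step.
    Returns {bin start: (mean order parameter, its variance, residence fraction)}.
    """
    groups = defaultdict(list)
    for n, s in zip(n_active, largest_cluster):
        if n > 0:  # the order parameter is undefined when nothing is active
            groups[(n // bin_width) * bin_width].append(s / n)

    total = sum(len(values) for values in groups.values())
    diagram = {}
    for start in sorted(groups):
        values = groups[start]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        # Residence fraction: share of time steps spent at this activity level.
        diagram[start] = (mean, var, len(values) / total)
    return diagram


# Toy input: six time steps with increasing activity.
diagram = phase_diagram([50, 60, 150, 150, 150, 250], [5, 12, 30, 90, 60, 250])
```

In such a table, a transition would show up as a rise in the mean order parameter accompanied by a peak in its variance, and the residence fraction indicates how much time is spent at each level of activity.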

3.4.3 Variability and Criticality

It is important to note that the description in terms of a point process allows the observation of activity fluctuations in space and time. In particular, the results in Figure 3.7c,e show that the resting brain dynamics achieve maximum variability at a particular level of activation, which coincides with criticality. As the peak of variability in critical phenomena is known to be found at criticality, it is tempting to speculate that the origin of the spontaneous brain fluctuations can be traced back to a phase transition. This possibility is further strengthened by the fact that the data show that the brain spends most of the time around such a transition.

Thus, overall the results point to a different class of models that needs to emphasize nonequilibrium self-generated variability. The data is orthogonal to most of the current models in which, without the external noise, the dynamics are stuck in a stable equilibrium state. On the other hand, nonequilibrium systems near criticality do not need the introduction of noise: variability is self-generated by the collective dynamics, which spontaneously fluctuate near the critical point.

3.5 Consequences

As discussed in previous sections, critical dynamics implies coherence of activity beyond what is dictated by nearest neighbor connections and correlations longer than that of the neural structure and nontrivial scaling of the fluctuations. These anomalies suggest the need to turn the page on a series of concepts derived from the idea that the brain works as a circuit. While it is not suggested here that such circuits do not exist, fundamentally different conclusions should be extracted from their study. As a starting point, the following paragraphs discuss which of the associated notions of connectivity and networks should be revised under the viewpoint of criticality. At the end of the section, an analogy with river beds is offered to summarize the point.

3.5.1 Connectivity versus Functional Collectivity

The present results suggest that the current interpretation of functional connectivity, an extensive chapter of the brain neuroimaging literature, should be revised. The three basic concepts in this area are brain functional connectivity, effective connectivity, and structural connectivity [60–62]. The first one “is defined as the correlations between spatially remote neurophysiological events” [60]. Per se, the definition is a statistical one, and it “is simply a statement about the observed correlations; it does not comment on how these correlations are mediated” [60]. The second concept, effective connectivity, is closer to the notion of causation between neuronal connections and “is defined as the influence one neuronal system exerts over another.” Finally, the concept of structural or anatomical connectivity refers to the identifiable physical or structural (synaptic) connections linking neuronal elements.

The problem with the use of these three concepts is that, intentionally or not, they emphasize “the connections” between brain regions. This is so, despite cautionary comments emphasizing that “depending on sensory input, global brain state, or learning, the same structural network can support a wide range of dynamic and cognitive states” [61].

An initial demonstration of the ambiguity in the definition of functional connectivity was provided by the results of Fraiman in the Ising model, which, almost as an exercise, showed [42] the emergence of nontrivial collective states over an otherwise trivial regular lattice (i.e., the Ising model's structural connectivity). As it is well known that the brain structural connectivity is not a lattice, the replication in the Ising model of many relevant brain network properties suggested the need to revise our assumptions when interpreting functional connectivity studies.

The second blow to the connectivity framework was given by recent results from Haimovici et al. [63]. They compared the RSN from human fMRI with numerical results obtained from their network model, which is based on the structural connectivity determined earlier by Hagmann et al. [64], plus a simple excitable dynamics endowed to each network node. Different dynamics were obtained by changing the excitability of the nodes, but only the results gathered at criticality compared well with the human fMRI. These striking results indicate that the spatiotemporal brain activity in human RSN represents a collective emergent phenomenon exhibited by the underlying structure only at criticality. By indicating under which specific dynamical conditions the brain structure will produce the empirically observed functional connectivity, Haimovici's results not only reemphasized that “the same structural network can support a wide range of dynamic and cognitive states” but also showed, for the first time, how it can be done. Of course, these modeling results only scratched the surface of the problem, and a theory to deal with dramatic changes in functionality as a function of global parameters is still awaited.

The third concept in the circuit trio is effective connectivity, which, as mentioned, implies the notion of influence of one neuronal group over another. Implicit in this idea is the notion of causation, which needs to be properly defined to prevent confusion. In this context, the causation for a given variable boils down to identifying which one of all the other covariables (i.e., degrees of freedom sharing some correlations) best predicts its dynamics. This is done by observing the past states of all the sites interacting with a given site and estimating which one contributes most to determining the present state of that site. While the idea is always the same, the question of causation can be framed in different ways: by specific modeling, by calculating partial correlations, different variants of Granger causality, transfer entropies, and so on. Independent of the implementation, in systems at criticality, the notion of effective connectivity suffers from a severe limitation, as emergent phenomena cannot be dissected into interaction pairs. To illustrate such a limitation, it suffices to mention the inability to predict the next avalanche in the sandpile model [9] by computing causation between the nearest neighbor sites.

An important step forward is the work reported recently by Battaglia and colleagues [65], who in the same spirit as in the earlier discussion begin by stating:

“The circuits of the brain must perform a daunting amount of functions. But how can “brain states” be flexibly controlled, given that anatomic inter-areal connections can be considered as fixed, on timescales relevant for behavior?”

The authors conjectured, on the basis of dynamical first principles, that even relatively simple circuits (of brain areas) could produce many “effective circuits” associated with alternative brain states. In their language, “effective circuits” are different effective connectivities arising from circuits with identical structural connectivity. In a proof of principle model, the authors demonstrated convincingly how a small perturbation can switch the system at will from implementing one effective circuit to implementing another. The effect of the perturbation is, in dynamical terms, a switch to different phase-locking patterns between the local neuronal oscillations. We shall add that for this switch to be possible, the basins of attraction of the patterns need to be close to each other or, in other words, the system parameters need to be tuned to a region near instability. Furthermore, they found that “information follows causality,” which implies that under this condition brief dynamical perturbations can produce completely different modalities of information routing between brain areas of a fixed structural network. It is clear that this is the type of theoretical framework needed to tackle the bigger problem of how, at a large scale, integration and segregation of information is permanently performed by the brain.

3.5.2 Networks, Yet Another Circuit?

The recent advent of the so-called network approach has produced, without any doubt, a tremendous impact across several disciplines. In all cases, accessing the network graph represents the possibility of seeing the skeleton of the system over which the dynamics evolves, with the consequent simplification of the problem at hand. In this way, the analysis focuses on defining the interaction paths linking the system's degrees of freedom (i.e., the nodes). The success of this approach in complex systems is probably linked to the universality exhibited by the dynamics of this class of systems. Universality tells us that, in the same class, in many cases, the only relevant information is the interactions; thus, in that case a network represents everything needed to understand how they work.

Thus, in the case at hand, the use of network techniques could bring the false hope that, once we know where the connections between neuronal groups are, the brain problem will be solved. This illusion will affect even those who are fully aware that this is not possible, because the fascination with the complexity of networks will at least produce an important distraction and delay. The point is that we could be fooling ourselves in choosing for our particular problem a description of the brain determined by graphs, constructed by nodes, connected by paths, and so on.

The reflection we suggest is that, despite changing variables and adopting different names, this new network approach preserves the same idea that we consider (dangerously) rigid for understanding the brain: the concept of a circuit. This notion, the most accepted neural paradigm of the past century, was adopted by neuroscience from the last engineering revolution (i.e., electronics). Thus, while it is undoubtedly true that action potentials traverse and circulate through paths, the system is not a circuit in the same sense as electronic systems, where nothing unexpected emerges out of the collective interaction of resistors, capacitors, and semiconductors. Thus, whether these new ideas will move the field ahead depends heavily on resisting this fascination and preventing the repetition of old paradigms with new names.

3.5.3 River Beds, Floods, and Fuzzy Paths

The question often arises of how the flow of activity during any given behavior could be visualized if the brain operates as a system near criticality.1

The answer, in the absence of data, necessarily involves the use of caricatures and analogies. In such a hypothetical framework, we imagine a landscape where the activity flows, and to be graphical let us think of a river. If the system is near criticality, first and most importantly, such a landscape must exclude the presence of deep paths (i.e., no “Grand Canyon”), only relatively shallow river beds, some of them with water and others dry. On the other hand, if the system is ordered, the stream will always flow following deep canyons. In this context, let us imagine that “information” is transmitted by the water, and in that sense it is its flow that “connects” regions (whenever at a given time two or more regions are wet simultaneously). Under relatively constant conditions, erosion owing to water flow will be expected to deepen the river beds. Conversely, changes in the topology of this hypothetical network can occur anytime that a sudden increase makes a stream overflow its banks. After that, it will be possible to observe that the water changed course, a condition that will be stable only until the next flooding.

Thus, in this loose analogy, the river network structural connectivity (i.e., the relatively deeper river beds) is the less relevant part of the story for predicting where information will be shared. The effective connectivity is created through the history of the system, and its paths cannot be conceived as being fixed. The moral behind this loose analogy is to direct attention to the fact that the path's flexibility, which is absolutely essential for brain function, depends on having a landscape composed of shallow river beds.

3.6 Summary and Outlook

The program reviewed here considers the brain as a dynamical object. As in other complex systems, the accessible data to be explained are spatiotemporal patterns at various scales. The question is whether it is possible to explain all these results from a single fundamental principle. And, in case the answer is affirmative, what does this unified explanation of brain activity imply about goal-oriented behavior? We submit that, to a large extent, the problem of the dynamical regime at which the brain operates is already solved in the context of critical phenomena and phase transitions. Indeed, several fundamental aspects of brain phenomenology have an intriguing counterpart in the dynamics seen in other systems when poised at the edge of a second-order phase transition.

We have limited our review here to the large-scale dynamics of the brain; nevertheless, as discussed elsewhere [13], similar principles can be demonstrated at other scales. To be complete, the analysis must incorporate behavioral and cognitive data, which will show similar signatures indicative of scale invariance. Finally, and hopefully, overall, these results should give us a handle for a rational classification of healthy and unhealthy mind states.

References

- 1. Bak, P. (1996) How Nature Works: The Science of Self-Organized Criticality, Springer, New York.

- 2. Stanley, H. (1987) Introduction to Phase Transitions and Critical Phenomena, Oxford University Press.

- 3. Turing, A. (1950) Computing machinery and intelligence. Mind, 59 (236), 433–460.

- 4. Stassinopoulos, D. and Bak, P. (1995) Democratic reinforcement: a principle for brain function. Phys. Rev. E, 51 (5), 5033–5039.

- 5. Ceccatto, A., Navone, H., and Waelbroeck, H. (1996) Stable criticality in a feedforward neural network. Rev. Mex. Fis., 42, 810–825.

- 6. Chialvo, D. and Bak, P. (1999) Learning from mistakes. Neuroscience, 90 (4), 1137–1148.

- 7. Bak, P. and Chialvo, D. (2001) Adaptive learning by extremal dynamics and negative feedback. Phys. Rev. E, 63 (3), 031912.

- 8. Wakeling, J. and Bak, P. (2001) Intelligent systems in the context of surrounding environment. Phys. Rev. E, 64 (5), 051920.

- 9. Bak, P., Tang, C., and Wiesenfeld, K. (1987) Self-organized criticality: an explanation of 1/f noise. Phys. Rev. Lett., 59 (4), 381–384.

- 10. Jensen, H.J. (1998) Self-Organized Criticality: Emergent Complex Behavior in Physical and Biological Systems, Cambridge University Press, New York.

- 11. Bak, P. (1998) Life laws. Nature, 391 (6668), 652–653.

- 12. Bak, P. and Sneppen, K. (1993) Punctuated equilibrium and criticality in a simple model of evolution. Phys. Rev. Lett., 71 (24), 4083–4086.

- 13. Chialvo, D. (2010) Emergent complex neural dynamics. Nat. Phys., 6 (10), 744–750.

- 14. Bak, P. and Paczuski, M. (1995) Complexity, contingency, and criticality. Proc. Natl. Acad. Sci. U.S.A., 92 (15), 6689–6696.

- 15. Anderson, P. (1972) More is different. Science, 177 (4047), 393–396.

- 16. Buzsaki, G. (2009) Rhythms of the Brain, Oxford University Press, New York.

- 17. Linkenkaer-Hansen, K., Nikouline, V., Palva, J., and Ilmoniemi, R. (2001) Long-range temporal correlations and scaling behavior in human brain oscillations. J. Neurosci., 21 (4), 1370–1377.

- 18. Stam, C. and de Bruin, E. (2004) Scale-free dynamics of global functional connectivity in the human brain. Hum. Brain Mapp., 22 (2), 97–109.

- 19. Plenz, D. and Thiagarajan, T.C. (2007) The organizing principles of neuronal avalanches: cell assemblies in the cortex? Trends Neurosci., 30 (3), 101–110.

- 20. Bullock, T., McClune, M., and Enright, J. (2003) Are the electroencephalograms mainly rhythmic? Assessment of periodicity in wide-band time series. Neuroscience, 121 (1), 233–252.

- 21. Logothetis, N. (2002) The neural basis of the blood–oxygen–level–dependent functional magnetic resonance imaging signal. Philos. Trans. R. Soc. London, Ser. B, 357 (1424), 1003–1037.

- 22. Eckhorn, R., van Pelt, J., Corner, M.A., Uylings, H.B.M., and Lopes da Silva, F.H. (1994) Oscillatory and non-oscillatory synchronizations in the visual cortex and their possible roles in associations of visual features, in Progress in Brain Research, Vol. 102, Chapter 28, Elsevier Science, pp. 405–425.

- 23. Miller, K., Sorensen, L., Ojemann, J., and Den Nijs, M. (2009) Power-law scaling in the brain surface electric potential. PLoS Comput. Biol., 5 (12), e1000609.

- 24. Manning, J., Jacobs, J., Fried, I., and Kahana, M. (2009) Broadband shifts in local field potential power spectra are correlated with single-neuron spiking in humans. J. Neurosci., 29 (43), 13613–13620.

- 25. Gilden, D. (2001) Cognitive emissions of 1/f noise. Psychol. Rev., 108 (1), 33–56.

- 26. Maylor, E., Chater, N., and Brown, G. (2001) Scale invariance in the retrieval of retrospective and prospective memories. Psychon. Bull. Rev., 8 (1), 162–167.

- 27. Ward, L. (2001) Dynamical Cognitive Science, MIT Press.

- 28. Nakamura, T., Kiyono, K., Yoshiuchi, K., Nakahara, R., Struzik, Z., and Yamamoto, Y. (2007) Universal scaling law in human behavioral organization. Phys. Rev. Lett., 99 (13), 138103.

- 29. Anteneodo, C. and Chialvo, D. (2009) Unraveling the fluctuations of animal motor activity. Chaos, 19 (3), 033123.

- 30. Beckers, R., Deneubourg, J., Goss, S., and Pasteels, J. (1990) Collective decision making through food recruitment. Insectes Soc., 37 (3), 258–267.

- 31. Beekman, M., Sumpter, D., and Ratnieks, F. (2001) Phase transition between disordered and ordered foraging in pharaoh's ants. Proc. Natl. Acad. Sci. U.S.A., 98 (17), 9703–9706.

- 32. Rauch, E., Millonas, M., and Chialvo, D. (1995) Pattern formation and functionality in swarm models. Phys. Lett. A, 207 (3), 185–193.

- 33. Nicolis, G. and Prigogine, I. (1977) Self-Organization in Nonequilibrium Systems: From Dissipative Structures to Order Through Fluctuations, Wiley-Interscience, New York.

- 34. Takayasu, M., Takayasu, H., and Fukuda, K. (2000) Dynamic phase transition observed in the internet traffic flow. Physica A, 277 (1), 248–255.

- 35. Lux, T. and Marchesi, M. (1999) Scaling and criticality in a stochastic multi-agent model of a financial market. Nature, 397 (6719), 498–500.

- 36. Malamud, B.D., Morein, G., and Turcotte, D.L. (1998) Forest fires: an example of self-organized critical behavior. Science, 281 (5384), 1840–1842.

- 37. Peters, O. and Neelin, J. (2006) Critical phenomena in atmospheric precipitation. Nat. Phys., 2 (6), 393–396.

- 38. Peters, O., Hertlein, C., and Christensen, K. (2002) A complexity view of rainfall. Phys. Rev. Lett., 88, 018701.

- 39. Peters, O. and Christensen, K. (2002) Rain: relaxations in the sky. Phys. Rev. E, 66, 036120.

- 40. Cavagna, A., Cimarelli, A., Giardina, I., Parisi, G., Santagati, R., Stefanini, F., and Viale, M. (2010) Scale-free correlations in starling flocks. Proc. Natl. Acad. Sci. U.S.A., 107 (26), 11865–11870.

- 41. Eguiluz, V., Chialvo, D., Cecchi, G., Baliki, M., and Apkarian, A. (2005) Scale-free brain functional networks. Phys. Rev. Lett., 94, 018102.

- 42. Fraiman, D., Balenzuela, P., Foss, J., and Chialvo, D. (2009) Ising-like dynamics in large-scale functional brain networks. Phys. Rev. E, 79 (6), 061922.

- 43. Fox, M. and Raichle, M. (2007) Spontaneous fluctuations in brain activity observed with functional magnetic resonance imaging. Nat. Rev. Neurosci., 8 (9), 700–711.

- 44. Smith, S., Fox, P., Miller, K., Glahn, D., Fox, P., Mackay, C., Filippini, N., Watkins, K., Toro, R., Laird, A., and Beckmann, C. (2009) Correspondence of the brain's functional architecture during activation and rest. Proc. Natl. Acad. Sci. U.S.A., 106 (31), 13040–13045.

- 45. Beckmann, C., DeLuca, M., Devlin, J., and Smith, S. (2005) Investigations into resting-state connectivity using independent component analysis. Philos. Trans. R. Soc. London, Ser. B, 360 (1457), 1001–1013.

- 46. Xiong, J., Parsons, L., Gao, J., and Fox, P. (1999) Interregional connectivity to primary motor cortex revealed using MRI resting state images. Hum. Brain Mapp., 8 (2-3), 151–156.

- 47. Cordes, D., Haughton, V., Arfanakis, K., Wendt, G., Turski, P., Moritz, C., Quigley, M., and Meyerand, M. (2000) Mapping functionally related regions of brain with functional connectivity MR imaging. Am. J. Neuroradiol., 21 (9), 1636–1644.

- 48. Fukunaga, M., Horovitz, S., van Gelderen, P., de Zwart, J., Jansma, J., Ikonomidou, V., Chu, R., Deckers, R., Leopold, D., and Duyn, J. (2006) Large-amplitude, spatially correlated fluctuations in BOLD fMRI signals during extended rest and early sleep stages. Magn. Reson. Imaging, 24 (8), 979–992.

- 49. Vincent, J., Patel, G., Fox, M., Snyder, A., Baker, J., Van Essen, D., Zempel, J., Snyder, L., Corbetta, M., and Raichle, M. (2007) Intrinsic functional architecture in the anaesthetized monkey brain. Nature, 447 (7140), 83–86.

- 50. Kitzbichler, M., Smith, M., Christensen, S., and Bullmore, E. (2009) Broadband criticality of human brain network synchronization. PLoS Comput. Biol., 5 (3), e1000314.

- 51. Kuramoto, Y. (1984) Chemical Oscillators, Waves and Turbulence, Springer Verlag, Berlin.

- 52. Botcharova, M., Farmer, S., and Berthouze, L. (2012) A power-law distribution of phase-locking intervals does not imply critical interaction. Phys. Rev. E, 86, 051920.

- 53. Expert, P., Lambiotte, R., Chialvo, D., Christensen, K., Jensen, H., Sharp, D., and Turkheimer, F. (2011) Self-similar correlation function in brain resting-state functional magnetic resonance imaging. J. R. Soc. Interface, 8 (57), 472–479.

- 54. Garrett, D., Kovacevic, N., McIntosh, A., and Grady, C. (2010) Blood oxygen level-dependent signal variability is more than just noise. J. Neurosci., 30 (14), 4914–4921.

- 55. Garrett, D., Kovacevic, N., McIntosh, A., and Grady, C. (2011) The importance of being variable. J. Neurosci., 31 (12), 4496–4503.

- 56. Fraiman, D. and Chialvo, D. (2012) What kind of noise is brain noise: anomalous scaling behavior of the resting brain activity fluctuations. Front. Physiol., 3, 307. doi: 10.3389/fphys.2012.00307.

- 57. Tagliazucchi, E., Balenzuela, P., Fraiman, D., Montoya, P., and Chialvo, D. (2011) Spontaneous BOLD event triggered averages for estimating functional connectivity at resting state. Neurosci. Lett., 488 (2), 158–163.

- 58. Tagliazucchi, E., Balenzuela, P., Fraiman, D., and Chialvo, D. (2012) Criticality in large-scale brain fMRI dynamics unveiled by a novel point process analysis. Front. Physiol., 3, 15. doi: 10.3389/fphys.2012.00015.

- 59. Stauffer, D. and Aharony, A. (1994) Introduction to Percolation Theory, CRC Press.

- 60. Friston, K. (1994) Functional and effective connectivity in neuroimaging: a synthesis. Hum. Brain Mapp., 2 (1-2), 56–78.

- 61. Sporns, O., Tononi, G., and Kötter, R. (2005) The human connectome: a structural description of the human brain. PLoS Comput. Biol., 1 (4), e42.

- 62. Horwitz, B. (2003) The elusive concept of brain connectivity. Neuroimage, 19 (2), 466–470.

- 63. Haimovici, A., Tagliazucchi, E., Balenzuela, P., and Chialvo, D.R. (2013) Brain organization into resting state networks emerges at criticality on a model of the human connectome. Phys. Rev. Lett., 110, 178101.

- 64. Hagmann, P., Cammoun, L., Gigandet, X., Meuli, R., Honey, C., Wedeen, V., and Sporns, O. (2008) Mapping the structural core of human cerebral cortex. PLoS Biol., 6 (7), e159.

- 65. Battaglia, D., Witt, A., Wolf, F., and Geisel, T. (2012) Dynamic effective connectivity of inter-areal brain circuits. PLoS Comput. Biol., 8 (3), e1002438.