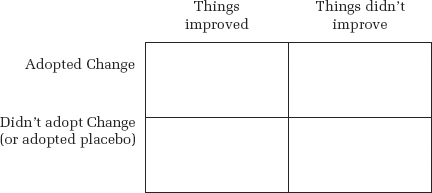

FIGURE 7.1: You need four types of information to fairly evaluate a Change. A testimonial offers just one of the four.

Most institutions demand unqualified faith; but the institution of science makes skepticism a virtue.

—Robert K. Merton1

Let’s pretend that it’s 2005 and that you hear about Brain Gym, a program of physical movements that the Web site claims “enhance[s] learning and performance in ALL areas.”2 The front page of the Web site from 2005 claims that the program is used in more than eighty countries and that the company has been in business for more than thirty years, so there seem to be plenty of people who find it valuable. The Web site headline tugs at both Enlightenment and Romantic heartstrings: “Brain Gym develops the brain’s neural pathways the way nature does . . .”

Even though it’s 2005, long before When Can You Trust the Experts? was published, let’s suppose that you strip the claims here. The core idea is that students who perform twenty-six relatively simple exercises will observe dramatic academic improvements. The promises on the Web home page are that the exercises enable one to

These promises may seem a bit grand, and you’ll note that Chapter Five warned that you ought to be suspicious of any Change that claims to help cognitive processes across the board. But when you click on the “research” link, you find a twenty-one-page document with dozens of technical-sounding studies. Admittedly, many were published in Brain Gym Journal, which certainly sounds as though it must have close ties to the company, so perhaps those should be discounted. But there are also publications from the journals Perceptual and Motor Skills and Journal of Adult Development.

Staying in the year 2005, suppose you leave the Brain Gym site and do a general Web search of the term “Brain Gym.” You find lots of Google hits on the United Kingdom’s government Web site. You have a hard time working out whether there is an official relationship between Brain Gym and UK schools, but the references to the program certainly seem positive. Your search also yields an article in the Wall Street Journal3 and two somewhat older articles from British newspapers, all of which mention Brain Gym in a positive light.4 The citation of some scientific studies, the implicit approval of the UK government, and recognition in mainstream newspapers might well make you think that Brain Gym is legitimate.

Now suppose that you’re doing these Web searches in 2011 instead of 2005. You probably would soon discover a 2006 column by Ben Goldacre, who writes a regular feature titled “Bad Science” for the Guardian, a British newspaper. Goldacre excoriated Brain Gym as “nonsense” and a “vast empire of pseudoscience.”5 A year later, two leading British scientific societies (the British Neuroscience Association and the Physiological Society) wrote a joint letter to every local education authority in the United Kingdom to warn them that Brain Gym had no scientific basis.6 Days later, the creators of Brain Gym agreed to withdraw unsubstantiated scientific claims from their teaching materials. According to one newspaper report, the author of the Brain Gym teacher’s guide admitted that many of the claims there were based on his “hunches,” rather than on scientific data.7 Oddly enough, it was not until December 2009—some twenty months later—that a spokesperson for the British government would admit that it had been promoting a program that was without scientific basis.8 This is especially curious because Brain Gym had changed the claims featured on its Web home page nearly a year earlier, in February 2009.9 The previous list of promises had been changed to this notably less grand set:

In one sense, this story is cheering. The purveyors of an educational intervention overstate what it can do, scientists draw attention to this fact, and the claims are withdrawn. But that doesn’t mean that there is a system that effectively guards against nonscientific claims in education. Brain Gym was adopted in thousands of schools, and it drew the critical attention of journalists and the two British scientific societies only because it was so commonly used and because it was endorsed by the UK government. If “the system” really worked, scientific claims would be evaluated before the programs became popular. So here’s the challenge. If you had been looking at Brain Gym in 2005, what clues were present that would tell you that its scientific basis was weak? How should you think about evidence? In this chapter, we’ll examine three relevant topics: how to use your experience to evaluate new claims, what looks like evidence but isn’t, and how to use the technical scientific literature.

We’ve said that educational Changes have, at their heart, the claim “If you do X, there is a Y percent chance that Z will happen.” It’s natural that when you hear this claim, your mind will automatically play out the hypothesis: you’ll imagine doing X, and you’ll imagine the outcome, judging whether or not Z really is likely to happen. Suppose you heard, “Check out this software. If your child uses it for just fifteen minutes each day, her reading will improve two grade levels in six months.” You will judge how easy or difficult it will be to get your child to use the software, and you’ll make some prediction about whether she’ll learn. Obviously, this prediction is heavily influenced by your experience with your child, your impressions about learning to read, and probably your impressions about computer-assisted learning.

On the one hand, the idea of relying on your own experience may surprise you; in fact, we might say it ought to surprise you. The message of this book has been “You can’t trust your own experience. You need scientific proof!” The point of the scientific method is to put human experience into an experimental context. On the other hand, it seems foolish to jettison all of our prior nonexperimental experiences; surely having observed my daughter at close hand for ten years gives me some useful information when trying to judge whether or not she’s going to learn from the software. Is there not a way to use this less formal knowledge wisely? When do our experiences offer a reliable guide, and when are they likely to fool us?

Two common problems are associated with the use of our informal knowledge. First, we may be wrong when we think with certainty, “I know what happens in this sort of situation.” I think to myself, “My daughter loves playing on the computer. She’ll think this reading program is great!” I might be right about my experience (my daughter loves the computer), but that experience may have been unusual; perhaps she loved the two programs that she’s used, but further experience will reveal that she doesn’t love to fool around on the computer with other programs. Another reason my experience might lead me astray is that I may misremember or misinterpret it, possibly because of the confirmation bias. Perhaps it’s not that my daughter loves playing on the computer; actually, I’m the one who loves playing on the computer. So I interpret her occasional, reluctant forays on the Internet as enthusiasm. We all know parents (not us, naturally) who project their own dreams and opinions onto their children.

Misremembering your experiences can happen even when you’ve had ample opportunities for observation. For example, when I was in graduate school, I knew a professor who had lived as an adviser in an undergraduate dorm for about twenty years, so he was quite familiar with undergraduate life. His experience led him to conclude that the raising of the drinking age (which happened in the mid-1980s) had only made students drink alcohol secretively; in fact, he thought they drank more. He was not and is not alone in this belief. More than one hundred college and university presidents have signed a public statement declaring that raising the drinking age has failed to encourage responsible drinking among young people, and that new ideas are needed.10 But empirical data show that they are wrong. For example, nighttime traffic fatalities for eighteen- to twenty-year-olds dropped when the drinking age was raised to twenty-one. Alcohol-related health problems also decreased in this age group.11

Why would so many college presidents think that raising the drinking age has backfired? I expect most were not looking at data; they were, like my professor friend, thinking back on their experiences with students, and these are the types of experiences that are especially prone to the confirmation bias. The confirmation bias is more likely to arise with events (1) that you are remembering, rather than experiencing right now; (2) that are ambiguous in their meaning, rather than clear; and (3) that may be hidden or unremarkable, rather than quite obvious. Trying to compare incidents of problem drinking ten years ago to the present day obviously requires memory. “Drinking to excess” is also somewhat ambiguous; if a student is acting belligerent, it’s hard to know whether the two beers he drank contributed to his mood, or whether he just had a bad day. And student drinking is nonobvious in that students drink on the sly—the professor was guessing at how much secretive drinking was going on.

So it’s risky to use your experience to generalize about that sort of event. A teacher may think that her students have trouble staying on task when they work in groups, but like student drinking, that may be hard to assess. Whether or not students are working well in groups is at least sometimes a judgment call. And if she has several groups in her class working simultaneously, might not the one or two that are having a hard time stand out among the others? Might she not be more likely to remember those groups?

There are ways of evaluating your experience to give you more confidence that you’re right. First, you should recognize that sometimes your experience does not have the problems I’ve mentioned, and therefore merits full confidence. Some experiences are, by their nature, unambiguous: for example, if my daughter has failed math, I don’t have to wonder whether the confirmation bias is making me believe that she failed math. It’s an unambiguous event, and one that is not likely to be subject to the tricks of memory. Other experiences might in principle be ambiguous, but in your view the conclusion is unmistakable. “Students are fascinated by demonstrations in science; they are always drawn in.” Or “My son will work on any problem, no matter how difficult, if he feels that I’m working with him.”

Now it appears that I’m offering conflicting advice. I’m saying, “Don’t trust your memory if the situation is ambiguous. Unless you’re really, really sure.” Just how clear is a case supposed to be before you have confidence in your conclusion? There are steps you can take to double-check. For example, you can compare your “common sense” with that of other people. Does your spouse agree that your son will work on any problem so long as you are there? Ask a few fellow teachers, “Are your students as enthusiastic about science demonstrations as mine are?”

Note that when you use your experience to evaluate a proposed Change, you’re not just remembering what has happened; you’re also predicting what will happen. You’re thinking “students like science demonstrations,” not just as an idle observation, but as a means of judging the likelihood that a Change will work as you expect. For example, you might think that the Change will be more likely to succeed because it uses lots of science demonstrations. You can put your commonsense intuitions to the test by forcing yourself to generate reasoned support for outcomes that you don’t think will happen.

For example, suppose you’re a teacher and you attend a professional development session at which a Persuader recommends that homework be eliminated. Your initial reaction is, “That’s crazy! Kids have to practice certain skills, and if they don’t practice them at home, they’ll have to practice them at school. We’ll lose valuable time that ought to be spent on critical thinking.” Okay, so there’s your experience predicting what will happen. Now imagine that you’ve implemented the Change as the Persuader recommended, and, as you predicted, the outcome was bad. Write a list of the bad outcomes that occurred and the reasons for the bad outcomes. Now, imagine that you implement the Change, and the outcome is good. List the good things that happened and the reasons these outcomes occurred. Give this your best shot, even though you initially think the idea is dumb. If you’re stuck, ask a friend to help you.

You may be surprised to find that the outcome you don’t expect is not as outlandish as you imagined. Sometimes the exercise of making the best case we can for something we don’t believe helps us avoid the confirmation bias. It helps us see that there is more than one way of looking at things. And if, try as you might, you can’t see a way that the Change will turn out differently from what you expect, you can be a little more confident that you’re right.

So far we’ve talked about how you can avoid drawing the wrong conclusion about what you have actually experienced in the past. A different problem arises when we use our experience to draw conclusions not just about what has happened (my daughter likes using the computer), but about what caused events to happen. That is, we use our everyday experience to draw conclusions about a broader theory. For example, let’s suppose that I’m right: my daughter really does enjoy using the computer, and she has a real knack for learning to navigate programs. It would be a mistake for me to conclude that this observation shows that the left brain–right brain theory of thinking must be correct, because my daughter is so obviously a left-brained thinker. Why would this be a mistake? Two reasons.

The first is our old friend the confirmation bias. If I believe a theory, I’m likely to notice instances in which my daughter’s behavior seems to fit the theory (she’s a deft computer user, as a left-brain person should be), and to ignore or discount behavior that doesn’t fit: according to the theory, she should show other left-brain behaviors (logical thinking) and not show purportedly right-brain behaviors (such as daydreaming). It’s asking a lot for me to keep a running tally of each, but to avoid the confirmation bias, that’s what I’d need to do.

Another reason that you shouldn’t use your casual observations as evidence for or against a theory is that many theories might make the same prediction. The left brain–right brain theory might predict that someone who likes math will also like computers, but so would a theory based on the common observation that both are technical subjects.

Most of the observations we make are casual and therefore imprecise. Because they are imprecise, lots of theories are consistent with them. For example, I mentioned the lack of evidence for learning styles in Chapter One, and I’ve touched on this topic when I’ve spoken with teachers. More than once I’ve fielded angry questions from teachers who think I’m saying that a teacher whose instruction is informed by learning styles must be a poor teacher. They are thinking, “The theory of learning styles informs my teaching, and my students learn a lot. So there has to be something to the idea of learning styles.”

But there is much more to these teachers’ practice than learning styles theory. An effective teacher is warm; he knows his subject matter cold and knows compelling ways to explain difficult concepts; he is sensitive to his students’ emotions; and so on. Some or all of these features may be what makes him effective, and the use of learning styles actually contributes nothing. The only way to know for sure would be to have the same teacher compare his effectiveness when he uses learning styles and when he doesn’t, and to measure with care the results for students. In other words, you’d need to do an experiment.

In sum, your knowledge can help you predict what will happen, at least under some circumstances, but it’s hazardous to use your experience to draw conclusions about why something happens.

But there is still another way that your knowledge can help you. You can use it to judge how revolutionary a Change is. If a Change sounds like a breakthrough, a radical advance in addressing a difficult problem, it’s probably a sham. Why? Unheralded breakthroughs in science are exceedingly rare. A popular image of scientific progress has the lone scientist working in his lab, struggling vainly to solve a problem, and then, in a Eureka! moment, he has a breakthrough idea, which he reveals to an astonished world. This image of scientific progress fits some cases, notably Newton’s; his achievements in optics and gravitation all came as he worked in isolation at his family’s country home, Woolsthorpe, where he had gone to escape the bubonic plague then threatening Cambridge University.

But that image doesn’t fit many cases, especially today. Progress is almost always the product of multiple scientific laboratories working on the same problem, sometimes engaging in petty sniping, but one way or another improving each other’s work through collaboration and criticism. History is a ruthless editor, however, and the credit for a landmark of science usually goes to one person or at best a pair. James Watson and Francis Crick saw themselves as racing other laboratories to uncover the structure of DNA,12 but how many of us today are aware of those other researchers? Indeed, how many are aware that Watson and Crick shared the Nobel Prize for the discovery with Maurice Wilkins? Beware of breakthroughs developed by the lone genius, especially one who first publicizes his findings on a Web site with a money-back guarantee.

Further, science typically proceeds in a series of steps, some forward, some backward, that creep toward an advance. Scientific breakthroughs nearly always have some foreshadowing. Before a successful therapy for Alzheimer’s disease is developed, you will see news of important steps taken at the molecular level, then successful treatment of Alzheimer’s in animal models, and so on.

• • •

I’ve suggested that common sense can be an ally, but it should not be your only weapon. A Persuader will often suggest that there is research evidence supporting the proffered Change. How can you evaluate it? Let’s start by getting clear about what is evidence, and what looks like evidence but isn’t.

When you’re evaluating evidence, the first thing to do is to be sure that you understand the evidence that’s being offered. After all, why would you adopt a Change if you don’t understand the evidence supporting it? By far the best way to gain this understanding is through a conversation with the Persuader, as opposed to, for example, reading something about it. It’s generally easier to listen to an explanation because you can stop the speaker when something doesn’t make sense, and ask for a different explanation.

The dirty little secret is that when you press a Persuader to explain a Change, you’re not only seeking to understand it but also making sure that the Persuader understands it. Einstein’s theories are both mathematically dense and conceptually counterintuitive, so the line often attributed to him is noteworthy: “If you can’t explain it simply, you don’t understand it well enough.” All too often, the Persuader has a pat set of phrases regarding the “latest brain research” supporting the Change. But if you ask a few questions, the Persuader’s confidence noticeably drops, the same phrases are repeated, and the explanation doesn’t seem to hang together very well. You don’t need scientific expertise to detect this phenomenon. It doesn’t mean that the Change won’t work, but it’s a good sign that the person who is trying to persuade you to use it doesn’t understand the purported evidence behind it.

If the arguments marshaled are clear to you, there are a couple of tests you should apply to them. One is to be sure that the Persuader is not confusing a label with proof. A classic illustration comes from The Imaginary Invalid, a 1673 play in which Molière lampooned physicians. In one scene, the lead character is examined for his admission to the medical profession by a chorus of pompous doctors. When asked why opium makes people sleepy, the student replies that it does so because it has “a dormitive quality.” The doctors nod their heads sagely and say, “Well argued. Well argued. He’s worthy of joining our learned body.”a

But of course saying that opium makes people sleep because of its “dormitive quality” is no explanation at all. It’s giving the thing to be explained a fancy label and then pretending that you’ve accounted for how it works. Unfortunately, one sees this sort of “explanation” all too often applied to educational products. Students who have trouble reading are said to have “phonic blocks,” or a Persuader will dub learning “repatterning” to make it sound technical and therefore mysterious. Whether it’s fabricated jargon or a bona fide term, if it’s invoked merely to label something that you know under another name, it’s not doing any intellectual work. It should be discarded, and it should make you suspicious. Technical-sounding terms for ordinary concepts are invoked only to impress.

You should also keep in the forefront of your mind a bait-and-switch technique that’s common in the sale of education products. A Persuader will cite research papers that are perfectly sound, but that relate to the Change only peripherally, if at all. Consider, for example, the Dore Program (http://www.dore.co.uk/), a course of treatment meant to address autism. (The claim is that it’s applicable to other problems as well, but to keep things simple, I’ll discuss only autism.) The logic behind the program is this:

If you strip the Dore claim, you’ll focus on point 5: if your child performs physical exercises, his or her symptoms will improve. There are no scientific studies on the Dore Web site that address this claim. There are, however, lots of links to articles in scientific journals that verify a link between the cerebellum and autism (point 1), between the cerebellum and skill (point 3), and between the cerebellum and exercise (point 4). All of these articles represent good basic science, but they don’t directly support (or falsify) the Dore Program.13 For example, what if exercise and autism implicate different parts of the cerebellum? The cerebellum is, in fact, enormous, and is involved in many functions.

Another source of “evidence” that should not persuade you is testimonials—that is, first-person accounts from people who have used the product and swear that it helped. Testimonials are, in their way, much more compelling than dry statistics. Compare these two statements for their persuasive value:

Poet Muriel Rukeyser wrote, “The universe is made of stories, not of atoms.”14 Perhaps she meant that we experience events as connected, as leading to a conclusion. Certainly, stories are more interesting and memorable than statistics—so much more so that cognitive psychologists sometimes refer to stories as “psychologically privileged.”15 It’s no surprise that Persuaders make use of them. But you should ignore them. There is usually someone who is ready to testify to the efficacy of almost anything. To take an extreme example, the members of the Heaven’s Gate religious cult happily offered testimony in 1997 that the world was about to come to an end and that they would be rescued by aliens in a spaceship following the Hale-Bopp comet.16

Two mechanisms can lead you to think that a futile Change has worked a miracle, and make you a good candidate to offer a testimonial. First, there’s the placebo effect, wherein being told that you are under some sort of treatment can provide a quite real psychological boost. Supermodel Elle Macpherson was criticized when she admitted that she used powdered horn from endangered rhinoceroses for “medicinal” purposes, despite the lack of evidence that it brings any benefits. Macpherson’s response: “Works for me.”17 The benefit comes not from the treatment, but from the belief. You’ve probably heard about placebos relieving pain,18 but they have also been shown to improve symptoms in kids with ADHD19 or autism.b 20

It’s also possible that whatever’s being treated gets better, but the improvement has nothing to do with the treatment. For example, take one hundred kids in the autumn of second grade who are struggling with reading. Now look again at those kids in the spring. Of those hundred, there will probably be a few (five, maybe ten) for whom something clicks, and their reading dramatically improves. Perhaps they really connected with their teacher and worked extra hard to please her. Perhaps they encountered a book that they wanted to be able to read independently. Perhaps they simply accumulated enough practice that they got the hang of it. If you’re the parent of one of those kids, you’ll think, “I’m so happy that Robert’s reading has improved!” But if you’ve been giving Robert an herbal supplement guaranteed to improve reading, you are very likely to attribute the improvement to your intervention. And if you were asked to provide a testimonial, you just might comply. And the parents whose kids took the herbal supplement to no avail? Their testimonials will not appear on the Web site.

Testimonials invite you to conclude, “This is what’s in store for me if I buy in.” But to make a principled prediction as to what outcome you can fairly expect, you need more information than a testimonial can offer. In Chapter Three I noted that positive evidence is not conclusive for a theory. For example, I theorize that all swans are white, and to prove it, I take you to some parks and zoos and show you some white swans. Well, you probably figured that there were some white swans around, or I wouldn’t have proposed the theory in the first place.

Testimonials show you white swans: “Look, there’s one!” You need not just stories of success from people who adopted the Change but also stories of failure. You also need stories of success and failure from people who didn’t adopt the Change. The heart of the problem with testimonials is captured in Figure 7.1.


Testimonials offer information only from the upper-left quadrant, and to evaluate whether or not the Change has an impact, you need information from all four quadrants.
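To make the four-quadrant logic concrete, here is a small Python sketch using invented counts, not data from any study: two hundred struggling second-grade readers, half given a hypothetical reading supplement and half not. It assumes the upper-left quadrant of Figure 7.1 means “adopted the Change, and it worked.” Testimonials can only come from that one cell; deciding whether the Change did anything requires comparing the improvement rate among adopters with the rate among non-adopters.

```python
from dataclasses import dataclass


@dataclass
class FourQuadrants:
    """Outcome counts for one hypothetical Change (all numbers are invented)."""
    adopted_improved: int          # assumed upper-left cell: the only source of testimonials
    adopted_not_improved: int      # adopted the Change, no improvement
    not_adopted_improved: int      # skipped the Change, improved anyway
    not_adopted_not_improved: int  # skipped the Change, no improvement

    def rate_with_change(self) -> float:
        return self.adopted_improved / (self.adopted_improved + self.adopted_not_improved)

    def rate_without_change(self) -> float:
        return self.not_adopted_improved / (
            self.not_adopted_improved + self.not_adopted_not_improved
        )

    def difference(self) -> float:
        """Extra improvement attributable to the Change, if the groups are comparable."""
        return self.rate_with_change() - self.rate_without_change()


# A useless supplement: 8 of 100 kids improve either way.
useless = FourQuadrants(8, 92, 8, 92)
# A Change that genuinely helps: 20 of 100 improve with it, 8 of 100 without.
helpful = FourQuadrants(20, 80, 8, 92)

for name, q in [("useless", useless), ("helpful", helpful)]:
    print(
        f"{name}: {q.adopted_improved} possible testimonials, "
        f"{q.rate_with_change():.0%} vs {q.rate_without_change():.0%} improved, "
        f"difference {q.difference():+.0%}"
    )
```

The useless supplement still yields eight happy families who could supply testimonials, yet its difference is zero. Notice that `difference()` needs all four numbers; remove any one cell and it cannot be computed. The comparison is also only fair if the two groups were similar to begin with.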

What you’ve done to this point is simply to listen to the arguments of the Persuader and try to evaluate them critically. But a logical argument in support of a Change is not the same thing as scientific data indicating that it works. You want to know whether such data exist. Where are you going to find them?

Your first request ought to go to the Persuader. If someone tells me that a Change is “research based,” I brightly say “Great! Can you send me the research? I’d really like to see the original papers that report data.” The Persuader almost always says “Sure!” Sometimes I get something. Often I don’t. Either way, I figure I’ve learned something: I get some research papers, or I learn that the Persuader doesn’t know about the research or can’t be bothered to follow through and send it to me.

But of course I don’t really expect that the Persuader is going to send me all available research papers, especially the ones critical of the Change. What he or she sends is a start, but I need to do a little digging on my own. This process calls for some work. That’s why I’ve saved it for last. I don’t advise moving ahead unless what you’ve heard about the Change to this point sounds good, and you are contemplating it fairly seriously.

There are two varieties of research that you might seek. One is a direct test of the Change. For example, suppose the Persuader is urging you to use Accelerated Reader (http://www.renlearn.com/ar/), a specific program for elementary reading. You could look for research studies that compare how kids read when that program is in use versus how they read when it is not. The other type of evidence you might seek doesn’t test the specific Change, but rather bears on a more general claim about how kids learn. For example, suppose that the Persuader urges you to use Accelerated Reader because it emphasizes reading practice. You might seek evidence on the importance of reading practice more generally, not just as it’s implemented in Accelerated Reader. Let’s start with finding research that tests a specific Change.

The goal here is to find original research, not what someone else has said about original research. Thus much of what you find in a regular Web search will not do: Web sites (even of reputable organizations), blogs, newspaper articles, and Wikipedia are all secondary sources. They might, however, prove useful if they cite original research articles.

Fortunately, there is another, direct way to locate material. There are several search engines available on the Internet that will help you locate relevant research articles. One of the best is Education Resources Information Center (ERIC; www.eric.ed.gov), which is maintained by the U.S. Department of Education. It provides a fairly comprehensive search of articles related to education. PubMed (http://www.ncbi.nlm.nih.gov/pubmed/) is maintained by the U.S. National Library of Medicine and by the National Institutes of Health. This database is very useful for more medically oriented articles (for example, on autism or attention deficit hyperactivity disorder).

Using either will be fairly intuitive to people accustomed to such search engines as Google or Yahoo. There is a search bar into which you type a few keywords. You’re looking for research testing the effectiveness of a Change, so the search term should just be the name of the program. If you want to know whether the Dore Program helps kids with ADHD, search on “Dore Program.” If you want to know whether Singapore Math boosts math achievement, search for “Singapore Math.” You do want to include the quotation marks; they limit the search to articles that contain the exact phrase “Singapore Math.” If you enter Singapore Math without them, the search will return articles that have the word Singapore and the word Math, and you’ll end up with articles that, for example, compare math achievement of kids in different nations, but nothing about that particular math program. For some searches, the difference won’t matter much, but it’s a good habit to adopt.
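The difference between a quoted and an unquoted search can be sketched in a few lines of Python. This is a simplified model, not a description of how ERIC or PubMed work internally (real engines also handle word endings, field limits, and ranking), and the two article titles are invented for illustration.

```python
import re


def words(text: str) -> list[str]:
    """Split text into lowercase words, ignoring punctuation."""
    return re.findall(r"[a-z0-9]+", text.lower())


def matches_all_words(title: str, query: str) -> bool:
    """Unquoted search: every query word appears somewhere, in any order."""
    title_words = set(words(title))
    return all(w in title_words for w in words(query))


def matches_phrase(title: str, query: str) -> bool:
    """Quoted search: the query words appear side by side, in order."""
    t, q = words(title), words(query)
    return any(t[i:i + len(q)] == q for i in range(len(t) - len(q) + 1))


# Invented titles, for illustration only.
titles = [
    "Effects of Singapore Math on fourth-grade achievement",
    "Math achievement in Singapore, Finland, and the United States",
]

query = "Singapore Math"
unquoted_hits = [t for t in titles if matches_all_words(t, query)]
quoted_hits = [t for t in titles if matches_phrase(t, query)]
print("Singapore Math   ->", len(unquoted_hits), "hits")
print('"Singapore Math" ->', len(quoted_hits), "hit")
```

The unquoted version also matches the cross-national comparison, because both words appear somewhere in its title; the quoted version keeps only the article about the program itself.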

If you’re having trouble finding articles, try to think of whether there is more than one term for what you’re interested in. For example, “whole word” reading could also appear under the terms “whole language,” “sight word,” or “look-say.” Google may be useful in finding such synonyms, and ERIC has a thesaurus that you can consult, so that you’ll have greater confidence that you’re using the right search term.

You will also want to limit your search to papers that have undergone peer review. You’ll recall that I mentioned peer review in Chapter Three; remember the discussion of the “cold fusion” scientists who held a press conference to announce their results, rather than submitting their findings to the criticism of their peers? I expect that by this point in the book, you have an even better understanding of why peer review is so important. The repeated theme in our discussions has been that our judgment is subject to a broad variety of biases, especially those that confirm our preconceptions and flatter our egos. Much of what we call scientific method consists of safeguards meant to increase the objectivity of those judgments. Planning and executing an experiment, analyzing the data, and writing a report—this process can easily take a year. Little wonder that, once it’s complete, a researcher is quite convinced that it’s really good. Needless to say, the study may contain mistakes, no matter how careful the researcher. What’s needed is a dispassionate expert to give it a careful read and to determine whether it is scientifically sound. That’s the point of peer review.

When you conduct a search on PubMed, almost all the articles that turn up are peer reviewed. Few medical journals will publish an article that has not undergone peer review,c and PubMed doesn’t catalogue articles from those journals. Education is a different matter. Many journals are not peer reviewed, and ERIC catalogues those. Fortunately, ERIC also makes it simple to limit your search to peer-reviewed articles—you need merely to check a box. Doing so makes a big difference in the number of articles your search returns. For example, when I search on “Orton-Gillingham” (a method of reading instruction), I get thirty-nine article citations. When I limit my search to peer-reviewed articles, I get six citations. If I search on “Bright Beginnings” (a preschool curriculum), I get twelve articles. When I limit my search to peer-reviewed articles, I get one.

If you take the simple measure of looking for research articles, you’re way ahead of the game, and you haven’t even read anything yet. The fact is that when most Persuaders say that there is scientific evidence supporting a Change, they are blowing smoke. There isn’t any.

If you do find some articles on the Change, don’t buy in just yet. You’ll want to have a look at the content of those articles. Fortunately, both ERIC and PubMed list abstracts of all the articles they have catalogued. The abstract is a summary of the article, written by the authors. Your main purpose is, of course, to know whether or not the Change works, and that’s typically pretty easy to discern from the abstract; that’s what everyone wants to know, so it’s very likely to be in the abstract.

Now, you can leave it there. You can just count the number of articles that conclude “the Change helped” or “the Change didn’t help,” and that’s a start. But you’re better off digging just a bit deeper by including some information that will qualify how you think about the results. To do so, you’ll probably need the full article, not just the abstract. The full article is sometimes downloadable directly from PubMed or ERIC. If it’s not, use a Web search engine (Google, Yahoo, and the like) to search for the author’s name. Researchers, especially those who are also college professors, frequently maintain personal Web sites from which you can download articles they have written. If there is no such site, you will likely find the researcher’s e-mail address, and you can request a copy. This is not unusual or impolite. Researchers are used to it. And if there is more than one author listed, you can write to any of them.

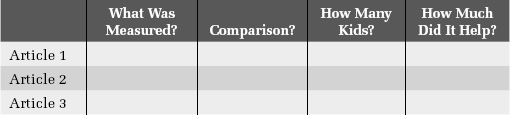

Once you have the full article, what exactly do you want to know? I suggest that you create a scorecard (Table 7.1).

TABLE 7.1: A suggested “scorecard” to keep track of research findings.

The authors will usually say plainly in the abstract whether or not the Change “worked.” But the authors’ definition of success may differ from yours. For example, at the start of this chapter I mentioned that the 2005 Brain Gym Web site listed some peer-reviewed research articles. True enough, but if you read the abstract of one of the listed articles, you’ll see that the outcome the researchers measured was response time—that is, they measured how quickly subjects could press a button in response to a signal.21 Subjects who underwent Brain Gym training were faster than those who had not undergone Brain Gym training. Unless your goal is to have students with really fast reactions, this article is not going to be relevant to your decision to use Brain Gym.

Another of the peer-reviewed articles that seems to support Brain Gym’s effectiveness used a different outcome, one more commonly reported in education research.22 The researchers report that the five students at a music conservatory rated Brain Gym as having had a positive effect on their playing. Participants also had a more positive attitude toward Brain Gym at the end of the experiment than at the start. But you want to know whether or not Brain Gym actually helps, not whether people think it does. And as we’ve seen, people who have invested their time in a Change are motivated to think that their time was well spent—that’s cognitive dissonance at work. Thus you shouldn’t be convinced by data showing that people liked a Change, or the close variant that is often reported: “94 percent of people who used this product said that they would recommend it to a friend!”

You should also make sure that there is a comparison group—in other words, there should be at least two types of students measured: those who participated in the Change, and another group that did something else. Why? Well, suppose that I tell you I measured math problem-solving skills in ten third-grade classrooms, first in the autumn and then again in the spring. All the classrooms used Dan’s SuperMath program, and scores on a standardized math test went from 69 percent in the autumn to 92 percent in the spring. Big improvement! But wouldn’t we expect scores to be higher after a year of schooling? The real question is not whether third-graders are better at math in the spring than they were in the fall—we assume they will be. The question is whether Dan’s SuperMath helps kids learn more math compared with whatever method is in place now. It seems pretty obvious when you spell it out, but it’s surprising how often an article trumpets “Kids learned more!” without answering the question “Compared with what?”
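The arithmetic behind “Compared with what?” can be made explicit. The Python sketch below uses the SuperMath scores from the example (69 percent in the autumn, 92 percent in the spring) and, as a purely hypothetical assumption, a comparison group of classrooms using the current method whose scores went from 69 percent to 90 percent over the same year. The quantity of interest is the difference between the two gains, not the SuperMath gain alone.

```python
def gain(autumn: float, spring: float) -> float:
    """Improvement from autumn to spring, in percentage points."""
    return spring - autumn

def added_benefit(change_scores: tuple, nochange_scores: tuple) -> float:
    """How much more the Change group improved than the comparison group did."""
    return gain(*change_scores) - gain(*nochange_scores)

supermath = (69, 92)       # autumn and spring scores from the example in the text
current_method = (69, 90)  # HYPOTHETICAL comparison classrooms, for illustration only

print(gain(*supermath))                          # prints 23: the "big improvement"
print(added_benefit(supermath, current_method))  # prints 2: the extra gain over the comparison
```

Without the comparison group, all you can report is the 23-point gain, most of which might have happened with any reasonable math program.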

The number of kids who were in the experiment also matters. Here’s a simpleminded example. Suppose I wanted to know how friendly University of Virginia (UVa) students are. I stand on the main quadrangle, stop a random undergraduate, and administer some test of personality, which we’ll assume is a valid measure of friendliness. If I do this with, say, five students, do I have a good estimate of the friendliness of UVa undergraduates? Of course not. I tested only five, so I might have, by chance, selected five extroverts or five odd ducks, or whatever. But if I test a larger number of students, the odds get better and better that the group I’m testing is similar to UVa undergraduates as a whole. In extremis, if I tested all 14,297 of them, I’d know exactly how friendly the average UVa undergraduate is, at least according to the test I’m using. If I randomly select 14,000 out of the 14,297, I’m obviously still very close to the true average—I’m still testing most of them. How small can the number get and still allow me to have some confidence that my result is not quirky? It depends on several factors that are too technical to go into here, but a decent rule of thumb is that you’d like to see at least twenty kids who have been exposed to the Change and twenty who were not.
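The effect of sample size is easy to see in a simulation. The Python sketch below invents a population of 14,297 friendliness scores (the bell-shaped distribution with an average of 50 and a standard deviation of 10 is an assumption made purely for illustration), then repeatedly draws random samples of different sizes and measures how much the sample averages bounce around.

```python
import random
import statistics

POPULATION_SIZE = 14_297  # UVa undergraduates, as in the text

def make_population(seed: int = 0) -> list[float]:
    """Invented friendliness scores; the mean of 50 and SD of 10 are assumptions."""
    rng = random.Random(seed)
    return [rng.gauss(50, 10) for _ in range(POPULATION_SIZE)]

def spread_of_sample_means(population: list[float], n: int,
                           trials: int = 2000, seed: int = 1) -> float:
    """Draw `trials` random samples of n students (without replacement) and
    return the standard deviation of their average scores."""
    rng = random.Random(seed)
    means = [statistics.mean(rng.sample(population, n)) for _ in range(trials)]
    return statistics.stdev(means)

population = make_population()
print(f"population average: {statistics.mean(population):.1f}")
for n in (5, 20, 100):
    spread = spread_of_sample_means(population, n)
    print(f"samples of {n:>3}: sample averages typically stray about {spread:.1f} points")
```

Running it should show the spread shrinking as the samples grow, roughly in proportion to one over the square root of the sample size: an average based on five students can easily land far from the true value, while one based on a hundred rarely does.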

You will see plenty of peer-reviewed studies that test far fewer than twenty kids per group. Such studies are valuable, but for different reasons. There is almost always a trade-off between the number of people tested and the richness and detail of the measures used. Studies that involve hundreds of kids will probably use a paper-and-pencil test, and the experimenters will have no information about how the kids thought about the questions. When you see a study with six kids, the experimenters have often had long conversations with each child about the Change, collected multiple measures of its consequences, and so forth. This sort of study can be very useful to researchers but is less useful to those who want to know whether the Change reliably helps kids.

Finally, you want to pay attention to how much the Change helped. It’s important to understand the logic behind the question “Did the Change make a difference?” As you’ll see in a moment, it is a rather crude question.

The statistical procedures used to answer that question have the following logic. I have two groups: one was exposed to the Change; the other was not. We’ll call that the NoChange group. Let’s say that the Change is supposed to help kids understand what they read. If the Change doesn’t work, then both groups should score the same on a reading comprehension test at the end of the experiment. Now of course it’s possible that the Change group will score higher just by chance. The test is not perfect, after all—it has some “static” in it, and maybe that will just happen to favor the Change group. Or maybe, even though I selected who was going to be in the Change and in the NoChange groups at random, the Change group happened to have a lot of kids who were good readers.

If the Change group scores a little higher than the NoChange group just by chance, I’ll draw the wrong conclusion. I’ll think that the reading program works. How can we protect ourselves from these possible chance occurrences? We say to ourselves, “Okay, maybe the Change group would, just by chance, do a little better on the reading comprehension test at the end of the experiment. But they would not do a lot better just by chance. So how about this: if they do a little better, I’ll ignore it—that is, I’ll say, ‘The difference in scores may just have been due to chance, so I’m concluding that the Change didn’t work.’ But if there is a big difference between the two groups, then I will have to conclude that it couldn’t be due to chance. The big difference in reading comprehension scores must be due to the Change.”

So far, so good. But here’s the complication. We just noted that how much “static” there is in a measure depends on how many people are in the group. If I test the friendliness of just five undergraduates, I know that I might, by chance, have picked a quirky group, whereas if I test one hundred undergraduates, it’s much less likely that the group is quirky. So let’s apply that to my comparison of the Change and the NoChange groups. If each group has one hundred people in it, and I see that the reading comprehension scores of the two groups are different, shouldn’t I be less worried that the difference is due to some quirk in one of my groups? And shouldn’t I be more worried that the difference between the groups is a quirk if I have just five people in each group?

The answer is “Absolutely.” And that factor is built into the statistical tests that almost everyone uses. As the number of people in each group increases, you set a lower and lower bar for how big the difference between the groups must be before you conclude “Wow, that difference is too big to have occurred by chance. The groups must be different, and that means the Change did something.”
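Here is a rough way to see this lowering bar. Under a simple two-group z-test at the conventional 5 percent level (a simplification of the t-tests most studies report, assuming equal group sizes and a reading test whose scores have a known standard deviation of 10 points), a difference counts as “too big to be chance” once it exceeds about 1.96 standard errors. The Python sketch computes that threshold for several group sizes.

```python
import math

def smallest_significant_difference(sd: float, n_per_group: int,
                                    z_crit: float = 1.96) -> float:
    """Smallest gap between two group averages that a two-sided z-test at the
    5 percent level would call significant, assuming equal group sizes and a
    known, shared standard deviation (a simplification of the usual t-test)."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    return z_crit * standard_error

TEST_SD = 10.0  # assumed spread of reading-comprehension scores, in points

for n in (5, 20, 100, 1000):
    threshold = smallest_significant_difference(TEST_SD, n)
    print(f"{n:>4} kids per group: a difference of about {threshold:.1f} points is needed")
```

The threshold falls from about 12.4 points with five kids per group to about 0.9 points with a thousand per group: the bigger the groups, the smaller the difference that earns the label “the Change worked.”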

Here’s why that matters for you. When you’re looking through these articles, the natural thing to do is to mentally categorize each study as showing “the Change worked” or “the Change didn’t work.” That’s fine, but note that “worked” really means that the authors were justified in concluding that “the difference between the Change and NoChange groups was so big that it was really unlikely to have happened by chance,” and that conclusion actually depends on two factors: how big the difference was between the groups and how many people there were in each group. So if there were lots and lots of people in the groups, a relatively modest difference in scores would still lead you to conclude that “the Change worked.”

Psychologists refer to this as the difference between “statistical significance” and “practical significance.” Statistical significance means that you’re justified in concluding that the difference between the Change and the NoChange groups is real, not a quirk due to chance. Practical significance refers to whether or not that difference is something you care about. As the name implies, that’s a judgment call. For example, suppose the Change is a new method of teaching history, and at the end of a thirty-two-week program, kids in the Change group scored 1 percent better on a history test than kids in the NoChange group. If there were a lot of kids in each group, that difference might be statistically significant, but you’d be unlikely to consider it practically significant. (Note that the opposite case is not possible. An experiment can’t show a practically significant difference between the Change and the NoChange groups that isn’t statistically significant, because without statistical significance you have no good reason to believe the difference is real in the first place.)
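The history-test example can be put in numbers. The Python sketch below treats “1 percent better” as a 1-point difference on a test scored in percent, assumes the scores have a standard deviation of 10 points, and uses the same simplified two-sample z-test as a stand-in for the tests researchers actually use. It also reports the effect size (the difference divided by the standard deviation, often called Cohen’s d), which does not depend on how many kids were tested.

```python
import math

def two_sample_z_test(diff: float, sd: float, n_per_group: int) -> tuple[float, float]:
    """Simplified two-sample z-test with equal group sizes and a known SD.
    Returns (z, two-sided p-value)."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = diff / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

def effect_size(diff: float, sd: float) -> float:
    """Standardized difference (Cohen's d): the group difference in SD units."""
    return diff / sd

DIFF = 1.0  # Change group scored 1 point higher (the text's example)
SD = 10.0   # assumed spread of history-test scores

for n in (20, 5000):
    z, p = two_sample_z_test(DIFF, SD, n)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"n={n:>4} per group: z={z:.2f}, p={p:.3g} ({verdict})")
print(f"effect size d = {effect_size(DIFF, SD):.1f}")
```

With 5,000 kids per group the 1-point difference is statistically significant; with 20 per group it is not. In both cases, though, the effect size is a tenth of a standard deviation, below the 0.2 that Cohen’s rough convention labels “small.” Statistical significance tells you the difference is probably real; only you can decide whether it is big enough to matter.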

How can you judge whether or not a result is of practical significance? That can be hard to tell if you’re not familiar with the measure. If it’s a history test that the experimenters themselves created, it’s hard to know what a 5 percent or a 15 percent improvement on the test means. If you can’t get a good feel for whether or not the improvement is of practical significance, make a mental note of this fact and, if you have the opportunity, raise this point with the Persuader. You need to know in terms that are familiar to you how much your child or your students are supposed to improve.

Most important, you need to consider practical significance in light of your goals. A Change may offer a guaranteed improvement in, say, students’ public speaking ability. The question is, How much do you need to invest in terms of time and resources to gain the guaranteed improvement? In education, a Change almost always carries an opportunity cost. That is, when you spend your time and energy on one thing, you necessarily have less time and energy for something else. So you need to decide whether this improvement in public speaking ability is going to be worth it. And that is a personal decision. The answer depends on your goals for education.

I’ve summarized the suggested steps for evaluating evidence in Table 7.2. As I did in Chapter Five, I strongly encourage you not only to carry out each action but to maintain a written record of the results.

TABLE 7.2: A summary of the actions suggested in this chapter.

| Suggested Action | Why You’re Doing This |
| --- | --- |
| Compare the Change’s predicted effects to your experience, but bear in mind whether the outcomes you’re thinking about are ambiguous, and ask other people whether they have the same impression. | Your own accumulated experience may be valuable to you, but it is subject to misinterpretation and memory biases. |
| Evaluate whether or not the Change could be considered a breakthrough. | If it seems revolutionary, it’s probably wrong. Unheralded breakthroughs are exceedingly rare in science. |
| Imagine the opposite of the outcome you predict for the Change. | Sometimes when you imagine ways that an unexpected outcome could happen, it’s easier to see that your expectation was shortsighted. It’s a way of counteracting the confirmation bias. |
| Ensure that the “evidence” is not just a fancy label. | We can be impressed by a technical-sounding term, but it may mean nothing more than the ordinary conversational term. |
| Ensure that bona fide evidence applies to the Change, not something related to the Change. | Good evidence for a phenomenon related to the Change will sometimes be cited as though it proves the Change works. |
| Ignore testimonials. | The person believes that the Change worked, but he or she could easily be mistaken. You can find someone to testify to just about anything. |
| Ask the Persuader for relevant research. | It’s a starting point for getting research articles, and it’s useful to know whether the Persuader is aware of the research. |
| Look up research on the Internet. | The Persuader is not going to give you everything. |
| Evaluate what was measured, what was compared, how many kids were tested, and how much the Change helped. | The first two items get at how relevant the research really is to your interests. The last two get at how important the results are. |

We’ve been through three steps in our research shortcut: Strip and Flip it, Trace it, and Analyze it. Now it’s time for the fourth and final step: making a decision.

a This bit of the play is actually written in a burlesque of Latin, to further ridicule the learned society of seventeenth-century physicians, so any translation can only be approximate.

b If placebos work, why don’t we just give everyone placebos? They don’t work for everyone. In fact, they work for a minority of people. But of course, you need only a few testimonials to make a Change look good!

c Whether or not an article undergoes peer review varies by journal. Either the journal editor always sends articles out for peer review, or never does. It’s not decided on a case-by-case basis.

Notes

1. Merton, R. K. (1973). The sociology of science. Chicago: University of Chicago Press.

2. All versions of the Brain Gym Web site were downloaded from the Wayback Machine (http://www.archive.org/web/web.php), which archives old versions of Web sites.

3. Chaker, A. M. (2005, April 5). Attention deficit gets new approach—as concerns rise on drugs used to treat the disorder, some try exercise regimen. Wall Street Journal, p. D4.

4. Hughes, J. (2002, September 7). Jane Hughes discovers how “Brain Gym” can help. The Times Magazine, pp. 64–65; Carlyle, R. (2002, February 7). Exercise your child’s intelligence. Daily Express, p. 51.

5. Goldacre, B. (2006, March 18). Brain Gym exercises do pupils no favours. Guardian, p. 13.

6. Reported in Randerson, J. (2008, April 3). Experts dismiss educational claims of Brain Gym programme. Guardian. See also O’Sullivan, S. (2008, April 6). Brain Gym feels the heat of scientists. Sunday Times, p. 4.

7. Brain Gym claims to be withdrawn. (2008, April 5). The Times of London, p. 2.

8. Clark, L. (2009, December 19). Brain Gym for pupils pointless, admits Balls. Daily Mail. http://www.dailymail.co.uk/news/article-1237042/Brain-gym-pupils-pointless-admits-Balls.html.

9. Retrieved from http://braingym.org/ on August 9, 2011. The 2010 revision of the book Brain Gym: Teacher’s Edition (Ventura, CA: Edu-Kinesthetics) still contains a lot of scientific inaccuracies about the mind.

10. Amethyst Initiative. (n.d.). Welcome to the Amethyst Initiative. http://www.amethystinitiative.org/.

11. Carpenter, C., & Dobkin, C. (2011). The minimum legal drinking age and public health. Journal of Economic Perspectives, 25, 133–156.

12. Watson offered his account of this competition in a controversial book: Watson, J. D. (1968). The double helix: A personal account of the discovery of the structure of DNA. New York: Atheneum.

13. There have been some studies that directly tested the efficacy of the Dore Program, and the results were published in professional journals. Reynolds, D., Nicolson, R. I., & Hambly, H. (2003). Evaluation of an exercise-based treatment for children with reading difficulties. Dyslexia, 9, 48–71; Reynolds, D., & Nicolson, R. I. (2007). Follow-up of an exercise-based treatment for children with reading difficulties. Dyslexia, 13, 78–96. These studies were later the subject of controversy, as a number of scientists stepped forward to question the research design. Bishop, D.V.M. (2008). Criteria for evaluating behavioural interventions for neurobehavioral disorders. Journal of Paediatrics and Child Health, 44, 520–521; McArthur, G. (2007). Test-retest effects in treatment studies of reading disability: The devil is in the detail. Dyslexia, 13, 240–252.

14. Rukeyser, M. (1968). The speed of darkness. New York: Random House.

15. Willingham, D. T. (2004, Summer). The privileged status of story. American Educator, pp. 43–45, 51–53.

16. Ayres, B. D., Jr. (1997, March 29). “Families learning of 39 cultists who died willingly.” New York Times. Available online at http://www.nytimes.com/1997/03/29/us/families-learning-of-39-cultists-who-died-willingly.html.

17. “Witter: Elle Macpherson” (2010, May 30). The Times of London. http://women.timesonline.co.uk/tol/life_and_style/women/fashion/article7139977.ece?token=null&offset=12&page=2.

18. Zubieta, J.-K., Yau, W.-Y., Scott, D. J., & Stohler, C. S. (2006). Belief or need? Accounting for individual variations in the neurochemistry of the placebo effect. Brain, Behavior, and Immunity, 20, 15–26.

19. Sandler, A. D., & Bodfish, J. W. (2008). Open-label use of placebos in the treatment of ADHD: A pilot study. Child: Care, Health and Development, 34, 104–110.

20. Sandler, A. (2005). Placebo effects in developmental disabilities: Implications for research and practice. Mental Retardation and Developmental Disabilities Research Reviews, 11, 164–170.

21. Sifft, J. M., & Khalsa, G.C.K. (1991). Effect of educational kinesiology upon simple response times and choice response times. Perceptual and Motor Skills, 73, 1011–1015.

22. Moore, H., & Hibbert, F. (2005). Mind boggling! Considering the possibilities of Brain Gym in learning to play an instrument. British Journal of Music Education, 22, 249–267.