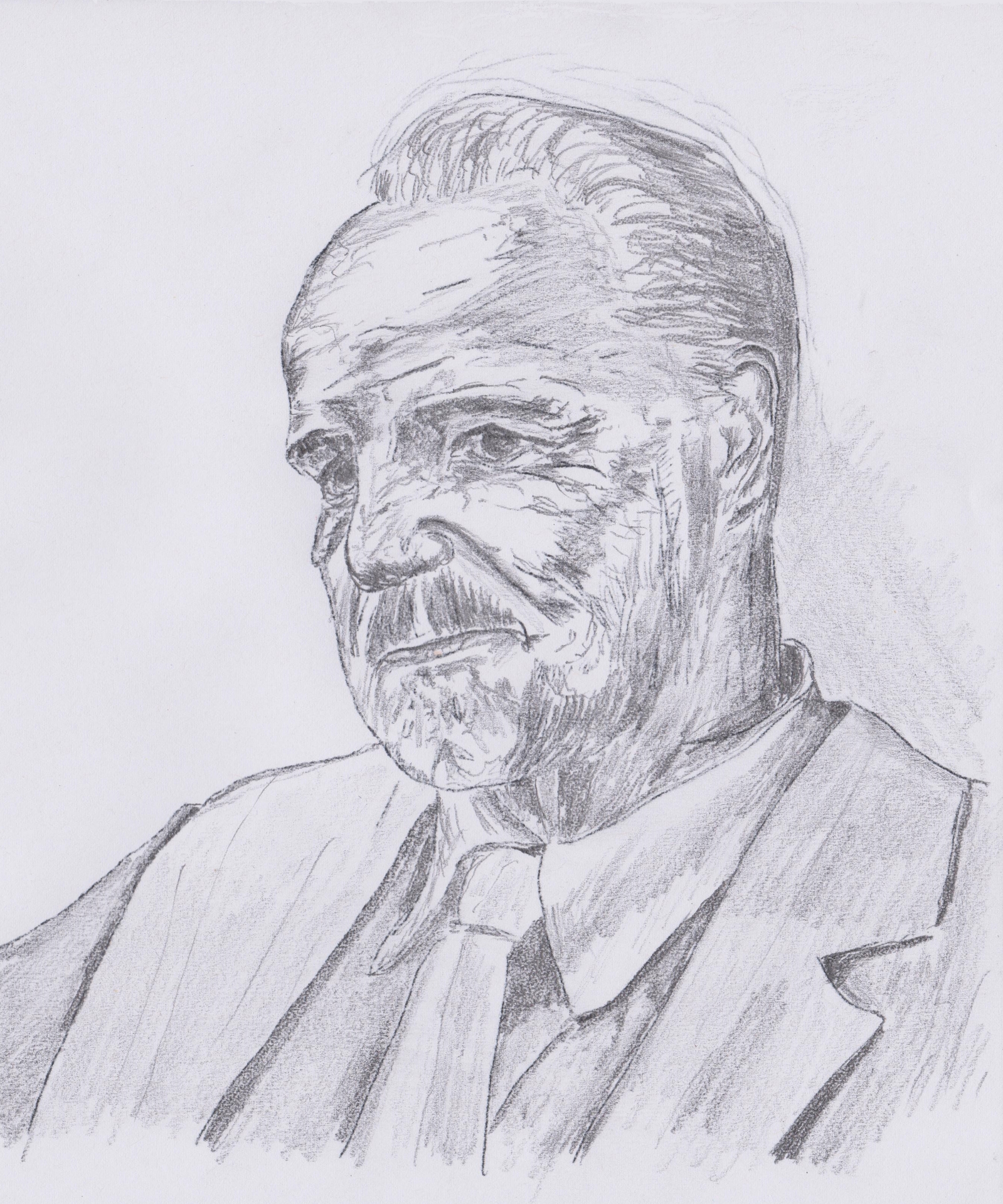

The above sketch is, however, of the Godfather of the street: Vito Corleone AKA Marlon Brando/Robert De Niro (you recognise The Godfather – and better show some respect!) (Drawing by the author.)

Mention should also be made of Yoshua Bengio, who established a laboratory called ‘LISA’ that has done much AI theoretical work – resulting in extensive publication and high-profile international conferences on deep learning (in collaboration with Yann LeCun). As a result of all this, Hinton, Bengio, and LeCun are sometimes referred to as the ‘Godfathers of AI’ or the ‘Godfathers of Deep Learning’. Godfathers indeed. But presumably the difference between these three Godfathers and The Godfather is that they: “…have not learned more in the streets than in any classroom”. The latter, of course, refers to Don Vito Corleone (born Vito Andolini).

In 2018 Hinton was awarded the Turing Award alongside Bengio and LeCun, in recognition of their work on deep learning. We should also not forget to mention Andrew Ng, who co-founded Google Brain – a pioneering large-scale distributed system for deep learning, which brought the ImageNet competition to an entirely new level.

But what, in simple terms, is convolution? A useful/intuitive way to think of convolution is as an operation that takes as input two functions: an input signal, and a filter; and outputs a third function that is a modified or ‘filtered’ version of the input. This idea has been used for some time in image processing, where specialized ‘filters’, also called convolutional kernels, have been designed to modify images. Most of us have used some form of image processing programmes in the form of software that might have been supplied with a digital camera bought for taking holiday snaps – or perhaps in the form of well-known packages that we have installed on our computer – such as, say, Photoshop® or PaintShop® Pro. When we use such software to perform operations such as image smoothing, sharpening and edge detection, what we are really doing is getting the programs to perform convolution, or filtering if you prefer, to generate the effects we desire. Of course, when using such packages we generally don’t need to be concerned with the maths behind the convolution – or, say, the exact configuration of the masks employed in the filtering operations. But to give a very brief summary, what the program is doing in convolution is applying a small mask (or ‘kernel’) iteratively across the image, and each time the image pixel in the location of the centre of the mask is changed – depending upon the values in the kernel and the pixel values in the image. Another way of explaining this is to say that in convolution, or image filtering, the value of each of the pixels is modified slightly, depending upon the filter being employed, and the values of the pixels surrounding the pixel being modified.
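For the curious, the mask-sliding procedure described above can be sketched in a few lines of Python. This is only a minimal illustration, not what Photoshop or any real package does internally: the function name `convolve2d`, the tiny 5×5 test image and the two masks are all invented for the example, and border pixels (where the mask would hang over the edge of the image) are simply left unchanged, which is one convention among several.

```python
def convolve2d(image, kernel):
    """Slide a small square mask across a 2D image (a list of rows of numbers).

    Each interior pixel is replaced by the weighted sum of itself and its
    neighbours, with the weights taken from the mask. Strictly speaking the
    mask is not flipped here (so this is 'cross-correlation'); for symmetric
    masks like the two below, the result is identical to true convolution.
    """
    k = len(kernel) // 2                    # half-width: 1 for a 3x3 mask
    height, width = len(image), len(image[0])
    output = [row[:] for row in image]      # copy, so the input is untouched
    for y in range(k, height - k):          # skip the border rows
        for x in range(k, width - k):       # skip the border columns
            total = 0.0
            for dy in range(-k, k + 1):
                for dx in range(-k, k + 1):
                    total += kernel[dy + k][dx + k] * image[y + dy][x + dx]
            output[y][x] = total            # new value at the mask's centre
    return output


# Hypothetical test image: black (0) with a single bright pixel in the middle.
image = [[0] * 5 for _ in range(5)]
image[2][2] = 9

# Smoothing mask: every pixel becomes the average of its 3x3 neighbourhood.
smooth_mask = [[1 / 9] * 3 for _ in range(3)]

# Edge-detection mask: responds where a pixel differs from its neighbours.
edge_mask = [[0, -1, 0],
             [-1, 4, -1],
             [0, -1, 0]]

smoothed = convolve2d(image, smooth_mask)
edges = convolve2d(image, edge_mask)

print(smoothed[2][2])  # the bright pixel is spread out over its neighbours
print(edges[2][2])     # the edge mask responds strongly at the bright pixel
```

With the smoothing mask, the single bright pixel is smeared evenly over its 3×3 neighbourhood; with the edge mask, the response is large at the bright pixel and negative immediately next to it. The same loop produces both effects, and only the numbers in the mask differ.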

In the case of CNNs, the kernels are known as ‘feature detectors’. The output from the convolution operation is often called a ‘feature map’ and can be used as the input to traditional machine learning/classification algorithms for feature detection – to enhance the performance of the model. So, in comparison to ‘traditional’ neural networks, the CNN is a new type of neural network that is trained using backpropagation and which has a new architecture. It consists of several ‘convolutional layers’, each containing several separate convolutional filters that each output a feature map from the layer’s input image. These are then stacked together and sent to the next layer. Also, the network has several ‘fully connected layers’, similar to those traditionally found in neural networks. This creates a powerful image classification model that combines the feature detection properties of convolutional filters with the versatility of neural networks. I hope that is clear. Any further questions can be directed towards one of the three Godfathers of Deep Learning. And as long as you don’t subsequently find a horse’s head in your bed, all should be OK.
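For readers who would rather see the layers than consult a Godfather, here is a toy sketch of the architecture just described: one convolutional layer holding two filters, whose feature maps are stacked and passed to a single fully connected layer. Everything in it is an assumption made for illustration. The function names, the 4×4 image, and the hand-picked filter and layer weights are all hypothetical; a real CNN would learn its weights by backpropagation and would have many more layers. The ‘ReLU’ step (setting negative responses to zero) is a common choice in practice but is not something the text above specifies.

```python
def feature_map(image, kernel):
    """Apply one filter wherever it fits entirely inside the image, then ReLU."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    result = []
    for y in range(rows):
        row = []
        for x in range(cols):
            total = sum(kernel[i][j] * image[y + i][x + j]
                        for i in range(k) for j in range(k))
            row.append(max(0.0, total))  # ReLU: keep only positive responses
        result.append(row)
    return result


def conv_layer(image, kernels):
    """One convolutional layer: each filter produces its own feature map."""
    return [feature_map(image, kernel) for kernel in kernels]


def flatten(maps):
    """Stack the feature maps into one long list for the next layer."""
    return [value for fmap in maps for row in fmap for value in row]


def fully_connected(inputs, weights, biases):
    """A traditional layer: each output is a weighted sum of all the inputs."""
    return [sum(w * v for w, v in zip(weight_row, inputs)) + bias
            for weight_row, bias in zip(weights, biases)]


# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1] for _ in range(4)]

# Two hand-picked 2x2 'feature detectors' (a trained network would learn these).
vertical_edge = [[-1, 1], [-1, 1]]
horizontal_edge = [[-1, -1], [1, 1]]

maps = conv_layer(image, [vertical_edge, horizontal_edge])  # two 3x3 maps
features = flatten(maps)                                    # 18 numbers

# Hand-picked weights: output 0 sums the first map, output 1 sums the second.
weights = [[1] * 9 + [0] * 9,
           [0] * 9 + [1] * 9]
biases = [0, 0]

scores = fully_connected(features, weights, biases)
print(scores)  # score for 'vertical edge' first, 'horizontal edge' second
```

Because the test image contains a vertical boundary and no horizontal one, only the first filter responds, so the first score is large and the second is zero. Training a real network amounts to finding filter and layer weights that make this kind of separation happen for photographs rather than for a sixteen-pixel toy.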

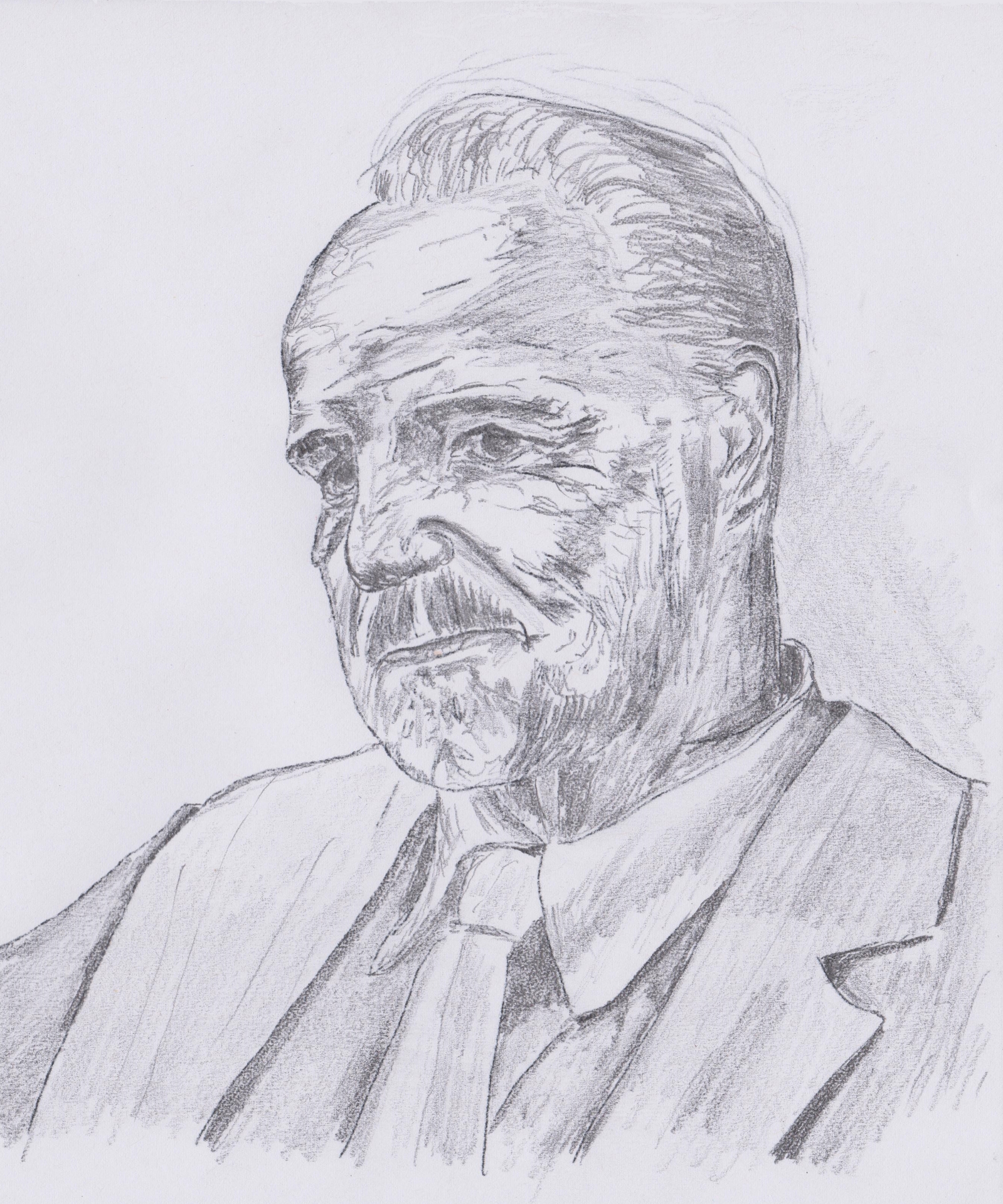

In the film The Godfather, Jack Woltz was not 100% happy to wake up and find a horse’s head in his bed. (Pen and ink drawing by the author.)

That was a joke – you are not going to find a horse’s head in your bed. But I was just reading about that scene in the film The Godfather: “This is an iconic moment. Jack Woltz wakes up with a horse head in his bed, showing him that the Corleones mean business. Apparently, this forces Woltz to give Johnny Fontane (cough Frank Sinatra cough) a part in a movie that will make him a star, despite his personal distaste for Fontane.” Either that or it was seriously poor housekeeping/carelessness on the part of the maid. On another web page, someone goes on to ask whether the horse head that appears in The Godfather is real and they are answered thus: “While a fake head was used during rehearsals, Coppola swapped it with a real one for the actual shot, meaning the screams you hear from actor John Marley were absolutely genuine.” Wow, I guess that actor was really suffering for his art – after shooting that kind of scene it’s a wonder he can get any sleep. In any case, putting horse heads into people’s beds is not the modus operandi of the Godfathers of Deep Learning. They would be more likely to want to put some extra memory into their computers.

While we are on the subject of computer upgrades, the final development, in 2009, was to employ graphics processing units (GPUs) as general-purpose processors for training large machine learning models. So, finally, all of the development money and effort that has been poured into gaming development in recent years has found a useful application – and one that does not involve having to shoot as many Nazis per minute as possible (or whatever the hell else is going on in video games nowadays). Use of GPUs in deep learning enables great reductions in training times, so that a network that would previously take months to train can now be trained in days. This means that the use of exceptionally large networks is possible – which has led to a period of rapid development of CNN architectures. The result has been a dramatic increase in the performance of vision systems, and the subsequent research into the application of CNNs across a wide range of sectors that we see today. As mentioned above, there has been only one significant drawback to the CNN approach – traditionally, very large amounts of training data have been required to train networks that are sufficiently complex to accurately and reliably perform useful tasks in the real world. We can now introduce two techniques that have been developed to address this: transfer learning and data augmentation.