Dr Richard Daystrom – the electronics/computing genius who (happily) created many of the computer systems on the Enterprise but who went on (less happily) to create the ultimate computer – the M5. In the episode ‘The Ultimate Computer’, Daystrom is played (rather well) by William Horace Marshall. (Drawing by the author.)

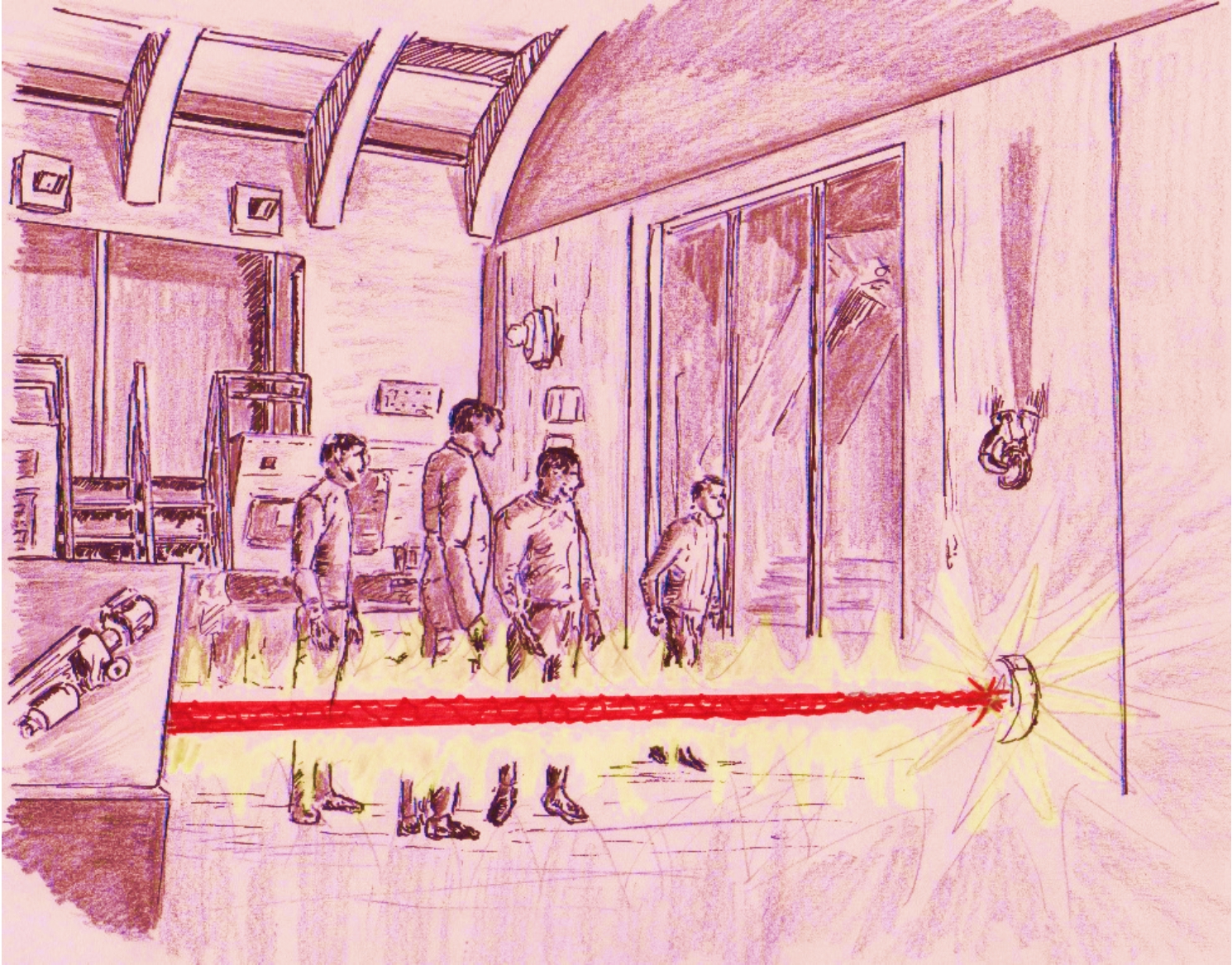

The M5 seeks a new power supply – killing a crewman in the process. (Note details of Enterprise Engineering section in the background.) (Illustration by the author.)

Coming back to our century, all that deep learning and CNNs can do is identify and recognise patterns, and in many cases they do this as well as or better than humans; but, as discussed, this does not mean they have human intelligence. Acting on the assumption that they do can lead to spectacular failures. A good example is IBM’s Watson computer, which successfully competed on the US TV quiz Jeopardy! in February 2011. Watson was a supercomputer running software called ‘DeepQA’, developed by IBM Research. While the grand challenge driving the project was to win on Jeopardy!, the broader goal of Watson was to create a new generation of technology that could find answers in unstructured data more effectively than standard search technology.

There was much excitement about the commercial potential of Watson and, in 2013, the MD Anderson Cancer Center launched a “moon-shot” project: to diagnose and recommend treatment plans for certain forms of cancer using IBM’s Watson cognitive system.

IBM’s Watson computer. (Pen and ink drawing by the author.)

But in 2017, the project was put on hold after costs went beyond $62M without the system having been used on a single patient. Why did Watson succeed with the game but fail in the real-world application? There could be many detailed explanations for this, but most, if not all, are related to the observation that games operate with a limited set of clearly defined rules. In the case of some games, such as the chess mentioned above and the Jeopardy! quiz, the game can appear to be very complex, but this is only because of the highly extensive nature of the data that can be associated with the game (in the case of chess, the enormous number of possible move combinations, and in Jeopardy!, the vast number of natural language phrases that need to be analysed to identify the various patterns/connections). In reality, the actual rules of how the chess pieces move or how the Jeopardy! phrases are generally related are relatively simple and straightforward. So, in the world of games, we are operating in a simplified universe, where data might be extensive or complex, but where they can be analysed in relation to a relatively simple set of rules or potential associations. In contrast to this, in the real world, where you and I live and where problems such as medical diagnoses need to be solved, complexity and non-standardisation, in virtually every aspect of data capture and procedures, are the norm. Computer systems, however powerful they are, are just not as good as humans at interpreting, understanding, and drawing significant conclusions from data in the forms in which they are usually employed by humans. This was illustrated when Watson processed leukaemia patient health records, which contained missing and/or ambiguous data and were sometimes out of chronological order.

It may well be that the relative failure of IBM Watson has more to do with shortcomings in the data it was presented with, and strict adherence to the limitations of standard practice in medical research, than with limitations in the computer’s ability to process data and identify relationships/patterns. Or, to put it another way, medical research methodologies in health care can be oriented more towards reverence for current practice than towards regarding data as king. This seems, again, to be associated with medical conservatism and resistance to new thinking – even when supported by data (why should I wash my hands, Vitamin C is only a dietary supplement, and aspirin a painkiller – they can’t help fight cancer, and so on…). In the current health care environment, even if Watson had formed recommendations for patient treatment based on analysis of vast amounts of past patient data, they would not have been accepted unless linked to official medical guidelines and the accepted conclusions of clinicians from the existing medical literature. To be fair to the medical establishment, the problem with purely studying patient data is that there may be significant complications/irregularities within and between patient cohorts that make it difficult to draw reliable conclusions. This is why so much emphasis is placed within medical research on consistency within patient cohorts and the use of randomised trials – so that complicating variations in circumstances between patients can be, as far as possible, minimised.

I have, myself, experienced the limitations that are placed on automated diagnoses as a result of non-standardised data. Some years ago, I undertook a review of the extent to which computer vision techniques had been usefully applied to detect skin cancer from analysis of images of patient moles. My finding was that many of the computer vision techniques showed promise for this very important application, but at that time a useful clinical role for the technology had not been demonstrated. The reason for this lay not in limitations of the computerised image analysis software, but in the variations in the data resulting from non-standard ways of capturing the images of the moles. Variation arose from the use of different camera resolutions and lens set-ups, changes in lighting, distance from the skin and so on.

These observations led to the development of the Skin Analyser, which employs a standard camera and lighting configuration each time a mole is imaged. This provides repeatability in image capture, which enables change to be detected – an important indicator of possible skin cancer. In fact, I am convinced that combining the data from this device with deep learning can provide a reliable method for automating diagnosis of skin cancer – which for me is a very exciting possibility, but a subject that could itself form the basis of a whole book.

The Skin Analyser employs a standard camera and lighting configuration that provides repeatability in image capture, thereby enabling change to be detected – which is an important indicator of possible skin cancer. (Photograph by the author.)

Therefore, if there is a problem, it does not really lie with Watson or IBM, but with limitations in the way medical data are currently captured. If AI is to realise its full potential and transform medicine, the computer systems need access to many more categories of factors than are currently represented in a typical clinical trial. This, of course, requires data capture and access on a vast scale, and so will need enormous investments in relevant technologies for capture and processing, along with relevant infrastructure. However, objective analysis of vast amounts of reliable and standardised patient data in an automated system offers great potential for new insights into disease causes and cures, in a way that could constitute truly personalised care. In 2020, the UK National Health Service announced a £250 million investment into new ways of applying emerging AI techniques to healthcare – one hopes this will form a first step along this road.

Not that IBM Watson is a complete failure: some physicians are finding it useful as an instant second opinion that they can share with nervous patients, and quite good sales of it have been reported in Asia. It’s just that, although Watson had demonstrated impressive natural language processing capabilities, after the $62M direct investment (and billions of dollars spent on company acquisitions that were expected to support the project), as well as over four long years of hard work, IBM did not create the super doctor it had aimed for.

Is it possible to be even more precise as to why? Yes, it’s because they didn’t call it ‘IBM Holmes’! Or, since they were in the USA, perhaps they could have called it ‘IBM Sherlock’.

A sketch of Dr John H. Watson’s service revolver. In ‘The Adventure of the Speckled Band’, Sherlock Holmes tells Watson that “An Eley’s No. 2 is an excellent argument with gentlemen who can twist steel pokers into knots”. (An Eley No. 2 is a type of cartridge, rather than a gun.) (Drawing by the author.)