Take a systems-based long-term approach and think big

"It is difficult to think of a major industry that AI will not transform. This includes healthcare, education, transportation, retail, communications, and agriculture. There are surprisingly clear paths for AI to make a big difference in all of these industries."

- Andrew Ng

What are the most promising future applications of AI? Michael Wu endeavoured to answer this question in his keynote session, “How AI Enables the Future of Business Intelligence,” at the MDM Analytics Summit 2018 on Wednesday, 19th September in Denver. Here is part of what he said:

“AI is being used in a wide variety of ways by businesses across every sector. However, these are the four most prominent – and promising – applications of AI in business today:

1. Fully autonomous systems

Machines are learning to act and react on their own. This application is largely driven by the rise of self-driving cars, where the AI is constantly learning and ultimately enabling the machine to know how to react to all the potentially different environments and circumstances.

A similar application is relevant for distributors as well, particularly within fulfilment centres. For example, sensors within Internet of Things (IoT) devices can detect inventory levels and automatically place orders, automating the fulfilment process and enabling businesses to run more effectively.

2. Human-computer interface

AI is changing how humans and businesses interact with machines. Think of computer assistants like Alexa, Siri, and other chatbots. This form of perceptual AI mimics higher cognitive functions of humans and can be used in the form of “intelligent digital assistants” to help companies boost productivity.

3. Online personalization

AI can drive recommendation engines, helping companies like Amazon, Netflix, and Spotify learn from users’ online behaviour to deliver highly personalized suggestions. Companies can leverage this application of AI to deliver personalized recommendations over time, improving the customer experience.

4. Automating business decisions

Organizations are using AI to improve their systems and gain a competitive advantage. The learning loops in this application of AI can give distributors data-driven insights that enable them to make better-informed decisions and identify opportunities to grow revenue.

By better understanding the different applications of AI, business leaders can determine which is right for their business. Only with this foundation of knowledge can they begin reaping the benefits of this technology.”

Wu may be largely right; however, with the possible exception of self-driving cars, which represent a significant and possibly life-changing technology (that is, once it works reliably and safely on our overcrowded and complex road networks), the other applications he mentions amount to AI providing somewhat increased levels of efficiency or productivity. But if I am ever to stand any chance of making a private trip to Mars, there need to be revolutionary technologies.

It’s not enough to just arrange a tweak of what we already have, but instead we need new types of systems that deliver novel and advanced capabilities in the form of automated solutions to complex and significant challenges. The four things that Wu refers to; specifically fully autonomous systems, human-computer interface, online personalization and automating business decisions could, for example, take the form of slight modifications to an online retailing system, such as www.amazon.com, that would be undertaken to improve the shopping experience for the customer and to enhance the seller’s business operation. But what I am suggesting is needed is more of a revolution in technological solutions associated with the

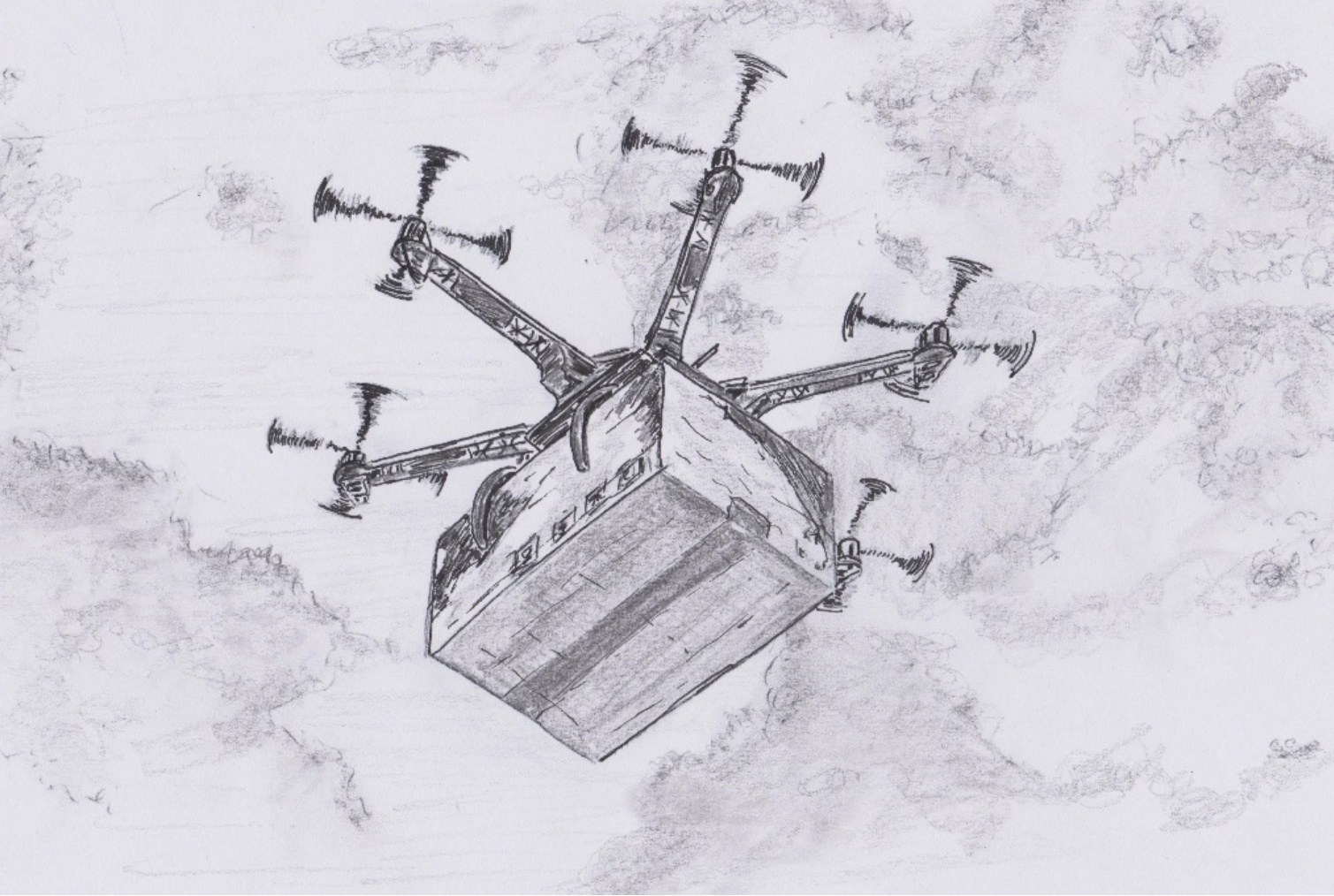

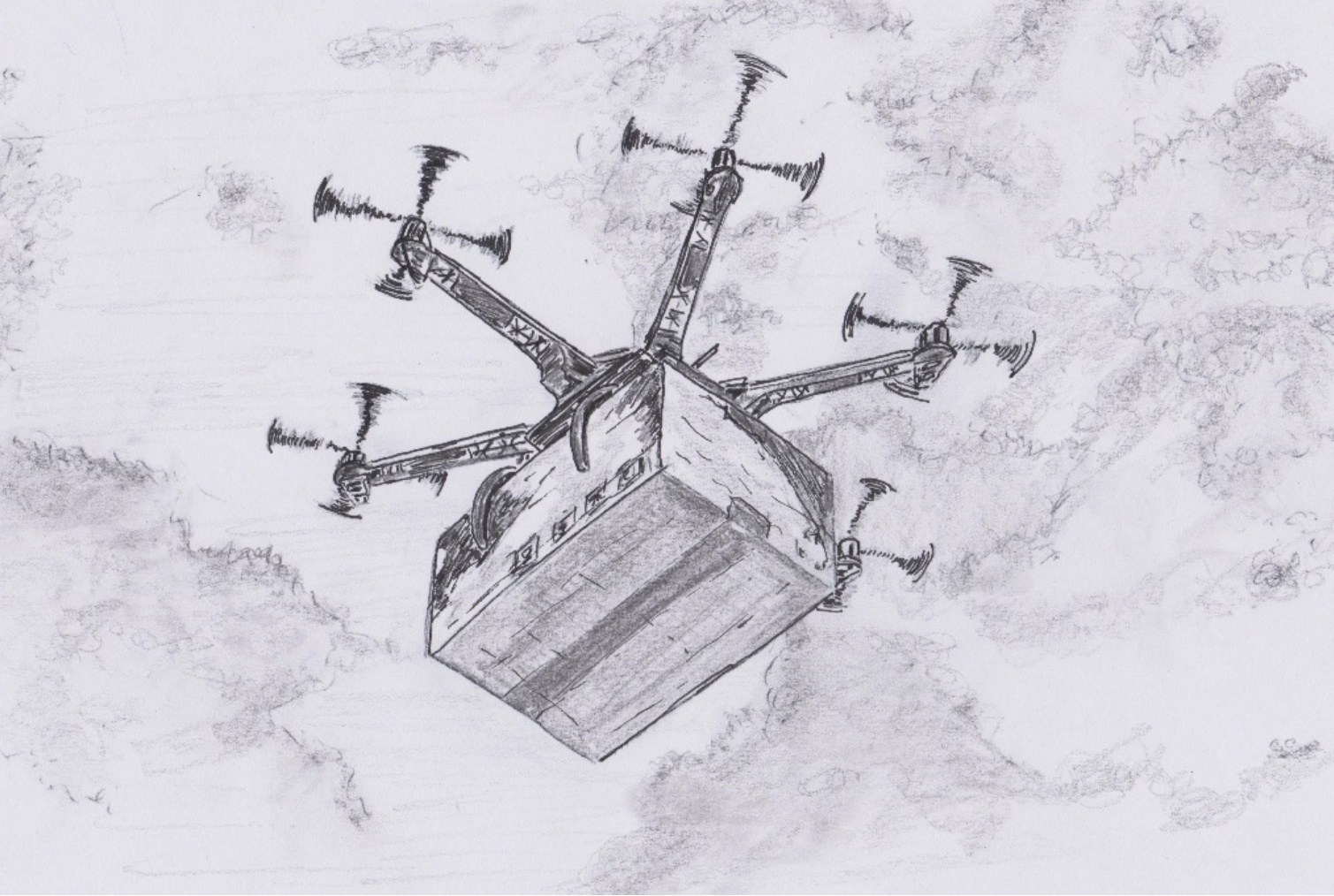

business operation. For the case of buying some items from Amazon, the tweak might be to have a better concept of the kinds of products the buyer is interested in and so more closely align online shop advertisements to those interests. Rather than just achieving this, the technological revolution would be something more like arranging for the items to be delivered to the customer automatically by AI-guided drones employing GPS and advanced vision capabilities.

By the way, for such a delivery the last mile and, in particular, the last few dozen yards would be the most critical part. This is because, for current manual delivery, the last mile often accounts for up to half of the costs, and it is also in close proximity to a person’s home that GPS will prove less useful and advanced vision will be needed, employing AI for complex scene understanding and for identifying exactly where the package should be delivered.

Therefore, to facilitate new high-performance and high-impact technologies that offer the potential to improve our lives, we need to look beyond searches and analyses of databases, such as those relating to online customer interests and shopping behaviours. Instead, we need to employ autonomous systems that will typically be multi-sensorial and that are very likely to employ vision systems powered by cutting-edge AI techniques such as convolutional neural networks (CNNs). This point is worth dwelling upon, since most research being undertaken in fields such as computer vision tends to concentrate on database analysis rather than on the guidance of multisensory autonomous systems. If we were not feeling charitable, we might say that this is because processing databases is easier to do.

Automatic drones; the future of delivery? (Illustration by the author.)

Yes folks, inputting data that already exists for you in a formatted and standard fashion is certainly going to be easier than developing your own system that can capture new data for you. Not that performing research on existing datasets is a complete waste of time; as mentioned above, it was, after all, studies on the reliability of face recognition for well-known face datasets that originally led to the breakthrough in the use of CNNs for pattern recognition.

But what taking a systems-based approach and thinking big really means is recognising that the world is a very complex place and that, in order to generate new types of automated systems that can successfully operate in the real world, providing real benefits, the systems need to be able to interact with that world. Although the level of interaction might vary greatly depending upon the task to be undertaken, some ability to capture and interpret new data in a real-world interaction is definitely needed. The relative lack of emphasis placed by, say, university researchers on real-world autonomous systems, in comparison to the extensive studies on existing datasets, may help to explain why, despite the great promise of advanced capabilities arising from the marriage of modern high-performance cameras with AI, the actual impact, i.e. the number of autonomous vision-guided systems now usefully operating in the field, is so low.

Perhaps we should again refer to the canon for guidance. In ‘The Adventure of the Greek Interpreter’, Holmes says that his brother, Mycroft, is his superior in observation and deduction and then goes on to say: "If the art of the detective began and ended in reasoning from an armchair, my brother would be the greatest criminal agent that ever lived. But he has no ambition and no energy. He will not even go out of his way to verify his own solution and would rather be considered wrong than take the trouble to prove himself right. Again and again, I have taken a problem to him and have received an explanation which has afterwards proved to be the correct one. And yet he was absolutely incapable of working out the practical points which must be gone into before a case could be laid before a judge or jury."

Mycroft Holmes, whom Holmes refers to as his elder brother and his ‘superior in observation and deduction’. (Drawing by the author.)

Holmes is saying that although his brother may be a brilliant reasoner, this is of little practical use due to his lack of application.

The same is true of AI-driven vision systems. Although we may generate some very powerful scene analysis algorithms, in practice these will be of limited utility if we don’t get out in the field and use the system with new data from the particular situation that is of interest to us. And from experience I can confirm that there are many potentially very important situations where the marriage of high-performance imaging and state-of-the-art AI can provide solutions that may have the greatest significance in the long term.

It’s not just commonly encountered problems that we can address, such as the location of weeds in grass so that we can eliminate them without spraying herbicide everywhere. No, there is a vast array of applications in a very wide range of sectors; to name just a few I have been involved with: animal identification and condition appraisal, monitoring of building site operations to assist with logistics and improve productivity, plant phenotyping, 3D face analysis at airports, 4D face analysis to identify medical conditions and to assess surgical outcomes, non-contact breathing monitoring, skin cancer detection, used book identification and condition appraisal, identification of security features on banknotes and wear classification, metal powder characterisation, measurement of airfreight dimensions, non-contact wheel alignment, scratch detection on polished stone, assessment of aggregate morphologies, 3D face recognition for ticketless travel with gateless gate lines on the London Underground … which brings us back to Holmes (did he not travel by steam on the Bakerloo Line?).

Early London Underground ticket. (Drawing by the author.)

So, yes, it would be great to be able to be transported to Mars, but to do so, we can’t rely on ‘reasoning from an armchair’.