A scene from the film Forbidden Planet, in which the spacecraft United Planets Cruiser C-57D is landing on the planet Altair IV. (Illustration by the author.)

Another scene from the film Forbidden Planet: Robby the Robot, Lt. ‘Doc’ Ostrow (left) and Commander Adams (right). (Pen and ink by the author.)

Twilight Zone made extensive use of props and costumes originally created for Forbidden Planet, perhaps the most memorable of which were Robby himself (or itself) and the film’s saucer spaceship (United Planets Cruiser C-57D). In 2017, at the age of 61, Robby was sold by Bonhams in New York for $5,375,000, making him one of the most expensive movie props ever sold.

MGM’s Robby the Robot may well have been influenced by Isaac Asimov’s seminal robot stories, in particular the short story ‘Robbie’, which was first published in 1940 and later collected in his 1950 robot anthology I, Robot. It turns out that Isaac Asimov had a significant influence on Hollywood science fiction. He took an interest in Star Trek from its earliest days and used to say that it was his favourite TV show.

Later, in the STNG episode ‘Datalore’, we are told that the android Data is the result of Doctor Noonien Soong’s desire “to make Asimov’s dream of a positronic brain come true”. Data was, in fact, a very capable android and one of the main heroes of STNG, subsequently becoming a firm favourite with the show’s multitudinous fans.

A robot that features in a more recent film is TARS, one of four former U.S. Marine Corps tactical robots (along with PLEX, CASE and KIPP) that appear in the science fiction film Interstellar.

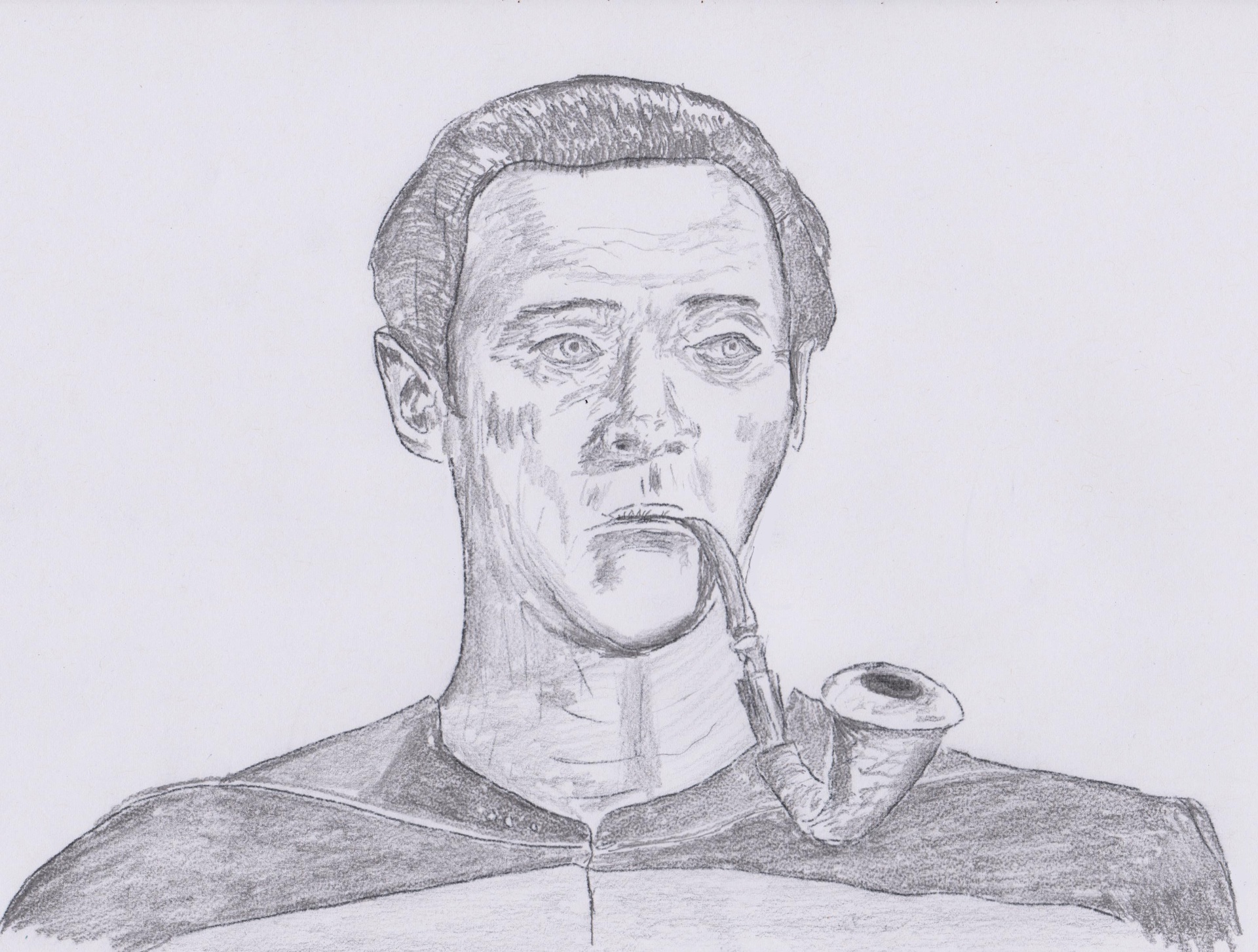

The android Data, from Star Trek: The Next Generation, who has no emotions but, as you can see, did have an interest in Sherlock Holmes and was given, on occasion, to masquerading as the great detective. This illustration combines three of the major themes of this book: robotics, Star Trek and Sherlock Holmes. (Drawing by the author.)

I must say I enjoyed the sequences in this film that featured TARS; in fact, I thought the whole film was great: loads of interesting physics content (including time dilation), direction by Christopher Nolan and acting from the likes of Matthew McConaughey and Michael Caine. What more could you ask for? One thing I would say, though, is that the grandeur of Robby derives from a real physical presence, whereas TARS appeared somewhat more dependent upon cinematographic special effects. For me, the former will always make much more of an impression.

Why, you may be asking, have we gone into such detail regarding robot depictions in science fiction films? The reason is that these and other films and TV shows have presented robots in very impressive and believable roles. The ‘magic’ of moviemaking has set our expectations very high, so when we see what current robots can actually do, it can be somewhat disappointing. Aside from some specialised tasks such as vacuuming or lawn mowing, most domestic robots only really excel at arm-waving, while industrial robots are best suited to pick-and-place operations or moving through fixed, repetitive trajectories.

In the late 1980s, there was something of a boom in industrial robotics; I was in fact part of it, working at Cranfield University with a number of robot/automation companies. Unfortunately, as mentioned earlier, it didn’t last. The boom largely turned to bust because expensive robots were frequently employed to undertake simple tasks that could have been achieved at much lower cost with simpler equipment. In short, robots looked like a solution in search of an application. The chief problem then was that re-programming industrial robots was so expensive that they were often scrapped at the end of their first use. In other words, software limitations meant that their flexibility was not being exploited: they were being employed as hard automation, and in that role they looked expensive. However, the AI breakthroughs discussed in this book, such as convolutional neural networks (CNNs), may enable this software hurdle to be overcome.

So much for past events; what about the future and, intriguingly, the far future? Will robots be able to undertake anything like the kinds of impressive and wide-ranging tasks they commonly accomplish in the movies? What will they look like, and do films give us any realistic indications? Is there any chance that the dominance of mankind could be threatened by robots: are they our servants or usurpers? Questions like these make me think of the Twilight Zone episode ‘To Serve Man’. In it, after tall, somewhat weird-looking aliens known as Kanamits land on Earth, the humans have trouble deciding whether they are a threat. When a Kanamit book entitled To Serve Man is discovered, everyone thinks all is well and that the Kanamits genuinely intend to bring humanity the benefits they have been promising. But after someone manages to translate some of the book, you can imagine their consternation when they discover that it is in reality a cookbook! Thanks to this final dramatic twist, it is one of the most famous episodes of the series. By the way, since Twilight Zone was filmed at MGM’s studios, you will not be surprised to learn that the spacecraft the Kanamits travel in is the same one that appears in Forbidden Planet, the United Planets Cruiser C-57D (it also appears in many other Twilight Zone episodes).

Robot intelligence

“I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way similar to the human brain. That is the goal I have been pursuing. We are making progress, though we still have lots to learn about how the brain actually works.”

- Geoffrey Hinton

There is no reason why the robots of the future should not be multi-purpose machines. As mentioned above, the chief problem with robots up to now has been the prohibitively high cost of re-programming them for an altered task. This leads companies, such as auto manufacturers, simply to buy a new set of robots (and all the necessary ancillary equipment) every time the robots’ tasks change even somewhat. As you can imagine, this is not a particularly economical solution, and it was factors such as this that led to the bursting of the robotics investment bubble around 1990 (which I have already alluded to a couple of times), with all its attendant disappointments. In contrast to this rather unprepossessing past, the future for robot re-programming is bright, since we can expect it to be largely automated. All that is needed for this is the implementation of effective machine learning algorithms and the availability of vast amounts of data relevant to the task. Drawing on historical data from online databases might help with the latter but, perhaps more importantly, the new robot could learn on the job, just as humans have done for centuries. This could be called, if you will, robot apprenticeship, or work-based learning (WBL) for robots (WBL is something that has come into fashion in tertiary education in recent years). The great advantage of this approach is that, as the new robot is trained and generates new data, it would not be long before its knowledge and expertise exceeded that of the older robot guiding it through the training. After a while, its knowledge would also exceed that of any human who has undertaken the same task. This illustrates how, in the not-too-distant future, robot knowledge can be expected to exceed that of humans.

This is not to say that such a robot would have greater intelligence than humans, or indeed intelligence at all as we understand it. Put another way, it is not clear whether robots will be capable of independent original thought; we can’t say for certain that robots will come up with new and innovative ideas, since it’s just too early in the history of real AI. (I define real AI as the capability of a computer to recognise patterns with similar or greater skill than humans, while also being able to handle data that contain a great deal of presentational variation, such as we see every day in the real world.)

Two important approaches to machine learning in AI are supervised and unsupervised learning. The difference between them lies in whether or not the program is provided with annotated (labelled) data: in supervised learning it is; in unsupervised learning it is not. In unsupervised learning, you just give the program a vast amount of data and it identifies features of interest automatically (e.g. weeds in grass). This is clearly somewhat similar to what humans do, and so we could term it intelligence, but it must be pointed out that scientific and technological progress in unsupervised learning has been much slower and more limited than in supervised learning. Despite this, progress is being made, and I would not be surprised if machines able, for all practical purposes, to think and to demonstrate intelligence greater than that of humans are attained by the end of the 21st century. If so, this would be a couple of hundred years before Star Trek writers predicted that Dr Richard Daystrom would produce his M-5 Multitronic Unit, as discussed above. One thing we can be sure of is that robot intelligence will require ultra-high levels of computational performance from the computer that forms the robot brain. So, by the way, will real-time robot sensing, which is just about as important as the AI itself.
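To make the supervised/unsupervised distinction a little more concrete, here is a minimal Python sketch using a handful of made-up 2-D feature vectors (imagine simple colour and texture scores for patches of lawn; the data and all helper names are hypothetical, not taken from any real system). In the supervised half, each point comes with a label (‘grass’ or ‘weed’) and a nearest-centroid classifier learns from those labels; in the unsupervised half, the same points are given without labels and a basic k-means loop has to discover the two groups by itself.

```python
import math

# Hypothetical 2-D feature vectors for image patches (e.g. colour and texture scores).
GRASS = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (0.9, 1.1)]
WEEDS = [(4.0, 4.2), (4.1, 3.9), (3.8, 4.0), (4.2, 4.1)]


def mean(points):
    """Centroid of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# --- Supervised: every training point comes with a label. ---
def nearest_centroid_fit(labelled):
    """Learn one centroid per label from (point, label) pairs."""
    groups = {}
    for point, label in labelled:
        groups.setdefault(label, []).append(point)
    return {label: mean(pts) for label, pts in groups.items()}


def nearest_centroid_predict(centroids, point):
    """Assign the label whose centroid is closest to the point."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


# --- Unsupervised: no labels; the algorithm must find the groups itself. ---
def kmeans(points, k, iterations=20):
    """Basic k-means, naively initialised with the first k points."""
    centres = list(points[:k])
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[nearest].append(p)
        # Move each centre to the mean of its cluster (keep it if the cluster is empty).
        centres = [mean(c) if c else centres[i] for i, c in enumerate(clusters)]
    return centres, clusters


labelled = [(p, "grass") for p in GRASS] + [(p, "weed") for p in WEEDS]
centroids = nearest_centroid_fit(labelled)
print(nearest_centroid_predict(centroids, (3.9, 4.3)))  # -> weed

centres, clusters = kmeans(GRASS + WEEDS, k=2)
print(sorted(len(c) for c in clusters))  # -> [4, 4]
```

Real systems use far richer features and models (such as the CNNs mentioned earlier), but the essential difference, labels or no labels, is the same.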

Robot senses

“If robots are to clean our homes, they'll have to do it better than a person.”

- James Dyson

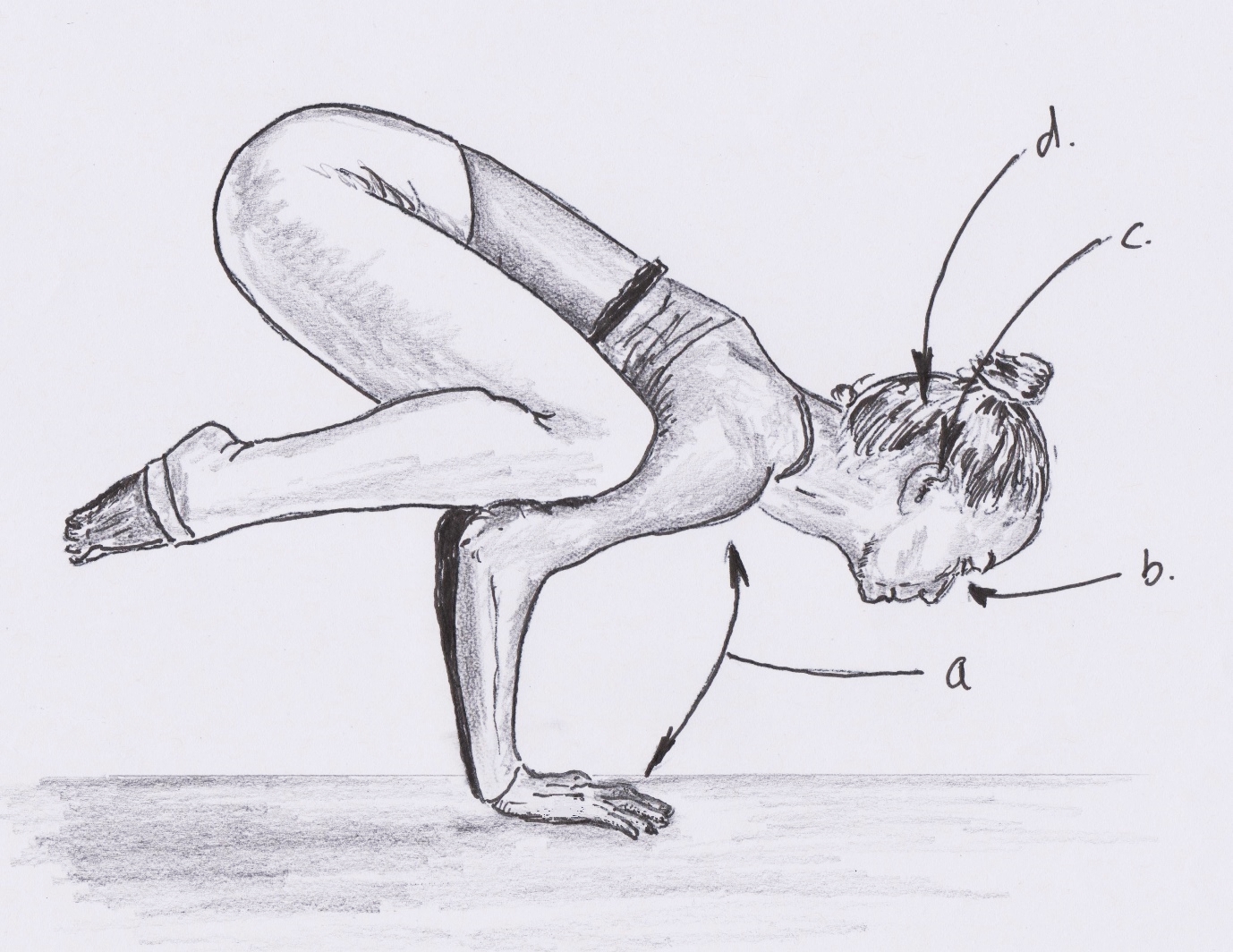

We can be confident that the advanced robots of the far future will employ multi-sensory approaches for gathering information about their environments. Humans are often said to have five senses, but in fact there are more; one that is often overlooked is proprioception, the ability we have to know where, say, our arm is without actually looking at it.

Ironically, this is about the only sense that most current robots actually have. Encoders in the joints of industrial robots continuously and very accurately monitor the position of each joint. Using some mathematical processing known as ‘inverse kinematics’, it is possible to calculate the positions all the joints need to be in for the robot hand to reach a given position. Then, typically, ‘servo motors’ are employed to make sure the joints reach those positions; these are motors that use positional feedback (which can come from the encoders) to reach the required positions even under variable load.
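To give a flavour of what inverse kinematics involves, here is a minimal Python sketch for a hypothetical arm with just two joints moving in a plane (the link lengths are assumed values). Real industrial robots typically have six joints and use more general solvers, so this is only a toy: `inverse_kinematics` uses the law of cosines to find the joint angles for a target hand position, and `forward_kinematics` checks the answer by computing where those angles actually put the hand.

```python
import math

# Assumed link lengths (metres) for a hypothetical two-joint planar arm.
UPPER_ARM, FOREARM = 0.5, 0.4


def forward_kinematics(shoulder, elbow):
    """Hand position (x, y) for given shoulder and elbow angles in radians."""
    x = UPPER_ARM * math.cos(shoulder) + FOREARM * math.cos(shoulder + elbow)
    y = UPPER_ARM * math.sin(shoulder) + FOREARM * math.sin(shoulder + elbow)
    return x, y


def inverse_kinematics(x, y):
    """Joint angles that place the hand at (x, y).

    Returns one of the two mirror-image solutions (the one with elbow >= 0).
    Raises ValueError if the target is out of reach.
    """
    # Law of cosines gives the elbow angle from the shoulder-to-target distance.
    cos_elbow = (x * x + y * y - UPPER_ARM**2 - FOREARM**2) / (2 * UPPER_ARM * FOREARM)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is outside the arm's workspace")
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to the target, corrected for the bend at the elbow.
    shoulder = math.atan2(y, x) - math.atan2(FOREARM * math.sin(elbow),
                                             UPPER_ARM + FOREARM * math.cos(elbow))
    return shoulder, elbow


shoulder, elbow = inverse_kinematics(0.6, 0.3)
x, y = forward_kinematics(shoulder, elbow)
print(round(x, 6), round(y, 6))  # -> 0.6 0.3
```

A reachable target generally has two solutions (elbow bent one way or the other); the sketch simply picks one. In a real robot, the servo loop would then drive each joint towards these angles, using the encoder readings as feedback.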

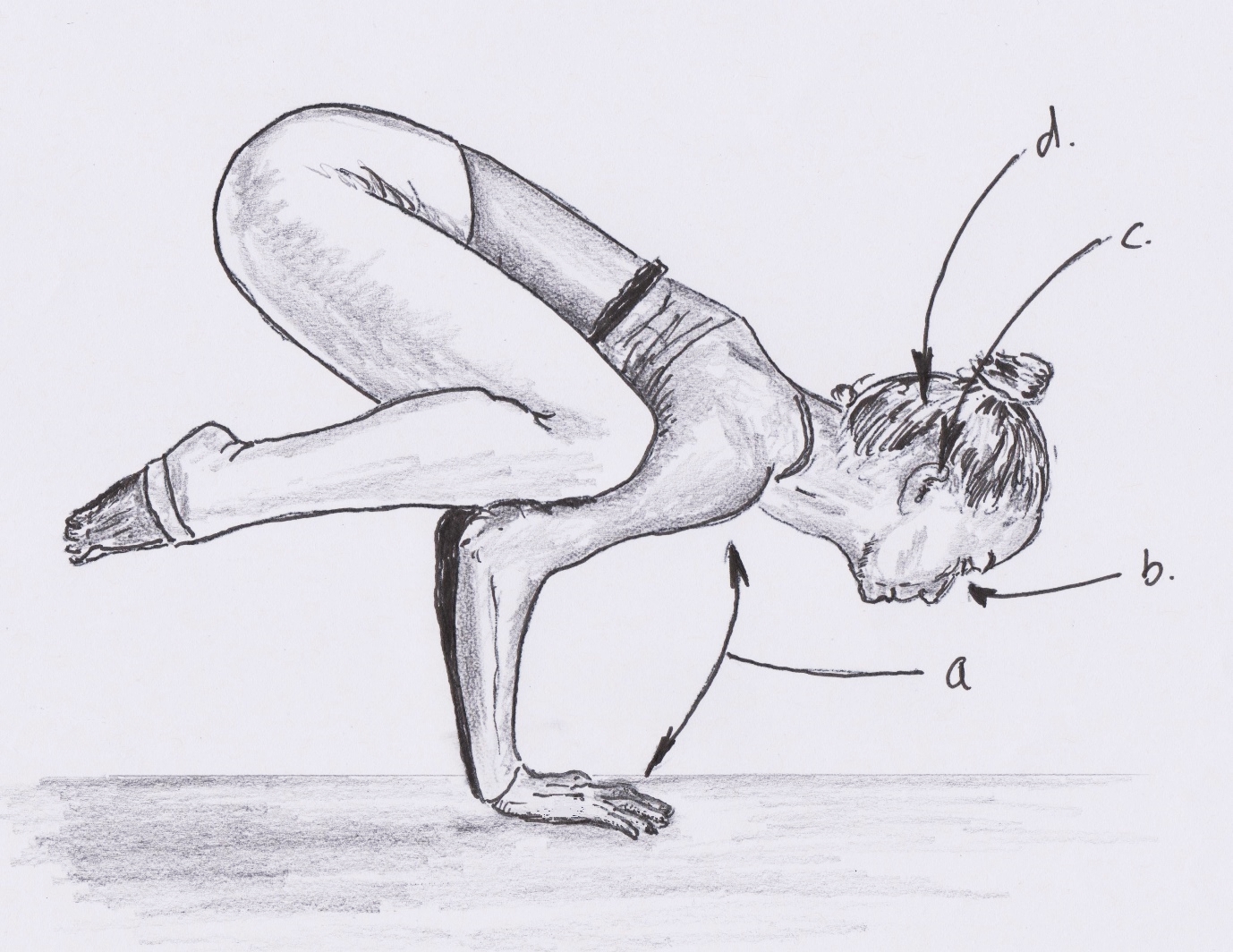

Ways in which a human achieves proprioception - perception or awareness of position and movement: (a) muscles and joints send information about body position, (b) eyes send information about position and movement, (c) inner ear vestibular organs send information on acceleration, rotation and position, and (d) the information is received and interpreted in the brain. (Illustration by the author.)

This might seem like a simple task, but in the real world, where loads can vary for all kinds of unexpected reasons, it is not. Such position control is fine for routine tasks such as putting a car windscreen into position on an automotive assembly line, but it will be woefully inadequate for the multi-purpose robot of the future. The latter will require rich, high-quality data acquisition, i.e. high-resolution, wide-ranging sensory perception. There are many reasons for this, but the chief ones are that it will enable the device to interact effectively and safely with an environment that might include humans, and that it will enable it to gather data of sufficient quality for the machine learning mentioned above, which will be so critical to the robot’s long-term success in whatever tasks are assigned to it.
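The role of servo feedback under load can be sketched with a toy simulation. Everything here is an assumption for illustration: the joint’s speed is simply the motor command minus a constant load, the ‘encoder’ reads the position perfectly, and the gains are arbitrary. The sketch compares proportional (P) feedback with proportional-integral (PI) feedback: under a steady load, proportional feedback alone settles short of the target, whereas the integral term keeps pushing until the error disappears.

```python
def simulate_joint(target, load, kp, ki=0.0, dt=0.001, duration=5.0):
    """Final position error of a toy servo-driven joint.

    Assumed toy model: joint speed = motor command - constant load (unit gains),
    and the 'encoder' reports the simulated position exactly.
    """
    position = 0.0
    integral = 0.0
    for _ in range(int(duration / dt)):
        error = target - position                # encoder feedback
        integral += error * dt                   # accumulated error (I term)
        command = kp * error + ki * integral     # P or PI control law
        position += (command - load) * dt        # Euler step of the toy joint
    return target - position


p_only = simulate_joint(target=1.0, load=2.0, kp=20.0)
with_integral = simulate_joint(target=1.0, load=2.0, kp=20.0, ki=100.0)
print(f"P only: {abs(p_only):.3f}  PI: {abs(with_integral):.3f}")  # -> P only: 0.100  PI: 0.000
```

With kp = 20 and a load of 2, the P-only joint stalls where 20 × error = 2, i.e. 0.1 short of the target. Even the PI version only copes with a steady load of this simple kind, which hints at why richer sensing is needed for less predictable tasks.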

Consider, for example, robot vision. This will need more than one camera to allow, for example, range finding using binocular stereo (stereo triangulation), as employed by humans. The images will need to be of high quality and high resolution (rather like those from a digital SLR camera), and processing them will demand a great deal of computation from the robot’s brain. This requirement is not to be underestimated. Consider, for example, how small the angle of view is that humans can see at high resolution (known as the vision span). Although the visual field of the human eye spans approximately 120 degrees of arc, most of this is peripheral (i.e. low-resolution) vision. The eye does have much greater resolution in the macula, where there is a higher density of cone cells, but the field of view seen with enough resolution to read text typically spans only about 6 degrees of arc. So, despite all the image processing power of the human brain (often described as the most complex thing known in the Universe), evolution has arranged things so that the amount of data it reviews at high resolution is really very limited. Presumably a greater amount of data would be asking too much of the brain, and it would be overwhelmed.
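Stereo range finding rests on a simple relationship: for two parallel cameras a distance B apart, with focal length f (in pixels), a point whose image shifts by d pixels between the left and right views lies at depth Z = f·B/d. A minimal Python sketch, with assumed camera parameters:

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Distance (m) to a point seen by two parallel, rectified cameras.

    focal_px: focal length in pixels; baseline_m: distance between the cameras;
    disparity_px: horizontal shift of the point between the left and right images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Assumed camera pair: 800-pixel focal length, 6.25 cm apart (similar to human eyes).
F, B = 800, 0.0625
print(stereo_depth(F, B, 50))  # -> 1.0 (metres)
print(stereo_depth(F, B, 10))  # -> 5.0

# A one-pixel disparity error matters far more for distant objects:
near_step = stereo_depth(F, B, 49) - stereo_depth(F, B, 50)  # about 2 cm at 1 m
far_step = stereo_depth(F, B, 9) - stereo_depth(F, B, 10)    # about 56 cm at 5 m
```

The last two lines show why high resolution matters: depth precision degrades roughly with the square of distance, so finer pixels (and hence more data to process) are needed to range distant objects accurately.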

So yes, the robots of the future will require high-resolution vision, but the amount of data actually captured might be limited by how much continuous data the robot brain can process in real time. Consequently, it seems quite likely that robotics will follow the human example and capture only a few degrees of arc at very high resolution. One way of doing this would be to have actuators re-orientate cameras towards objects of interest, just as human eyes move to survey a scene. However, robotic technology might provide an advantage here, in that a camera could capture a wide field of view at high resolution while only a relatively small region of interest is analysed at any given time. (This can, in fact, already be done to some extent in modern industrial cameras, particularly those based on CMOS technology.) The advantage for the robot is that no moving parts are needed, and the region of interest can be moved much more quickly than a human or animal can rotate its eyeballs.
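The arithmetic behind this is worth spelling out. If we assume, for simplicity, a square field of view with pixels spread evenly across it, a 6-degree high-resolution window within a 120-degree field covers (6/120)² = 1/400 of the image. The Python sketch below emulates region-of-interest readout by slicing a hypothetical frame; real industrial cameras configure this in hardware through vendor-specific settings, which are not modelled here.

```python
def read_roi(frame, top, left, height, width):
    """Return just the region of interest (a sub-rectangle) of a full frame."""
    return [row[left:left + width] for row in frame[top:top + height]]


# Hypothetical sensor: 600 x 600 pixels spread evenly over a 120-degree field
# (5 pixels per degree), represented here as a list of pixel rows.
FIELD_DEG, SIZE = 120, 600
frame = [[0] * SIZE for _ in range(SIZE)]

# A 6-degree 'foveal' window, comparable to the human high-resolution region.
roi_size = SIZE * 6 // FIELD_DEG      # 30 pixels across
centre = (SIZE - roi_size) // 2       # top-left corner of a centred window
roi = read_roi(frame, top=centre, left=centre, height=roi_size, width=roi_size)

fraction = (len(roi) * len(roi[0])) / (SIZE * SIZE)
print(fraction)  # -> 0.0025, i.e. only 1/400 of the pixels need processing

# 'Moving the eye' is just a change of coordinates, with no moving parts:
roi_elsewhere = read_roi(frame, top=0, left=SIZE - roi_size, height=roi_size, width=roi_size)
```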

While we are on the subject of potential advantages for the robot, it is worth mentioning that robot vision could detect ‘light’ over a far greater range of wavelengths than humans can manage. While our eyes are only sensitive to a relatively narrow band of the electromagnetic (EM) spectrum, from red up to violet light, there is no reason why robot vision could not extend into the ultraviolet (UV) and beyond, as well as into the longer wavelengths of the infrared (IR). Here, some interesting possibilities arise. For example, a robot detecting UV radiation could raise an alarm for any humans in its vicinity, since UV is harmful to humans and can even cause blindness. Likewise, detecting well into the IR would effectively give the robot a ‘thermal camera’, and such a device can be used to monitor people’s temperature. An elevated temperature could indicate that a person has a fever, flagging early that they might have a virus and need to be tested and, if positive, have their movements tracked. (All of which is, of course, very relevant to the current COVID-19 pandemic.) Going to even longer wavelengths, we enter the range of ‘terahertz radiation’, which occupies a middle ground between microwaves and infrared light. The emission and detection of terahertz radiation is something of an emerging technology, but it offers the ability to penetrate fabrics and plastics. The potential applications of this can be readily imagined. In surveillance, for example, terahertz security screening could be used for covert detection of concealed weapons on a person (and, interestingly, many materials of interest have unique spectral ‘fingerprints’ in the terahertz range).
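Why does a camera working ‘well into the IR’ see body heat? Wien’s displacement law gives the wavelength at which a warm object emits most strongly: λ_max = b/T, where b ≈ 2.898 × 10⁻³ m·K and T is the absolute temperature. The short Python sketch below applies it to a body at 37 °C and at a feverish 39 °C, and also converts a 1 THz frequency to a wavelength (λ = c/f) to show where terahertz radiation sits. It treats the body as an ideal black body, which is an approximation.

```python
WIEN_B = 2.897771955e-3       # Wien's displacement constant, m·K
SPEED_OF_LIGHT = 299_792_458  # m/s


def peak_wavelength_um(temp_celsius):
    """Wavelength of peak black-body emission, in micrometres."""
    return WIEN_B / (temp_celsius + 273.15) * 1e6


def wavelength_um(frequency_hz):
    """Free-space wavelength in micrometres for a given frequency."""
    return SPEED_OF_LIGHT / frequency_hz * 1e6


print(round(peak_wavelength_um(37.0), 2))  # -> 9.34 (long-wave infrared)
print(round(peak_wavelength_um(39.0), 2))  # -> 9.28 (a feverish body peaks slightly shorter)
print(round(wavelength_um(1e12), 1))       # -> 299.8 (1 THz is about 0.3 mm)
```

This is why thermal cameras typically work in the 8–14 µm band. In practice, fever screening compares a calibrated temperature reading against a threshold rather than tracking the peak wavelength, since a shift of about 0.06 µm is tiny.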

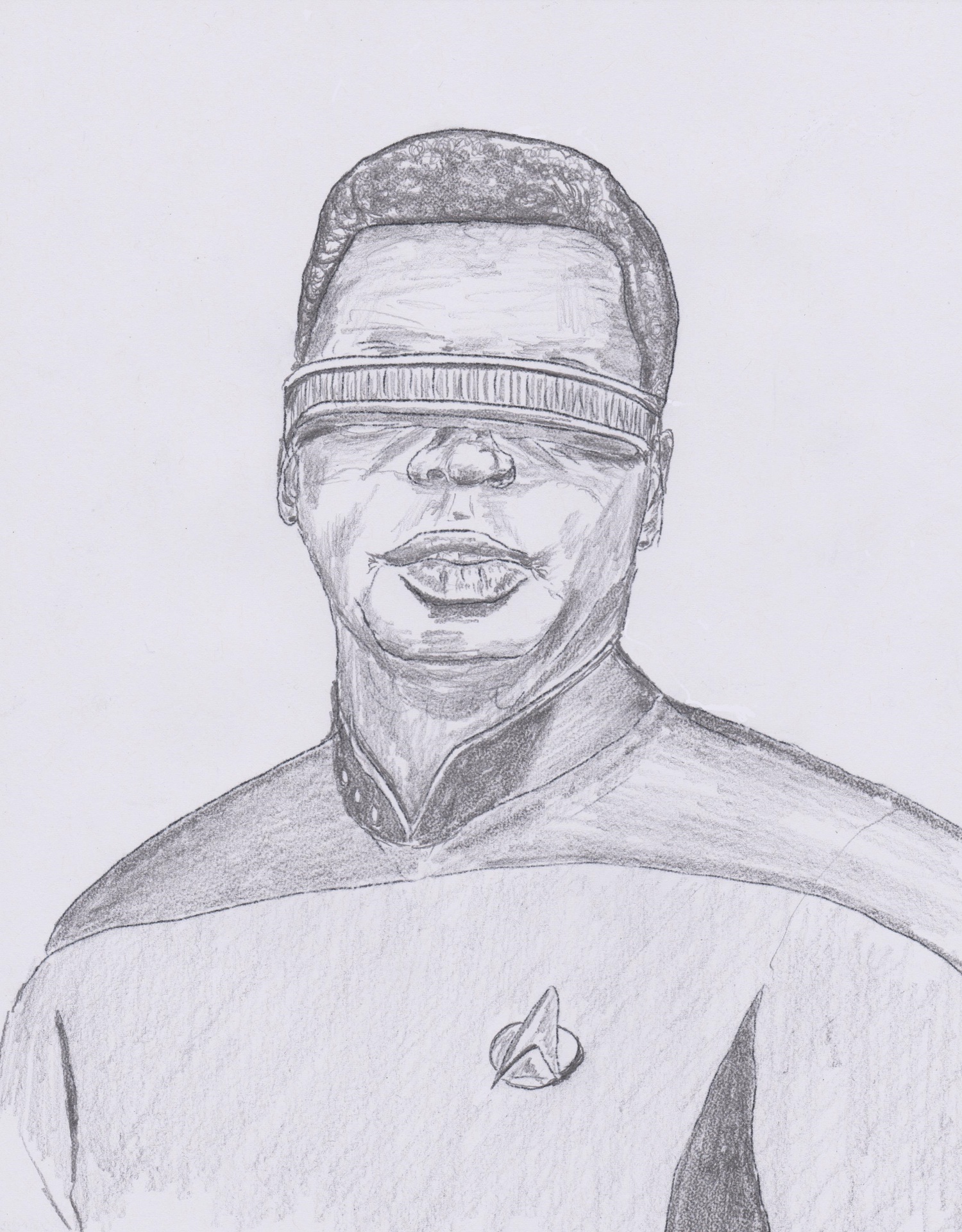

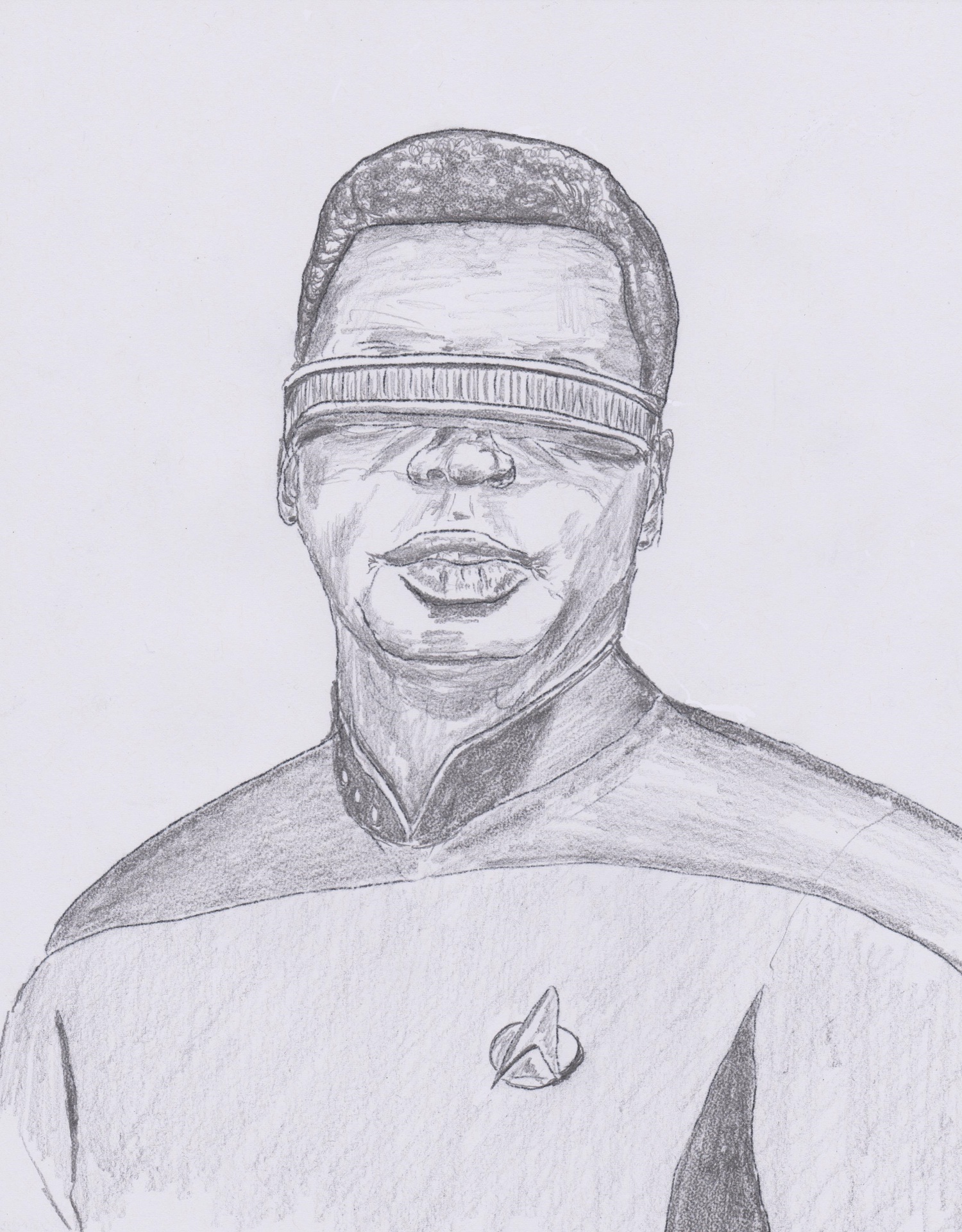

To be honest, all this reminds me of Geordi La Forge, the engineer in STNG who had been blind since birth but used a visor to see. Geordi was one of the main STNG characters and, of course, popular with the fans, but I can’t help wondering whether the actor who played him, LeVar Burton, might have been slightly annoyed with the series writers for the way in which Geordi always seemed to have little success with women, while Will Riker was apparently getting intimate with females (alien and otherwise) just about every other week! I guess the Will Riker character was written to be a little like Captain Kirk in the original series, in that he often found that the solution to a serious and dramatic situation could be attained by kissing the nearest attractive woman. Anyway, although Geordi’s visor allowed him to ‘see much more than other people’, he said he would have preferred to have just normal human vision. (The visor was replaced by ocular prosthetic implants in the last three films of the STNG franchise.)

What of the other senses? In the case of hearing, there is no reason to suppose that future robots will not have great sensitivity and the ability to detect a wide range of sound frequencies (humans are limited to roughly 20 Hz to 20,000 Hz). As for the other well-known senses, touch (tactile), smell and taste, these will all be needed and, like hearing, they will benefit from the advanced capabilities offered by future sensor technologies, capabilities that would be expected to greatly exceed those of humans. By the way, the sense of balance is provided in humans by structures in the inner ear; in robots it could be provided by gyros (perhaps explaining why gyroscopes are shown spinning around at the top of Robby’s ‘head’). Robots are also likely to employ additional sensing technologies that are not available to humans; examples include object detection using radar and position recording using GPS.
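Gyros measure rate of rotation, so a tilt angle obtained from them alone must be integrated and slowly drifts; accelerometers sense the direction of gravity but are noisy. One common, simple way of combining the two into a sense of balance is a complementary filter. The Python sketch below assumes the robot has an accelerometer as well as a gyro (an assumption beyond the text, which mentions only gyros), and uses invented sensor data, including a small gyro bias.

```python
import math


def complementary_filter(gyro_rates, accel_angles, dt, alpha=0.98, initial=0.0):
    """Fuse gyro rates (rad/s) and accelerometer tilt readings (rad).

    The gyro term tracks fast changes; the small accelerometer weight
    (1 - alpha) slowly pulls the estimate back, cancelling gyro drift.
    """
    angle = initial
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        angle = alpha * (angle + rate * dt) + (1 - alpha) * accel_angle
    return angle


# Simulated data (assumed): robot leaning 0.1 rad and holding still for 10 s at 100 Hz.
dt, steps, true_tilt = 0.01, 1000, 0.1
gyro = [0.01] * steps  # true rate is zero, but the gyro has a 0.01 rad/s bias
accel = [true_tilt + 0.02 * math.sin(k) for k in range(steps)]  # noisy, unbiased

gyro_only = true_tilt + sum(rate * dt for rate in gyro)  # pure integration drifts
fused = complementary_filter(gyro, accel, dt, initial=true_tilt)
print(f"gyro-only error: {abs(gyro_only - true_tilt):.3f} rad")  # -> gyro-only error: 0.100 rad
print(f"fused error:     {abs(fused - true_tilt):.3f} rad")
```

Over 10 seconds the biased gyro alone drifts by 0.1 rad, while the fused estimate stays within roughly 0.005 rad of the true tilt; the blend factor alpha trades responsiveness against noise.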

Geordi La Forge, the engineer in Star Trek: The Next Generation who had been blind since birth but used a visor to see. (Drawing by the author.)

Therefore, even from this brief discussion, we can conclude that future robots are unlikely to be lacking in sensory technology. However, because the sensors we can expect in the future will be discreet and compact, they need not dictate how robots will look. Instead, appearance may depend more on sociological and practical considerations.

The appearance of robots in the far future

“Here I come

Cryogenic heart, skin a polished silver

One thing I am glad of

For this I thank my builder

I can never rust”

- Louis Shalako

From the brief discussion above of the depiction of robots in the movies, it can be seen that their appearances are not usually a result of functional requirements. As you would perhaps expect, the appearance is generally intended to create an impression on the viewer – either of beauty (Maschinenmensch), technical impressiveness (Robby), or fright (Gort). It may, however, be television that has given us the truest impression of the likely appearance of robots in the far future – specifically the humanoid robot, Data.

There are many reasons to suppose that future robots will be humanoid in form. The first and most obvious is that if a robot has an external geometry similar to a human’s, it will be able to do many of the things humans commonly do, which could have many associated benefits. Suppose, for example, that a robot is intended to act as a carer for a person with some disabilities. The person concerned may still wish to undertake common tasks such as travelling on public transport or dining in restaurants. If the robot had a humanoid form, it would be able to accompany and care for the person without the need for specialised modifications to seats and other equipment used by humans. Another factor has to do with psychology, or social acceptance. Put simply, most people are much more likely to accept a robot that resembles a human than one that manifestly does not.

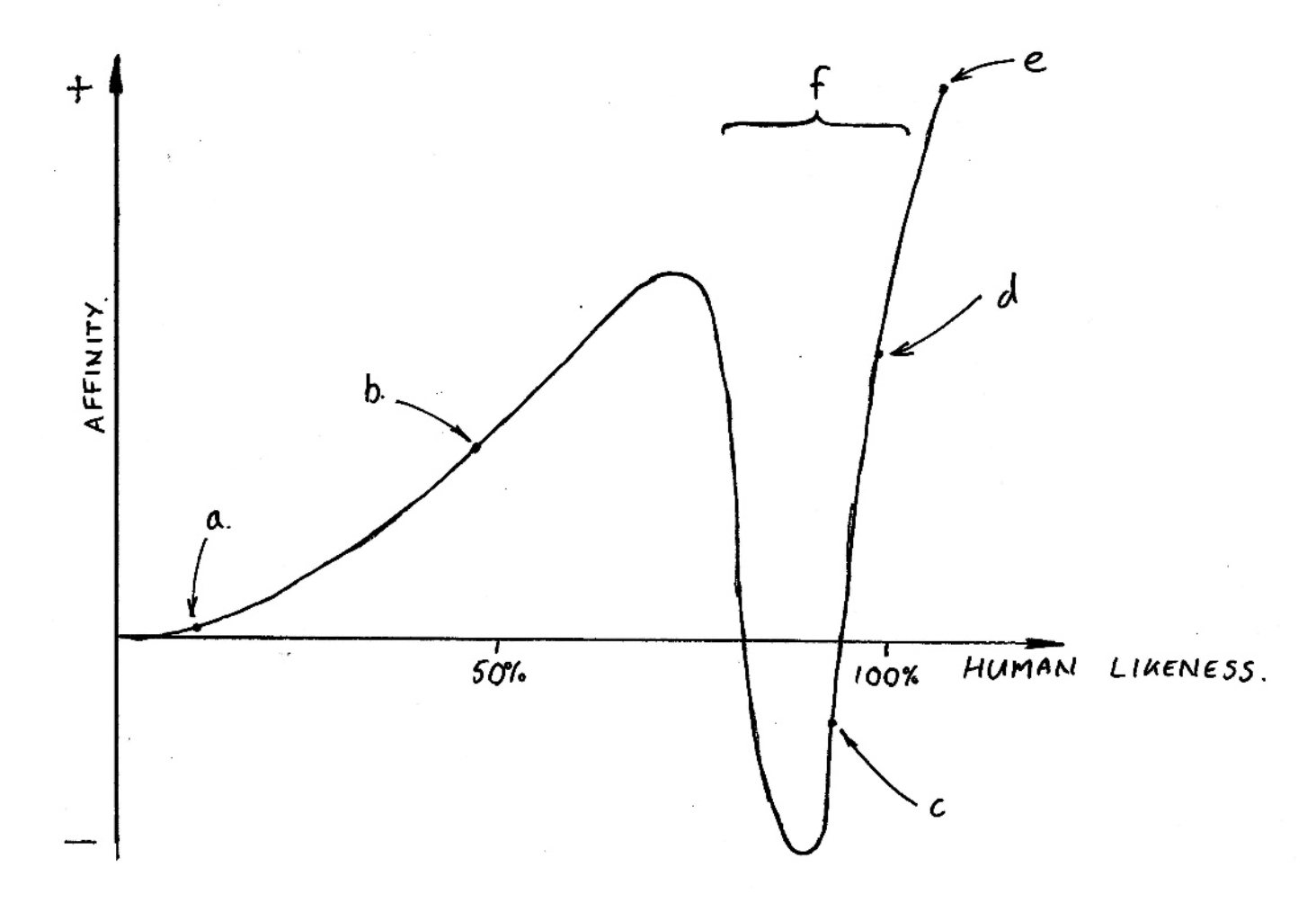

However, those who dislike the idea of a realistically human-like robot will often point to the ‘uncanny valley’ to explain people’s negative reactions to lifelike robots. The concept was introduced in 1970 by Masahiro Mori, then a professor at the Tokyo Institute of Technology. He used it to describe his observation that as robots appear more humanlike they become more appealing, but only up to a point, beyond which they cease to be appealing. Upon reaching the uncanny valley, our reaction supposedly descends into a feeling of strangeness, a sense of unease, and a tendency to be scared or freaked out, as shown in the graph below.

Even if there is some truth in the theory of the uncanny valley, I wouldn’t be too concerned about it in relation to the humanoid robots of the far future. Such devices are likely to have a very high degree of human likeness and, as shown in the graph, as human likeness approaches 100%, affinity rises sharply, so much so that it ends up where you would expect it to be if the uncanny valley had never existed at all.

Are there, then, any serious objections to the concept that a humanoid form is the ultimate robot destiny?

The Uncanny Valley in the relationship between human affinity for robots and the latter’s human likeness: (a) industrial robot, (b) educational robot, (c) prosthetics, (d) puppets such as Sophia, (e) healthy person, and (f) Uncanny Valley. (Graph by the author.)

Some people may point to the capabilities of current machines and question whether there will ever be a time when the power and agility of a human could be realised in a machine confined within the limited space of a typical human body (not for nothing did Hamlet exclaim: "What a piece of work is a man!"). But again, I would not be too concerned about such reservations. Electric drives are continually becoming more compact, powerful and lightweight. Consider, for example, the harmonic gearbox: this employs strain wave gearing, which is based on elastic dynamics and exploits the flexibility of metal. This type of gearbox, invented in 1957, is compact and lightweight and offers a high gear reduction ratio, which has led to it being commonly employed in robotics and aerospace (the latter including the electric drives of the Apollo Lunar Rover). This is just one example; multitudes of other types of drive are constantly being introduced, so we may expect adequate robotic motive power to be available in the future.
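The high reduction ratio of a harmonic (strain wave) gearbox comes from a small difference in tooth count between the flexible ‘flexspline’ and the rigid ‘circular spline’: each turn of the wave generator advances one relative to the other by only that difference. A minimal Python sketch of the standard ratio formulas, with illustrative tooth counts (a difference of two teeth is typical):

```python
def harmonic_drive_ratio(flexspline_teeth, circular_spline_teeth, fixed="circular"):
    """Reduction ratio of a strain wave (harmonic) gear, wave generator as input.

    fixed="circular":   output is the flexspline, which turns the opposite way
                        (returned as a negative ratio).
    fixed="flexspline": output is the circular spline, same direction as input.
    """
    diff = circular_spline_teeth - flexspline_teeth
    if diff <= 0:
        raise ValueError("circular spline needs more teeth than the flexspline")
    if fixed == "circular":
        return -flexspline_teeth / diff
    if fixed == "flexspline":
        return circular_spline_teeth / diff
    raise ValueError("fixed must be 'circular' or 'flexspline'")


print(harmonic_drive_ratio(200, 202))                      # -> -100.0
print(harmonic_drive_ratio(200, 202, fixed="flexspline"))  # -> 101.0
```

So a single compact stage with just two teeth of difference gives roughly a 100:1 reduction, something that would need several stages of conventional spur gears.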

Regarding matters such as balance and agility, these are control issues that depend upon computing and algorithmic developments. In the past, much robotics research has concentrated on ‘closed form’ classical approaches to control, with quite limited results. Now, with the breakthroughs in AI of recent years, particularly in deep learning, we may expect more robust and practical solutions to robotics control problems.

One thing that robots of the future will definitely need, if they are to undertake the delicate or difficult tasks that humans can perform, is compliance. This term refers to flexibility and suppleness, and to understand what compliance is, it helps to consider what non-compliance is. A non-compliant (stiff) robot will have predetermined positions or trajectories. No matter what kind of external force is exerted on, say, the robot hand, it will follow exactly the same path every time. In contrast, a compliant robot can adapt its position and the force it exerts on a given object. For example, a compliant robot gripper can grasp an egg without crushing it, even if the egg is slightly larger than expected, whereas a non-compliant gripper will crush the egg and carry on with its given operation. Compliance can take two forms: passive and active. Passive compliance can be thought of as similar to the ability of, say, your fingers to be slightly spongy and to deform somewhat; in the above example, this deformation would help to avoid crushing the egg. Active compliance, on the other hand, is where the robot makes slight positional modifications during a task to help perform it successfully. For example, suppose a robot were given the task of threading a nut onto a bolt. This is a simple task for a human, but a robot using its grippers to hold the nut and bolt may not be able to accomplish it if there is any slight misalignment (which would often be expected, since the positions of the nut and bolt are likely to vary slightly each time the robot picks them up).

Active compliance would involve the robot very slightly varying the relative position and/or orientation of the nut and bolt to help complete the task. This may seem trivial, but it is the sort of thing that, up to now, has been very difficult to achieve robotically, and it also illustrates well why a practical robot needs well-developed sensors integrated with its control system. The most important sensory data for implementing active compliance in tasks like threading nuts onto bolts are likely to be high-resolution vision and force feedback (tactile sensing).
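In a much simplified form, this kind of active compliance can be written as a feedback loop on force: measure the side force that a misalignment produces, and move a small amount, proportional to that force, in the direction that relieves it (an admittance-style correction). The Python sketch below assumes a linear, spring-like contact and arbitrary gains, and ignores rotation, thread engagement and vision-based coarse positioning, all of which a real nut-and-bolt task would involve.

```python
import math


def align_with_force_feedback(offset_mm, stiffness_n_per_mm=2.0, admittance_mm_per_n=0.3,
                              tolerance_n=0.05, max_steps=50):
    """Nudge the nut sideways until the measured side force is (nearly) zero.

    offset_mm: initial (x, y) misalignment between nut and bolt axes.
    Toy contact model (assumed): pressing the nut onto the bolt produces a
    side force proportional to the misalignment, like a linear spring.
    Returns the final offset and the number of corrections made.
    """
    x, y = offset_mm
    corrections = 0
    for _ in range(max_steps):
        fx, fy = stiffness_n_per_mm * x, stiffness_n_per_mm * y  # simulated force sensor
        if math.hypot(fx, fy) < tolerance_n:
            break
        # Admittance-style correction: move in the direction that relieves the force.
        x -= admittance_mm_per_n * fx
        y -= admittance_mm_per_n * fy
        corrections += 1
    return (x, y), corrections


final_offset, steps_taken = align_with_force_feedback((0.8, -0.5))
print(steps_taken, [round(v, 3) for v in final_offset])
```

Each correction here shrinks the misalignment by a constant factor (1 − 2.0 × 0.3 = 0.4), so an initial offset of about a millimetre is removed in a handful of steps. Too large a gain would make the nut overshoot and oscillate, which is one reason tuning such loops by hand is difficult.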

Regarding the actual implementation of compliance, in the passive case we may expect it to come from new generations of polymers that exhibit mechanical characteristics similar to those of human skin, such as stress relaxation. Just in case anyone is wondering what this term refers to, stress relaxation is the gradual decrease in the stress within a material (and hence in the force it exerts) while it is held at a constant deformation; it is particularly marked in plastic and rubber-type substances. (Laziness, I call it.) I don’t know if you have ever had an experience similar to the one I had a few years ago, of fitting a tap with a rubber washer into a hole in a kitchen sink (a Belfast sink, as a matter of fact). Well, using the special box spanners you bought for the job, you tighten the nut that holds the tap in place. You get it good and tight, applying as much force as you can. Then, after a few minutes, you check that the nut is tight and, lo and behold, it’s not! You find that you can turn it quite easily, because the rubber washer has undergone stress relaxation.

In the case of active compliance, the robot needs a sequence of motion commands that respond, in real time, to the information from its vision and tactile senses. The best way of generating these commands automatically is likely to be, once again, machine learning, using networks previously trained on very similar tasks, with the task type and sensory data as input and the required motion commands as output.
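Returning to the tap washer for a moment: the simplest textbook model of stress relaxation is a single Maxwell element (a spring in series with a dashpot), in which the force needed to hold a fixed compression decays exponentially, F(t) = F₀ e^(−t/τ), with relaxation time τ. The Python sketch below uses a hypothetical initial clamping force and relaxation time, not measured values for any real washer. Note that a single Maxwell element relaxes all the way to zero; real rubbers retain some residual force, which models such as the standard linear solid capture.

```python
import math


def relaxed_force(initial_force_n, elapsed_s, relaxation_time_s):
    """Force remaining in a material held at fixed compression (single Maxwell element)."""
    return initial_force_n * math.exp(-elapsed_s / relaxation_time_s)


# Hypothetical washer: 1000 N initial clamping force, 300 s relaxation time.
for minutes in (0, 1, 5, 10):
    force = relaxed_force(1000.0, minutes * 60, 300.0)
    print(f"after {minutes:2d} min: {force:4.0f} N")
# -> after  0 min: 1000 N / after  1 min:  819 N / after  5 min:  368 N / after 10 min:  135 N
```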

The above discussion has become rather more technically detailed than intended, and it is likely that some of the approaches, and certainly the terminology, used in the humanoid robotic systems of the far future will be rather different from what we currently envisage. But this does not alter the central conclusion: in the future, robotic systems will emerge that are able to do many of the difficult and dangerous tasks currently undertaken by people.

The idea of impressive human-shaped robots, or androids as I should call them, living amongst us was, of course, introduced to us, or at least to our imaginations, a long time ago, in the previously mentioned anthology I, Robot. The stories therein are surely among the most impressive studies ever penned of the roles androids could play in society and the interesting situations that can arise. Asimov created a robot named Robbie and went so far as to imagine the discipline of robot psychology, practised in his stories by Dr Susan Calvin, who is discussed in more detail below.

Perhaps even more famous than Robbie, though, is Data, through whom the STNG writers explored robots’ rights: their claim to be sentient beings and their right to be parents. These concepts are explored in depth in the STNG episodes ‘The Measure of a Man’ and ‘The Offspring’. In the latter, Data creates a daughter named Lal, with impressive if not entirely successful results that lead to a moving ending.

Therefore, various factors make it likely that robots of the far future will be humanoid in form but capable of superhuman performance in many respects, or at least that’s my view. This kind of talk reminds me of what my old maths teacher used to tell us back in the 1970s: that, in the future, humans will not spend their time working but will instead have to fill their abundant leisure time. He qualified this by saying that it would not happen in our lifetimes (or even in our children’s). He was certainly right about that last point. In any case, there can be little doubt that in the far future robots will be a great help to humans, but will they also constitute a threat?

The androids Data and Lal from the STNG episode ‘The Offspring’; Data created Lal to be his daughter. (Drawing by the author.)