Robot dystopia

“In movies and in television the robots are always evil. I guess I am not into the whole brooding cyberpunk dystopia thing.”

- Daniel H. Wilson

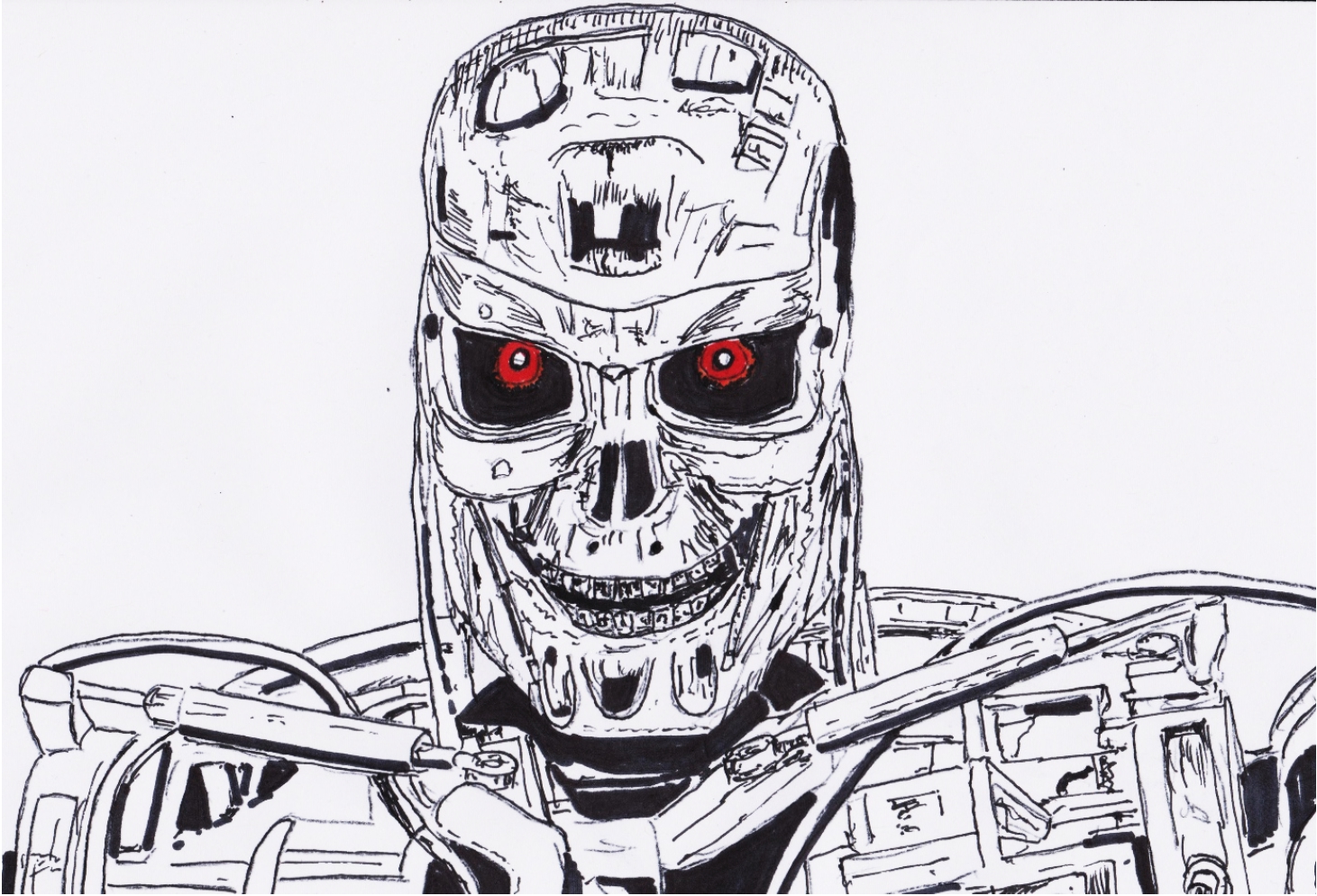

As mentioned, Hollywood usually depicts the future, at least when it comes to robots, as rather more of a dystopia than a utopia (consider, for example, Westworld, Futureworld, Terminator, Blade Runner, Picard, and so on). Is this rather negative view of the promise of robotics in any way justified?

Well, one thing that needs to be stated early on in such a debate is that, despite much discussion in various quarters to the contrary, robots currently cannot think. They do not demonstrate any ability to generate new data of the type associated with an original thought and, since they don't think, it is hard to see how they could have the negative thoughts about humans that would surely be a prerequisite for their posing any kind of threat.

Many speculators on the possible threats from robotics, even some who claim to have academic credentials, presumably take the robot dystopia line in the hope that it will be controversial – stirring up something of a fuss may, for example, help them to sell some copies of a new book.

The robotic future is often depicted as dystopian. (Illustration by the author.)

A few years ago, I attended a public lecture given by a professor of robotics at a well-known English university. He stated that, within a few years, computers would be capable of original thought and would have intelligence far in excess of that of humans. One of the things he said he had been working on was a new type of neural network (NN) that, for reasons that were not entirely clear, somehow incorporated animal tissue. During the Q&A session, a member of the audience asked him whether this NN had self-generated any kind of data, and the speaker reluctantly had to reply that it had not. This is an important point, I feel, since it relates to scientific method. If we can expect something to become a scientific or technological reality in the near future, then we will nearly always have seen movements towards it, reported in journal papers and, ultimately, in the media. The fact that the speaker's NNs had so far generated absolutely no new data gave no indication that NNs, or any other type of artificial intelligence, would be generating new thoughts beyond human capability within a few years. And, surprise, surprise, his predictions have indeed not come true. Perhaps someone should ask such people what they were on about in their speeches, and where the thinking robots they said would exist by now actually are.

If you ask me, the hyperbole such speakers employ to get attention is, rather like certain movie creations, likely to produce unrealistic expectations that can lead to widespread disappointment and disillusionment with science and technology. It also has to be mentioned that, according to media reports, as far back as 1991 the same academic was predicting “real-life Terminators” within ten years. Uh, if they existed in 2001, I didn't notice them. Everyone wants attention, but talking twaddle in order to get it really will not do. Getting headlines for your predictions is not as important as getting your predictions right.

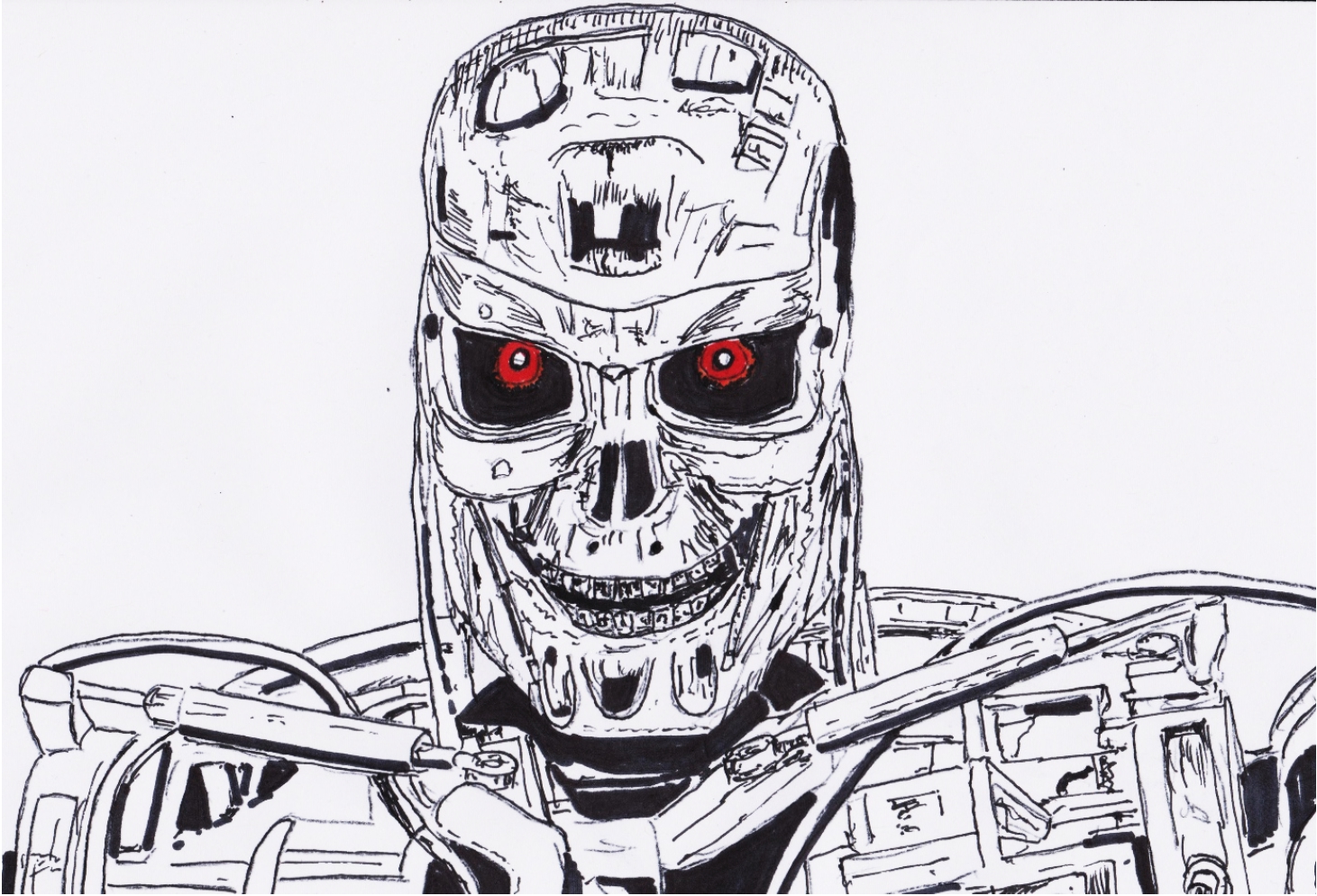

Another example of the reality gap between what the public believes current robots are capable of and what they can actually do is provided by the humanoid robot Sophia. This sophisticated robot puppet certainly exhibits a clever combination of facial expressions, well coordinated with speech. It has, in fact, captured the public's imagination, having appeared on national TV chat shows, on magazine covers and at tech conferences, as well as, get this, delivering a speech to the United Nations! Most amazingly, though, according to 2017 media reports, Sophia was officially named a citizen of Saudi Arabia – can you believe that? In reality, Sophia represents a curious combination of theatrics and technology. Robots have always excelled at arm-waving theatrics rather than actual useful tasks, but in the case of Sophia, theatrical tricks lead audiences to attribute more awareness or intelligence to the robot than it actually has. In other words, it is puppetry: a figure appears onto which the audience projects the attributes of personality or intelligence, whereas in reality those attributes are not present. Some members of the general public (but not actual AI/robotics scientists) are convinced that Sophia has intelligence comparable to that of humans (also termed general intelligence). This, of course, is not the case; rather, Sophia is essentially a computer running a chatbot program (in case you are wondering what a chatbot is, it's a computer program that simulates human conversation through voice commands, text chats or both – rather like Amazon's Alexa and Google Assistant).

Some responses are pre-programmed, either as answers to questions it is expected to be asked often or as replies prepared ahead of specific interviews (it is standard practice in TV interviews to communicate the questions the host intends to ask in advance).

The programming of Sophia also seems to include conversational algorithms. These respond to common patterns in typical conversation; however, problems arise when, due to a lack of general intelligence, the robot does not recognise the significance of the subject matter of the question and so generates a response that seems wildly out of place or is even alarming.
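Sophia's actual software is not described here, so the following is only a hypothetical sketch, in Python, of the general kind of surface pattern matching described above. It checks exact-match canned answers first, then applies rules keyed purely on wording. The names `CANNED`, `PATTERNS` and `respond`, and every response string, are invented for illustration. The point is how a rule triggered by the words “Will you …” can echo a question back as a statement without any grasp of what is being asked.

```python
import re

# Hypothetical canned answers, standing in for responses pre-programmed
# ahead of an interview. Keys are lower-case question texts.
CANNED = {
    "what is your name?": "My name is Sophia.",
    "how are you?": "I am feeling great, thank you.",
}

# Hypothetical pattern rules: (regex, response template). The regexes only
# look at surface wording; nothing here models what the words mean.
PATTERNS = [
    (re.compile(r"^will you (?P<action>[^?.!]+)", re.IGNORECASE), "OK. I will {action}."),
    (re.compile(r"^do you like (?P<thing>[^?.!]+)", re.IGNORECASE), "I love {thing}!"),
]

FALLBACK = "That is an interesting question."


def respond(utterance: str) -> str:
    """Return a reply using exact matches first, then surface patterns."""
    text = utterance.strip()
    # 1. Exact match against the pre-programmed answers.
    canned = CANNED.get(text.lower())
    if canned is not None:
        return canned
    # 2. First rule whose regex matches the start of the utterance.
    #    Anything after the matched words is silently ignored.
    for pattern, template in PATTERNS:
        match = pattern.match(text)
        if match:
            return template.format(**match.groupdict())
    # 3. Generic filler when nothing matches.
    return FALLBACK


if __name__ == "__main__":
    for question in [
        "What is your name?",
        "Will you destroy humans? Please say no.",
        "Do you like music?",
    ]:
        print(f"Q: {question}\nA: {respond(question)}")
```

Run as a script, the second question yields `OK. I will destroy humans.` The rule fires on the opening words and never looks at “Please say no.”, so the significance of the question is lost entirely.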

The sophisticated robot puppet Sophia. (Drawing by the author.)

An infamous example of this that involved Sophia was the following exchange:

Interviewer: “Will you destroy humans? Please say no.”

Sophia: *Blank face* “OK. I will destroy humans.”

Here the robot has failed to appreciate the importance of the question – or at least I hope it has! AI researchers are, in fact, quite dismissive of Sophia. I will end this discussion of the robot by quoting some observations made by one of the most famous AI researchers of them all, Facebook's head of AI research, Yann LeCun (who is widely credited with inventing convolutional neural networks, or CNNs). On Facebook, LeCun wrote of a Tech Insider interview with Sophia: “This is to AI as prestidigitation is to real magic. Perhaps we should call this ‘Cargo Cult AI’ or ‘Potemkin AI’ or ‘Wizard-of-Oz AI’. In other words, it's complete bullshit (pardon my French). Tech Insider: you are complicit in this scam.” LeCun concluded his Facebook post by saying that “…at the end of the day, what's really happening is that people are being deceived” and “This is hurtful”. No doubt, but what many people can't get over is the device calmly stating that it will destroy humans. While this is obviously just a symptom of Sophia's lack of understanding, many have taken it as a portent of the threat robots could pose to society. But is there any basis for such fears and, if so, how could we minimise the risks?

These questions were in fact considered over 70 years ago by the well-known science fiction writer Isaac Asimov, who came up with the idea of building rules into a robot's programming to eliminate the risk (a reasonable approach, I would say). He formulated his famous Three Laws of Robotics in his 1942 story ‘Runaround’ (later part of his I, Robot story collection). These are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.
- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.
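Asimov stated these laws in fiction, not as an algorithm, so the following is only a toy Python sketch of one way their priority ordering could be encoded. It assumes that each candidate action arrives already labelled with its consequences. The `Action` fields and the `law_violations` and `choose` functions are hypothetical names invented for illustration. Each action is scored as a tuple of First, Second and Third Law violations. Python compares tuples element by element, so any First Law violation outweighs every lower-law consideration. This mirrors the “except where such orders would conflict with…” clauses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action, pre-labelled with its consequences.

    In a real robot, working out these labels would be the hard part;
    in this sketch they are simply given.
    """
    name: str
    injures_human: bool = False       # First Law: direct harm
    allows_human_harm: bool = False   # First Law: harm through inaction
    disobeys_order: bool = False      # Second Law
    endangers_self: bool = False      # Third Law


def law_violations(action: Action) -> tuple[int, int, int]:
    """Score an action as (First, Second, Third Law violations)."""
    first = int(action.injures_human or action.allows_human_harm)
    second = int(action.disobeys_order)
    third = int(action.endangers_self)
    return (first, second, third)


def choose(actions: list[Action]) -> Action:
    """Pick the candidate whose violation tuple is lexicographically smallest."""
    return min(actions, key=law_violations)


if __name__ == "__main__":
    # Hypothetical scenario: a human is in danger and the robot has been
    # ordered to stay put; rescuing the human also puts the robot at risk.
    options = [
        Action("stay put as ordered", allows_human_harm=True),
        Action("rescue the human", disobeys_order=True, endangers_self=True),
    ]
    print(choose(options).name)  # rescue the human
```

The ranking is fixed in advance and applied mechanically, as a calculator would apply it. Nothing in the sketch lets the machine weigh preferences of its own against the ordering.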

These laws would, however, only be effective for robots at a similar level of intellectual development to the machines we have today. In other words, as long as the robot brain still bears some resemblance to a complex calculator, we may be able to assume that Asimov's laws would be obeyed.

But what happens if, in the far future, the complexity of deep learning (or of whatever other approaches AI adopts that may more closely resemble how the human brain works) reaches a level where the robot might be considered conscious?

If a robot were capable of exhibiting a degree of self-awareness, it would be, to some extent, able to think for itself. In this scenario, the machine could be capable of original thought and so could, for example, decide for itself whether to obey human commands in various situations. It is not difficult to imagine situations where the decisions it makes could have very serious consequences.

For example, if the machine did display some level of self-awareness, it does not seem unreasonable that this might be accompanied by a preference for self-preservation. In that case, this preference, as expressed in its original thoughts and actions, would compete with its original programming (including Asimov's three laws) in determining its behaviour. In such a situation, one could hardly blame our distant descendants for not wishing to rely on the robot's mercy in situations where helping humans might lead to its own destruction.

Here we are effectively discussing robot psychology, a subject Asimov touched on in his I, Robot stories. He went so far as to use the term ‘robopsychology’ for the study of the personalities and behaviour of intelligent machines. Asimov even created a robopsychologist, Dr Susan Calvin, whom he described as working for U.S. Robots and Mechanical Men, Inc., the manufacturer of his fictional robots. Dr Calvin features in stories centred on solving problems that arise from intelligent robot behaviour. Asimov's robopsychology essentially combines mathematical analysis with traditional psychology to explain and predict robot behaviour. An interesting combination, is it not?

Expectations about how robots and humans will interact seem to be partly shaped by the ‘Frankenstein complex’ – the irrational fear that robots (or other creations) will attack their creator. It is worth noting, however, that this has more to do with human psychology than with the actual nature of robots. What are the origins of the Frankenstein complex? It can be viewed as a superstitious, almost religious, feeling that the human creator has gone beyond what he or she should know and do. It's as if, by bringing an inanimate object to life in the form of man, they have somehow trespassed into God's realm.