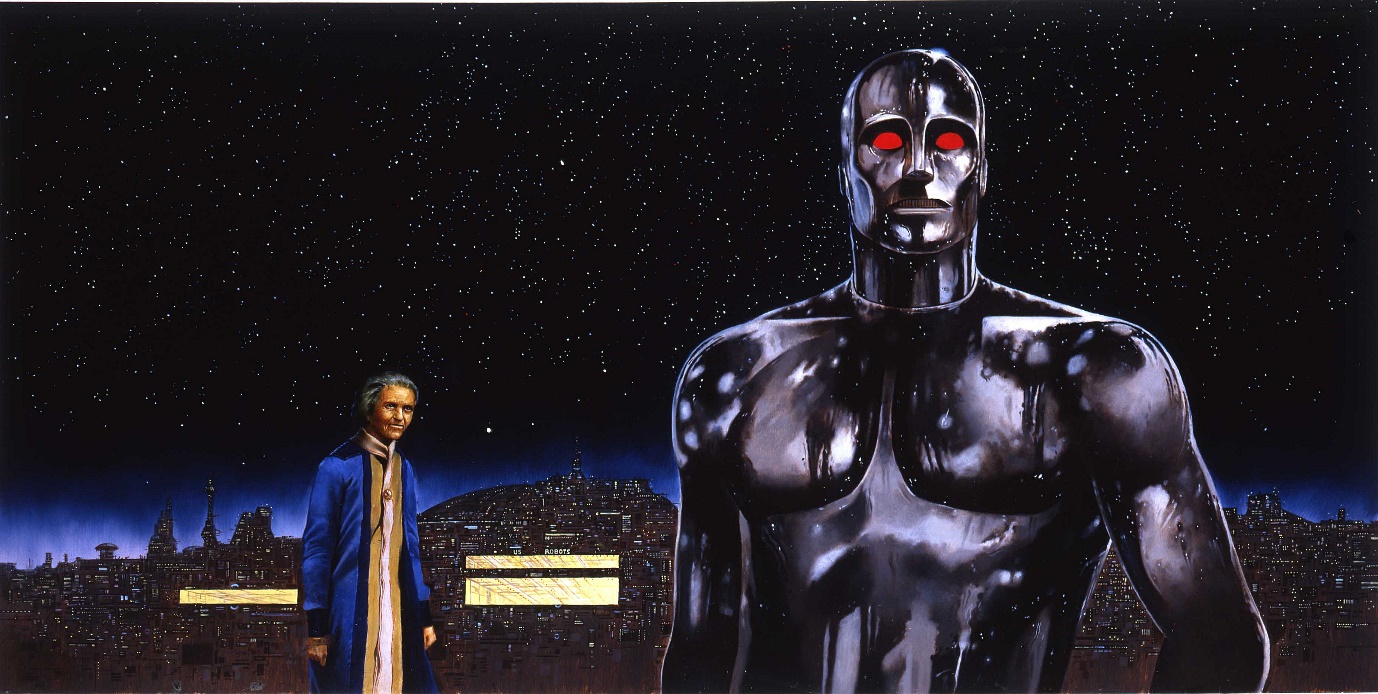

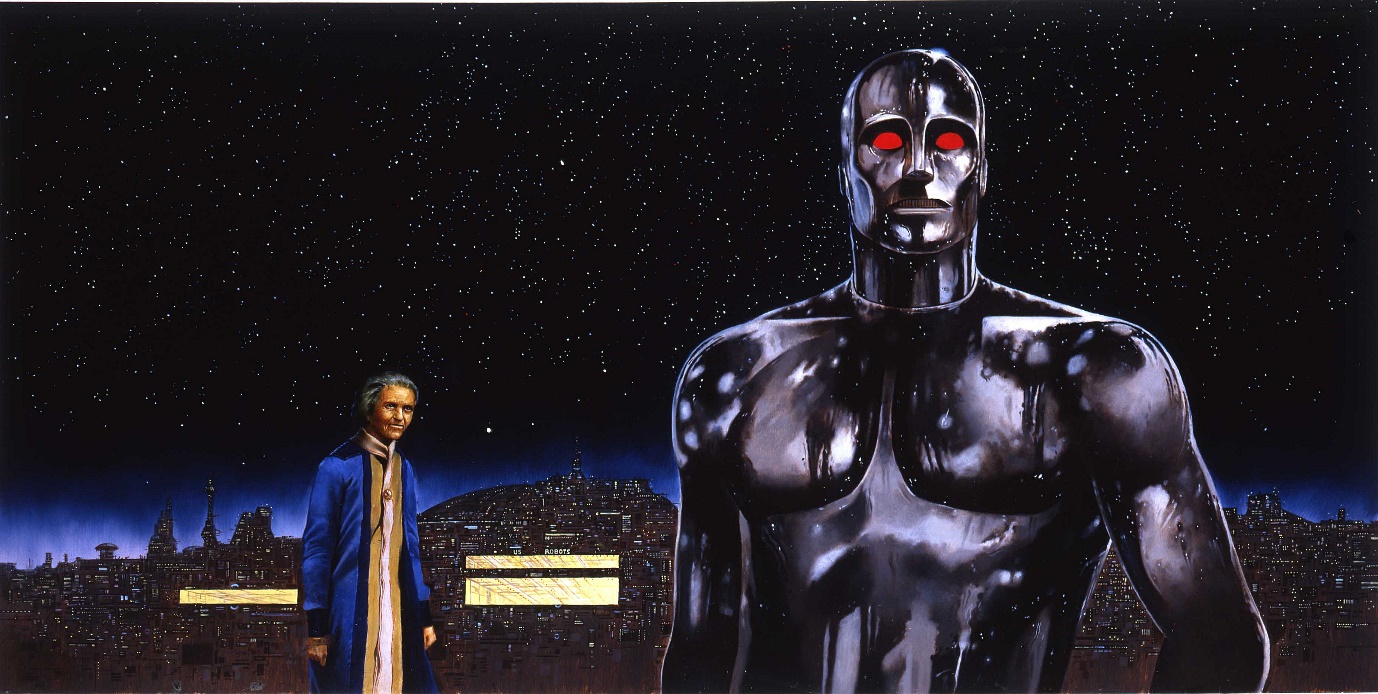

Dr Susan Calvin and one of Isaac Asimov’s fictional robots, in a book cover illustration by Les Edwards. (Illustration used with the permission of Les Edwards, www.lesedwards.com.)

Interestingly, in 1977 The Alan Parsons Project released an album that was originally intended to relate to Isaac Asimov’s classic I, Robot stories. Eric Woolfson actually spoke about it to Asimov, who was reportedly enthusiastic. However, the rights to the I, Robot stories had already been sold, so the comma was dropped from the album title and the record was adapted to a more general theme of human versus artificial intelligence. The title of the final track, ‘Genesis Ch. 1 v.32’, also makes a religious reference by implying that humans made robots in their own image, as a continuation of the story of Creation (the first chapter of Genesis has only 31 verses). The Frankenstein Complex, then, has more to do with ancient fears, and perhaps a natural fear of the unknown, than it has with advanced technology. For filmmakers, it also represents an opportunity to generate an emotional response in viewers, making them feel more involved and so increasing their interest in the movie. This may well explain why a dystopian robot future is so commonly portrayed by Hollywood, even in the film I, Robot. This is in contrast to Asimov’s book, which is quite positive about the future of robotics and portrays robots as useful and powerful tools.

Despite all these pessimistic views of the future, what reason do we have to suppose that robots possessing a degree of artificial intelligence would be hostile to humans? Precious little, I would say. Consider, for example, a future where they have some intelligence and self-awareness, but at a much lower level than that of humans. This is, I suggest, somewhat similar to the situation for animals such as dogs. Do dogs have a strong desire to kill humans? Surely not; in fact, they are known as ‘man’s best friend’! However limited their intelligence may be, it is sufficient to enable them to appreciate, at some level, that their welfare and that of humans are intertwined.

The other possibility is that robots come to possess much greater intelligence and mental function than humans. This is a difficult scenario to imagine, since mankind has far greater intelligence than any other beings of which he is aware or of which he has experience. Nevertheless, what advantage or benefit could such a robot obtain from attacking or destroying humans? Would not far greater intelligence also imply a higher level of ethical and moral awareness? Just as we judge that it is wrong to be cruel to, or to abuse, animals that are less developed intellectually than ourselves, surely a super-intelligent robot would feel similar empathy and responsibility towards mankind.