A plausible account of mindreading ought to provide satisfying answers to the following two sets of questions. First, what is the function of mindreading? What advantages does it confer, and why might it have evolved? Second, how could we tell whether a subject is mindreading? These two questions are closely related, for to answer either one, we must give an account of which behaviors we should expect from a mindreader that we would not expect from a subject that does not reason about the mental states of others. In addition, to answer either requires an investigation of alternative cognitive processes that a subject may be utilizing. Finally, both questions require us to say what mindreading is so that we can investigate what it does and where it can be found.

In this chapter, I will examine an influential account of mindreading, most prominently put forward by Andrew Whiten (1994, 1996, 2013), according to which mental state attributions serve as intervening variables: intermediary causal links between a subject’s beliefs about various observable cues, including others’ present behavior, and her subsequent predictions about how others will behave. Importantly, these variables can unify perceptually disparate cue-behavior links, yielding more flexible predictions in a variety of contexts. Thus, a mindreader’s social inferences in different contexts may be represented by a single, unified causal model containing an intervening mental-state variable. In contrast, it is claimed, a subject that reasons about contingencies among observables alone is best represented by distinct causal models for each predictive context.1

On this account, we can conceive of empirical investigations of mindreading as attempts to determine which causal model most accurately represents a subject; e.g. is it one that contains an intervening mental state variable or one that does not? To answer such questions, we can turn to the field of causal modeling, an increasingly dominant approach among statisticians, epistemologists, computer scientists, and psychologists. Causal modeling provides tools for inferring causal structure from observable correlations, including tools for revealing the presence and content of intervening variables. It can also shed light on which models are particularly apt for playing various epistemic roles. Thus, the intervening variable approach seems to suggest promising empirical avenues for testing mindreading in children and nonhuman animals.

In this chapter, I will first provide a brief description of the intervening variable approach and some straightforward applications of the causal modeling framework that it suggests. Then I will examine several significant obstacles for using causal models to think about mindreading; these raise questions about whether we can appropriately represent mindreading via causal models and, if so, how we could know which causal model best represents some actual subject’s cognitive process.

Any attempt to answer questions about the function of mindreading and how to test for it runs into an immediate problem. Suppose we have a subject A who makes the prediction that B will perform some behavior P in the presence of observable cues O. For example, suppose that chimpanzee A observes that dominant chimpanzee B is oriented toward a piece of food (O), predicts that B will punish A if A goes for the food (P), and as a result, A refrains from taking it.

The mindreading hypothesis (MRH) states that A made this behavioral choice by attributing some mental state, M, to B; for example, A believed that B could see the food and that if B could see the food, B would punish A for taking it. The natural alternative is the behavior-reading hypothesis (BRH), according to which A predicted the behavior of another on the basis of O alone; for example, A believed that B was oriented toward the food and that if B is oriented toward the food, B will punish A for taking it (Lurz 2009).2

Because the mental states of others are not directly observable, any act of mindreading would require A to first attend to various observable cues in order to infer the likely mental state of B3; in our example, A must know that the dominant is oriented toward the food and that this means that he can see it. How, then, could we know that our subject A made his prediction about B’s likely behavior on the basis of both O and M (as the MRH would have it) or O alone (as the BRH would have it)? Further, given that A was necessarily behavior-reading on either hypothesis, when “would it become valid to say that a non-verbal creature was reading behavior in a way which made it of real interest to say they were mind-reading?” (Whiten 1996, 279).4

This so-called “logical problem” has been used to cast doubt on experiments that have purported to demonstrate mindreading in nonhuman animals and has received considerable attention in the psychological and philosophical literatures (the problem is most forcefully presented by Povinelli and Vonk 2004, and Penn and Povinelli 2007; for a sampling of critical discussions, see Lurz 2009, Andrews 2005, Halina 2015, Clatterbuck 2016, and Chapters 21 and 22 in this volume by Lurz and Halina, respectively). Povinelli and Vonk (2004) describe the logical problem as follows:

The general difficulty is that the design of these tests necessarily presupposes that the subjects notice, attend to, and/or represent, precisely those observable aspects of the other agent that are being experimentally manipulated. Once this is properly understood, however, it must be conceded that the subject’s predictions about the other agent’s future behavior could be made either on the basis of a single step from knowledge about the contingent relationships between the relevant invariant features of the agent and the agent’s subsequent behavior, or on the basis of multiple steps from the invariant features, to the mental state, to the predicted behavior.

(8–9)

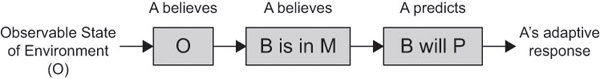

We can cast the problem in terms of the causal models that the MRH and BRH generate. An MRH model of A’s prediction will posit that some observable state, O, of the environment caused A to have a belief that O obtains. From this belief, A inferred that B was in mental state M, which in turn caused A to predict that B would perform some behavior P. Finally, this prediction caused some observable response from A (see Figure 23.1).

However, there is also a BRH model of A’s behavior, according to which A’s belief that O obtains directly caused A to predict that B would P (see Figure 23.2).

In these causal models, the beliefs of the subject are represented as variables that may take alternative states (e.g. A does or does not believe that O obtains), and the arrows between them denote causal relations. We can add a parameter to each arrow denoting the direction and strength of the causal relationship via the conditional probability of the effect given its cause (e.g. Pr[A believes that O | O is present]).

It is possible to assign parameters to the two models so that they fit our data equally well; that is, they make the same predictions about the probability that A will perform his adaptive response given the observable state of the environment.5 If this is the case, then observations of A’s behaviors will be evidentially neutral between the MRH and BRH. How, then, can we tell which of these models is true of A?6 Further, if A’s belief about O would, by itself, suffice for P, what function does the additional mental state attribution serve?
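The equal-fit point can be checked numerically. What follows is a minimal Python sketch. It assumes that every variable is binary and that each arrow carries two conditional probabilities, Pr(effect | cause present) and Pr(effect | cause absent). All numbers are hypothetical, and the helper names (`propagate`, `compose`, `chain_prob`) are illustrative only. The BRH's single belief-to-prediction parameter is obtained by summing out the MRH's mental-state variable. This is one simple way of securing equal fit, and it is not necessarily the assumption set discussed in note 5.

```python
from fractions import Fraction as F

def propagate(p_parent, cpt):
    """Pr(child = 1) from Pr(parent = 1) and cpt = (Pr(child=1 | parent=1), Pr(child=1 | parent=0))."""
    if_present, if_absent = cpt
    return p_parent * if_present + (1 - p_parent) * if_absent

def compose(first, second):
    """Collapse a chain X -> Y -> Z into one X -> Z arrow by summing over the states of Y."""
    return (propagate(first[0], second), propagate(first[1], second))

def chain_prob(cpts, o_present):
    """Pr(A's response = 1 | O) for a chain of binary variables starting at O."""
    p = F(1) if o_present else F(0)
    for cpt in cpts:
        p = propagate(p, cpt)
    return p

# Hypothetical parameters: one (present, absent) pair per arrow.
o_to_belief = (F(9, 10), F(1, 10))   # O -> A believes that O
belief_to_m = (F(4, 5), F(1, 5))     # A believes that O -> A attributes M to B
m_to_pred = (F(9, 10), F(0))         # attribution of M -> A predicts P
pred_to_resp = (F(1), F(0))          # prediction of P -> A's response

mrh = [o_to_belief, belief_to_m, m_to_pred, pred_to_resp]
brh = [o_to_belief, compose(belief_to_m, m_to_pred), pred_to_resp]

for o in (True, False):
    print(f"O present={o}: MRH {chain_prob(mrh, o)}, BRH {chain_prob(brh, o)}")
```

Both chains return 333/500 when O is present and 117/500 when it is absent. No record of A's responses to O could therefore favor one model over the other. The MRH chain simply uses more arrows, and hence more parameters, to make the same predictions.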

These problems arise when we compare single causal chain MRH and BRH models. However, Whiten’s key insight into this problem is that mental state attributions need not merely serve as intermediary links between a single observable cue and single behavioral prediction. Instead, a subject may posit mental states to explain what many different behaviors in different observable circumstances have in common, thereby uniting various known cue-behavior links as instances of a single mental state.

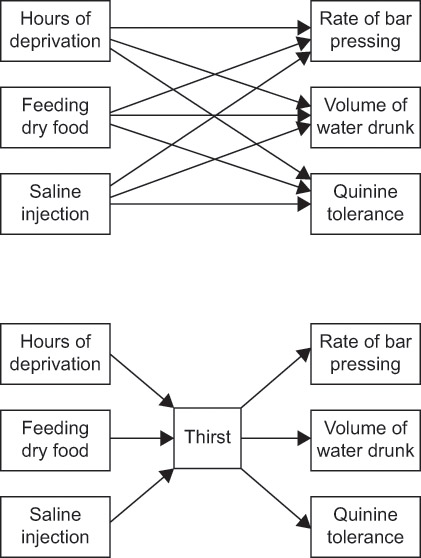

Consider the following example from Whiten (1996) of how we might attribute the mental state of “thirst.” A purely behavior-reading subject may represent separate contingencies between various observable cues (e.g. B is fed dry food) and behavioral consequences (e.g. B drinks a large volume of water). A mindreader, on the other hand, may notice a pattern in the data, given that diverse inputs are all leading to the same outputs, and thereby posit thirst as an intervening variable, “the value of which can be affected by any or all of the input variables, and having changed, can itself affect each of the outputs” (Whiten 1996, 284). The behavior-reader and mindreader can be depicted by the causal models shown in Figure 23.3.

If the arrows in each graph have positive parameter values, then according to either, the subject will predict that responses on the right will follow from inputs on the left (and again, given the parameters we assign, they might predict the very same conditional probabilities). What, then, would allow us to distinguish between the two models? And is there any advantage to being as described by one rather than the other?

Source: Reprinted from Whiten (1996, 286).

Whiten (1996, 2013) identifies two key differences between these two models. The first has to do with the predictions that a mindreader can make in novel observable contexts. A mindreader

codes another individual as being in a certain state such as ‘fearing’, ‘wanting’ or ‘knowing’ on the basis of a host of alternative indicator variables, and uses that information more efficiently to take actions that are apt for different adaptive outcomes in different circumstances than would be possible if the vast number of alternative pairwise links had to be learned.

(Whiten 2013, 217)

For example, consider a behavior-reader that knows the links between the first two inputs on the left-hand side of the model (hours of deprivation and dry food) and each of the three behavioral consequences on the right-hand side, and observes that a saline injection also leads to a high rate of bar pressing. If these stimuli are treated as distinct from the other two, the subject would still have to learn whether saline injections would also lead to, say, a high volume of water drunk. On the other hand, this observed contingency might suffice for a mindreader to link saline injections to the known “thirst” variable, in turn linking it to the known consequences of thirst without having to observe these contingencies.7
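The contrast can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a model taken from Whiten. The cue and behavior labels follow the example above, except that the third output is a placeholder label. The mindreader's rule for recruiting a new cue into the "thirst" variable is a hypothetical simplification: any cue observed to produce a known thirst output is treated as a thirst cue.

```python
KNOWN_INPUTS = ["hours of deprivation", "dry food"]
OUTPUTS = ["bar pressing", "water drunk", "other thirst behavior"]  # third label is a placeholder

# Behavior-reader: one stored link per (cue, behavior) pair.
behavior_links = {(cue, out) for cue in KNOWN_INPUTS for out in OUTPUTS}

# Mindreader: cues feed one intervening "thirst" variable, which feeds every output.
thirst_inputs = set(KNOWN_INPUTS)
thirst_outputs = set(OUTPUTS)

def behavior_reader_predicts(cue):
    """Only behaviors already paired with this exact cue are predicted."""
    return {out for (c, out) in behavior_links if c == cue}

def mindreader_predicts(cue):
    """Any cue linked to thirst predicts every known consequence of thirst."""
    return set(thirst_outputs) if cue in thirst_inputs else set()

# A single new observation: saline injection -> high rate of bar pressing.
new_cue, observed = "saline injection", "bar pressing"
behavior_links.add((new_cue, observed))
if observed in thirst_outputs:
    # Hypothetical recruitment rule (see lead-in).
    thirst_inputs.add(new_cue)

print(sorted(behavior_reader_predicts(new_cue)))
print(sorted(mindreader_predicts(new_cue)))
```

The behavior-reader predicts only bar pressing from the saline injection, while the mindreader also predicts the other two thirst behaviors. Those extra predictions are only as good as the recruitment rule. As note 7 indicates, whether this capacity is restricted to mindreaders is a further question.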

In addition to making past observed contingencies relevant to new cases, the introduction of an intervening variable also has a more syntactic effect on a causal model: it reduces the number of causal arrows the model contains (in Figure 23.3, from nine to six). For every new cue that is added to the behavior-reading model, separate arrows must be created to link it to each output variable. However, an intervening variable serves as an “informational bottleneck,” so a new cue requires just one additional arrow, from the cue to the intervening variable.
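The arrow arithmetic generalizes straightforwardly. The following rough sketch assumes n cue variables and m output variables and counts only the arrows on cue-to-output paths. Under those assumptions, a behavior-reading model needs n × m arrows, while a model with one intervening variable needs n + m. The function names are illustrative.

```python
def behavior_reading_arrows(n_cues, n_outputs):
    """Every cue needs its own arrow to every output."""
    return n_cues * n_outputs

def intervening_variable_arrows(n_cues, n_outputs):
    """Each cue feeds the intervening variable, which feeds each output."""
    return n_cues + n_outputs

for n_cues in range(1, 6):
    print(n_cues, behavior_reading_arrows(n_cues, 3), intervening_variable_arrows(n_cues, 3))
```

With three cues and three outputs, this gives the nine and six arrows of Figure 23.3. Each additional cue costs m arrows in the first model but only one in the second. The same formula also shows that the bottleneck saves arrows only when n × m exceeds n + m. With a single cue and three outputs, the intervening-variable model actually has one more arrow (four versus three), which is the single-chain situation in which the logical problem was first posed.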

Whiten argues that mindreading models are thus simpler and “more economical of representational resources” (Whiten 1996, 284). However, if mindreading’s function lies in the simplicity of the models it generates, then a seeming paradox arises. The best candidates for mindreading are large-brained primates and corvids, suggesting that the process of positing intervening variables to capture complex patterns requires significant neural and cognitive resources. Further, on Whiten’s conception, this process does not obviate the need to first recognize a complex system of cue-response links before the mindreading variable can be introduced. In what sense, then, are mindreading models simpler in a way that benefits their users?

An answer to this question is suggested by Sober’s (2009) application of model selection criteria to the case at hand. As noted above, it is possible to assign parameter values to the BRH and MRH models such that they will make similar predictions; that is, they can be made to fit the data (roughly) equally well. However, introducing an intervening variable reduces the number of causal arrows, and thus of adjustable parameters, required by a causal model. This has important epistemic consequences.

One of the central challenges of modeling is to strike the right balance between underfitting (failing to pick up on the “signal”) and overfitting one’s data (picking up on the “noise”). Models with more parameters typically allow for a closer fit to data but also run the risk of overfitting to noise; hence, it is well known among modelers that simpler models are often more predictively accurate (Bozdogan 1987, Forster and Sober 1994). Model selection criteria are attempts to formally describe this trade-off between fit and complexity (as measured by the number of parameters). Sober employs one such criterion,8 the Akaike Information Criterion (AIC), which has been proven, under certain conditions, to provide an unbiased estimate of a model’s predictive accuracy.9 AIC takes into consideration a model’s fit to data, Pr[data | L(M)], where L(M) is the likeliest member of model M (the member whose parameter values maximize the probability of the data). It then subtracts a penalty for the number of adjustable parameters, k:

AIC(M) = log Pr[data | L(M)] − k

Thus, models that fit the data roughly equally well can differ in their AIC scores. The model with more adjustable parameters receives the lower score and hence the lower estimated predictive accuracy.
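A small numerical sketch may help. It uses the form of AIC given above with natural logarithms. This is the more familiar −2 log-likelihood + 2k form rescaled by −1/2, so higher scores are better. The two models are deliberately generic and are chosen to show the fit-versus-complexity trade-off rather than to represent the MRH or BRH. One model gives each of four hypothetical test contexts its own success probability (k = 4); the other uses a single shared probability (k = 1). The counts are invented.

```python
import math

def bernoulli_max_loglik(successes, trials):
    """Natural-log likelihood of a success/failure sequence at the best-fitting
    p = successes / trials (binomial coefficients omitted; they are the same for both models)."""
    p = successes / trials
    ll = 0.0
    if successes:
        ll += successes * math.log(p)
    if trials - successes:
        ll += (trials - successes) * math.log(1 - p)
    return ll

def aic(max_loglik, k):
    """AIC in the form used in the text: log Pr[data | L(M)] - k."""
    return max_loglik - k

# Hypothetical (successes, trials) in four test contexts.
data = [(14, 20), (15, 20), (13, 20), (14, 20)]

# Model 1: a separate success probability for each context (k = 4).
ll_separate = sum(bernoulli_max_loglik(s, n) for s, n in data)
aic_separate = aic(ll_separate, k=len(data))

# Model 2: one success probability shared by all contexts (k = 1).
ll_shared = bernoulli_max_loglik(sum(s for s, _ in data), sum(n for _, n in data))
aic_shared = aic(ll_shared, k=1)

print(f"separate: log-lik {ll_separate:.3f}, AIC {aic_separate:.3f}")
print(f"shared:   log-lik {ll_shared:.3f}, AIC {aic_shared:.3f}")
```

The four-parameter model always fits at least as well, since its maximized log-likelihood is slightly higher. With data like these, however, that gain is far smaller than the model's extra penalty of three units, so the one-parameter model receives the higher AIC score.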

The upshot is that while the BRH and MRH models may fit the data equally well, the latter will often contain fewer adjustable parameters as a result of its intervening mental state variable. Therefore, a subject that uses such a mental model can be expected to make more accurate predictions.10 This buttresses Whiten’s contention that for a mindreader, “behavioral analysis can become efficient on any one occasion, facilitating fast and sophisticated tactics to be deployed in, for example, what has been described as political maneuvering in chimpanzees” (Whiten 1996, 287). This also demonstrates that even if the only function of mindreading were to systematize already-known observable regularities, it might still contribute significantly to prediction.

However, there are several problems with the suggestion that the distinctive function of postulating intervening mental state variables is in allowing their users to make the same predictions but faster and more accurately. First, this function is difficult to test for; what baseline speed or accuracy should we expect from a behavior-reader, in order that we may compare it to a mindreader? Second, we might wonder whether mindreading would be worth the investment if it did not provide genuinely new kinds of predictive abilities.11 Lastly, as we will soon see, there are also behavior-reading intervening variables that may serve the same syntactic role of simplifying models.

While model selection considerations shed light on the advantages of using a model containing intervening variables, they don’t provide a methodology for testing for them. However, other tools from causal modeling are more promising to this end.

According to the intervening variable approach, the predictions that mindreaders make in various contexts all have a common cause: a variable denoting the presence or absence of a mental state. In contrast, the predictions of behavior-readers across observationally disparate situations are independent of one another. For simplicity, consider the two models shown in Figure 23.4, which represent predictions made in two observable contexts, O1 and O2. In each model, two variables are directly causally connected if and only if an arrow links them.

For example, O1 might be a context in which a dominant chimp is oriented toward a piece of food and O2 might be one in which the dominant is within earshot of a piece of food (where this is an observable property, such as distance) that would be noisy to obtain (as in the experiments of Melis et al. 2006). Each of these states is associated with the dominant punishing the subordinate for taking the food (P1), going for the food himself (P2), and so on. A mindreading chimp represents both of these situations as one in which the dominant is in some mental state M, such as perceiving the subordinate taking the food or knowing where the food is. A behavior-reading chimp represents them via distinct learned contingencies.

Each of these models predicts that A will exhibit adaptive responses in both observable contexts. However, they make different higher-order predictions about the correlations between those behaviors.12 This follows from the Causal Markov Condition (CMC), a fundamental assumption of most prominent causal-modeling approaches. Informally, it says that once we know the states of a variable’s direct causes (its “parents”), that variable will be independent of all other variables in the graph except the variables that it causes (its “descendants”). Slightly less informally, if there is a causal arrow from X to Y (and no other arrows into Y), then conditional on the state of X, the state of Y is independent of every other variable in the graph except any variable Z for which there is a directed causal path from Y to Z.13

Applied to the BRH model in Figure 23.4, the CMC states that once we take into account the observable states of the environment, A’s belief that O1 obtains will be independent of all other variables in the graph except those that it causes. Thus, it will be independent of A’s beliefs and behaviors in context 2 (the variables in the bottom row). A behavior-reader’s responses in the two situations will therefore be probabilistically independent once we have conditioned on the observable state of the environment. That is, a behavior-reader who performs an adaptive response in context 1 will be no more likely to do so in context 2 (or vice versa).

This is not true of the MRH, however. If we merely condition on the observable states of the environment, we have not yet accounted for a common cause of the adaptive responses in both contexts. Because the belief about B’s mental state is a more proximate cause of those behaviors and we expect that the effects of a common cause will be correlated,14 we should expect that a mindreader’s behaviors in the two contexts will be probabilistically dependent, even once we have conditioned on the observable states of the environments.
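The contrast can be illustrated with a small Monte Carlo simulation, which is a sketch under hypothetical assumptions. A population of subjects each faces both contexts with the observable cues present, so the observables are held fixed (i.e., conditioned on). A behavior-reader has independently acquired links for the two contexts. A mindreader has a single mental-state attribution that drives both responses. Responses are noisy given the link or attribution, and all probabilities and names are invented for illustration.

```python
import random

def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    sx = (sum((x - mx) ** 2 for x in xs) / n) ** 0.5
    sy = (sum((y - my) ** 2 for y in ys) / n) ** 0.5
    return cov / (sx * sy)

P_HAS = 0.6                        # hypothetical: chance a subject has the link / attribution
P_RESP = {True: 0.9, False: 0.2}   # hypothetical: Pr(adaptive response | has it / lacks it)

def brh_subject(rng):
    # Separate, independently acquired links for context 1 and context 2.
    link1, link2 = rng.random() < P_HAS, rng.random() < P_HAS
    return rng.random() < P_RESP[link1], rng.random() < P_RESP[link2]

def mrh_subject(rng):
    # One mental-state attribution M is a common cause of both responses.
    m = rng.random() < P_HAS
    return rng.random() < P_RESP[m], rng.random() < P_RESP[m]

def simulate(subject, n=20000, seed=0):
    """Correlation between context-1 and context-2 responses across n simulated subjects."""
    rng = random.Random(seed)
    pairs = [subject(rng) for _ in range(n)]
    return pearson([int(a) for a, _ in pairs], [int(b) for _, b in pairs])

brh_r = simulate(brh_subject)
mrh_r = simulate(mrh_subject)
print(f"BRH r = {brh_r:.3f}, MRH r = {mrh_r:.3f}")
```

With these numbers, the population correlation is 0 for the BRH. For the MRH it is about 0.5: the covariance 0.6 × 0.4 × 0.7² = 0.1176 divided by the variance 0.62 × 0.38 = 0.2356. The simulated estimates should land close to these values. The MRH's predicted dependence relies on the responses actually varying with M, which is the Faithfulness assumption of note 14.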

In theory, then, we can experimentally distinguish between these two models by testing whether a chimp that performs the successful behavior in one experiment is more likely to perform the successful behavior in the other. In our working example, suppose that chimps who successfully avoided the food the dominant was oriented toward were no more likely to avoid the food the dominant could hear. This would be good evidence that they were reasoning via separate behavioral contingencies rather than an intervening mental state attribution.15
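At the level of data analysis, the proposed test asks whether individual successes on the two tasks are associated. One standard way to test this on a small sample is a one-sided Fisher exact test on the 2 × 2 table of per-subject outcomes. The chapter does not prescribe a particular test, so this choice is an assumption, as are the helper name and the data. The counts are hypothetical and are not from Melis et al. (2006).

```python
from fractions import Fraction
from math import comb

def fisher_one_sided(a, b, c, d):
    """One-sided Fisher exact p-value for positive association in the table
    [[a, b], [c, d]] (rows: task 1 success/failure; columns: task 2 success/failure)."""
    row1, col1, n = a + b, a + c, a + b + c + d
    # Sum the hypergeometric probabilities of all tables with the observed margins
    # in which at least `a` subjects succeed on both tasks.
    tail = sum(comb(row1, x) * comb(n - row1, col1 - x)
               for x in range(a, min(row1, col1) + 1))
    return Fraction(tail, comb(n, col1))

# Hypothetical per-subject outcomes: (success on task 1, success on task 2).
subjects = [(1, 1)] * 4 + [(1, 0)] + [(0, 1)] + [(0, 0)] * 4
a = subjects.count((1, 1))
b = subjects.count((1, 0))
c = subjects.count((0, 1))
d = subjects.count((0, 0))
p = fisher_one_sided(a, b, c, d)
print(f"table [[{a}, {b}], [{c}, {d}]], one-sided p = {p} (about {float(p):.3f})")
```

In this pattern, 8 of 10 subjects either succeed on both tasks or fail on both. Even so, the test yields p = 13/126, roughly 0.10, which illustrates how many subjects such an individual-level test may require.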

Let us grant that independence in behaviors across context is evidence against mindreading.16 Is the converse true? Does a correlation in behaviors indicate that a subject was indeed mindreading? Unfortunately, the inference in this direction is far more fraught and has opened the intervening variable approach to significant objections.

The central predictive difference between the MRH and BRH – the presence or absence of a correlation in behaviors, conditional on observable contexts – depends on the assumption that an intervening mental state attribution variable is the only thing that could impose such a correlation. However, there are other causal connections among mental representations in different contexts that could also lead to correlated behaviors, and unless these are controlled for, the intervening variable approach will have difficulty establishing the presence of mindreading.

The first class of alternative behavior-reading explanations for a correlation in behaviors across observable contexts posits that while there is no intervening variable in use, there may be other causal links between the observable states or the subject’s representations of them (see Figure 23.5).

Heyes (2015) suggests two mechanisms through which this could occur. First, if the O1 stimulus is perceptually similar to the O2 stimulus, stimulus generalization may cause a subject to treat the O2 context as if it were O1 (the second dashed arrow in Figure 23.5). Second, situations in which O1 is present might typically be ones in which O2 is also present; for instance, situations in which a dominant is oriented toward food might also be ones in which he is within earshot of food (the first dashed arrow in Figure 23.5). In this case, mediated conditioning can cause the stimuli to “become associated with each other such that presentation of one of these stimuli would activate representations of them both, and thereby allow ‘pairwise’ learning involving the stimulus that was not physically present” (Heyes 2015, 319).

Still other confounds may be present. There may be correlations between the adaptive responses that are called for in each situation, such that a subject who produces one often produces the other. Lastly, for reasons of development or subjects’ prior experience, a subject who possesses the top pairwise link may be more likely to possess the other (for example, perhaps only particularly astute or experienced chimps will pick up on both).
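The last of these confounds can be made concrete with another hypothetical simulation. Here a subject-level factor, labeled "experienced" for illustration, raises the chance of acquiring each pairwise link. The two links are still learned separately, and there is no mental-state variable. All numbers and names are invented.

```python
import random

def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    sx = (sum((x - mx) ** 2 for x in xs) / n) ** 0.5
    sy = (sum((y - my) ** 2 for y in ys) / n) ** 0.5
    return cov / (sx * sy)

def confounded_subject(rng):
    # Hypothetical confound: experience raises the chance of learning each link.
    experienced = rng.random() < 0.5
    p_link = 0.9 if experienced else 0.3
    # The two links are learned separately; only experience connects them.
    link1 = int(rng.random() < p_link)
    link2 = int(rng.random() < p_link)
    return experienced, link1, link2

rng = random.Random(1)
subjects = [confounded_subject(rng) for _ in range(20000)]
r_all = pearson([s[1] for s in subjects], [s[2] for s in subjects])
r_within = {
    flag: pearson([s[1] for s in subjects if s[0] == flag],
                  [s[2] for s in subjects if s[0] == flag])
    for flag in (True, False)
}
print(f"overall r = {r_all:.3f}; within experience groups: {r_within}")
```

Pooled over subjects, the two links are correlated (about 0.375 in the population with these numbers), which mimics the MRH signature. Within each experience group, the correlation vanishes. When the confound can be measured, conditioning on it is one form of the control considered in the next paragraph.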

Controlling for these possibilities is indeed a central methodological concern among mindreading researchers, and we might hope that good experimental design could solve such problems.17 However, there is another alternative behavior-reading hypothesis that poses a somewhat more vexing challenge (see Figure 23.6).

The problem here is that a behavior-reader may also use an intervening representation that unites both observable contexts. This variable denotes some observable generality (OG) of which both O1 and O2 are instances; this may be some single feature that both share, a common prototype that is used to represent both,18 or some other perceptually based category that nevertheless seems to fall short of a genuine mental state attribution. For example, in response to experiments that purport to show that chimpanzees know what others see, skeptics of the MRH have argued that chimpanzees could solve these tasks by representing various experimental contexts as ones in which the dominant had a “line of sight” to the food, where this is an observable, geometric property of the environment (Lurz 2009; Kaminski et al. 2008).

The logical problem re-emerges, then, at the level of intervening variables. Given that an intervening behavior-reading variable will also impose a correlation in behaviors across contexts, this simple test will not suffice to distinguish between that model and the MRH. Additionally, if such variables are often available as heuristic stand-ins for mental state attributions, what is the unique function of “genuine” mindreading?19
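The point can be put computationally. A correlation test sees only the joint distribution of responses, and that distribution depends on the graph's structure and parameters, not on what the intervening variable is taken to represent. The sketch below assumes binary variables, hypothetical parameter values, and responses that are independent given the intervening variable. It computes the exact joint distribution for one common-cause structure. Reading the latent node as M or as OG changes nothing in the computation.

```python
from fractions import Fraction as F
from itertools import product

def common_cause_joint(p_latent, p_resp):
    """Exact Pr(R1, R2) when one binary intervening variable Z causes both responses.
    p_resp[z] is Pr(response = 1 | Z = z); R1 and R2 are independent given Z."""
    joint = {}
    for r1, r2 in product((0, 1), repeat=2):
        total = F(0)
        for z, pz in ((1, p_latent), (0, 1 - p_latent)):
            q = p_resp[z]
            total += pz * (q if r1 else 1 - q) * (q if r2 else 1 - q)
        joint[(r1, r2)] = total
    return joint

def covariance(joint):
    """Cov(R1, R2) = Pr(R1=1, R2=1) - Pr(R1=1) * Pr(R2=1)."""
    p1 = joint[(1, 0)] + joint[(1, 1)]
    p2 = joint[(0, 1)] + joint[(1, 1)]
    return joint[(1, 1)] - p1 * p2

# Nothing in the arguments says whether Z means "B sees the food" (M)
# or "B has a line of sight to the food" (OG).
joint = common_cause_joint(F(3, 5), {1: F(9, 10), 0: F(1, 5)})
print(covariance(joint))  # 147/1250
```

Any positive covariance produced this way is equally well explained by an M node or by an OG node with the same parameters. This is why the purely syntactic test needs the semantic supplementation discussed in what follows.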

The methodological problems raised above arose from the fact that we were testing for a merely syntactic property of models (whether effects were correlated). Because MRH and BRH models with intervening variables have the same syntactic properties, this test will not distinguish between them. What is needed, then, is an approach that is sensitive to the semantic differences between mental state attributions, on the one hand, and representations of their perceptual analogs, on the other.

A few avenues for resolving this problem suggest themselves. First, we might insist that there is no real semantic difference between “genuine” mindreading variables and observable-level variables that unite particularly complex and sophisticated webs of observable contexts.20 Alternatively, we might insist that there is a real difference and test for whether a subject makes novel predictions (that is, beyond the known cue-behavior links to which the intervening variable was introduced) that are instances of a mental regularity but not a corresponding observable-level regularity, or vice versa; however, doing so will involve an in-depth examination of the semantics of mental state attributions (Clatterbuck 2016, Heyes 2015). Finally, we might abandon the idea that there is some bright line distinguishing mindreading and behavior-reading at any single point in time and instead focus on subjects’ learning trajectories over time, evaluating “candidate mental state representations by seeing whether the animal can revise them to be sensitive to new and additional sources of evidence for the target mental state” (Buckner 2014, 580).

We might have hoped that the intervening variable approach could offer a quick fix to some of the empirical and theoretical problems that beset investigations of mindreading: if mindreading models have a different causal structure than behavior-reading models, then simple syntactic tools might easily distinguish between them. However, as the foregoing discussion shows, there are many factors that complicate this picture and demand both careful experimental controls for confounding variables and a renewed focus on the semantic differences between mindreading and other types of social cognition.

1 This assumption will be called into question in what follows.

2 See Butterfill (Chapter 25 of this volume) for a discussion of how to formulate a “pure” BRH and minimal mindreading alternatives that do not attribute full-fledged representations of mental states.

3 Characterizing this as an inference may be tendentious, but I will continue to do so for ease of exposition. Readers who prefer may read “inference” as “The representation of O causes the representation of M” or “The O variable causes the M variable.”

4 Here, I will focus more on the epistemological question and less on the semantic question of what counts as mindreading, though of course these are intimately related. For a discussion of a “logical problem” with respect to the latter, see Buckner (2014).

5 One has to make certain assumptions to ensure that the MRH and BRH have equal fit to data (Penn and Povinelli 2007, Sober 2009, Clatterbuck 2015).

6 As presented by Povinelli and Vonk (2004) and Penn and Povinelli (2007), the logical problem has two parts. The first is that for any MRH model like this, there will always be a BRH model that fits the data equally well. The second part states that this BRH model will be more parsimonious, in virtue of its attributing fewer beliefs to A, and therefore ought to be favored on those grounds. I will not address this second claim here. See Fitzpatrick (2009) and Sober (2015) for more general discussions of parsimony in the debate over mindreading.

7 Whether this capacity is restricted to mindreaders will be discussed in the next section.

8 For an overview of various model selection criteria, see Zucchini (2000).

9 “By predictive accuracy of a model M we mean how well on average M will do when it is fitted to old data and the fitted model is then used to predict new” (Sober 2009, 84).

10 Sober (2009) uses model selection considerations to show that we can more accurately predict subjects’ behaviors via MRH models and that this gives us some reason to believe that those models are true. Clatterbuck (2015) argues that this argument is problematically instrumentalist, and instead, we should use Sober’s insight to shed light on the advantage of possessing an MRH model, not merely being described by it.

11 See Andrews (2005, 2012) for a defense of the view that the distinctive function of mindreading is not to predict behavior but rather to explain and/or normatively assess behavior.

12 See Sober (1998) for a comprehensive, and perhaps the first, application of this technique to the mindreading debate, and Sober (2015) for an elaboration of the technique.

13 I am glossing over a great many technical details of the CMC which vary somewhat relative to the formal frameworks in which it is used. See Hausman and Woodward (1999), Spirtes et al. (2000), and Pearl (2000). For a helpful introduction, see Hitchcock (2012).

14 This will only be true, in general, if we assume Faithfulness. See Spirtes et al. (2000, Ch. 3).

15 In the Melis et al. (2006) work that inspired this example, it was shown that chimps more often than not chose to pursue food in ways that their competitor could not see, in one task, and in ways their competitor could not hear, in another. The authors took this to be some evidence that chimps may understand what others perceive, in such a way that is not limited to a particular sensory modality. Notably, the experimenters only tested for whether their chimps, in the aggregate, succeeded above chance levels in each of the two experiments. They did not test for whether individual performances were correlated on the two tasks.

16 A problem here is that a mindreader could use separate mental state attributions in each context, in which case, her subsequent behaviors would still be independent.

17 For a good example, see Taylor et al. (2009). For a discussion of how to control for developmental confounds, see Gopnik and Meltzoff (1997).

18 For a discussion of how different types of mental categorizations, such as prototypes or exemplars, can be represented using directed acyclic graphs, see Danks (2014).

19 For a presentation and critical examination of an argument for why such observable-level variables will always be available, see Clatterbuck (2016).

20 Whiten suggests that one can count as a mentalist if one uses a single variable which represents another as being in a certain state, even if one doesn’t have a fully fledged concept of beliefs as being “in the heads” or as being internal causes of their behaviors (1996, 286).

For a comprehensive examination of the various roles that parsimony plays in causal inference and its relation to the animal mindreading debate, see E. Sober, Ockham’s Razors (Cambridge: Cambridge University Press, 2015). D. Danks, Unifying the Mind: Cognitive Representations as Graphical Models (Cambridge, MA: MIT Press, 2014) presents a unified framework for thinking about many types of mental representations and activities in terms of causal models, as well as a helpful and accessible introduction to the technical details of various causal modeling frameworks.

Andrews, K. (2005). Chimpanzee theory of mind: Looking in all the wrong places? Mind & Language, 20(5), 521–536.

——— (2012). Do apes read minds? Toward a new folk psychology. Cambridge, MA: MIT Press.

Bozdogan, H. (1987). Model selection and Akaike’s information criterion (AIC): The general theory and its analytical extensions. Psychometrika, 52(3), 345–370.

Buckner, C. (2014). The semantic problem(s) with research on animal mindreading. Mind & Language, 29(5), 566–589.

Clatterbuck, H. (2015). Chimpanzee mindreading and the value of parsimonious mental models. Mind & Language, 30(4), 414–436.

——— (2016). The logical problem and the theoretician’s dilemma. Philosophy and Phenomenological Research. DOI:10.1111/phpr.12331

Danks, D. (2014). Unifying the mind: Cognitive representations as graphical models. Cambridge, MA: MIT Press.

Fitzpatrick, S. (2009). The primate mindreading controversy: A case study in simplicity and methodology in animal psychology. In R. Lurz (Ed.), The Philosophy of Animal Minds (pp. 258–277). Cambridge: Cambridge University Press.

Forster, M., and Sober, E. (1994). How to tell when simpler, more unified, or less ad hoc theories will provide more accurate predictions. The British Journal for the Philosophy of Science, 45(1), 1–35.

Gopnik, A., and Meltzoff, A. (1997). Words, thoughts, and theories. Cambridge, MA: MIT Press.

Halina, M. (2015). There is no special problem of mindreading in nonhuman animals. Philosophy of Science, 82, 473–490.

Hausman, D., and Woodward, J. (1999). Independence, invariance, and the causal Markov condition. British Journal for the Philosophy of Science, 50, 1–63.

Heyes, C. M. (2015). Animal mindreading: What’s the problem? Psychonomic Bulletin and Review, 22(2), 313–327.

Heyes, C., and Frith, C. (2014). The cultural evolution of mind reading. Science, 344(6190), 1357–1363.

Hitchcock, C. (2012). Probabilistic causation. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy (Winter 2012 Edition), http://plato.stanford.edu/archives/win2012/entries/causation-probabilistic/

Kaminski, J., Call, J., and Tomasello, M. (2008). Chimpanzees know what others know, but not what they believe. Cognition, 109, 224–234.

Lurz, R. (2009). If chimpanzees are mindreaders, could behavioral science tell? Toward a solution to the logical problem. Philosophical Psychology, 22, 305–328.

Meketa, I. (2014). A critique of the principle of cognitive simplicity in comparative cognition. Biology & Philosophy, 29(5), 731–745.

Melis, A. P., Call, J., and Tomasello, M. (2006). Chimpanzees (Pan troglodytes) conceal visual and auditory information from others. Journal of Comparative Psychology, 120(2), 154.

Pearl, J. (2000). Causality: Models, reasoning, and inference. Cambridge: Cambridge University Press.

Penn, D. C., and Povinelli, D. J. (2007). On the lack of evidence that non-human animals possess anything remotely resembling a ‘theory of mind’. Philosophical Transactions of the Royal Society of London B: Biological Sciences, 362(1480), 731–744.

Povinelli, D. J., and Vonk, J. (2003). Chimpanzee minds: Suspiciously human? Trends in Cognitive Sciences, 7(4), 157–160.

——— (2004). We don’t need a microscope to explore the chimpanzee’s mind. Mind & Language, 19(1), 1–28.

Sober, E. (1998). Black box inference: When should intervening variables be postulated? The British Journal for the Philosophy of Science, 49(3), 469–498.

——— (2009). Parsimony and models of animal minds. In R. Lurz (Ed.), The Philosophy of Animal Minds. Cambridge: Cambridge University Press.

——— (2015). Ockham’s razors. Cambridge: Cambridge University Press.

Spirtes, P., Glymour, C., and Scheines, R. (2000). Causation, prediction, and search (2nd ed.). Cambridge, MA: MIT Press.

Taylor, A. H., Hunt, G. R., Medina, F. S., and Gray, R. D. (2009). Do New Caledonian crows solve physical problems through causal reasoning? Proceedings of the Royal Society B, 276, 247–254.

Whiten, A. (1994). Grades of mindreading. In C. Lewis and P. Mitchell (Eds.), Children’s early understanding of mind (pp. 277–292). Cambridge: Cambridge University Press.

——— (1996). When does smart behavior-reading become mind-reading? In P. Carruthers and P. Smith (Eds.), Theories of theories of mind (pp. 277–292). Cambridge: Cambridge University Press.

——— (2013). Humans are not alone in computing how others see the world. Animal Behaviour, 86(2), 213–221.

Zucchini, W. (2000). An introduction to model selection. Journal of Mathematical Psychology, 44, 41–61.