I want to become a mother with the paternity unknown.

—Pauline Roland, early feminist, 1832*1

The single individual who best epitomized the dramatic ascent of rationality in a time shot through with madness was Isaac Newton (1642-1727). His scientific discoveries convinced a majority of educated Europeans that the universe consisted of quantifiable objects and measurable forces obeying immutable laws. The left brain’s faculties of reason and mathematical skills were crucial in discovering and proving these laws.

Economists, philosophers, and political theorists soon grafted Newton’s natural law onto all aspects of life. The late eighteenth century’s Enlightenment, a culmination of triumphs for the left hemisphere, celebrated in advance the inevitable taming of both wild nature and irrational behavior. These, many thinkers believed, would inevitably be brought to heel by the sustained application of linear thinking. These same thinkers deemed anything that could not be comprehended by reason to be “other,” by which they meant it was secondary, insignificant, not namable, less than real.

For many men, women fell into the category of “other.” “Natural law” reinforced their conviction that they were “naturally” superior to women. Using “irrefutable” logic, they “proved” beyond doubt that the male was the standard and the female a defective version of him.† Women’s rights and the attributes associated with the right brain suffered accordingly. Thus, European civilization passed from a patriarchal society based on laws handed down three thousand years earlier by a male deity into a new version of patriarchy founded on “natural laws” discovered by male scientists.

Each successive scientific discovery persuaded more people that science was a credible belief system. Unfortunately, the faithful arrived at the joyless conclusion that the world was devoid of spontaneity. Newton and the scientists who followed him described a world in which every effect was due to a previous cause. The Age of Miracles was officially over, replaced by a new age and a new metaphor: that of the Majestic Clockwork. A dispassionate Creator fashioned it, wound it, set it ticking and then withdrew to become a non-participant in both the daily affairs of humans and the operation of the clock. This spiritual black hole was dubbed “scientific determinism.”

Newton’s mechanics imprisoned Free Will by proving that planets and billiard balls must follow the laws of mass and motion. If they seemed to deviate, it was only because the scientist did not yet know all the variables. There was no such thing as luck, chance, or the unexpected. Voltaire put it this way: “It would be very singular that all nature and all the planets should obey eternal laws, and that there should be a puny animal, five feet high, who, in contempt of these laws, could act as he pleased.”2

Later, in 1859, the left brain received what could be seen as its ultimate scientific validation. Charles Darwin published On the Origin of Species. Darwin’s view reduced the vaunted Homo sapiens from the pinnacle of creation to just one species among many. This revolutionary displacement of Man challenged the Bible’s claim that God had given Adam dominion over the animal kingdom.

Women should have, but did not, benefit from this demotion of Adam. The social philosophers of the day pounced on the phrase “survival of the fittest,” coined by Herbert Spencer. Spencer postulated that the havoc and mayhem of war were necessary forces that periodically pruned the human species of deadwood. Had not Darwin explained that natural selection required strife in order for the alpha male (not uncommonly the strongest and most aggressive) to rise above the pack? In a dog-eat-dog world, love, nurturance, and cooperation were perceived as signs of weakness. Henceforth, the Robber Baron could turn to natural law to justify his crass grasp for power. But this is getting ahead of the story. Earlier, there was another social change that profoundly affected the relationship between the sexes.

The Enlightenment and the scientific discoveries that underpinned it paved the way for a far greater drama: the Industrial Revolution. This event had a profound effect on human relationships, comparable in impact only to the changes previously wrought by the development of agriculture and writing. Two key factors precipitated the Machine Age: the depletion of Europe’s forests, and human inventiveness. By the mid-1700s, swarming humankind, thick as termites, had chewed its timber stock to stumps. The dense canopy of trees that had once shaded Europe had been relentlessly clear-cut. Firewood, the fuel of preference, became increasingly expensive as axemen had to venture ever farther from the king’s hearth to find virgin stands to fell. It was the first energy crisis since the discovery of fire 750,000 years earlier. One can just imagine the scene repeated from Bavaria to England: court ministers tentatively showing His Majesty a lump of sooty, black rock, then apologetically explaining that although coal did not burn with the clean snap and crackle of fire logs, and tunnels had to be dug in the earth to get at it, coal would keep the populace warm in winter and cook their food.

Once coal, a cheap, seemingly inexhaustible source of energy, had been identified, human inventiveness came into play. Together they were a momentous combination. Scientific discoveries bubbled forth from one laboratory after another. By 1725, science had surpassed organized religion as the chief influence shaping European and American culture. In the early nineteenth century, scientists discovered the secrets of heat energy, which led to the invention of the steam engine, which in turn gave new meaning to the word power.*

The Industrial Revolution aggressively increased the sum total of tangible wealth and made possible many advances unimaginable in the preceding century. One technological marvel after another contributed to a rapidly rising standard of living. But these innovations came with costs. The exploitation of children and a widening disparity between the rights and prerogatives of the sexes were just two of them.

As the eighteenth century ticked to a close, no one had the slightest inkling of the titanic force that was about to crash into his or her life. And after the debris from the Industrial Revolution had been swept away, society would be unrecognizable from what it had been. Whole populations migrated from farms to mills, and as the population of cities soared, their inadequate infrastructures groaned under the weight. Family bonds splintered as former farmers disappeared into mines and factories, and mothers labored endless hours in sweatshops. Owning the means of production began to supplant owning land as the premier source of wealth.

The new era reeked of male sweat and engine oil. The left brain was boss. The Industrial Revolution was a combination of science, brawn, finance, mathematics, and competition, and it was pursued without much concern for its effects on family life or community. The dark clouds of soot belching forth from factory smokestacks and the unearthly glow of slag heaps lit by Bessemer furnaces were considered by entrepreneurs as proud symbols of progress. These men raped Mother Nature with nary a concern for the future, ignoring the lesson of the clear-cut tree trunks.

Inspired by a small number of women who made their mark as authors, nineteenth-century women began to pull themselves up by their own bootlaces. Literacy rates for women had risen steeply. The brilliance of accomplished literate women began to subvert the notion that women were intellectually inferior. Women increasingly contributed to the culture’s literature and, in a few cases, to its science.

Fiction and poetry writers create images with words through the use of similes, metaphors, description, and analogies and magically illuminate a scene or action in the reader’s mind. There was a blossoming of literary luminaries, including Jane Austen, Louisa May Alcott, Elizabeth Barrett, George Sand, the Brontë sisters, Germaine de Staël, and Mary Shelley. London in the 1830s was a literary mecca, and a third of its published writers were women.3 The literary scene in Paris was supported by many women both as writers and readers.

In the late eighteenth and early nineteenth centuries, intellectuals fissured into two opposing camps. Voltaire, Diderot, Kant, Hume, and Locke contributed to the intellectual heft of the times as the Age of Reason’s linear thinkers segued effortlessly into the Enlightenment. Their views on matters of importance generated a strong reaction from those representing the right brain, whose champions initiated the Romantic movement. Rousseau, Keats, Byron, Goethe, and Shelley, repulsed by the Industrial Revolution’s heavy-handed abuse of the earth’s resources, extolled love, nature, and beauty. Enlightenment enthusiasts claimed reason was superior to emotion; to the Romantics, feelings were the surer guide to truth.

To the Enlightenment crowd, the Romantics seemed to be tilting at windmills. The ear-splitting, smoke-spewing Industrial Revolution and the left-brained values it represented appeared as unstoppable as a barreling locomotive. But two innovations provided a major assist to the values of the right brain: the invention of photography and the discovery of the electromagnetic field. They appeared in culture simultaneously, and together they would eventually reconfigure every aspect of human interaction. Photography and electromagnetism elevated the importance of images at the expense of written words, and in so doing began to bring balance back to the left-brain-leaning European-language-speaking peoples. Before we examine how this came about, a telling of two stories is in order.

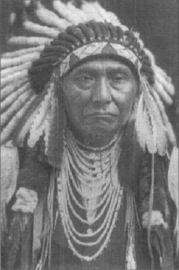

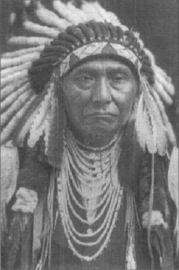

Leonardo da Vinci had described the principle of the camera obscura in the Renaissance. In 1837, Jacques Mandé Daguerre developed a technique of “fixing” images on metal and he named his invention “daguerreotypes.” Daguerre’s innovation became a household word almost overnight. With a flash of magnesium and the click of a shutter, the camera recorded a slice of visual space, preserving one moment out of the linear sequence of time. Photography can be read as meaning “writing with light.” Opposite in nearly every respect from writing with ink, photography illuminated Europe like a bolt of lightning. It seemed that by the second half of the nineteenth century just about everyone had sat for his or her photograph at least once. Old photographs of American Indians who sometimes traveled hundreds of miles just to have their likenesses preserved in silver nitrate salts attest to the daguerreotype’s seductive draw.

Philosopher José Argüelles observed, “Photography is one of those technical devices which has so drastically altered our senses and upon which we have developed such a profound dependence that it is difficult, indeed impossible, for us to think about it with any degree of detachment.”4 The right brain’s face-pattern-comprehension abilities received a major boost as people became enthralled with photographs. Virtually all people were aware that photography depended on a negative transparency being transformed into a positive image, which reinforced the concept of the complementarity of opposites.

Chief Joseph of the Nez Perce

If someone in the centuries immediately following Gutenberg’s revolution asked a sampling of people, “If your house were on fire and you had only enough time to retrieve one personal item, what would it be?,” the vast majority of Europeans and Americans would have answered: “the family Bible.” Handed down from generation to generation, this book served as the family’s memory bank, often containing genealogies, wedding contracts, and deeds. If you asked the same question a few decades after the introduction of photography, the answer changed to “the family photo album.” A collection of images had become the most precious of possessions. The invention of photography began to shift culture from written word back to perceived image.*

Photography did for images what the printing press had done for written words: it made their reproduction easy, quick, and relatively inexpensive. An illustration of photography’s iterative power: the single-most-reproduced art image is Vincent van Gogh’s Sunflowers (1888). It is estimated that more than 17 million copies have adorned sundry hotel, parlor, and dormitory rooms. While alive, van Gogh received little monetary recompense or recognition. Due to photographic reproduction, his oeuvre is familiar to the average citizen from Maine to Borneo, and originals that he could not give away presently command stratospheric prices at art auctions.

Before the advent of photography, an elite audience appreciated painters. After photography, art became a common language that permeated every stratum of society and subtly changed the very nature of human intercourse. Mention the name Dali to a group from any segment of the population and someone will surely say “melting watches.” Key art images have indelibly imprinted the psyche of Western culture.

Photography liberated artists from the goal of replicating nature realistically. Many great art innovations, such as Impressionism, Pointillism, Cubism, and Fauvism, were the result of artists’ newfound freedom. Because photography faithfully reproduced visual reality, painting and sculpture could serve new functions: to respond to the world in a variety of ways, and address the scientific, cultural, and industrial transformations buffeting the times. And as I proposed in Art & Physics, art increasingly intuited the shape of the future. The visionary artist is the first person in a culture to see the world in a new way. Sometimes simultaneously, sometimes later, a visionary physicist has an insight so momentous that he discovers a new way to think about the world. The artist uses images and metaphors and the physicist uses numbers and equations. Yet when the artist’s antecedent images are superimposed on the later physicist’s formula, there is a striking fit. For example, Monet’s haystack series, the representation of an object in both three-dimensional space and changing time, preceded Minkowski’s formulation of the fourth dimension, the space-time continuum.

As the nineteenth century progressed, people increasingly obtained information about the world through images. The camera and the newly refined art of lithography tilted culture away from the printed word and toward the visual gestalt. Political cartoons began to appear regularly in the newspapers of the day and were often more to the point than the wordy editorials that accompanied them.

Daguerre was responsible for one half of the Iconic Revolution. Michael Faraday (1791-1867) initiated the other. The circumstances of his childhood made him an unlikely candidate to be a scientific innovator. Faraday’s working-class parents believed their child was retarded because he did not speak until the age of five. As a young man, he worked as a bookbinder’s apprentice, a blue-collar job that afforded him the opportunity to read the books he was binding, many of which concerned science. During these years, Faraday spent his evenings attending public lectures by Sir Humphry Davy, the director of England’s prestigious Royal Institute of Science. He interviewed to be Davy’s assistant, showing the meticulous notes and masterfully drawn technical illustrations he had made from Davy’s lectures. Davy saw in the raw twenty-two-year-old bookbinder a superior intelligence and, much to the chagrin of Ph.D. applicants from Oxford and Cambridge, he appointed “Mike,” as Faraday came to be known, to the coveted position.

In the 1820s, building on the work of an international group of scientists—Ampère in France, Volta and Galvani in Italy, Ohm in Germany, and Oersted in Denmark—Faraday intuited the existence of a force field that humans could neither see, hear, smell, touch, nor taste. A few years earlier, the poet Samuel Coleridge had written, “The universe is a cosmic web woven by God and held together by the crossed strands of attractive and repulsive forces.”5 Faraday invited people to imagine the spectral lines of force that make up an electromagnetic field.

After discovering features and principles of this “cosmic web” throughout the 1820s, Faraday invented the electric dynamo in 1831. Humankind could now generate this crackling power. Just as the Mechanical Age of gears and pistons was gathering its full head of steam, Faraday and the other pioneers in electromagnetic research logarithmically increased human possibilities. Ultimately, the electric dynamo would transform culture even more than the initial stages of the Industrial Revolution had. It would also bring about a subconscious adjustment of the existing female/male equation.

Electromagnetism is not confined to one bounded locus in space. It is not mechanistic as it has no moving parts. It is not reducible and can only be apprehended in its totality. It is a pattern rather than a point, insubstantial rather than material, more a verb than a noun, more a process than an object, more sinuous than angular. Since it is invisible, one has to imagine it in order to grasp it. Early researchers conjured it using feminine metaphors. The words used to describe it, such as “web,” “matrix,” “waves,” and “strands,” are all words etymologically and mythologically associated with the feminine. A “field,” which has proved to be electromagnetism’s most common synonym, is a noun borrowed from agriculture and nature. Electromagnetism had an organic interdependence, and it supplanted the Mechanical Age’s independent steps, sequence, and specialization with holism, simultaneity, and integration. The great principle at the heart of electromagnetism is that tension exists between polar opposites: positive and negative. An electromagnetic state only exists when both are present. The two poles, positive and negative, always strive to unite and it is only when they do that energy is generated.

Prior to the discovery of electromagnetism, poets and lovers usually compared their burning erotic love and desires to flames. Afterward, the spark of love, an electrifying kiss, a compulsive attraction, a magnetic personality, an aura of sensuality, a repulsive person, and the pull of polar opposites entered the lexicon of love to the delight of anyone who ever needed a fresh way to express an archaic feeling.

The alphabet nation, inculcated with the belief that reality consists of a linear sequence of spatial events, had to pretend that electricity marched single file down a wire. In truth, nothing moves! Aspects of electromagnetism that seemed linear changed states at light speed, too fast for humans to appreciate. Electromagnetic events appeared to be both interdependent and simultaneous. Electromagnetism was mysterious and wave-like. Immaterial and insensate, electromagnetism resembles a spirit. Everything about it reaffirmed the validity of right-hemispheric faculties; in Faraday’s time it subtly increased both men’s and women’s appreciation of the feminine.

Benjamin Lee Whorf, a twentieth-century linguist, put forth the idea that the language we learn profoundly shapes the universe we can imagine. If a culture’s words describe a reality that is causal, linear, and mechanistic, then its members will accord more respect to the masculine left side of the corpus callosum, a mind-set that manifests in patriarchy. If, however, the features of a major new discovery force a people to employ the imagery of the right brain, then feminine values and status will be buoyed as a result.

Democritus, in ancient Greece, divided reality into Atoms and the Void. Thereafter, Western philosophers, convinced that there was little they could say about nothingness, ignored the Void and concentrated on describing matter’s smallest indivisible components, the atoms. Science became the investigation of “things” and the forces that acted on them, and scientists envisioned reality, to a large extent, in terms of masculine metaphors. A world composed of objects obeying deterministic laws of causality reinforced the idea that the left brain was superior. Invisible electromagnetism, a no-thing, the other half of Democritus’s duality, upset the Newtonian cog-and-gear perception of celestial Clockwork.

The discovery of electricity and the field it generated changed the world. The inventions it begat—the electric motor (1831), turbines and telegraph (1844), batteries and telephone (1876), electric lamp (1879), electric car (1875), microphone (1876), phonograph (1877), electric elevator (1880), X rays (1886), radio (1887/1903), and the twentieth century’s television, cyclotrons, tape recorders, radio telescopes, VCRs, computers, fax machines, cellular phones, and cyberspace—pushed humankind’s conception of reality into areas unimaginable to Faraday and his contemporaries. This hyperinflating perimeter is due not only to the content of information these devices enable us to access but also the reprogramming of the brain of each member of the culture who uses these new forms of information transfer.

Much has been written recently about the differing modes of communication used by each sex. Our awareness of the process as well as of the content of information exchanges should also take into account how gender relations have been affected by changing communication technologies. The return of the image, and the way electromagnetism reshaped reality from angular masculine to curvaceous feminine, are important factors contributing both to the rise of women’s rights in the late nineteenth century and to the increased respect for nature in the second half of the twentieth century. These changes in perception came about, in part, because of two technologies that affirmed gatherer/nurturer values.

Numerous factors contributed to the rise of women’s assertiveness and independence and the resurgence of feminine values and holistic thinking. All photographs increase the status of the image-recognition skills of the right brains of both sexes. This factor in turn reinforces a cultural interest in art, myth, nature, nurture, and poetry.

. . .

Since the invention of writing five thousand years ago, millions of forceful, intelligent, well-educated women have lived and died. Yet they rarely organized into a concerted movement to challenge patriarchal systems. The first organized women’s movement that called for an end to patriarchy began in the latter part of the nineteenth century in England and America. It occurred in these two countries rather than on the Continent for the same reason that the witch craze was less severe in English-speaking lands than on the Continent. A single feature of the English language holds the secret: in all the major Continental tongues, most important nouns must be defined by gender articles. Because there are no masculine or feminine articles in the English language, English nouns are gender neutral. In the majority of European languages, most passive objects, such as urns, vessels, sheaths, and holsters (all waiting to be filled), along with doorways, gates, and thresholds (through and over which one passes), tend to be feminine; weapons that thrust, tools that pierce, smash, or crush, and implements that cut, saw, or divide, are almost always masculine.

While there is a certain rustic logic to these assignments when it comes to physical objects, the classification of more abstract nouns by gender suggests a misogyny deeply rooted in the languages. Continental children learn to distinguish between masculine and feminine nouns between the ages of two and four, and parenthetically they learn that there is sexual value associated with each noun. If nouns that are stationary, receptive, ill defined, or sinister require a female article, would not this information affect how a little girl will perceive her place in relation to boys? If nouns denoting passivity are feminine, would this not tend to encourage feminine passivity? If pouvoir (power) requires a le and maladie (sickness) a la, what are very young French children to make of these distinctions? In Italian, disability (invalidata) is feminine and honor (onore) is masculine; vacancy (vacante) is feminine in Spanish, while value (valor) is masculine; in German, mind (Geist) is masculine and foible (Eigenheit) is feminine. Although there are many counterexamples, in the main this division holds.

Toddler boys learn that active, positive, thrusting, and clearly defined nouns are associated with their sex just as they are becoming aware of the anatomical differences between themselves and little girls. Would not this information stimulate pride in being male and disdain for females whose gender nouns are, in general, ambiguous, passive, and negative? Many of the gender articles of Continental languages can be guessed simply by using sexual or patriarchal imagery.

English is also distinguished from Continental languages by its lack of variant second-person-singular pronouns. In English, one has no choice but to address another with the egalitarian you. In German, the intimate du is traditionally reserved for immediate family members, friends, or lovers, and the formal Sie is used for superiors and strangers. Similar distinctions are present in French, Italian, and Spanish. Pronouns are words that define the speaker’s relationship to the person addressed. Any language that forces the speaker to choose between two pronouns to address another, a decision that depends on the permission of the person addressed, promotes a vertical layering of culture, and this in turn reinforces dominant/submissive interpersonal relations. The hierarchy of Continental language pronouns enters the consciousness of two-year-olds before they are aware of this artifice. One’s own native language must be transcended in order to see the world as it is. Most European men and women, tripped up by the snares of their grammars at age three, are prisoners forever. Women in English-speaking cultures have been more able to consider themselves the equals of men. In parallel fashion, because of the gender-neutrality inherent in their language, English-speaking men have been more favorably disposed to women’s aspirations for equality than their Continental counterparts.* The English language’s gender neutrality and its lack of pronoun distinction foster democracy and I suggest this is one of the primary reasons why the suffragette movement began where it did. Photography and electromagnetism were key factors enabling it to begin when it did.

The first modern call for female equality was Mary Wollstonecraft’s A Vindication of the Rights of Woman, published in 1792. Wollstonecraft challenged John Locke’s libertarian stance that women should remain subordinate to their husbands, and disputed Rousseau’s Romantic notion that women think differently from, and are therefore inferior to, men. There was no “sex in souls,” she declared. But her male contemporaries in America, France, and England, while passionately espousing the cause of individual liberty for the male half of the population, paid little attention to her.

Then in the 1830s, Frances Wright, a Scotswoman, and Sarah Grimké, an American, took up Wollstonecraft’s refrain. Wright, an atheist, raged against the “insatiate priestcraft” who kept women in a state of “mental bondage.”6 Grimké remained within the Christian fold but claimed that an erroneous view of scripture had evolved through “perverted interpretations of the Holy Writ.”7

In the next generation, the articulate voices of Sojourner Truth, Elizabeth Cady Stanton, Harriet Taylor, and Susan B. Anthony joined the chorus. In 1848, at Seneca Falls, New York, women from all walks of life attended the first convention dedicated (albeit implicitly) to overthrowing patriarchy. After days of rousing speeches decrying centuries-old abuses, the attendees ratified a Declaration of Sentiments (drafted primarily by Stanton). Repeating almost verbatim the wording of the American Declaration of Independence, it called for a fundamental restructuring of society:

We hold these truths to be self-evident: that all men and women are created equal; that they are endowed by their Creator with certain inalienable rights: that among these are life, liberty, and the pursuit of happiness; that to secure these rights governments are instituted, deriving their powers from the consent of the governed.8

Using the phraseology of the Founding Fathers was ironic because women, not having the vote, were disenfranchised from having a say in their own governance.

In his 1869 book, The Subjection of Women, their prestigious and unexpected ally, philosopher John Stuart Mill, provided welcome support. He called upon men voluntarily to end patriarchal practices: “the legal subordination of one sex by the other … is wrong in itself,” he wrote; it “is now one of the chief hindrances to human improvement.”9 Using his now-familiar argument of the “greatest good for the greatest number,” he wrote:

But it is not only through the sentiment of personal dignity that the free direction and disposal of their own faculties is a source of unhappiness to human beings, and not least to women. There is nothing, after disease, indigence, and guilt, so fatal to the pleasurable enjoyment of life as the want of a worthy outlet for the active faculties.10

Large numbers of women suddenly refused to tolerate the injustices long perpetuated against their sex. They lent their money, courage, and intellect to the cause, and growing numbers of men endorsed their aspirations. Still, most nineteenth-century men disapproved. As an act of civil disobedience in 1872, Susan B. Anthony slipped into a voting booth and pulled the lever. Her arrest forced the issue of woman’s suffrage into the courts. She was confident that any judge would be compelled to interpret the recently enacted Fourteenth Amendment to the U.S. Constitution in her favor, “… Nor shall any State deprive any person of life, liberty, or property, without due process of law; nor deny to any person within its jurisdiction the equal protection of the laws.” All Anthony had to do was to convince the court that as a respiring human born in America, she met the qualifications to be considered a “person.” The judge rejected her argument. He had composed his opinion before he heard her or her lawyer’s brief.

Despite this and other setbacks, the success of the suffragette movement seemed inevitable—change was in the air. Electromagnetism was exerting an ever-widening influence on culture, pulling it like taffy into new and unusual shapes. The telegraph collapsed time and space, the telephone and phonograph transferred information with the immediacy of speech, and photographic images continued to proliferate. The hold on people’s psyche that the linear alphabet had exercised for millennia began to loosen. And the pendulum concerning attitudes about gender equality, so long stuck on the masculine side, began to gradually swing back to the other side.

Signs of this shift were evident throughout the alphabet world. In Germany, Johann Bachofen (1815-87) investigated preliterate societies’ practice of Mutterrecht, “Mother Right,” and questioned whether patriarchy was always present. Friedrich Engels (1820-95) recommended a new economic system that, in theory, granted women greater equality. In America, Ralph Waldo Emerson (1803-82) promoted the egalitarian Transcendentalist philosophy, while William James turned his readers’ attentions to the possibilities of experiential spirituality.

When Nietzsche proclaimed “God is dead!” the God he had in mind was the singular masculine patriarch who ruled from a heavenly throne. In 1854, a few years before Nietzsche’s declaration, the Catholic Church proclaimed that Mary had been conceived by Immaculate Conception, which meant that Mary had not been tainted by Original Sin. She ceased to be an ordinary mortal. The first tentative recognition in Western culture by male ecclesiastics of a Goddess in one thousand five hundred years occurred in conjunction with the putative death of God.

The nineteenth century was a period of intense religious feeling. Gnostic experiential revelations came to be valued over dogmas dispensed by figures of authority. Two of the most influential individuals were Mary Baker Eddy, who founded Christian Science, and Helena Blavatsky, the founder of Theosophy. Both disciplines attracted many male adherents. Adding to the century’s ferment, ashrams and Zen centers sprang up in Paris, London, and New York, as Eastern thought systems were embraced by Occidentals. Mystic traditions such as Sufism, Kabbalah, alchemy, astrology, and Rosicrucianism flourished. Each emphasized personal exercises that would help the individual achieve union with God, and each taught that complementary masculine and feminine principles together constituted the cosmos.

By the latter half of the nineteenth century, when images were everywhere ascendant, art became mainstream. The Impressionists awoke the general public to new ways of envisioning the world. Composers such as Ravel, Debussy, and Rimsky-Korsakoff strove to create pictorial music. Symbolist poets used words to conjure images, and some, like Stéphane Mallarmé, arranged their words on a page to create a visual pattern that resembled the animals or objects about which they wrote.

Science was also affected by these radical changes in the culture. During La Belle Époque, the last decade of the nineteenth century, the physicist Ludwig Boltzmann so despaired over his inability to convince his peers that reality had a significant component of randomness and disorder that he eventually committed suicide. He should have been more patient. Dangerous cracks were beginning to appear in Newton’s mechanistic edifice, and the sharp edges of Descartes’ dualistic black-and-white opposites were blurring.

Politically, democracy was in the air. Kings shifted uneasily on their thrones, natives became restless, servants surly, and women dissatisfied. Communists demanded the overthrow of the ruling class and a redistribution of the wealth, while capitalists insisted that governing authorities end all interference in commerce and adopt a laissez-faire stance. Everywhere, paternalism was in retreat.

One of the new machines invented in the late nineteenth century profoundly affected writing. Ever since the first Sumerian had pressed a pointed stick into wet clay five thousand years ago, one dominant hand, controlled exclusively by the dominant hemisphere, had dictated the mechanics of writing. It made no difference whether the implement used was a stylus, a chisel, a brush, a quill, a crayon, a pen, or a pencil; the hunter/killer left lobe of the brains of both men and women directed the muscles of the aggressive right hand to write. Then, in 1873, an American, Philo Remington, invented the typewriter.

Within a generation of his patent, the sound of tap-tapping could be heard in offices and homes across the Western world. Typewriters fundamentally changed the way people created the written word. Skilled typists engaged both their dominant and their non-dominant hands, connected to both sides of their brains, and this indirectly influenced the content of what they typed. Right-brained values and attributes began to leak onto the page. The typewriter had a negligible impact on gender relations at first because, at the end of the nineteenth century, secretarial pools consisted mostly of young women transcribing letters dictated by their male bosses. The full impact of the typewriter keyboard on human communication—and on gender equality—would not be felt until the following century, when another new invention would entice men to join the world of q-w-e-r-t-y (more about this later).

In 1887, Thomas Edison’s prolific laboratory developed a technology that combined electromagnetism and photography. An electric motion picture projector unspooled a series of negatives past an intense light, which shone through them. At flicker fusion, the projected individual frames merged into a motion picture. Film made it possible to tell a linear story, and people were hypnotized and fascinated, eagerly congregating in darkened rooms to watch the plots of novels unfold on screen. Movies, as the new medium was eventually called, began to compete with books for the public’s attention. Movie attendance first challenged and then easily surpassed church attendance. As the century turned and film technology improved, the public’s enthusiasm for movies began to erode the hegemony of the written word.

At the end of the nineteenth century, thoughtful inhabitants of Europe and America looked back in wonder at the amazing changes wrought by technology. A United States government official seriously proposed closing the Patent Office because he was convinced that the next century would bring forth nothing of interest—everything, he said, had already been invented. In Paris, a major newspaper convened a panel of distinguished art critics and asked them what they foresaw for the twentieth century. To a man, they claimed that the profusion of art styles in the late nineteenth century had exhausted the reservoirs of human creativity. The art of the next century, they declared, would simply provide a filigree here and an arabesque there. In military academies everywhere, generals pored over their contingency plans and fed their horses. Few people were aware that evolving methods of communication would transform their culture yet again and that every aspect of society, including the relative status of men and women, would be reconfigured. The century just over the horizon would soon supply ample evidence of the power inherent in communication technologies to change the world.

*She had four children by four different fathers.

†“Scientists” measured the skulls of women and men and, because of the disparity in their relative size, claimed with scientific certainty to have proved that men were more intelligent than women.

*The Greeks had discovered the principle of the steam engine two thousand years earlier, but subsequent ages lost the knowledge.

*Lithography, the reproduction of images by means of engraving, was perfected in the 1820s virtually at the very moment photography superseded it in importance.

*Along with English, Swedish is another of the few European languages that does not heavily encumber its nouns with gender politics. Contrast the generally peaceful character of Swedish men and the equality of their women with their cousins of identical ethnic stock: the patriarchal, militaristic Prussians, who live only a few hundred miles south but speak a language freighted with male dominance.