two

HUMOR ME

A DEFINING CHARACTERISTIC OF THE CEREBRAL MYSTIQUE is the artificial distinction it draws between brain and body. In this chapter we will see how abiotic depictions of the brain, and in particular the pervasive analogy of the brain to a computer, promote this distinction. The true brain is a grimy affair, swamped with fluids, chemicals, and glue-like cells called glia. The centerpiece of our biological mind is more like our other organs than a man-made device, but the ways we think and talk about it often misrepresent its true nature.

Standing astride a pilaster on the facade of Number 6 Burlington Gardens in London’s Mayfair district is a statue that conjures up the long history of such misconceptions. It is the likeness of Claudius Galenus of Pergamon, more commonly known as Galen, possibly the most influential figure in the history of medicine. His sneer of cold command conveys the haughtiness of a man who learned his trade at the gladiator’s arena, ministered to four Roman emperors, and reigned as an unchallenged oracle of medical truth for over a thousand years. In Galen’s stony hands rests a skull, symbolizing the biological principles he revealed in public dissections before audiences of Roman aristocrats and academics. Galen’s place in the pantheon of great intellectuals reflects his discoveries, as well as the endurance of his copious writings, over three million words by some counts, which over centuries were copied, amplified, and elaborated like scripture by Arabic and European scholars. Far from his birthplace in Asia Minor, the Galen of Burlington Gardens is flanked by statues of similarly iconic luminaries dear to Victorian scientific culture. He is of course a fabrication—no likeness of the real Galen survives from his own time.

Galen’s investigations contributed significantly to the triumph of brain-centered views of cognition. Although Hippocrates of Kos, writing four hundred years before Galen’s time, had already proclaimed the brain as the seat of reason, sensation, and emotion, Roman contemporaries of Galen maintained the Aristotelian cardiocentric view that the heart and vascular system controlled the body, including the brain. To Galen, the heart and vasculature occupied the crucial but subsidiary role of supplying “vital spirits” that energize the body. Galen’s vote for the brain followed largely from observation of the relationships of gladiators’ wounds to deficits they displayed in action, an incisive data source blissfully unavailable to later scientists.

Galen also performed careful dissections, raising this approach to an art. His dissections were performed exclusively on animals; human bodies were considered sacred (at least outside the arena) and not to be defiled by experimentation, even after death. Galen traced the peripheral nerves of his subjects to their origins at the base of the brain, providing evidence that the brain was uniquely capable of controlling the body. One famous experiment involved severing one of these fibers, the laryngeal nerve, in the head of a live pig, an operation that rendered the pig mute. Galen probably sent his slaves to procure carcasses and body parts from the local markets. Butchered heads were widely available at the time, no doubt destined for the tables of the well-to-do. The doctor carved them up to reveal notable features of intracranial anatomy. He took particular interest in structures he thought to be interfaces between the vasculature and the brain. Galen viewed these structures as critical for the conversion of vital spirits into “animal spirits,” the fluid essence to which he attributed consciousness and mental activity. Candidate interfaces included the linings of the ventricles, the fluid-filled cavities common to vertebrate brains, as well as a curious weblike structure of interconnected blood vessels so singular in Galen’s anatomical investigations as to merit the appellation rete mirabile, or “wondrous net.”

The rete figured prominently in Galen’s writings about the brain. It was in effect a biological locus of ensoulment, and reverence for the importance of this structure was passed down with Galen’s writings as received truth for hundreds of years. Like the statue at Burlington Gardens, however, the rete mirabile was a mirage. Renaissance anatomists discovered that the formation occurs in other animals but not in people. In his monumental De Humani Corporis Fabrica (1543), the pioneering anatomist Andreas Vesalius wrote confidently that the blood vessels at the base of the human brain “quite fail to produce such a plexus reticularis as that which Galen recounts.” Galen’s extrapolations from animal dissections were indeed erroneous, his conclusions skewed by the cultural taboos of his time. Yet as a symbol of the brain’s mysterious qualities, the rete mirabile continued to appeal long after it was discredited scientifically. A hundred years after Vesalius, Galen’s obsession inspired the English poet John Dryden to write, “Or is it fortune’s work, that in your head / The curious net, that is for fancies spread / Lets through its meshes every meaner thought / While rich ideas there are only caught?”

The story of Galen’s rete shows us that salient but arbitrary or even mistaken features of the brain can be singled out for special attention because they mesh with the culture of the time. In Galen’s day, pride of place went to the rete mirabile and its part in a theory of the human mind that was governed by spirits. As we shall see in this chapter, the importance now ascribed to neuroelectricity and its role in computational views of brain function occupies a similar position in our era. I will argue that our cerebral mystique is upheld by contemporary images of the brain as a machine. I will also present a more organic, alternative picture of brain function that tends to demystify the brain, and that also bears curious resemblance to the ancient theory of spirits.

Like other wonders of nature, the brain and mind have always been popular subjects of poetic conceit. Long before Dryden, Plato wrote that the mind was a chariot steered by reason but pulled by the passions. Anchored by considerably deeper biological insight in 1940, the groundbreaking neurophysiologist Charles Sherrington described the brain as “an enchanted loom where millions of flashing shuttles weave a dissolving pattern, always a meaningful pattern though never an abiding one; a shifting harmony of subpatterns.” The loom metaphor has found its way into the titles of several books and even has its own Wikipedia page. Sherrington’s fibrous motif evokes Galen’s net; his musical reference also resonates with the imagery of other writers, who analogized the brain to a piano or a phonograph, both of which mimic the brain’s capacity to emit a large repertoire of complex but chronologically organized output sequences. In his book The Engines of the Human Body (1920), the anthropologist Arthur Keith laid out the more prosaic comparison to an automated telephone switchboard, conceptualizing the brain’s ability to connect diverse sensory inputs and behavioral outputs.

The most popular analogy for the mind today is the computer, and for good reason—like our minds, modern computers are capable of inscrutable feats of intellect. Critics have objected to the notion that human consciousness and understanding can be reduced to the soulless digit crunching performed by CPUs. To the extent that the analogy between minds and computers ignores or trivializes consciousness, it demeans what we consider most special about ourselves. The computational view of the mind took off at a time when human minds so clearly outranked computers that the insult carried a bit more bite than it does today. The situation is almost reversed now: we associate computers with a combination of arithmetic acumen, memory capacity, and accuracy that our own minds certainly cannot equal.

Most scientists and philosophers accept the analogy between minds and computers and actively or passively incorporate it into their professional creeds. Given the close association between mind and brain, a computational view of the brain itself is likewise widespread. The portrayal of brain as computer permeates our culture. One of the most memorable episodes from the original Star Trek television series begins when an alien steals Mr. Spock’s brain and installs it at the core of a giant computer, where it controls life support systems throughout an entire planet. The robots of science fiction generally have brainlike computers or computerlike brains in their heads, ranging from the positronic brains of Isaac Asimov’s I, Robot to the dysfunctional brain that occupies the oversized cranium of Marvin the paranoid android, in the 2005 film version of The Hitchhiker’s Guide to the Galaxy. In contrast, many of the real-life robots sponsored by the US Defense Advanced Research Projects Agency (DARPA) wear their processors in their chests or even distributed throughout their bodies, where they are somewhat less brainlike but better protected against mishaps. Popular science magazines are full of the brain-computer analogy, comparing and contrasting brains with actual computers in terms of speed and efficiency.

But what is the “meat” in a computational view of the brain—does the comparison really help us understand anything? Fingers are like chopsticks. Fists are like hammers. Eyes are like cameras. Mouths and ears are like telephones. These analogies are not worth dwelling on because they are too obvious. The tool in each pairing is an object designed to do a thing that we humans have evolved to do but wish we could do better, or at least slightly differently—that’s why we made the tools. At some point we also decided we wanted to multiply numbers bigger or faster than we could manipulate easily in our heads, so we built tools to accomplish this. Similar tools turned out to be useful for various other things we also do with our brains: remembering things, solving equations, recognizing voices, driving cars, guiding missiles. Brains are like computers because computers were designed to do things our brains do, only better.

Brains are enough like computers that physical analogies between brains and computers have been proposed since the earliest days of the digital age, when John von Neumann, the mathematician and computing innovator, wrote The Computer and the Brain in 1957. Von Neumann argued that the mathematical operations and design principles implemented in digital machines might be similar to phenomena in the brain. Some of the similarities that prompted von Neumann’s comparison are well-known. Both computers and brains are noted for their dependence on electricity. Neuroelectricity can be detected remotely using electrodes placed outside brain cells and even outside the head, making electrical activity a particularly salient hallmark of brain function. If you’ve ever had an electroencephalography (EEG) test, you’ve seen this phenomenon in action when tiny wires were pasted to your scalp (or perhaps attached via a cap) in order to permit electrical recording of your brain activity. Such procedures help doctors detect signs of epilepsy, migraines, and other abnormalities.

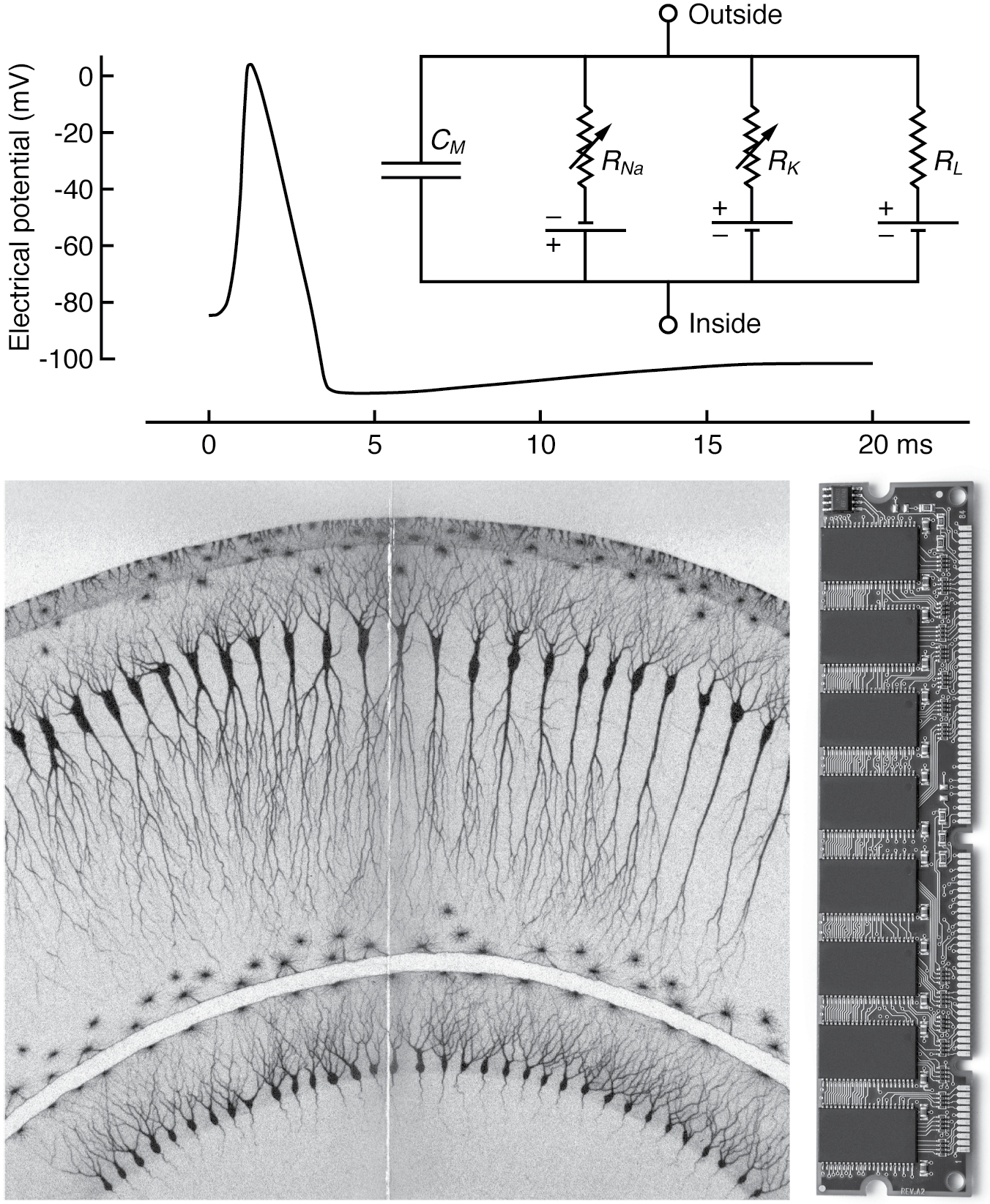

FIGURE 2. Electronic and computational analogies to brain function: (top) transmembrane voltage versus time during an action potential, with inset showing a circuit model that predicts neural membrane potentials, labeled according to the conventions of electronics, after work by A. L. Hodgkin and A. F. Huxley; (bottom left) neural structure of the hippocampus, as illustrated by the famous neuroanatomist Camillo Golgi; (bottom right) memory circuit board from a modern computer. (Licensed from Adobe Stock)

The brain’s electrical signals arise from tiny voltage differences across the membranes that surround neurons, like the differences between terminals on a battery (see Figure 2). Unlike batteries, transmembrane voltages (known as membrane potentials) fluctuate dynamically in time, resulting from the flow of electrically charged molecules called ions across the cell membrane. If the voltage across a neural membrane fluctuates by more than about twenty millivolts from the cell’s resting level, a much larger voltage spike called an action potential can occur. During an action potential, a neuron’s voltage changes by about a hundred millivolts and returns to the baseline in the space of a few milliseconds, as ions zip back and forth through little channels in the membrane. When a neuron displays such flashes of electrical energy, we say it is “firing.” Action potentials spread spatially along neural fibers at speeds faster than a sprinting cheetah and are essential to how distant parts of the brain can interact rapidly enough to mediate perception and cognition.

Most neurons fire action potentials at frequencies ranging from a few per second up to about one hundred per second. In these respects, neuronal action potentials resemble the electrical impulses that make our modems and routers flash and allow our computers and other digital devices to calculate and communicate with each other. Measurements of such electrophysiological activity are the mainstay of experimental neuroscience, and electrical signaling is often thought of as the language brain cells use to talk to each other—the lingua franca of the brain.

Brains contain circuits somewhat analogous to the integrated circuits in computer chips. Neural circuits are made up of ensembles of neurons that connect to one another via synapses. Many neuroscientists regard synapses as the most fundamental units in neural circuitry because they can modulate neural signals as they pass from cell to cell. In this respect, synapses are like transistors, the elementary building blocks of computer circuitry that get turned on and off and regulate the flow of electric currents in digital processing. The human brain contains many billions of neurons and trillions of synapses, well over the number of transistors in a typical personal computer today. Synapses generally conduct signals in one predominant direction, from a presynaptic neuron to a postsynaptic neuron, which lie on opposite sides of each synapse. Chemicals called neurotransmitters, released by presynaptic cells, are the most common vehicle for this communication. Different types of synapses, often distinguished by which neurotransmitter they use, allow presynaptic cells to increase, decrease, or more subtly affect the rate of action potential firing in the postsynaptic cell. This is somewhat analogous to how your foot pressing on the pedals of a car produces different results depending on which pedal you push and what gear the car is in.

The structure of neural tissue itself sometimes resembles electronic circuitry. In many regions of the brain, neurons and their synaptic contacts are organized into stereotyped patterns of local connectivity, reminiscent of the regular arrangements of electronic components that make up microchips or circuit boards. For example, the cerebral cortex, the convoluted rind that makes up the bulk of human brains, is structured in layers running parallel to the brain surface, resembling the rows of chips on a computer’s memory card (see Figure 2).

Neural circuits also do things that electronic circuits in digital processors are designed to do. At the simplest level, individual neurons “compute” addition and subtraction by combining inputs from presynaptic cells. Roughly speaking, a postsynaptic neuron’s output represents the sum of all inputs that increase its firing rate minus the sum of all inputs that decrease its activity. This elementary neural arithmetic acts as a building block for many brain functions. In the mammalian visual system, for instance, signals from presynaptic neurons that respond to light in different parts of the retina add up when these cells converge onto individual postsynaptic cells. Responses to progressively more sophisticated light patterns can be built by combining such computations over multiple stages, each involving another level of cells that gets input from the previous level.
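
The arithmetic described above can be sketched in a few lines of Python. This is only a toy illustration: the function name `postsynaptic_rate`, the example input rates, and the floor at zero are assumptions made for the sketch, not a model drawn from any particular experiment.

```python
def postsynaptic_rate(excitatory, inhibitory):
    """Toy firing rate: sum of excitatory inputs minus sum of inhibitory inputs.

    The result is floored at zero because a firing rate cannot be negative
    (an assumption of this sketch).
    """
    drive = sum(excitatory) - sum(inhibitory)
    return max(0.0, drive)


# Hypothetical input rates, in spikes per second.
rate = postsynaptic_rate(excitatory=[20.0, 15.0, 5.0], inhibitory=[10.0])
print(rate)
```

Stacking several such units, each fed by the outputs of the level before it, gives a crude picture of how responses to more elaborate patterns could be assembled stage by stage.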

The complexity of neural calculations eventually extends to concepts from college-level mathematics. Neural circuits perform calculus—a mainstay of freshman-year education—whenever they help keep track of how something in the world is changing or accumulating in time. When you fix your gaze on something while moving your body or head, you are using a form of this neural calculus to keep track of your accumulated movements; you use the data to adjust your eyes just enough in the opposite direction so that the direction of your gaze doesn’t change as you move. Scientists have found a group of thirty to sixty neurons in the brain of a goldfish that seems to accomplish this computation. A different form of neural calculus is required for detecting moving objects in the visual system of a fly. To make this possible, small groups of neurons in the fly’s retina compare input from neighboring points in space. These little neural circuits signal the presence of motion if visual input at one point arrives before input to the second point, sort of like the way you could infer motion of a subway train by considering its arrival times at adjacent stations, even if you could not directly see the train moving.
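
Both forms of neural calculus can be caricatured in code. The sketch below assumes head velocity is sampled at fixed time steps and that a motion detector simply compares arrival times at two neighboring points; the function names and numbers are hypothetical and are not taken from the goldfish or fly studies.

```python
def integrate_velocity(head_velocities, dt):
    """Accumulate head velocity (deg/s) into a compensating eye position (deg).

    Each sample nudges the eyes in the direction opposite to the head, so the
    running total tracks how far the gaze must be corrected.
    """
    eye_position = 0.0
    for velocity in head_velocities:
        eye_position -= velocity * dt
    return eye_position


def detects_motion(arrival_a, arrival_b):
    """Signal motion from point A toward point B if input reaches A first."""
    return arrival_a < arrival_b


# Head turns at 10 deg/s for half a second, sampled every 0.1 s.
print(integrate_velocity([10.0] * 5, dt=0.1))
# Input reaches point A at 12 ms and point B at 15 ms.
print(detects_motion(arrival_a=0.012, arrival_b=0.015))
```

The first function is the subway passenger counting stops; the second is the observer who notes which station the train reached first.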

Neuroscientists speak of circuits that perform functions much more complicated than calculus as well—processes that include object recognition, decision making, and consciousness itself. Even if entire neural networks that perform these operations have not yet been mapped, neuronal hallmarks of complicated computations have been discovered by comparing the action potential firing rates of neurons to performance in behavioral tasks. One example comes from a classic set of experiments on the neural basis of learning performed by Wolfram Schultz at Cambridge University, using electrode recordings from monkey brains. Schultz’s group studied a task in which the monkeys learned to associate a specific visual stimulus with a subsequent juice reward—a form of the same experiment Pavlov conducted with his dogs. In the monkeys, firing of dopamine-containing neurons in a brain region called the ventral tegmental area initially accompanied delivery of the juice. As animals repeatedly experienced the visual stimulus followed by the juice, however, the dopamine neurons eventually began to fire when the stimulus appeared before the juice. This showed that these neurons had come to “predict” the juice reward that followed each stimulus. Remarkably, the behavior of dopamine neurons in this task also closely paralleled part of a computational algorithm in the field of machine learning. The similarity between the abstract machine-learning method and the actual biological signals suggests that the monkeys’ brains might be using neural circuits to implement an algorithm similar to the computer’s.
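
The passage above does not name the algorithm. The sketch below uses temporal-difference learning, the method most often cited alongside these recordings, and it should be read as an illustration rather than a reconstruction of Schultz’s analysis. The trial length, learning rate, and function name `run_trials` are assumptions chosen for clarity.

```python
def run_trials(n_trials, n_steps=5, alpha=0.2):
    """Simulate repeated cue-then-reward trials with a simple TD(0) learner.

    Returns a list of (cue_error, reward_error) pairs, one per trial. The
    prediction error plays the role attributed to dopamine firing.
    """
    values = [0.0] * n_steps  # predicted future reward at each step after the cue
    history = []
    for _ in range(n_trials):
        # The cue arrives unpredictably, so the value before it stays at zero
        # and the error at cue onset equals the value of the first cue state.
        cue_error = values[0] - 0.0
        reward_error = 0.0
        for t in range(n_steps):
            reward = 1.0 if t == n_steps - 1 else 0.0  # juice on the last step
            next_value = values[t + 1] if t + 1 < n_steps else 0.0
            delta = reward + next_value - values[t]  # prediction error
            values[t] += alpha * delta
            if t == n_steps - 1:
                reward_error = delta
        history.append((cue_error, reward_error))
    return history


history = run_trials(500)
print("first trial:", history[0])
print("last trial:", history[-1])
```

On the first trial the error appears only when the juice arrives. After many trials it has migrated to the cue, and the fully predicted juice produces almost none, which is the pattern described for the dopamine neurons.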

In a further parallel between electrical engineering and the activity attributed to the brain, neuronal firing rates are often said to encode information, in reference to a theory Claude Shannon developed in the 1940s to describe the reliability of communication in electronic systems like radios or telephones. Shannon’s information theory is used routinely in engineering and computer science to measure the reliability with which inputs are related to outputs. We implicitly brush up against information theory when we compress megapixel camera images into kilobyte jpeg images without losing detail, or when we transfer files over the ethernet cables in our homes or offices. To make these tasks work well, engineers had to think about how effectively the compressed data in our digital photographs can be retrieved or how accurately and quickly the signal transmitted over cables can be understood or “decoded” at the other end of each upload or download. Such problems are closely related to the questions of how data are maintained in biological memory and how the timing of action potentials communicates sensory information along nerve fibers to the brain. The mathematical formalisms of information theory and of signal processing more generally can be tremendously useful for quantitative interpretation of neural functions.
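
One quantity from Shannon’s theory, mutual information, measures in bits how much an output tells us about an input. The sketch below computes it from a table of joint probabilities. The function name and the two example tables are assumptions for illustration and do not represent recorded neural data.

```python
import math


def mutual_information(joint):
    """Mutual information in bits from a joint probability table joint[i][j].

    Rows index inputs (for example, stimuli) and columns index outputs
    (for example, binned firing rates). Entries must sum to one.
    """
    p_in = [sum(row) for row in joint]
    p_out = [sum(col) for col in zip(*joint)]
    info = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0:  # zero-probability cells contribute nothing
                info += p * math.log2(p / (p_in[i] * p_out[j]))
    return info


# A perfectly reliable channel: each input always yields its own output.
print(mutual_information([[0.5, 0.0], [0.0, 0.5]]))
# A useless channel: the output is independent of the input.
print(mutual_information([[0.25, 0.25], [0.25, 0.25]]))
```

A neuron whose firing rate reliably distinguishes two stimuli would sit near the first case, and one that fires the same way regardless of the stimulus would sit near the second.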

When we think of the brain as an electronic device, it seems entirely natural to analyze brain data using engineering approaches such as information theory or machine-learning models. In some cases, the computational analogy of the brain drives researchers even further—to imagine that parts of the brain correspond to gross features of a computer. In a 2010 book, the neuroscientists Randy Gallistel and Adam King argued that the brain must possess a read-write memory storage device similar to that of a prototypical computer, the Turing machine. The Turing machine processes data by writing and reading zeros and ones from a piece of tape; the reading and writing operations proceed according to a set of rules in the machine (a “program”), and the tape constitutes the machine’s memory, analogous to the disks or solid-state memory chips used in modern PCs. If efficient computers universally depend on such read-write memory mechanisms, Gallistel and King reason, then the brain should too. The authors thus challenge the contemporary dogma that the basis of biological memory lies in changing synaptic connections between neurons, which are difficult to relate to Turing-style memory; they insist that this synaptic mechanism is too slow and inflexible, despite the formidable experimental evidence in its favor. Although Gallistel and King’s hypothesis is not widely accepted, it nevertheless offers a remarkable example of how the analogy between brains and computers can take precedence over theories derived from experimental observation. In looking from brain to computer and back from computer to brain, it can be difficult to tell which is the inspiration for which.
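
For readers unfamiliar with the Turing machine, a minimal simulator shows what reading and writing a tape under a fixed set of rules amounts to. Everything in the sketch is an assumption made for illustration: the rule format, the state names, and the bit-inverting program are not drawn from Gallistel and King’s book.

```python
def run_turing_machine(tape, rules, state="scan", blank="_"):
    """Run a one-tape Turing machine until it reaches the 'halt' state.

    rules maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is "R" or "L". The tape grows to the right as needed.
    """
    cells = list(tape)
    head = 0
    while state != "halt":
        if head < 0:
            raise IndexError("head moved off the left end of the tape")
        if head == len(cells):
            cells.append(blank)  # extend the tape with a blank cell
        symbol = cells[head]  # read
        write, move, state = rules[(state, symbol)]
        cells[head] = write  # write
        head += 1 if move == "R" else -1
    return "".join(cells).rstrip(blank)


# A hypothetical program: flip every bit, then halt at the first blank.
INVERT = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", "_"): ("_", "R", "halt"),
}

print(run_turing_machine("1011", INVERT))
```

The tape here is the machine’s only memory, and the rule table is its program. That separation between a fixed program and a rewritable store is the feature Gallistel and King argue the brain must share.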

The association of brains with computers sometimes seems to take on a spiritual flavor. John von Neumann’s own early efforts to synthesize computer science and neurobiology apparently coincided with his rediscovery of Catholicism, shortly before his death of pancreatic cancer in 1957. There is little evidence that religion was at all important to von Neumann throughout much of his life, although he had undergone baptism in 1930 on the eve of his first marriage. It is a cliché that people find God on their deathbed—a kind of last-minute insurance for the soul—and at first it might seem dissonant to think at the same time about recasting the material basis of the soul itself into the language of machines. From another angle, these views are easy to reconcile, however, because equating the organic mind to an inorganic mechanism might offer hope of a secular immortality—if not for ourselves, then for our species. If we are our brains, and our brains are isomorphic to devices we could build, then we can also imagine them being repaired, remade, cloned, propagated, sent through space, or stored for an eternity in solid-state dormancy before being awakened when the time is right. In identifying our brains with computers, we also tacitly deny the messy, mortal confusion of our true physical selves and replace it with an ideal not born of flesh.

A substantial cohort of eminent physical scientists in their later lives joined von Neumann by also speculating about abstract or mechanical origins of cognition. With the wave equation almost twenty years behind him and his renowned cat nine years out of the bag, Erwin Schrödinger postulated a universal consciousness embodied in the statistical motion of atoms and molecules. His theory is far removed from von Neumann’s computer analogy but likewise presents mental processes as fundamentally abiotic. Another case in point is that of Roger Penrose, the eminent cosmologist whose contributions to the understanding of black holes are overshadowed in some circles by his commentaries on consciousness. Penrose explicitly rejects the suggestion that a computer could emulate human minds but instead seeks a basis for free will in the esoteric principles of quantum physics. Like the computer analogy, Penrose’s quantum view of the mind seems rooted more in physics than physiology and in equations more than experiments. The biophysicist Francis Crick turned to neuroscience after codiscovering the structure of DNA; his influence lingers powerfully in his injunction that researchers should seek correlates of consciousness in the electrical activity of large neuronal ensembles. But even Crick’s ruthlessly materialist and biologically anchored view of the brain focuses almost entirely on computational and electrophysiological aspects of brain function that most differentiate the brain from the rest of the body.

Although each of these perspectives differs dramatically from the others, they share a tendency to minimize the organic aspects of brains and minds and emphasize inorganic qualities that relate most distantly to other biological entities. In effect, they set up a brain-body distinction that parallels the age-old metaphysical distinction between mind and body, traditionally referred to as mind-body dualism. Through this distinction, the brain takes the place of the mind, and thus becomes analogous to an immaterial entity humankind has struggled for millennia to explain.

The tendency to draw a distinction between the brain and the rest of the body is a phenomenon I will call scientific dualism, because it parallels mind-body dualism but draws strength from strands of scientific thought and coexists with scientific worldviews. Scientific dualism is one of the most ubiquitous realizations of the cerebral mystique, and we shall see it in many forms throughout this book. It is the powerful cultural vestige of a philosophy most commonly associated with René Descartes, a seventeenth-century scholar and adventurer who argued that mind and body are made of separate substances that interact to actuate living beings. In Descartes’s depiction, the mind or soul (he made no distinction) interacts with the body through part of the brain, though Descartes was never able to explain the mechanics of how this interaction could take place. Related forms of dualism in which the soul departs the body upon death, submits to divine judgment, and sometimes finds a new body are almost universally present among the religions of the world.

Dualism is an operating principle most of us use at least implicitly in daily life. Even outside our places of worship, and even if we are not religious, we speak of the mind and spirit in ways that distinguish them from the body. We say that so-and-so has lost his mind or that what’s-her-name lacks spirit. The ego and id of Freudian psychoanalysis, now fixtures of folk psychology, lead dualism-sanctioned lives of their own: “My ego tells me to do this; my id tells me to do that.” Our actions reflect dualism as well. For example, a white-collar workaholic who fails to connect the importance of a sound mind to the need for a sound body may be in for an early heart attack, and could well suffer diminished productivity even before the sad corporeal end comes. In other instances, we might fear judgment about mental transgressions that could never possibly be witnessed by other people; Jesus referred to this as sinning “in one’s heart” (Matthew 5:28), but atheists probably know the feeling just as well. Our anxiety here is a manifestation of dualism because we suppose at least subconsciously that the mind can be accessed separately from the body, perhaps even after we die.

In traditional dualist perspectives like Descartes’s, the mind or soul is like the invisible operator of a remote-controlled body. In scientific dualism, on the other hand, the operator is not an incorporeal entity but rather a material brain, which lives within the body but otherwise fulfills the same mysterious role. Unlike the dualisms of religion and philosophy, scientific dualism is rarely a consciously held opinion or an openly professed point of view. Few scientifically informed people really believe that the brain and body are materially separable, but they might nevertheless treat the brain and body separately in thought, rhetoric, and even practice. Through scientific dualism, some of the cherished attitudes about the disembodied soul can thus persist without any conviction that the soul or mind is truly incorporeal. In this respect, scientific dualism mirrors the instinctive morality of many atheists or the tacit sexism and racism that pervade even the most enlightened corners of our postmodern society. In each of these examples, old-fashioned habits of thought outlive overt adherence to the religious or social doctrines that originally spawned them.

As with other prejudices, scientific dualism can sometimes be expressed explicitly. Take for instance the Xbox video game Body and Brain Connection, which “integrate[s] cerebral and physical challenges for the optimal gaming experience.” Despite the talk of integration, the language used here treats brain and body as discrete units with functions that complement each other but do not overlap. Less explicit instances of scientific dualism arise when scientists like von Neumann, Schrödinger, Penrose, and Crick conjure up abiotic images of brains that lack the wet and squishy qualities that characterize other organs and tissues. These authors do not draw bright-line boundaries between brain and body, but their writing still implies that the brain is special in its makeup or modes of action. In each instance, scientific dualism provides a mechanism for keeping our minds sacred—distinguishing the functions and processes of the brain from those of mundane bodily processes like digestion or cancer, and perhaps even guarding our brains from being eaten. We shall see, however, that more organic views of brain physiology were once common and are being increasingly resurrected by recent science.

On a February morning in 1685, King Charles II of England emerged from his private chamber to undergo his daily toilet. His face looked ghastly, and he spoke to his acolytes with slurred speech, his mind apparently wandering. As he was being shaved, the king’s complexion suddenly turned purple, and his eyes rolled back into his head. He tried to stand and instead slouched into the arms of one of his attendants. He was laid out on a bed, and a doctor stepped forward with a penknife to lance a vein and draw blood. Hot irons were applied to the monarch’s head, and he was force-fed “a fearsome decoction extracted from human skulls.” The king regained consciousness and spoke again, but appeared to be in terrible pain. A team of fourteen physicians waited on him and continued to draw blood—some twenty-four ounces in total—but it became apparent that they could not save him. His highness passed away four days later.

Although rumors about poison circulated at the time, the more widespread belief was that Charles II had died following an episode of apoplexy, what we now call a stroke, in which the blood vessels of the brain become blocked or broken. Strokes affect tens of millions of people each year worldwide and are still a leading cause of neurological injury and death. We have now developed treatments that reduce the risks of strokes and help protect the brain when they occur. To the seventeenth-century mind, however, brain ailments such as apoplexy, as well as diseases affecting all aspects of the body, were brought on by imbalances among bodily fluids called humors. An excess of blood, one of the four humors along with black bile, yellow bile, and phlegm, was thought to cause the apoplexy. Bloodletting was supposed to relieve the excess and help the patient accordingly.

Many of us remember being taught to laugh at humorism in school, and the brain in particular is difficult to imagine as a soup of bodily fluids. Current neuronocentric views about brain function in cognition are most concerned with the roles of neurons and neuroelectricity, features that lend themselves best to computational analogies and that seem inherently dry and machinelike. But although computers are known to react poorly to liquids (try spilling a cup of coffee on your laptop), the brain is actually rich in fluids that participate intimately in neurobiology. A fifth of the brain’s volume consists of fluid-filled cavities and interstices. About half of this is occupied by blood, and the other half by cerebrospinal fluid (CSF), a clear substance produced by the linings of the brain’s cavernous ventricles in a process strikingly resembling Galen’s proposed generation of animal from vital spirits. CSF fills the ventricles and exchanges rapidly with extracellular inlets that directly contact all the brain’s cells, bathing them with a mix of ions, nutrients, and molecules related to brain signaling. The cells of the brain themselves, about 80 percent by volume, are also filled with intracellular fluids, which hold the DNA and other biomolecules and metabolites that make cells work.

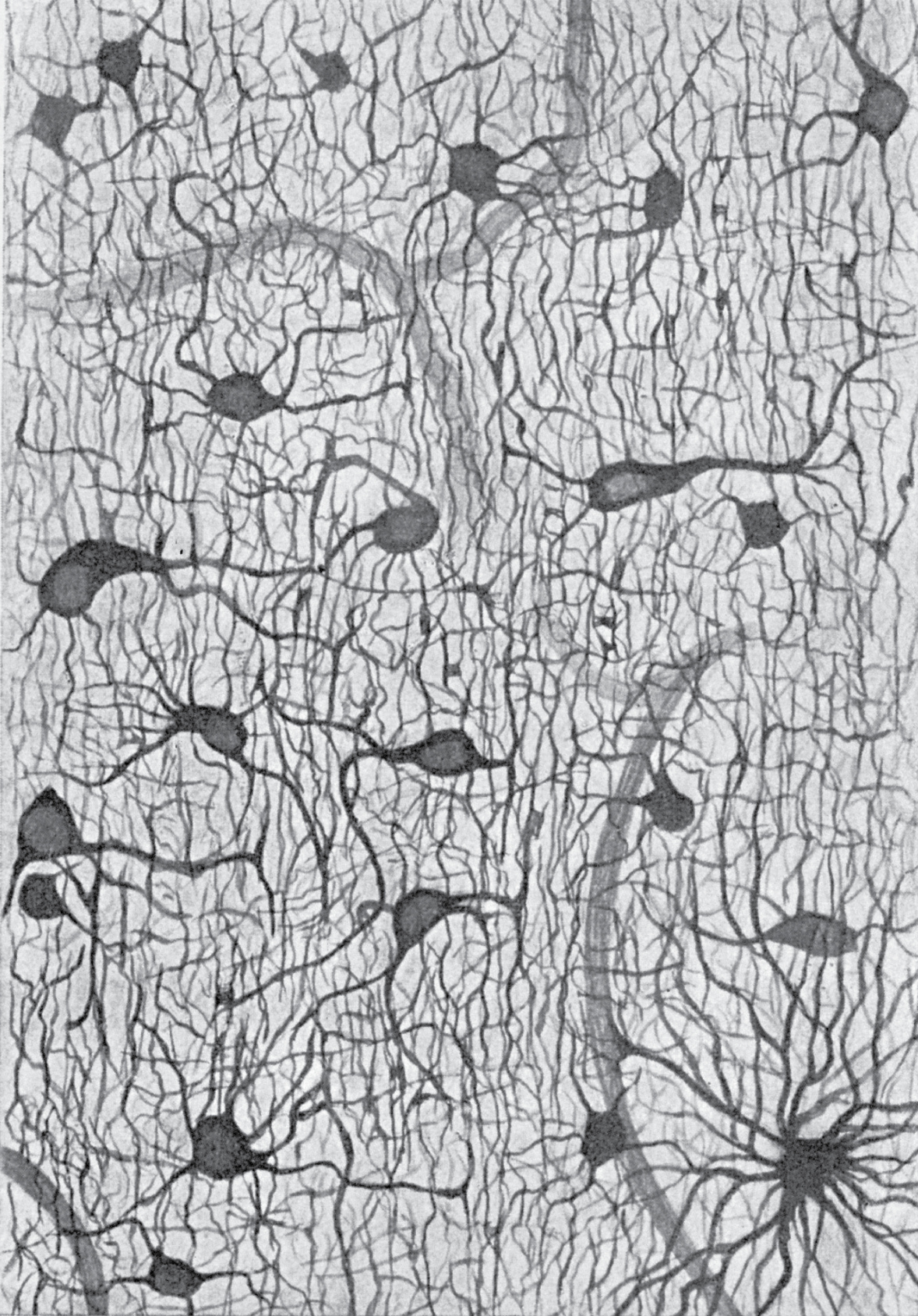

Perhaps more surprisingly, at most half of brain cells are actually the charismatic, electrically active neurons that steal most neuroscientists’ attention. The less noticed brain cells are the glia, smaller nonspiking cells that do not form long-range connections reminiscent of electrical wiring (see Figure 3). These cells were historically thought to play only a literally supporting role in the brain (the term glia derives from the Greek word for glue, another fluid), but in the cerebral cortex they outnumber neurons by up to ten to one. A conception of the brain that doesn’t include a role for glia is like a brick wall built without mortar.

Oddly enough, it is precisely the nonneuronal components of brain anatomy that are often directly implicated in many of the best-known brain diseases. One of the most prevalent and pernicious brain cancers, glioblastoma multiforme, arises from uncontrolled proliferation of glial cells; the cancer then results in brain fluid pressure buildup that in most cases is the ultimate cause of death. This terrible disease is the one that killed Senator Ted Kennedy of Massachusetts in 2009. Disruptions to fluid exchange between blood vessels and surrounding brain tissue are closely associated with stroke, multiple sclerosis, concussion, and Alzheimer’s disease. Many of these conditions specifically affect blood flow or the integrity of the blood-brain barrier, a network of tightly connected cells that surrounds blood vessels and regulates transport of chemicals between the blood and the brain.

Is the thinking brain really distinct from the brain that underlies neurological disease? Research now suggests that the brain’s glue and fluids, previously thought to be bystanders, are in fact deeply engaged in many aspects of function. One of the striking revelations of recent years has been the discovery that glia undergo signaling processes similar to those of neurons. By analyzing microscopic-scale videos of neurons and glia, researchers have shown that glia respond to some of the same stimuli that neurons do. Several neurotransmitters evoke calcium ion fluctuations in glia, a phenomenon also observed in neurons, where such dynamics are closely related to electrical activity. Calcium fluctuations in a type of glial cell called an astrocyte are correlated with the electrical signals of nearby neurons. My MIT colleague Mriganka Sur and coworkers showed that astrocytes in the visual cortex of ferrets are even more responsive than neurons to some visual features.

Blood flow patterns in the brain are also closely correlated with neuronal activity. When regions of the brain become activated, local blood vessels dilate and blood flow increases in a coordinated phenomenon called functional hyperemia. Discovery of functional hyperemia is attributed to the nineteenth-century Italian physiologist Angelo Mosso. Using an oversize stethoscope-like device called a plethysmograph, he monitored pulsation of blood volume in the head noninvasively through the fontanelles of infants and in adults who had suffered injuries that breached their skulls. Mosso’s best-known subject was a farmer named Bertino, whose cerebral pulsation accelerated when the local church bells rang, when his name was called, or when his mind was engaged by various tasks. These experiments were forerunners of modern brain-scanning techniques, which use positron emission tomography (PET) and magnetic resonance imaging (MRI) in place of the plethysmograph to map blood flow changes in three dimensions.

That glia and blood vessels respond to many of the same stimuli that activate neurons highlights the multifarious nature of brain tissue—neurons have housemates—but this fact does not prove that nonneuronal elements have more than a supporting role. A neuronocentric, computational view of brain function might suppose that glia and vessels are analogous to the power supply and cooling fans that keep the electronics running; they face demands that rise and fall depending on the CPU’s workload, but they do not compute anything themselves. If this description were accurate, then stimulating glia or vasculature independent of neurons would have negligible effects on the activity of other neurons—but recent results contradict this premise.

Some evidence suggests, for instance, that blood flow changes can influence neural activity in addition to responding to it. Certain drugs that act on enzymes in blood vessels appear to alter neural electrical activity indirectly, implying that the vessels can transmit chemical signals to neurons. There are also hints that the dilation of blood vessels during hyperemia could stimulate neurons via pressure sensors on the surfaces of some neurons. If true, this would be analogous to how our sense of touch works through pressure on the fingertips. A functional role for glia is also increasingly supported by recent neuroscience research. Selective activation of glia using a technique called optogenetic stimulation can alter both spontaneous and stimulus-induced firing rates of nearby neurons. Glial activity can even influence behavior. In one example, Ko Matsui and his group at the National Institute for Physiological Sciences in Japan showed in mice that stimulating glia in a brain region called the cerebellum affected eye movements previously thought to be coordinated only by neurons in this structure.

A particularly extraordinary example of the influence of nonneuronal brain components comes from work of Maiken Nedergaard at the University of Rochester. Her laboratory transplanted human glial progenitors—embryonic cells that mature into glia—into the forebrains of developing mice. When the mice grew into adults, their brains were rich in human glial cells. The animals were then analyzed in a test of their ability to associate a short tone with a subsequent mild electric shock. During this kind of procedure, animals exposed to the tone-shock pairing begin to react to the tone as they would normally react to a shock alone (usually by freezing); the “smarter” an animal is, the more quickly it learns that the tone predicts an impending shock. In this case, the mice that carried human glial cells performed three times better in the task than reference mice that only received glial transplants from other mice. The hybrid animals also learned to run mazes more than twice as fast as the reference mice and made about 30 percent fewer errors in a memory recall task. It is simplistic to suppose that the mice performed better because of something the new glia did all on their own, but the experiments nevertheless show that these uncharismatic cells can influence behavior in nontrivial ways. With this comes the astonishing suggestion that the secret to human cognitive success may lie at least partly in our once neglected glia.

In the narrow fluid-filled alleys that snake between cells of the brain thrives another form of brain activity that defies typical notions of computation. It is in these interstices that much of the chemical life of the brain takes place. To some, the idea of chemistry in the brain might evoke the psychedelic experiences induced by LSD and cannabis, but to neuroscientists, the term brain chemistry refers primarily to the study of neurotransmitters and related molecules called neuromodulators. Most communication between mammalian brain cells relies heavily on neurotransmitters secreted by neurons at synapses. Neurotransmitters are released when a presynaptic neuron spikes, and they then act rapidly on postsynaptic neurons, via specialized molecular “catcher’s mitts” called neurotransmitter receptors, to induce changes in the postsynaptic cell’s probability of spiking. In this neuronocentric view of the brain, neurotransmitters are primarily a means for propagating electrical signals from one neuron to the next. To the extent that neuroelectricity is indeed the lingua franca of the brain, this view seems justified.

But now imagine an alternative chemocentric view in which the neurotransmitters are the main players. In this view, electrical signaling in neurons enables the spread of chemical signals, rather than the other way around. From a chemocentric perspective, even the electrical signals themselves might be recast as chemical processes, because of the ions they rely on. This picture is upside-down by the standards of contemporary neuroscience, but it has something going for it. Perhaps most obviously, neurotransmitters and their associated receptors play functionally distinct roles far more diverse than neuroelectricity per se; by some counts there are over a hundred kinds of transmitter in the mammalian brain, each acting on one or more receptor types. An action potential means different things depending on which neurotransmitters it causes to be released and where those transmitters act. In parts of the central nervous system such as the retina, neurotransmitters can be released without spiking at all.

Neurotransmitter effects are also shaped by factors that are independent of neurons. Glia exert substantial influence because of their role in scavenging some neurotransmitters after they have been released. When the rate of neurotransmitter uptake by glia changes, the amount of neurotransmitter present changes with it, in much the same way that the level of water in a bathtub is affected by opening or closing the drain. Glia also release chemical signaling molecules of their own, sometimes called gliotransmitters. Like neurotransmitters, gliotransmitters can induce calcium signals in both neurons and other glia. The functional effects of gliotransmitters in behavior and cognition are significant topics of current research.
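
The bathtub analogy can be made concrete with a toy calculation. The sketch below is only an illustration, not a model taken from any particular study: it assumes that transmitter enters the extracellular space at a constant rate (the tap) and that glia clear it at a rate proportional to the amount present (the drain). The function name, the parameters, and the numbers are all hypothetical and carry no physiological units.

```python
def settle_level(release_rate, uptake_rate, dt=0.001, t_end=50.0):
    """Toy bathtub model (all values hypothetical, arbitrary units).

    Integrates dC/dt = release_rate - uptake_rate * C with forward Euler,
    starting from an empty extracellular space (C = 0), and returns the
    level reached after t_end time units.
    """
    level = 0.0
    steps = int(t_end / dt)
    for _ in range(steps):
        # Tap adds transmitter; drain removes it in proportion to the level.
        level += dt * (release_rate - uptake_rate * level)
    return level


# Same tap, two different drains: brisk glial uptake versus sluggish uptake.
fast_drain = settle_level(release_rate=1.0, uptake_rate=2.0)
slow_drain = settle_level(release_rate=1.0, uptake_rate=1.0)

print(f"brisk uptake settles near    {fast_drain:.3f}")
print(f"sluggish uptake settles near {slow_drain:.3f}")
```

Under these assumptions the level settles at the release rate divided by the uptake rate, so halving glial uptake doubles the amount of transmitter left in play even though the neurons releasing it have not changed at all.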

The action of neurochemicals is also heavily influenced by a cell-independent process called diffusion, the passive spreading of molecules that results from their random movement through liquids. Diffusion is what causes the spontaneous dispersion of oil droplets over the surface of a puddle, or the aimless dancing of microscopic particles in milk, known as Brownian motion. Diffusion also influences the postsynaptic activity of neurotransmitters in important ways that are not yet fully appreciated but that represent a stark contrast to the orderly communication of information across circuit-like contacts between neurons. Some neurotransmitters and most neuromodulators are known in particular for their ability to diffuse out of synapses and act remotely on cells that do not form direct connections to the cells that released them. One such diffusing molecule is dopamine, the neurotransmitter we saw previously in the context of reward-related learning in monkeys. The significance of dopamine diffusion is highlighted by the action of stimulant drugs like cocaine, amphetamine, and Ritalin. These drugs block brain molecules whose job it is to remove dopamine after it has been released at synapses. In doing so, the drugs increase dopamine’s tendency to spread through the brain and influence multiple cells.

Neurotransmitter diffusion also underlies the phenomenon of synaptic cross-talk, another unconventional mode of brain signaling whereby molecules released at one synapse trespass into other synapses and affect their function. From the invaded synapse’s point of view, this is like hearing a third person’s voice murmuring on the phone while trying to have a one-on-one conversation with a friend. A number of studies have documented surprising levels of cross-talk among synapses that use the neurotransmitter glutamate, which is released by 90 percent of neurons in the brain and is mainly known for fast action within individual synapses. Such results are remarkable because they challenge the notion of the synapse as the fundamental unit of brain processing. Instead, both synaptic cross-talk and the more general effects of neurochemical diffusion in the brain represent aspects of what is sometimes called volume transmission, because they act through volumes of tissue rather than specific connections between pairs of neurons. Volume transmission arises from overlapping ripples of fluctuating neurotransmitter concentrations that seem more like raindrops falling on a pond than like the orderly flow of electricity through wires.

So from the neurotransmitter’s point of view, neurons are specialized cells that help shape neurochemical concentrations in space and time, along with glia and processes of passive diffusion. Neurotransmitters in turn influence cells of the brain to generate more neurotransmitters, both locally and remotely. Whenever a sensory stimulus is perceived or a decision is made, a flood of swirling neurotransmitters emerges, mixing with the background of chemical ingredients whose pattern constantly wavers across the brain’s extracellular space. Seen through this murky chemical stew, the electrical properties of neurons seem almost irrelevant—any sufficiently rapid mechanism for interconverting chemical signals would do. Indeed, in the nervous systems of some small animals, such as the nematode worm Caenorhabditis elegans, electrical signals are far weaker, and action potentials have not been documented.

The brain seen in this way is more like the ancients’ vision, with not four humors but rather a hundred vital substances vying for influence in the brain’s extracellular halls of power, not to mention the thousands of substances at work inside each cell as well. This chemical brain is a mundane but biologically grounded counterpoint to the shining technological brain of the computer age, or to the ethereal brains actuated by quantum physics and statistical mechanics. We can imagine the chemical brain instead as a descendant of the primordial soup of protobiological reagents that first gave rise to life in the Archean environment of the young planet Earth. We can also imagine the chemical brain as a close cousin of the chemical liver, the chemical kidneys, and the chemical pancreas: the offal we eat, all organs whose function revolves around the generation and processing of fluids. In this way, the brain loses some of its mystique.

I am one of those unhappy souls who discovered Douglas Hofstadter’s cult classic Gödel, Escher, Bach (GEB) late in life. When my college roommate tried to amuse me with the bedazzling puzzles peppered throughout this book, I kept my nose boorishly buried in my physics and chemistry homework. I finally picked up GEB years past the wilting of my salad days, when I had neither the patience nor the youthful agility to give the puzzles the attention they deserved. Although I love Bach, enjoy Escher, and remain intrigued by Gödel, my mind had closed too far to revel in the book’s rather mystical musings about consciousness. In one chapter, Hofstadter explains the structure of the nervous system as he saw it in the 1970s, a factual summary surprisingly consonant with the science of today and indicative in some ways of how slowly the field of neuroscience has been progressing. The description is also thoroughly redolent of scientific dualism. Wholeheartedly embracing the computer analogy, Hofstadter hypothesizes that “every aspect of thinking can be viewed as a high-level description of a system which, on a low level, is governed by simple, even formal, rules.”

Another passage in GEB, however, strongly resonates with the point I have tried to make in this chapter; it deals with the relationship between figure and ground in drawings and other art forms. Hofstadter discusses cases where the background can be a subject in its own right, a phenomenon seen most famously in images of a vase versus two faces in profile (see Figure 4). In modern neuroscience, neurons and neuroelectricity have constituted the brain’s figure, while many other components of brain function make up the ground. This gestalt has contributed prominently to the computational interpretation of the brain and to the persistence of the brain-body dualism. But just as visual perception can seamlessly flit from vase to faces and back again, so our view of brain function could as easily shift to emphasizing nonneuronal and nonelectrical features that make the brain appear more akin to other organs. Chemicals and electricity, active signaling and passive diffusion, neurons and glia are all parts of the brain’s mechanisms. Raising some of these components above the others is like choosing which gears in a clock are the most important. Rotating each gear will turn the others, and removing any gear will break the clock. For this reason, attempts to reduce cognitive processing to the brain’s electrical signaling, or to its wiring—the neural fibers over which electrical signals propagate—are at best simplistic and at worst mistaken.

Our embrace of the notion that brains function according to exceptional or idealized principles, largely alien to the rest of biology, is a consequence of the cerebral mystique. Our brains seem most foreign and mysterious to us when we imagine them as powerful computers, wondrous prostheses embedded in our skulls, rather than as the moist mixtures of flesh and fluids that throb there and also throughout the rest of our bodies. What better way to keep our souls abstract than to think of the organ of the soul as abstract, dry, and lacking in humor? We will see that this is but one of the ways in which idealization of the brain conflicts with a more naturalistic view in which the brain and mind are enmeshed in their biological and environmental context. In the next chapter, we will consider in particular how widespread emphasis on the extreme complexity of the brain contributes powerfully to the cerebral mystique and to the dualist distinction between brain and body.