Although C# contains many exciting features, one of its most powerful is its built-in support for multithreaded programming. A multithreaded program contains two or more parts that can run concurrently. Each part of such a program is called a thread, and each thread defines a separate path of execution. Thus, multithreading is a specialized form of multitasking.

Multithreaded programming relies on a combination of features defined by the C# language and by classes in the .NET Framework. Because support for multithreading is built into C#, many of the problems associated with multithreading in other languages are minimized or eliminated. As you will see, C#’s support of multithreading is both clean and easy to understand.

With the release of version 4.0 of the .NET Framework, two important additions were made that relate to multithreaded applications. The first is the Task Parallel Library (TPL), and the other is Parallel LINQ (PLINQ). Both provide support for parallel programming, and both can take advantage of multiple-processor (multicore) computers. In addition, the TPL streamlines the creation and management of multithreaded applications. Because of this, TPL-based multithreading is now the recommended approach for multithreading in most cases. However, a working knowledge of the original multithreading subsystem is still important for several reasons. First, there is much preexisting (legacy) code that uses the original approach. If you will be working on or maintaining this code, you need to know how the original threading system operated. Second, TPL-based code may still use elements of the original threading system, especially its synchronization features. Third, although the TPL is based on an abstraction called the task, it still implicitly relies on threads and the thread-based features described here. Therefore, to fully understand and utilize the TPL, a solid understanding of the material in this chapter is needed.

Finally, it is important to state that multithreading is a very large topic, and it is far beyond the scope of this book to cover it in detail. This and the following chapter present an overview of the topic and show several fundamental techniques. Together, they serve as an introduction to this important topic and provide a foundation upon which you can build.

There are two distinct types of multitasking: process-based and thread-based. It is important to understand the difference between the two. A process is, in essence, a program that is executing. Thus, process-based multitasking is the feature that allows your computer to run two or more programs concurrently. For example, process-based multitasking allows you to run a word processor at the same time you are using a spreadsheet or browsing the Internet. In process-based multitasking, a program is the smallest unit of code that can be dispatched by the scheduler.

A thread is a dispatchable unit of executable code. The name comes from the concept of a “thread of execution.” In a thread-based multitasking environment, all processes have at least one thread, but they can have more. This means that a single program can perform two or more tasks at once. For instance, a text editor can be formatting text at the same time that it is printing, as long as these two actions are being performed by two separate threads.

The differences between process-based and thread-based multitasking can be summarized like this: Process-based multitasking handles the concurrent execution of programs. Thread-based multitasking deals with the concurrent execution of pieces of the same program.

The principal advantage of multithreading is that it enables you to write very efficient programs because it lets you utilize the idle time that is present in most programs. As you probably know, most I/O devices, whether they be network ports, disk drives, or the keyboard, are much slower than the CPU. Thus, a program will often spend a majority of its execution time waiting to send or receive information to or from a device. By using multithreading, your program can execute another task during this idle time. For example, while one part of your program is sending a file over the Internet, another part can be reading keyboard input, and still another can be buffering the next block of data to send.

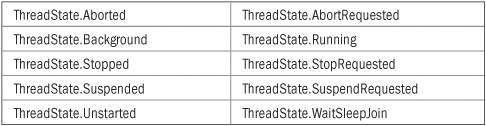

A thread can be in one of several states. In general terms, it can be running. It can be ready to run as soon as it gets CPU time. A running thread can be suspended, which is a temporary halt to its execution. It can later be resumed. A thread can be blocked when waiting for a resource. A thread can be terminated, in which case its execution ends and cannot be resumed.
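Some of these states can be observed through the ThreadState property of the Thread class, which is described later in this chapter. The following is only a minimal sketch, assuming a trivial entry point; the names StateDemo and Worker are illustrative. The state reported for a running thread can change between the time it is read and the time it is displayed, so ThreadState is best suited to diagnostics, not to coordinating threads.

```csharp
// A minimal sketch: display a thread's state before it starts and after it ends.
using System;
using System.Threading;

class StateDemo {
  // Hypothetical entry point used only for this illustration.
  static void Worker() {
    Thread.Sleep(100); // simulate a small amount of work
  }

  static void Main() {
    Thread t = new Thread(Worker);

    // The thread has been created, but Start() has not been called.
    Console.WriteLine("Before Start(): " + t.ThreadState);

    t.Start();
    t.Join(); // wait for the thread to end

    // The entry point has returned, so the thread has terminated.
    Console.WriteLine("After Join(): " + t.ThreadState);
  }
}
```

The first line displayed reports Unstarted and the second reports Stopped.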

The .NET Framework defines two types of threads: foreground and background. By default, when you create a thread, it is a foreground thread, but you can change it to a background thread. The only difference between foreground and background threads is that a background thread will be automatically terminated when all foreground threads in its process have stopped.

Along with thread-based multitasking comes the need for a special type of feature called synchronization, which allows the execution of threads to be coordinated in certain well-defined ways. C# has a complete subsystem devoted to synchronization, and its key features are also described here.

All processes have at least one thread of execution, which is usually called the main thread because it is the one that is executed when your program begins. Thus, the main thread is the thread that all of the preceding example programs in the book have been using. From the main thread, you can create other threads.

C# and the .NET Framework support both process-based and thread-based multitasking. Thus, using C#, you can create and manage both processes and threads. However, little programming effort is required to start a new process because each process is largely separate from the next. Rather, it is C#’s support for multithreading that is important. Because support for multithreading is built in, C# makes it easier to construct high-performance, multithreaded programs than do some other languages.

The classes that support multithreaded programming are defined in the System.Threading namespace. Thus, you will usually include this statement at the start of any multithreaded program:

using System.Threading;

The multithreading system is built upon the Thread class, which encapsulates a thread of execution. The Thread class is sealed, which means that it cannot be inherited. Thread defines several methods and properties that help manage threads. Throughout this chapter, several of its most commonly used members will be examined.

There are a number of ways to create and start a thread. This section describes the basic mechanism. Various options are described later in this chapter.

To create a thread, instantiate an object of type Thread, which is a class defined in System.Threading. The simplest Thread constructor is shown here:

public Thread(ThreadStart start)

Here, start specifies the method that will be called to begin execution of the thread. In other words, it specifies the thread’s entry point. ThreadStart is a delegate defined by the .NET Framework as shown here:

public delegate void ThreadStart( )

Thus, your entry point method must have a void return type and take no arguments.

Once created, the new thread will not start running until you call its Start( ) method, which is defined by Thread. The Start( ) method has two forms. The one used here is

public void Start( )

Once started, the thread will run until the entry point method returns. Thus, when the thread’s entry point method returns, the thread automatically stops. If you try to call Start( ) on a thread that has already been started, a ThreadStateException will be thrown.
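The restriction on calling Start( ) twice can be seen in a short sketch. It is an illustration only; the names StartTwiceDemo and Worker are assumptions, and it uses Join( ), described later in this chapter, so that the worker's output appears before the second call to Start( ).

```csharp
// A minimal sketch: calling Start() a second time on the same thread.
using System;
using System.Threading;

class StartTwiceDemo {
  // Hypothetical entry point used only for this illustration.
  static void Worker() {
    Console.WriteLine("Worker running.");
  }

  static void Main() {
    Thread t = new Thread(Worker);

    t.Start();
    t.Join(); // wait for the worker to finish

    try {
      t.Start(); // error: this thread has already been started
    } catch (ThreadStateException) {
      Console.WriteLine("ThreadStateException caught.");
    }
  }
}
```

To run the same method again, create a new Thread object; a Thread instance cannot be restarted once it has been started.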

Here is an example that creates a new thread and starts it running:

// Create a thread of execution.

using System;

using System.Threading;

class MyThread {

public int Count;

string thrdName;

public MyThread(string name) {

Count = 0;

thrdName = name;

}

// Entry point of thread.

public void Run() {

Console.WriteLine(thrdName + " starting.");

do {

Thread.Sleep(500);

Console.WriteLine("In " + thrdName +

", Count is " + Count);

Count++;

} while(Count < 10);

Console.WriteLine(thrdName + " terminating.");

}

}

class MultiThread {

static void Main() {

Console.WriteLine("Main thread starting.");

// First, construct a MyThread object.

MyThread mt = new MyThread("Child #1");

// Next, construct a thread from that object.

Thread newThrd = new Thread(mt.Run);

// Finally, start execution of the thread.

newThrd.Start();

do {

Console.Write(".");

Thread.Sleep(100);

} while (mt.Count != 10);

Console.WriteLine("Main thread ending.");

}

}

Let’s look closely at this program. MyThread defines a class that will be used to create a second thread of execution. Inside its Run( ) method, a loop is established that counts from 0 to 9. Notice the call to Sleep( ), which is a static method defined by Thread. The Sleep( ) method causes the thread from which it is called to suspend execution for the specified period of milliseconds. The form used by the program is shown here:

public static void Sleep(int millisecondsTimeout)

The number of milliseconds to suspend is specified in millisecondsTimeout. If millisecondsTimeout is zero, the calling thread is suspended only to allow a waiting thread to execute.

Inside Main( ), a new Thread object is created by the following sequence of statements:

// First, construct a MyThread object.

MyThread mt = new MyThread("Child #1");

// Next, construct a thread from that object.

Thread newThrd = new Thread(mt.Run);

// Finally, start execution of the thread.

newThrd.Start();

As the comments suggest, first an object of MyThread is created. This object is then used to construct a Thread object by passing the mt.Run( ) method as the entry point. Finally, execution of the new thread is started by calling Start( ). This causes mt.Run( ) to begin executing in its own thread. After calling Start( ), execution of the main thread returns to Main( ), and it enters Main( )’s do loop. Both threads continue running, sharing the CPU, until their loops finish. The output produced by this program is as follows. (The precise output that you see may vary slightly because of differences in your execution environment, operating system, and task load.)

Main thread starting.

Child #1 starting.

..... In Child #1, Count is 0

..... In Child #1, Count is 1

..... In Child #1, Count is 2

..... In Child #1, Count is 3

..... In Child #1, Count is 4

..... In Child #1, Count is 5

..... In Child #1, Count is 6

..... In Child #1, Count is 7

..... In Child #1, Count is 8

..... In Child #1, Count is 9

Child #1 terminating.

Main thread ending.

Often in a multithreaded program, you will want the main thread to be the last thread to finish running. Technically, a program continues to run until all of its foreground threads have finished. Thus, having the main thread finish last is not a requirement. It is, however, good practice to follow because it clearly defines your program’s endpoint. The preceding program tries to ensure that the main thread will finish last by checking the value of Count within Main( )’s do loop, stopping when Count equals 10, and through the use of calls to Sleep( ). However, this is an imperfect approach. Later in this chapter, you will see better ways for one thread to wait until another finishes.

While the preceding program is perfectly valid, some easy improvements will make it more efficient. First, it is possible to have a thread begin execution as soon as it is created. In the case of MyThread, this is done by instantiating a Thread object inside MyThread’s constructor. Second, there is no need for MyThread to store the name of the thread since Thread defines a property called Name that can be used for this purpose. Name is defined like this:

public string Name { get; set; }

Since Name is a read-write property, you can use it to set the name of a thread or to retrieve the thread’s name.

Here is a version of the preceding program that makes these two improvements:

// An alternate way to start a thread.

using System;

using System.Threading;

class MyThread {

public int Count;

public Thread Thrd;

public MyThread(string name) {

Count = 0;

Thrd = new Thread(this.Run);

Thrd.Name = name; // set the name of the thread

Thrd.Start(); // start the thread

}

// Entry point of thread.

void Run() {

Console.WriteLine(Thrd.Name + " starting.");

do {

Thread.Sleep(500);

Console.WriteLine("In " + Thrd.Name +

", Count is " + Count);

Count++;

} while(Count < 10);

Console.WriteLine(Thrd.Name + " terminating.");

}

}

class MultiThreadImproved {

static void Main() {

Console.WriteLine("Main thread starting.");

// First, construct a MyThread object.

MyThread mt = new MyThread("Child #1");

do {

Console.Write(".");

Thread.Sleep(100);

} while (mt.Count != 10);

Console.WriteLine("Main thread ending.");

}

}

This version produces the same output as before. Notice that the thread object is stored in Thrd inside MyThread.
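The Name property can also be read from inside the running thread by way of Thread.CurrentThread, which refers to the thread that is executing the code. The following sketch assumes a trivial entry point; NameDemo and Worker are illustrative names.

```csharp
// A minimal sketch: set a thread's name and read it from inside the thread.
using System;
using System.Threading;

class NameDemo {
  // Hypothetical entry point used only for this illustration.
  static void Worker() {
    // Thread.CurrentThread is the thread executing this method.
    Console.WriteLine("Running in: " + Thread.CurrentThread.Name);
  }

  static void Main() {
    Thread t = new Thread(Worker);

    // A newly created thread has no name until one is assigned.
    Console.WriteLine("Name is null before it is set: " + (t.Name == null));

    t.Name = "Worker #1";
    t.Start();
    t.Join(); // wait for the thread to end
  }
}
```

Because the entry point obtains the name through Thread.CurrentThread, it does not need a reference to the Thread object that started it.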

The preceding examples have created only one child thread. However, your program can spawn as many threads as it needs. For example, the following program creates three child threads:

// Create multiple threads of execution.

using System;

using System.Threading;

class MyThread {

public int Count;

public Thread Thrd;

public MyThread(string name) {

Count = 0;

Thrd = new Thread(this.Run);

Thrd.Name = name;

Thrd.Start();

}

// Entry point of thread.

void Run() {

Console.WriteLine(Thrd.Name + " starting.");

do {

Thread.Sleep(500);

Console.WriteLine("In " + Thrd.Name +

", Count is " + Count);

Count++;

} while(Count < 10);

Console.WriteLine(Thrd.Name + " terminating.");

}

}

class MoreThreads {

static void Main() {

Console.WriteLine("Main thread starting.");

// Construct three threads.

MyThread mt1 = new MyThread("Child #1");

MyThread mt2 = new MyThread("Child #2");

MyThread mt3 = new MyThread("Child #3");

do {

Console.Write(".");

Thread.Sleep(100);

} while (mt1.Count < 10 ||

mt2.Count < 10 ||

mt3.Count < 10);

Console.WriteLine("Main thread ending.");

}

}

Sample output from this program is shown next:

Main thread starting.

.Child #1 starting.

Child #2 starting.

Child #3 starting.

....In Child #1, Count is 0

In Child #2, Count is 0

In Child #3, Count is 0

..... In Child #1, Count is 1

In Child #2, Count is 1

In Child #3, Count is 1

..... In Child #1, Count is 2

In Child #2, Count is 2

In Child #3, Count is 2

..... In Child #1, Count is 3

In Child #2, Count is 3

In Child #3, Count is 3

..... In Child #1, Count is 4

In Child #2, Count is 4

In Child #3, Count is 4

..... In Child #1, Count is 5

In Child #2, Count is 5

In Child #3, Count is 5

..... In Child #1, Count is 6

In Child #2, Count is 6

In Child #3, Count is 6

..... In Child #1, Count is 7

In Child #2, Count is 7

In Child #3, Count is 7

..... In Child #1, Count is 8

In Child #2, Count is 8

In Child #3, Count is 8

..... In Child #1, Count is 9

Child #1 terminating.

In Child #2, Count is 9

Child #2 terminating.

In Child #3, Count is 9

Child #3 terminating.

Main thread ending.

As you can see, once started, all three child threads share the CPU. Again, because of differences among system configurations, operating systems, and other environmental factors, when you run the program, the output you see may differ slightly from that shown here.

Often it is useful to know when a thread has ended. In the preceding examples, this was accomplished by watching the Count variable, which is hardly a satisfactory or generalizable solution. Fortunately, Thread provides two means by which you can determine whether a thread has ended. First, you can interrogate the read-only IsAlive property for the thread. It is defined like this:

public bool IsAlive { get; }

IsAlive returns true if the thread upon which it is called is still running. It returns false otherwise. To try IsAlive, substitute this version of MoreThreads for the one shown in the preceding program:

// Use IsAlive to wait for threads to end.

class MoreThreads {

static void Main() {

Console.WriteLine("Main thread starting.");

// Construct three threads.

MyThread mt1 = new MyThread("Child #1");

MyThread mt2 = new MyThread("Child #2");

MyThread mt3 = new MyThread("Child #3");

do {

Console.Write(".");

Thread.Sleep(100);

} while (mt1.Thrd.IsAlive ||

mt2.Thrd.IsAlive ||

mt3.Thrd.IsAlive);

Console.WriteLine("Main thread ending.");

}

}

This version produces the same output as before. The only difference is that it uses IsAlive to wait for the child threads to terminate.

Another way to wait for a thread to finish is to call Join( ). Its simplest form is shown here:

public void Join( )

Join( ) waits until the thread on which it is called terminates. Its name comes from the concept of the calling thread waiting until the specified thread joins it. A ThreadStateException will be thrown if the thread has not been started. Additional forms of Join( ) allow you to specify a maximum amount of time that you want to wait for the specified thread to terminate.
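One of the timed forms is Join(int millisecondsTimeout), which returns true if the thread ended within the specified time and false if the wait timed out. Here is a minimal sketch of its use; the names JoinTimeoutDemo and Worker, and the specific delays, are illustrative assumptions.

```csharp
// A minimal sketch: wait for a thread with a time limit.
using System;
using System.Threading;

class JoinTimeoutDemo {
  // Hypothetical entry point that takes about one second to finish.
  static void Worker() {
    Thread.Sleep(1000);
  }

  static void Main() {
    Thread t = new Thread(Worker);
    t.Start();

    // Wait at most 100 milliseconds. Join(int) returns false on a timeout.
    bool finished = t.Join(100);
    Console.WriteLine("Finished within 100 ms: " + finished);

    // Now wait without a time limit.
    t.Join();
    Console.WriteLine("IsAlive after Join(): " + t.IsAlive);
  }
}
```

Because the worker sleeps far longer than the timeout, the first call to Join( ) should time out and report False. After the second call returns, IsAlive is false.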

Here is a program that uses Join( ) to ensure that the main thread is the last to stop:

// Use Join().

using System;

using System.Threading;

class MyThread {

public int Count;

public Thread Thrd;

public MyThread(string name) {

Count = 0;

Thrd = new Thread(this.Run);

Thrd.Name = name;

Thrd.Start();

}

// Entry point of thread.

void Run() {

Console.WriteLine(Thrd.Name + " starting.");

do {

Thread.Sleep(500);

Console.WriteLine("In " + Thrd.Name +

", Count is " + Count);

Count++;

} while(Count < 10);

Console.WriteLine(Thrd.Name + " terminating.");

}

}

// Use Join() to wait for threads to end.

class JoinThreads {

static void Main() {

Console.WriteLine("Main thread starting.");

// Construct three threads.

MyThread mt1 = new MyThread("Child #1");

MyThread mt2 = new MyThread("Child #2");

MyThread mt3 = new MyThread("Child #3");

mt1.Thrd.Join();

Console.WriteLine("Child #1 joined.");

mt2.Thrd.Join();

Console.WriteLine("Child #2 joined.");

mt3.Thrd.Join();

Console.WriteLine("Child #3 joined.");

Console.WriteLine("Main thread ending.");

}

}

Sample output from this program is shown here. Remember that when you try the program, your output may vary slightly.

Main thread starting.

Child #1 starting.

Child #2 starting.

Child #3 starting.

In Child #1, Count is 0

In Child #2, Count is 0

In Child #3, Count is 0

In Child #1, Count is 1

In Child #2, Count is 1

In Child #3, Count is 1

In Child #1, Count is 2

In Child #2, Count is 2

In Child #3, Count is 2

In Child #1, Count is 3

In Child #2, Count is 3

In Child #3, Count is 3

In Child #1, Count is 4

In Child #2, Count is 4

In Child #3, Count is 4

In Child #1, Count is 5

In Child #2, Count is 5

In Child #3, Count is 5

In Child #1, Count is 6

In Child #2, Count is 6

In Child #3, Count is 6

In Child #1, Count is 7

In Child #2, Count is 7

In Child #3, Count is 7

In Child #1, Count is 8

In Child #2, Count is 8

In Child #3, Count is 8

In Child #1, Count is 9

Child #1 terminating.

In Child #2, Count is 9

Child #2 terminating.

In Child #3, Count is 9

Child #3 terminating.

Child #1 joined.

Child #2 joined.

Child #3 joined.

Main thread ending.

As you can see, after the calls to Join( ) return, the threads have stopped executing.

In the early days of the .NET Framework, it was not possible to pass an argument to a thread when the thread was started because the method that serves as the entry point to a thread could not have a parameter. If information needed to be passed to a thread, various workarounds (such as using a shared variable) were required. However, this deficiency was subsequently remedied, and today it is possible to pass an argument to a thread. To do so, you must use different forms of Start( ), the Thread constructor, and the entry point method.

An argument is passed to a thread through this version of Start( ):

public void Start(object parameter)

The object passed to parameter is automatically passed to the thread’s entry point method.

Thus, to pass an argument to a thread, you pass it to Start( ).

To make use of the parameterized version of Start( ), you must use the following form of the Thread constructor:

public Thread(ParameterizedThreadStart start)

Here, start specifies the method that will be called to begin execution of the thread. Notice in this version, the type of start is ParameterizedThreadStart rather than ThreadStart, as used by the preceding examples. ParameterizedThreadStart is a delegate that is declared as shown here:

public delegate void ParameterizedThreadStart(object obj)

As you can see, this delegate takes an argument of type object. Therefore, to use this form of the Thread constructor, the thread entry point method must have an object parameter.

Here is an example that demonstrates the passing of an argument to a thread:

// Passing an argument to the thread method.

using System;

using System.Threading;

class MyThread {

public int Count;

public Thread Thrd;

// Notice that MyThread is also passed an int value.

public MyThread(string name, int num) {

Count = 0;

// Explicitly invoke ParameterizedThreadStart constructor

// for the sake of illustration.

Thrd = new Thread(new ParameterizedThreadStart(this.Run));

Thrd.Name = name;

// Here, Start() is passed num as an argument.

Thrd.Start(num);

}

// Notice that this version of Run() has

// a parameter of type object.

void Run(object num) {

Console.WriteLine(Thrd.Name +

" starting with count of " + num);

do {

Thread.Sleep(500);

Console.WriteLine("In " + Thrd.Name +

", Count is " + Count);

Count++;

} while(Count < (int) num);

Console.WriteLine(Thrd.Name + " terminating.");

}

}

class PassArgDemo {

static void Main() {

// Notice that the iteration count is passed

// to these two MyThread objects.

MyThread mt = new MyThread("Child #1", 5);

MyThread mt2 = new MyThread("Child #2", 3);

do {

Thread.Sleep(100);

} while (mt.Thrd.IsAlive || mt2.Thrd.IsAlive);

Console.WriteLine("Main thread ending.");

}

}

The output is shown here. (The actual output you see may vary.)

Child #1 starting with count of 5

Child #2 starting with count of 3

In Child #2, Count is 0

In Child #1, Count is 0

In Child #1, Count is 1

In Child #2, Count is 1

In Child #2, Count is 2

Child #2 terminating.

In Child #1, Count is 2

In Child #1, Count is 3

In Child #1, Count is 4

Child #1 terminating.

Main thread ending.

As the output shows, the first thread iterates five times and the second thread iterates three times. The iteration count is specified in the MyThread constructor and then passed to the thread entry method Run( ) through the version of Start( ) that takes an object argument.
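Because Start( ) accepts only a single object, one way to pass several values to a thread is to bundle them in an object of your own design. The following is a sketch of that idea, not the only approach; ThreadArgs, PassObjectDemo, and the field names are hypothetical.

```csharp
// A minimal sketch: pass more than one value to a thread in a single object.
using System;
using System.Threading;

// Hypothetical helper class that bundles the values to pass.
class ThreadArgs {
  public string Label;
  public int Limit;
}

class PassObjectDemo {
  // The entry point receives the argument as object and casts it back.
  static void Run(object arg) {
    ThreadArgs args = (ThreadArgs) arg;

    for(int i = 0; i < args.Limit; i++)
      Console.WriteLine(args.Label + ": " + i);
  }

  static void Main() {
    Thread t = new Thread(new ParameterizedThreadStart(Run));

    // The ThreadArgs object is handed to Run() when the thread starts.
    t.Start(new ThreadArgs { Label = "Worker", Limit = 3 });

    t.Join(); // wait for the thread to end
    Console.WriteLine("Main thread ending.");
  }
}
```

Since the parameter is of type object, the compiler cannot check that the argument is of the expected type. If the wrong type is passed, the cast inside Run( ) fails at runtime with an InvalidCastException.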

As mentioned earlier, the .NET Framework defines two types of threads: foreground and background. The only difference between the two is that a process won’t end until all of its foreground threads have ended, but background threads are terminated automatically after all foreground threads have stopped. By default, a thread is created as a foreground thread. It can be changed to a background thread by using the IsBackground property defined by Thread, as shown here:

public bool IsBackground { get; set; }

To set a thread to background, simply assign IsBackground a true value. A value of false indicates a foreground thread.
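The effect of IsBackground can be seen in a short sketch. Here, the thread's entry point never returns, yet the program still ends because the thread is marked as a background thread before it is started. The names BackgroundDemo and Worker, and the delays, are illustrative assumptions.

```csharp
// A minimal sketch: a background thread does not keep the process running.
using System;
using System.Threading;

class BackgroundDemo {
  // Hypothetical entry point that never returns on its own.
  static void Worker() {
    while(true) {
      Thread.Sleep(100);
    }
  }

  static void Main() {
    Thread t = new Thread(Worker);
    Console.WriteLine("Default IsBackground: " + t.IsBackground);

    t.IsBackground = true; // change to a background thread
    t.Start();

    Thread.Sleep(300); // let the background thread run briefly
    Console.WriteLine("Main thread ending.");

    // When Main() returns, no foreground threads remain, so the
    // background thread is terminated and the process ends.
  }
}
```

If the assignment to IsBackground is removed, the thread remains a foreground thread and the program does not end after Main( ) returns.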

Each thread has a priority setting associated with it. A thread’s priority determines, in part, how frequently a thread gains access to the CPU. In general, low-priority threads gain access to the CPU less often than high-priority threads. As a result, within a given period of time, a low-priority thread will often receive less CPU time than a high-priority thread. As you might expect, how much CPU time a thread receives profoundly affects its execution characteristics and its interaction with other threads currently executing in the system.

It is important to understand that factors other than a thread’s priority can also affect how frequently a thread gains access to the CPU. For example, if a high-priority thread is waiting on some resource, perhaps for keyboard input, it will be blocked, and a lower-priority thread will run. Thus, in this situation, a low-priority thread may gain greater access to the CPU than the high-priority thread over a specific period. Finally, precisely how task scheduling is implemented by the operating system affects how CPU time is allocated.

When a child thread is started, it receives a default priority setting. You can change a thread’s priority through the Priority property, which is a member of Thread. This is its general form:

public ThreadPriority Priority { get; set; }

ThreadPriority is an enumeration that defines the following five priority settings:

ThreadPriority.Highest

ThreadPriority.AboveNormal

ThreadPriority.Normal

ThreadPriority.BelowNormal

ThreadPriority.Lowest

The default priority setting for a thread is ThreadPriority.Normal.
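As a quick illustration, the following sketch reads a thread's default priority and then changes it before the thread is started. The names PriorityPropDemo and Worker are assumptions, and how a priority setting is honored depends on the operating system.

```csharp
// A minimal sketch: read and change a thread's priority.
using System;
using System.Threading;

class PriorityPropDemo {
  // Hypothetical entry point; it does no work.
  static void Worker() { }

  static void Main() {
    Thread t = new Thread(Worker);

    // Display the priority that a new thread receives by default.
    Console.WriteLine("Default priority: " + t.Priority);

    // Request a lower priority before starting the thread.
    t.Priority = ThreadPriority.BelowNormal;
    Console.WriteLine("Priority after change: " + t.Priority);

    t.Start();
    t.Join(); // wait for the thread to end
  }
}
```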

To understand how priorities affect thread execution, we will use an example that executes two threads, one having a higher priority than the other. The threads are created as instances of the MyThread class. The Run( ) method contains a loop that counts the number of iterations. The loop stops when either the count reaches 1,000,000,000 or the static variable stop is true. Initially, stop is set to false. The first thread to count to 1,000,000,000 sets stop to true. This causes the second thread to terminate with its next time slice. Each time through the loop, the string in currentName is checked against the name of the executing thread. If they don’t match, it means that a task-switch occurred. Each time a task-switch happens, the name of the new thread is displayed and currentName is given the name of the new thread. This allows you to watch how often each thread has access to the CPU. After both threads stop, the number of iterations for each loop is displayed.

// Demonstrate thread priorities.

using System;

using System.Threading;

class MyThread {

public int Count;

public Thread Thrd;

static bool stop = false;

static string currentName;

/* Construct a new thread. Notice that this

constructor does not actually start the

threads running. */

public MyThread(string name) {

Count = 0;

Thrd = new Thread(this.Run);

Thrd.Name = name;

currentName = name;

}

// Begin execution of new thread.

void Run() {

Console.WriteLine(Thrd.Name + " starting.");

do {

Count++;

if(currentName != Thrd.Name) {

currentName = Thrd.Name;

Console.WriteLine("In " + currentName);

}

} while(stop == false && Count < 1000000000);

stop = true;

Console.WriteLine(Thrd.Name + " terminating.");

}

}

class PriorityDemo {

static void Main() {

MyThread mt1 = new MyThread("High Priority");

MyThread mt2 = new MyThread("Low Priority");

// Set the priorities.

mt1.Thrd.Priority = ThreadPriority.AboveNormal;

mt2.Thrd.Priority = ThreadPriority.BelowNormal;

// Start the threads.

mt1.Thrd.Start();

mt2.Thrd.Start();

mt1.Thrd.Join();

mt2.Thrd.Join();

Console.WriteLine();

Console.WriteLine(mt1.Thrd.Name + " thread counted to " +

mt1.Count);

Console.WriteLine(mt2.Thrd.Name + " thread counted to " +

mt2.Count);

}

}

Here is sample output:

High Priority starting.

In High Priority

Low Priority starting.

In Low Priority

In High Priority

In Low Priority

In High Priority

In Low Priority

In High Priority

In Low Priority

In High Priority

In Low Priority

In High Priority

High Priority terminating.

Low Priority terminating.

High Priority thread counted to 1000000000

Low Priority thread counted to 23996334

In this run, of the CPU time allotted to the program, the high-priority thread got approximately 98 percent. Of course, the precise output you see may vary, depending on the speed of your CPU and the number of other tasks running on the system. Which version of Windows you are running will also have an effect.

Because multithreaded code can behave differently in different environments, you should never base your code on the execution characteristics of a single environment. For example, in the preceding example, it would be a mistake to assume that the low-priority thread will always execute at least a small amount of time before the high-priority thread finishes. In a different environment, the high-priority thread might complete before the low-priority thread has executed even once, for example.

When using multiple threads, you will sometimes need to coordinate the activities of two or more of the threads. The process by which this is achieved is called synchronization. The most common reason for using synchronization is when two or more threads need access to a shared resource that can be used by only one thread at a time. For example, when one thread is writing to a file, a second thread must be prevented from doing so at the same time. Another situation in which synchronization is needed is when one thread is waiting for an event that is caused by another thread. In this case, there must be some means by which the first thread is held in a suspended state until the event has occurred. Then the waiting thread must resume execution.

The key to synchronization is the concept of a lock, which controls access to a block of code within an object. When an object is locked by one thread, no other thread can gain access to the locked block of code. When the thread releases the lock, the object is available for use by another thread.

The lock feature is built into the C# language. Thus, all objects can be synchronized. Synchronization is supported by the keyword lock. Since synchronization was designed into C# from the start, it is much easier to use than you might first expect. In fact, for many programs, the synchronization of objects is almost transparent.

The general form of lock is shown here:

lock(lockObj) {

// statements to be synchronized

}

Here, lockObj is a reference to the object being synchronized. If you want to synchronize only a single statement, the curly braces are not needed. A lock statement ensures that the section of code protected by the lock for the given object can be used only by the thread that obtains the lock. All other threads are blocked until the lock is removed. The lock is released when the block is exited.

The object you lock on is an object that represents the resource being synchronized. In some cases, this will be an instance of the resource itself or simply an arbitrary instance of object that is being used to provide synchronization. A key point to understand about lock is that the lock-on object should not be publicly accessible. Why? Because it is possible that another piece of code that is outside your control could lock on the object and never release it. In the past, it was common to use a construct such as lock(this). However, this works only if this refers to a private object. Because of the potential for error and conceptual mistakes in this regard, lock(this) is no longer recommended for general use. Instead, it is better to simply create a private object on which to lock. This is the approach used by the examples in this chapter. Be aware that you will still find many examples of lock(this) in legacy C# code. In some cases, it will be safe. In others, it will need to be changed to avoid problems.
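Before looking at a larger program, here is a minimal sketch of the private lock object pattern. Two threads increment a shared counter, and each increment is protected by a lock on a private object. The names Counter, LockSketch, and Work are hypothetical and used only for illustration.

```csharp
// A minimal sketch: protect a shared counter with a private lock object.
using System;
using System.Threading;

class Counter {
  int count;

  // Private object used only for locking. Outside code cannot lock on it.
  readonly object lockOn = new object();

  public void Increment() {
    lock(lockOn) {
      count++; // a read-modify-write sequence, protected by the lock
    }
  }

  public int Count {
    get { lock(lockOn) { return count; } }
  }
}

class LockSketch {
  static Counter ctr = new Counter();

  // Hypothetical entry point: each thread increments the counter 100,000 times.
  static void Work() {
    for(int i = 0; i < 100000; i++)
      ctr.Increment();
  }

  static void Main() {
    Thread t1 = new Thread(Work);
    Thread t2 = new Thread(Work);

    t1.Start();
    t2.Start();

    t1.Join();
    t2.Join();

    Console.WriteLine("Final count: " + ctr.Count);
  }
}
```

With the lock in place, the final count is 200000. Without it, the two threads could interleave their updates to count, and some increments could be lost.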

The following program demonstrates synchronization by controlling access to a method called SumIt( ), which sums the elements of an integer array:

// Use lock to synchronize access to an object.

using System;

using System.Threading;

class SumArray {

int sum;

object lockOn = new object(); // a private object to lock on

public int SumIt(int[] nums) {

lock(lockOn) { // lock the entire method

sum = 0; // reset sum

for(int i=0; i < nums.Length; i++) {

sum += nums[i];

Console.WriteLine("Running total for " +

Thread.CurrentThread.Name +

" is " + sum);

Thread.Sleep(10); // allow task-switch

}

return sum;

}

}

}

class MyThread {

public Thread Thrd;

int[] a;

int answer;

// Create one SumArray object for all instances of MyThread.

static SumArray sa = new SumArray();

// Construct a new thread.

public MyThread(string name, int[] nums) {

a = nums;

Thrd = new Thread(this.Run);

Thrd.Name = name;

Thrd.Start(); // start the thread

}

// Begin execution of new thread.

void Run() {

Console.WriteLine(Thrd.Name + " starting.");

answer = sa.SumIt(a);

Console.WriteLine("Sum for " + Thrd.Name +

" is " + answer);

Console.WriteLine(Thrd.Name + " terminating.");

}

}

class Sync {

static void Main() {

int[] a = {1, 2, 3, 4, 5};

MyThread mt1 = new MyThread("Child #1", a);

MyThread mt2 = new MyThread("Child #2", a);

mt1.Thrd.Join();

mt2.Thrd.Join();

}

}

Here is sample output from the program. (The actual output you see may vary slightly.)

Child #1 starting.

Running total for Child #1 is 1

Child #2 starting.

Running total for Child #1 is 3

Running total for Child #1 is 6

Running total for Child #1 is 10

Running total for Child #1 is 15

Running total for Child #2 is 1

Sum for Child #1 is 15

Child #1 terminating.

Running total for Child #2 is 3

Running total for Child #2 is 6

Running total for Child #2 is 10

Running total for Child #2 is 15

Sum for Child #2 is 15

Child #2 terminating.

As the output shows, both threads compute the proper sum of 15.

Let’s examine this program in detail. The program creates three classes. The first is SumArray. It defines the method SumIt( ), which sums an integer array. The second class is MyThread, which uses a static object called sa that is of type SumArray. Thus, only one object of SumArray is shared by all objects of type MyThread. This object is used to obtain the sum of an integer array. Notice that SumArray stores the running total in a field called sum. Thus, if two threads use SumIt( ) concurrently, both will be attempting to use sum to hold the running total. Because this will cause errors, access to SumIt( ) must be synchronized. Finally, the class Sync creates two threads and has them compute the sum of an integer array.

Inside SumIt( ), the lock statement prevents simultaneous use of the method by different threads. Notice that lock uses lockOn as the object being synchronized. This is a private object that is used solely for synchronization. Sleep( ) is called to purposely allow a task-switch to occur, if one can—but it can’t in this case. Because the code within SumIt( ) is locked, it can be used by only one thread at a time. Thus, when the second child thread begins execution, it does not enter SumIt( ) until after the first child thread is done with it. This ensures the correct result is produced.

To understand the effects of lock fully, try removing it from the body of SumIt( ). After doing this, SumIt( ) is no longer synchronized, and any number of threads can use it concurrently on the same object. The problem with this is that the running total is stored in sum, which will be changed by each thread that calls SumIt( ). Thus, when two threads call SumIt( ) at the same time on the same object, incorrect results are produced because sum reflects the summation of both threads, mixed together. For example, here is sample output from the program after lock has been removed from SumIt( ):

Child #1 starting.

Running total for Child #1 is 1

Child #2 starting.

Running total for Child #2 is 1

Running total for Child #1 is 3

Running total for Child #2 is 5

Running total for Child #1 is 8

Running total for Child #2 is 11

Running total for Child #1 is 15

Running total for Child #2 is 19

Running total for Child #1 is 24

Running total for Child #2 is 29

Sum for Child #1 is 29

Child #1 terminating.

Sum for Child #2 is 29

Child #2 terminating.

As the output shows, both child threads are using SumIt( ) at the same time on the same object, and the value of sum is corrupted.

The effects of lock are summarized here:

• For any given object, once a lock has been acquired, the object is locked and no other thread can acquire the lock.

• Other threads trying to acquire the lock on the same object will enter a wait state until the code is unlocked.

• When a thread leaves the locked block, the object is unlocked.

Although locking a method’s code, as shown in the previous example, is an easy and effective means of achieving synchronization, it will not work in all cases. For example, you might want to synchronize access to a method of a class you did not create, which is itself not synchronized. This can occur if you want to use a class that was written by a third party and for which you do not have access to the source code. Thus, it is not possible for you to add a lock statement to the appropriate method within the class. How can access to an object of this class be synchronized? Fortunately, the solution to this problem is simple: Lock access to the object from code outside the object by specifying the object in a lock statement. For example, here is an alternative implementation of the preceding program. Notice that the code within SumIt( ) is no longer locked and no longer declares the lockOn object. Instead, calls to SumIt( ) are locked within MyThread.

// Another way to use lock to synchronize access to an object.

using System;

using System.Threading;

class SumArray {

int sum;

public int SumIt(int[] nums) {

sum = 0; // reset sum

for(int i=0; i < nums.Length; i++) {

sum += nums[i];

Console.WriteLine("Running total for " +

Thread.CurrentThread.Name +

" is " + sum);

Thread.Sleep(10); // allow task-switch

}

return sum;

}

}

class MyThread {

public Thread Thrd;

int[] a;

int answer;

/* Create one SumArray object for all

instances of MyThread. */

static SumArray sa = new SumArray();

// Construct a new thread.

public MyThread(string name, int[] nums) {

a = nums;

Thrd = new Thread(this.Run);

Thrd.Name = name;

Thrd.Start(); // start the thread

}

// Begin execution of new thread.

void Run() {

Console.WriteLine(Thrd.Name + " starting.");

// Lock calls to SumIt().

lock(sa) answer = sa.SumIt(a);

Console.WriteLine("Sum for " + Thrd.Name +

" is " + answer);

Console.WriteLine(Thrd.Name + " terminating.");

}

}

class Sync {

static void Main() {

int[] a = {1, 2, 3, 4, 5};

MyThread mt1 = new MyThread("Child #1", a);

MyThread mt2 = new MyThread("Child #2", a);

mt1.Thrd.Join();

mt2.Thrd.Join();

}

}

Here, the call to sa.SumIt( ) is locked, rather than the code inside SumIt( ) itself. The code that accomplishes this is shown here:

// Lock calls to SumIt().

lock(sa) answer = sa.SumIt(a);

Because sa is a private object, it is safe to lock on. Using this approach, the program produces the same correct results as the original approach.

The C# keyword lock is really just shorthand for using the synchronization features defined by the Monitor class, which is defined in the System.Threading namespace. Monitor defines several methods that control or manage synchronization. For example, to obtain a lock on an object, call Enter( ). To release a lock, call Exit( ). The simplest form of Enter( ) is shown here, along with the Exit( ) method:

public static void Enter(object obj)

public static void Exit(object obj)

Here, obj is the object being synchronized. If the object is not available when Enter( ) is called, the calling thread will wait until it becomes available. You will seldom use Enter( ) or Exit( ), however, because a lock block automatically provides the equivalent. For this reason, lock is the preferred method of obtaining a lock on an object when programming in C#.
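To make the relationship between lock and Monitor concrete, here is a sketch that uses Enter( ) and Exit( ) directly. The class and member names are hypothetical. The try/finally arrangement is an assumption about how such code is normally written so that the lock is released even if an exception occurs. It approximates, rather than reproduces, what the compiler generates for a lock block.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class MonitorDemo {
  static readonly object lockOn = new object();
  static int total;

  // Add value to total, using Monitor directly instead of lock.
  public static int Add(int value) {
    Monitor.Enter(lockOn); // wait for and acquire the lock
    try {
      total += value;
      return total;
    }
    finally {
      Monitor.Exit(lockOn); // always release the lock
    }
  }

  static void Main() {
    ThreadStart work = () => { for(int i=0; i < 1000; i++) Add(1); };
    Thread t1 = new Thread(work);
    Thread t2 = new Thread(work);

    t1.Start();
    t2.Start();
    t1.Join();
    t2.Join();

    Console.WriteLine("Total is " + Add(0));
  }
}
```

The same method written with lock(lockOn) is shorter and cannot forget the call to Exit( ), which is why lock is normally preferred.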

One method in Monitor that you may find useful on occasion is TryEnter( ). One of its forms is shown here:

public static bool TryEnter(object obj)

It returns true if the calling thread obtains a lock on obj and false if it doesn’t. In no case does the calling thread wait. You could use this method to implement an alternative if the desired object is unavailable.
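The following sketch shows one way TryEnter( ) might be used. All names are hypothetical. ManualResetEvent, which is not described in this section, is used here only to make the demonstration repeatable by ensuring that a second thread really holds the lock when the second attempt is made.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class TryEnterDemo {
  static readonly object lockOn = new object();

  // Return true if the work was done, false if the lock was busy.
  public static bool TryDoWork() {
    if(Monitor.TryEnter(lockOn)) {
      try {
        // ... use the protected resource here ...
        return true;
      }
      finally {
        Monitor.Exit(lockOn); // release the lock that TryEnter() obtained
      }
    }
    return false; // lock unavailable, so the caller can do something else
  }

  // Hold the lock on another thread, then call TryDoWork() from this one.
  public static bool TryWhileHeldElsewhere() {
    ManualResetEvent held = new ManualResetEvent(false);
    ManualResetEvent done = new ManualResetEvent(false);

    Thread holder = new Thread(() => {
      lock(lockOn) {
        held.Set();     // signal that the lock is now held
        done.WaitOne(); // keep holding it until told to stop
      }
    });

    holder.Start();
    held.WaitOne();
    bool result = TryDoWork();
    done.Set();
    holder.Join();
    return result;
  }

  static void Main() {
    Console.WriteLine("Lock free: " + TryDoWork());
    Console.WriteLine("Lock held elsewhere: " + TryWhileHeldElsewhere());
  }
}
```

The first call succeeds because nothing holds the lock. The second returns false immediately, without blocking, because another thread owns the lock at that moment.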

Monitor also defines these three methods: Wait( ), Pulse( ), and PulseAll( ). They are described in the next section.

Consider the following situation. A thread called T is executing inside a lock block and needs access to a resource, called R, that is temporarily unavailable. What should T do? If T enters some form of polling loop that waits for R, then T ties up the lock, blocking other threads’ access to it. This is a less than optimal solution because it partially defeats the advantages of programming for a multithreaded environment. A better solution is to have T temporarily relinquish the lock, allowing another thread to run. When R becomes available, T can be notified and resume execution. Such an approach relies upon some form of interthread communication in which one thread can notify another that it is blocked and be notified when it can resume execution. C# supports interthread communication with the Wait( ), Pulse( ), and PulseAll( ) methods.

The Wait( ), Pulse( ), and PulseAll( ) methods are defined by the Monitor class. These methods can be called only from within a locked block of code. Here is how they are used. When a thread is temporarily blocked from running, it calls Wait( ). This causes the thread to go to sleep and the lock for that object to be released, allowing another thread to acquire the lock. At a later point, the sleeping thread is awakened when some other thread enters the same lock and calls Pulse( ) or PulseAll( ). A call to Pulse( ) resumes the first thread in the queue of threads waiting for the lock. A call to PulseAll( ) signals the release of the lock to all waiting threads.

Here are two commonly used forms of Wait( ):

public static bool Wait(object obj)

public static bool Wait(object obj, int millisecondsTimeout)

The first form waits until notified. The second form waits until notified or until the specified period of milliseconds has expired. For both, obj specifies the object upon which to wait.

Here are the general forms for Pulse( ) and PulseAll( ):

public static void Pulse(object obj)

public static void PulseAll(object obj)

Here, obj is the object being released.

A SynchronizationLockException will be thrown if Wait( ), Pulse( ), or PulseAll( ) is called from code that is not within synchronized code, such as a lock block.
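Two of the rules just stated can be checked with a short experiment. The sketch below uses hypothetical names. It first calls the timed form of Wait( ) when no other thread will ever call Pulse( ), and then calls Pulse( ) from outside a lock block.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class WaitRulesDemo {
  static readonly object lockOn = new object();

  // Wait for a notification that never arrives.
  public static bool WaitWithTimeout(int milliseconds) {
    lock(lockOn) {
      // No other thread calls Pulse(), so the wait ends on the timeout.
      return Monitor.Wait(lockOn, milliseconds);
    }
  }

  // Call Pulse() without holding the lock and report whether
  // a SynchronizationLockException was thrown.
  public static bool PulseOutsideLockThrows() {
    try {
      Monitor.Pulse(lockOn);
      return false;
    }
    catch(SynchronizationLockException) {
      return true;
    }
  }

  static void Main() {
    Console.WriteLine("Notified before timeout: " + WaitWithTimeout(100));
    Console.WriteLine("Exception outside lock: " + PulseOutsideLockThrows());
  }
}
```

The timed Wait( ) should report false, because the timeout expired before any notification arrived, and the unprotected call to Pulse( ) should report that the exception was thrown.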

To understand the need for and the application of Wait( ) and Pulse( ), we will create a program that simulates the ticking of a clock by displaying the words “Tick” and “Tock” on the screen. To accomplish this, we will create a class called TickTock that contains two methods: Tick( ) and Tock( ). The Tick( ) method displays the word “Tick” and Tock( ) displays “Tock”. To run the clock, two threads are created, one that calls Tick( ) and one that calls Tock( ). The goal is to make the two threads execute in a way that the output from the program displays a consistent “Tick Tock”—that is, a repeated pattern of one “Tick” followed by one “Tock.”

// Use Wait() and Pulse() to create a ticking clock.

using System;

using System.Threading;

class TickTock {

object lockOn = new object();

public void Tick(bool running) {

lock(lockOn) {

if(!running) { // stop the clock

Monitor.Pulse(lockOn); // notify any waiting threads

return;

}

Console.Write("Tick ");

Monitor.Pulse(lockOn); // let Tock() run

Monitor.Wait(lockOn); // wait for Tock() to complete

}

}

public void Tock(bool running) {

lock(lockOn) {

if(!running) { // stop the clock

Monitor.Pulse(lockOn); // notify any waiting threads

return;

}

Console.WriteLine("Tock");

Monitor.Pulse(lockOn); // let Tick() run

Monitor.Wait(lockOn); // wait for Tick() to complete

}

}

}

class MyThread {

public Thread Thrd;

TickTock ttOb;

// Construct a new thread.

public MyThread(string name, TickTock tt) {

Thrd = new Thread(this.Run);

ttOb = tt;

Thrd.Name = name;

Thrd.Start();

}

// Begin execution of new thread.

void Run() {

if(Thrd.Name == "Tick") {

for(int i=0; i<5; i++) ttOb.Tick(true);

ttOb.Tick(false);

}

else {

for(int i=0; i<5; i++) ttOb.Tock(true);

ttOb.Tock(false);

}

}

}

class TickingClock {

static void Main() {

TickTock tt = new TickTock();

MyThread mt1 = new MyThread("Tick", tt);

MyThread mt2 = new MyThread("Tock", tt);

mt1.Thrd.Join();

mt2.Thrd.Join();

Console.WriteLine("Clock Stopped");

}

}

Here is the output produced by the program:

Tick Tock

Tick Tock

Tick Tock

Tick Tock

Tick Tock

Clock Stopped

Let’s take a close look at this program. In Main( ), a TickTock object called tt is created, and this object is used to start two threads of execution. Inside the Run( ) method of MyThread, if the name of the thread is “Tick,” calls to Tick( ) are made. If the name of the thread is “Tock,” the Tock( ) method is called. Five calls that pass true as an argument are made to each method. The clock runs as long as true is passed. A final call that passes false to each method stops the clock.

The most important part of the program is found in the Tick( ) and Tock( ) methods. We will begin with the Tick( ) method, which, for convenience, is shown here:

public void Tick(bool running) {

lock(lockOn) {

if(!running) { // stop the clock

Monitor.Pulse(lockOn); // notify any waiting threads

return;

}

Console.Write("Tick ");

Monitor.Pulse(lockOn); // let Tock() run

Monitor.Wait(lockOn); // wait for Tock() to complete

}

}

First, notice that the code in Tick( ) is contained within a lock block. Recall, Wait( ) and Pulse( ) can be used only inside synchronized blocks. The method begins by checking the value of the running parameter. This parameter is used to provide a clean shutdown of the clock. If it is false, then the clock has been stopped. If this is the case, a call to Pulse( ) is made to enable any waiting thread to run. We will return to this point in a moment. Assuming the clock is running when Tick( ) executes, the word “Tick” is displayed, and then a call to Pulse( ) takes place followed by a call to Wait( ). The call to Pulse( ) allows a thread waiting on the same lock to run. The call to Wait( ) causes Tick( ) to suspend until another thread calls Pulse( ). Thus, when Tick( ) is called, it displays one “Tick,” lets another thread run, and then suspends.

The Tock( ) method is an exact copy of Tick( ), except that it displays “Tock.” Thus, when entered, it displays “Tock,” calls Pulse( ), and then waits. When viewed as a pair, a call to Tick( ) can be followed only by a call to Tock( ), which can be followed only by a call to Tick( ), and so on. Therefore, the two methods are mutually synchronized.

The reason for the call to Pulse( ) when the clock is stopped is to allow a final call to Wait( ) to succeed. Remember, both Tick( ) and Tock( ) execute a call to Wait( ) after displaying their message. The problem is that when the clock is stopped, one of the methods will still be waiting. Thus, a final call to Pulse( ) is required in order for the waiting method to run. As an experiment, try removing this call to Pulse( ) inside Tick( ) and watch what happens. As you will see, the program will “hang,” and you will need to press ctrl-c to exit. The reason for this is that when the final call to Tock( ) calls Wait( ), there is no corresponding call to Pulse( ) that lets Tock( ) conclude. Thus, Tock( ) just sits there, waiting forever.

Before moving on, if you have any doubt that the calls to Wait( ) and Pulse( ) are actually needed to make the “clock” run right, substitute this version of TickTock into the preceding program. It has all calls to Wait( ) and Pulse( ) removed.

// A nonfunctional version of TickTock.

class TickTock {

object lockOn = new object();

public void Tick(bool running) {

lock(lockOn) {

if(!running) { // stop the clock

return;

}

Console.Write("Tick ");

}

}

public void Tock(bool running) {

lock(lockOn) {

if(!running) { // stop the clock

return;

}

Console.WriteLine("Tock");

}

}

}

After the substitution, the output produced by the program will look like this:

Tick Tick Tick Tick Tick Tock

Tock

Tock

Tock

Tock

Clock Stopped

Clearly, the Tick( ) and Tock( ) methods are no longer synchronized!

When developing multithreaded programs, you must be careful to avoid deadlock and race conditions. Deadlock is, as the name implies, a situation in which one thread is waiting for another thread to do something, but that other thread is waiting on the first. Thus, both threads are suspended, waiting for each other, and neither executes. This situation is analogous to two overly polite people both insisting that the other step through a door first!

Avoiding deadlock seems easy, but it’s not. For example, deadlock can occur in roundabout ways. Consider the TickTock class. As explained, if a final Pulse( ) is not executed by Tick( ) or Tock( ), then one or the other will be waiting indefinitely and the program is deadlocked. Often the cause of the deadlock is not readily understood simply by looking at the source code to the program, because concurrently executing threads can interact in complex ways at runtime. To avoid deadlock, careful programming and thorough testing are required. In general, if a multithreaded program occasionally “hangs,” deadlock is the likely cause.
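One common source of deadlock, offered here as an illustration rather than as something taken from the preceding programs, is two threads that acquire the same two locks in opposite orders. A widely used defense is to make every thread acquire the locks in one fixed order. The following sketch uses hypothetical names and assumes that a simple fixed order is practical for the code in question.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class LockOrderDemo {
  static readonly object lockA = new object();
  static readonly object lockB = new object();
  static int a = 100;
  static int b = 100;

  public static void MoveAToB(int amount) {
    lock(lockA) {   // always lockA first
      lock(lockB) { // then lockB
        a -= amount;
        b += amount;
      }
    }
  }

  public static void MoveBToA(int amount) {
    lock(lockA) {   // same order as MoveAToB(): lockA, then lockB
      lock(lockB) {
        b -= amount;
        a += amount;
      }
    }
  }

  public static int Total() {
    lock(lockA) {
      lock(lockB) { return a + b; }
    }
  }

  static void Main() {
    Thread t1 = new Thread(() => { for(int i=0; i < 1000; i++) MoveAToB(1); });
    Thread t2 = new Thread(() => { for(int i=0; i < 1000; i++) MoveBToA(1); });

    t1.Start();
    t2.Start();
    t1.Join();
    t2.Join();

    Console.WriteLine("Total is " + Total());
  }
}
```

If MoveBToA( ) instead locked lockB first and lockA second, each thread could end up holding one lock while waiting for the other, and neither would ever proceed. In a program this small, a single lock would also do the job. Two locks are used only to show the ordering rule.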

A race condition occurs when two (or more) threads attempt to access a shared resource at the same time, without proper synchronization. For example, one thread may be writing a new value to a variable while another thread is incrementing the variable’s current value. Without synchronization, the new value of the variable will depend on the order in which the threads execute. (Does the second thread increment the original value or the new value written by the first thread?) In situations like this, the two threads are said to be “racing each other,” with the final outcome determined by which thread finishes first. Like deadlock, a race condition can occur in difficult-to-discover ways. The solution is prevention: careful programming that properly synchronizes access to shared resources.
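The following sketch makes a race condition visible. The names are hypothetical. Two threads increment a shared counter, first without synchronization and then with a lock. Because count++ reads, modifies, and writes the variable, unsynchronized increments from the two threads can overwrite each other.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class RaceDemo {
  static int count;
  static readonly object lockOn = new object();

  // Increment count 100,000 times on each of two threads.
  public static int Run(bool synchronize) {
    count = 0;

    ThreadStart work = () => {
      for(int i=0; i < 100000; i++) {
        if(synchronize) {
          lock(lockOn) { count++; }
        }
        else {
          count++; // unsynchronized read-modify-write
        }
      }
    };

    Thread t1 = new Thread(work);
    Thread t2 = new Thread(work);
    t1.Start();
    t2.Start();
    t1.Join();
    t2.Join();

    return count;
  }

  static void Main() {
    Console.WriteLine("Without lock: " + Run(false)); // result can vary
    Console.WriteLine("With lock: " + Run(true));
  }
}
```

With the lock, the result is always 200000. Without it, the result depends on how the threads happen to interleave and is often smaller, although on some runs it may come out right by chance.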

It is possible to synchronize an entire method by using the MethodImplAttribute attribute. This approach can be used as an alternative to the lock statement in cases in which the entire contents of a method are to be locked. MethodImplAttribute is defined within the System.Runtime.CompilerServices namespace. The constructor that applies to synchronization is shown here:

public MethodImplAttribute(MethodImplOptions methodImplOptions)

Here, methodImplOptions specifies the implementation attribute. To synchronize a method, specify MethodImplOptions.Synchronized. This attribute causes the entire method to be locked on the instance (that is, via this). (In the case of static methods, the type is locked on.) Thus, it must not be used on a public object or with a public class.

Here is a rewrite of the TickTock class that uses MethodImplAttribute to provide synchronization:

// Use MethodImplAttribute to synchronize a method.

using System;

using System.Threading;

using System.Runtime.CompilerServices;

// Rewrite of TickTock to use MethodImplOptions.Synchronized.

class TickTock {

/* The following attribute synchronizes the entire

Tick() method. */

[MethodImplAttribute(MethodImplOptions.Synchronized)]

public void Tick(bool running) {

if(!running) { // stop the clock

Monitor.Pulse(this); // notify any waiting threads

return;

}

Console.Write("Tick ");

Monitor.Pulse(this); // let Tock() run

Monitor.Wait(this); // wait for Tock() to complete

}

/* The following attribute synchronizes the entire

Tock() method. */

[MethodImplAttribute(MethodImplOptions.Synchronized)]

public void Tock(bool running) {

if(!running) { // stop the clock

Monitor.Pulse(this); // notify any waiting threads

return;

}

Console.WriteLine("Tock");

Monitor.Pulse(this); // let Tick() run

Monitor.Wait(this); // wait for Tick() to complete

}

}

class MyThread {

public Thread Thrd;

TickTock ttOb;

// Construct a new thread.

public MyThread(string name, TickTock tt) {

Thrd = new Thread(this.Run);

ttOb = tt;

Thrd.Name = name;

Thrd.Start();

}

// Begin execution of new thread.

void Run() {

if(Thrd.Name == "Tick") {

for(int i=0; i<5; i++) ttOb.Tick(true);

ttOb.Tick(false);

}

else {

for(int i=0; i<5; i++) ttOb.Tock(true);

ttOb.Tock(false);

}

}

}

class TickingClock {

static void Main() {

TickTock tt = new TickTock();

MyThread mt1 = new MyThread("Tick", tt);

MyThread mt2 = new MyThread("Tock", tt);

mt1.Thrd.Join();

mt2.Thrd.Join();

Console.WriteLine("Clock Stopped");

}

}

The proper Tick Tock output is the same as before.

As long as the method being synchronized is not defined by a public class or called on a public object, then whether you use lock or MethodImplAttribute is your decision. Both produce the same results. Because lock is a keyword built into C#, that is the approach the examples in this book will use.

REMEMBER Do not use MethodImplAttribute with public classes or public instances. Instead, use lock, locking on a private object (as explained earlier).

Although C#’s lock statement is sufficient for many synchronization needs, some situations, such as restricting access to a shared resource, are sometimes more conveniently handled by other synchronization mechanisms built into the .NET Framework. The two described here are related to each other: mutexes and semaphores.

A mutex is a mutually exclusive synchronization object. This means it can be acquired by one and only one thread at a time. The mutex is designed for those situations in which a shared resource can be used by only one thread at a time. For example, imagine a log file that is shared by several processes, but only one process can write to that file at any one time. A mutex is the perfect synchronization device to handle this situation.

The mutex is supported by the System.Threading.Mutex class. It has several constructors. Two commonly used ones are shown here:

public Mutex( )

public Mutex(bool initiallyOwned)

The first version creates a mutex that is initially unowned. In the second version, if initiallyOwned is true, the initial state of the mutex is owned by the calling thread. Otherwise, it is unowned.

To acquire the mutex, your code will call WaitOne( ) on the mutex. This method is inherited by Mutex from the System.Threading.WaitHandle class. Here is its simplest form:

public bool WaitOne( )

It waits until the mutex on which it is called can be acquired. Thus, it blocks execution of the calling thread until the specified mutex is available. It always returns true.

When your code no longer needs ownership of the mutex, it releases it by calling ReleaseMutex( ), shown here:

public void ReleaseMutex( )

This releases the mutex on which it is called, enabling the mutex to be acquired by another thread.

To use a mutex to synchronize access to a shared resource, you will use WaitOne( ) and ReleaseMutex( ), as shown in the following sequence:

Mutex myMtx = new Mutex();
// ...
myMtx.WaitOne(); // wait to acquire the mutex
// Access the shared resource.
myMtx.ReleaseMutex(); // release the mutex

When the call to WaitOne( ) takes place, execution of the thread will suspend until the mutex can be acquired. When the call to ReleaseMutex( ) takes place, the mutex is released and another thread can acquire it. Using this approach, access to a shared resource can be limited to one thread at a time.
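Unlike a lock block, a mutex is not released automatically if an exception interrupts the code that owns it. One way to guard against this, shown here as a sketch with hypothetical names, is to place the call to ReleaseMutex( ) in a finally block.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class MutexGuardDemo {
  static Mutex mtx = new Mutex();
  static int shared;

  public static void Add(int amount) {
    mtx.WaitOne(); // wait to acquire the mutex
    try {
      shared += amount; // access the shared resource
    }
    finally {
      mtx.ReleaseMutex(); // release the mutex even if an exception occurs
    }
  }

  // Run two threads that each add 1 to shared perThread times.
  public static int Run(int perThread) {
    shared = 0;

    ThreadStart work = () => { for(int i=0; i < perThread; i++) Add(1); };
    Thread t1 = new Thread(work);
    Thread t2 = new Thread(work);

    t1.Start();
    t2.Start();
    t1.Join();
    t2.Join();

    return shared;
  }

  static void Main() {
    Console.WriteLine("Shared value: " + Run(1000));
  }
}
```

Each call to Add( ) pairs exactly one WaitOne( ) with one ReleaseMutex( ), so the mutex is never left owned by a thread that has finished with the resource.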

The following program puts this framework into action. It creates two threads, IncThread and DecThread, which both access a shared resource called SharedRes.Count. IncThread increments SharedRes.Count and DecThread decrements it. To prevent both threads from accessing SharedRes.Count at the same time, access is synchronized by the Mtx mutex, which is also part of the SharedRes class.

// Use a Mutex.

using System;

using System.Threading;

// This class contains a shared resource (Count),

// and a mutex (Mtx) to control access to it.

class SharedRes {

public static int Count = 0;

public static Mutex Mtx = new Mutex();

}

// This thread increments SharedRes.Count.

class IncThread {

int num;

public Thread Thrd;

public IncThread(string name, int n) {

Thrd = new Thread(this.Run);

num = n;

Thrd.Name = name;

Thrd.Start();

}

// Entry point of thread.

void Run() {

Console.WriteLine(Thrd.Name + " is waiting for the mutex.");

// Acquire the Mutex.

SharedRes.Mtx.WaitOne();

Console.WriteLine(Thrd.Name + " acquires the mutex.");

do {

Thread.Sleep(500);

SharedRes.Count++;

Console.WriteLine("In " + Thrd.Name +

", SharedRes.Count is " + SharedRes.Count);

num--;

} while(num > 0);

Console.WriteLine(Thrd.Name + " releases the mutex.");

// Release the Mutex.

SharedRes.Mtx.ReleaseMutex();

}

}

// This thread decrements SharedRes.Count.

class DecThread {

int num;

public Thread Thrd;

public DecThread(string name, int n) {

Thrd = new Thread(new ThreadStart(this.Run));

num = n;

Thrd.Name = name;

Thrd.Start();

}

// Entry point of thread.

void Run() {

Console.WriteLine(Thrd.Name + " is waiting for the mutex.");

// Acquire the Mutex.

SharedRes.Mtx.WaitOne();

Console.WriteLine(Thrd.Name + " acquires the mutex.");

do {

Thread.Sleep(500);

SharedRes.Count--;

Console.WriteLine("In " + Thrd.Name +

", SharedRes.Count is " + SharedRes.Count);

num--;

} while(num > 0);

Console.WriteLine(Thrd.Name + " releases the mutex.");

// Release the Mutex.

SharedRes.Mtx.ReleaseMutex();

}

}

class MutexDemo {

static void Main() {

// Construct two threads.

IncThread mt1 = new IncThread("Increment Thread", 5);

Thread.Sleep(1); // let the Increment thread start

DecThread mt2 = new DecThread("Decrement Thread", 5);

mt1.Thrd.Join();

mt2.Thrd.Join();

}

}

The output is shown here:

Increment Thread is waiting for the mutex.

Increment Thread acquires the mutex.

Decrement Thread is waiting for the mutex.

In Increment Thread, SharedRes.Count is 1

In Increment Thread, SharedRes.Count is 2

In Increment Thread, SharedRes.Count is 3

In Increment Thread, SharedRes.Count is 4

In Increment Thread, SharedRes.Count is 5

Increment Thread releases the mutex.

Decrement Thread acquires the mutex.

In Decrement Thread, SharedRes.Count is 4

In Decrement Thread, SharedRes.Count is 3

In Decrement Thread, SharedRes.Count is 2

In Decrement Thread, SharedRes.Count is 1

In Decrement Thread, SharedRes.Count is 0

Decrement Thread releases the mutex.

As the output shows, access to SharedRes.Count is synchronized, with only one thread at a time being able to change its value.

To prove that the Mtx mutex was needed to produce the preceding output, try commenting out the calls to WaitOne( ) and ReleaseMutex( ) in the preceding program. When you run the program, you will see the following sequence (the actual output you see may vary):

In Increment Thread, SharedRes.Count is 1

In Decrement Thread, SharedRes.Count is 0

In Increment Thread, SharedRes.Count is 1

In Decrement Thread, SharedRes.Count is 0

In Increment Thread, SharedRes.Count is 1

In Decrement Thread, SharedRes.Count is 0

In Increment Thread, SharedRes.Count is 1

In Decrement Thread, SharedRes.Count is 0

In Increment Thread, SharedRes.Count is 1

As this output shows, without the mutex, increments and decrements to SharedRes.Count are interspersed rather than sequenced.

The mutex created by the previous example is known only to the process that creates it. However, it is possible to create a mutex that is known systemwide. To do so, you must create a named mutex, using one of these constructors:

public Mutex(bool initiallyOwned, string name)

public Mutex(bool initiallyOwned, string name, out bool createdNew)

In both forms, the name of the mutex is passed in name. In the first form, if initiallyOwned is true, then ownership of the mutex is requested. However, because a systemwide mutex might already be owned by another process, it is better to specify false for this parameter. In the second form, on return createdNew will be true if the named mutex was newly created by the call, in which case any requested initial ownership was granted. It will be false if a mutex with that name already existed, in which case ownership was not granted. (There is also a third form of the Mutex constructor that allows you to specify a MutexSecurity object, which controls access.) Using a named mutex enables you to manage interprocess synchronization.
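The createdNew parameter can be observed with a small experiment. The following sketch uses hypothetical class and mutex names. It opens the same named mutex twice within one process, standing in for two separate programs that use the same name. It assumes that no other program on the system happens to be using that name.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class NamedMutexDemo {
  // Open the same named mutex twice and report createdNew for each call.
  public static bool[] CreateTwice(string name) {
    bool first, second;

    using(Mutex m1 = new Mutex(false, name, out first))
    using(Mutex m2 = new Mutex(false, name, out second)) {
      return new bool[] { first, second };
    }
  }

  static void Main() {
    // "MyAppDemoMutex" is an arbitrary, made-up name.
    bool[] results = CreateTwice("MyAppDemoMutex");

    Console.WriteLine("First call created the mutex: " + results[0]);
    Console.WriteLine("Second call created the mutex: " + results[1]);
  }
}
```

Under the stated assumption, the first call creates the mutex and the second finds that it already exists. A program could use the same test at startup to detect that another copy of itself is already running.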

One other point: It is legal for a thread that has acquired a mutex to make one or more additional calls to WaitOne( ) prior to calling ReleaseMutex( ), and these additional calls will succeed. That is, redundant calls to WaitOne( ) will not block a thread that already owns the mutex. However, the number of calls to WaitOne( ) must be balanced by the same number of calls to ReleaseMutex( ) before the mutex is released.
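The balancing rule can be seen in the following sketch, which uses hypothetical names. The main thread acquires the mutex twice and then releases it twice. After each release, a helper thread checks whether the mutex is available by calling the WaitOne(int) form with a timeout of zero, which returns immediately rather than waiting.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class MutexReentryDemo {
  // Report whether a different thread can acquire m right now.
  public static bool OtherThreadCanAcquire(Mutex m) {
    bool acquired = false;

    Thread t = new Thread(() => {
      acquired = m.WaitOne(0);       // try once, without waiting
      if(acquired) m.ReleaseMutex(); // give it straight back
    });

    t.Start();
    t.Join();
    return acquired;
  }

  // Acquire twice, release twice, and probe after each release.
  public static bool[] Run() {
    Mutex mtx = new Mutex();

    mtx.WaitOne();
    mtx.WaitOne(); // does not block because this thread already owns mtx

    mtx.ReleaseMutex(); // first release: still owned by this thread
    bool afterFirst = OtherThreadCanAcquire(mtx);

    mtx.ReleaseMutex(); // second release: now free
    bool afterSecond = OtherThreadCanAcquire(mtx);

    return new bool[] { afterFirst, afterSecond };
  }

  static void Main() {
    bool[] results = Run();

    Console.WriteLine("Available after first release: " + results[0]);
    Console.WriteLine("Available after second release: " + results[1]);
  }
}
```

The helper thread cannot acquire the mutex after the first release, because one call to WaitOne( ) is still unmatched. It succeeds only after the second release.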

A semaphore is similar to a mutex except that it can grant more than one thread access to a shared resource at the same time. Thus, the semaphore is useful when a collection of resources is being synchronized. A semaphore controls access to a shared resource through the use of a counter. If the counter is greater than zero, then access is allowed. If it is zero, access is denied. What the counter is counting are permits. Thus, to access the resource, a thread must be granted a permit from the semaphore.

In general, to use a semaphore, the thread that wants access to the shared resource tries to acquire a permit. If the semaphore’s counter is greater than zero, the thread acquires a permit, which causes the semaphore’s count to be decremented. Otherwise, the thread will block until a permit can be acquired. When the thread no longer needs access to the shared resource, it releases the permit, which causes the semaphore’s count to be incremented. If there is another thread waiting for a permit, then that thread will acquire a permit at that time. The number of simultaneous accesses permitted is specified when the semaphore is created. If you create a semaphore that allows only one access, then a semaphore acts just like a mutex.

Semaphores are especially useful in situations in which a shared resource consists of a group or pool. For example, a collection of network connections, any of which can be used for communication, is a resource pool. A thread needing a network connection doesn’t care which one it gets. In this case, a semaphore offers a convenient mechanism to manage access to the connections.

The semaphore is implemented by System.Threading.Semaphore. It has several constructors. The simplest form is shown here:

public Semaphore(int initialCount, int maximumCount)

Here, initialCount specifies the initial value of the semaphore permit counter, which is the number of permits available. The maximum value of the counter is passed in maximumCount. Thus, maximumCount represents the maximum number of permits that can be granted by the semaphore. The value in initialCount specifies how many of these permits are initially available.

Using a semaphore is similar to using a mutex, described earlier. To acquire access, your code will call WaitOne( ) on the semaphore. This method is inherited by Semaphore from the WaitHandle class. WaitOne( ) waits until the semaphore on which it is called can be acquired. Thus, it blocks execution of the calling thread until the specified semaphore can grant permission.

When your code no longer needs ownership of the semaphore, it releases it by calling Release( ), which is shown here:

public int Release( )

public int Release(int releaseCount)

The first form releases one permit. The second form releases the number of permits specified by releaseCount. Both return the permit count that existed prior to the release.
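The return values of Release( ) can be confirmed with a short sketch. The names are hypothetical. The semaphore starts with no permits available and a maximum of three. The final call shows that releasing more permits than the maximum allows is an error, reported by a SemaphoreFullException.

```csharp
using System;
using System.Threading;

// Hypothetical class used only for illustration.
class ReleaseDemo {
  // Return the values produced by two calls to Release().
  public static int[] Run() {
    Semaphore sem = new Semaphore(0, 3); // 0 of 3 permits available

    int before1 = sem.Release();  // count goes from 0 to 1
    int before2 = sem.Release(2); // count goes from 1 to 3

    return new int[] { before1, before2 };
  }

  // Release a permit when the count is already at its maximum.
  public static bool OverReleaseThrows() {
    Semaphore sem = new Semaphore(1, 1); // already at its maximum
    try {
      sem.Release();
      return false;
    }
    catch(SemaphoreFullException) {
      return true;
    }
  }

  static void Main() {
    int[] counts = Run();

    Console.WriteLine("Count before first release: " + counts[0]);
    Console.WriteLine("Count before second release: " + counts[1]);
    Console.WriteLine("Over-release throws: " + OverReleaseThrows());
  }
}
```

The two calls return 0 and 1, which are the permit counts that existed just before each release.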

It is possible for a thread to call WaitOne( ) more than once before calling Release( ). Each successful call acquires another permit. Therefore, the number of calls to WaitOne( ) must be balanced by the same number of calls to Release( ) before all of the thread’s permits are returned. Alternatively, you can call the Release(int) form, passing a number equal to the number of times that WaitOne( ) was called.

Here is an example that illustrates the semaphore. In the program, the class MyThread uses a semaphore to allow only two MyThread threads to be executed at any one time. Thus, the resource being shared is the CPU.

// Use a Semaphore.

using System;
using System.Threading;

// This thread allows only two instances of itself
// to run at any one time.
class MyThread {
  public Thread Thrd;

  // This creates a semaphore that allows up to two
  // permits to be granted and that initially has
  // two permits available.
  static Semaphore sem = new Semaphore(2, 2);

  public MyThread(string name) {
    Thrd = new Thread(this.Run);
    Thrd.Name = name;
    Thrd.Start();
  }

  // Entry point of thread.
  void Run() {
    Console.WriteLine(Thrd.Name + " is waiting for a permit.");

    sem.WaitOne();

    Console.WriteLine(Thrd.Name + " acquires a permit.");

    for(char ch='A'; ch < 'D'; ch++) {
      Console.WriteLine(Thrd.Name + " : " + ch + " ");
      Thread.Sleep(500);
    }

    Console.WriteLine(Thrd.Name + " releases a permit.");

    // Release the semaphore.
    sem.Release();
  }
}

class SemaphoreDemo {
  static void Main() {
    // Construct three threads.
    MyThread mt1 = new MyThread("Thread #1");
    MyThread mt2 = new MyThread("Thread #2");
    MyThread mt3 = new MyThread("Thread #3");

    mt1.Thrd.Join();
    mt2.Thrd.Join();
    mt3.Thrd.Join();
  }
}

MyThread declares the semaphore sem, as shown here:

static Semaphore sem = new Semaphore(2, 2);

This creates a semaphore that can grant up to two permits and that initially has both permits available.

In MyThread.Run( ), notice that execution cannot continue until a permit is granted by the semaphore, sem. If no permits are available, then execution of that thread suspends. When a permit does become available, execution resumes and the thread can run. In Main( ), three MyThread threads are created. However, only the first two get to execute right away. The third must wait until one of the other threads releases its permit. The output, shown here, verifies this. (The actual output you see may vary slightly.)

Thread #1 is waiting for a permit.
Thread #1 acquires a permit.
Thread #1 : A
Thread #2 is waiting for a permit.
Thread #2 acquires a permit.
Thread #2 : A
Thread #3 is waiting for a permit.
Thread #1 : B
Thread #2 : B
Thread #1 : C
Thread #2 : C
Thread #1 releases a permit.
Thread #3 acquires a permit.
Thread #3 : A
Thread #2 releases a permit.
Thread #3 : B
Thread #3 : C
Thread #3 releases a permit.
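
The resource-pool scenario mentioned at the start of this discussion can be sketched in a similar way. In the following example, the ConnectionPool class is hypothetical, and plain strings stand in for network connections. The semaphore limits how many callers can hold a connection at once, and a lock protects the queue that records which connections are free. This is one possible arrangement under those assumptions, not a complete pooling implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical pool in which strings stand in for connections.
class ConnectionPool {
  readonly Semaphore permits;
  readonly Queue<string> free = new Queue<string>();

  // Assumes at least one connection is supplied.
  public ConnectionPool(string[] connections) {
    foreach(string c in connections) free.Enqueue(c);

    // One permit per pooled connection, all available at the start.
    permits = new Semaphore(connections.Length, connections.Length);
  }

  // Blocks until a connection is free, then hands it out.
  public string Acquire() {
    permits.WaitOne();
    lock(free) { return free.Dequeue(); }
  }

  // Returns a connection to the pool and gives back its permit.
  public void Release(string connection) {
    lock(free) { free.Enqueue(connection); }
    permits.Release();
  }
}

class PoolDemo {
  static void Main() {
    ConnectionPool pool =
      new ConnectionPool(new string[] { "conn-A", "conn-B" });

    string c = pool.Acquire();
    try {
      Console.WriteLine("Using " + c);
    } finally {
      // Return the connection even if an exception occurs.
      pool.Release(c);
    }
  }
}
```

Placing the call to Release( ) in a finally block ensures that a permit is not lost if the code using the connection throws an exception.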

The semaphore created by the previous example is known only to the process that creates it. However, it is possible to create a semaphore that is known systemwide. To do so, you must create a named semaphore by using one of these constructors:

public Semaphore(int initialCount, int maximumCount, string name)

public Semaphore(int initialCount, int maximumCount, string name, out bool createdNew)

In both forms, the name of the semaphore is passed in name. In the first form, if a semaphore by the specified name does not already exist, it is created using the values of initialCount and maximumCount. If it does already exist, then the values of initialCount and maximumCount are ignored. In the second form, on return, createdNew will be true if the semaphore was created. In this case, the values of initialCount and maximumCount will be used to create the semaphore. If createdNew is false, then the semaphore already exists and the values of initialCount and maximumCount are ignored. (There is also a third form of the Semaphore constructor that allows you to specify a SemaphoreSecurity object, which controls access.) Using a named semaphore enables you to manage interprocess synchronization.
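
Here is a brief sketch of the second constructor in use. It requests the same named semaphore twice so that the effect on createdNew can be seen. The name "DemoSemaphore" and the helper OpenOrCreate( ) are hypothetical. The sketch assumes a Windows environment, because named semaphores may not be supported on other platforms.

```csharp
using System;
using System.Threading;

class NamedSemaphoreSketch {
  // Opens the named semaphore, creating it if it does not yet exist.
  // On return, createdNew reports which of the two happened.
  public static Semaphore OpenOrCreate(string name, out bool createdNew) {
    return new Semaphore(1, 1, name, out createdNew);
  }

  static void Main() {
    bool first, second;

    // "DemoSemaphore" is a hypothetical name chosen for this sketch.
    using(Semaphore a = OpenOrCreate("DemoSemaphore", out first))
    using(Semaphore b = OpenOrCreate("DemoSemaphore", out second)) {
      Console.WriteLine("First call created it: " + first);
      Console.WriteLine("Second call created it: " + second);
    }
  }
}
```

If no other process already holds a semaphore with that name, the first call is expected to report that the semaphore was created. The second call is expected to report that it was not, because the semaphore still exists when the second request is made.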

C# supports another type of synchronization object: the event. There are two types of events: manual reset and auto reset. These are supported by the classes ManualResetEvent and AutoResetEvent, both of which are derived from EventWaitHandle. They are used in situations in which one thread is waiting for some event to occur in another thread. When the event takes place, the second thread signals the first, allowing it to resume execution.

The constructors for ManualResetEvent and AutoResetEvent are shown here:

public ManualResetEvent(bool initialState)

public AutoResetEvent(bool initialState)

Here, if initialState is true, the event is initially signaled. If initialState is false, the event is initially non-signaled.

Events are easy to use. For a ManualResetEvent, the procedure works like this. A thread that is waiting for some event simply calls WaitOne( ) on the event object representing that event. WaitOne( ) returns immediately if the event object is in a signaled state. Otherwise, it suspends execution of the calling thread until the event is signaled. After another thread performs the event, that thread sets the event object to a signaled state by calling Set( ). Thus, a call to Set( ) can be understood as signaling that an event has occurred. After the event object is set to a signaled state, the call to WaitOne( ) will return and the first thread will resume execution. The event is returned to a non-signaled state by calling Reset( ).

The difference between AutoResetEvent and ManualResetEvent is how the event gets reset. For ManualResetEvent, the event remains signaled until a call to Reset( ) is made. For AutoResetEvent, the event automatically changes to a non-signaled state as soon as a thread waiting on that event receives the event notification and resumes execution. Thus, a call to Reset( ) is not necessary when using AutoResetEvent.
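
The difference in reset behavior can be observed directly with a small sketch. It signals an event once and then tests it twice by calling the timed form of WaitOne( ) with a time-out of zero, which checks the state without blocking. The class and method names are hypothetical.

```csharp
using System;
using System.Threading;

class ResetModeSketch {
  // Signals the event once, then polls it twice without blocking.
  // Returns how many of the two polls found the event signaled.
  public static int CountSignaledPolls(EventWaitHandle evt) {
    evt.Set();

    int hits = 0;
    if(evt.WaitOne(0)) hits++;
    if(evt.WaitOne(0)) hits++;
    return hits;
  }

  static void Main() {
    // A manual reset event stays signaled until Reset() is called.
    Console.WriteLine("Manual: " +
      CountSignaledPolls(new ManualResetEvent(false))); // Manual: 2

    // An auto reset event resets itself after the first successful wait.
    Console.WriteLine("Auto: " +
      CountSignaledPolls(new AutoResetEvent(false)));   // Auto: 1
  }
}
```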

Here is an example that illustrates ManualResetEvent:

// Use a manual event object.

using System;
using System.Threading;

// This thread signals the event passed to its constructor.
class MyThread {
  public Thread Thrd;
  ManualResetEvent mre;

  public MyThread(string name, ManualResetEvent evt) {
    Thrd = new Thread(this.Run);
    Thrd.Name = name;
    mre = evt;
    Thrd.Start();
  }

  // Entry point of thread.
  void Run() {
    Console.WriteLine("Inside thread " + Thrd.Name);

    for(int i=0; i<5; i++) {
      Console.WriteLine(Thrd.Name);
      Thread.Sleep(500);
    }

    Console.WriteLine(Thrd.Name + " Done!");

    // Signal the event.
    mre.Set();
  }
}

class ManualEventDemo {
  static void Main() {
    ManualResetEvent evtObj = new ManualResetEvent(false);

    MyThread mt1 = new MyThread("Event Thread 1", evtObj);

    Console.WriteLine("Main thread waiting for event.");

    // Wait for signaled event.
    evtObj.WaitOne();

    Console.WriteLine("Main thread received first event.");

    // Reset the event.
    evtObj.Reset();

    mt1 = new MyThread("Event Thread 2", evtObj);

    // Wait for signaled event.
    evtObj.WaitOne();

    Console.WriteLine("Main thread received second event.");
  }
}

The output is shown here. (The actual output you see may vary slightly.)

Inside thread Event Thread 1
Event Thread 1
Main thread waiting for event.
Event Thread 1
Event Thread 1
Event Thread 1
Event Thread 1
Event Thread 1 Done!
Main thread received first event.
Inside thread Event Thread 2
Event Thread 2
Event Thread 2
Event Thread 2
Event Thread 2
Event Thread 2
Event Thread 2 Done!
Main thread received second event.

First, notice that MyThread is passed a ManualResetEvent in its constructor. When MyThread’s Run( ) method finishes, it calls Set( ) on that event object, which puts the event object into a signaled state. Inside Main( ), a ManualResetEvent called evtObj is created with an initially non-signaled state. Then, a MyThread instance is created and passed evtObj. Next, the main thread waits on the event object. Because the initial state of evtObj is non-signaled, this causes the main thread to wait until the instance of MyThread calls Set( ), which puts evtObj into a signaled state. This allows the main thread to run again. Then the event is reset and the process is repeated for the second thread. Without the use of the event object, all threads would have run simultaneously and their output would have been jumbled. To verify this, try commenting out the calls to WaitOne( ) inside Main( ).

In the preceding program, if an AutoResetEvent object rather than a ManualResetEvent object were used, then the call to Reset( ) in Main( ) would not be necessary. The reason is that the event is automatically set to a non-signaled state when a thread waiting on the event is resumed. To try this, simply change all references to ManualResetEvent to AutoResetEvent and remove the calls to Reset( ). This version will execute the same as before.

One other class that is related to synchronization is Interlocked. This class offers an alternative to the other synchronization features when all you need to do is change the value of a shared variable. The methods provided by Interlocked guarantee that their operation is performed as a single, uninterruptible operation. Thus, no other synchronization is needed for that operation. Interlocked provides static methods that add two integers, increment an integer, decrement an integer, compare and set an object, exchange objects, and read a 64-bit value. All of these operations take place without interruption.
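
Before looking at Increment( ) and Decrement( ), here is a brief sketch of several of the other operations just listed: Add( ), Exchange( ), CompareExchange( ), and Read( ). It runs on a single thread so that the results are predictable. Its purpose is only to show what each method returns. The class name and the helper TrySet( ) are hypothetical.

```csharp
using System;
using System.Threading;

class InterlockedSketch {
  static int total = 10;
  static long bigValue = 5000000000L;

  // Stores newValue in location only if location currently holds
  // expected. Returns true if the store took place.
  public static bool TrySet(ref int location, int expected, int newValue) {
    // CompareExchange() returns the value location held before the call.
    return Interlocked.CompareExchange(ref location, newValue, expected)
           == expected;
  }

  static void Main() {
    // Add() returns the new value.
    Console.WriteLine(Interlocked.Add(ref total, 5));        // 15

    // Exchange() stores a value and returns the previous one.
    Console.WriteLine(Interlocked.Exchange(ref total, 100)); // 15

    Console.WriteLine(TrySet(ref total, 100, 0));  // True: total was 100
    Console.WriteLine(TrySet(ref total, 100, 7));  // False: total is now 0

    // Read() obtains a 64-bit value as a single operation.
    Console.WriteLine(Interlocked.Read(ref bigValue));       // 5000000000
  }
}
```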

The following program demonstrates two Interlocked methods: Increment( ) and Decrement( ). Here are the forms of these methods that will be used:

public static int Increment(ref int location)

public static int Decrement(ref int location)

Here, location is the variable to be incremented or decremented.

// Use Interlocked operations.

using System;

using System.Threading;

// A shared resource.

class SharedRes {

public static int Count = 0;

}

// This thread increments SharedRes.Count.

class IncThread {

public Thread Thrd;

public IncThread(string name) {

Thrd = new Thread(this.Run);

Thrd.Name = name;

Thrd.Start();

}

// Entry point of thread.

void Run() {

for(int i=0; i<5; i++) {

Interlocked.Increment(ref SharedRes.Count);

Console.WriteLine(Thrd.Name + " Count is " + SharedRes.Count);

}

}

}

// This thread decrements SharedRes.Count.

class DecThread {

public Thread Thrd;

public DecThread(string name) {