In the information systems business, ninety percent of product development efforts fail in some aspect. About thirty percent fail to produce anything at all. Most of the failures do produce a product, but people don't like it. They may not use it at all, or if they do, they may grumble endlessly. The largest part of our experience is in information systems, but similar dismal statistics seem to apply virtually everywhere products are developed.

The easiest way to avoid user dissatisfaction is to measure user satisfaction along the way, as the design takes form. Designers can also be considered users, which is another reason to employ a user satisfaction test as a communication vehicle among designers, as well as among clients, end users, and designers.

The test we use was inspired by an approach suggested by Osgood, et al., but sufficiently modified so that Osgood need not take any blame for what we've done. It was designed to have the following attributes:

1. It allows user satisfaction to be measured frequently, so changes during the design process can be detected and evaluated.

2. It provides the designer with a periodic indication of divergence of opinion about current design approaches. Designers can identify strong differences of opinion among informed individuals, differences that warn of unrecognized ambiguity or political problems.

3. It enables the designer to pinpoint specific areas of dissatisfaction so specific remedies can be considered.

4. It enables the designer to identify dissatisfaction within specific user constituencies.

5. It enables the designer to determine statistical reliability of the sample, which is important because we usually don't have the luxury of surveying the entire population of users.

6. Because it is enticing to the user to complete, it generally musters a high percentage of respondents.

7. It provides the designers with a clear understanding of just how the completed design is to be evaluated.

8. Even if the results are never summarized, the test is useful to those who fill it out. In fact, even if nobody fills it out, the process of creating the test itself will provide useful information to the designer.

9. The test is cheap, easy to use, nonintrusive, and educational to those who administer it and to those who fill it out.

The test is the same in form, but different in content for each project. To create the user satisfaction test for a particular project, we ask the clients to select a limited subset (six to ten) of the original set of attributes by which the final product will be evaluated. For example, the clients might select the following seven attributes for the design of a Do Not Disturb system:

1. multicultural

2. inexpensive

3. easy to use

4. offering few options

5. highly reliable

6. easy to service

7. having easily understood information

From this subset, we create a set of bipolar adjective pairs, one adjective representing the most favorable condition and one representing the least favorable. These are organized on a seven-point scale, with the least favorable on the left and the most favorable on the right. In our example, the test would look like Figure 21-1. The large area of white space, conspicuously entitled "Comments?", is often the most significant part.

Figure 21-1. A user satisfaction test for the Do Not Disturb Project.
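If the form is kept electronically, its structure can be captured as data. The following is a minimal sketch, not the form in Figure 21-1: it assumes the seven points are numbered 0 (least favorable, left) to 6 (most favorable, right), and the adjective pairs, `BipolarItem`, `render_form`, and `validate_response` are hypothetical names invented for illustration.

```python
from dataclasses import dataclass

# Seven-point scale: 0 is least favorable (left), 6 is most favorable (right).
SCALE = range(0, 7)


@dataclass(frozen=True)
class BipolarItem:
    attribute: str        # the client's attribute, e.g. "easy to use"
    least_favorable: str  # adjective printed at the left end of the scale
    most_favorable: str   # adjective printed at the right end of the scale


def render_form(title, items):
    """Lay out the test as plain text: one row of seven boxes per item, then Comments."""
    width = max(len(item.least_favorable) for item in items)
    lines = [title, ""]
    for item in items:
        boxes = " ".join("[ ]" for _ in SCALE)
        lines.append(f"{item.least_favorable:>{width}}  {boxes}  {item.most_favorable}")
    # Leave generous white space under "Comments?"; it is often the most useful part.
    lines += ["", "Comments?"] + [""] * 5
    return "\n".join(lines)


def validate_response(items, ratings):
    """Reject a filled-in form unless every attribute has a rating from 0 to 6."""
    for item in items:
        rating = ratings.get(item.attribute)
        if rating not in SCALE:
            raise ValueError(f"missing or out-of-range rating for {item.attribute!r}: {rating!r}")


# Hypothetical adjective pairs for three of the seven Do Not Disturb attributes.
DND_ITEMS = [
    BipolarItem("inexpensive", "expensive", "inexpensive"),
    BipolarItem("easy to use", "hard to use", "easy to use"),
    BipolarItem("highly reliable", "unreliable", "highly reliable"),
]

print(render_form("Do Not Disturb Project: user satisfaction test", DND_ITEMS))
```

Keeping the adjective pairs as data makes it cheap to revise the test when clients say it doesn't let them express what they like and don't like.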

When the design of the test has been drafted, show it to the clients and ask, "If you fill this out monthly (or whatever interval), will it enable you to express what you like and don't like?" If they answer negatively, then find out what attributes would enable them to express themselves. Then revise the test.

When the clients have eventually answered this question affirmatively, the designer knows that these seven attributes are to play a crucial role in the evaluation of the final product. The designer will keep these attributes in the foreground, rather than lapsing into implicit assumptions about how the design will be evaluated.

The user satisfaction test can be applied throughout the requirements process and on into the remainder of the project.

In designing the test itself, we use it as a tool to discover which are the important and less important attributes. We would have this benefit even if the test were never administered to anyone.

Filling out the test regularly, however, helps to keep the client involved and focused on what is really important. It also helps the design team determine how consistent the various parts of their approach are with the client's wishes.

We can also use the test as a constraint on the design process. The requirements document itself could state, for example: All attributes must have an average score of, say, at least 5.0 when administered to a specified sample of users. This, however, is not the principal use of the satisfaction test.
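Checking such a requirement is simple arithmetic. This is a minimal sketch, assuming each response is a dict from attribute name to a 0 to 6 rating and that every respondent rated the same attributes; the function names and the sample data are hypothetical.

```python
from statistics import mean


def attribute_averages(responses):
    """Average each attribute over all respondents.

    responses: one dict per respondent, mapping attribute name -> rating (0..6).
    Assumes every respondent rated the same attributes.
    """
    if not responses:
        return {}
    return {a: mean(r[a] for r in responses) for a in responses[0]}


def failing_attributes(responses, threshold=5.0):
    """Attributes whose average is below the required minimum, e.g. 5.0."""
    averages = attribute_averages(responses)
    return sorted(a for a, avg in averages.items() if avg < threshold)


# Hypothetical responses from a specified sample of three users.
sample = [
    {"easy to use": 6, "highly reliable": 5, "inexpensive": 4},
    {"easy to use": 5, "highly reliable": 6, "inexpensive": 5},
    {"easy to use": 6, "highly reliable": 4, "inexpensive": 4},
]
print(failing_attributes(sample))  # ['inexpensive']
```

Here "highly reliable" averages exactly 5.0 and so meets the requirement; only "inexpensive" (about 4.3) fails.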

The greatest importance of the test lies not in any absolute significance of the numbers, but in its ability to spot changes in satisfaction. Thus, the two most important things to look for in the responses are changes and additional comments, as discussed below.

A shift—up or down—in the average satisfaction rating of any attribute indicates something is happening. This shift is sufficient cause for the designers to follow up with an interview. Sometimes the shift is the result of no more than a general mood of depression or elation, which in itself is good to reveal. At other times, though, the shift will be an early indication of something potentially causing big trouble if allowed to continue unnoticed.
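Spotting shifts between two administrations can be automated so that no change goes unnoticed. A minimal sketch follows; the 0.3 cutoff is a hypothetical choice (the text sets no fixed threshold), and the names and data are illustrative only.

```python
from statistics import mean


def averages(responses):
    """Average rating per attribute for one administration of the test."""
    return {a: mean(r[a] for r in responses) for a in responses[0]}


def shifted_attributes(previous, current, min_shift=0.3):
    """Attributes whose average moved up or down by at least min_shift.

    Returns attribute -> signed change, so the sign shows the direction.
    The small tolerance keeps floating-point rounding from hiding a shift
    that is exactly min_shift.
    """
    before, after = averages(previous), averages(current)
    shifts = {a: after[a] - before[a] for a in before if a in after}
    return {a: round(d, 2) for a, d in shifts.items() if abs(d) >= min_shift - 1e-9}


# Hypothetical monthly administrations to the same two respondents.
january = [{"easy to use": 5, "highly reliable": 4}, {"easy to use": 6, "highly reliable": 4}]
february = [{"easy to use": 5, "highly reliable": 5}, {"easy to use": 6, "highly reliable": 4}]
print(shifted_attributes(january, february))  # {'highly reliable': 0.5}
```

The output is only a list of reasons to go and talk to people; the follow-up interview is what explains the shift.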

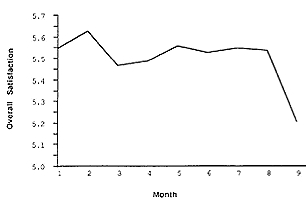

For example, Figure 21-2 shows what happened late in the requirements phase of a project to create a CAD/CAM (computer-aided design/computer-aided manufacturing) system. The average overall rating dropped from around 5.5 to 5.2. Considering the large number of people in the sample, 0.3 was a significant drop. It was traced to very severe drops in satisfaction by three engineers. When interviewed, they revealed that in a meeting to work out limitations, the one feature absolutely essential to their work had been deferred to a later modification.

Figure 21-2. Regular plotting can reveal shifts in satisfaction levels, which can indicate where further information is needed. You may wish to print and post plots of average satisfaction levels regularly, as shown in this figure.
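The arithmetic shows why a drop of 0.3 in a large sample deserves attention. If $n$ people respond and the rating of respondent $i$ changes by $\Delta x_i$, the average changes by the sum of the individual changes divided by $n$; if only $k$ respondents change, each by $d$ points, every other term is zero. The numbers plugged in below are hypothetical, since the sample size is not given: with fifty respondents, three people would each have to drop five points on the seven-point scale to pull the average down by 0.3.

$$
\Delta\bar{x} = \frac{1}{n}\sum_{i=1}^{n}\Delta x_i = \frac{k\,d}{n},
\qquad
n = 50,\; k = 3,\; d = -5 \;\Rightarrow\; \Delta\bar{x} = \frac{3 \times (-5)}{50} = -0.3
$$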

According to the three engineers, the person running the limitations meeting did not understand why their work was different from that of the other engineers, and did not listen to them when they tried to explain. The specific deferred item was easily raised to a current requirement, but the response didn't end there. Further inquiry revealed this particular facilitator had acted the same way in other meetings, though none of the people who had been disturbed happened to be in the satisfaction sample. The facilitator accepted an invitation to take further training in facilitation skills.

Listening in a meeting is much like reading any comments on the user satisfaction sheet. When people take the time to write something free-form, you can be sure it's important. What counts, though, is not always what they say but what's behind it. That's why puzzling comments must be tracked down.

For instance, one respondent said, simply, "This form stinks!" When interviewed, the respondent said he didn't like the form because he wasn't comfortable writing down some very delicate issues, issues he was more than happy to discuss in person. Translated, "This form stinks!" meant, "I don't know how to use this form to convey information I think is very important. Please come and see me about it!" That's what most "bizarre" comments usually mean.

Some years ago, we conducted a traveling seminar entitled, "Measuring and Increasing User Satisfaction." Hundreds of people attended, and many were stunningly enthusiastic. We were surprised, however, to find that about thirty percent of the attendees grew extremely angry when we presented the user satisfaction test.

Inasmuch as this was a case of user dissatisfaction with user satisfaction, we decided to apply our own methods. (Of course, we used a test to measure satisfaction with the seminar, which is how we discovered the problem in the first place. See Figure 21-3.) It turned out that most of the dissatisfied attendees equated "user satisfaction measurement" with "performance measurement," the study of automatically measurable characteristics of a computer system, such as the distribution of response times for various interactions.

Figure 21-3. A user satisfaction test for seminar evaluation.

As one performance measurer said, "What do I care whether they say they are satisfied with response time? They signed off on a document that agreed they would be satisfied with ninety percent of the responses in less than one second, and that's what my measurements show. They have no rational cause for complaint."

It may be that his users have no rational cause for complaint. It may even be that they have no legal leg to stand on. But they are the customers, and their feelings are facts, just as much as the percentage of one-second response times is a fact. And when it comes to designing new things, subjective reactions are the most important facts of all, because they warn us when our design assumptions are not turning out as planned.

If you don't think feelings are facts, important facts, ask yourself the following questions: Why do customers often risk legal action and monetary penalties by breaking contracts for products that don't satisfy them? And why do they switch suppliers for future products?

In the above case, one of the performance measurer's users was standing next to him when he made his statement. "That's right," she said, with a trace of bitterness, "we did agree that ninety percent of the responses under one second would be satisfactory. But we assumed that the other ten percent would be more or less as in the old system: no longer than thirty seconds in any case. With your new system, sometimes I have to sit for ten minutes waiting for a response..."

"That's not true," he interrupted. "We've never measured a response time of ten minutes."

"Since I have no warning when it's going to happen, I've never measured it. It may not actually be ten minutes, but it seems that way. Whatever it is, it's not satisfactory, and if you don't fix it, we're going to go back to the old system."

"But it's not my fault. I built what the requirements called for."

"It is your fault, for not helping us write a meaningful requirement," she insisted, terminating the conversation by turning her back on him.

Don't ever allow yourself to dismiss a response you get on a user satisfaction test as "just feelings." If it weren't for feelings, nobody would ask for a new product to begin with.

The user satisfaction test has many benefits, independent of its use as a satisfaction measuring device.

Because it has what psychologists call "face validity," the satisfaction test is especially useful as a communication vehicle. "Face validity" means there are no psychological arcana needed to interpret its meaning. Like any good communication device, it says what it means and it means what it says.

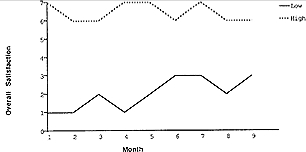

The average response measures major shifts in satisfaction, but face validity means that even the response of a single user can be used as a source of information. Figure 21-4 plots the highest and the lowest satisfaction ratings, over time, creating an "envelope" within which all responses lie. It would be easy to ignore these extremes and just go for the average, but the maximum information will be gleaned from those who rated the extremes.

Figure 21-4. Plotting the envelope of high and low responses indicates where we might go for maximum information.
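The envelope, and the people who define it, can be computed directly from the raw responses. This is a minimal sketch, assuming each administration is stored as a dict from a respondent identifier to that person's overall rating; the identifiers and data are hypothetical.

```python
def envelope(history):
    """(lowest, highest) overall rating for each administration, in order.

    history: one dict per administration, mapping respondent -> overall rating.
    """
    return [(min(r.values()), max(r.values())) for r in history]


def extreme_respondents(ratings):
    """Respondents who gave the lowest and the highest rating in one administration."""
    low, high = min(ratings.values()), max(ratings.values())
    lows = sorted(p for p, v in ratings.items() if v == low)
    highs = sorted(p for p, v in ratings.items() if v == high)
    return lows, highs


# Hypothetical overall ratings from two administrations.
history = [
    {"r1": 5, "r2": 6, "r3": 4},
    {"r1": 5, "r2": 6, "r3": 1},
]
print(envelope(history))                 # [(4, 6), (1, 6)]
print(extreme_respondents(history[-1]))  # (['r3'], ['r2'])
```

In this made-up data the average barely tells the story; the widening envelope points straight at respondent r3.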

You can also use maximum shifts to identify users who may have the most information. If a user changes "easy to use" from 6 to 3, the user no longer thinks the product is so easy to use. If another user changes the reliability scale from 0 to 6, it means most doubts about the system's reliability have dissipated. In either case, we would follow up with the user to discover the reasons for the change.
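Finding the users with the largest individual shifts is a matter of comparing each person's ratings across two administrations. A minimal sketch follows, assuming ratings are stored per respondent and per attribute; the two-point cutoff, the function name, and the respondents "A" and "B" are hypothetical, while the 6-to-3 and 0-to-6 changes mirror the examples above.

```python
def individual_shifts(before, after, min_change=2):
    """Every per-person, per-attribute change of at least min_change points.

    before/after: dict respondent -> dict attribute -> rating (0..6).
    Returns (respondent, attribute, old, new) tuples, largest change first.
    """
    changes = []
    for person, old_ratings in before.items():
        new_ratings = after.get(person)
        if new_ratings is None:  # respondent left the sample
            continue
        for attribute, old in old_ratings.items():
            new = new_ratings.get(attribute)
            if new is not None and abs(new - old) >= min_change:
                changes.append((person, attribute, old, new))
    changes.sort(key=lambda c: abs(c[3] - c[2]), reverse=True)
    return changes


before = {"A": {"easy to use": 6, "highly reliable": 4},
          "B": {"easy to use": 5, "highly reliable": 0}}
after = {"A": {"easy to use": 3, "highly reliable": 4},
         "B": {"easy to use": 5, "highly reliable": 6}}
print(individual_shifts(before, after))
# [('B', 'highly reliable', 0, 6), ('A', 'easy to use', 6, 3)]
```

Each tuple in the result is a candidate for a follow-up conversation, with the biggest surprises at the top.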

The satisfaction test can also be used, unchanged, after the product is delivered. The results then become a measure of how well people are learning to use the product, and how well it is being serviced. They also provide a starting point for initiating successor projects.

The user satisfaction test provides an additional service for teams of designers: It tells them how their performance will be measured. Without such a focusing device, each designer is likely to emphasize an idiosyncratic perception of the desired solution. Since millions of assumptions are possible, the final integrated product would undoubtedly lack a certain consistency with the real customer requirements.

In the design phase, the test can also be used to measure satisfaction among the design team members. If the designers in a large project use the test regularly, it will measure convergence among designers on what the design problem really is. Divergence provides an early indication of areas of misunderstanding, and an automatic excuse to sit down and discuss them.
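One way to quantify convergence among designers is to measure how widely their ratings spread on each attribute. This minimal sketch uses the population standard deviation from Python's `statistics` module as the spread measure; that choice, the 1.0 cutoff, and the sample ratings are assumptions for illustration (the simple range, highest minus lowest, would serve as well).

```python
from statistics import pstdev


def divergent_attributes(responses, max_spread=1.0):
    """Attributes on which the designers' ratings spread more than max_spread.

    responses: one dict per designer, mapping attribute -> rating (0..6).
    Returns attribute names, most divergent first.
    """
    spreads = {a: pstdev([r[a] for r in responses]) for a in responses[0]}
    wide = [a for a, s in spreads.items() if s > max_spread]
    return sorted(wide, key=lambda a: spreads[a], reverse=True)


# Hypothetical ratings from three designers.
designers = [
    {"easy to use": 5, "offering few options": 1},
    {"easy to use": 5, "offering few options": 6},
    {"easy to use": 6, "offering few options": 3},
]
print(divergent_attributes(designers))  # ['offering few options']
```

A wide spread on "offering few options" would suggest the designers hold different ideas of what that attribute means, which is exactly the kind of misunderstanding worth a meeting.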

There are, of course, many other ways to measure user satisfaction as a development project proceeds. The existence of a regular survey should never be used as an excuse for not supplementing it with other, common-sense methods. For instance, you can always take the opportunity simply to ask users, "How do you feel about the system right now?" They'll probably respond ninety percent of the time with an automatic, "Just fine," but the other ten percent may lead to a gold mine of information.

If the product is being built incrementally, of course, the best measure of satisfaction is the product itself—as far as it has been built. That is, you simply observe how people actually use it, avoid it, or modify it, and record this information to show what satisfies and what doesn't. This is the entire foundation of design using prototypes: You not only build a prototype, but you see what people actually do with it.

At the outset, you may not realize you're building a prototype. A classic case in computing was the design and implementation of the very first Fortran compiler. Although there are few people around today who remember this, about half of the effort in that first implementation went into the FREQUENCY statement. Few Fortran programmers today have ever heard of it, but yes, it truly did consume half of the effort.

The FREQUENCY statement was to be required after an IF statement. The compiler's job was to make efficient use of the IBM 704's two arithmetic registers and three index registers. In those days, code efficiency was a paramount issue for potential users. As people began using Fortran, they discovered the greatest inefficiency was not in the code produced by the Fortran compiler, but in the compiler itself. A typical program would be compiled ten or twenty or more times during debugging. When it was finally judged free of bugs, it might have been executed only once. Quite often, the total compile time was a hundred times the final running time of the program, and users quickly discovered the reason why. Most of the compiler's time was spent analyzing FREQUENCY statements for optimization.

One of the first "enhancements" of that first Fortran system was to use a "sense switch" on the console to allow the user to turn off FREQUENCY optimization. In those days, all you had to do was look at the setting of sense switch #1 to survey the user satisfaction with Fortran's frequency optimization feature. That's why you never heard of the FREQUENCY statement.

If the Fortran developers had been more aware of the use of a prototype to measure satisfaction, they might have first built the compiler without the frequency feature, then gotten some customer reaction before investing so much in a feature that was never to be used.

That's easy to say in hindsight. The trick is to have foresight on your next development project.

1. When there are two or more rather distinct client groups, create a user satisfaction test for each group. Each test will have its own adjectives, and the different groups may find it informative to see the others' tests. The designer, too, will find this exercise useful for surfacing ambiguities and potential conflicts.

2. During the life of a long project, you can normally expect a small, steady decline in measured user satisfaction. People grow bored, or interested in other things, so nothing quite matches the excitement of a brand-new project. Don't be unduly concerned by such a gradual decline, but, on the other hand, consider whether you need to do something—not too severe—to revive flagging interest.

3. Be sure to choose your sample large enough so it won't be overly affected by one individual. If one individual has a real gripe, you'll see it in the comments, or just as a sharp change in the individual rankings.

4. Be sure to maintain the size of your sample throughout the life of the project. If people leave, they must be replaced to keep the samples reasonably comparable.

5. If you have trouble handling bizarre comments, you may want to put an additional check-box on your user satisfaction test:

□ I have more to say. Please see me or call.

6. To create a statistically significant survey, seek the advice of a competent statistician, but first you may want to ask if your survey needs to be statistically significant. Most of the time, it will be more important just to keep the information flowing, and to follow up on any changes with interviews. Don't get bogged down in mathematics. You're after usable information, not publishable theories.

7. Ken de Lavigne warns that if you do use a prototype as a satisfaction measuring device, "Don't sell it!" He's speaking from experience in the software industry, but in many industries such as aerospace, the first prototype is so expensive, it also has to be the first production model. In those cases where the prototype is not quite so expensive—when, say, designing furniture—you can sell it as a designer original. In the quest for maximum financial return, it's all too easy to forget the prototype's information function.
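How large is "large enough" in tip 3 can be estimated without statistics: on a 0 to 6 scale, one person swinging from one end to the other changes the sum of ratings by 6, so the average moves by at most 6 divided by the sample size. This is a minimal sketch of that bound, assuming the 0 to 6 numbering; the function names and the tolerances used are hypothetical.

```python
import math

SCALE_LOW, SCALE_HIGH = 0, 6  # the seven-point scale used on the test


def max_single_influence(n):
    """Most that one respondent can move the sample average, in scale points.

    One person swinging from one end of the scale to the other changes the sum
    by (SCALE_HIGH - SCALE_LOW), so the average moves by that amount divided by n.
    """
    return (SCALE_HIGH - SCALE_LOW) / n


def min_sample_size(tolerance):
    """Smallest sample in which no single respondent can move the average by more than tolerance."""
    return math.ceil((SCALE_HIGH - SCALE_LOW) / tolerance)


print(min_sample_size(0.25))     # 24
print(max_single_influence(24))  # 0.25
```

This bound is deliberately crude; as tip 6 says, the goal is usable information, not publishable theories.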

The easiest and only reliable way to ensure users will be satisfied with the ultimate design is to measure their satisfaction as the design takes form. Without regular measurement, it's too easy to engender the response of the American who went to Paris and ordered tête de veau (calf's head) in a three-star restaurant. When he saw the veal head's beady little eyes staring at him from the platter, all he could say was "What's that?" He never did manage to eat it.

Create the user satisfaction test as early in the project as possible, soon after the attributes are listed for the first time. Administer the test on a regular basis, with the interval depending on the project's lifetime. On a one-year project, monthly surveys would seem about right.

Follow this cycle:

1. Create a user satisfaction test for your own project. Use our form or another that suits the culture of your organization. And above all, be sure to get user involvement in the process.

2. Administer the test regularly, as promised.

3. Tabulate each category and the overall satisfaction rating, then look for shifts. Follow up on the shifts to find out what's behind them.

4. Pay particular attention to any comments, especially if they express strong feelings. Never forget feelings are facts, the most important facts you have about the people for whom you're creating this system in the first place.

5. Whether or not you use a user satisfaction test, don't forget to use all the information about user satisfaction from other sources, such as reactions to prototypes, or simply off-hand comments.

All users should at least be represented in the sample. Be sure to replace people who leave the purview of the project.

Use the test with the design team, too, but keep the tabulations separate. If the measures differ widely, call a meeting of the designers and users to find out why.
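Keeping the two tabulations separate and comparing them can be sketched as follows. This assumes the same dict-per-respondent layout used for the user sample; the 1.5-point gap that counts as "differ widely" is a hypothetical choice, as are the function names and data.

```python
from statistics import mean


def tabulate(responses):
    """Average rating per attribute for one group of respondents."""
    return {a: mean(r[a] for r in responses) for a in responses[0]}


def wide_differences(designers, users, max_gap=1.5):
    """Attributes where designer and user averages differ by more than max_gap.

    Returns attribute -> (designer average, user average).
    """
    d, u = tabulate(designers), tabulate(users)
    return {a: (d[a], u[a]) for a in d if a in u and abs(d[a] - u[a]) > max_gap}


# Hypothetical, separately tabulated responses.
designers = [{"easy to use": 6, "inexpensive": 4}, {"easy to use": 6, "inexpensive": 5}]
users = [{"easy to use": 3, "inexpensive": 4}, {"easy to use": 4, "inexpensive": 5}]
print(wide_differences(designers, users))  # {'easy to use': (6, 3.5)}
```

Here the designers think the product is far easier to use than the users do, which is a reason to call the meeting, not a verdict on who is right.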