Many ensemble learning methods use a statistical technique called bootstrap sampling. A bootstrap sample of a dataset is another dataset that's obtained by randomly sampling the observations from the original dataset with replacement.

This technique is widely used in statistics, for example to estimate the standard error of sample statistics such as the mean or the standard deviation.

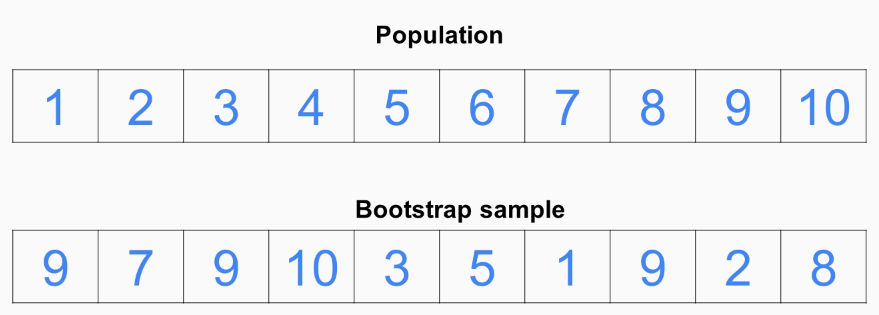

Let's understand this technique more by taking a look at the following diagram:

Let's assume that our original data consists of the numbers 1 to 10. To get a bootstrap sample, we draw 10 observations from the original data with replacement. Imagine that the 10 numbers are written on 10 cards in a hat. For the first element of your sample, you take one card at random from the hat, write the number down, and put the card back in the hat. You repeat this process until you have 10 elements. This is your bootstrap sample. Because each card is returned to the hat before the next draw, the same number can be drawn more than once, and some numbers may not be drawn at all. As you can see in the diagram, 9 appears three times in the bootstrap sample.
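The card-drawing procedure can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the helper name `bootstrap_sample` and the seed value are assumptions made for this example, and the numbers it prints will not necessarily match the ones in the diagram.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) observations from data, with replacement."""
    # rng.choices samples with replacement, so values can repeat
    return rng.choices(data, k=len(data))

population = list(range(1, 11))  # the numbers 1 to 10
rng = random.Random(42)          # arbitrary seed, only for repeatability
sample = bootstrap_sample(population, rng)

print("Original data:   ", population)
print("Bootstrap sample:", sample)
```

The sample always has the same size as the original data, but it usually contains duplicates and leaves some of the original values out.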

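Resampling with replacement does not change or improve the original data. Its value lies in repetition: drawing many bootstrap samples yields many slightly different datasets, and the variation of a statistic across them indicates how much that statistic would vary from sample to sample. This, in turn, tells us how accurate an estimate computed from the original data is likely to be.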
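As a rough illustration of the standard-error use case mentioned earlier, the following sketch repeats the resampling many times and measures the spread of the sample mean. The function name `bootstrap_standard_error`, the choice of 2,000 resamples, and the seed are assumptions made for this example, not values prescribed by the technique.

```python
import random
import statistics

def bootstrap_standard_error(data, statistic, n_resamples, rng):
    """Estimate the standard error of `statistic` by bootstrapping `data`."""
    estimates = []
    for _ in range(n_resamples):
        # One bootstrap sample: same size as data, drawn with replacement
        resample = rng.choices(data, k=len(data))
        estimates.append(statistic(resample))
    # The spread of the resampled statistics approximates the standard error
    return statistics.stdev(estimates)

data = list(range(1, 11))  # the numbers 1 to 10
rng = random.Random(0)     # arbitrary seed, only for repeatability
se_mean = bootstrap_standard_error(data, statistics.mean, 2000, rng)

print("Bootstrap standard error of the mean:", se_mean)
```

Any function that maps a list of numbers to a single value, such as `statistics.median` or `statistics.stdev`, can be passed in place of `statistics.mean`.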