Dimensionality reduction is the process of reducing the number of features under consideration by obtaining a set of principal variables. Principal Component Analysis (PCA) is one of the most widely used techniques for dimensionality reduction. Here, we will discuss why we need dimensionality reduction, and we will also see how to perform PCA in scikit-learn.

These are the main reasons for reducing the number of features when working on predictive analytics:

- It simplifies models, which makes them easier to understand and interpret. There are also computational considerations: if you are dealing with thousands of features, reducing their number can save a lot of computational resources.

- Another reason is to avoid the "curse of dimensionality," a technical term for a set of problems that arise when you work with high-dimensional data.

- It also helps to reduce overfitting: if you include many irrelevant features to predict the target, your model can end up fitting the noise in those features. Removing irrelevant features therefore helps to prevent overfitting.

Feature selection, seen earlier in this chapter, can be considered a form of dimensionality reduction. When you have a set of features that are closely related or even redundant, PCA is the preferred technique for encoding the same information using fewer features. So, what is PCA? It is a statistical procedure that converts a set of observations of possibly correlated variables into a set of linearly uncorrelated variables called principal components. We won't go into the mathematical details of PCA here.
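To make "linearly uncorrelated" concrete, here is a minimal sketch on synthetic data (not the credit card dataset): two strongly correlated features go in, and the principal components that scikit-learn's `PCA` produces have a sample correlation that is zero up to floating-point rounding. The data-generating numbers are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic, illustrative data: x2 is largely a noisy copy of x1
rng = np.random.default_rng(42)
n = 500
x1 = rng.normal(0.0, 1.0, n)
x2 = 0.9 * x1 + rng.normal(0.0, 0.3, n)
X = np.column_stack([x1, x2])

# Correlation between the two original features is high
corr_original = np.corrcoef(X, rowvar=False)[0, 1]

# Transform the data into principal component scores
Z = PCA(n_components=2).fit_transform(X)

# Correlation between the two principal components is ~0 (rounding error only)
corr_components = np.corrcoef(Z, rowvar=False)[0, 1]

print(f"original features:    {corr_original:.3f}")
print(f"principal components: {corr_components:.1e}")
```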

Let's assume we have a two-dimensional dataset. PCA identifies the direction along which the data varies the most and encodes as much of the information in the two features as possible into one single feature, reducing the number of dimensions from two to one. It does this by projecting every point onto this new axis.

As you can see in the following screenshot, the first principal component of these two features is given by the projections of the points onto the red line; this is the main mathematical intuition behind PCA:
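The projection onto the red line can be sketched from scratch with NumPy. This is a minimal illustration on made-up 2-D data, assuming the standard formulation of PCA as a singular value decomposition (SVD) of the centered data; it is not the code used in the notebook.

```python
import numpy as np

# Synthetic 2-D data with one dominant direction of variation
rng = np.random.default_rng(0)
n = 300
x1 = rng.normal(0.0, 2.0, n)
x2 = 0.8 * x1 + rng.normal(0.0, 0.5, n)
X = np.column_stack([x1, x2])

# 1. Center each feature at zero
mean = X.mean(axis=0)
X_centered = X - mean

# 2. SVD of the centered data: the rows of Vt are the principal directions,
#    ordered from the most to the least variance
_, s, Vt = np.linalg.svd(X_centered, full_matrices=False)
first_direction = Vt[0]  # unit vector along the "red line"

# 3. Project every point onto that direction: one number per point
z = X_centered @ first_direction

# Share of the total variance kept by the single new feature
explained = s[0] ** 2 / np.sum(s ** 2)

# Map the 1-D scores back to 2-D: the projected points all lie on the line
X_on_line = mean + np.outer(z, first_direction)

print(z.shape, f"{explained:.3f}")
```

The two original columns are replaced by the single column `z`, which is exactly the "reduce the dimensions from two to one" step described above.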

Now, let's go to the Jupyter Notebook to see how to apply PCA to the given dataset:

In this case, we will use the credit card default dataset. First, we apply the transformations that we have covered so far:

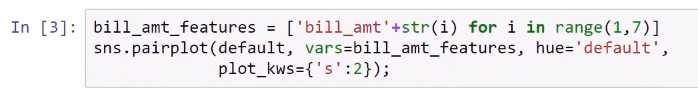

Now, let's take a look at the bill amount features. There are six of them, recording the history of bill amounts from one to six months ago, and they are closely related, as you can see from the visualization generated by the code in the following screenshot:

These features largely represent the same information: if a customer had a very high bill amount two or three months ago, it is very likely that they also had a very high bill amount one month ago. As the visualization in the following screenshot shows, these features are closely related:

We confirm this by calculating the correlation coefficients. As you can see, the features are highly correlated:
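A correlation check like this can be sketched with pandas. Because the original notebook and data are not reproduced here, this sketch builds a synthetic stand-in: each month's bill is a shared per-customer level plus month-specific noise. The column names `BILL_AMT1` to `BILL_AMT6` are an assumption modeled on the UCI "default of credit card clients" data; your notebook may use different names, and the printed values describe the synthetic data, not the real dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical column names (BILL_AMT1 = most recent month in the UCI data)
bill_cols = [f"BILL_AMT{i}" for i in range(1, 7)]

# Synthetic stand-in: each month = shared customer level + month-specific noise
rng = np.random.default_rng(0)
n = 1_000
level = rng.normal(50_000, 20_000, n)
df = pd.DataFrame({col: level + rng.normal(0, 5_000, n) for col in bill_cols})

# Pairwise Pearson correlation coefficients between the six features
corr = df[bill_cols].corr()
print(corr.round(2))
```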

For example, the correlation between the bill amount one month ago and the bill amount two months ago is 0.95. Such high correlations are a good opportunity to apply a dimensionality reduction technique such as PCA, which we import from `sklearn.decomposition` in scikit-learn, as shown in the following screenshot:

After that, we instantiate the PCA object. Then, we pass it the columns (features) to which we want to apply the PCA decomposition:

After calling the object's `fit()` method, we can access one of its attributes, the explained variance ratio (`explained_variance_ratio_`), as shown in the following screenshot:
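The import, instantiate, fit, and inspect steps can be sketched as follows. This is a minimal version, not the notebook's exact code. It runs on the same kind of synthetic stand-in as before, with hypothetical `BILL_AMT1` to `BILL_AMT6` column names, so the numbers it prints are illustrative only.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

# Synthetic stand-in for the six bill amount features (hypothetical names)
bill_cols = [f"BILL_AMT{i}" for i in range(1, 7)]
rng = np.random.default_rng(0)
n = 1_000
level = rng.normal(50_000, 20_000, n)
df = pd.DataFrame({col: level + rng.normal(0, 5_000, n) for col in bill_cols})

# n_components=None (the default) keeps min(n_samples, n_features) = 6 components
pca = PCA()
pca.fit(df[bill_cols])

# Fraction of the total variance explained by each component, largest first
print(pca.explained_variance_ratio_.round(3))
```

`PCA` centers the data but does not rescale it, so features measured on very different scales usually need standardizing first. The six bill amounts share the same unit, which is why fitting on the raw values is reasonable here.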

Let's plot this quantity to get a feel for what's going on with these features. Here, we see the explained variance ratio of all six components:

The way to read this plot is that the first principal component of these six features encodes more than 90% of their total variance. The second component explains a very small share of the variance, and the third, fourth, fifth, and sixth components explain even less.
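A bar chart like the one described can be sketched with matplotlib. Like the previous examples, it uses synthetic bill amounts and hypothetical column names, so the bar heights are illustrative and will not match the screenshot.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

# Synthetic stand-in for the six bill amount features (hypothetical names)
bill_cols = [f"BILL_AMT{i}" for i in range(1, 7)]
rng = np.random.default_rng(0)
n = 1_000
level = rng.normal(50_000, 20_000, n)
df = pd.DataFrame({col: level + rng.normal(0, 5_000, n) for col in bill_cols})

# fit() returns the fitted estimator, so the calls can be chained
pca = PCA().fit(df[bill_cols])
ratios = pca.explained_variance_ratio_

# One bar per principal component
components = np.arange(1, len(ratios) + 1)
fig, ax = plt.subplots()
ax.bar(components, ratios)
ax.set_xticks(components)
ax.set_xlabel("Principal component")
ax.set_ylabel("Explained variance ratio")
ax.set_title("Explained variance per component")
# Jupyter renders the figure at the end of the cell; in a script, call plt.show()
```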

We can also see this in the plot of cumulative explained variance shown in the following screenshot:
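The cumulative curve is the running sum of the explained variance ratios. The sketch below computes it with `np.cumsum` and then finds the smallest number of components that reaches a target share of variance. The 0.90 target and the synthetic data are assumptions for illustration. As a cross-check, it also uses scikit-learn's option of passing a float between 0 and 1 as `n_components`, which, with `svd_solver="full"`, keeps just enough components to explain more than that share of the variance.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

# Synthetic stand-in for the six bill amount features (hypothetical names)
bill_cols = [f"BILL_AMT{i}" for i in range(1, 7)]
rng = np.random.default_rng(0)
n = 1_000
level = rng.normal(50_000, 20_000, n)
df = pd.DataFrame({col: level + rng.normal(0, 5_000, n) for col in bill_cols})

pca = PCA().fit(df[bill_cols])

# Cumulative share of variance kept by the first k components
cumulative = np.cumsum(pca.explained_variance_ratio_)
for k, share in enumerate(cumulative, start=1):
    print(f"first {k} component(s): {share:.3f}")

# Smallest k whose cumulative share reaches the (assumed) target
target = 0.90
k_needed = int(np.argmax(cumulative >= target)) + 1

# scikit-learn's own selection: a float n_components keeps the fewest
# components whose cumulative explained variance exceeds that value
pca_auto = PCA(n_components=target, svd_solver="full").fit(df[bill_cols])
print(k_needed, pca_auto.n_components_)
```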

As you can see, the first component encodes more than 90% of the variance of the six features. Therefore, instead of using six features, you can use one single feature and still retain more than 90% of the variance. Alternatively, you can use the first two components and retain more than 95% of the total variance contained in the six features. This is how PCA works in practice, and we can use it as one technique for performing feature engineering.
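Here is a minimal sketch of actually swapping the six bill features for their first principal component in a DataFrame. The data is synthetic. The `BILL_AMT*` and `LIMIT_BAL` column names are assumptions modeled on the UCI data, and `bill_pc1` is a hypothetical name for the new feature. To keep two components instead, set `n_components=2` and add both columns.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

# Synthetic stand-in for the six bill amount features (hypothetical names)
bill_cols = [f"BILL_AMT{i}" for i in range(1, 7)]
rng = np.random.default_rng(0)
n = 1_000
level = rng.normal(50_000, 20_000, n)
df = pd.DataFrame({col: level + rng.normal(0, 5_000, n) for col in bill_cols})
# Hypothetical extra feature, included to show that other columns are untouched
df["LIMIT_BAL"] = rng.integers(10_000, 500_000, n)

# Compress the six bill features into their first principal component
pca = PCA(n_components=1)
bill_pc1 = pca.fit_transform(df[bill_cols])  # shape (n, 1)

# Replace the six original columns with the single new feature
df_reduced = df.drop(columns=bill_cols).assign(bill_pc1=bill_pc1[:, 0])

print(df_reduced.shape)
print(f"variance kept: {pca.explained_variance_ratio_[0]:.3f}")
```

In a full modeling workflow, you would typically fit the PCA on the training split only and call `transform` on the validation and test splits, so that no information from held-out data leaks into the new feature.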