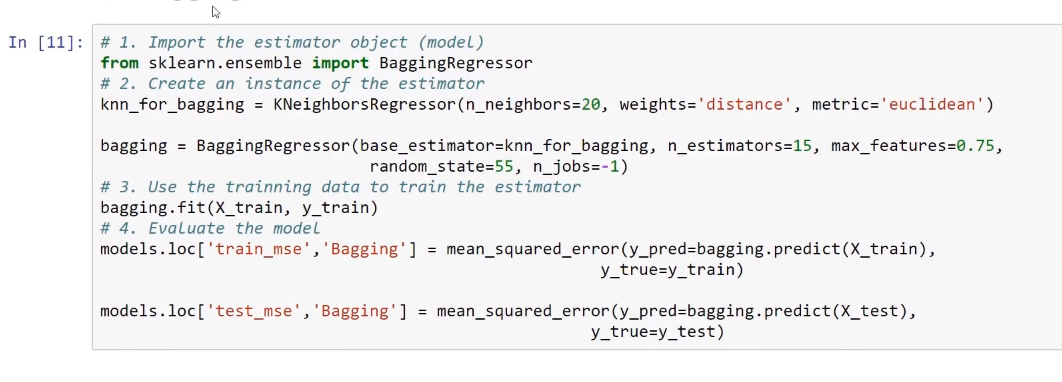

Bagging (bootstrap aggregating) is an ensemble learning method, and it can wrap any base estimator. So, let's take a case where we use KNN as the base estimator, as shown in the following screenshot:

Setting the n_estimators parameter to 15 produces an ensemble of 15 individual estimators. The algorithm draws 15 bootstrap samples of the training dataset (each sampled with replacement) and fits one KNN regressor with 20 neighbors on each sample. In the end, the bagging method combines the 15 individual predictions into a single ensemble prediction. For regressors such as these, the predictions are averaged; a majority vote is used when the base estimators are classifiers.
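To make the mechanics concrete, here is a minimal from-scratch sketch of the procedure described above, using only the Python standard library rather than a machine learning library. The helper names `knn_predict` and `bagged_knn_predict`, the toy dataset, and the seeds are all assumptions made for illustration; the sketch uses a single feature to keep the distance computation simple and is not how any particular library implements bagging.

```python
import random
import statistics


def knn_predict(train, x, k):
    """Predict y for x as the mean target of the k nearest training points (one feature)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return statistics.fmean([y for _, y in nearest])


def bagged_knn_predict(train, x, n_estimators=15, k=20, seed=0):
    """Average the predictions of n_estimators KNN regressors, each fit on a bootstrap sample."""
    rng = random.Random(seed)
    predictions = []
    for _ in range(n_estimators):
        # Bootstrap sample: draw len(train) points with replacement.
        sample = rng.choices(train, k=len(train))
        # "Fitting" KNN just means storing the sample; the work happens at prediction time.
        predictions.append(knn_predict(sample, x, k))
    # A regression ensemble aggregates by averaging, not by voting.
    return statistics.fmean(predictions), predictions


# Toy data (made up for illustration): y = 2x plus a little Gaussian noise.
data_rng = random.Random(42)
train = [(i / 10, 2 * i / 10 + data_rng.gauss(0, 0.5)) for i in range(200)]

ensemble_prediction, individual_predictions = bagged_knn_predict(train, x=10.0)
print(len(individual_predictions))
print(round(ensemble_prediction, 2))
```

Each of the 15 individual predictions differs slightly because each regressor sees a different bootstrap sample; averaging them is what reduces the variance of the final prediction.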