Chapter 8

Relying on AI to Improve Human Interaction

IN THIS CHAPTER

Communicating in new ways

Sharing ideas

Employing multimedia

Improving human sensory perception

People interact with each other in myriad ways. In fact, few people realize just how

many different ways communication occurs. When many people think about communication,

they think about writing or talking. However, interaction can take many other forms,

including eye contact, tonal quality, and even scent (see https://www.smithsonianmag.com/science-nature/the-truth-about-pheromones-100363955/). An example of the computer version of enhanced human interaction is the electronic

nose, which relies on a combination of electronics, biochemistry, and artificial intelligence

to perform its task and has been applied to a wide range of industrial applications

and research (see https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3274163/). This chapter concentrates more along the lines of standard communication, however,

including body language. You get a better understanding of how AI can enhance human

communication through means that are less costly than building your own electronic

nose.

AI can also enhance the manner in which people exchange ideas. In some cases, AI provides

entirely new methods of communication, but in many cases, AI provides a subtle (or

sometimes not so subtle) method of enhancing existing ways to exchange ideas. Humans

rely on exchanging ideas to create new technologies, build on existing technologies,

or learn about technologies needed to increase an individual’s knowledge. Ideas are

abstract, which makes exchanging them particularly difficult at times, so AI can provide

a needed bridge between people.

At one time, if someone wanted to store their knowledge to share with someone else,

they generally relied on writing. In some cases, they could also augment their communication

by using graphics of various types. However, only some people can use these two forms

of media to gain new knowledge; many people require more, which is why online sources

such as YouTube (https://www.youtube.com/) have become so popular. Interestingly enough, you can augment the power of multimedia,

which is already substantial, by using AI, and this chapter tells you how.

The final section of this chapter helps you understand how an AI can give you almost

superhuman sensory perception. Perhaps you really want that electronic nose after

all; it does provide significant advantages in detecting scents that are far fainter

than humans can smell. Imagine being able to smell at the same level that a dog does

(a dog uses 100 million aroma receptors, versus the 1 million aroma receptors that

humans possess). It turns out that two approaches let you achieve this goal: using

monitors that a human accesses indirectly, and directly stimulating human sensory

perception.

Developing New Ways to Communicate

Communication involving a developed language initially took place between humans via

the spoken rather than the written word. The only problem with spoken communication is that

the two parties must be near enough to each other to talk. Consequently, written communication

is superior in many respects because it allows time-delayed communications that don’t

require the two parties to ever see each other. The three main methods of human nonverbal

communication rely on:

- Alphabets: The abstraction of components of human words or symbols

- Language: The stringing of words or symbols together to create sentences or convey ideas in written form

- Body language: The augmentation of language with context

The first two methods are direct abstractions of the spoken word. They aren’t always

easy to implement, but people have been doing so for thousands of years. The body-language

component is the hardest to implement because you’re trying to create an abstraction

of a physical process. Writing helps convey body language using specific terminology,

such as that described at https://writerswrite.co.za/cheat-sheets-for-writing-body-language/. However, the written word falls short, so people augment it with symbols, such as

emoticons and emoji (read about their differences at https://www.britannica.com/demystified/whats-the-difference-between-emoji-and-emoticons). The following sections discuss these issues in more detail.

Creating new alphabets

The introduction to this section discusses two new alphabets used in the computer

age: emoticons (http://cool-smileys.com/text-emoticons) and emoji (https://emojipedia.org/). The sites where you find these two graphic alphabets online can list hundreds of

them. For the most part, humans can interpret these iconic alphabets without too much

trouble because they resemble facial expressions, but an application doesn’t have

the human sense of art, so computers often require an AI just to figure out what emotion

a human is trying to convey with the little pictures. Fortunately, you can find standardized

lists, such as the Unicode emoji chart at https://unicode.org/emoji/charts/full-emoji-list.html. Of course, a standardized list doesn’t actually help with translation. The article

at https://www.geek.com/tech/ai-trained-on-emoji-can-detect-social-media-sarcasm-1711313/ provides more details on how someone can train an AI to interpret and react to emoji

(and by extension, emoticons). You can actually see an example of this process at

work at https://deepmoji.mit.edu/.
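
To see why a standardized list alone doesn't interpret emoji, consider this minimal Python sketch. It uses the standard `unicodedata` module to look up the official Unicode name of a character. The `EMOTION_HINTS` table and the `guess_emotion` helper are hypothetical and made up for illustration; an AI of the kind the article describes would learn these associations from data instead of reading them from a hand-written table.

```python
import unicodedata

# Hypothetical keyword-to-emotion table, invented for this illustration only.
EMOTION_HINTS = {
    "GRINNING": "happy",
    "CRYING": "sad",
    "ANGRY": "angry",
}


def emoji_name(char):
    """Return the standardized Unicode name for a single character."""
    return unicodedata.name(char, "UNKNOWN")


def guess_emotion(char):
    """Naively guess an emotion from keywords in the Unicode name."""
    name = emoji_name(char)
    for keyword, emotion in EMOTION_HINTS.items():
        if keyword in name:
            return emotion
    # The name alone says nothing about intent (sarcasm, irony, and so on).
    return "unclear"


if __name__ == "__main__":
    for char in ("\U0001F600", "\U0001F622", "\U0001F643"):
        print(emoji_name(char), "->", guess_emotion(char))
```

The upside-down face (U+1F643) has a perfectly good standardized name, yet the name gives no hint about the sarcasm people often use it for, which is the gap a trained model is meant to fill.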

The emoticon is an older technology, and many people are trying their best to forget

it (but likely won’t succeed). The emoji, however, is new and exciting enough to warrant

a movie (see https://www.amazon.com/exec/obidos/ASIN/B0746ZZR71/datacservip0f-20/). You can also rely on Google’s AI to turn your selfies into emoji (see https://www.fastcodesign.com/90124964/exclusive-new-google-tool-uses-ai-to-create-custom-emoji-of-you-from-a-selfie). Just in case you really don’t want to sift through the 2,666 official emoji that

Unicode supports (or the 564 quadrillion emoji that Google’s Allo, https://allo.google.com/, can generate), you can rely on Dango (https://play.google.com/store/apps/details?id=co.dango.emoji.gif&hl=en) to suggest an appropriate emoji to you (see https://www.technologyreview.com/s/601758/this-app-knows-just-the-right-emoji-for-any-occasion/).

Humans have created new alphabets to meet specific needs since the beginning of the

written word. Emoticons and emoji represent two of many alphabets that you can count

on humans creating as the result of the Internet and the use of AI. In fact, it may

actually require an AI to keep up with them all.

Automating language translation

The world has always had a problem with the lack of a common language. Yes, English

has become widespread, but it’s still not completely

universal. Having someone translate between languages can be expensive, cumbersome,

and error prone, so translators, although necessary in many situations, aren’t necessarily

a great answer either. For those who lack the assistance of a translator, dealing

with other languages can be quite difficult, which is where applications such as Google

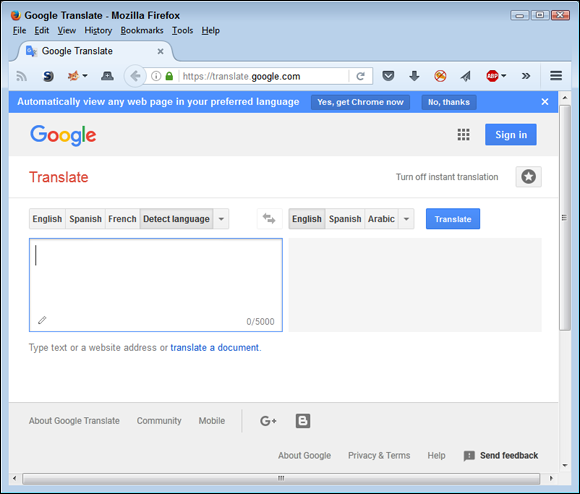

Translate (see Figure 8-1) come into play.

One of the things you should note in Figure 8-1 is that Google Translate offers to automatically detect the language for you. What’s

interesting about this feature is that it works extremely well in most cases. Part

of the responsibility for this feature is the Google Neural Machine Translation (GNMT)

system. It can actually look at entire sentences to make sense of them and provide

better translations than applications that use phrases or words as the basis for creating

a translation (see http://www.wired.co.uk/article/google-ai-language-create for details).

What is even more impressive is that GNMT can translate between languages even when

it doesn’t have a specific translator, using an artificial language, an interlingua (see https://en.oxforddictionaries.com/definition/interlingua). However, it’s important to realize that an interlingua doesn’t function as a universal

translator; it’s more of a universal bridge. Say that the GNMT doesn’t know how to

translate between Chinese and Spanish. However, it can translate between Chinese and

English and between English and Spanish. By building a 3-D network representing these

three languages (the interlingua), GNMT is able to create its own translation between

Chinese and Spanish. Unfortunately, this system won’t work for translating between

Chinese and Martian because no method is available yet to understand and translate

Martian into any human language. Humans still need to create a base translation

for GNMT to do its work.
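
The bridge idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not how GNMT works internally: GNMT learns a shared representation from whole sentences, whereas the word tables and the `bridge_translate` function here are hypothetical and exist only to show translation through a middle language, and what happens when no base translation exists.

```python
# Toy word tables, invented for illustration; a real system learns its
# mappings from data and works on whole sentences, not single words.
CHINESE_TO_ENGLISH = {"你好": "hello", "谢谢": "thank you"}
ENGLISH_TO_SPANISH = {"hello": "hola", "thank you": "gracias"}


def bridge_translate(word, first_leg, second_leg):
    """Translate a word through a shared middle language.

    Returns None when either leg lacks an entry, which mirrors the point
    that some base translation must already exist.
    """
    middle = first_leg.get(word)
    if middle is None:
        return None
    return second_leg.get(middle)


if __name__ == "__main__":
    print(bridge_translate("你好", CHINESE_TO_ENGLISH, ENGLISH_TO_SPANISH))
    # A word with no base translation cannot cross the bridge.
    print(bridge_translate("火星语", CHINESE_TO_ENGLISH, ENGLISH_TO_SPANISH))
```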

Incorporating body language

A significant part of human communication occurs with body language, which is why

the use of emoticons and emoji is important. However, people are becoming more used

to working directly with cameras to create videos and other forms of communication

that involve no writing. In this case, a computer could possibly listen to human input,

parse it into tokens representing the human speech, and then process those tokens

to fulfill a request, similar to the manner in which Alexa or Google Home and their

ilk work.
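
Here is a minimal Python sketch of that listen, tokenize, and fulfill sequence. It assumes the speech has already been transcribed to text, and the `INTENTS` table and function names are hypothetical. Nothing here reflects how Alexa or Google Home is actually built; it only illustrates the idea of turning input into tokens and matching those tokens to a request.

```python
import re

# Hypothetical intents, each keyed to the tokens that must all be present.
INTENTS = {
    "play_music": {"play", "music"},
    "report_weather": {"weather"},
}


def tokenize(utterance):
    """Split transcribed speech into lowercase word tokens."""
    return re.findall(r"[a-z']+", utterance.lower())


def match_intent(utterance):
    """Return the first intent whose trigger tokens all appear."""
    tokens = set(tokenize(utterance))
    for intent, required in INTENTS.items():
        if required <= tokens:  # subset test: every trigger token was spoken
            return intent
    return "unknown"


if __name__ == "__main__":
    print(tokenize("Play some music!"))
    print(match_intent("What's the weather today?"))
```

Notice that the tokens carry only the words. Tone, posture, and expression never make it into the list.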

Unfortunately, merely translating the spoken word into tokens won’t do the job because

the whole issue of nonverbal communication remains. In this case, the AI must be able

to read body language directly. The article at https://www.cmu.edu/news/stories/archives/2017/july/computer-reads-body-language.html discusses some of the issues that developers must solve to make reading body language

possible. The picture at the beginning of that article gives you some idea of how

the computer camera must capture human positions to read the body language, and the

AI often requires input from multiple cameras to make up for such issues as having

part of the human anatomy obscured from the view of a single camera. The reading of

body language involves interpreting these human characteristics:

- Posture

- Head motion

- Facial expression

- Eye contact

- Gestures

Of course, there are other characteristics, but even if an AI can get these five areas

down, it can go a long way to providing a correct body-language interpretation. In

addition to body language, current AI implementations also take characteristics like

tonal quality into consideration, which makes for an extremely complex AI that still

doesn’t come close to doing what the human brain does seemingly without effort.

Once an AI can read body language, it must also provide a means to output it when

interacting with humans. Given that reading body language is in its infancy, robotic or graphic

presentation of body language is even less developed. The article at https://spectrum.ieee.org/video/robotics/robotics-software/robots-learn-to-speak-body-language points out that robots can currently interpret body language and then react appropriately

in a few cases. Robots are currently unable to create good facial expressions,

so, according to the article at http://theconversation.com/realistic-robot-faces-arent-enough-we-need-emotion-to-put-us-at-ease-with-androids-43372, the best-case scenario is to rely on posture, head motion, and gestures to convey body

language. The result isn’t all that impressive yet.

Exchanging Ideas

An AI doesn’t have ideas because it lacks both intrapersonal intelligence and the

ability to understand. However, an AI can enable humans to exchange ideas in a manner

that creates a whole that is greater than the sum of its parts. In many cases, the

AI isn’t performing any sort of exchange. The humans involved in the process perform

the exchange by relying on the AI to augment the communication process. The following

sections provide additional details about how this process occurs.

Creating connections

A human can exchange ideas with another human, but only as long as the two humans

know about each other. The problem is that many experts in a particular field don’t

actually know each other — at least, not well enough to communicate. An AI can perform

research based on the flow of ideas that a human provides and then create connections

with other humans who have that same (or similar) flow of ideas.

One of the ways in which this connection making occurs is on social media sites

such as LinkedIn (https://www.linkedin.com/), where the idea is to create connections between people based on a number of criteria.

A person’s network becomes the means by which the AI deep inside LinkedIn suggests

other potential connections. Ultimately, the purpose of these connections from the

user’s perspective is to gain access to new human resources, make business contacts,

create a sale, or perform other tasks that LinkedIn enables using the various connections.
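
One simple way to picture connection suggestions is to score how much two people's interests overlap. The Python sketch below uses Jaccard similarity (shared interests divided by all interests) as a stand-in. LinkedIn's actual method isn't described here, so treat the technique, the `PROFILES` data, and the `threshold` value as assumptions chosen for illustration.

```python
# Hypothetical profiles: each person maps to a set of interests.
PROFILES = {
    "ana": {"robotics", "vision", "sensors"},
    "ben": {"vision", "sensors", "optics"},
    "cho": {"poetry", "history"},
}


def jaccard(a, b):
    """Share of interests two people have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def suggest_connections(person, profiles, threshold=0.3):
    """List other people whose interests overlap enough with `person`."""
    mine = profiles[person]
    scored = [
        (other, jaccard(mine, interests))
        for other, interests in profiles.items()
        if other != person
    ]
    # Highest overlap first; weak matches are dropped.
    ranked = sorted(scored, key=lambda pair: pair[1], reverse=True)
    return [name for name, score in ranked if score >= threshold]


if __name__ == "__main__":
    print(suggest_connections("ana", PROFILES))
```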

Augmenting communication

To exchange ideas successfully, two humans need to communicate well. The only problem

is that humans sometimes don’t communicate well, and sometimes they don’t communicate

at all. The issue isn’t just one of translating words but also ideas. The societal

and personal biases of individuals can preclude the communication because an idea

for one group may not translate at all for another group. For example, the laws in

one country could make someone think in one way, but the laws in another country could

make the other human think in an entirely different manner.

Theoretically, an AI could help communication between disparate groups in numerous

ways. Of course, language translation (assuming that the translation is accurate)

is one of these methods. However, an AI could provide cues as to what is and isn’t

culturally acceptable by prescreening materials. Using categorization, an AI could

also suggest aids like alternative graphics and so on to help communication take place

in a manner that helps both parties.

Defining trends

Humans often base ideas on trends. However, to visualize how the idea works, other

parties in the exchange of ideas must also see those trends, and communicating using

this sort of information is notoriously difficult. AI can perform various levels of

data analysis and present the output graphically. The AI can analyze the data in more

ways and faster than a human can so that the story the data tells is specifically

the one you need it to tell. The data remains the same; the presentation and interpretation

of the data change.
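
As a small illustration of the same data being presented in different ways, the following Python sketch computes two views of one series: a least-squares slope that summarizes the overall direction, and a moving average that smooths out noise. The function names and the sample numbers are hypothetical; an AI-driven tool would try many more analyses than these two.

```python
def trend_slope(values):
    """Least-squares slope of the values against their index positions."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def moving_average(values, window=3):
    """Smooth a series so the underlying trend is easier to see."""
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


if __name__ == "__main__":
    sales = [10, 12, 9, 14, 13, 17]  # made-up sample data
    print("slope per period:", trend_slope(sales))
    print("smoothed:", moving_average(sales))
```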

Studies show that humans relate better to graphical output than tabular output, and

graphical output makes trends easier to see. As described at http://sphweb.bumc.bu.edu/otlt/mph-modules/bs/datapresentation/DataPresentation2.html, you generally use tabular data to present only specific information; graphics usually

work best for showing trends. Using AI-driven applications can also make creating

the right sort of graphic output for a particular requirement easier. Not all humans see graphics in precisely the same way,

so matching a graphic type to your audience is essential.

Using Multimedia

Most people learn by using multiple senses and multiple approaches. A doorway to learning

that works for one person may leave another completely mystified. Consequently, the

more ways in which a person can communicate concepts and ideas, the more likely it

is that other people will understand what the person is trying to communicate. Multimedia

normally consists of sound, graphics, text, and animation, but some multimedia does

more.

AI can help with multimedia in numerous ways. One of the most important is in the

creation, or authoring, of the multimedia. You find AI in applications that help with

everything from media development to media presentation. For example, when translating

the colors in an image, an AI may provide the benefit of helping you visualize the

effects of those changes faster than trying one color combination at a time (the brute-force

approach).

After using multimedia to present ideas in more than one form, those receiving the

ideas must process the information. A secondary use of AI relies on the use of neural

networks to process the information in various ways. Categorizing the multimedia is

an essential use of the technology today. However, in the future you can look forward

to using AI to help in 3-D reconstruction of scenes based on 2-D pictures. Imagine

police being able to walk through a virtual crime scene with every detail faithfully

captured.

People used to speculate that various kinds of multimedia would appear in new forms.

For example, imagine a newspaper that provides Harry Potter-like dynamic displays.

Most of the technology pieces are actually available today, but the issue is one of

market. For a technology to become successful, it must have a market — that is, a

means for paying for itself.

Embellishing Human Sensory Perception

One way that AI truly excels at improving human interaction is by augmenting humans

in one of two ways: allowing them to use their native senses to work with augmented

data or augmenting the native senses to do more. The following sections discuss

both approaches to enhancing human sensing and thereby improving communication.

Shifting data spectrum

When performing various kinds of information gathering, humans often employ technologies

that filter or shift the data spectrum with regard to color, sound, or smell. The

human still uses native capabilities, but some technology changes the input such that

it works with that native capability. One of the most common examples of spectrum

shifting is astronomy, in which shifting and filtering light enables people to see

astronomical elements, such as nebulae, in ways that the naked eye can’t, thereby

improving our understanding of the universe.

However, shifting and filtering colors, sounds, and smells manually can require a

great deal of time, and the results can disappoint even when performed expertly, which

is where AI comes into play. An AI can try various combinations far faster than a

human and locate the potentially useful combinations with greater ease because an

AI performs the task in a consistent manner.
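
To make spectrum shifting concrete, here is a minimal Python sketch that linearly remaps a wavelength from a band humans can't see into the visible range. The visible limits of 380 to 750 nanometers are approximate, and the linear mapping and the `shift_to_visible` name are assumptions for illustration. Real imaging pipelines typically assign filtered bands to color channels and involve many more choices, which is where trying combinations quickly pays off.

```python
VISIBLE_NM = (380.0, 750.0)  # approximate visible range, in nanometers


def shift_to_visible(wavelength_nm, source_band):
    """Linearly map a wavelength from `source_band` into the visible range."""
    low, high = source_band
    if not low <= wavelength_nm <= high:
        raise ValueError("wavelength outside the source band")
    fraction = (wavelength_nm - low) / (high - low)
    return VISIBLE_NM[0] + fraction * (VISIBLE_NM[1] - VISIBLE_NM[0])


if __name__ == "__main__":
    near_infrared = (750.0, 2500.0)  # illustrative band limits
    for wavelength in (750.0, 1625.0, 2500.0):
        print(wavelength, "->", shift_to_visible(wavelength, near_infrared))
```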

The most intriguing technique for exploring our world, however, is completely different

from what most people expect. What if you could smell a color or see a sound? The

occurrence of synesthesia (http://www.science20.com/news_releases/synaesthesia_smelling_a_sound_or_hearing_a_color), which is the use of one sense to interpret input from a different sense, is well

documented in humans. People use AI to help study the effect as described at http://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1004959. The interesting use of this technology, though, is to create a condition in which

other people can actually use synesthesia as another means to see the world (see https://www.fastcompany.com/3024927/this-app-aids-your-decision-making-by-mimicking-its-creators-synesthesia for details). Just in case you want to see how the use of synesthesia works for yourself,

check out the ChoiceMap app at https://choicemap.co/.

Augmenting human senses

As an alternative to using an external application to shift data spectrum and somehow

make that shifted data available for use by humans, you can augment human senses.

In augmentation, a device, either external or implanted, enables a human to directly

process sensory input in a new way. Many people view these new capabilities as the

creation of cyborgs, as described at https://www.theatlantic.com/technology/archive/2017/10/cyborg-future-artificial-intelligence/543882/. The idea is an old one: use tools to make humans ever more effective at performing

an array of tasks. In this scenario, humans receive two forms of augmentation: physical

and intellectual.

Physical augmentation of human senses already takes place in many ways and is guaranteed

to increase as humans become more receptive to various kinds of implants. For example,

night vision glasses currently allow humans to see at night, with high-end models

providing color vision controlled by a specially designed processor. In the future,

eye augmentation/replacement could allow people to see any part of the spectrum as

controlled by thought, so that people would see only that part of the spectrum needed

to perform a specific task.

Intelligence Augmentation (IA) requires more intrusive measures but also promises to allow humans to exercise far

greater capabilities. Unlike AI, IA has a human actor

at the center of the processing. The human provides the creativity and intent that

AI currently lacks. You can read a discussion of the differences between AI and IA

at https://www.financialsense.com/contributors/guild/artificial-intelligence-vs-intelligence-augmentation-debate.
