Chapter 12

Developing Robots

IN THIS CHAPTER

Distinguishing between robots in sci-fi and in reality

Reasoning on robot ethics

Finding more applications to robots

Looking inside how a robot is made

People often mistake robotics for AI, but robotics is different from AI. Artificial

intelligence aims to find solutions to some difficult problems related to human abilities

(such as recognizing objects, or understanding speech or text); robotics aims to use

machines to perform tasks in the physical world in a partially or completely automated

way. It helps to think of AI as the software used to solve problems and of robotics

as the hardware for making these solutions a reality.

Robotic hardware may or may not run using AI software. Humans remotely control some

robots, as with the da Vinci robot discussed in the “Assisting a surgeon” section of Chapter 7. In many cases, AI does provide augmentation, but the human is still in control.

Between these extremes are robots that take abstract orders from humans (such as going

from point A to point B on a map or picking up an object) and rely on AI to execute

the orders. Other robots autonomously perform assigned tasks without any human intervention.

Integrating AI into a robot makes the robot smarter and more useful in performing

tasks, but robots don’t always need AI to function properly. Human imagination has

made the two overlap as a result of sci-fi films and novels.

This chapter explores how this overlap happened and distinguishes between the current

realities of robots and how the extensive use of AI solutions could transform them.

Robots have existed in production since the 1960s. This chapter also explores how people

are employing robots more and more in industrial work, scientific discovery, medical

care, and war. Recent AI discoveries are accelerating this process because they solve

difficult problems in robots, such as recognizing objects in the world, predicting human behavior, understanding voice commands, speaking

correctly, learning to walk upright and, yes, back-flipping, as you can read in

this article on recent robotic milestones: https://www.theverge.com/circuitbreaker/2017/11/17/16671328/boston-dynamics-backflip-robot-atlas.

Defining Robot Roles

Robots are a relatively recent idea. The word comes from the Czech word robota, which means forced labor. The term first appeared in the 1920 play Rossum’s Universal Robots, written by Czech author Karel Čapek. However, humanity has long dreamed of mechanical

beings. Ancient Greeks developed a myth of a bronze mechanical man, Talus, built by

the god of metallurgy, Hephaestus, at the request of Zeus, the father of the gods.

The Greek myths also contain references to Hephaestus building other automata, apart

from Talus. Automata are self-operated machines that execute specific and predetermined sequences of

tasks (as contrasted to robots, which have the flexibility to perform a wide range

of tasks). The Greeks actually built water-hydraulic automata that worked the same

as an algorithm executed in the physical world. As algorithms, automata incorporate

the intelligence of their creator, thus providing the illusion of being self-aware,

reasoning machines.

You find examples of automata in Europe throughout the Greek civilization, the Middle

Ages, the Renaissance, and modern times. Many designs by mathematician and inventor

Al-Jazari appear in the Middle East (see http://www.muslimheritage.com/article/al-jazari-mechanical-genius for details). China and Japan have their own versions of automata. Some automata

are complex mechanical designs, but others are complete hoaxes, such as the Mechanical

Turk, an eighteenth-century machine that was said to be able to play chess but hid

a man inside.

Differentiating automata from other human-like animations is important. For example,

the Golem (https://www.myjewishlearning.com/article/golem/) is a mix of clay and magic. No machinery is involved, so it doesn’t qualify as the

type of device discussed in this chapter.

The robots described by Čapek were not exactly mechanical automata, but rather living

beings engineered and assembled as if they were automata. His robots possessed a human-like

shape and performed specific roles in society meant to replace human workers. Reminiscent

of Mary Shelley’s Frankenstein, Čapek’s robots were something that people view as

androids today: bioengineered artificial beings, as described in Philip K. Dick’s novel Do Androids Dream of Electric Sheep? (the inspiration for the film Blade Runner). Yet, the name robot also describes autonomous mechanical devices not made to amaze and delight, but rather

to produce goods and services. In addition, robots became a central idea in sci-fi,

both in books and movies, further contributing to a collective imagination of

the robot as a human-shaped AI, designed to serve humans — not too dissimilar from

Čapek’s original idea of a servant. Slowly, the idea transitioned from art to science

and technology and became an inspiration for scientists and engineers.

Čapek created both the idea of robots and that of a robot apocalypse, like the AI

takeover you see in sci-fi movies and that, given AI’s recent progress, is feared

by notable figures such as the founder of Microsoft, Bill Gates, physicist Stephen

Hawking, and the inventor and business entrepreneur Elon Musk. Čapek’s robotic slaves

rebel against the humans who created them at the end of the play by eliminating almost

all of humanity.

Overcoming the sci-fi view of robots

The first commercialized robot, the Unimate (https://www.robotics.org/joseph-engelberger/unimate.cfm), appeared in 1961. It was simply a robotic arm — a programmable mechanical arm made

of metal links and joints — with an end that could grip, spin, or weld manipulated

objects according to instructions set by human operators. It was sold to General Motors

to use in the production of automobiles. The Unimate had to pick up die-castings from

the assembly line and weld them together, a physically dangerous task for human workers.

To get an idea of the capabilities of such a machine, check out this video: https://www.youtube.com/watch?v=hxsWeVtb-JQ. The following sections describe the realities of robots today.

Considering robotic laws

Before the appearance of Unimate, and long before the introduction of many other robot

arms employed in industry that started working with human workers on assembly lines,

people already knew how robots should look, act, and even think. Isaac Asimov, an

American writer renowned for his works in science fiction and popular science, produced

a series of novels in the 1950s that suggested a completely different concept of robots

from those used in industrial settings.

Asimov coined the term robotics and used it in the same sense as people use the term mechanics. His powerful imagination still sets the standard today for people’s expectations

of robots. Asimov set robots in an age of space exploration, having them use their

positronic brains to help humans daily to perform both ordinary and extraordinary

tasks. A positronic brain is a fictional device that makes robots in Asimov’s novels act autonomously and be

capable of assisting or replacing humans in many tasks. Apart from providing human-like

capabilities in understanding and acting (strong-AI), the positronic brain works under the three laws of robotics

as part of the hardware, controlling the behavior of robots in a moral way:

- A robot may not injure a human being or, through inaction, allow a human being to

come to harm.

- A robot must obey the orders given it by human beings except where such orders would

conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict

with the First or Second Laws.

Later, the author added a zeroth law, with higher priority than the others, in order

to ensure that a robot acted to favor the safety of the many:

- A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Central to all Asimov’s stories on robots, the three laws allow robots to work with

humans without any risk of rebellion or AI apocalypse. Impossible to bypass or modify,

the three laws execute in priority order and appear as mathematical formulations in

the positronic brain functions. Unfortunately, the laws have loophole and ambiguity

problems, from which arise the plots of most of his novels. The three laws come from

a fictional Handbook of Robotics, 56th Edition, 2058 A.D., and rely on the principles of harmlessness, obedience, and self-survival.

Asimov imagined a universe in which you can reduce the moral world to a few simple

principles, with some risks that drive many of his story plots. In reality, Asimov

believed that robots are tools and that the three laws could work even in the real

world to control their use (read this 1981 interview in Compute! magazine for details: https://archive.org/stream/1981-11-compute-magazine/Compute_Issue_018_1981_Nov#page/n19/mode/2up). Defying Asimov’s optimistic view, however, current robots don’t have the capability

to:

- Understand the three laws of robotics

- Select actions according to the three laws

- Sense and acknowledge a possible violation of the three laws

Some may think that today’s robots really aren’t very smart because they lack these

capabilities, and they’d be right. However, the Engineering and Physical Sciences Research

Council (EPSRC), which is the UK’s main agency for funding research in engineering

and the physical sciences, promoted revisiting Asimov’s laws of robotics in 2010 for

use with real robots, given current technology. The result is much different from

the original Asimov statements (see: https://www.epsrc.ac.uk/research/ourportfolio/themes/engineering/activities/principlesofrobotics/). These revised principles admit that robots may even kill (for national security

reasons) because they are a tool. As with all the other tools, complying with the

law and existing morals is up to the human user, not the machine, with the robot perceived

as an executor. In addition, someone (a human being) should always be accountable

for the results of a robot’s actions.

The EPSRC’s principles offer a more realistic point of view on robots and morality,

considering the weak-AI technology in use now, but they could also provide a partial

solution in advanced technology scenarios. Chapter 14 discusses problems related to using self-driving cars, a kind of mobile robot that

drives for you. For example, in the exploration of the trolley problem in that chapter, you face possible but unlikely moral problems that challenge the reliance on automated

machines when it’s time to make certain choices.

Defining actual robot capabilities

Not only are existing robot capabilities still far from the human-like robots found

in Asimov’s works, they’re also of different categories. The kind of biped robot imagined

by Asimov is currently the rarest and least advanced.

The most frequent category of robots is the robot arm, such as the previously described

Unimate. Robots in this category are also called manipulators. You can find them in factories, working as industrial robots, where they assemble

and weld at a speed and precision unmatched by human workers. Some manipulators also

appear in hospitals to assist in surgical operations. Manipulators have a limited

range of motion because they integrate into their location (they might be able to

move a little, but not a lot because they lack powerful motors or require an electrical

hookup), so they require help from specialized technicians to move to a new location.

In addition, manipulators used for production tend to be completely automated (in

contrast to surgical devices, which are remote controlled, relying on the surgeon

to make medical operation decisions). More than one million manipulators appear throughout

the world, half of them located in Japan.

The second largest, and growing, category of robots is that of mobile robots. Their specialty, contrary to manipulators, is to move around by using wheels, rotors,

wings, or even legs. In this large category, you can find robots delivering food (https://nypost.com/2017/03/29/dominos-delivery-robots-bring-pizza-to-the-final-frontier/) or books (https://www.digitaltrends.com/cool-tech/amazon-prime-air-delivery-drones-history-progress/) to commercial enterprises, and even exploring Mars (https://mars.nasa.gov/mer/overview/). Mobile robots are mostly unmanned (no one travels with them) and remotely controlled,

but autonomy is increasing, and you can expect to see more independent robots in this

category. Two special kinds of mobile robots are flying robots, drones (Chapter 13), and self-driving cars (Chapter 14).

The last kind of robot is the mobile manipulator, which can move (as do mobile robots) and manipulate (as do robot arms). The pinnacle

of this category doesn’t simply consist of a robot that moves and has a mechanical

arm but also imitates human shape and behavior. The humanoid robot is a biped (has two legs) that has a human torso and communicates with humans through

voice and expressions. This kind of robot is what sci-fi dreamed of, but it’s not

easy to obtain.

Knowing why it’s hard to be a humanoid

Human-like robots are hard to develop, and scientists are still at work on them. Not

only does a humanoid robot require enhanced AI capabilities to make it autonomous,

it also needs to move as we humans do. The biggest hurdle, though, is getting humans

to accept a machine that looks like humans. The following sections look at various

aspects of creating a humanoid robot.

Creating a robot that walks

Consider the problem of having a robot walking on two legs (a bipedal robot). This is something that humans learn to do adeptly and without conscious thought,

but it’s very problematic for a robot. Four-legged robots balance easily and they

don’t consume much energy doing so. Humans, however, do consume energy simply by standing

up, as well as by balancing and walking. Humanoid robots, like humans, have to continuously

balance themselves, and do it in an effective and economic way. Otherwise, the robot

needs a large battery pack, which is heavy and cumbersome, making the problem of balance

even more difficult.

A video provided by IEEE Spectrum gives you a better idea of just how challenging

the simple act of walking can be. The video shows robots involved in the DARPA Robotics

Challenge (DRC), a challenge held by the U.S. Defense Advanced Research Projects Agency

from 2012 to 2015: https://www.youtube.com/watch?v=g0TaYhjpOfo. The purpose of the DRC is to explore robotic advances that could improve disaster

and humanitarian operations in environments that are dangerous to humans (https://www.darpa.mil/program/darpa-robotics-challenge). For this reason, you see robots walking in different terrains, opening doors, grasping

tools such as an electric drill, or trying to operate a valve wheel. A recently developed

robot called Atlas, from Boston Dynamics, shows promise, as described in this article:

https://www.theverge.com/circuitbreaker/2017/11/17/16671328/boston-dynamics-backflip-robot-atlas. The Atlas robot truly is exceptional but still has a long way to go.

A robot with wheels can move easily on roads, but in certain situations, you need

a human-shaped robot to meet specific needs. Most of the world’s infrastructures are

made for a man or woman to navigate. The presence of obstacles, such as the passage size,

or the presence of doors or stairs, makes using differently shaped robots difficult.

For instance, during an emergency, a robot may need to enter a nuclear power station and close a valve. The human shape enables the robot to walk

around, descend stairs, and turn the valve wheel.

Overcoming human reluctance: The uncanny valley

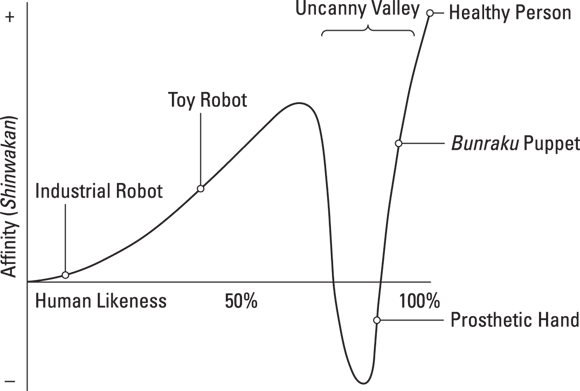

Humans have a problem with humanoid robots that look a little too human. In 1970,

a professor at the Tokyo Institute of Technology, Masahiro Mori, studied the impact

of robots on Japanese society. He coined the term Bukimi no Tani Genshō, which translates to uncanny valley. Mori realized that the more realistic robots look, the greater affinity humans feel

toward them. This increase in affinity remains true until the robot reaches a certain

degree of realism, at which point we start disliking it strongly (even feeling

revulsion). The revulsion persists until the robot becomes so realistic that it is

practically indistinguishable from a human being, at which point affinity recovers. You can find this progression depicted in Figure 12-1 and described in Mori’s original paper at: https://spectrum.ieee.org/automaton/robotics/humanoids/the-uncanny-valley.

Various hypotheses have been formulated about the reasons for the revulsion that humans

experience when dealing with a robot that is almost, but not completely, human. Cues

that humans use to detect robots are the tone of the robotic voice, the rigidity of

movement, and the artificial texture of the robot’s skin. Some scientists attribute

the uncanny valley to cultural reasons, others to psychological or biological ones.

One recent experiment on monkeys found that primates might undergo a similar experience

when exposed to more or less realistically processed photos of monkeys rendered by

3-D technology (see the story here: https://www.wired.com/2009/10/uncanny-monkey/). Monkeys participating in the experiment displayed a slight aversion to realistic photos, hinting at a common biological reason for the

uncanny valley. An explanation could therefore relate to a self-protective reaction

against beings negatively perceived as unnatural looking because they’re ill or even

possibly dead.

The interesting point in the uncanny valley is that if we need humanoid robots because

we want them to assist humans, we must also consider their level of realism and key

aesthetic details to achieve a positive emotional response that will allow users to

accept robot help. Recent observations show that even robots with little human resemblance

generate attachment and create bonds with their users. For instance, many U.S. soldiers

report feeling a loss when their small tactical robots for explosive detection and

handling are destroyed in action. (You can read an article about this in MIT Technology

Review: https://www.technologyreview.com/s/609074/how-we-feel-about-robots-that-feel/.)

Working with robots

Different types of robots have different applications. As humans developed and improved

the three classes of robots (manipulator, mobile, and humanoid), new fields of application

opened to robotics. It’s now impossible to enumerate exhaustively all the existing

uses for robots, but the following sections touch on some of the most promising and

revolutionary uses.

Enhancing economic output

Manipulators, or industrial robots, still account for the largest percentage of operating

robots in the world. According to World Robotics 2017, a study compiled by the International Federation of Robotics, by the end of 2016

more than 1,800,000 robots were operating in industry. (Read a summary of the study

here: https://ifr.org/downloads/press/Executive_Summary_WR_2017_Industrial_Robots.pdf.) Industrial robots will likely grow to 3,000,000 by 2020 as a result of booming

automation in manufacturing. In fact, factories (as an entity) will use robots to

become smarter, a concept dubbed Industry 4.0. Thanks to widespread use of the Internet, sensors, data, and robots, Industry 4.0

solutions allow easier customization and higher quality of products in less time than

they can achieve without robots. No matter what, robots already operate in dangerous

environments, and for tasks such as welding, assembling, painting, and packaging,

they operate faster, with higher accuracy, and at lower costs than human workers can.

Taking care of you

Since 1983, robots have assisted surgeons in difficult operations by providing precise

and accurate cuts that only robotic arms can provide. Apart from offering remote control

of operations (keeping the surgeon out of the operating room to create a more sterile environment), an increase in automated operation is steadily opening

the possibility of completely automated surgical operations in the near future, as

speculated in this article: https://www.huffingtonpost.com/entry/is-the-future-of-robotic-surgery-already-here_us_58e8d00fe4b0acd784ca589a.

Providing services

Robots provide other care services, both in private and public spaces. The most famous

indoor robot is the Roomba vacuum cleaner, a robot that will vacuum the floor of your

house by itself (it’s a robotic bestseller, having exceeded 3 million units sold),

but there are other service robots to consider as well:

Assistive robots for elderly people are far from offering general assistance the way

a real nurse does. Robots focus on critical tasks such as remembering medications,

helping patients move from a bed to a wheelchair, checking patient physical conditions,

raising an alarm when something is wrong, or simply acting as a companion. For instance,

the therapeutic robot Paro provides animal therapy to impaired elders, as you can

read in this article at https://www.huffingtonpost.com/the-conversation-global/robot-revolution-why-tech_b_14559396.html.

Venturing into dangerous environments

Robots go where people can’t, or would be at great risk if they did. Some robots have

been sent into space (with the NASA Mars rovers Opportunity and Curiosity being the

most notable attempts), and more will support future space exploration. (Chapter 16 discusses robots in space.) Many other robots stay on earth and are employed in underground

tasks, such as transporting ore in mines or generating maps of tunnels in caves. Underground

robots are even exploring sewer systems, as Luigi (a name inspired from the brother

of a famous plumber in videogames) does. Luigi is a sewer-trawling robot developed

by MIT’s Senseable City Lab to investigate public health in a place where humans can’t go unharmed because of high

concentrations of chemicals, bacteria, and viruses (see http://money.cnn.com/2016/09/30/technology/mit-robots-sewers/index.html).

Robots are even employed where humans will definitely die, such as in nuclear disasters

like Three Mile Island, Chernobyl, and Fukushima. These robots remove radioactive

materials and make the area safer. High-dose radiation even affects robots because

radiation causes electronic noise and signal spikes that damage circuits over time.

Only radiation-hardened electronic components allow robots to resist the effects of radiation enough to carry out their job, such

as the Little Sunfish, an underwater robot that operates in one of Fukushima’s flooded

reactors where the meltdown happened (as described in this article at http://www.bbc.com/news/in-pictures-40298569).

In addition, warfare or criminal scenes represent life-threatening situations in which

robots see frequent use for transporting weapons or defusing bombs. These robots can

also investigate packages that could include a lot of harmful things other than bombs.

Robot models such as iRobot’s PackBot (from the same company that manufactures Roomba,

the house cleaner) or QinetiQ North America’s Talon handle dangerous explosives by

remote control, meaning that an expert in explosives controls their actions at a distance.

Some robots can even act in place of soldiers or police in reconnaissance tasks or

direct interventions (for instance, police in Dallas used a robot to take out a shooter:

http://edition.cnn.com/2016/07/09/opinions/dallas-robot-questions-singer/index.html).

People expect the military to increasingly use robots in the future. Beyond the ethical

considerations of these new weapons, it’s a matter of the old guns-versus-butter

model (https://www.huffingtonpost.com/jonathan-tasini/guns-versus-butter-our-re_b_60150.html), meaning that a nation can exchange economic power for military power. Robots seem

a perfect fit for that model, more so than traditional weaponry that needs trained

personnel to operate. Using robots means that a country can translate its productive

output into an immediately effective army of robots at any time, something that the

Star Wars prequels demonstrate all too well.

Understanding the role of specialty robots

Specialty robots include drones and self-driving cars. Drones are controversial because

of their usage in warfare, but unmanned aerial vehicles (UAVs) are also used for monitoring,

agriculture, and many less menacing activities as discussed in Chapter 13.

People have long fantasized about cars that can drive by themselves. These cars are

quickly turning into a reality after the achievements in the DARPA Grand Challenge.

Most car producers have realized that being able to produce and commercialize self-driving cars could change the actual economic balance in the world

(hence the rush to achieve a working vehicle as soon as possible: https://www.washingtonpost.com/news/innovations/wp/2017/11/20/robot-driven-ubers-without-a-human-driver-could-appear-as-early-as-2019/). Chapter 14 discusses self-driving cars, their technology, and their implications in more detail.

Assembling a Basic Robot

An overview of robots isn’t complete without discussing how to build one, given the

state of the art, and considering how AI can improve its functioning. The following

sections discuss robot basics.

Considering the components

A robot’s purpose is to act in the world, so it needs effectors, which are moving legs or wheels that provide the locomotion capability. It also needs arms and pincers to grip, rotate, translate (move an object from one position

to another without rotating it), and thus provide manipulating capabilities. When talking about the capability of the robot to do something, you may also hear

the term actuator used interchangeably with effectors. An actuator is one of the mechanisms that compose

the effectors, allowing a single movement. Thus, a robot leg has different actuators,

such as electric motors or hydraulic cylinders that perform movements like orienting

the feet or bending the knee.
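The relationship between effectors and actuators can be sketched as a small data model. This is only an illustration: the class names `Actuator` and `Effector`, the joint limits, and the `command` method are assumptions made for the example, not part of any real robotics library.

```python
from dataclasses import dataclass, field


@dataclass
class Actuator:
    """One mechanism that produces a single movement (hypothetical model)."""
    name: str
    kind: str               # for example "electric motor" or "hydraulic cylinder"
    position: float = 0.0   # current joint angle, in degrees
    min_pos: float = -90.0  # assumed mechanical limits
    max_pos: float = 90.0

    def move_to(self, target: float) -> float:
        # Clamp the request to the actuator's mechanical limits.
        self.position = max(self.min_pos, min(self.max_pos, target))
        return self.position


@dataclass
class Effector:
    """A leg, wheel, or arm, modeled as a named group of actuators."""
    name: str
    actuators: dict[str, Actuator] = field(default_factory=dict)

    def add(self, actuator: Actuator) -> None:
        self.actuators[actuator.name] = actuator

    def command(self, targets: dict[str, float]) -> dict[str, float]:
        # Send each target to the matching actuator and report where it ended up.
        return {name: self.actuators[name].move_to(t) for name, t in targets.items()}


leg = Effector("left_leg")
leg.add(Actuator("knee", "electric motor", min_pos=0.0, max_pos=120.0))
leg.add(Actuator("ankle", "hydraulic cylinder", min_pos=-30.0, max_pos=30.0))

# The knee request exceeds its limit, so it stops at 120 degrees.
result = leg.command({"knee": 150.0, "ankle": -10.0})
print(result)
```

The point of the split is that the leg (the effector) exposes one capability, while each actuator inside it is responsible for exactly one movement.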

Acting in the world requires determining the composition of the world and understanding

where the robot resides in the world. Sensors provide input that reports what’s happening outside the robot. Devices like cameras,

lasers, sonars, and pressure sensors measure the environment and report to the robot

what’s going on as well as hint at the robot’s location. The robot therefore consists

mainly of an organized bundle of sensors and effectors. Everything is designed to

work together using an architecture, which is exactly what makes up a robot. (Sensors

and effectors are actually mechanical and electronic parts that you can use as stand-alone

components in different applications.)

The common internal architecture is made of parallel processes gathered into layers

that specialize in solving one kind of problem. Parallelism is important. As human

beings, we perceive a single flow of consciousness and attention; we don’t need to

think about basic functions such as breathing, heartbeat, and food digestion because

these processes go on by themselves in parallel to conscious thought. Often we can

even perform one action, such as walking or driving, while talking or doing something else (although it may prove dangerous in some situations). The

same goes for robots. For instance, in the three-layer architecture, a robot has many

processes gathered into three layers, each one characterized by a different response

time and complexity of answer:

- Reactive: Takes immediate data from the sensors, the channels for the robot’s perception of

the world, and reacts immediately to sudden problems (for instance, turning immediately

after a corner because the robot is going to crash into an unknown wall).

- Executive: Processes sensor input data, determines where the robot is in the world (an important

function called localization), and decides what action to execute given the requirements

of the previous layer, the reactive one, and the following one, the deliberative.

- Deliberative: Makes plans on how to perform tasks, such as planning how to go from one point to

another and deciding what sequence of actions to perform to pick up an object. This

layer translates into a series of requirements for the robot that the executive layer

carries out.
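A toy version of the three layers might look like the following sketch. It assumes a robot moving along a one-dimensional corridor of numbered cells, and the function names, the `SensorReading` fields, and the 0.5-meter safety threshold are all invented for illustration. Real robots run these layers as parallel processes at different rates; here they are called in sequence to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    position: int             # corridor cell the robot believes it occupies
    obstacle_distance: float  # meters to the nearest obstacle ahead


def deliberative_layer(start: int, goal: int) -> list[int]:
    """Slow layer: plan the full sequence of cells to visit."""
    step = 1 if goal >= start else -1
    return list(range(start + step, goal + step, step))


def executive_layer(reading: SensorReading, plan: list[int]) -> str:
    """Middle layer: localize against the plan and pick the next action."""
    # Drop waypoints that the robot has already reached.
    while plan and plan[0] == reading.position:
        plan.pop(0)
    if not plan:
        return "stop"
    return "forward" if plan[0] > reading.position else "backward"


def reactive_layer(reading: SensorReading, proposed: str, safe_distance: float = 0.5) -> str:
    """Fast layer: override the proposed action when a crash is imminent."""
    if proposed == "forward" and reading.obstacle_distance < safe_distance:
        return "turn"
    return proposed


def control_step(reading: SensorReading, plan: list[int]) -> str:
    # The reactive layer always gets the last word.
    return reactive_layer(reading, executive_layer(reading, plan))


plan = deliberative_layer(start=0, goal=3)
action_clear = control_step(SensorReading(position=0, obstacle_distance=2.0), plan)
action_blocked = control_step(SensorReading(position=1, obstacle_distance=0.2), plan)
print(action_clear, action_blocked)
```

In this sketch the deliberative layer runs once, the executive layer runs on every reading, and the reactive layer can veto either of them, which mirrors the different response times described above.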

Another popular architecture is the pipeline architecture, commonly found in self-driving

cars, which simply divides the robot’s parallel processes into separate phases such

as sensing, perception (which implies understanding what you sense), planning, and

control.
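The pipeline idea can be sketched as a chain of functions in which each phase consumes the output of the previous one. Everything here is a simplifying assumption: the hard-coded readings stand in for real sensors, and the 2-meter safety gap and the throttle and brake values are arbitrary numbers chosen for the example.

```python
from functools import reduce
from typing import Any, Callable


def sense() -> dict[str, Any]:
    """Sensing: return raw readings (hard-coded in place of real hardware)."""
    return {"lidar_m": [4.0, 1.2, 3.5], "speed_mps": 8.0}


def perceive(raw: dict[str, Any]) -> dict[str, float]:
    """Perception: turn raw readings into facts about the world."""
    return {"nearest_obstacle_m": min(raw["lidar_m"]), "speed_mps": raw["speed_mps"]}


def plan(world: dict[str, float], safe_gap_m: float = 2.0) -> str:
    """Planning: choose a high-level decision from the perceived facts."""
    return "brake" if world["nearest_obstacle_m"] < safe_gap_m else "cruise"


def control(decision: str) -> dict[str, float]:
    """Control: translate the decision into actuator commands."""
    commands = {
        "brake": {"throttle": 0.0, "brake": 1.0},
        "cruise": {"throttle": 0.3, "brake": 0.0},
    }
    return commands[decision]


def run_pipeline(raw: Any, stages: list[Callable[[Any], Any]]) -> Any:
    # Feed the output of each phase into the next one.
    return reduce(lambda data, stage: stage(data), stages, raw)


commands = run_pipeline(sense(), [perceive, plan, control])
print(commands)
```

Because each phase only depends on the data handed to it, a phase can be replaced (for example, swapping the rule in `plan` for a learned model) without touching the others.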

Sensing the world

Chapter 14 discusses sensors in detail and presents practical applications to help explain self-driving

cars. Many kinds of sensors exist, with some focusing on the external world and others

on the robot itself. For example, a robotic arm needs to know how far it is extended

or whether it reached its extension limit. Furthermore, some sensors are active (they

actively look for information based on a decision of the robot), while others are

passive (they receive the information constantly). Each sensor provides an electronic

input that the robot can immediately use or process in order to gain a perception.

Perception involves building a local map of real-world objects and determining the location

of the robot in a more general map of the known world. Combining data from all sensors,

a process called sensor fusion, creates a list of basic facts for the robot to use. Machine learning helps in this case by providing vision algorithms using deep learning

to recognize objects and segment images (as discussed in Chapter 11). It also puts all the data together into a meaningful representation using unsupervised

machine learning algorithms. This is a task called low-dimensional embedding, which means translating complex data from all sensors into a simple flat map or other

representation. Determining the robot’s location while building that map is called simultaneous localization and mapping (SLAM); the localization part is much like looking at a map to work out where you are in a city.
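To make sensor fusion a little more concrete, here is a minimal sketch of one simple and widely used approach, inverse-variance weighting, in which more reliable sensors count for more. The chapter doesn't say which fusion method a given robot uses, so treat the function name and the sample readings as assumptions.

```python
def fuse(estimates):
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. A smaller variance
    means a more trusted sensor, so it receives a larger weight.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical distance readings in meters: a noisy sonar and a
# more precise lidar looking at the same wall.
sonar = (2.0, 0.04)
lidar = (2.2, 0.01)
distance, variance = fuse([sonar, lidar])
```

The fused distance lands closer to the lidar reading because the lidar has the smaller variance, and the fused variance is smaller than that of either sensor alone.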

Controlling a robot

After sensing provides all the needed information, planning provides the robot with

the list of the right actions to take to achieve its objectives. Planning is done

programmatically (by using an expert system, for example, as described in Chapter 3) or by using a machine learning algorithm, such as Bayesian networks, as described

in Chapter 10. Developers are experimenting with using reinforcement learning (machine learning

based on trial and error), but a robot is not a toddler (who also relies on trial

and error to learn to walk); experimentation may prove time inefficient, frustrating,

and costly in the automatic creation of a plan because the robot can be damaged in

the process.
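As a small illustration of programmatic planning, the sketch below finds a route from point A to point B on a grid map. It uses plain breadth-first search rather than the expert systems or Bayesian networks mentioned above, simply because that is the shortest planner to show; the grid format and the function name are assumptions made for the example.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid is a list of equal-length strings; '#' marks a blocked cell.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None                      # no route exists
```

The returned list of cells is the kind of action sequence that a planner hands over for execution. A real robot would also have to cope with the map being incomplete or wrong.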

Finally, planning is not simply a matter of smart algorithms, because when it comes

to execution, things aren’t likely to go as planned. Think about this issue from a human

perspective. When you’re blindfolded, even if you want to go straight in front of

you, you won’t unless you have a constant source of corrections. The result is that

you start going in loops. Your legs, which are the actuators, don’t always perfectly

execute instructions. Robots face the same problem. In addition, robots face issues

such as delays in the system (technically called latency) or instructions that aren’t executed exactly on time, which throws off everything that follows.
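The blindfold example can be simulated in a few lines. This sketch assumes a constant drift added at every step and a simple proportional correction; the function name, the gain parameter, and the numbers are illustrative and aren't taken from any particular robot.

```python
def walk(steps, drift_per_step, gain=0.0):
    """Return the heading error (in degrees) after trying to walk straight.

    gain=0.0 means no feedback (the blindfolded case); a gain above
    zero applies a proportional correction to the observed error.
    """
    heading = 0.0
    for _ in range(steps):
        heading += drift_per_step    # imperfect actuators add a bias
        heading -= gain * heading    # sensor feedback pulls the heading back
    return heading


blindfolded = walk(100, 1.0)            # error keeps accumulating
corrected = walk(100, 1.0, gain=0.5)    # error stays small and bounded
```

Without feedback, the error grows with every step, which is why the blindfolded walker ends up going in loops. With feedback, the error settles at a small, bounded value.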

However, most often, the issue is a problem with the robot’s environment, in one of

the following ways:

Robots have to operate in environments that are partially unknown, changeable, mostly

unpredictable, and in a constant flow, meaning that all actions are chained, and the

robot has to continuously manage the flow of information and actions in real time.

Being able to adjust to this kind of environment can’t be fully predicted or programmed,

and such an adjustment requires learning capabilities, which AI algorithms provide

more and more to robots.

Differentiating automata from other human-like animations is important. For example,

the Golem (

Čapek created both the idea of robots and that of a robot apocalypse, like the AI

takeover you see in sci-fi movies and that, given AI’s recent progress, is feared

by notable figures such as the founder of Microsoft, Bill Gates, physicist Stephen

Hawking, and the inventor and business entrepreneur Elon Musk. Čapek’s robotic slaves

rebel against the humans who created them at the end of the play by eliminating almost

all of humanity.

The EPSRC’s principles offer a more realistic point of view on robots and morality,

considering the weak-AI technology in use now, but they could also provide a partial

solution in advanced technology scenarios.
