6 Alice and Bob Go Public

During the Second World War, British codebreakers had the upper hand over German codemakers, mainly because the men and women at Bletchley Park, following the lead of the Poles, developed some of the earliest codebreaking technology. In addition to Turing’s bombes, which were used to crack the Enigma cipher, the British also invented another codebreaking device, Colossus, to combat an even stronger form of encryption, namely the German Lorenz cipher. Of the two types of codebreaking machine, it was Colossus that would determine the development of cryptography during the latter half of the twentieth century.

The Lorenz cipher was used to encrypt communications between Hitler and his generals. The encryption was performed by the Lorenz SZ40 machine, which operated in a similar way to the Enigma machine, but the Lorenz was far more complicated, and it provided the Bletchley codebreakers with an even greater challenge. However, two of Bletchley’s codebreakers, John Tiltman and Bill Tutte, discovered a weakness in the way that the Lorenz cipher was used, a flaw that Bletchley could exploit and thereby read Hitler’s messages.

Breaking the Lorenz cipher required a mixture of searching, matching, statistical analysis and careful judgment, all of which was beyond the technical abilities of the bombes. The bombes were able to carry out a specific task at high speed, but they were not flexible enough to deal with the subtleties of Lorenz. Lorenz-encrypted messages had to be broken by hand, which took weeks of painstaking effort, by which time the messages were largely out of date. Eventually, Max Newman, a Bletchley mathematician, came up with a way to mechanize the cryptanalysis of the Lorenz cipher. Drawing heavily on Alan Turing’s concept of the universal machine, Newman designed a machine that was capable of adapting itself to different problems, what we today would call a programmable computer.

Implementing Newman’s design was deemed technically impossible, so Bletchley’s senior officials shelved the project. Fortunately, Tommy Flowers, an engineer who had taken part in discussions about Newman’s design, decided to ignore Bletchley’s skepticism, and went ahead with building the machine. At the Post Office’s research center at Dollis Hill, North London, Flowers took Newman’s blueprint and spent ten months turning it into the Colossus machine, which he delivered to Bletchley Park on December 8, 1943. It consisted of 1,500 electronic valves, which were considerably faster than the sluggish electromechanical relay switches used in the bombes. But more important than Colossus’s speed was the fact that it was programmable. It was this fact that made Colossus the precursor to the modern digital computer.

Colossus, as with everything else at Bletchley Park, was destroyed after the war, and those who worked on it were forbidden to talk about it. When Tommy Flowers was ordered to dispose of the Colossus blueprints, he obediently took them down to the boiler room and burned them. The plans for the world’s first computer were lost forever. This secrecy meant that other scientists gained the credit for the invention of the computer. In 1945, J. Presper Eckert and John W. Mauchly of the University of Pennsylvania completed ENIAC (Electronic Numerical Integrator And Computer), consisting of 18,000 electronic valves, capable of performing 5,000 calculations per second. For decades, ENIAC, not Colossus, was considered the mother of all computers.

Having contributed to the birth of the modern computer, cryptanalysts continued after the war to develop and employ computer technology in order to break all sorts of ciphers. They could now exploit the speed and flexibility of programmable computers to search through all possible keys until the correct one was found. In due course, the cryptographers began to fight back, exploiting the power of computers to create increasingly complex ciphers. In short, the computer played a crucial role in the postwar battle between codemakers and codebreakers.

Using a computer to encipher a message is, to a large extent, very similar to traditional forms of encryption. Indeed, there are only three significant differences between computer encryption and the sort of mechanical encryption that was the basis for ciphers like Enigma. The first difference is that a mechanical cipher machine is limited by what can be practically built, whereas a computer can mimic a hypothetical cipher machine of immense complexity. For example, a computer could be programmed to mimic the action of a hundred scramblers, some spinning clockwise, some anticlockwise, some vanishing after every tenth letter, others rotating faster and faster as encryption progresses. Such a mechanical machine would be practically impossible to build, but its “virtual” computerized equivalent would deliver a highly secure cipher.

The second difference is simply a matter of speed. Electronics can operate far more quickly than mechanical scramblers: a computer programmed to mimic the Enigma cipher could encipher a lengthy message in an instant. Alternatively, a computer programmed to perform a vastly more complex form of encryption could still accomplish the task within a reasonable time.

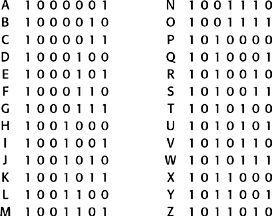

The third, and perhaps most significant, difference is that a computer scrambles numbers rather than letters of the alphabet. Computers deal only in binary numbers: sequences of ones and zeros known as binary digits, or bits for short. Before encryption, any message must therefore be converted into binary digits. This conversion can be performed according to various protocols, such as the American Standard Code for Information Interchange, known familiarly by the acronym ASCII, pronounced “asskey.” ASCII assigns a 7-digit binary number to each letter of the alphabet. For the time being, it is sufficient to think of a binary number as merely a pattern of ones and zeros that uniquely identifies each letter (Table 24), just as Morse code identifies each letter with a unique series of dots and dashes. There are 128 (2^7) ways to arrange a combination of 7 binary digits, so ASCII can identify up to 128 distinct characters. This allows plenty of room to define all the lowercase letters (e.g., a = 1100001), all necessary punctuation (e.g., ! = 0100001), as well as other symbols (e.g., & = 0100110). Once the message has been converted into binary, encryption can begin.
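The conversion can be tried out in a few lines of Python. This is only a minimal sketch: the function name `to_ascii_bits` is invented for illustration, and it simply relies on Python's built-in character codes, which agree with ASCII for the 128 characters discussed here.

```python
def to_ascii_bits(message: str) -> list[str]:
    """Return one 7-digit binary string per character of the message."""
    blocks = []
    for ch in message:
        code = ord(ch)  # the character's number in the ASCII table
        if code >= 2 ** 7:
            # Seven binary digits can only label 128 distinct characters.
            raise ValueError(f"{ch!r} is outside the 128-character ASCII range")
        blocks.append(format(code, "07b"))  # pad with leading zeros to 7 digits
    return blocks


if __name__ == "__main__":
    print(to_ascii_bits("a!&"))  # ['1100001', '0100001', '0100110']
    print(to_ascii_bits("HELLO"))
```

The first line of output reproduces the three examples given above for a, ! and &.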

Even though we are dealing with computers and numbers, and not machines and letters, the encryption still proceeds by the age-old principles of substitution and transposition, in which elements of the message are substituted for other elements, or their positions are switched, or both. Every encipherment, no matter how complex, can be broken down into combinations of these simple operations. The following two examples demonstrate the essential simplicity of computer encipherment by showing how a computer might perform an elementary substitution cipher and an elementary transposition cipher.

First, imagine that we wish to encrypt the message HELLO, employing a simple computer version of a transposition cipher. Before encryption can begin, we must translate the message into ASCII according to Table 24:

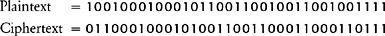

One of the simplest forms of transposition cipher would be to swap the first and second digits, the third and fourth digits, and so on. In this case the final digit would remain unchanged because there are an odd number of digits. In order to see the operation more clearly, I have removed the spaces between the ASCII blocks in the original plaintext to generate a single string, and then lined it up against the resulting ciphertext for comparison:

Plaintext = 10010001000101100110010011001001111

Ciphertext = 01100010001010011001100011000110111

An interesting aspect of transposition at the level of binary digits is that the transposing can happen within the letter. Furthermore, bits of one letter can swap places with bits of the neighboring letter. For example, by swapping the seventh and eighth numbers, the final 0 of H is swapped with the initial 1 of E. The encrypted message is a single string of 35 binary digits, which can be transmitted to the receiver, who then reverses the transposition to re-create the original string of binary digits. Finally, the receiver reinterprets the binary digits via ASCII to regenerate the message HELLO.
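The swap described above is easy to mimic in Python. The sketch below is illustrative rather than definitive, and the helper names `to_bits`, `from_bits` and `swap_adjacent` are invented for the purpose. Because swapping each pair a second time puts every digit back where it started, the same function serves for both encryption and decryption.

```python
def to_bits(text: str) -> str:
    """Translate text into one unbroken string of 7-digit ASCII blocks."""
    return "".join(format(ord(ch), "07b") for ch in text)


def from_bits(bits: str) -> str:
    """Cut the string back into 7-digit blocks and look up each letter."""
    return "".join(chr(int(bits[i:i + 7], 2)) for i in range(0, len(bits), 7))


def swap_adjacent(bits: str) -> str:
    """Swap digits 1 and 2, digits 3 and 4, and so on.

    With an odd number of digits the final digit has no partner
    and is left where it is.
    """
    digits = list(bits)
    for i in range(0, len(digits) - 1, 2):
        digits[i], digits[i + 1] = digits[i + 1], digits[i]
    return "".join(digits)


if __name__ == "__main__":
    plaintext = to_bits("HELLO")
    ciphertext = swap_adjacent(plaintext)
    print(plaintext)
    print(ciphertext)
    print(from_bits(swap_adjacent(ciphertext)))  # HELLO
```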

Table 24 ASCII binary numbers for the capital letters.

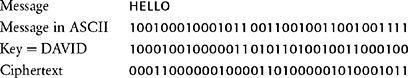

Next, imagine that we wish to encrypt the same message, HELLO, this time employing a simple computer version of a substitution cipher. Once again, we begin by converting the message into ASCII before encryption. As usual, substitution relies on a key that has been agreed between sender and receiver. In this case the key is the word DAVID translated into ASCII, and it is used in the following way. Each element of the plaintext is “added” to the corresponding element of the key. Adding binary digits can be thought of in terms of two simple rules. If the elements in the plaintext and the key are the same, the element in the plaintext is replaced by 0 in the ciphertext. But if the elements in the message and key are different, the element in the plaintext is replaced by 1 in the ciphertext:

Message in ASCII = 10010001000101100110010011001001111

Key (DAVID) in ASCII = 10001001000001101011010010011000100

Ciphertext = 00011000000100001101000001010001011

The resulting encrypted message is a single string of 35 binary digits which can be transmitted to the receiver, who uses the same key to reverse the substitution, thus recreating the original string of binary digits. Finally, the receiver reinterprets the binary digits via ASCII to regenerate the message HELLO.
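The two rules for “adding” binary digits can also be written out in Python. This is a minimal sketch with invented helper names (`to_bits`, `from_bits`, `add_bits`), and it assumes, as in the example, that the key is exactly as long as the message. Adding the key to the ciphertext a second time cancels it out, which is why the receiver can use the same operation to decrypt.

```python
def to_bits(text: str) -> str:
    """Translate text into one unbroken string of 7-digit ASCII blocks."""
    return "".join(format(ord(ch), "07b") for ch in text)


def from_bits(bits: str) -> str:
    """Cut the string back into 7-digit blocks and look up each letter."""
    return "".join(chr(int(bits[i:i + 7], 2)) for i in range(0, len(bits), 7))


def add_bits(message_bits: str, key_bits: str) -> str:
    """Apply the two rules: same digits give 0, different digits give 1."""
    if len(message_bits) != len(key_bits):
        # Assumption of this sketch: key and message have equal length.
        raise ValueError("key and message must contain the same number of digits")
    return "".join("0" if m == k else "1" for m, k in zip(message_bits, key_bits))


if __name__ == "__main__":
    key = to_bits("DAVID")
    ciphertext = add_bits(to_bits("HELLO"), key)
    print(ciphertext)
    print(from_bits(add_bits(ciphertext, key)))  # HELLO
```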

Computer encryption was restricted to those who had computers, which in the early days meant the government and the military. However, a series of scientific, technological and engineering breakthroughs made computers, and computer encryption, far more widely available. In 1947, AT&T Bell Laboratories invented the transistor, a cheap alternative to the electronic valve. Commercial computing became a reality in 1951 when companies such as Ferranti began to make computers to order. In 1953 IBM launched its first computer, and four years later it introduced Fortran, a programming language that allowed “ordinary” people to write computer programs. Then, in 1959, the invention of the integrated circuit heralded a new era of computing.

During the 1960s, computers became more powerful, and at the same time they became cheaper. Businesses were increasingly able to afford computers, and could use them to encrypt important communications such as money transfers or delicate trade negotiations. However, as more and more businesses bought computers, and as encryption between businesses spread, cryptographers were confronted with new problems, difficulties that had not existed when cryptography was the preserve of governments and the military. One of the primary concerns was the issue of standardization. A company might use a particular encryption system to ensure secure internal communication, but it could not send a secret message to an outside organization unless the receiver used the same system of encryption. Eventually, on May 15, 1973, America’s National Bureau of Standards planned to solve the problem, and formally requested proposals for a standard encryption system that would allow business to speak secretly unto business.

One of the more established cipher algorithms, and a candidate for the standard, was an IBM product known as Lucifer. It had been developed by Horst Feistel, a German émigré who had arrived in America in 1934. He was on the verge of becoming a U.S. citizen when America entered the war, which meant that he was placed under house arrest until 1944. For some years after, he suppressed his interest in cryptography to avoid arousing the suspicions of the American authorities. When he did eventually begin research into ciphers, at the Air Force’s Cambridge Research Center, he soon found himself in trouble with the National Security Agency (NSA), the organization with overall responsibility for maintaining the security of military and governmental communications, and which also attempts to intercept and decipher foreign communications. The NSA employs more mathematicians, buys more computer hardware, and intercepts more messages than any other organization in the world. It is the world leader when it comes to snooping.

The NSA did not object to Feistel’s past; they merely wanted to have a monopoly on cryptographic research, and it seems that they arranged for Feistel’s research project to be canceled. In the 1960s Feistel moved to the Mitre Corporation, but the NSA continued to apply pressure and forced him to abandon his work for a second time. Feistel eventually ended up at IBM’s Thomas J. Watson Laboratory near New York, where for several years he was able to conduct his research without being harassed. It was there, during the early 1970s, that he developed the Lucifer system.

Lucifer encrypts messages according to the following scrambling operation. First, the message is translated into a long string of binary digits. Second, the string is split into blocks of 64 digits, and encryption is performed separately on each of the blocks. Third, focusing on just one block, the 64 digits are shuffled, and then split into two half-blocks of 32, labeled Left0 and Right0. The digits in Right0 are then put through a “mangler function,” which changes the digits according to a complex substitution. The mangled Right0 is then added to Left0 to create a new half-block of 32 digits called Right1. The original Right0 is relabeled Left1. This set of operations is called a “round.” The whole process is repeated in a second round, but starting with the new half-blocks, Left1 and Right1, and ending with Left2 and Right2. This process is repeated until there have been 16 rounds in total. The encryption process is a bit like kneading a slab of dough. Imagine a long slab of dough with a message written on it. First, the long slab is divided into blocks that are 64 cm in length. Then, one half of one of the blocks is picked up, mangled, folded over, added to the other half and stretched to make a new block. Then the process is repeated over and over again until the message has been thoroughly mixed up. After 16 rounds of kneading the ciphertext is sent, and is then deciphered at the other end by reversing the process.

The exact details of the mangler function can change, and are determined by a key agreed by sender and receiver. In other words, the same message can be encrypted in a myriad of different ways depending on which key is chosen. The keys used in computer cryptography are simply numbers. Hence, the sender and receiver merely have to agree on a number in order to decide the key. Thereafter, encryption requires the sender to input the key number and the message into Lucifer, which then outputs the ciphertext. Decryption requires the receiver to input the same key number and the ciphertext into Lucifer, which then outputs the original message.
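The round structure can be sketched in Python on a single 64-digit block, held here as an integer. This is an illustration of the structure only, under loud assumptions: `toy_mangler` and `round_keys` are hypothetical stand-ins invented for this sketch, not the real Lucifer or DES functions, the initial shuffle of the 64 digits is left out, and “added” is taken to mean the same digit-by-digit rule used in the DAVID example. The code offers no real security.

```python
MASK32 = 0xFFFFFFFF  # keeps a value to 32 binary digits
ROUNDS = 16


def toy_mangler(half: int, round_key: int) -> int:
    """Hypothetical mangler: scrambles a 32-digit half-block using a key.

    An invented stand-in for illustration, NOT Lucifer's real function.
    """
    x = (half ^ round_key) & MASK32
    x = (x * 0x9E3779B1) & MASK32           # stir the digits together
    return ((x << 7) | (x >> 25)) & MASK32  # rotate left by 7 places


def round_keys(key: int) -> list[int]:
    """Hypothetical key schedule: derive one 32-digit number per round."""
    return [((key >> r) ^ (r * 0x01010101)) & MASK32 for r in range(ROUNDS)]


def encrypt_block(block: int, key: int) -> int:
    left, right = (block >> 32) & MASK32, block & MASK32
    for k in round_keys(key):
        # New Left is the old Right; new Right is old Left "added" to
        # the mangled old Right.
        left, right = right, left ^ toy_mangler(right, k)
    return (left << 32) | right


def decrypt_block(block: int, key: int) -> int:
    left, right = (block >> 32) & MASK32, block & MASK32
    for k in reversed(round_keys(key)):
        # Undo one round: the current Left is the previous Right.
        left, right = right ^ toy_mangler(left, k), left
    return (left << 32) | right


if __name__ == "__main__":
    key = 0xFEDCBA98765432          # an arbitrary 56-digit key number
    secret = encrypt_block(0x0123456789ABCDEF, key)
    print(hex(secret))
    print(hex(decrypt_block(secret, key)))  # 0x123456789abcdef
```

One feature of this arrangement is that decryption never needs to run the mangler backward: each round is undone by running the mangler forward again and adding its output a second time, so the mangler itself can be as complicated as the designer likes.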

Lucifer was generally held to be one of the strongest commercially available encryption products, and consequently it was used by a variety of organizations. It seemed inevitable that this encryption system would be adopted as the American standard, but once again the NSA interfered with Feistel’s work. Lucifer was so strong that it offered the possibility of an encryption standard that was probably beyond the codebreaking capabilities of the NSA; not surprisingly, the NSA did not want to see an encryption standard that they could not break. Hence, it is rumored that the NSA lobbied to weaken one aspect of Lucifer, the number of possible keys, before allowing it to be adopted as the standard.

The number of possible keys is one of the crucial factors determining the strength of any cipher. A cryptanalyst trying to decipher an encrypted message could attempt to check all possible keys, and the greater the number of possible keys, the longer it will take to find the right one. If there are only 1,000,000 possible keys, a cryptanalyst could use a powerful computer to find the correct one in a matter of minutes, and thereby decipher an intercepted message. However, if the number of possible keys is large enough, finding the correct key becomes impractical. If Lucifer were to become the encryption standard, then the NSA wanted to ensure that it operated with only a restricted number of keys.

The NSA argued in favor of limiting the number of keys to roughly 100,000,000,000,000,000 (technically referred to as 56 bits, because each key consists of 56 digits when written in binary). It seems that the NSA believed that such a key would provide security within the civilian community, because no civilian organization had a computer powerful enough to check every possible key within a reasonable amount of time. However, the NSA itself, with access to the world’s greatest computing resource, would just about be able to break into messages. The 56-bit version of Feistel’s Lucifer cipher was officially adopted on November 23, 1976, and was called the Data Encryption Standard (DES). A quarter of a century later, DES remains America’s official standard for encryption.
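The arithmetic behind these figures is easy to check. The sketch below counts the keys for a given number of binary digits and estimates how long it would take to try every one. The function names are invented, and the checking speed of one million keys per second is purely a hypothetical figure chosen for illustration, not a claim about any real machine.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def keyspace(bits: int) -> int:
    """Number of different keys that can be written with this many bits."""
    return 2 ** bits


def years_to_try_all(bits: int, keys_per_second: float) -> float:
    """Time to check every key at an assumed, hypothetical checking speed."""
    return keyspace(bits) / keys_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"{keyspace(56):,}")  # 72,057,594,037,927,936
    # Hypothetical speed of one million keys per second:
    print(round(years_to_try_all(56, 1_000_000)), "years")
```

Each extra bit doubles the number of keys, and therefore doubles the time an exhaustive search would take.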

The adoption of DES solved the problem of standardization, encouraging businesses to use cryptography for security. Furthermore, DES was strong enough to guarantee security against attacks from commercial rivals. It was effectively impossible for a company with a civilian computer to break into a DES-encrypted message because the number of possible keys was sufficiently large. Unfortunately, despite standardization and despite the strength of DES, businesses still had to deal with one more major issue, a problem known as key distribution.

Imagine that a bank wants to send some confidential data to a client via a telephone line, but is worried that there might be somebody tapping the wire. The bank picks a key and uses DES to encrypt the data message. In order to decrypt the message, the client needs not only to have a copy of DES on its computer, but also to know which key was used to encrypt the message. How does the bank inform the client of the key? It cannot send the key via the telephone line, because it suspects that there is an eavesdropper on the line. The only truly secure way to send the key is to hand it over in person, which is clearly a time-consuming task. A less secure but more practical solution is to send the key via a courier. In the 1970s, banks attempted to distribute keys by employing special dispatch riders who had been vetted and who were among the company’s most trusted employees. These dispatch riders would race across the world with padlocked briefcases, personally distributing keys to everyone who would receive messages from the bank over the next week. As business networks grew in size, as more messages were sent, and as more keys had to be delivered, the banks found that this distribution process became a horrendous logistical nightmare, and the overhead costs became prohibitive.

The problem of key distribution has plagued cryptographers throughout history. For example, during the Second World War the German High Command had to distribute the monthly book of day keys to all its Enigma operators, which was an enormous logistical problem. Also, U-boats, which tended to spend extended periods away from base, had to somehow obtain a regular supply of keys. In earlier times, users of the Vigenère cipher had to find a way of getting the keyword from the sender to the receiver. No matter how secure a cipher is in theory, in practice it can be undermined by the problem of key distribution.

To some extent, government and the military have been able to deal with the problem of key distribution by throwing money and resources at it. Their messages are so important that they will go to any lengths to ensure secure key distribution. U.S. Government keys are managed and distributed by COMSEC, short for Communications Security. In the 1970s, COMSEC was responsible for transporting tons of keys every day. When ships carrying COMSEC material came into dock, crypto-custodians would march onboard, collect stacks of cards, paper tapes, floppy disks, or whatever other medium the keys might be stored on, and then deliver them to the intended recipients.

Key distribution might seem a mundane issue, but it became the overriding problem for postwar cryptographers. If two parties wanted to communicate securely, they had to rely on a third party to deliver the key, and this became the weakest link in the chain of security. The dilemma for businesses was straightforward: if governments with all their money were struggling to guarantee the secure distribution of keys, then how could civilian companies ever hope to achieve reliable key distribution without bankrupting themselves?

Despite claims that the problem of key distribution was unsolvable, a team of mavericks triumphed against the odds and came up with a brilliant solution in the mid-1970s. They devised an encryption system that appeared to defy all logic. Although computers transformed the implementation of ciphers, the greatest revolution in twentieth-century cryptography has been the development of techniques to overcome the problem of key distribution. Indeed, this breakthrough is considered to be the greatest cryptographic achievement since the invention of the monoalphabetic cipher, over two thousand years ago.