
“Data matures like wine, applications like fish.” – James Governor

Neural networks, sometimes called artificial neural networks, are a machine learning technique loosely modeled on how the human brain works. They are not a solution to every problem. Instead, they tend to give the best results when combined with other techniques on a range of machine learning tasks. Neural networks are most commonly used for classification and clustering. They can also be used for regression, but better methods usually exist for that.

The neuron is the basic building unit of a neural network and works loosely like a neuron in the human brain. A typical neural network uses the sigmoid function as its activation function. The sigmoid is popular because its derivative can be written in terms of f(x) itself, $f'(x) = f(x)\,(1 - f(x))$, which makes it convenient when minimizing error.

Sigmoid function:

$$
f(x) = \frac{1}{1 + e^{-x}}
$$
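As a small illustration, the sigmoid and its derivative can be written in a few lines of TypeScript. The function names `sigmoid` and `sigmoidDerivative` are chosen here for the example; the point is that the derivative reuses the function's own output instead of differentiating from scratch.

```typescript
// Logistic sigmoid: squashes any real number into the open interval (0, 1).
function sigmoid(x: number): number {
  return 1 / (1 + Math.exp(-x));
}

// The derivative is expressed through the function's own value:
// f'(x) = f(x) * (1 - f(x)), so no extra exponential has to be derived.
function sigmoidDerivative(x: number): number {
  const fx = sigmoid(x);
  return fx * (1 - fx);
}

console.log(sigmoid(0));           // 0.5
console.log(sigmoidDerivative(0)); // 0.25
```

Because a neuron's output is already f(x), back propagation can compute the derivative as `output * (1 - output)` with values it has on hand.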

Neurons are grouped into layers, and the layers are connected to one another to form the network: the outputs of one layer are fed as inputs to the next. Any layers that sit between the input layer and the output layer are called hidden layers.
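To make the layer-to-layer flow concrete, here is a minimal forward-pass sketch, assuming fully connected layers of sigmoid neurons with one bias per neuron. The `DenseLayer` shape, the function names and the weight values are all hypothetical and chosen only for illustration.

```typescript
function sigmoid(x: number): number {
  return 1 / (1 + Math.exp(-x));
}

// One fully connected layer: weights[j][i] connects input i to neuron j.
interface DenseLayer {
  weights: number[][];
  biases: number[];
}

// Each neuron j computes sigmoid(sum_i weights[j][i] * inputs[i] + biases[j]).
function forwardLayer(layer: DenseLayer, inputs: number[]): number[] {
  return layer.weights.map((row, j) => {
    const sum = row.reduce((acc, w, i) => acc + w * inputs[i], layer.biases[j]);
    return sigmoid(sum);
  });
}

// The output vector of each layer becomes the input vector of the next one.
function forwardNetwork(layers: DenseLayer[], inputs: number[]): number[] {
  return layers.reduce<number[]>((activations, layer) => forwardLayer(layer, activations), inputs);
}

// 2 inputs -> hidden layer of 3 neurons -> output layer of 1 neuron.
const network: DenseLayer[] = [
  { weights: [[0.5, -0.5], [0.3, 0.8], [-1, 1]], biases: [0, 0, 0] },
  { weights: [[1, -1, 0.5]], biases: [0] },
];

console.log(forwardNetwork(network, [1, 0])); // one value between 0 and 1
```

Only the middle layer here is a hidden layer; the raw input array plays the role of the input layer.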

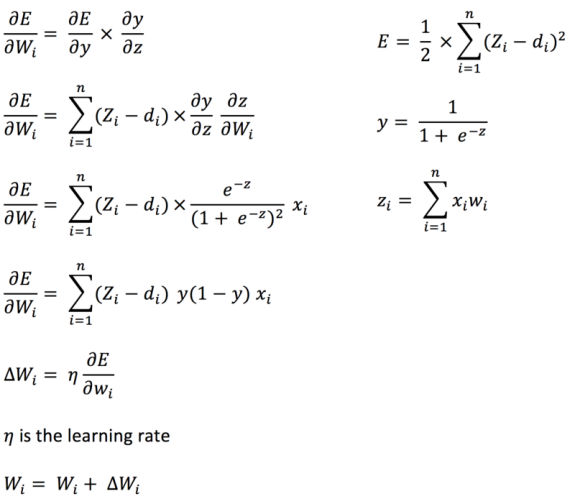

For a neural network to learn, its weights have to be adjusted so that the error between the network's output and the desired output becomes as small as possible. This is done by propagating the error backward through the network, a process called back propagation. For a simple neuron that uses the sigmoid function as its activation function, the error can be written as a function of the neuron's weights and inputs. In the general case, we call the weights W and the inputs X.
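For concreteness, here is one standard way to write this, assuming a squared-error loss $E$, a target output $t$ and a learning rate $\eta$ (these three symbols are introduced for the example). The neuron computes $y = f(W \cdot X)$, and each weight $w_i$ is moved against the gradient of $E$:

$$
\begin{aligned}
y &= f\Big(\sum_i w_i x_i\Big), \qquad E = \tfrac{1}{2}\,(t - y)^2 \\
\frac{\partial E}{\partial w_i} &= -(t - y)\, f'\Big(\sum_j w_j x_j\Big)\, x_i = -(t - y)\, y\,(1 - y)\, x_i \\
w_i &\leftarrow w_i - \eta\,\frac{\partial E}{\partial w_i} = w_i + \eta\,(t - y)\, y\,(1 - y)\, x_i
\end{aligned}
$$

The factor $y(1 - y)$ is the sigmoid derivative written in terms of $f(x)$, which is exactly why the sigmoid is convenient for minimizing error.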

Because the weight adjustment can be generalized this way, computing it for any neuron requires only information from the neighboring layers: the outputs of the layer that feeds into it and the error signal passed back from the layer it feeds. This is what makes it a robust mechanism for learning, and it is known as the back propagation algorithm.
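A minimal sketch of back propagation, assuming a tiny 2-2-1 network of sigmoid neurons trained on XOR with squared error and per-sample updates. The starting weights, learning rate (0.5) and epoch count are illustrative choices, not values from the text, and whether this particular run fully solves XOR depends on those starting weights. What the sketch shows is that each hidden neuron's update uses only the error passed back from the layer above and the inputs from the layer below.

```typescript
const sigmoid = (x: number): number => 1 / (1 + Math.exp(-x));

// Hypothetical network: 2 inputs -> 2 hidden sigmoid neurons -> 1 sigmoid output.
// Fixed starting weights (no randomness) so runs are repeatable.
let wHidden: number[][] = [[0.5, -0.4], [-0.3, 0.6]]; // wHidden[j][i]: input i -> hidden j
let bHidden: number[] = [0.1, -0.1];
let wOut: number[] = [0.7, -0.2]; // hidden j -> output
let bOut = 0.05;

interface Sample { input: number[]; target: number; }

// XOR: a single neuron cannot separate it, but a hidden layer can.
const data: Sample[] = [
  { input: [0, 0], target: 0 },
  { input: [0, 1], target: 1 },
  { input: [1, 0], target: 1 },
  { input: [1, 1], target: 0 },
];

function forward(x: number[]) {
  const hidden = wHidden.map((row, j) => sigmoid(row[0] * x[0] + row[1] * x[1] + bHidden[j]));
  const output = sigmoid(wOut[0] * hidden[0] + wOut[1] * hidden[1] + bOut);
  return { hidden, output };
}

// Total squared error: sum over samples of 1/2 * (t - y)^2.
function totalError(): number {
  return data.reduce((sum, s) => sum + 0.5 * (s.target - forward(s.input).output) ** 2, 0);
}

// One epoch of back propagation with an update after every sample.
function trainEpoch(rate: number): void {
  for (const { input, target } of data) {
    const { hidden, output } = forward(input);
    // Output delta: (t - y) * f'(net), where f'(net) = y * (1 - y).
    const deltaOut = (target - output) * output * (1 - output);
    // Hidden deltas need only the output delta and the (not yet updated)
    // weights leading to it, i.e. information from the next layer.
    const deltaHidden = hidden.map((h, j) => deltaOut * wOut[j] * h * (1 - h));
    // w <- w + rate * delta * (input feeding that weight).
    wOut = wOut.map((w, j) => w + rate * deltaOut * hidden[j]);
    bOut += rate * deltaOut;
    wHidden = wHidden.map((row, j) => row.map((w, i) => w + rate * deltaHidden[j] * input[i]));
    bHidden = bHidden.map((b, j) => b + rate * deltaHidden[j]);
  }
}

const before = totalError();
for (let epoch = 0; epoch < 5000; epoch++) trainEpoch(0.5);
const after = totalError();
console.log(before.toFixed(4), after.toFixed(4));
```

Libraries such as synaptic, used below, perform these same delta computations for you across arbitrarily many layers.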

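To put this into practice, we can write a small TypeScript application (run on Node.js) that learns an image filter from two images, an original and a filtered copy of it, and then applies that filter to another image. Besides the training pair, all you need is the image you want to change; fill in the filenames where the comments in the code indicate.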

```typescript
// Assumes Node.js with the npm packages jimp (0.x API), synaptic and lodash.
import Jimp = require("jimp");

const synaptic = require("synaptic");
const _ = require("lodash");

const Trainer = synaptic.Trainer,
  Architect = synaptic.Architect;

// Read an image and return one [red, green] pair per pixel (values 0 to 255).
function getImgData(filename: string): Promise<number[][]> {
  return Jimp.read(filename).then((image) => {
    const inputSet: number[][] = [];
    image.scan(0, 0, image.bitmap.width, image.bitmap.height, (x, y, idx) => {
      const red = image.bitmap.data[idx + 0];
      const green = image.bitmap.data[idx + 1];
      inputSet.push([red, green]);
    });
    return inputSet;
  });
}

// 2 inputs (red, green), 5 hidden neurons, 2 outputs (red, green).
const myPerceptron = new Architect.Perceptron(2, 5, 2);
const trainer = new Trainer(myPerceptron);
const trainingSet: { input: number[]; output: number[] }[] = [];

// Placeholder filenames: the original image and the same image with the
// filter applied. Both must have the same dimensions so pixel i matches pixel i.
getImgData("original.jpg").then((inputs) => {
  return getImgData("filtered.jpg").then((outputs) => {
    for (let i = 0; i < inputs.length; i++) {
      // Scale 0-255 channel values into the 0-1 range of the sigmoid.
      trainingSet.push({
        input: _.map(inputs[i], (val: number) => val / 255),
        output: _.map(outputs[i], (val: number) => val / 255),
      });
    }

    trainer.train(trainingSet, {
      rate: 0.1,
      iterations: 200,
      error: 0.005,
      shuffle: true,
      log: 10,
      cost: Trainer.cost.CROSS_ENTROPY,
    });

    // Placeholder filename: the image you want to apply the learned filter to.
    return Jimp.read("yours.jpg").then((image) => {
      image.scan(0, 0, image.bitmap.width, image.bitmap.height, (x, y, idx) => {
        const red = image.bitmap.data[idx + 0];
        const green = image.bitmap.data[idx + 1];
        const out = myPerceptron.activate([red / 255, green / 255]);
        // Scale the network's 0-1 outputs back to 0-255 channel values.
        image.bitmap.data[idx + 0] = _.round(out[0] * 255);
        image.bitmap.data[idx + 1] = _.round(out[1] * 255);
      });
      console.log("Writing out.jpg");
      image.write("out.jpg");
    });
  });
}).catch((err) => {
  console.error(err);
});
```
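The filter itself boils down to a per-pixel mapping: scale the red and green channels from 0 to 255 into 0 to 1, run them through the trained network, and scale the outputs back. The sketch below isolates that step from Jimp and synaptic so it can be tried on a plain RGBA buffer. `applyPixelFilter` and the `Activate` type are hypothetical names, and the `activate` callback stands in for `myPerceptron.activate`.

```typescript
// Stand-in for a trained network: maps [red, green] in 0..1 to [red, green] in 0..1.
type Activate = (input: number[]) => number[];

// Applies a learned two-channel filter to an RGBA byte buffer in place.
// Pixels are stored as 4 consecutive bytes (R, G, B, A), so idx advances by 4,
// matching the idx values that image.scan passes to its callback.
function applyPixelFilter(data: Uint8Array, activate: Activate): void {
  for (let idx = 0; idx + 3 < data.length; idx += 4) {
    const out = activate([data[idx] / 255, data[idx + 1] / 255]);
    data[idx] = Math.round(out[0] * 255);
    data[idx + 1] = Math.round(out[1] * 255);
    // Blue (idx + 2) and alpha (idx + 3) are left unchanged.
  }
}

// Example: a fake "network" that swaps the red and green channels.
const pixels = new Uint8Array([255, 0, 10, 255, 100, 200, 30, 255]);
applyPixelFilter(pixels, ([r, g]) => [g, r]);
console.log(Array.from(pixels)); // [0, 255, 10, 255, 200, 100, 30, 255]
```

Since Jimp's `image.bitmap.data` is a Node.js `Buffer` (a `Uint8Array` subclass), a function like this could be passed that buffer directly.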