We already used the ExUnit framework to write tests for our Issues tracker app. But that chapter only scratched the surface of Elixir testing. Let’s dig deeper.

When I document my functions, I like to include examples of the function being used—comments saying things such as, “Feed it these arguments, and you’ll get this result.” In the Elixir world, a common way to do this is to show the function being used in an IEx session.

Let’s look at an example from our Issues app. The TableFormatter module defines a number of self-contained functions that we can document.

| | defmodule Issues.TableFormatter do |

| | |

| | import Enum, only: [ each: 2, map: 2, map_join: 3, max: 1 ] |

| | |

| | @doc """ |

| | Takes a list of row data, where each row is a Map, and a list of |

| | headers. Prints a table to STDOUT of the data from each row |

| | identified by each header. That is, each header identifies a column, |

| | and those columns are extracted and printed from the rows. |

| | We calculate the width of each column to fit the longest element |

| | in that column. |

| | """ |

| | def print_table_for_columns(rows, headers) do |

| | with data_by_columns = split_into_columns(rows, headers), |

| | column_widths = widths_of(data_by_columns), |

| | format = format_for(column_widths) |

| | do |

| | puts_one_line_in_columns(headers, format) |

| | IO.puts(separator(column_widths)) |

| | puts_in_columns(data_by_columns, format) |

| | end |

| | end |

| | |

| | @doc """ |

| | Given a list of rows, where each row contains a keyed list |

| | of columns, return a list containing lists of the data in |

| | each column. The `headers` parameter contains the |

| | list of columns to extract |

| | |

| | ## Example |

| | |

| | iex> list = [Enum.into([{"a", "1"},{"b", "2"},{"c", "3"}], %{}), |

| | ...> Enum.into([{"a", "4"},{"b", "5"},{"c", "6"}], %{})] |

| | iex> Issues.TableFormatter.split_into_columns(list, [ "a", "b", "c" ]) |

| | [ ["1", "4"], ["2", "5"], ["3", "6"] ] |

| | |

| | """ |

| | def split_into_columns(rows, headers) do |

| | for header <- headers do |

| | for row <- rows, do: printable(row[header]) |

| | end |

| | end |

| | |

| | @doc """ |

| | Return a binary (string) version of our parameter. |

| | ## Examples |

| | iex> Issues.TableFormatter.printable("a") |

| | "a" |

| | iex> Issues.TableFormatter.printable(99) |

| | "99" |

| | """ |

| | def printable(str) when is_binary(str), do: str |

| | def printable(str), do: to_string(str) |

| | |

| | @doc """ |

| | Given a list containing sublists, where each sublist contains the data for |

| | a column, return a list containing the maximum width of each column. |

| | ## Example |

| | iex> data = [ [ "cat", "wombat", "elk"], ["mongoose", "ant", "gnu"]] |

| | iex> Issues.TableFormatter.widths_of(data) |

| | [ 6, 8 ] |

| | """ |

| | def widths_of(columns) do |

| | for column <- columns, do: column |> map(&String.length/1) |> max |

| | end |

| | |

| | @doc """ |

| | Return a format string that hard-codes the widths of a set of columns. |

| | We put `" | "` between each column. |

| | |

| | ## Example |

| | iex> widths = [5,6,99] |

| | iex> Issues.TableFormatter.format_for(widths) |

| | "~-5s | ~-6s | ~-99s~n" |

| | """ |

| | def format_for(column_widths) do |

| | map_join(column_widths, " | ", fn width -> "~-#{width}s" end) <> "~n" |

| | end |

| | |

| | @doc """ |

| | Generate the line that goes below the column headings. It is a string of |

| | hyphens, with + signs where the vertical bar between the columns goes. |

| | |

| | ## Example |

| | iex> widths = [5,6,9] |

| | iex> Issues.TableFormatter.separator(widths) |

| | "------+--------+----------" |

| | """ |

| | def separator(column_widths) do |

| | map_join(column_widths, "-+-", fn width -> List.duplicate("-", width) end) |

| | end |

| | |

| | @doc """ |

| | Given a list containing rows of data, a list containing the header selectors, |

| | and a format string, write the extracted data under control of the format string. |

| | """ |

| | def puts_in_columns(data_by_columns, format) do |

| | data_by_columns |

| | |> List.zip |

| | |> map(&Tuple.to_list/1) |

| | |> each(&puts_one_line_in_columns(&1, format)) |

| | end |

| | |

| | def puts_one_line_in_columns(fields, format) do |

| | :io.format(format, fields) |

| | end |

| | end |

Note how some of the documentation contains sample IEx sessions. I like doing this. It helps people who come along later understand how to use my code. But, just as importantly, it lets me understand what my code will feel like to use. I typically write these sample sessions before I start on the code, changing stuff around until the API feels right.

But the problem with comments is that they just don’t get maintained. The code changes, the comment gets stale, and it becomes useless. Fortunately, ExUnit has doctest, a tool that extracts the IEx sessions from your code’s @doc strings, runs them, and checks that the output agrees with the comment.

To invoke it, simply add one or more

| | doctest ModuleName |

lines to your test files. You can add them to existing test files for a module (such as table_formatter_test.exs) or create a new test file just for them. That’s what we’ll do here. Let’s create a new test file, test/doc_test.exs, containing this:

| | defmodule DocTest do |

| | use ExUnit.Case |

| » | doctest Issues.TableFormatter |

| | end |

We can now run it:

| | $ mix test test/doc_test.exs |

| | ...... |

| | Finished in 0.00 seconds |

| | 5 doctests, 0 failures |

And, of course, these tests are integrated into the overall test suite:

| | $ mix test |

| | .............. |

| | |

| | Finished in 0.1 seconds |

| | 5 doctests, 9 tests, 0 failures |
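As a minimal sketch of how doctest examples are laid out, here is a hypothetical Padding module (it is not part of the Issues app; the name and function are assumptions made for illustration). Consecutive iex> lines with no blank line between them form one test and share variable bindings, while a blank line starts a new test. Adding `doctest Padding` to a test file in the same mix project would run both examples.

```elixir
defmodule Padding do
  @moduledoc "Hypothetical helper, used only to illustrate doctest layout."

  @doc """
  Pads `str` on the right with spaces until it is `width` characters long.

  ## Examples

      iex> Padding.pad("cat", 5)
      "cat  "

      iex> widths = [3, 6]
      iex> Enum.map(widths, &Padding.pad("ab", &1))
      ["ab ", "ab    "]

  """
  def pad(str, width), do: String.pad_trailing(str, width)
end
```

The doctests need the compiled module's documentation, so the module should live under lib/ rather than being defined inside the test script itself.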

Let’s force an error to see what happens:

| | @doc """ |

| | Return a binary (string) version of our parameter. |

| | |

| | ## Examples |

| | |

| | iex> Issues.TableFormatter.printable("a") |

| | "a" |

| | iex> Issues.TableFormatter.printable(99) |

| | "99.0" |

| | """ |

| | |

| | def printable(str) when is_binary(str), do: str |

| | def printable(str), do: to_string(str) |

And run the tests again:

| | $ mix test test/doc_test.exs |

| | ......... |

| | 1) test doc at Issues.TableFormatter.printable/1 (3) (DocTest) |

| | Doctest failed |

| | code: " Issues.TableFormatter.printable(99) should equal \"99.0\"" |

| | lhs: "\"99\"" |

| | stacktrace: |

| | lib/issues/table_formatter.ex:52: Issues.TableFormatter (module) |

| | 6 tests, 1 failures |

You’ll often find yourself wanting to group your tests at a finer level than per module. For example, you might have multiple tests for a particular function, or multiple functions that work on the same test data. ExUnit has you covered.

Let’s test this simple module:

| | defmodule Stats do |

| | def sum(vals), do: vals |> Enum.reduce(0, &+/2) |

| | def count(vals), do: vals |> length |

| | def average(vals), do: sum(vals) / count(vals) |

| | end |

Our tests might look something like this:

| | defmodule TestStats do |

| | use ExUnit.Case |

| | |

| | test "calculates sum" do |

| | list = [1, 3, 5, 7, 9] |

| | assert Stats.sum(list) == 25 |

| | end |

| | |

| | test "calculates count" do |

| | list = [1, 3, 5, 7, 9] |

| | assert Stats.count(list) == 5 |

| | end |

| | |

| | test "calculates average" do |

| | list = [1, 3, 5, 7, 9] |

| | assert Stats.average(list) == 5 |

| | end |

| | end |

There are a couple of issues here. First, these tests only pass in a list of integers. Presumably we’d want to test with floats, too. So let’s use the describe feature of ExUnit to document that these are the integer versions of the tests:

| | defmodule TestStats0 do |

| | use ExUnit.Case |

| | |

| | describe "Stats on lists of ints" do |

| | test "calculates sum" do |

| | list = [1, 3, 5, 7, 9] |

| | assert Stats.sum(list) == 25 |

| | end |

| | |

| | test "calculates count" do |

| | list = [1, 3, 5, 7, 9] |

| | assert Stats.count(list) == 5 |

| | end |

| | |

| | test "calculates average" do |

| | list = [1, 3, 5, 7, 9] |

| | assert Stats.average(list) == 5 |

| | end |

| | end |

| | end |

If any of these fail, the message would include the description and test name:

| | test Stats on lists of ints calculates sum (TestStats0) |

| | test/describe.exs:12 |

| | Assertion with == failed |

| | ... |
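The float versions of these tests could sit in a describe block of their own. The following is a sketch rather than code from the Issues project: the module name TestStatsFloats and the sample values are assumptions, and assert_in_delta is used because floating-point results may not compare exactly.

```elixir
ExUnit.start()

defmodule Stats do
  def sum(vals), do: Enum.reduce(vals, 0, &+/2)
  def count(vals), do: length(vals)
  def average(vals), do: sum(vals) / count(vals)
end

defmodule TestStatsFloats do
  use ExUnit.Case

  describe "Stats on lists of floats" do
    test "calculates sum" do
      # Compare within a small tolerance instead of using ==
      assert_in_delta Stats.sum([1.5, 2.5, 3.0]), 7.0, 1.0e-9
    end

    test "calculates count" do
      assert Stats.count([1.5, 2.5, 3.0]) == 3
    end

    test "calculates average" do
      assert_in_delta Stats.average([1.5, 2.5, 3.0]), 7.0 / 3, 1.0e-9
    end
  end
end
```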

A second issue with our tests is that we’re duplicating the test data in each function. In this particular case this is arguably not a major problem. There are times, however, where this data is complicated to create. So let’s use the setup feature to move this code into a single place. While we’re at it, we’ll also put the expected answers into the setup. This means that if we decide to change the test data in the future, we’ll find it all in one place.

| | defmodule TestStats1 do |

| | use ExUnit.Case |

| | |

| | describe "Stats on lists of ints" do |

| | |

| | setup do |

| | [ list: [1, 3, 5, 7, 9, 11], |

| | sum: 36, |

| | count: 6 |

| | ] |

| | end |

| | |

| | test "calculates sum", fixture do |

| | assert Stats.sum(fixture.list) == fixture.sum |

| | end |

| | test "calculates count", fixture do |

| | assert Stats.count(fixture.list) == fixture.count |

| | end |

| | |

| | test "calculates average", fixture do |

| | assert Stats.average(fixture.list) == fixture.sum / fixture.count |

| | end |

| | end |

| | end |

The setup function is invoked automatically before each test is run. (There’s also a setup_all function that is invoked just once for the test run.) The setup function returns a keyword list of named test data. In testing circles, this data, which is used to drive tests, is called a fixture.

This data is passed to our tests as a second parameter, following the test name. In my tests, I’ve called this parameter fixture. I then access the individual fields using the fixture.list syntax.

In the code here I passed a block to setup. You can also pass the name of a function (as an atom).

Inside the setup code you can define callbacks using on_exit. These will be invoked at the end of the test. They can be used to undo changes made by the test.
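Here is a sketch of both ideas together: setup is given the name of a function, and that function registers an on_exit callback. The names TestStatsNamedSetup and int_fixture are hypothetical, and the callback only prints a message where a real one would undo a change.

```elixir
ExUnit.start()

defmodule Stats do
  def sum(vals), do: Enum.reduce(vals, 0, &+/2)
  def count(vals), do: length(vals)
  def average(vals), do: sum(vals) / count(vals)
end

defmodule TestStatsNamedSetup do
  use ExUnit.Case

  # Pass the function name as an atom; ExUnit calls it before each test.
  setup :int_fixture

  test "calculates sum", fixture do
    assert Stats.sum(fixture.list) == fixture.sum
  end

  # The fixture can also be pattern matched directly.
  test "calculates average", %{list: list, sum: sum, count: count} do
    assert Stats.average(list) == sum / count
  end

  def int_fixture(_context) do
    # Runs once the test has finished, whether it passed or failed.
    on_exit(fn -> IO.puts("cleaning up after test") end)

    [list: [1, 3, 5, 7, 9, 11], sum: 36, count: 6]
  end
end
```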

There’s a lot of depth in ExUnit. I’d recommend spending a little time in the ExUnit docs.[25]

When you use assertions, you work out ahead of time the result you expect your function to return. This is good, but it also has some limitations. In particular, any assumptions you made while writing the original code are likely to find their way into the tests, too.

A different approach is to consider the overall properties of the function you’re testing. For example, if your function converts a string to uppercase, then you can predict that, whatever string you feed it,

These are intrinsic properties of the function. And we can test them statistically by simply injecting a (large) number of different strings and verifying the results honor the properties. If all the tests pass, we haven’t proved the function is correct (although we have a lot of confidence it should be). But, more importantly, if any of the tests fail, we’ve found a boundary condition our function doesn’t handle. And property-based testing is surprisingly good at finding these errors. Let’s look again at our previous example:

| | defmodule Stats do |

| | def sum(vals), do: vals |> Enum.reduce(0, &+/2) |

| | def count(vals), do: vals |> length |

| | def average(vals), do: sum(vals) / count(vals) |

| | end |

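Here are some simple properties we could test: the sum of a single-element list is that element; the count of a list is never negative; and the sum of a list equals its count multiplied by its average (to within floating-point error).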

To test the properties, the framework needs to generate large numbers of sample values of the correct type. For the first test, for example, we need a bunch of numeric values.

That’s where the property-testing libraries come in. There are a number of property-based testing libraries for Elixir (including one I wrote, called Quixir). But here we’ll be using a library called StreamData.[26] As José Valim is one of the authors, I suspect it may well make its way into core Elixir one day.

I could write something like this:

| | check all number <- float() do |

| | # ... |

| | end |

There are two pieces of magic here. The first is the float function. This is a generator, which will return floating-point numbers. It is invoked by check all, the second piece of magic. You might think from its name that check all will try every possible value (which would take some time), but it instead just iterates a given number of times (100 by default).
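The number of iterations can be changed per check. The sketch below assumes a standalone script that fetches StreamData with Mix.install (which needs Elixir 1.12 or later and network access); the module name MaxRunsPropertyTest and the value 500 are arbitrary choices for illustration.

```elixir
# Assumption: run as a standalone script; Mix.install downloads :stream_data.
Mix.install([:stream_data])
ExUnit.start()

defmodule Stats do
  def sum(vals), do: Enum.reduce(vals, 0, &+/2)
end

defmodule MaxRunsPropertyTest do
  use ExUnit.Case
  use ExUnitProperties

  property "single element lists are their own sum" do
    # max_runs replaces the default of 100 generated values for this check.
    check all number <- integer(), max_runs: 500 do
      assert Stats.sum([number]) == number
    end
  end
end
```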

Let’s code this. First, add StreamData to our list of dependencies:

| | defp deps do |

| | [ |

| | { :stream_data, ">= 0.0.0" }, |

| | ] |

| | end |

Now we can write the property tests. Here’s the first:

| | defmodule StatsPropertyTest do |

| | use ExUnit.Case |

| | use ExUnitProperties |

| | |

| | describe "Stats on lists of ints" do |

| | property "single element lists are their own sum" do |

| | check all number <- integer() do |

| | assert Stats.sum([number]) == number |

| | end |

| | end |

| | end |

| | end |

You’ll see this looks a lot like a regular test. We have to include use ExUnitProperties at the top to include the property test framework.

The actual test is in the property block. It has the check all block we saw earlier. Inside this we have a test: assert Stats.sum([number]) == number.

Let’s run it:

| | $ mix test test/stats_property_test.exs |

| | . |

| | .............. |

| | |

| | Finished in 0.1 seconds |

| | 1 property, 0 failures |

Let’s break the test, just to see what a failure looks like:

| | check all number <- integer() do |

| | assert Stats.sum([number]) == number + 1 |

| | end |

| | 1) property Stats on lists of ints single-element lists are |

| | their own sum (StatsPropertyTest) |

| | test/stats_property_test.exs:17 |

| | Failed with generated values (after 0 successful run(s)): |

| | |

| | number <- integer() |

| | #=> 0 |

| | |

| | Assertion with == failed |

| | code: assert Stats.sum([number]) == number + 1 |

| | left: 0 |

| | right: 1 |

We failed, and the value of number at the time was zero.

Let’s fix the test, and add tests for the other two properties.

| | property "count not negative" do |

| | check all l <- list_of(integer()) do |

| | assert Stats.count(l) >= 0 |

| | end |

| | end |

| | |

| | property "single element lists are their own sum" do |

| | check all number <- integer() do |

| | assert Stats.sum([number]) == number |

| | end |

| | end |

| | |

| | property "sum equals average times count" do |

| | check all l <- list_of(integer()) do |

| | assert_in_delta( |

| | Stats.sum(l), |

| | Stats.count(l)*Stats.average(l), |

| | 1.0e-6 |

| | ) |

| | end |

| | end |

The two new tests use a different generator: list_of(integer()) generates a number of lists, each containing zero or more integers.

Running this code is surprising—it fails!

| | $ mix test test/stats_property_test.exs |

| | .... |

| | |

| | 1) property Stats on lists of ints sum equals average times |

| | count (StatsPropertyTest) |

| | test/stats_property_test.exs:27 |

| | ** (ExUnitProperties.Error) failed with generated values |

| | (after 40 successful run(s)): |

| | |

| » | l <- list_of(integer()) |

| » | #=> [] |

| | |

| » | ** (ArithmeticError) bad argument in arithmetic expression |

| | code: check all l <- list_of(integer()) do |

| | . . . |

| | Finished in 0.1 seconds |

| | 5 properties, 1 failure |

| | |

| | Randomized with seed 947482 |

The exception shows we failed with an ArithmeticError, and the value that caused the failure was l = [], the empty list. That’s because we were trying to find the average of an empty list. This means our code will be dividing the sum (0) by the list size (0), and dividing by zero is an error.

This is cool. The property tests explored the range of possible input values, and found one that causes our code to fail.

Arguably, this is a bug in our Stats module. But let’s treat it instead as a boundary condition that the tests should avoid. We can do this in two ways.

First, we can tell the property test to skip generated values that fail to meet a condition. We do this with the nonempty function:

| | property "sum equals average times count (nonempty)" do |

| | check all l <- list_of(integer()) |> nonempty do |

| | assert_in_delta( |

| | Stats.sum(l), |

| | Stats.count(l)*Stats.average(l), |

| | 1.0e-6 |

| | ) |

| | end |

| | end |

Now, whenever the generator returns an empty list, the nonempty function will filter it out. This is just one example of StreamData filters. A number are predefined, and you can also write your own.
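As a sketch of writing your own filter, StreamData.filter/2 takes a generator and a predicate and keeps only the values for which the predicate is true. The predicate here is hand-written with the same intent as nonempty; the variable names are assumptions, and the script relies on Mix.install to fetch the library.

```elixir
# Assumption: run as a standalone script; Mix.install downloads :stream_data.
Mix.install([:stream_data])

# A hand-written filter that rejects empty lists.
non_empty_lists =
  StreamData.integer()
  |> StreamData.list_of()
  |> StreamData.filter(fn list -> list != [] end)

# Generators are enumerable, so we can sample a few values to inspect them.
samples = Enum.take(non_empty_lists, 20)
IO.inspect(samples, label: "samples")
```

A filter that rejects most generated values can make generation fail, which is one reason to prefer generator options when they exist.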

A second approach is to prevent the generator from creating empty lists in the first place. This uses the min_length option:

| | property "sum equals average times count (min_length)" do |

| | check all l <- list_of(integer(), min_length: 1) do |

| | assert_in_delta( |

| | Stats.sum(l), |

| | Stats.count(l)*Stats.average(l), |

| | 1.0e-6 |

| | ) |

| | end |

| | end |

In case you’re interested in exploring property-based testing, the documentation for ExUnitProperties has some examples and references.[27]

The StreamData module is designed to be used on its own—it’s not just for testing. If you find yourself needing to generate streams of values that meet some criteria, it might be your library of choice.
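For instance, here is a sketch that uses StreamData outside any test to produce a few fake rows of the kind a table formatter might print. The keys "id" and "title" and the variable names are made up for the example, and the script again assumes Mix.install can fetch the library.

```elixir
# Assumption: run as a standalone script; Mix.install downloads :stream_data.
Mix.install([:stream_data])

# Each generated row is a map with a positive integer id and a short title.
row_generator =
  StreamData.fixed_map(%{
    "id" => StreamData.positive_integer(),
    "title" => StreamData.string(:alphanumeric, min_length: 1)
  })

# Take three random rows; the values differ from run to run.
rows = Enum.take(row_generator, 3)
IO.inspect(rows, label: "rows")
```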

Some people believe that if there are any lines of application code that haven’t been exercised by a test, the code is incomplete. (I’m not one of them.) These folks use test coverage tools to check for untested code.

Here we’ll use excoveralls to see where to add tests for the Issues app.[28] (Another good coverage tool is coverex.)[29]

All of the work to add the tool to our project takes place in mix.exs.

First, we add the dependency:

| | defp deps do |

| | [ |

| | {:httpoison, "~> 0.9"}, |

| | {:poison, "~> 2.2"}, |

| | {:ex_doc, "~> 0.12"}, |

| | {:earmark, "~> 1.0", override: true}, |

| » | {:excoveralls, "~> 0.5.5", only: :test} |

| | ] |

| | end |

Then, in the project section, we integrate the various coveralls commands into mix, and force them to run in the test environment:

| | def project do |

| | [ |

| | app: :issues, |

| | version: "0.0.1", |

| | name: "Issues", |

| | source_url: "https://github.com/pragdave/issues", |

| | escript: escript_config(), |

| | build_embedded: Mix.env == :prod, |

| | start_permanent: Mix.env == :prod, |

| » | test_coverage: [tool: ExCoveralls], |

| » | preferred_cli_env: [ |

| » | coveralls: :test, |

| » | "coveralls.detail": :test, |

| » | "coveralls.post": :test, |

| » | "coveralls.html": :test |

| » | ], |

| | deps: deps() |

| | ] |

| | end |
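On Elixir 1.15 and later, the preferred environments are read from a cli function in mix.exs instead of the preferred_cli_env project key. The following is a trimmed-down sketch under that assumption, not the project's actual file: the module name Issues.MixProject is hypothetical, only the coverage-related settings are kept, and the excoveralls version requirement is copied from the listing above.

```elixir
defmodule Issues.MixProject do
  use Mix.Project

  def project do
    [
      app: :issues,
      version: "0.0.1",
      test_coverage: [tool: ExCoveralls],
      deps: deps()
    ]
  end

  # Elixir 1.15+ looks here for the environment each task should run in.
  def cli do
    [
      preferred_envs: [
        coveralls: :test,
        "coveralls.detail": :test,
        "coveralls.post": :test,
        "coveralls.html": :test
      ]
    ]
  end

  defp deps do
    [
      {:excoveralls, "~> 0.5.5", only: :test}
    ]
  end
end
```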

After a quick mix deps.get, you can run your first coverage report:

| | $ mix coveralls |

| | ............. |

| | |

| | Finished in 0.1 seconds |

| | 5 doctests, 8 tests, 0 failures |

| | |

| | Randomized with seed 5441 |

| | ---------------- |

| | COV FILE LINES RELEVANT MISSED |

| | 0.0% lib/issues.ex 5 0 0 |

| | 46.7% lib/issues/cli.ex 73 15 8 |

| | 0.0% lib/issues/github_issues.ex 46 6 6 |

| | 100.0% lib/issues/table_formatter.ex 109 15 0 |

| | [TOTAL] 61.1% |

| | ---------------- |

It runs the tests first, and then reports on the files in our application.

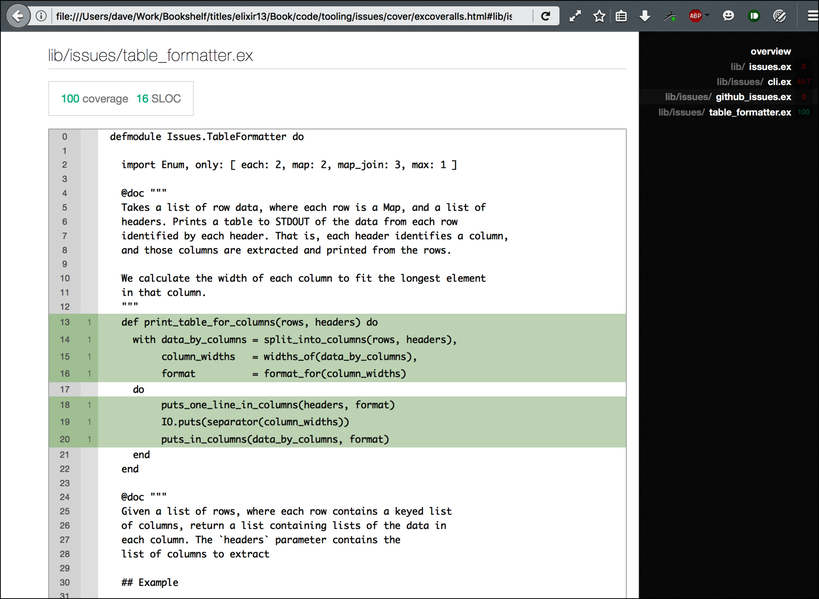

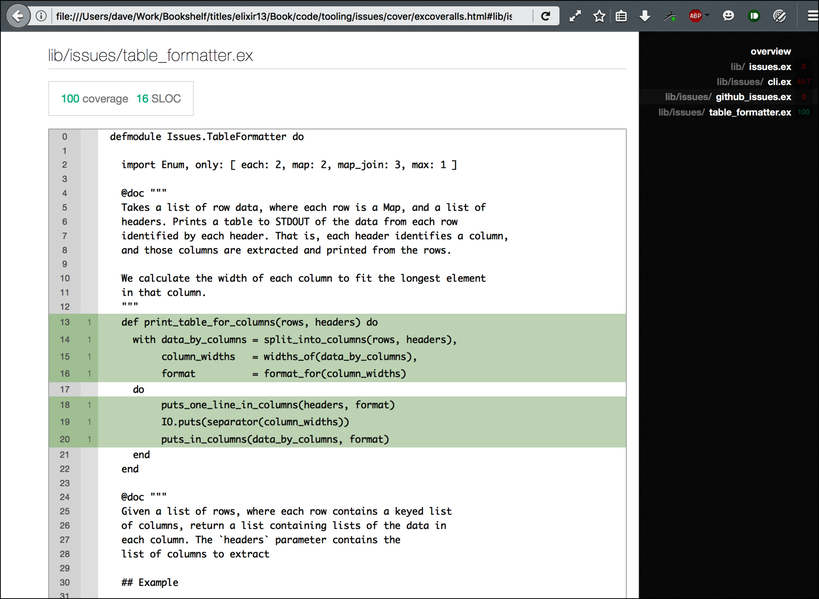

We have no tests for issues.ex. As this is basically a boilerplate no-op, that’s not surprising. We wrote some tests for cli.ex, but could do better. The github_issues.ex file is not being tested. But, saving the best for last, we have 100% coverage in the table formatter (because we used it as an example of doc testing).

excoveralls can produce detailed reports to the console (mix coveralls.detail) and as an HTML file (mix coveralls.html). The latter generates the file cover/excoveralls.html, as shown in the following figure.

Finally, excoveralls works with a number of continuous integration systems. See its GitHub page for details.