6. Epistemology

Epistemology is the part of philosophy that deals with problems concerning the validity, nature, and limits of knowledge. It differs from ontology, which, as we have seen, attempts to tackle the nature of reality directly. Epistemology, by contrast, looks at theories of knowledge and of how we know what we know.[1] Also valid for my purposes is Elsasser’s definition of epistemology as the ‘thinking and reinterpretation of general concepts, primarily about space, time and causality [and, I will add, of “mind” and “consciousness”]’.[2] In this chapter, we will consider a range of concepts that will be helpful in our subsequent explorations of consciousness.

I will also argue, in different ways, for epistemological pluralism. This is the view that many different epistemological methodologies are necessary to attain a full description of the world. I oppose the reductionism and fundamentalism of many parts of science, encouraging multiple approaches, and acknowledging that different explanatory levels often cannot be reduced to one another.[3]

Epistemological questions themselves are often terribly difficult and convoluted, and frequently lead to dead ends. Salient examples include the mid-twentieth century disputes over perception, memory, ‘sense-data’, and language.[4] Such impasses were what led to the rejection of the idea that one could ever build a model or ‘mirror’ of reality, and in part to Feyerabend’s form of epistemological anarchism where he claimed that ‘anything goes’ when it came to constructing theories about the universe.[5]

Entangled with the problems concerning theories of knowledge are problems with realism in science. Most twentieth-century epistemologies were realist in that they assumed that our perceptions were of a ‘real’ world, and, for practical purposes, I uphold this metaphysical assumption. The real/anti-real debate in the philosophy of science, however, concerns a different question: whether scientific theories accurately reflect an unseen reality or are just useful tools that allow us to understand observational data better but have no other basis in reality.

We also need to ask whether or not such models offer universal and exhaustive descriptions of reality. In the pluralistic spirit, I will argue against the idea of universal ‘laws’ of nature and a unified hierarchy of science and in favour of a patchwork view, where specific theories cover limited domains.[6]

These threads form part of a wider argument that will move our thoughts on the mind, consciousness and human nature away from the fundamentalist and absolutist, and towards more pluralistic and open-ended views. A primary concern, therefore, is to question the many imagined restraints on the universe that ‘force’ us to conceive of human nature—as part of that universe—in a particular way.

To quote Paul Feyerabend, ‘… the world which we want to explore is a largely unknown entity. We must, therefore, keep our options open and … not restrict ourselves in advance’.[7] Our world-pictures, too, are inevitably partial and local to our own observations, no matter how wide-ranging we attempt to be.[8] If we accept this partiality, then I think we are led in the direction of pluralism and local, rather than ‘universal’, realism.

Modelling the Real

In the twentieth century, philosophers generally took the ‘real’ world for granted and attempted piecemeal or local solutions to problems of forming reliable knowledge about it. Of primary importance in the early to mid-twentieth century disputes was the issue of how objects in the world could be related to perceptual knowledge about them.[9] Sight was a prime example, because the claim that seeing was a direct experience of an external object (say a tree or car) did not seem compatible with the complex physiological processes of vision. A gap exists between our knowledge of what happens in the eye and brain and our mental awareness of a tree or car. This, of course, is closely related to our primary problem of conscious experience.

The problem is compounded by illusions and hallucinations. We know that our perceptions can fool us and that we can sometimes see things that are not there at all. We might under some circumstances see a tree or car as double. We assume that this is wrong, but how can we know whether double or single vision is a more accurate depiction of the real object? There are a number of possible solutions to this sort of dilemma, but none are entirely satisfactory.

One problem is that it is very easy to lapse into a representational view of perception, or the idea that one’s mind stores a discrete ‘representation’ of, say, a tree or car and reacts to the representation rather than the ‘real’ car. But this sort of argument often leads to infinite regress, because if one can interact only with representations and never with the external world itself, then how can one be said to be interacting with the external world at all? It becomes apparent that even superficially ‘simple’ questions like the relation of our perceptions to reality seem fraught with possibly unresolvable difficulties.

Cracking the Cartesian Mirror

Such problematic representational views of perception and thought, according to some, underlay early modern science.[10] According to the revisionist histories of Richard Rorty, Descartes had held the view that science should be the ‘mirror of nature’, or a direct and unmediated ‘representation’ of the material world revealed by our senses. In order to make this work, Descartes split the mind off from nature in order to have a realm in which this representational form of science could be built. Rorty saw such a ‘mind’, in the sense of an interior ‘place’ or theatre, as a cultural construction with no other reality.[11]

Immanuel Kant (1724–1804) thought that there were or could be privileged representations of reality, which built upon Descartes’ naïve belief that one could discern the ‘truth’ if one thought clearly enough. Kant acknowledged that we experience nothing directly, and that everything was filtered through our senses, but he still thought that appropriate representational structures could reflect the ‘real’ world.

So, again according to Rorty, the particular philosophical programme developed by Kant relied upon two assumptions:

(1) It required the mind to be conceived as a ‘mirror’, reflective glass or separate space, and

(2) It assumed a correspondence theory of truth, or that one could build a true and accurate representation of the world that ‘mirrored’ reality.

Rorty, following Wittgenstein, disputed whether any philosophy or logical structure could actually do this, and criticized a number of twentieth-century philosophers who, albeit implicitly, retained the hope of finding such privileged representations of reality.

By the end of the nineteenth century, Rorty observed, such philosophical schemes seemed untenable. He noted that ‘… the “naturalization” of epistemology by psychology suggested that a simple and relaxed physicalism might be the only sort of ontological view needed’.[12] In a situation like this, he thought, the idea that one needs privileged representations of the world might have fallen into eclipse. This didn’t happen, according to Rorty, because thinkers like Russell and Husserl were still committed to untenable ‘hidden’ truths. Both of these latter thinkers rejected the idea that psychology could simply take over philosophy; Russell favoured logic as the essence of philosophy and Husserl focused upon private experiences.

Rorty, by contrast, embraced what he termed ‘epistemological behaviourism’. This involved a rejection of ontology, or the idea that we need an underlying philosophical way of describing human beings. It also involved the rejection of inner entities. As Rorty says: ‘What we cannot do is take knowledge of “inner” or “abstract” entities as premises from which our knowledge of other entities is normally inferred …’[13] Hidden or unobservable entities like minds cannot, Rorty claimed, be used as a foundation for knowledge as external, eternal standards. In the absence of these external standards, philosophers had little left to do but discuss the world as they saw it personally.

Rorty’s critiques have some strong points, such as his deconstruction of the specifics of Descartes’ mirror of nature, but in other places, they are very much of their time. His rejection of private experience is inspired by behaviourism, and the elimination of hidden entities is based upon the philosophy of the positivists. His assertion that a ‘relaxed physicalism’ is desirable because, by implication, it doesn’t require hidden entities seems to me fallacious. As argued in the previous chapter, at least some versions of materialism or physicalism do seem to imply a particular hidden entity named ‘matter’. Even those versions that do not imply this still require the reduction of mental states to physical processes, which are often described in terms of hidden entities (see below).

Rorty’s view that psychology (or, currently, neuroscience) should ‘take over’ from philosophy has become remarkably widespread. Tallis notes that philosophers of mind have developed what he terms ‘science-cringe’, which effectively means the tacit acceptance of the demotion of philosophy to a branch of natural science.[14] So we have writers like Metzinger claiming that epistemological problems will be ‘solved’ by advances in neuroscience, and claims that neuroscience can or should ‘shoulder the burden’ of the conceptual nature of knowledge.[15]

The move to naturalize philosophy seems to me one that should be resisted. This is in part because, as others have pointed out, philosophy and neuroscience actually do very different jobs. Philosophy is about the analysis of concepts, and neuroscience is about the empirical investigation of the brain and its processes. Of course, one might philosophize about the findings of neuroscience, or come to understand how humans think or act better via neuroscience, but this does not seem to me to justify collapsing one discipline into the other.

The most valuable part of Rorty’s work is the demolition of the idea that a system of knowledge can ‘mirror’ nature. But if we acknowledge this, then it seems to weaken the claim of any system of thought, including naturalized science, to provide a coherent or comprehensive picture of the world. A naturalized physicalist ontology, even a ‘relaxed’ one, still constitutes a system of thought and a particular orientation to the world. The insistence that it is or should be the only view needed would constitute an ideological claim that can be rejected, because there may well be cases where different orientations towards the world can provide us with insights that Rorty’s favoured philosophy cannot. This point can be clarified by further consideration of ‘hidden entities’.

Surface versus Depth: An Implicit Epistemic Conflict

There is a split in philosophy between those who advocate the use of ‘hidden’ or unseen realities to enhance our understanding of phenomena in the natural world, and those who reject such attempts as incoherent and/or linguistic phantoms.[16] I am going to suggest that despite various notable demolition efforts, both viewpoints have some merit and both are alive and well in different contexts.

The notion of a hidden reality that is, under some interpretations, as or more ‘real’ than that revealed by our senses goes right back to Pythagoras and Plato. Plato, we will recall, supposed an ideal world of form and number of which the visible world was a shadow. Such a notion still underpins physics, which routinely relies upon mathematically required but ‘hidden’ entities (inertia, space, gravitation, dark matter and energy, super-strings, etc.) to ‘explain’ the workings of the world. This seems, as Penrose notes, thoroughly Platonic.[17]

At the other extreme lies the rejection of all such hidden entities. It is often forgotten that David Hume’s (1711–1776) critiques included a rejection of, for example, Newtonian notions of causation in favour of phenomenology. This was partly because Hume saw Newton’s laws as supposing unseen elements that could not be reduced to experience.

The concern about hidden entities became acute when philosophers began to question what nouns actually stand for—and so how to define clearly any hidden or abstract entities. When I say dog, tree, house, black, white, we commonly assume that I am talking about something readily identifiable in the ‘real’ world. But the problem is actually far from straightforward, especially when we discuss abstract nouns like ‘beauty’, ‘love’, ‘mind’ and ‘consciousness’, because it is actually very unclear what these words stand for in concrete terms.

Difficulties like this are in part what led philosophers like Wittgenstein and later Rorty to doubt that philosophical or logical structures could form schemes by which we could coherently mirror the world. In Philosophical Investigations, Wittgenstein rejected the idea that there was an essential core of meaning in a word.[18] The best we could hope for, he thought, was a family resemblance between the word and what it signified. Biletzki notes that:

Family resemblance also serves to exhibit the lack of boundaries and the distance from exactness that characterize different uses of the same concept. Such boundaries and exactness are the definitive traits of form—be it Platonic form, Aristotelian form, or the general form of a proposition adumbrated in [Wittgenstein’s earlier work]. It is from such forms that applications of concepts can be deduced, but this is precisely what Wittgenstein now eschews in favor of appeal to similarity of a kind with family resemblance.[19]

Wittgenstein claimed that words do not stand for a specific, Platonic ‘token’ object (say an archetypal, perfect tree) but instead could only stand for families of specific objects (trees in general). This issue with generalizable categories gets more complicated when one considers more abstract ‘objects’ like minds and consciousness, and led thinkers like Rorty and Ryle to suppose that minds had no inherent existence at all.

Even today, there are conflicts between those who invoke a hidden or discrete ‘mind’ or ‘consciousness’ as a theoretical entity and those who regard either or both as some form of linguistic or, latterly, neurological ‘illusion’.[20] But these views represent two poles on a continuum. On the one hand, it seems possible to demolish or severely critique many philosophies that rest upon hidden or unobservable entities, especially if they are held to be discrete. On the other, those unobservable entities have proved very useful in science in innumerable ways in the past. In the end, it seems better to judge the success or otherwise of invoking a hidden entity in pragmatic terms, or by whether the invocation of an entity is useful. This accords well with Karl Popper’s idea of ‘conjectural explanations’ that postulate entities as part of scientific hypotheses but do not regard them as ultimate truths.[21]

Real and Anti-Real Currents in Science

The next question is, given that we cannot as easily eliminate hidden entities as the positivists hoped, whether said entities can be considered real or accurate descriptions of unseen or unobservable portions of the cosmos. There are two conflicting schools here. Realism assumes that science tells us the true and literal nature of the universe and its contents, about atoms and molecules, the nature of light, about cells and the furthest galaxies, about the distant past and maybe a little about the cosmos’s future. In a realist interpretation, scientific knowledge about the natural world, even hidden portions, can be taken as more-or-less literally true. Many—but certainly not all—scientists lean heavily in the literalist, realist direction.

Anti-realists, by contrast, assert that the theoretical, unobservable parts of science are not literally real, but only useful models by which we can understand our actual observations better.[22] Anti-realists argue that theories like relativity and quantum mechanics are useful models that may not correspond at all with the unobservable aspects of reality, and think that only direct observations can be thought of as ‘real’.

Anti-realists tend to appeal to a pragmatic definition of truth, which means that one can call a theory true because it is useful as opposed to useful because it is true. The practical utility of a scientific theory is often used as evidence for its superiority. For example, the ‘aeroplane defence’ holds that extreme relativism (or the idea that all ideas about the world are equal) cannot be true because scientific theories have provided us with the means for building working aeroplanes, whereas idealist or religious theories about the world, for example, have not given us this power.[23] Whilst these sorts of arguments seem to me reasonable counters to extreme philosophical relativism, I would also note that they do not really resolve the real/anti-real debate, and on their own rely upon a pragmatic definition of truth. This is because utility does not necessarily follow from underlying or inherent ‘truth’ (however it may be defined).

This is a problem for some. Midgley complains that such pragmatic approaches only go half way because there are many facts in science that only really make sense if we suppose that they are meant more or less literally. So continental drift, or the theory that birds are descended from dinosaurs, needs to be taken somehow literally. She also worries that pragmatism might lead us to take obviously absurd ideas as true if they are useful, giving the example that if it is expedient to believe there is a ferocious demon in electricity wires to prevent electrocution, then a pragmatist approach would compel us to say this is ‘true’.[24] Midgley makes a valid point, and yet surely we are not forced into a real, anti-real or pragmatic straitjacket. It seems to me entirely reasonable to treat some scientific theories as more literal than others, and often one ends up making a personal judgment on a case by case basis about these issues.

Hidden Structures?

Chalmers makes the case that science is realist ‘in the sense that it attempts to characterise the structure of reality, and has made steady progress insofar as it has succeeded in doing so to an increasingly accurate degree’.[25] Broadly speaking, and in at least some circumstances, this seems a reasonable approximation of scientific theory, but I would suggest that this claim has significant limitations, especially in the field of human behaviour.

Chalmers’ claim for realism in science hinges upon the definition and applicability of the word ‘structure’. The word implies something that is more-or-less stable, regular and identifiable apart from the general background of the universe. Examples might be the division of the human brain into left and right hemispheres, or the heart into four chambers.

But this definition is less useful when considering other kinds of phenomena. Many things have unstable and temporary ‘structures’, whirlpools and cyclones being examples. Whilst the equations of fluid dynamics might allow us to predict or simulate a generalized whirlpool, they cannot predict the precise form that whirlpools or cyclones might take in the real world; any structure is loose. This is in contrast to the movements of the planets, which can be predicted with accuracy centuries in advance.

The exact behaviour of many dynamic and complex systems cannot be predicted, because there are too many variables and because even minor variations can have dramatic and unpredictable effects on the outcome. The ‘Butterfly Effect’ is the classic example of this: the idea that a butterfly flapping its wings in Tokyo can cause a storm in New York, making long-term weather prediction impossible.[26] This has significant implications for the study of the very complex human mind. Noam Chomsky has gone so far as to suggest that most explanations for human behaviour amount to opinions, because the exact sciences can only really answer questions about very simple systems.[27]
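The sensitivity described above can be illustrated with a toy calculation. The sketch below is my own illustration and not a weather model: it assumes the logistic map, a standard textbook example of chaotic behaviour, as a stand-in for a complex system. The function names, the starting value of 0.2 and the perturbation of one part in ten billion are all arbitrary choices made for the example.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate x -> r * x * (1 - x) from x0 and return every value visited.

    The logistic map with r = 4 is assumed here purely as a simple,
    well-known chaotic system; it is not drawn from the text.
    """
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(r * x * (1.0 - x))
    return xs


def first_divergence(a, b, threshold=0.1):
    """Return the first step at which two trajectories differ by more
    than `threshold`, or None if they never do."""
    for step, (x, y) in enumerate(zip(a, b)):
        if abs(x - y) > threshold:
            return step
    return None


# Two starting points that differ by one part in ten billion.
baseline = logistic_trajectory(0.2)
perturbed = logistic_trajectory(0.2 + 1e-10)

step = first_divergence(baseline, perturbed)
print("Initial gap:", abs(baseline[0] - perturbed[0]))
print("First step with a gap above 0.1:", step)
```

The rule itself is fully deterministic and trivially simple, yet the two runs part company after a modest number of steps. The point is only that a simple deterministic rule need not yield long-range predictability; nothing here bears directly on real weather systems or on minds.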

In addition, I would assert that many psychological phenomena that are taken to be derived from definite, definable and more or less permanent ‘structures’ (specifically, neural or ‘information-processing’ modules) are probably not reducible in this way. This view contrasts with a significant wing of contemporary psychology that tries to explain human nature almost entirely in terms of very specific behavioural ‘modules’ within the brain.[28]

Others, like Stephen Braude, go further, suggesting that mental states cannot really be called structured in any meaningful way.[29] He points out that mental content and meaning (a mental image, or what a thought is about) are not reducible to structure (neural events in the brain, biochemistry). For example, a memory of a person could be of almost anything (appearance, quirks, anecdotes, significant works) which do not have any underlying, intrinsic link or structure to which they can be reduced. If this is correct, then Chalmers’ view of science cannot apply to psychology.

A more moderate stance may be to acknowledge that Chalmers’ kind of realism, based upon a concept of structure, might only be of limited use in psychology. Sometimes it may be appropriate to try and identify the ‘structures’ associated with human cognition, behaviour or perception; sometimes it might not. But if Chalmers is correct that science equals locating structure, and Braude is correct that many mental states lack structure, then this hints at severe limits for a science of psychology.

However, we are not necessarily bound to define science in this way. The problem with Chalmers’ view is that it restricts the practice of science to the structural (or, as we will discover below, law-generating systems only), which may be too severe a limit, especially in the social sciences. It is perfectly possible, for example, to observe and record idiosyncratic, unstructured behaviour in a systematic and ‘scientific’ way, although the fact of its idiosyncrasy may prevent one formulating systematic theories in the way favoured by natural science.

No Grand Schemes: A Nomological Alternative

But even if we accept that some form of realism seems applicable for at least some of the theories of science, we are not compelled to suppose that these models can be grouped into a universal scheme of explanation.[30] Nancy Cartwright observes that facts can be roughly categorized into those that are ordered into theoretical schemes, often reflecting behaviour in controlled environments (i.e. in laboratory conditions), and those that are not so ordered. She notes a widespread tendency to privilege the first kind of fact as exemplars of the way in which nature should work, and to assume that the facts that are not so ordered should conform to them.

Cartwright questions this sort of fundamentalist thinking, challenging the idea that one can ‘downwards reduce’ everything to the laws of physics, and also the idea that similar physical systems that are not observed in the laboratory might be ‘reduced’ to the models developed to account for strictly controlled lab experiments. She asks:

Can our refugee (or unordered) facts always, with sufficient effort and attention, be remoulded into proper members of the physics community, behaving tidily in accord with the fundamental code? Or must—and should—they be admitted into the body of knowledge on their own merit?[31]

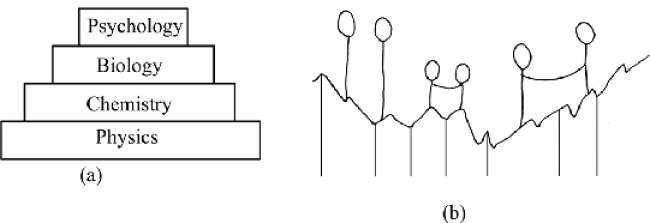

What is at stake here is the sort of picture that suggests that scientific knowledge can be considered a kind of pyramid, with the fundamental laws of physics on the bottom tier, then chemistry, then biology, then psychology, etc. (Figure 1a). The reductionist programme suggests that everything should, or could in principle, be reducible to the ‘laws’ of physics on the bottom tier, as psychology ‘should’ be reducible to biology which should be reducible to chemistry which should be reducible to physics. This picture is commonly accepted without question in science, and is I suspect the source of the insistence that any theory of consciousness must conform to known laws of physics and chemistry.

Cartwright rejects this picture of science, including the notion that there is a universal cover of law, instead adopting Neurath’s picture of a patchwork of appropriate domains (Figure 1b). According to Neurath, explanations are ‘tied’, like balloons, to different parts of the world, but there is no system beyond this. ‘Balloons’ can sometimes be tied together to co-operate in different ways when solving problems, and the boundaries that ‘balloons’ cover are not fixed, and can expand, contract and overlap. But specific laws do not operate outside these boundaries.[32] This accords with Feyerabend’s view of science as a patchwork of partly overlapping, factually adequate but often mutually inconsistent theories.[33]

Figure 1. Pyramids versus Balloons. Scientific knowledge is usually pictured as a hierarchy, (a) with fundamental and universal laws at the bottom. Neurath and Cartwright suggest instead that theories can be pictured as balloons (b), pinned to bits of reality and with limited domains. In the latter picture, there are no truly ‘universal’ laws, although different knowledge domains can overlap or be ‘tied’ together via theoretical structures.

Cartwright expands upon this ‘patchwork’ notion by introducing the nomological machine. Nomological means ‘expressing basic physical laws or rules of reasoning’,[34] and stands for the various models in physics and other sciences by which we understand processes in the universe. These rule-expressing machines are only applicable in restricted circumstances, or ceteris paribus. This is a Latin phrase meaning ‘all other things being equal or held constant’.[35] Outside limited circumstances, specific nomological machines do not work, so they cannot be regarded as generating universal laws.

Cartwright illustrates this via the laws of planetary motion. Newton invented a model that established the magnitude of force needed to keep a planet in elliptical orbit by an application of the inverse-square kind of attraction in gravitational pull.[36] However, Newton’s laws of gravitation did not seem to apply quite correctly to the orbit of Uranus, which did not move as expected. The irregularities in Uranus’s orbit were eventually explained by the existence of a new planet, Neptune, whose gravitational pull perturbed Uranus’s motion.

Cartwright interprets the failure of Uranus to conform to Newton’s law in the following terms:

The observed irregularity points … to a failure of description of the specific circumstances that characterise the Newtonian planetary machine. The discovery of Neptune results from a revision of the shielding conditions that are necessary to ensure the stability of the original Newtonian machine.[37]

Instead of universal laws, Cartwright argues for an understanding of capacities. Laws, she claims, need nomological machines to generate them, and hold only under the limited conditions in which these machines work. Capacities, by contrast, signify abilities, tendencies and propensities to do. So we might expect a simple object, like a billiard ball, to behave, or to have the capacity to behave, in a restricted set of ways in different circumstances. By contrast, a far more complex object, like a human being, would have a vastly expanded range of capacities that would be expressed differently according to different circumstances. Capacities are defined in very generic terms and stand for tendencies rather than highly specifiable and deterministic functions.[38] Nomological machines mark (the limited) places where general capacities can be narrowed into highly specific and determinate systems.[39]

One example might be learning. One can learn in a million different ways, via probably millions of different biological ‘structures’, and one can only loosely define what learning might be. Cognitive science, however, has developed a number of different theoretical models that can tell us something about some of the parameters of some forms of learning—say, learning facts, or how to distinguish oranges from apples. These latter models can be defined as nomological machines, because they apply only in restricted circumstances and concern restricted and specifiable subjects. Machine learning, even with neural nets or genetic algorithms, is also rule-restricted.

So Cartwright’s work is of significance for those wishing to gain a better understanding of organisms in general and conscious beings in particular. Specifically it is relevant to subsequent discussions that will assess the validity of those who claim that the high complexity and organization of organisms makes current models of physics inadequate for biology. In this interpretation, such complexity signifies a boundary condition beyond which the nomological machines of current physics and chemistry do not give us wholly satisfactory answers.

The ‘dappled world’ notion has wider implications, because it suggests that many commonly expressed notions like, for example, universal or strong determinism might be wrong. This particular belief underlies many of the claims that free will or consciously-determined actions ‘must’ be illusory.[40] But as Cartwright notes, we have no particular reason to suppose that everything operates under strict, causal laws, and every reason to suspect great causal diversity and even causal ‘gaps’.[41] Cartwright:

… even our best theories are limited in scope. For, to all appearances, not many of the situations that occur naturally in our world fall under the concepts of these theories. That is why physics, though a powerful tool for predicting and changing the world, is a tool of limited utility.[42]

Implications for Cognitive Science

As indicated above, nomological machines are commonly used in cognitive science and most explicitly in Artificial Intelligence. A common hope in this field—especially amongst the pioneers—was that all human behaviour might be reducible to rule-based systems. This was Turing’s basis for assuming that machine intelligence was possible.[43]

Cartwright’s re-formulation forces us to look at these rule-generating systems in a different way. One of the objections to classical AI, which based its simulations upon strictly rule-based systems, was that human beings often do not seem to behave in ways that are restrained by formal rules.[44] However, systems with general capacities constrained and/or expressed by local variations in structure might serve as a more useful picture than simply assuming that humans ‘are’ rule-generating systems.

Cartwright’s other point, about unordered facts and the tendency to force them to conform to tidy models, seems to me especially salient in the human sciences. This is because a lot of human behaviour (and thought, if we accept Braude’s arguments) seems unpatterned or idiosyncratic. Bauer notes a tension in the social sciences between those who wish to reduce human behaviour to models akin to those in the natural sciences and those who seek ‘interpretive’ approaches that respect the autonomy of human beings and acknowledge the impossibility of accurately predicting that behaviour.[45] But today the latter sorts of investigation have been eclipsed by reductive neuroscience.

Much of experimental psychology seems hell-bent upon reducing all ‘real’ human behaviours to those that are reproducible under specific conditions and, increasingly, associated with specific neural structures. Mimicking physicists, the aim seems to be to produce evidence of rule-based or structured behaviour that can be wholly described in terms of nomological machines. But what if most human capacities are not expressed in reducible, structured ways at all?

For it is quite possible for a wide range of human capacities to be expressed in idiosyncratic or singular ways. This would suggest that the attempt to restrict ‘scientific’ descriptions of human behaviour to the easily reproducible or law-generated might be in error. Like Cartwright, I think that facts and observations that cannot be fit into tidy schemes need to be respected, and that this is especially important in the human sciences because of the dangers of dehumanization.

Are Scientific Models Exhaustive?

Related to this concern is the likelihood that scientific models do not, and probably cannot, provide exhaustive descriptions of the portions of the cosmos that they describe. This seems especially true for human beings. And yet, we often encounter statements that seem to imply that exhaustive descriptions can be obtained from such models. Weizenbaum noted the tendency of AI researchers to assume, without any strong evidence, that computers could in principle do anything a human could.[46] More recently, we have claims that human minds are ‘nothing more than a creation of genes and memes in a unique environment’,[47] or that ‘there is nothing more [than brain function] no magic, no additional components to account for every thought, each perception and emotion, all our memories, our personality, fears, loves and curiosities’.[48] These sorts of statements can only be taken seriously if we accept that scientific models present exhaustive accounts of reality, but there are reasons to doubt this.

As long ago as 1947, Aldous Huxley cautioned that:

Confronted with the data of experience, men of science [sic] begin by leaving out of account all those aspects of the facts which do not lend themselves to measurement and to explanation in terms of antecedent causes rather than purpose, intention and values … some scientists, many technicians and most consumers of gadgets … tend to accept the world picture implicit in the theories of science as a complete and exhaustive account of reality; they tend to regard those aspects of experience which scientists leave out of the account, because they are incompetent to deal with them, as being somehow less real than the aspects which science has arbitrarily chosen to abstract from the infinitely rich totality of given facts.[49]

Huxley termed this ‘nothing but’ thinking, which is unfortunately present in much popular science writing today, as the preceding quotes demonstrate. Paul Feyerabend saw this dogmatic slide as common in science and ultimately pernicious to it.[50] Assume, he suggested, that scientists have adopted a particular theory, working exclusively upon it and excluding the consideration of alternatives. This pursuit might lead to certain empirical success and the theory may explain some observations that were previously mysterious. Such success will reinforce the commitment to the theory. But, Feyerabend observed, often alternative facts only come about by the consideration of alternative theories under differing methodological rules; the history of science is replete with revolutions that only occurred because conventional approaches were ignored and novel approaches adopted.

If, however, our successful scientists justify their refusal to consider alternative theories because of the reputed success of their theory, then this refusal, Feyerabend claimed, ‘will result in the elimination of potentially refuting facts as well’.[51] This, too, is a situation that is common in science; inconvenient facts that do not conform to established theories are often sidelined or ignored. Kuhn[52] saw these inconvenient facts as potentially revolutionary, but Feyerabend was concerned about the dogmatic potential of ignoring them. For if one is resolute in creating conditions that are favourable for orthodox theories and unfavourable to unorthodoxy, two things will happen. First, inconvenient facts will become inaccessible simply because they are not pursued. Secondly, it will appear that ‘all’ the [selected] evidence points with ‘merciless’ definiteness to the conclusion that all processes in a theory’s domain are consistent with the given theory.

Feyerabend went further, claiming that the ‘appearance of success cannot in the least be regarded as a sign of truth and correspondence with nature’ (his italics). He suspected that such appearance or the absence of major difficulties might be the result of a decrease in empirical content brought about by the elimination of alternatives. If one refuses to consider alternative theories, and focuses only upon the evidence that suits one’s particular theory, then of course it will seem that one’s approach is comprehensive and exhaustive. But this is, to coin a phrase, an ‘illusion’.

Consider this observation in the context of a cognitive science that insists upon reducing the human personality to rule-based, structured systems only and rejects any aspect of human personality that doesn’t fit into these systems. This will result in a picture of the human being as, essentially, an automaton, because rule-based systems (nomological machines) are by their nature strongly deterministic (indeed, according to some this is the only ‘scientific’ way of thinking).[53] I question the desirability of reducing human beings in this way, especially if we accept Feyerabend’s point as valid.

Feyerabend also thought that these tendencies should and must be resisted because they block progress in science and can lead to oppressive attitudes. His solution of ‘anything goes’ was far too radical for many, and has been fairly criticized in some of its aspects,[54] but his basic point remains sound. Assuming that one’s (scientific) models reveal some aspect of the universe comprehensively and exhaustively is actually rather pernicious, and this seems doubly true for claims about human nature.

Conclusion

No philosophy can ever be anything but a summary sketch, a picture of the world in abridgment, a foreshortened bird’s-eye view of the perspective of events. And the first thing to notice is this, that the only material we have at our disposal for making a picture of the whole world is supplied by the various portions of that world of which we have already had experience. We can invent no new forms of conception, applicable to the whole exclusively, and not suggested originally by the parts. All philosophers, accordingly, have conceived of the whole world after the analogy of some particular feature of it which has particularly captivated their attention.[55]

Thus William James outlines a strong reason for epistemological pluralism and for resisting calls to settle upon one particular philosophy, approach or metaphysic to ‘explain’ the world. We end this chapter where we began: with the conclusion that our theories about the world are rather more restricted than they are often imagined to be. They are partial, applicable to restricted circumstances, and even if they reflect reality in some sense, they reflect local rather than universal truths. The preceding brief survey has shown that:

(1) The general trend of epistemology in the twentieth century was away from the universal and towards particular and localized forms of explanation.

(2) Despite resolute efforts to demolish hidden entities, they remain useful in some contexts.

(3) Laws that were previously supposed to be universal are in fact applicable only in ceteris paribus conditions, rendering local forms of realism more plausible than universal.

(4) We have no good reason to suppose that all phenomena in the universe are generated by nomological or fully deterministic systems. Human behaviour in particular seems partially or even poorly understandable in these terms.

(5) We have no reason to think that scientific models are anything like exhaustive descriptions, and if we want to make progress, we have every reason to pursue alternative methodologies and theories to those currently available.

All this might seem tangential to questions of consciousness and the mind, but we have seen that the assumption that any theory of the mind and consciousness must fit within ‘universal’ physical laws remains a widely expressed sentiment, even amongst non-reductive physicalists; see the quotation from Searle in the introduction. The thrust of his statement is that since ‘science’ has most of the universe sewn up, why can’t we get consciousness to fit?

But if we assume that even our best theories are not universal and exhaustive, but are restricted and parochial, then the contradictions noted by Searle between a ‘fields and forces’ universe and intentional, conscious human beings become far less troubling. It is simply that even our most general theories about the universe are far more fragmentary and limited than is generally appreciated. In short, the cosmos is quite wide enough for both fields and forces and conscious beings, and an immense number of other things besides. To progress, we must be bold in our thinking and resistant to epistemological dogmatism.

1 Encyclopedia Britannica, 1973 ed.

2 Elsasser, 1998, p. 6.

3 en.wikipedia.org/wiki/Epistemological_pluralism accessed on 16/06/10.

4 See discussion in Encyclopedia Britannica, 1973 ed.

5 Rorty, 1979/2009; Feyerabend, 1975.

6 Cartwright, 1999; Dupré, 1993.

7 Feyerabend, 1975, p. 20.

8 James, 1909.

9 Encyclopedia Britannica, 1973 ed.

10 Rorty, 1979/2009.

11 Rorty, 1979/2009.

12 Rorty, 1979/2009, p. 165.

13 Rorty, 1979/2009, p. 177.

14 Tallis, 2004.

15 Metzinger, 2009; Zeki, 1999; see also critical comments in Bennett & Hacker, 2003.

16 Although Rorty classes private or subjective experiences as ‘hidden’, I am leaving this issue for a later chapter. This is because I see the question of whether subjective, conscious experiences can be termed as private as distinct from whether, for example, human behaviour requires the presence of a hidden or Platonic ‘mind’. In the first case, we are trying to determine how best to understand, accommodate or classify direct personal experience and in the second, we are asking whether a distinct entity that no one can see or directly observe is necessary to explain said subjective experiences.

17 Penrose, 2004.

18 Wittgenstein, 1953.

19 Biletzki & Matar, 2010. Web page.

20 Although it would be fair to say that, as far as minds go, the Ryleans have the upper hand, because in cognitive science, ‘mind’ is supposed to be decomposable to function. The jury is still out on consciousness.

21 Popper, 1959.

22 Chalmers, 1999. Chapter fifteen contains a summary of the realist and anti-realist debate.

23 See, for example, Dawkins, 2004.

24 Midgley, 1992, p. 131.

25 Chalmers, 1999, p. 245.

26 Gleick, 1987.

27 Chomsky, 2003.

28 Pinker, 1998; 2002; Fodor, 1983.

29 Braude, 2002.

30 Cartwright, 1999.

31 Cartwright, 1999, p. 25.

32 Cartwright, 1999.

33 Feyerabend, 1975.

34 Merriam-Webster online dictionary, www.merriam-webster.com/dictionary/nomological accessed on 22/06/10.

36 Cartwright, 1999, discussing Newton’s Principia.

37 Cartwright, 1999, pp. 52–3.

38 Ryle, 1949.

39 Cartwright, 1999.

40 Wegner, 2002.

41 See also Dupré, 2001.

42 Cartwright, 1999, p. 9.

43 Turing, 1950.

44 Gardner, 1985.

45 Bauer, 2001.

46 Weizenbaum, 1984.

47 Blackmore, 1997, p. 40.

48 O’Shea, 2008, p. 12.

49 Huxley, 1947, pp. 28–9.

50 Feyerabend, 1975; 1978.

51 Feyerabend, 1975, p. 42.

52 Kuhn, 1962/1996.

53 Wegner, 2002; Metzinger, 2009.

54 Chalmers, 1999.

55 James, 1909, ebook on Project Gutenberg, http://www.gutenberg.org/cache/epub/11984/pg11984.html accessed on 23/06/10.