9. Some Theories of Consciousness

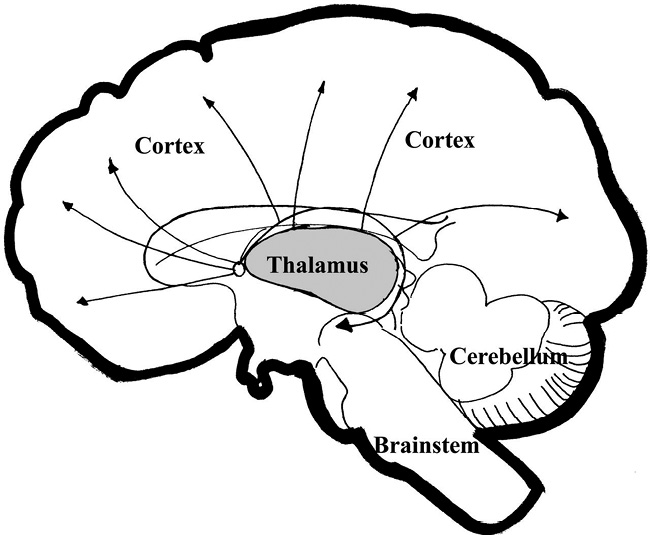

Here we will examine a selection of current Western theories of consciousness, all but one of which are propounded within conventional science. The consensus in mainstream cognitive science is that novel science is not needed to explain conscious experiences, and that what we do not understand now will eventually be understood in physical terms. This conclusion is supported by significant amounts of evidence linking waking awareness to activity in the thalamocortical system (Figure 1), which constitutes most of the mammalian brain.[1] This justifies Koch and Mormann’s definition of consciousness as ‘a puzzling, state-dependent property of certain types of complex, biological, adaptive, and highly interconnected systems’.[2] In Kuhn’s terms, the hunt for consciousness is seen as part of a ‘mopping up’ operation that can be handled by conventional science.[3]

But Kuhn also spoke of the ‘essential tension’ between explanatory frames within conventional science, and those without.[4] In cursory surveys of mainstream literature, these alternative accounts are generally conspicuous by their absence; one looks in vain for extended discussions of quantum theories of consciousness in Nature or Science. Where such theories are mentioned, they tend to be raised to be dismissed.[5] The only alternative account I have seen widely discussed within cognitive science is the quantum gravity theory proposed by Penrose and Hameroff, and this is probably because of the high academic status of the former author.

This is potentially a problem because, despite claims that general theoretical approaches can be disproved by significant internal problems or novel empirical findings, this is often not enough. Both Kuhn and Polanyi observed how good adherents of established theories often become at ignoring or explaining away problems or anomalous observations via ad hoc hypotheses, and we have already encountered Feyerabend’s warnings about the consequences of sticking to a theory no matter what.[6] The problem is that such a slavish adherence can result in a theoretical system becoming self-confirmatory, rather as in astrology. In addition, one often needs alternative traditions as comparisons with dominant ones; Feyerabend notes numerous instances where the comparison, contrast and clashes of these rival systems can reveal the strengths and weaknesses of both. In the current chapter, therefore, I will examine the ‘quantum’ theory of consciousness of Henry Stapp.

Figure 1. Highly Simplified Section of the Brain Showing Thalamocortical Connections. Conscious awareness seems closely linked to the thalamus and the cortex, which are closely interlinked via looping neuronal pathways. The cerebellum is not thought to support consciousness. Simplified from Mormann & Koch, 2007.

First, I will give an overview of some conventional theories. The purpose here is not an exhaustive examination, but a search for general patterns and themes within these theories, which are generally framed within the information-processing and neurobiological paradigms. ‘Consciousness’ here tends to be defined either as the contents of consciousness or as synonymous with ‘conscious awareness’ or arousal;[7] see Damasio’s definition of consciousness as ‘an organism’s awareness of its own self and surroundings’.[8] Attention tends to be seen as closely linked to, but distinct from, consciousness; Koch and Tsuchiya speculate that the two have separate mechanisms.[9] Consciousness is perceived as basically mechanistic or functional and segmentable into sub-components, in line with the Cartesian method.

The work of Christof Koch exemplifies this approach. Koch is confident that the way forward for studying consciousness is to proceed with established experimental approaches and to stop worrying about philosophical problems.[10] This view is shared by at least one reviewer of his work, who comments that Koch has already ‘ascended to Base Camp 2 on his quest to the summit’.[11] Since his approach is almost exclusively neurobiological and reductionist, both Koch and his reviewer can be regarded as having a positivist outlook, or a view that knowledge should be based only upon direct sensory appearance and experimentation.[12] A common accompaniment to this outlook is a high level of confidence that metaphysical and theoretical worries about the universe will eventually fall to empirical research.

Koch is by no means alone, as much of conventional cognitive science is committed to mechanistic, specifically Cartesian, explanations. For example, in his survey of the ‘mosaic unity’ of neuroscience, Craver embraces a form of pluralism but formulates this in terms of multiple levels of mechanisms, invoking a ‘causal-mechanical model of constitutive explanation’.[13] He does this to offer an alternative to classical reductionism, which still predominates in much of neuroscience, and to revise the approach that attempts to analyse sections of the nervous system in terms of specific functions. The result is a considerably more liberal and pluralistic approach to causation within the brain, which includes discussion of the boundary conditions of causative models. However, even this liberal approach remains committed to a more general form of Cartesian reductionism, as are the conventional approaches outlined below. Therefore, mechanism marks a boundary condition of conventional science within which, it is assumed, any theory of consciousness must fit.

Underlying Mechanisms: Consciousness Within Conventional Science

The following is a brief survey of some proposed mechanisms underlying conscious states. As noted above, many of them focus primarily on conscious awareness, although qualia and conscious contents may also be considered. The theories described here presuppose that neural mechanisms, of various degrees of specificity, underlie subjective experiences. Most, but not all, of these theories are rooted in the overarching paradigms of information-processing, computation and/or dynamical systems and cybernetics. These theories attempt to describe the specific brain-functions by which conscious experiences arise. They share some common features, but can also differ significantly in terms of emphasis. This is partly because of the lack of a clear definition of consciousness.

Seth makes some important general observations about these theories. Firstly, he observes, models of consciousness should be distinguished from neural correlates of consciousness. Neural correlates of consciousness discovered during the examination of the brain do not by themselves provide causal theories about consciousness. Just because a part of the brain is active when one thinks about apples, one is not entitled to conclude that the active part is an ‘apples centre’. There could be any number of reasons why the said correlation between subjective thoughts and the activation of a brain region has occurred.

A theory of consciousness, ideally, would give us a good idea of why the phenomenological awareness of an ‘apple’ arises when a given brain region is activated. What those within conventional science are after is a theory that will allow us to explain exactly how a conscious event of this sort can be produced by a neural event. An identity is conventionally assumed between said event and phenomenological experience. U.T. Place made this clear by comparing it with the case where we say that lightning is a motion of electric charges. He pointed out that no matter how much we scrutinize the lightning, we will never be able to observe the electric charges. Place:

… just as the operations for determining the nature of one’s state of consciousness are radically different from those involved in determining the nature of one’s brain processes, so the operations for determining the occurrence of lightning are radically different from those involved in determining the occurrence of a motion of electric charges.[14]

In the case of lightning, scientific theory is detailed and predictive enough to tell us with some confidence that lightning can be described in terms of electric charges. Place assumed the same to be true of consciousness and neural states; but in fact, a detailed and widely accepted theory of just how the one ‘is’ or becomes the other must wait for a theory of consciousness. I would note in this context that a theory of conscious attention would by itself be insufficient for this purpose because it would not really tackle how physical states are related to conscious mental states. All it would tell us is why we happen to be aware of some events and not others, not why we experience them in the way that we at least seem to.

Conventional models of consciousness attempt to provide an understanding of the causal relationship between neural correlates and consciousness and how we come to have phenomenological experience in the first place. Reports of neural correlates of consciousness have become commonplace in recent years, due to the advent of neuroimaging. A large number of observations have been made that suggest a close relation between patterns of blood flow and metabolism observed in the brain and many conscious experiences. For example, an area of the extrastriate cortex, the fusiform face area, reacts at least twice as strongly to faces as to classes of non-face stimuli such as objects, hands and houses, whilst another region, the parahippocampal place area (PPA), responds strongly to images of places including houses but only weakly when the subjects are presented with images of faces.[15] Kanwisher concludes that ‘… these findings show impressive correlations between the ability to identify an object, letter, or word, and the strength of the neural signal in the relevant cortical area’.[16] An ideal theory might, for example, be able to predict what we were thinking about if we observed a particular pattern of activity in the brain; hence the dreams of a ‘mind-reading’ machine or cerebroscope, to which we will return in the next chapter.

The second important observation that Seth makes is that ‘no single model of consciousness appears sufficient to account fully for the multidimensional properties of conscious experience’.[17] From the pluralist’s viewpoint, one can make the case that no single model ever will, and this suspicion grows if we survey the models in a little more detail. The following brief and superficial survey is also based upon Seth’s review article.

Global Workspace Theories

‘Global workspace’ theories attempt to reconcile the apparent unity of conscious experience with the lack of unifying mechanisms in the brain (and hence the binding problem). In his theory, Baars[18] introduced the idea of a ‘theatre of consciousness’. In Baars’ view, consciousness/attention is like a bright spot on the stage of a darkened theatre. The actors in the bright spot get all the attention, whilst we might be only vaguely conscious of those on the periphery (William James called this the ‘fringe’).[19] This can be related to observations in psychology that our attention or ‘working memory’ is limited to a select number of items. This view is virtually identical to Freud and Jung’s theory that consciousness could be viewed as a searchlight,[20] where experiences or ‘mental events’ move into or out of the searchlight.

The mechanisms underlying this ‘workspace’ have been described in various ways, but the general idea is that patterns of neurons are activated by sensory information and work in concert, simultaneously inhibiting weaker patterns of input. Thus attention and decision-making are associated with a global pattern of neural excitation arising and inhibiting competing patterns in the cerebral cortex.[21] Neurons are thought to be synchronized by oscillatory electrical activity in the ‘gamma’ range of 30–70 Hz.
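The competitive dynamic described above can be illustrated with a toy sketch. It is not a model taken from Baars, Crick or Koch: the function name `compete`, the update rule and the parameter values are all assumptions chosen purely for illustration. Each pattern slightly reinforces itself and is suppressed in proportion to the summed activity of its rivals, so that the pattern with the strongest initial input comes to dominate.

```python
def compete(inputs, inhibition=0.2, self_excitation=0.1, steps=50):
    """Toy winner-take-all competition between activity patterns.

    All names and parameter values here are hypothetical; this is an
    illustration of mutual inhibition, not a published neural model.
    """
    activity = list(inputs)
    for _ in range(steps):
        total = sum(activity)
        updated = []
        for a in activity:
            rivals = total - a  # summed activity of the competing patterns
            new = a + self_excitation * a - inhibition * rivals
            # Keep activity within the range [0, 1].
            updated.append(min(1.0, max(0.0, new)))
        activity = updated
    return activity


# Three patterns with similar initial strength; the second is slightly ahead.
result = compete([0.5, 0.6, 0.4])
print(result)  # prints [0.0, 1.0, 0.0]
```

With these hypothetical values the second pattern, which starts only slightly ahead, saturates while the others fall silent. Real neural dynamics are of course far richer than this sketch suggests.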

Crick and Koch, who utilize a similar idea, emphasize the role of coalitions of interconnected neurons acting together to increase the activity of their fellow members.[22] The proposal is that different coalitions compete with each other, and the ‘winning’ set constitutes that of which we are the most conscious. Koch & Crick:

Coalitions can vary both in size and character. For example, a coalition produced by visual imagination (with one’s eyes closed) may be less widespread than a coalition produced by a vivid and sustained visual input from the environment … Coalitions in dreams may be somewhat different from waking ones.[23]

One interesting feature of the analogies Koch and Crick use to describe this process is that they tend to palm off intentional language (in this case, a democratic analogy) onto parts of the brain. So, for example, they liken attention to the efforts of journalists and pollsters to get the system as a whole to focus on certain issues. This, of course, is meant metaphorically, but it does highlight the problem of reducing intentional phenomena to mechanistic explanations. The general idea, à la the intentional stance, is that apparently intentional phenomena can be at some level discharged into the mechanical, but one often ends up using intentional metaphors when discussing supposedly mechanical or purely functional phenomena. Calling genes ‘selfish’ is probably the classic example of this, but here it is extended to the brain.[24]

Crick and Koch’s framework has another significant element that should be mentioned. They cite the extensive evidence for the existence of what they term ‘explicit representations’ in various parts of the cortex. This refers to sets of neurons that respond as ‘detectors’ for visual features, for which there is plenty of evidence. They also cite cases in which damage to those parts of the brain prevents subjects from being consciously aware of the perceptual feature to which the detectors were attuned. Two clinical examples are achromatopsia, or loss of colour perception, and akinetopsia, or loss of motion perception, both of which are associated with damage to the visual cortex.[25] This constitutes clear evidence that certain kinds of neural structure are needed to support certain kinds of conscious experience.

As I will show in the next chapter, this sort of approach is proving fruitful in uncovering the specific sorts of neural mechanisms that are closely associated with various specific aspects of sensory experience. In particular, they reveal that early, purely sensory experiences are tied with more-or-less specifiable neural structures. This is not really surprising; just as one needs eyes to see, so one probably needs a specific piece of intact neural ‘machinery’ at the other end to be able to experience seeing anything.

However, because Crick and Koch share a low opinion of philosophy, their approaches tend to paper over the significant issue of phenomenology. In denying metaphysics, one often ends up using a covert form of naturalism by default, and assuming that identity theory must be true; one saw a similar situation in the previous chapter, in Aleksander’s work. The thrust of Koch’s work implies that once specific mechanisms have been resolved, many of these problems will disappear or become irrelevant, and if one defines consciousness as conscious awareness only, then the goal of such a theory can only really be a long list of neural wiring diagrams and functional maps that describe when this awareness is present and when it is absent. But this leaves awkward questions unanswered. Searle, criticizing an earlier book of Francis Crick’s, put it this way: ‘How, to put it naïvely, does the brain get us over the hump from electrochemistry to feeling?’[26] I find it hard to call this a non-problem.

Dynamic Core

‘Dynamic core’ theories also utilize the idea of neural competition, and are based upon Gerald Edelman’s concept of ‘Neural Darwinism’. This is the theory that brain development and function can be understood in Darwinian terms, with groups of neurons competing amongst each other for supremacy. When we experience any particular conscious scene, Edelman argues, we make a highly ‘informative’ discrimination, because conscious scenes are both integrated and experienced as a whole. Qualia are supposed to correspond to these unique scenes, and come about via functional clusters in the thalamocortical system, where neural processes result in a series of different but individually stable states.[27] This theory is no doubt helpful in suggesting lines of research that may enrich our view of the neuroscience of everyday experience, but as with Crick and Koch’s theory, the basic philosophical problems also get underemphasized.

Information Integration

By contrast, ‘information integration’ theories propose that consciousness ‘is’ the ability of the brain to integrate information. Like the dynamic core, these models also suggest that the appearance of one scene in consciousness also means that a potentially vast number of other scenes/neural patterns have not been selected. This has echoes of Elsasser’s proposals that whole living systems are capable of selecting a pattern out of a vast range of possible alternatives, based upon past selections. It is also reasonably compatible with Ho’s depiction of the organism, and even has echoes of Myers’ latent or unexpressed potentials, except that the prevailing assumption is that this can be achieved mechanistically.

‘Thalamocortical rhythms’ theory proposes that synchronous rhythms in thalamocortical loops, in the gamma band of frequencies, ‘create’ the conscious state.[28] It is also theorized that the brainstem plays some role in modulating the ‘thalamocortical resonance’. The content of consciousness is provided by sensory input during waking and by intrinsic inputs during dreaming. The model has been proposed to account for the binding problem, and also has relevance to James’s observation of ‘pulses of consciousness’.[29] As stated above, the currently predominant view, backed up by a significant amount of evidence, links waking awareness to activation in the thalamocortical areas.

Field Theories

A variant on the ‘workspace’ models is the group of theories based upon field theory. John, for example, theorizes that a resonating electrical field allows unified experience to occur.[30] He proposes that the content of consciousness becomes dominated by apperception, or the integration of momentary perception of the internal and external environment with episodic and working memories. This is activated by associative reactions to a given perception, so the present gets interpreted in terms of the recent and distant past.[31] Like Baars and others, he assumes that ‘information’ gets ‘encoded’ via temporal patterns of synchronized firing. His theory is thus a variant on the workspace theories, and, as we saw, also congruent with Mae-Wan Ho’s theory that supposes conscious awareness to arise from more general capacities of the organism, and to be distributed throughout the body. This is because these neural patterns are related to other kinds of phase correlations that regulate more general biological processes.[32]

Consciousness as Virtual Machine

Finally, we come to a group of theories most directly inspired by computer science. This school sees consciousness as a sort of ‘virtual machine’, along the lines of simulator software, and the brain as a place for self-modelling and self-simulation.[33] Revonsuo sees the world we experience as a virtual-reality simulation in the brain. He suggests that dreaming might be a time for ‘risk-free simulation’ of dangerous scenarios.[34] The ‘self’, too, is thought to be part of the simulation, representing the body. This sort of theory is also popular with those studying Out of Body Experiences, an ‘altered state’ where the experiencer appears to stand or float outside of their body. According to these theories, during periods of sensory deprivation or accident the brain tries to reconstruct this ‘virtual-reality’ image of the world, placing ‘self’ outside where it is normally located.[35] I will discuss Thomas Metzinger’s variant of this sort of theory in chapter fourteen, in the context of the ‘self’.

Some Limitations of Conventional Models

Because the above models operate within the ‘game-rules’ of conventional science, they tend to share a number of limitations:

• They paper over, ignore, or treat as unproblematic the ‘gap’ between phenomenological accounts or qualia and the corresponding neural mechanisms.

• They tend to assume, implicitly or explicitly, that consciousness is a passive product of the underlying mechanisms.

• As previously stated, they often assume that specifiable mechanisms of consciousness exist and can be understood comprehensively by the Cartesian method of breaking them into subsystems.

• They tend to focus primarily on waking states and awareness. Although some consideration is given to dreams, comas and some altered states, the rational-analytical, everyday Western state of consciousness is implicitly the gold standard.

• They ignore completely or else dismiss parapsychological data that prima facie refutes some underlying assumptions.

Altered states tend to be accommodated only when a particular theory provides an obvious means for ‘explaining’ them. For example, ‘virtual-reality’ metaphors lend themselves to phenomena like lucid dreaming and Out of Body Experiences, in which a person experiences an unusual hallucinatory or illusory environment in which they travel (setting aside for the moment apparently veridical data from Out of Body Experiences). In the metaphor, the brain acts as a kind of simulation generator of this environment.

Parapsychological data is rarely considered during theory formulation, or where considered, dismissed.[36] There are both good and bad reasons for this, and I will discuss the difficult problem of the inclusion or otherwise of parapsychological and other controversial phenomena in a later chapter. I would note that parapsychological phenomena often seem associated with various forms of dissociation, extreme states of consciousness and sleep onset, and not with everyday conscious awareness.[37] It is sociologically curious, to say the least, that taboo phenomena and taboo states of mind seem to go hand in hand.

I think it is fair to say that, often for reasons of convenience, most experiments on consciousness occur in a waking state. Although other states of consciousness, such as dreaming, comas, meditation and hypnosis, have certainly not been ignored, the primary discussions often centre on consciousness as experienced every day.[38] In my view, this implies that theories of consciousness based mostly upon observations in this state can, strictly speaking, only be said to apply within it. So waking consciousness could be said to be a boundary condition, in Cartwright’s terms, beyond which the theory may not be wholly valid, or may even be invalid.

This demarcation seems to me important, because without it we might lapse into what Tart terms ‘normocentric’ thinking: the assumption that the ‘normal’, rational-analytical state of consciousness is the only one suitable for a sensible understanding of the universe, or of consciousness itself.[39] This is prejudicial against both other states of consciousness and other cultures, because humans are capable of experiencing a huge variety of very different and often very strange states of consciousness, not all of which may be accessible within the confines of our culture. It also mostly ignores the abilities of exceptional people, and those with exceptional levels of training. And even within our boundaries, we should not forget that there is a great variation in conscious states over the course of a day.[40]

Limits to Mechanistic Reductionism

In order to work, mechanistic theories have to redefine consciousness in quantitative and/or narrow ways that end up privileging the proposed ‘neural mechanisms’ over qualitative states. This is often done in quite subtle ways. For example, in Thomas Metzinger’s theory, qualia get reduced to a maximally determinate value (i.e. the specific shade of a colour like green), and Metzinger quotes with approval Dennett’s dismissal of qualia, although he admits that the ‘Ineffability Problem is a serious challenge for a scientific theory of consciousness’.[41] This reduction allows him to discuss consciousness in mechanistic terms, but in my view, such a move erases the very phenomenon that these theories aim to explain. This may be inevitable; as Levine puts it, ‘if one attempts to understand life, including human life, in terms of pure quantities, one must eliminate the human soul’.[42]

This may well be a fatal stumbling block in the development of any theory of consciousness. The prevailing assumption seems to be that one can easily separate quantifiable causal powers and unquantifiable ‘qualitative aspects’, and comprehensively explain human behaviour in terms of quantifiable effects only.[43] This is the justification for thinking zombies are possible. Zombies are things that look and behave just like human beings, but lack the ‘inner light’ of consciousness.[44] The main reason why they are considered plausible even by those who acknowledge subjective consciousness is the tacit assumption that all causes are quantifiable and in principle at least separable from the qualitative aspects of consciousness. The qualitative aspects, in this view, either do not really exist or float off as acausal epiphenomena. I personally strongly doubt this, but if I am correct, this creates a significant dilemma, because currently predominant approaches are simply not geared towards the qualitative, and depend upon the quantifiable only.

There may also be significant theoretical limits to the extent to which the contents of consciousness can be resolved into localized, underlying ‘mechanisms’. Stephen Braude suggests that an underlying assumption of both cognitive science and physiology, that the structures of the brain display their function, may be false. He begins with the observation that we tend to assume that learning and memorizing always result in specific structural modifications in the brain. On this assumption, memory recall occurs because of an activation of this modification, or memory trace, in our brain.[45] Braude argues that this assumption, dubbed the Principle of the Internal Mechanism, is wrong.

He believes it is mistaken to assume the existence of a set of necessary and sufficient conditions for a given thought to arise. One reason is that any given brain structure is functionally ambiguous; it might display an identical state for a range of different mental states. But the potential power of the Internal Mechanism theory rests upon the supposed mechanism being unambiguously linked to a specific mental state (e.g. mental state M and only M is linked to brain state b and only b). However, if the brain state is potentially tied to many mental states, then the idea of a specific trace has no explanatory function.

Secondly, even memories about specific events or people come in such variety that it is often a problem to assign a specific type label to them. A thought of a certain ‘kind’ may have any number of forms. ‘Christmas’ might be identified with turkeys, Christmas trees, the smell of pine, Santa, food poisoning, or the fact that last Boxing Day the cat had a bladder infection and it was hard to find a vet during holiday time. There is no reason to suppose any specific ‘token’ linking any of these memories, as there is no one form for a ‘Christmas’ mental state and no general feature or set of features that remembering Christmas must have.

Braude argues that if we do posit the existence of an underlying physical trace for a specific memory, then we need to invoke a Platonic ‘token’ to link the mental state and the underlying brain mechanism. Some contemporary theories invoke just such a ‘Platonic token’, except that it is rationalized as a ‘stored representation’; for example, in discussing memory, Kosslyn acknowledges that much of our stored knowledge is ‘non-modality specific’ and that we can access it by multiple routes. Recognition is supposed to occur because of a ‘stored unimodal representation’,[46] which is effectively evoking a Platonic token to ‘tie’ the memories together. But, Braude observes, this sort of theory means a return to the assumption that given classes of memories have some ‘token’ or ‘essence’ that makes them what they are.

This problem persists even if we assume that there are different kinds of memory traces for remembering kinds of a specific class of memory. Braude argues that this leads to an endless regress, because subsets of remembering are no more linked by a relevant common property than single instances. Again, we would need these memories to be ‘tagged’ with some Platonic token to be usefully linked to a specific brain state; but we have no reason to suppose that memories are so tagged.

Braude’s arguments seem to me quite extreme, because he suggests that such theoretical difficulties are sufficient to dismiss most of cognitive science. But theoretical arguments cannot by themselves be enough, and his seem at least partly contradicted by the neural correlates, which do suggest a close association between features of the nervous system and subjective experience, and by evidence that reasonably discrete, albeit distributed, ‘representations’ do seem to occur in the brain.[47] (In fairness, Braude does exclude basic sensory experiences from his discussion, and this is quite often where the closest correlates are found.) Braude could be seen as sitting at the opposite pole to Koch, who expects to bury theory under empirical data. Both positions have some validity, but in my view, both are overstated.

Beyond the Mainstream: A Quantum Model of Consciousness

A number of different individuals, dissatisfied with purely neurobiological theories of consciousness, have proposed theories of consciousness that involve quantum mechanics. The highest profile of such theories was the one proposed by Roger Penrose and Stuart Hameroff.[48] Their theory has problems because it relies upon a property called ‘quantum coherence’ extending over large parts of the brain, which looks difficult to achieve in such a wet, warm environment. Although the debates over this have proved interesting and instructive, the problems are sufficiently acute that I propose to examine another theory that seems in some respects more viable.

Stapp’s Theory of Process Dualism

Henry Stapp begins by arguing that one needs quantum theory to understand brain functions properly, rejecting the common notion that classical determinism holds in the brain. Classical physics specifies bottom-up causation only, and so one should be able to predict the behaviour of the brain as a whole from its microscopic constituents. But there are limits to this approach, partly because of the unfathomable complexity of the brain, which can be revealed by some basic statistics. The total number of glial cells and neurons in the neocortex is around 49.3 billion in females and 65.2 billion in males.[49] Each one of the 10¹¹ (one hundred billion) neurons has on average 7,000 synaptic connections to other neurons, making on the order of 10¹⁴ connections.[50] This problem is compounded, says Stapp, by a feature of quantum theory known as the uncertainty principle, which limits knowledge of microscopic events. He concludes that we cannot justify the claim that deterministic behaviour actually holds at either the microscopic or macroscopic level. As a result classical physics can, at best, help us build an approximation of brain-states.
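As a rough check on the scale involved, the connection count can be worked through directly. The sketch below simply multiplies the two round figures quoted above (one hundred billion neurons, 7,000 connections each); the variable names are illustrative, and the inputs are textbook approximations rather than measured counts.

```python
# Round figures quoted in the text; both are approximations.
neurons = 10**11               # one hundred billion neurons
synapses_per_neuron = 7_000    # average synaptic connections per neuron

total_connections = neurons * synapses_per_neuron
print(f"{total_connections:.1e}")  # prints 7.0e+14
```

On these figures the product is 7 × 10¹⁴, which lies between 10¹⁴ and 10¹⁵, so the quoted total is best read as an order-of-magnitude estimate.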

Another problem is the interaction between quantum descriptions, which involve smears of probabilities produced by the uncertainty principle, and the description of a single, classically-described, macroscopic brain-state. In the latter case, and theoretically speaking, the quantum mechanical ‘smear’ of possibilities needs to be reduced to the single, classical, macroscopic state. To answer the question of how such a reduction might occur, Stapp focuses upon the nerve terminals (see Figure 1, chapter eight).[51]

Every time a neuron fires, an electrochemical impulse travels along the length of the nerve fibre to a nerve terminal. When the impulse reaches the nerve terminal it opens tiny ion channels, through which calcium ions flow to trigger the release of neurotransmitters from the vesicles. The released neurotransmitters diffuse across the gap and influence whether the next neuron fires. The ion channels are small enough for a kind of quantum effect to occur, causing a ‘probability cloud’ to fan out over a small region of about 50 nanometres.

This effect happens many times, over many channels all over the brain and it means that the actions at nerve terminals, and for various reasons the whole brain, cannot be understood entirely in classical terms. Stapp: ‘According to quantum theory, the state of the brain can become a cloudlike quantum mixture of many classically describable brain states.’[52]

This has significant consequences for those who hope to describe brain-states in terms of classical physics. Stapp points out that since the brain is a highly complex, nonlinear system with a very sensitive dependence on unstable elements, it is neither reasonable to suppose nor possible to demonstrate that this sort of situation will lead to a nearly classically describable brain-state. The end result is, rather, a mixture of several alternative classically describable brain-states.

Stapp then brings in a formalism from orthodox quantum theory, invented by John von Neumann. This is an extension of the Copenhagen interpretation that formalizes what are termed the ‘probing actions’ of scientists as a separate process from the classically-describable world. For example, a scientist might take a measurement of radioactivity at a given site. In this formalism, the system being measured can be described in deterministic terms, but the act of measuring cannot. Stapp observes that probing actions of this kind are performed not only by scientists but by people all the time. Such actions are composed of conscious intentions and linked physical actions. Von Neumann called this probing action ‘process 1’ and the mechanical process being probed he called ‘process 2’.[53]
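The distinction between the two processes can be written compactly in the density-matrix notation commonly used for von Neumann’s formalism. What follows is a sketch in standard textbook notation, not a quotation from Stapp or von Neumann; the symbols ρ (the state of the probed system), H (its Hamiltonian) and P (the projection operator corresponding to the ‘yes’ answer of a probing action) are introduced here purely for illustration.

```latex
% Process 2: deterministic (unitary) evolution of the probed system
\rho(t) = e^{-iHt/\hbar}\,\rho(0)\,e^{iHt/\hbar}

% Process 1: a probing action that poses the yes/no question P
\rho \;\longrightarrow\; P\rho P + (1-P)\,\rho\,(1-P)

% The answer 'yes' is then obtained with probability
p(\text{yes}) = \operatorname{Tr}(P\rho)
```

Nothing in the process 2 equation determines which question P is posed, or when; in this formalism that choice enters from outside the deterministic dynamics.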

This formalization can be interpreted as a violation of the closure principle, which assumes that such interventions cannot occur in mechanical (physical) systems. If we accept this interpretation, it would mean that Stapp’s theory lies outside the ‘game-rules’ accepted by most cognitive scientists. And yet the theory itself is within orthodox or conventional physics, and as Stapp has observed on several occasions, cognitive scientists are building models inside a world-picture that is thoroughly dated.

Stapp applies this process formalism to the brain, because he sees the reduction of the ‘smeared out’ quantum mixture of states as being achieved via a process 1 intervention, which selects from a smear of potential states generated by a mechanical process 2 evolution. ‘The choice involved in such an intervention seems to us to be influenced by consciously felt evaluations …’[54] Stapp sees this approach as offering a way of rescuing free will from the clockwork, mechanical universe. He also suggests that a form of mental causation may be possible via something termed the ‘quantum Zeno’ effect.

The quantum Zeno effect is a consequence of the dynamical rules of quantum theory. Suppose that a process 1 enquiry leads to a ‘yes’, and that it is followed by a rapid sequence of similar process 1 queries. In this case, the dynamical rules of quantum theory entail that the outcomes in the sequence will, with high probability, also be ‘yes’, despite strong physical forces that would otherwise cause the state to evolve differently. According to Stapp, the timings of these process 1 actions would be controlled by the agent’s ‘free choice’. This would imply, via the basic dynamical laws of quantum mechanics, ‘a potentially powerful effect of mental effort on the brain of the agent!’[55] Applying mental effort increases the rapidity of a sequence of mostly identical acts of intentionality, which goes on to produce the intended feedback. This sort of holding-in-place effect is the quantum Zeno effect.
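The dependence of this effect on the rapidity of the queries can be illustrated with a standard textbook toy model. The sketch below is not Stapp’s own calculation and makes no claim about real brain states: it assumes an idealized two-state system whose ‘yes’ state would, if left unprobed, rotate steadily towards the ‘no’ state, and it assumes that the queries are equally spaced, ideal yes/no measurements. The function name and parameters are hypothetical, chosen only for illustration.

```python
import math


def all_yes_probability(total_angle: float, n_queries: int) -> float:
    """Probability that every one of n equally spaced yes/no queries returns 'yes'.

    Toy-model assumption: between queries the state rotates away from the
    'yes' state by total_angle / n_queries radians, and each query then
    returns 'yes' with probability cos^2 of that small angle (Born rule).
    """
    if n_queries < 1:
        raise ValueError("n_queries must be at least 1")
    step = total_angle / n_queries
    # n independent 'yes' answers, each with probability cos(step)**2
    return math.cos(step) ** (2 * n_queries)


if __name__ == "__main__":
    # A total rotation of pi/2 would, if unobserved, turn 'yes' fully into 'no'.
    for n in (1, 10, 100, 1000):
        print(n, round(all_yes_probability(math.pi / 2, n), 4))
```

Under these assumptions the probability of an unbroken run of ‘yes’ answers rises towards 1 as the queries become more frequent, which is the holding-in-place behaviour described above. Whether anything like this idealized picture applies to the brain is precisely what Stapp’s critics dispute.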

The outcome of Stapp’s theory is very similar to William James’s idea of attention and pulses of thought.[56] Stapp also re-interprets a number of findings from psychology and neuropsychology in terms of this theory. This bridging is important, because it helps place the new theory in a conventional context.

On the whole, cognitive scientists have been far from impressed by this sort of approach, in part because Stapp’s theory amounts to a form of dualism. In a widely cited paper, Koch and Hepp gave a number of reasons why they believed that there was ‘little reason to appeal to quantum mechanics to explain higher brain functions, including consciousness’.[57] (1) In quantum formalisms, consciousness has only been a ‘place holder’, and has little relevance to the study of neural circuits; (2) large quantum systems are very difficult to analyse rigorously, except in highly idealized models; (3) brains obey quantum mechanics, but they do not seem to exploit any of its special features; (4) chemical transmission across the synaptic cleft and the generation of action potentials involve many thousands of ions and neurotransmitter molecules, coupled by diffusion or by the membrane potential that extends across tens of micrometres, and both processes will destroy any coherent quantum states; (5) massive parallel computation is adequate to account for consciousness. They conclude that ‘[i]t is far more likely that a material basis of consciousness can be understood within a purely neurobiological framework’.[58]

Of these points, (3) should bother us least. Paradigm-led conventional science often misses novel phenomena that its specific forms of research are not geared to detect. Kuhn noted that ‘Initially, only the anticipated and usual are experienced even under circumstances where anomaly is later to be observed’.[59] The chaotic oscillations of a simple pendulum went unnoticed for centuries before the arrival of chaos theory.[60] Novel approaches often require new research techniques, equipment and observational criteria. The issues often cannot be settled by a simple, single experiment, as Koch and Hepp also suggest.

Stapp’s reply to Koch and Hepp, which Nature declined to publish, was that their arguments missed the point. He pointed out that the von Neumann formalism explicitly brings consciousness into the dynamics and that ‘a conscious experience occurs and the state of the observed system is reduced to the part of itself that corresponds to that experience’.[61] He also claimed that although superpositions were mostly eliminated in the brain, this did not eliminate the need for process 1. Stapp suggests that by focusing on the elimination of macroscopic superpositions, which would disrupt both Penrose–Hameroff models and quantum computation, they did not address the core issue, which is the process 1 free choice.

Stapp’s response covers some but not all of Koch and Hepp’s objections. A key issue is probably point (2), the problem of rigorously analysing large-scale quantum states. Also problematic is the mapping of the interaction between classical and quantum models, which tends to be idiosyncratic even in far simpler, controlled experiments.[62] Stapp’s theory will also probably not be taken seriously until the wider issue of the role of quantum mechanics in biological systems generally is better understood.

Having said this, I think that Stapp’s application of von Neumann’s formulation to these problems is important. The main reason is that, even if he has the details wrong, he has shown that it is possible to violate the closure principle in a way that does not invoke anything like the verboten substance dualism. His dualism is epistemological and formal rather than ontological and substantial, and even if his specific application is inappropriate it indicates that it is at least possible to talk about the world in a formal way that is not wholly deterministic, and inclusive of an active agent. The importance of this development cannot be overstated.

Conclusion

Some of the conventional theories above form the basis of reasonably coherent programmes of neurobiological research, but none really addresses the deeper philosophical problems of consciousness. The conventional theories tend to cluster close to the ‘representational’ rather than ‘behaviourist’ pole of conventional thinking, mainly because they acknowledge consciousness as a problem. This is significant because it shows that the only way of accommodating consciousness in conventional terms is with some sort of representational approach.

The ‘quantum’ theories, which tend to be inadequately discussed in the mainstream, face similar problems although they do offer ways out of some of the strictures of conventional thinking; Stapp’s theory, which challenges strict determinism, being a salient example. But it is far from clear whether bringing in quantum mechanics deepens our understanding of why we have subjective experiences in the first place.

Despite these problems, the confidence that wholly conventional theories will prove adequate is considerable. Resolution is seen as merely a matter of time, research money and new techniques. This positivistic approach is justified by the growth of new technologies that allow us to peer into the brain and find correlates of conscious experience that are sometimes very striking indeed.

1 Mormann & Koch, 2007; see Granger & Hearn, 2007, for a description of the thalamocortical system.

2 Mormann & Koch, 2007. Web article.

3 Kuhn, 1996/1962.

4 Kuhn, 1996/1962.

5 See Koch & Hepp, 2006, and also a discussion in Hofstadter & Dennett, 1981.

6 Kuhn, 1996/1962; Polanyi, 1964; Feyerabend, 1975.

7 Mormann & Koch, 2007.

8 Damasio, 1999. Although he does acknowledge the importance of subjective experiences, and accepts that a theory of consciousness must accommodate them.

9 Tsuchiya & Koch, 2009.

10 Koch, 2004.

11 Martinez-Conde, 2004.

12 Daniels, 2005.

13 Craver, 2007, p. 107.

14 U.T. Place extract in Flew, 1964. Quote on p. 282; similar sentiments are voiced by the Churchlands, interview in Blackmore, 2005.

15 Kanwisher, 2001; original work in Allison et al., 1999; Ishai et al., 1999; Kanwisher, McDermott & Chun, 1997; McCarthy et al., 1997.

16 Kanwisher, 2001, p. 95. Although Harpaz, after surveying the data, claims that the ‘… FFA [fusiform face area] is not reproducible across individuals, but you can find face-sensitive patches in each individual and call them “the FFA”’—see Harpaz, 2009, Misunderstanding in Cognitive Brain Imaging: http://human-brain.org/imaging.html accessed on 08/06/11.

17 Seth, 2007.

18 Baars, 1988.

19 Varela & Shear, 1999.

20 Gauld, 1968.

21 Dehaene, Sergent & Changeux, 2003.

22 Crick & Koch, 2003.

23 Crick & Koch, 2003, p. 121.

24 Dawkins, 1976.

25 Crick & Koch, 2003.

26 Searle, 1997, p. 28, commenting on Crick, 1994.

27 Seth, 2007; Edelman & Tononi, 2000.

28 Llinás, Ribary, Contreras & Pedroarena, 1998; Seth, 2007.

29 See discussion in Kelly et al., 2007, p. 626.

30 John, 2001; 2005.

31 John, 2005.

32 Ho, 2008.

33 Sloman, 2009.

34 Revonsuo, 2005.

35 Blackmore, 1993; Metzinger, 2009.

36 For example, Metzinger, 2009, ignores apparent examples of ESP perception in OBEs, and Blackmore, 1982; 1993, finds various ways of dismissing—admittedly fairly rare but not non-existent—such examples in the OBE/Near-Death Experiences literature. See Kelly et al., 2007, for a critique of this dismissal.

37 Myers noted this long ago, and the investigation of dissociative and hypnotic phenomena was a major preoccupation of the early SPR. See Myers, 1903; Gauld, 1968; Crabtree, 1993.

38 Warren, 2008.

39 Tart, 1972.

40 See Warren, 2008, for an overview.

41 Metzinger, 2009, p. 51.

42 Levine, 2001, p. 263. This is a figurative rather than a literal statement.

43 Heil, 2004.

44 Blackmore, 2003.

45 Braude, 2002.

46 Kosslyn, 1999, p. 1284. See Kosslyn, 1994, for his theory of memory and visual processing.

47 See discussions in Carter, 2002; Kanwisher, 2001; Ishai et al., 1999; Crick & Koch, 2003.

48 Penrose, 1989; 1994; Hameroff, 2010.

49 Pelvig et al., 2008.

50 Drachman, 2005.

51 This explanation is based upon the discussion in Stapp, 2007, chapter four.

52 Stapp, 2007, p. 31.

53 Von Neumann, 1955/1932.

54 Stapp, 2007, p. 32.

55 Stapp, 2007, p. 36.

56 James, 1890.

57 Koch & Hepp, 2006, p. 612.

58 Koch & Hepp, 2006, p. 612.

59 Kuhn, 1996/1962, p. 64.

60 Gleick, 1987.

61 Stapp, 2009, p. 1.

62 See Cartwright, 1999, chapter nine.