From action to speech

Studies of the primate premotor cortex, including that of humans, and in particular of the so-called mirror system, suggest the existence of a dual hand/mouth motor command system involved in ingestion. This system may have been the platform on which a combined manual and vocal communication system was constructed. In support of this view, we present behavioral and TMS data showing that the execution and observation of transitive hand actions, such as grasping and bringing-to-the-mouth, influence mouth movements and the production of phonological units (i.e. syllables). Conversely, mouth postures and vocalizations influence the control of transitive actions. This hand/mouth motor command system also appears to play an important role in the development of speech in infants. Finally, we suggest that this system evolved into a system controlling words and symbolic gestures, and we propose that this system is located in Broca’s area.

INTRODUCTION

Gesture is a universal feature of human communication. In every culture, speakers produce gestures, although the extent and types of gestures produced vary. Some types of gestures are frequently produced together with speech (see Goldin-Meadow, 1999; Kendon, 2004; McNeill, 1992, 2000). Consider, for example, the expression of approval: while saying “OK,” we often form a circle by bringing the tips of the forefinger and thumb into contact, with the other fingers extended outward.

There are two alternative views of the relationship between gesture and speech. The first posits that gesture and speech are two different communication systems (Hadar et al., 1998; Krauss and Hadar, 1999; Levelt et al., 1985). According to this view, gesture acts as an auxiliary support when verbal expression is temporarily disrupted or word retrieval is difficult. The other view (Kendon, 2004; McNeill, 1992) posits that gesture and speech form a single communication system, since they are linked to the same thought processes even though they differ in modality of expression.

In line with the view of McNeill (1992) and Kendon (2004), we have hypothesized that manual gestures and speech share the same control circuit (Bernardis and Gentilucci, 2006; Gentilucci and Corballis, 2006; Gentilucci et al., 2006). This hypothesis can be supported by evidence that speech itself may be a gestural rather than an acoustic system, an idea captured by the motor theory of speech perception (Liberman et al., 1967) and by articulatory phonology (Browman and Goldstein, 1995). In this view, speech is regarded not as a system for producing sounds, but rather as one for producing articulatory gestures of the mouth. We will review neurophysiological and behavioral data supporting the idea that the circuit controlling gestures and speech evolved from a circuit involved in the control of arm and mouth movements related to ingestion. We suggest that both circuits contributed to the evolution of spoken language from a system of communication based on arm gestures (Gentilucci and Corballis, 2006).

NEURAL CIRCUITS

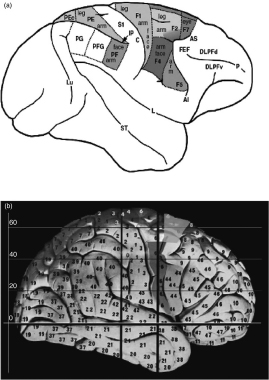

The neural circuits that are possible precursors of manual and oral communication are located in the monkey premotor and parietal cortices. On the basis of cytoarchitectonic and histochemical data, Matelli and colleagues (Matelli et al., 1985, 1991) parceled the agranular frontal cortex of the macaque monkey into the areas shown in Figure 3.1a. Area F1 corresponds basically to Brodmann’s area 4 (primary motor cortex), and the other areas correspond to subdivisions of Brodmann’s area 6. Areas F2 and F7, which lie in the superior part of area 6, are referred to as “dorsal premotor cortex,” whereas areas F4 and F5, which lie in inferior area 6, are referred to as “ventral premotor cortex” (Matelli and Luppino, 2000). Neurophysiological studies have shown that area F5, which occupies the most rostral part of the ventral premotor cortex, contains a motor representation of distal movements (Hepp-Reymond et al., 1994; Kurata and Tanji, 1986; Rizzolatti et al., 1981, 1988). This area consists of two main sectors, one located on the dorsal convexity (F5c) and the other on the posterior bank of the inferior arcuate sulcus (F5ab). Both sectors receive strong input from the second somatosensory area (SII) and area PF. In addition, F5ab is the selective target of the parietal area AIP (for a review, see Rizzolatti and Luppino, 2001).

Two classes of neurons recorded in area F5 might have been instrumental in the development of a system controlling speech and gestures.

Figure 3.1 Lateral view of the monkey (a) and human (b) cortex.

The first class (the so-called “mirror neurons,” MNs), generally found in sector F5c, becomes active both when the animal executes an action with the hand and when it observes the same action performed by another individual (Gallese et al., 1996). In area F5, the MN system has been demonstrated primarily for the observation of reaching and grasping, although it also maps the sound of certain movements, such as tearing paper or cracking nuts, onto the execution of those movements (Kohler et al., 2002). In addition, Ferrari et al. (2003) recorded in monkey premotor area F5 both mirror neurons that discharged during lip smacking (the most common communicative facial gesture in monkeys) and other mirror neurons that discharged during mouth movements related to eating. This suggests that nonvocal facial gestures may indeed be transitional between visual gesture and speech (see below). MacNeilage (1998) has drawn attention to the similarity between human speech and sound-producing facial gestures of primates, such as lip smacks, tongue smacks, and teeth chatters. He proposed that the mouth open–close alternation associated with mastication, sucking, and licking took on communicative significance as a facial gesture and was then transformed into the syllable, in which mouth opening and closing correspond to vowel and consonant production, respectively.

Neurons activated by the observation of movements of different body effectors have been recorded in the superior temporal sulcus region (STS; Perrett et al., 1990). However, STS neurons do not appear to have motor properties. By contrast, mirror neurons have been recorded in the rostral part of the inferior parietal lobule (Gallese et al., 2002). This area receives input from STS and sends a major output to the ventral premotor cortex, including area F5. Therefore, the cortical mirror neuron circuit is formed by two main regions: the rostral part of the inferior parietal lobule and the ventral premotor cortex. STS is closely connected to this circuit, but it lacks motor properties.

Rizzolatti and colleagues (Gallese et al., 1996; Rizzolatti et al., 1996) proposed that mirror neuron activity is involved in representing actions. By matching observation with execution, this motor representation makes it possible for individuals to understand observed actions, that is, to recognize the meaning and the aim of actions performed by others. Because they provide a link between actor and observer, similar to the link between the sender and the receiver of a message, mirror neurons may have played a role in the development of a gestural communication system. Thanks to this mechanism, actions performed by other individuals become messages that are understood by an observer.

The second class of neurons, recorded in sector F5ab, commands grasp actions with the hand and the mouth (Rizzolatti et al., 1988). A typical neuron of this class discharges when the animal grasps a piece of food with its mouth, and also when it grasps the same piece of food with the hand contralateral or ipsilateral to the recorded hemisphere. The discharge of these neurons is frequently selective for a specific type of grasp (for example, a neuron may discharge when a precision grasp is used, but not for a power grasp), and it can even be elicited by the visual presentation of a graspable object, provided that the object’s size is congruent with the type of grasp coded by the neuron (“canonical neurons”; Murata et al., 1997; Rizzolatti et al., 1988). Rizzolatti and colleagues (1988) proposed that these neurons code the aim of the grasp action, i.e. taking possession of an object. From a functional point of view, these neurons may be involved in planning a sequence of grasp actions. For example, they could command the grasp of an object with the hand while preparing the mouth to grasp the same object. Coupling between hand and mouth movements, likely serving feeding, has been described in other frontal regions as well. For example, Graziano (2006) found that electrical microstimulation of regions of monkey motor cortex elicited hand movements toward the animal’s own mouth coupled with mouth opening.

From an evolutionary point of view, this circuit might have evolved into a dual hand–mouth command system, which became instrumental in transferring a gestural communication system from the hand to the mouth. This system might have been used in language evolution (Gentilucci and Corballis, 2006), in line with the proposal that language evolved from manual gestures rather than from vocalizations. Indeed, whereas the vocalizations of non-speaking primates are mainly related to emotional states, manual actions can provide more obvious iconic links with objects and might therefore have been initially used to represent the physical world (e.g., Arbib, 2005; Armstrong, 1999; Armstrong et al., 1995; Corballis, 1992, 2002; Donald, 1991; Gentilucci and Corballis, 2006; Givón, 1995; Hewes, 1973; Rizzolatti and Arbib, 1998; Ruben, 2005).

RELATIONS BETWEEN EXECUTION/OBSERVATION OF HAND/ARM ACTIONS AND SPEECH

In humans, even though the existence of MNs has not been established with certainty (Mukamel et al., 2010), a large body of data demonstrates the presence of a system active during both action observation and action execution (the mirror system). The evidence comes from electroencephalography (Muthukumaraswamy et al., 2004), magnetoencephalography (Hari et al., 1998), transcranial magnetic stimulation (TMS; Baldissera et al., 2001; Fadiga et al., 1995; Maeda et al., 2000; Patuzzo et al., 2003; Strafella and Paus, 2000), and functional magnetic resonance imaging (Gazzola and Keysers, 2009; Kilner et al., 2009; for a review see Rizzolatti and Craighero, 2004). These studies also showed that, in humans, the motor system becomes active during the observation not only of transitive arm actions, as in monkeys, but also of intransitive movements. In a TMS study, Gentilucci et al. (2009) found that the observation of different types of grasp selectively affects the excitability of the hand motor cortex. The authors stimulated the hand motor cortex of participants while they observed a precision grasp of small fruits and a power grasp of large fruits. In control conditions, the hand motor cortex was stimulated during observation of the same fruits presented alone. Motor-evoked potentials (MEPs) of the Opponens Pollicis (OP) muscle were larger when participants observed the power grasp than when they observed the precision grasp, whereas no effect was found when the fruits were presented alone (Figure 3.2). Similarly, in a control session, the electromyographic activity of the OP muscle was larger when the same participants executed the power grasp than when they executed the precision grasp of the fruits presented in the TMS session. Thus, the motor activation related to grasp observation has the same characteristics as the activity of monkey MNs, which are frequently selective for the type of grasp observed and executed (Gallese et al., 1996).

In addition to the mechanism described for auditory MNs in monkeys (Kohler et al., 2002), there is evidence that another mechanism exists in humans that maps the sound of actions onto their motor representation. Using high-density EEG, Hauk et al. (2006) found that motor areas of the human brain are part of the neural systems subserving early automatic recognition of action-related sounds. So far, there is no clear evidence that the perception of vocalizations is mapped onto their production by a system that evolved from this circuit. However, such a mapping is implicit in the motor theory of speech perception (Liberman et al., 1967), which holds that we understand spoken speech in terms of how it is produced rather than in terms of its acoustic properties.

According to Rizzolatti and Craighero (2004), the visual mirror system is involved in both overt and covert imitation of observed movements. Humans might understand the meaning of an action by covertly imitating it, and they might initially have used this gestural repertoire to share information about food and predation. Through the dual hand–mouth command system, this arm gestural repertoire might have been shared with, and subsequently transferred to, the mouth (Gentilucci and Corballis, 2006). The behavioral and TMS studies described below suggest that a system of dual grasp commands to hand and mouth is still active in modern humans. Gentilucci and colleagues (2001) showed that, when participants were instructed to open their mouth while grasping objects, the size of the mouth opening increased with the size of the grasped object. Kinematic analysis showed that, concurrently with the increase in the kinematic parameters of finger shaping when grasping the large rather than the small object, the parameters of lip opening also increased, even though participants were required to open their lips by a fixed amount. Conversely, when participants opened their hands while grasping objects with their mouth, the size of the hand opening also increased with the size of the object. Control experiments showed that neither simple fixation of the object nor the proximal component of the grasp was responsible for the effect (Gentilucci et al., 2001). More recent evidence (Gentilucci and Campione, 2011) shows that mouth postures, in addition to mouth actions, affect the control of grasping. Participants were required to open or close the mouth, or to keep it relaxed, and then performed a manual grasp while maintaining that mouth posture. Maximal finger aperture was larger when the mouth was open than when it was closed (Figure 3.3), with an intermediate aperture in the relaxed-mouth condition.

A control experiment tested whether a similar relation exists between foot and hand. Participants grasped an object while the toes of their right foot were extended, flexed, or relaxed. Foot posture had no significant effect on maximal finger aperture (Figure 3.3). This result argues against a link between foot postures and hand movements and indicates that the link exists preferentially between hand and mouth.

If this system, coupled with the mirror system, is also used to share a gestural communication repertoire between hand and mouth, then the execution of transitive actions (i.e. actions directed at an object) should affect speech, and specifically the production of phonological units. In addition, the same effects on speech should be found when these actions are observed. Gentilucci and colleagues required participants to reach and grasp small and large objects while pronouncing syllables (Gentilucci et al., 2001). They found that lip opening and the parameters of the vowel voice spectra increased when participants grasped large rather than small objects. Conversely, pronouncing a vowel throughout the execution of the grasp affected maximal finger aperture (Gentilucci and Campione, 2011): aperture was larger with the vowel /a/ than with /i/, and the vocalization /ɔ/ (which is unrelated to the native language of the participants) induced an intermediate effect (Figure 3.3). The vowel /a/ is characterized by a higher first formant (F1, which depends on internal mouth aperture; Leoni and Maturi, 2002) and a lower second formant (F2, which depends on tongue protrusion). In contrast, /i/ is characterized by a lower F1 and a higher F2. The vowel /ɔ/ has intermediate values. In sum, the internal mouth configurations associated with vocalizations seem to be responsible for the effects on finger shaping during grasping.

Figure 3.2 Variation in OP (Opponens Pollicis) MEPs (motor-evoked potentials) evoked by TMS on hand motor cortex during observation of power and precision grasps of large and small fruits and the same fruits presented alone. Upper row: frames of the videos, showing the final phase of power grasp of large (apple) fruit and precision grasp of small (strawberry) fruit. Lower row: frames of the videos, presenting the same fruits alone. Middle row: OP MEPs after single pulse TMS on hand motor cortex while observing power and precision grasps (circles) of fruits and the same fruits presented alone (squares). Bars are SE. (Drawn from Gentilucci et al., 2009.)

Figure 3.3 Upper panel: maximal finger apertures of grasps executed while maintaining mouth postures (experiment 1), toe postures (experiment 2) and producing vocalizations (experiment 3). Lower panel: the mean mouth apertures during grasping in experiments 1 and 3. Bars are SE. Asterisks indicate significance in the statistical analyses. (Drawn from Gentilucci and Campione, 2011.)

Results similar to those for execution were found when participants observed grasp actions while pronouncing the syllable /ba/ (Gentilucci, 2003; Gentilucci et al., 2004b): the kinematic parameters of lip opening and F1 of the voice spectra varied with the actor’s finger shaping during the grasp. In a recent study, Gentilucci and colleagues (2009) required participants to pronounce the syllable /da/ while observing video-clips showing (1) a right hand grasping large and small objects (fruits and solids) with power and precision grasps, respectively; (2) a foot interacting with large and small objects; and (3) the differently sized objects, either grasped in (1) or touched in (2), presented alone. Voice F1 was higher when participants observed a power grasp than when they observed a precision grasp, whereas it was unaffected by the observation of the different types of foot interaction and of the objects alone (Figure 3.4). Moreover, lip kinematics were only weakly affected by grasp observation. This finding seems to contrast with the previous study by Gentilucci et al. (2004b), in which the type of grasp consistently affected lip kinematics. The discrepancy can be explained by considering that, in the earlier study, participants pronounced /ba/, so the command to the mouth could be planned to modulate the release of the lip occlusion. This did not occur during /da/ pronunciation, because lip movements are only loosely related to the production of its initial consonant.

In a TMS study (Gentilucci et al., 2009), stimulation was applied to the tongue motor cortex of participants who silently read a syllable while observing video-clips showing power and precision grasps, pantomimes of the two types of grasp, and differently sized objects presented alone. TMS was applied after the syllable /da/ appeared on the target objects, either during the final phase of the grasp or at the corresponding time when the objects were presented alone. Tongue MEPs were greater when participants observed a power rather than a precision grasp, whether executed or pantomimed (Figure 3.5). In contrast, observing different types of foot interaction with objects of different sizes did not affect tongue MEPs. These results accord with neuroimaging data showing that the observation of manual and mouth actions directed at an object (transitive actions) activates sectors of Broca’s area and premotor areas, whereas the observation of transitive foot actions activates only a dorsal sector of premotor cortex (Buccino et al., 2001). Because the sectors of Broca’s area activated by the observation of hand and mouth actions lie close to, and partially overlap with, speech sectors (Demonet et al., 1992; Paulesu et al., 1993; Zatorre et al., 1992), it is more plausible to suppose that the hand observation system, rather than the foot observation system, affects mouth movements and speech. We nevertheless assume that the foot may be linked to the mouth, but only through a representation shared with the hand. Our reasoning is that foot actions affect arm/hand gestures (Baldissera et al., 2002), and arm/hand gestures might in turn affect mouth movements by means of the dual hand–mouth command system. Consequently, activation of a foot action representation, triggered by observation, might affect hand kinematics, but is unlikely to directly affect mouth kinematics and voice.

This hypothesis seems to contrast with the finding that foot postures did not affect grasp actions (Gentilucci and Campione, 2011). The discrepancy can be explained by considering that we studied the effects of meaningless toe postures rather than meaningful leg/foot actions.

Figure 3.4 Variation in F1 of the syllable /da/ pronounced during observation of hand and foot interactions with large and small objects and the same objects presented alone. Upper row: frames of the videos, showing the final phase of power grasp of large object and precision grasp of small object and the final phase of foot interactions with large (football) and small (tennis-ball) object. Lower row: frames of the videos, presenting the same objects alone. Middle row: F1 of the syllable /da/ pronounced while observing hand and foot interaction with objects (circles) and the same objects presented alone (squares). Bars are SE. (Drawn from Gentilucci et al., 2009.)

Figure 3.5 Variation in tongue muscle MEPs evoked by TMS on tongue motor cortex during observation of pantomimes of power and precision grasps. Lower row: frames of the videos, showing the final phase of pantomimes of power and precision grasps. Upper row: tongue muscle MEPs after single pulse TMS on mouth motor cortex while observing pantomimes of the two types of grasp (circles). Bars are SE. (Drawn from Gentilucci et al., 2009.)

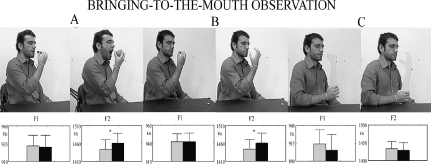

We hypothesize that the observation of both actual and pantomimed grasps (the latter being more abstract representations of the action) activated motor commands to the mouth as well as to the hand, congruent with the hand kinematics of the observed type of grasp. The commands to the mouth selectively affected the postures of the phonation organs and, consequently, basic features of phonological units. These effects are not limited to the execution and observation of grasp actions. Bringing fruits of different sizes to the mouth modified F2 of the syllable /ba/ pronounced simultaneously with the action (Gentilucci et al., 2004a): F2 of the voice spectra was higher when the action was directed to the large fruit than to the small fruit. This effect seemed to be selective for speech. In a control experiment (Gentilucci et al., 2004a), participants were trained to repeat a vocalization unrelated to their native language and then emitted it while executing the bringing-to-the-mouth action; in this case, the action had no effect on the vocalization. Comparing this finding with that of Gentilucci and Campione (2011), it seems that the effects of arm/hand actions on phonological units (syllables) are more selective than the effects of vocalizations on arm/hand actions.

As hypothesized, observing the action of bringing large and small fruits to the mouth induced the same effects on the simultaneous pronunciation of the syllable /ba/ as executing it did (Figure 3.6a; Gentilucci et al., 2004a). Interestingly, observing a pantomime of the action (during which the mouth was not opened) was as effective as observing the action itself (Figure 3.6b). Thus, neither the presence of the fruit nor the opening of the mouth was responsible for the effect. This finding also suggests that, as for the grasp (Gentilucci et al., 2009), a more abstract representation of the action (i.e. a pantomime) can affect syllable pronunciation. However, the presence of the biological communicator (i.e. a human arm) was necessary to influence speech: observing an arm shadow being brought to the mouth was ineffective (Figure 3.6c; Gentilucci et al., 2004a).

Figure 3.6 Mean values of voice parameters when pronouncing /ba/ and simultaneously observing (a) the act of bringing a cherry (grey bars) or an apple (black bars) to the mouth; (b) a pantomime of the act; and (c) a pantomime of the act using a non-biological hand. Note the increase in F2 (as when the act was executed) in conditions (a) and (b). No effect was observed in condition (c). Bars are SE. (Drawn from Gentilucci et al., 2004a.)

Both the execution and the observation of actual and pantomimed hand grasps activated grasp commands to the mouth. These commands modified the opening of the mouth according to the shape of the hand used to grasp the object, which in turn modified F1 of the voice spectra (Gentilucci, 2003; Gentilucci et al., 2001, 2004b), a parameter related to the anterior internal mouth aperture (Leoni and Maturi, 2002). Conversely, the execution and observation of actual and pantomimed bringing-to-the-mouth actions probably induced an internal mouth movement (in preparation for actions such as chewing or swallowing), which affected tongue displacement according to the size of the object brought to the mouth. This, in turn, modified F2 of the voice spectra (Gentilucci et al., 2004a, b), which is related to tongue position (Leoni and Maturi, 2002). On the basis of these results, we proposed that communication signals related to the meaning of actions (e.g. taking possession of an object by grasping it, or bringing an edible object to the mouth) might be associated with the activity of particular articulatory organs of the mouth that were co-opted for speaking (Gentilucci and Corballis, 2006). In the course of evolution, pantomimes of actions might have incorporated gestures that are analog representations of objects or actions (Donald, 1991), but over time these gestures may have lost their analog features and become symbolic. This shift from iconic gestures to arbitrary symbols, termed conventionalization, appears to be common to both human and animal communication systems and is probably driven by increased economy of reference (Burling, 1999). Moreover, words, like symbolic gestures, bear little (e.g. zanzara, “mosquito” in Italian) or no physical relation to the objects, actions, or properties they represent.

Thus, it is plausible to suppose that symbolic gestures and words interact, and these interactions might explain the transfer of aspects of symbolic gesture meaning to lexical units during the evolution of spoken language (Bernardis and Gentilucci, 2006; Gentilucci and Corballis, 2006; Gentilucci et al., 2006).

A possible criticism is that the results of the studies by Gentilucci and colleagues (Gentilucci, 2003; Gentilucci et al., 2001, 2004a, b, 2009) might be explained in terms of simple motor resonance (Rizzolatti et al., 2002), without invoking a mirror system. In other words, these experiments support the hypothesis that mouth and hand are intimately linked and that their activation is evoked by observation. However, these systems might not code the aim of the action, as required by the definition of a mirror system given by Rizzolatti and colleagues (Rizzolatti et al., 2002). Above all, their joint activity might not be implicated in cognitive functions such as the interactions between gestures and spoken language. The following experimental evidence argues against this possibility. First, the motor command is independent of the effector used (hand or mouth) and therefore codes the aim of the action (see Rizzolatti et al., 1988, 2002). Second, the observation of different transitive actions selectively affects the mouth articulatory organs used in speech (see the results of the TMS study); that is, it affects specific formants of the vowel voice spectra. If the simple motor resonance hypothesis were true, no such specificity in the relation between action and the articulatory organ involved in speech would be expected.

These arguments are in accordance with the proposal that speech is fundamentally gestural. This idea is captured by the motor theory of speech perception (Liberman et al., 1967) and by what has more recently become known as articulatory phonology (Browman and Goldstein, 1995). In this view, speech is regarded not as a system for producing sounds, but rather as a system for producing articulatory gestures through the independent, but temporally coordinated, action of six articulatory organs: the lips, the velum, the larynx, and the blade, body, and root of the tongue. This approach is largely based on the fact that the basic units of speech (i.e. phonemes) do not exist as discrete units in the acoustic signal (Joos, 1948) and are not discretely discernible in mechanical recordings of sound, such as those of a sound spectrograph (Liberman et al., 1967). One reason for this is that the acoustic signals corresponding to individual phonemes vary widely, depending on the context in which they are embedded. In particular, the formant transitions for a given phoneme can differ considerably depending on the neighboring phonemes. Yet we can perceive speech at remarkably high rates, up to at least 10–15 phonemes per second, which seems at odds with the idea that some complex, context-dependent transformation is necessary. Indeed, even relatively simple sound units, such as tones or noises, cannot be perceived at comparable rates, which further suggests that a different principle underlies speech perception. Conceiving of speech as gesture or, more precisely, as the motor representation of mouth gestures overcomes these difficulties, at least to some extent. The articulatory gestures that give rise to speech partially overlap in time (co-articulation), and this overlap makes such high rates of production and perception possible (Studdert-Kennedy, 2005).

The link between motor mechanisms for phonological perception and production is also supported by TMS (D’Ausilio et al., 2009; Fadiga et al., 2002) and event-related functional MRI studies (Pulvermüller and Shtyrov, 2006; for a review see Pulvermüller and Fadiga, 2010). These studies showed that listening to particular phonemes elicits activity in the motor regions involved in producing those phonemes.

LANGUAGE ACQUISITION IN CHILDREN AND TRANSITIVE ACTIONS

Gentilucci and colleagues proposed that the mirror system, coupled with the dual hand–mouth command system, might be involved in language acquisition in children (Gentilucci et al., 2004b). Indeed, they found that its effects on speech were stronger in children aged 6–7 years than in adults. This hypothesis accords with the notion of a close relationship between early speech development and several aspects of manual activity, such as communicative and symbolic gestures (Bates and Dick, 2002; Volterra et al., 2005). For example, canonical babbling in infants aged 6 to 8 months is accompanied by rhythmic hand movements (Masataka, 2001). Manual gestures predate the early development of speech in infants and predict later success even up to the two-word level (Iverson and Goldin-Meadow, 2005). Word comprehension in infants between 8 and 10 months and word production between 11 and 13 months are accompanied by deictic and declarative gestures, respectively (Bates and Snyder, 1987; Volterra et al., 1979).

Bernardis et al. (2008) tested the hypothesis that manual activities contribute to language development by recording the vocalizations of infants aged 11–13 months who were in the emergent lexicon phase. Vocalizations were recorded while the infants manipulated solids and toys of different sizes and while they produced request gestures directed toward the same objects, which were being manipulated by the experimenter. In both conditions, the first formant (F1) of the voice spectra was higher when the motor activity was directed toward large rather than small objects. The authors explained these results by postulating that the manipulation commands were sent to the mouth as well as to the hands. Since a higher F1 is associated with a larger internal mouth aperture (Leoni and Maturi, 2002), the command to open the fingers wider was also sent to the mouth, whose aperture was further enlarged, producing vocalizations with a higher F1. We also hypothesize that observing the manipulated objects activated manipulation commands to both the hand and the mouth, through an imitation process elicited by a mirror system of the kind hypothesized in infants (Dapretto et al., 2006). These commands would induce the same effects on the voices of infants gesturing toward the objects as on the voices of infants manipulating them. The association between request gestures directed toward an object and manipulation commands can be explained by Vygotsky’s (1934) proposal that request gestures derive from reach-to-grasp actions, which, like manipulation, are guided by intrinsic object properties. Consequently, in infants in the emergent lexicon phase, the intrinsic object properties extracted from manual interactions may serve as a preverbal form of object identification and then, transformed into vocalization, be used to communicate with other individuals (request gesture activity).

A remaining open question is whether the mirror system and the dual hand–mouth motor command system are specific to speech or are instead general-purpose systems. In other words, are the two systems devoted to cognitive processes such as spoken language, or are they involved in both spoken language and hand–mouth motor control? In favor of the first hypothesis, neuroimaging data have localized the hand mirror system in Broca’s area (Gazzola and Keysers, 2009; Kilner et al., 2009; for a review see Rizzolatti and Craighero, 2004). In addition, higher levels of integration have been observed between the execution/observation of gestures and words than between the execution/observation of transitive actions and syllable pronunciation: When congruent communicative words and symbolic gestures are produced simultaneously, the gestures are slowed down and the vocal parameters of the words are enhanced. Similarly, the vocal parameters of verbal responses to the observation of gestures while simultaneously listening to congruent words are enhanced compared to observing the gesture alone or listening to the word alone (Bernardis and Gentilucci, 2006). These effects are transiently abolished after brief inactivation of Broca’s area by repetitive TMS (Gentilucci et al., 2006). In favor of the second hypothesis, behavioral data have shown the existence of double hand–mouth commands that are effective for simple mouth opening as well as for syllable pronunciation (Gentilucci et al., 2001). Because the two systems were discovered in monkey premotor cortex in relation to grasping with the hand and the mouth, we have previously suggested that they may have evolved initially in the context of ingestion and object exploration, and later formed a platform for combined manual and vocal communication (Gentilucci and Corballis, 2006; Gentilucci et al., 2006).
A hypothesis consistent with both sets of data is that, in modern humans, the systems serve two different functions: One is involved in ingestion activities, the other in relating speech to gestures (Bernardis and Gentilucci, 2006; Gentilucci et al., 2006). The two functions work synergistically and may be concurrently involved in language development in children (Bernardis et al., 2008; Gentilucci et al., 2004b).

ACKNOWLEDGMENTS

The work was supported by a grant from MIUR (Ministero dell’Istruzione, dell’Università e della Ricerca) to M.G.

REFERENCES

Arbib, M.A. (2005). From monkey-like action recognition to human language: an evolutionary framework for neurolinguistics. Behavioral and Brain Sciences, 28: 105–168.

Armstrong, D.F. (1999). Original Signs: Gesture, Sign, and the Source of Language. Washington, DC: Gallaudet University Press.

Armstrong, D.F., Stokoe, W.C., and Wilcox, S.E. (1995). Gesture and the Nature of Language. Cambridge: Cambridge University Press.

Baldissera, F., Cavallari, P., Craighero, L., and Fadiga, L. (2001). Modulation of spinal excitability during observation of hand actions in humans. European Journal of Neuroscience, 13: 190–194.

Baldissera, F., Borroni, P., Cavallari, P., and Cerri, G. (2002). Excitability changes in human corticospinal projections to forearm muscles during voluntary movement of ipsilateral foot. Journal of Physiology, 539: 903–911.

Bates, E. and Dick, F. (2002). Language, gesture, and the developing brain. Developmental Psychobiology, 40: 293–310.

Bates, E. and Snyder, L.S. (1987). The cognitive hypothesis in language development, in Uzgiris, I.C. and McVicker Hunt, J. (eds), Infant Performance and Experience: New Findings with the Ordinal Scales (pp. 168–204). Urbana, IL: University of Illinois Press.

Bernardis, P. and Gentilucci, M. (2006). Speech and gesture share the same communication system. Neuropsychologia, 44: 178–190.

Bernardis, P., Bello, A., Pettinati, P., Stefanini, S., and Gentilucci, M. (2008). Manual actions affect vocalizations of infants. Experimental Brain Research, 184: 599–603.

Browman, C.P. and Goldstein, L.F. (1995). Dynamics and articulatory phonology, in van Gelder, T. and Port, R.F. (eds), Mind as Motion (pp. 175–193). Cambridge, MA: MIT Press.

Buccino, G., Binkofski, F., Fink, G.R., Fadiga, L., Fogassi, L., Gallese, V., Seitz, R.J., Zilles, K., Rizzolatti, G., and Freund, H.J. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. European Journal of Neuroscience, 13: 400–404.

Burling, R. (1999). Motivation, conventionalization, and arbitrariness in the origin of language, in King, B.J. (ed.), The Origins of Language: What Nonhuman Primates can Tell Us (pp. 307–350). Santa Fe, NM: School of American Research Press.

Corballis, M.C. (1992). On the evolution of language and generativity. Cognition, 44: 197–226.

Corballis, M.C. (2002). From Hand to Mouth: The Origins of Language. Princeton, NJ: Princeton University Press.

Dapretto, M., Davies, M.S., Pfeifer, J.H., Scott, A.A., Sigman, M., Bookheimer, S.Y., and Iacoboni, M. (2006). Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience, 9: 28–30.

D’Ausilio, A., Pulvermüller, F., Salmas, P., Bufalari, I., Begliuomini, C., and Fadiga, L. (2009). The motor somatotopy of speech perception. Current Biology, 19: 381–385.

Demonet, J.F., Chollet, F., Ramsay, S., Cardebat, D., Nespoulous, J.L., Wise, R., Rascol, A., and Frackowiak, R. (1992). The anatomy of phonological and semantic processing in normal subjects. Brain, 115: 1753–1768.

Donald, M. (1991). Origins of the Modern Mind. Cambridge, MA: Harvard University Press.

Fadiga, L., Fogassi, L., Pavesi, G., and Rizzolatti, G. (1995). Motor facilitation during action observation: a magnetic stimulation study. Journal of Neurophysiology, 73: 2608–2611.

Fadiga, L., Craighero, L., Buccino, G., and Rizzolatti, G. (2002). Speech listening specifically modulates the excitability of tongue muscles: a TMS study. European Journal of Neuroscience, 15: 399–402.

Ferrari, P.F., Gallese, V., Rizzolatti, G., and Fogassi, L. (2003). Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. European Journal of Neuroscience, 17: 1703–1714.

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (1996). Action recognition in the premotor cortex. Brain, 119: 593–609.

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (2002). Action representation and the inferior parietal lobule, in Prinz, W. and Hommel, B. (eds), Attention and Performance XIX. Common Mechanisms in Perception and Action (pp. 334–355). Oxford, UK: Oxford University Press.

Gazzola, V. and Keysers, C. (2009). The observation and execution of actions share motor and somatosensory voxels in all tested subjects: single-subject analyses of unsmoothed fMRI data. Cerebral Cortex, 19: 1239–1255.

Gentilucci, M. (2003). Grasp observation influences speech production. European Journal of Neuroscience, 17: 179–184.

Gentilucci, M. and Campione, G.C. (2011). Do postures of distal effectors affect the control of actions of other distal effectors? Evidence for a system of interactions between hand and mouth. PLoS One, 6(5): e19793.

Gentilucci, M. and Corballis, M.C. (2006). From manual gesture to speech: a gradual transition. Neuroscience and Biobehavioral Reviews, 30: 949–960.

Gentilucci, M., Benuzzi, F., Gangitano, M., and Grimaldi, S. (2001). Grasp with hand and mouth: a kinematic study on healthy subjects. Journal of Neurophysiology, 86: 1685–1699.

Gentilucci, M., Santunione, P., Roy, A.C., and Stefanini, S. (2004a). Execution and observation of bringing a fruit to the mouth affect syllable pronunciation. European Journal of Neuroscience, 19: 190–202.

Gentilucci, M., Stefanini, S., Roy, A.C., and Santunione, P. (2004b). Action observation and speech production: study on children and adults. Neuropsychologia, 42: 1554–1567.

Gentilucci, M., Bernardis, P., Crisi, G., and Dalla Volta, R. (2006). Repetitive transcranial magnetic stimulation of Broca’s area affects verbal responses to gesture observation. Journal of Cognitive Neuroscience, 18: 1059–1074.

Gentilucci, M., Campione, G.C., Dalla Volta, R., and Bernardis, P. (2009). The observation of manual grasp actions affects the control of speech: a combined behavioral and transcranial magnetic stimulation study. Neuropsychologia, 47: 3190–3202.

Givón, T. (1995). Functionalism and Grammar. Philadelphia, PA: Benjamins.

Goldin-Meadow, S. (1999). The role of gesture in communication and thinking. Trends in Cognitive Sciences, 3: 419–429.

Graziano, M. (2006). The organization of behavioral repertoire in motor cortex. Annual Review of Neuroscience, 29: 105–134.

Hadar, U.D., Wenkert-Olenik, R.K., and Soroker, N. (1998). Gesture and the processing of speech: neuropsychological evidence. Brain and Language, 62: 107–126.

Hari, R., Forss, N., Avikainen, S., Kirveskari, E., Salenius, S., and Rizzolatti, G. (1998). Activation of human primary motor cortex during action observation: a neuromagnetic study. Proceedings of the National Academy of Sciences of the USA, 95: 15061–15065.

Hauk, O., Davis, M.H., Ford, M., Pulvermüller, F., and Marslen-Wilson, W.D. (2006). The time course of visual word recognition as revealed by linear regression analysis of ERP data. Neuroimage, 30: 1383–1400.

Hepp-Reymond, M.C., Hüsler, E.J., Maier, M.A., and Qi, H.X. (1994). Force-related neuronal activity in two regions of the primate ventral premotor cortex. Canadian Journal of Physiology and Pharmacology, 72: 571–579.

Hewes, G.W. (1973). Primate communication and the gestural origins of language. Current Anthropology, 14: 5–24.

Iverson, J.M. and Goldin-Meadow, S. (2005). Gesture paves the way for language development. Psychological Science, 16: 367–371.

Joos, M. (1948). Acoustic Phonetics. Language Monograph No. 23. Baltimore, MD: Linguistic Society of America.

Kendon, A. (2004). Gesture: Visible Action as Utterance. New York: Cambridge University Press.

Kilner, J.M., Neal, A., Weiskopf, N., Friston, K.J., and Frith, C.D. (2009). Evidence of mirror neurons in human inferior frontal gyrus. Journal of Neuroscience, 29: 10153–10159.

Kohler, E., Keysers, C., Umiltà, M.A., Fogassi, L., Gallese, V., and Rizzolatti, G. (2002). Hearing sounds, understanding actions: action representation in mirror neurons. Science, 297: 846–848.

Krauss, R.M. and Hadar, U.D. (1999). The role of speech-related arm/hand gestures in word retrieval, in Campbell, R. and Messing, L. (eds), Gesture, Speech, and Sign (pp. 93–116). Oxford: Oxford University Press.

Kurata, K. and Tanji, J. (1986). Premotor cortex neurons in macaques: activity before distal and proximal forelimb movements. Journal of Neuroscience, 6: 403–411.

Leoni, F.A. and Maturi, P. (2002). Manuale di fonetica. Rome: Carocci.

Levelt, W.J., Richardson, G., and La Heij, W. (1985). Pointing and voicing in deictic expressions. Journal of Memory and Language, 24: 133–164.

Liberman, A.M., Cooper, F.S., Shankweiler, D.S., and Studdert-Kennedy, M. (1967). Perception of the speech code. Psychological Review, 74: 431–461.

MacNeilage, P.F. (1998). The frame/content theory of evolution of speech production. Behavioral and Brain Sciences, 21: 499–546.

McNeill, D. (1992). Hand and Mind: What Gestures Reveal about Thought. Chicago, IL: University of Chicago Press.

McNeill, D. (2000). Language and Gesture. Cambridge: Cambridge University Press.

Maeda, F., Keenan, J.P., Tormos, J.M., Topka, H., and Pascual-Leone, A. (2000). Modulation of corticospinal excitability by repetitive transcranial magnetic stimulation. Clinical Neurophysiology, 111: 800–805.

Masataka, N. (2001). Why early linguistic milestones are delayed in children with Williams syndrome: late onset of hand banging as a possible rate-limiting constraint on the emergence of canonical babbling. Developmental Science, 4: 158–164.

Matelli, M. and Luppino, G. (2000). Parietofrontal circuits: parallel channels for sensory-motor integrations. Advances in Neurology, 84: 51–61.

Matelli, M., Luppino, G., and Rizzolatti, G. (1985). Patterns of cytochrome oxidase activity in the frontal agranular cortex of the macaque monkey. Behavioural Brain Research, 18: 125–136.

Matelli, M., Luppino, G., and Rizzolatti, G. (1991). Architecture of superior and mesial area 6 and the adjacent cingulate cortex in the macaque monkey. Journal of Comparative Neurology, 311: 445–462.

Mukamel, R., Ekstrom, A.D., Kaplan, J., Iacoboni, M., and Fried, I. (2010). Single-neuron responses in humans during execution and observation of actions. Current Biology, 20(8): 750–756.

Murata, A., Fadiga, L., Fogassi, L., Gallese, V., Raos, V., and Rizzolatti, G. (1997). Object representation in the ventral premotor cortex (area F5) of the monkey. Journal of Neurophysiology, 78: 2226–2230.

Muthukumaraswamy, S.D., Johnson, B.W., and McNair, N.A. (2004). Mu rhythm modulation during observation of an object-directed grasp. Cognitive Brain Research, 19: 195–201.

Patuzzo, S., Fiaschi, A., and Manganotti, P. (2003). Modulation of motor cortex excitability in the left hemisphere during action observation: a single- and paired-pulse transcranial magnetic stimulation study of self- and non-self-action observation. Neuropsychologia, 41: 1272–1278.

Paulesu, E., Frith, C.D., and Frackowiak, R. (1993). The neural correlates of the verbal component of working memory. Nature, 362: 342–345.

Perrett, D.I., Mistlin, A.J., Harries, M.H., and Chitty, A.J. (1990). Understanding the visual appearance and consequence of hand actions, in Goodale, M.A. (ed.), Vision and Action: The Control of Grasping (pp. 163–242). Norwood, NJ: Ablex.

Pulvermüller, F. and Fadiga, L. (2010). Active perception: sensorimotor circuits as a cortical basis for language. Nature Reviews Neuroscience, 11: 351–360.

Pulvermüller, F. and Shtyrov, Y. (2006). Language outside the focus of attention: the mismatch negativity as a tool for studying higher cognitive processes. Progress in Neurobiology, 79: 49–71.

Rizzolatti, G. and Arbib, M.A. (1998). Language within our grasp. Trends in Neurosciences, 21: 188–194.

Rizzolatti, G. and Craighero, L. (2004). The mirror-neuron system. Annual Review of Neuroscience, 27: 169–192.

Rizzolatti, G. and Luppino, G. (2001). The cortical motor system. Neuron, 31: 889–901.

Rizzolatti, G., Scandolara, C., Gentilucci, M., and Camarda, R. (1981). Response properties and behavioral modulation of “mouth” neurons of the postarcuate cortex (area 6) in macaque monkeys. Brain Research, 225: 421–424.

Rizzolatti, G., Camarda, R., Fogassi, L., Gentilucci, M., Luppino, G., and Matelli, M. (1988). Functional organization of inferior area 6 in the macaque monkey. II. Area F5 and the control of distal movements. Experimental Brain Research, 71: 491–507.

Rizzolatti, G., Fadiga, L., Gallese, V., and Fogassi, L. (1996). Premotor cortex and the recognition of motor actions. Cognitive Brain Research, 3: 131–141.

Rizzolatti, G., Fogassi, L., and Gallese, V. (2002). Motor and cognitive functions of the ventral premotor cortex. Current Opinion in Neurobiology, 12: 149–154.

Ruben, R.J. (2005). Sign language: its history and contribution to the understanding of the biological nature of language. Acta Oto-Laryngologica, 125: 464–467.

Strafella, A.P. and Paus, T. (2000). Modulation of cortical excitability during action observation: a transcranial magnetic stimulation study. Neuroreport, 11: 2289–2292.

Studdert-Kennedy, M. (2005). How did language go discrete?, in Tallerman, M. (ed.), Language Origins: Perspectives on Evolution (pp. 48–67). Oxford: Oxford University Press.

Volterra, V., Bates, E., Benigni, L., Bretherton, I., and Campioni, L. (1979). First words in language and action: a qualitative look, in Bates, E. (ed.), The Emergence of Symbols: Cognition and Communication in Infancy (pp. 141–222). New York: Academic Press.

Volterra, V., Caselli, M.C., Capirci, O., and Pizzuto, E. (2005). Gesture and the emergence and development of language, in Tomasello, M. and Slobin, D.I. (eds), Beyond Nature-Nurture: Essays in Honor of Elizabeth Bates (pp. 3–40). Mahwah, NJ: Lawrence Erlbaum Associates.

Vygotsky, L.S. (1934). Thought and Language. Cambridge, MA: MIT Press.

Zatorre, R.J., Evans, A.C., Meyer, E., and Gjedde, A. (1992). Lateralization of phonetic and pitch discrimination in speech processing. Science, 256: 846–849.